Yilei Shi

Multitask Learning for Earth Observation Data Classification with Hybrid Quantum Network

Jan 29, 2026

Abstract:Quantum machine learning (QML) has gained increasing attention as a potential solution to address the challenges of computation requirements in the future. Earth observation (EO) has entered the era of Big Data, and the computational demands for effectively analyzing large EO data with complex deep learning models have become a bottleneck. Motivated by this, we aim to leverage quantum computing for EO data classification and explore its advantages despite the current limitations of quantum devices. This paper presents a hybrid model that incorporates multitask learning to assist efficient data encoding and employs a location weight module with quantum convolution operations to extract valid features for classification. The validity of our proposed model was evaluated using multiple EO benchmarks. Additionally, we experimentally explored the generalizability of our model and investigated the factors contributing to its advantage, highlighting the potential of QML in EO data analysis.

AutoRegressive Generation with B-rep Holistic Token Sequence Representation

Jan 23, 2026

Abstract:Previous representation and generation approaches for the B-rep relied on graph-based representations that disentangle geometric and topological features through decoupled computational pipelines, thereby precluding the application of sequence-based generative frameworks, such as transformer architectures that have demonstrated remarkable performance. In this paper, we propose BrepARG, the first attempt to encode B-rep's geometry and topology into a holistic token sequence representation, enabling sequence-based B-rep generation with an autoregressive architecture. Specifically, BrepARG encodes B-rep into 3 types of tokens: geometry and position tokens representing geometric features, and face index tokens representing topology. Then the holistic token sequence is constructed hierarchically, starting with constructing the geometry blocks (i.e., faces and edges) using the above tokens, followed by geometry block sequencing. Finally, we assemble the holistic sequence representation for the entire B-rep. We also construct a transformer-based autoregressive model that learns the distribution over holistic token sequences via next-token prediction, using a multi-layer decoder-only architecture with causal masking. Experiments demonstrate that BrepARG achieves state-of-the-art (SOTA) performance. BrepARG validates the feasibility of representing B-rep as holistic token sequences, opening new directions for B-rep generation.
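
The next-token prediction setup with causal masking mentioned in this abstract can be illustrated with a minimal, generic sketch. It is not BrepARG's implementation: the function names and the example token labels (`geo_0`, `pos_0`, `face_0`, `geo_1`) are hypothetical placeholders, and a real model would operate on integer token ids inside a transformer.

```python
def causal_mask(length):
    """Return a length x length boolean mask.

    mask[row][col] is True when position `row` may attend to position `col`,
    i.e. only to itself and to earlier positions.
    """
    return [[col <= row for col in range(length)] for row in range(length)]


def next_token_pairs(tokens):
    """Pair every token with the token that follows it (input, target)."""
    return list(zip(tokens[:-1], tokens[1:]))


if __name__ == "__main__":
    # Hypothetical token labels, used only for illustration.
    sequence = ["geo_0", "pos_0", "face_0", "geo_1"]
    for row in causal_mask(len(sequence)):
        print(row)
    print(next_token_pairs(sequence))
```

The mask keeps each position from seeing later tokens, which is what lets a decoder-only model be trained on all positions of a sequence in parallel while still predicting each token from its prefix.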

Reconstructing Building Height from Spaceborne TomoSAR Point Clouds Using a Dual-Topology Network

Jan 02, 2026

Abstract:Reliable building height estimation is essential for various urban applications. Spaceborne SAR tomography (TomoSAR) provides weather-independent, side-looking observations that capture facade-level structure, offering a promising alternative to conventional optical methods. However, TomoSAR point clouds often suffer from noise, anisotropic point distributions, and data voids on incoherent surfaces, all of which hinder accurate height reconstruction. To address these challenges, we introduce a learning-based framework for converting raw TomoSAR points into high-resolution building height maps. Our dual-topology network alternates between a point branch that models irregular scatterer features and a grid branch that enforces spatial consistency. By jointly processing these representations, the network denoises the input points and inpaints missing regions to produce continuous height estimates. To our knowledge, this is the first proof of concept for large-scale urban height mapping directly from TomoSAR point clouds. Extensive experiments on data from Munich and Berlin validate the effectiveness of our approach. Moreover, we demonstrate that our framework can be extended to incorporate optical satellite imagery, further enhancing reconstruction quality. The source code is available at https://github.com/zhu-xlab/tomosar2height.

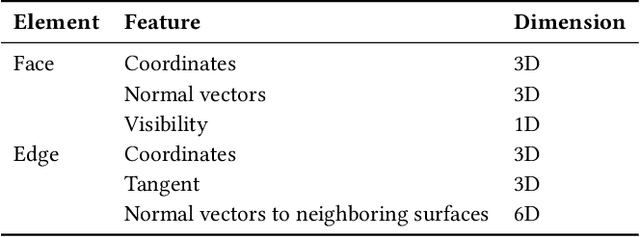

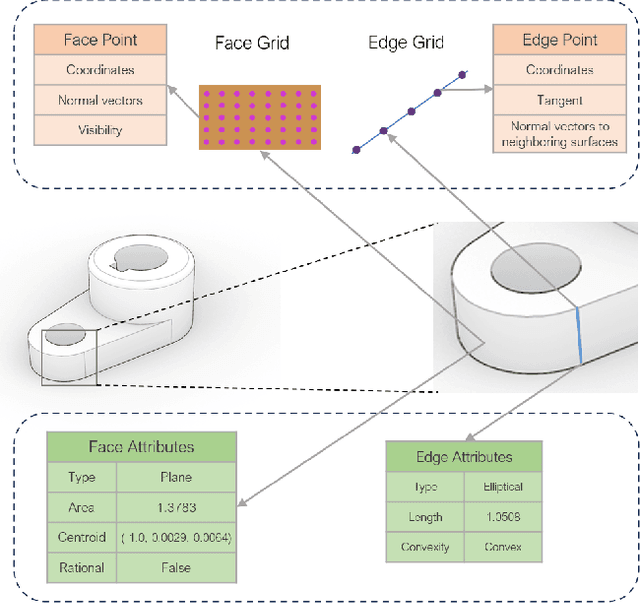

BrepLLM: Native Boundary Representation Understanding with Large Language Models

Dec 18, 2025

Abstract:Current token-sequence-based Large Language Models (LLMs) are not well-suited for directly processing 3D Boundary Representation (Brep) models that contain complex geometric and topological information. We propose BrepLLM, the first framework that enables LLMs to parse and reason over raw Brep data, bridging the modality gap between structured 3D geometry and natural language. BrepLLM employs a two-stage training pipeline: Cross-modal Alignment Pre-training and Multi-stage LLM Fine-tuning. In the first stage, an adaptive UV sampling strategy converts Breps into graph representations with geometric and topological information. We then design a hierarchical BrepEncoder to extract features from geometry (i.e., faces and edges) and topology, producing both a single global token and a sequence of node tokens. Then we align the global token with text embeddings from a frozen CLIP text encoder (ViT-L/14) via contrastive learning. In the second stage, we integrate the pretrained BrepEncoder into an LLM. We then align its sequence of node tokens using a three-stage progressive training strategy: (1) training an MLP-based semantic mapping from Brep representation to 2D with 2D-LLM priors; (2) performing fine-tuning of the LLM; and (3) designing a Mixture-of-Query Experts (MQE) to enhance geometric diversity modeling. We also construct Brep2Text, a dataset comprising 269,444 Brep-text question-answer pairs. Experiments show that BrepLLM achieves state-of-the-art (SOTA) results on 3D object classification and captioning tasks.
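
The contrastive alignment between a global shape token and text embeddings can be sketched as follows. This is a minimal illustration that assumes a CLIP-style symmetric cross-entropy objective over cosine similarities; the abstract does not state the exact loss, and the function names and the `temperature` value are assumptions made here for illustration.

```python
import math


def normalize(vector):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def cross_entropy_diagonal(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        row_max = max(row)  # subtract the max for numerical stability
        log_sum_exp = row_max + math.log(sum(math.exp(x - row_max) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def contrastive_loss(shape_embeddings, text_embeddings, temperature=0.07):
    """Symmetric contrastive loss over a batch of matched (shape, text) pairs.

    Assumption: pair i in `shape_embeddings` matches pair i in `text_embeddings`.
    """
    shapes = [normalize(v) for v in shape_embeddings]
    texts = [normalize(v) for v in text_embeddings]
    # Cosine similarity of every shape with every text, scaled by temperature.
    logits = [
        [sum(a * b for a, b in zip(s, t)) / temperature for t in texts]
        for s in shapes
    ]
    logits_transposed = [list(column) for column in zip(*logits)]
    return 0.5 * (
        cross_entropy_diagonal(logits) + cross_entropy_diagonal(logits_transposed)
    )


if __name__ == "__main__":
    shapes = [[1.0, 0.0], [0.0, 1.0]]
    matched_texts = [[1.0, 0.0], [0.0, 1.0]]
    swapped_texts = [[0.0, 1.0], [1.0, 0.0]]
    print(contrastive_loss(shapes, matched_texts))  # small: pairs agree
    print(contrastive_loss(shapes, swapped_texts))  # large: pairs disagree
```

With a frozen text encoder, only the shape side would receive gradient updates, so the loss pulls each global token toward the embedding of its paired description.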

ProPL: Universal Semi-Supervised Ultrasound Image Segmentation via Prompt-Guided Pseudo-Labeling

Nov 19, 2025

Abstract:Existing approaches for the problem of ultrasound image segmentation, whether supervised or semi-supervised, are typically specialized for specific anatomical structures or tasks, limiting their practical utility in clinical settings. In this paper, we pioneer the task of universal semi-supervised ultrasound image segmentation and propose ProPL, a framework that can handle multiple organs and segmentation tasks while leveraging both labeled and unlabeled data. At its core, ProPL employs a shared vision encoder coupled with prompt-guided dual decoders, enabling flexible task adaptation through a prompting-upon-decoding mechanism and reliable self-training via an uncertainty-driven pseudo-label calibration (UPLC) module. To facilitate research in this direction, we introduce a comprehensive ultrasound dataset spanning 5 organs and 8 segmentation tasks. Extensive experiments demonstrate that ProPL outperforms state-of-the-art methods across various metrics, establishing a new benchmark for universal ultrasound image segmentation.

Multimodal Large Language Models for Medical Report Generation via Customized Prompt Tuning

Jun 18, 2025

Abstract:Medical report generation from imaging data remains a challenging task in clinical practice. While large language models (LLMs) show great promise in addressing this challenge, their effective integration with medical imaging data still deserves in-depth exploration. In this paper, we present MRG-LLM, a novel multimodal large language model (MLLM) that combines a frozen LLM with a learnable visual encoder and introduces a dynamic prompt customization mechanism. Our key innovation lies in generating instance-specific prompts tailored to individual medical images through conditional affine transformations derived from visual features. We propose two implementations: prompt-wise and promptbook-wise customization, enabling precise and targeted report generation. Extensive experiments on IU X-ray and MIMIC-CXR datasets demonstrate that MRG-LLM achieves state-of-the-art performance in medical report generation. Our code will be made publicly available.
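
A conditional affine transformation of prompt embeddings can be sketched as below. This is a generic illustration, not MRG-LLM's code: it assumes the scale and shift are produced by linear maps of a visual feature vector, and the names `linear`, `customize_prompt`, `scale_params`, and `shift_params` are hypothetical. It does not attempt to distinguish the prompt-wise and promptbook-wise variants, which the abstract does not define.

```python
def linear(weights, bias, x):
    """Apply y = W x + b for a weight matrix given as a list of rows."""
    return [
        sum(w * xi for w, xi in zip(row, x)) + b
        for row, b in zip(weights, bias)
    ]


def customize_prompt(prompt_tokens, visual_feature, scale_params, shift_params):
    """Modulate every prompt embedding with an image-dependent scale and shift.

    scale_params and shift_params are (weights, bias) tuples mapping the
    visual feature to a per-dimension scale (gamma) and shift (beta).
    """
    gamma = linear(*scale_params, visual_feature)
    beta = linear(*shift_params, visual_feature)
    return [
        [g * p + b for g, p, b in zip(gamma, token, beta)]
        for token in prompt_tokens
    ]


if __name__ == "__main__":
    prompt = [[1.0, 2.0], [3.0, 4.0]]          # two prompt tokens, dim 2
    visual = [1.0, 0.0, 2.0]                   # visual feature, dim 3
    scale = ([[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]], [0.0, 0.0])
    shift = ([[0.0, 1.0, 0.0], [0.0, 0.0, 0.5]], [0.5, 0.0])
    print(customize_prompt(prompt, visual, scale, shift))
```

Because the scale and shift depend on the image, two different images yield two different prompts from the same learned base embeddings, while the language model itself can stay frozen.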

Taming Stable Diffusion for Computed Tomography Blind Super-Resolution

Jun 13, 2025

Abstract:High-resolution computed tomography (CT) imaging is essential for medical diagnosis but requires increased radiation exposure, creating a critical trade-off between image quality and patient safety. While deep learning methods have shown promise in CT super-resolution, they face challenges with complex degradations and limited medical training data. Meanwhile, large-scale pre-trained diffusion models, particularly Stable Diffusion, have demonstrated remarkable capabilities in synthesizing fine details across various vision tasks. Motivated by this, we propose a novel framework that adapts Stable Diffusion for CT blind super-resolution. We employ a practical degradation model to synthesize realistic low-quality images and leverage a pre-trained vision-language model to generate corresponding descriptions. Subsequently, we perform super-resolution using Stable Diffusion with a specialized controlling strategy, conditioned on both low-resolution inputs and the generated text descriptions. Extensive experiments show that our method outperforms existing approaches, demonstrating its potential for achieving high-quality CT imaging at reduced radiation doses. Our code will be made publicly available.

GlobalBuildingAtlas: An Open Global and Complete Dataset of Building Polygons, Heights and LoD1 3D Models

Jun 04, 2025

Abstract:We introduce GlobalBuildingAtlas, a publicly available dataset providing global and complete coverage of building polygons, heights and Level of Detail 1 (LoD1) 3D building models. This is the first open dataset to offer high quality, consistent, and complete building data in 2D and 3D form at the individual building level on a global scale. Towards this dataset, we developed machine learning-based pipelines to derive building polygons and heights (called GBA.Height) from global PlanetScope satellite data. A quality-based fusion strategy was also employed to generate higher-quality polygons (called GBA.Polygon) based on existing open building polygons, including our own derived polygons. With more than 2.75 billion buildings worldwide, GBA.Polygon surpasses the most comprehensive database to date by more than 1 billion buildings. GBA.Height offers the most detailed and accurate global 3D building height maps to date, achieving a spatial resolution of 3x3 meters, 30 times finer than previous global products (90 m), which enables a high-resolution and reliable analysis of building volumes at both local and global scales. Finally, we generated a global LoD1 building model (called GBA.LoD1) from the resulting GBA.Polygon and GBA.Height. GBA.LoD1 represents the first complete global LoD1 building models, including 2.68 billion building instances with predicted heights, i.e., with a height completeness of more than 97%, achieving RMSEs ranging from 1.5 m to 8.9 m across different continents. With its height accuracy, comprehensive global coverage and rich spatial details, GlobalBuildingAtlas offers novel insights on the status quo of global buildings, which unlocks unprecedented geospatial analysis possibilities, as showcased by a better illustration of where people live and a more comprehensive monitoring of the progress on the 11th Sustainable Development Goal of the United Nations.

Global Collinearity-aware Polygonizer for Polygonal Building Mapping in Remote Sensing

May 02, 2025

Abstract:This paper addresses the challenge of mapping polygonal buildings from remote sensing images and introduces a novel algorithm, the Global Collinearity-aware Polygonizer (GCP). GCP, built upon an instance segmentation framework, processes binary masks produced by any instance segmentation model. The algorithm begins by collecting polylines sampled along the contours of the binary masks. These polylines undergo a refinement process using a transformer-based regression module to ensure they accurately fit the contours of the targeted building instances. Subsequently, a collinearity-aware polygon simplification module simplifies these refined polylines and generates the final polygon representation. This module employs a dynamic programming technique to optimize an objective function that balances the simplicity and fidelity of the polygons, achieving globally optimal solutions. Furthermore, the optimized collinearity-aware objective is seamlessly integrated into network training, enhancing the cohesiveness of the entire pipeline. The effectiveness of GCP has been validated on two public benchmarks for polygonal building mapping. Further experiments reveal that applying the collinearity-aware polygon simplification module to arbitrary polylines, without prior knowledge, enhances accuracy over traditional methods such as the Douglas-Peucker algorithm. This finding underscores the broad applicability of GCP. The code for the proposed method will be made available at https://github.com/zhu-xlab.
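
The idea of using dynamic programming to trade simplicity against fidelity can be sketched on an open polyline. This is a generic illustration and not GCP's collinearity-aware objective, which the abstract does not specify: here the cost is assumed to be a fixed penalty per kept segment (`vertex_penalty`, a hypothetical parameter) plus the squared distances of skipped points to the segment that replaces them.

```python
import math


def point_segment_distance(p, a, b):
    """Euclidean distance from point p to the segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp to its endpoints.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def simplify_polyline(points, vertex_penalty=1.0):
    """Keep the subset of vertices (always including both endpoints) that
    minimizes: vertex_penalty * (number of segments) + squared fitting error.

    best[j] is the minimal cost of a simplification that ends at vertex j.
    """
    n = len(points)
    if n <= 2:
        return list(points)
    best = [math.inf] * n
    previous = [-1] * n
    best[0] = 0.0
    for j in range(1, n):
        for i in range(j):
            error = sum(
                point_segment_distance(points[k], points[i], points[j]) ** 2
                for k in range(i + 1, j)
            )
            cost = best[i] + vertex_penalty + error
            if cost < best[j]:
                best[j] = cost
                previous[j] = i
    # Walk back from the last vertex to recover the kept vertices.
    kept = []
    j = n - 1
    while j != -1:
        kept.append(points[j])
        j = previous[j]
    kept.reverse()
    return kept


if __name__ == "__main__":
    l_shape = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]
    print(simplify_polyline(l_shape, vertex_penalty=0.1))
```

Unlike the greedy recursion of Douglas-Peucker, the table `best` considers every pair of candidate vertices, so the result is optimal for the stated cost; the price is cubic time in this naive form.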

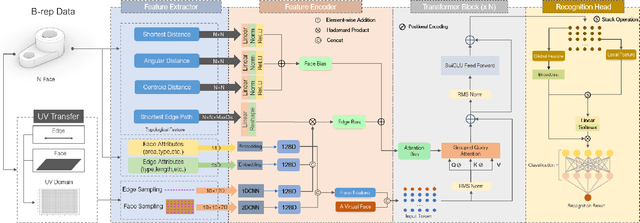

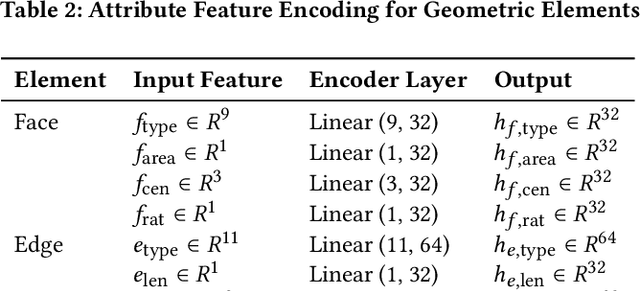

BRepFormer: Transformer-Based B-rep Geometric Feature Recognition

Apr 10, 2025

Abstract:Recognizing geometric features on B-rep models is a cornerstone technique for multimedia content-based retrieval and has been widely applied in intelligent manufacturing. However, previous research often focused only on Machining Feature Recognition (MFR), falling short in effectively capturing the intricate topological and geometric characteristics of complex geometry features. In this paper, we propose BRepFormer, a novel transformer-based model to recognize both machining features and the features of complex CAD models. BRepFormer encodes and fuses the geometric and topological features of the models. Afterwards, BRepFormer utilizes a transformer architecture for feature propagation and a recognition head to identify geometry features. During each iteration of the transformer, we incorporate a bias that combines edge features and topology features to reinforce geometric constraints on each face. In addition, we propose a dataset named Complex B-rep Feature Dataset (CBF), comprising 20,000 B-rep models. By covering more complex B-rep models, it is better aligned with industrial applications. The experimental results demonstrate that BRepFormer achieves state-of-the-art accuracy on the MFInstSeg, MFTRCAD, and our CBF datasets.
