Cheng Bian

Difficulty-aware Glaucoma Classification with Multi-Rater Consensus Modeling

Jul 29, 2020

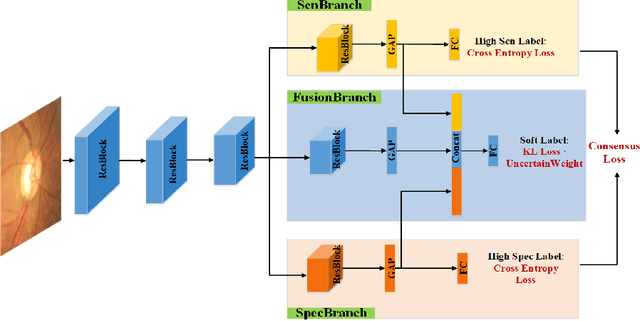

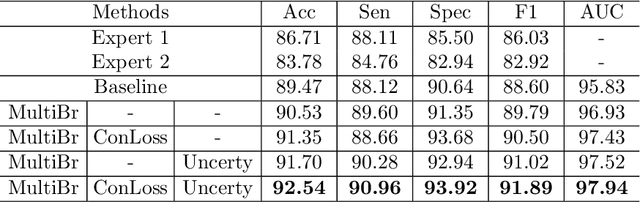

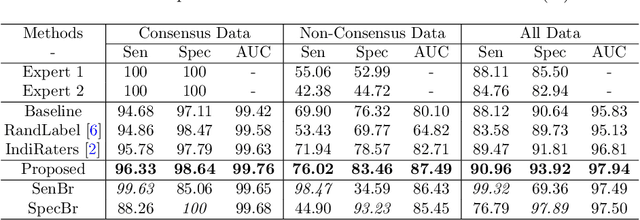

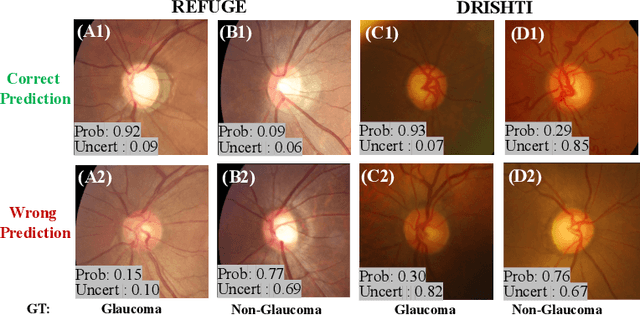

Abstract: Medical images are generally labeled by multiple experts before the final ground-truth labels are determined. Consensus or disagreement among experts regarding individual images reflects the gradeability and difficulty levels of the image. However, when used for model training, only the final ground-truth label is utilized, while the critical information contained in the raw multi-rater gradings about whether the image is an easy or hard case is discarded. In this paper, we aim to take advantage of the raw multi-rater gradings to improve deep learning model performance for the glaucoma classification task. Specifically, a multi-branch model structure is proposed to predict the most sensitive, the most specific, and a balanced fused result for the input images. To encourage the sensitivity branch and specificity branch to generate consistent results for consensus labels and opposite results for disagreement labels, a consensus loss is proposed to constrain the output of the two branches. Meanwhile, the consistency or inconsistency between the prediction results of the two branches indicates whether the image is an easy or hard case, which is further utilized to encourage the balanced fusion branch to concentrate more on the hard cases. Compared with models trained only with the final ground-truth labels, the proposed method using multi-rater consensus information achieves superior performance, and it is also able to estimate the difficulty levels of individual input images when making the prediction.
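The idea that disagreement between the sensitivity and specificity branches signals a hard case can be illustrated with a minimal sketch. This is not the paper's formulation: the function names, the use of an absolute difference, and the 0.5 threshold are all assumptions made for illustration.

```python
def difficulty_score(p_sensitivity, p_specificity):
    """Hypothetical difficulty proxy: gap between the two branch probabilities.

    Both inputs are the predicted probability of glaucoma from the
    sensitivity-oriented and specificity-oriented branches.
    """
    for p in (p_sensitivity, p_specificity):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    # Close predictions suggest an easy case; far-apart ones suggest a hard case.
    return abs(p_sensitivity - p_specificity)


def is_hard_case(p_sensitivity, p_specificity, threshold=0.5):
    """Flag a case as hard when the branches disagree by at least `threshold`.

    The threshold value is an assumption, not a number from the paper.
    """
    return difficulty_score(p_sensitivity, p_specificity) >= threshold


print(is_hard_case(0.90, 0.85))  # branches agree
print(is_hard_case(0.90, 0.20))  # branches disagree
```

A score like this could, for example, be used to weight the loss of the fused branch so that hard cases contribute more, which is the behavior the abstract describes in general terms.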

Comparing to Learn: Surpassing ImageNet Pretraining on Radiographs By Comparing Image Representations

Jul 22, 2020

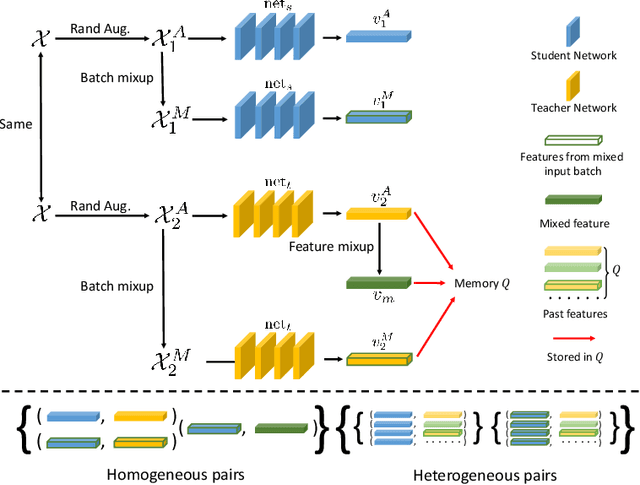

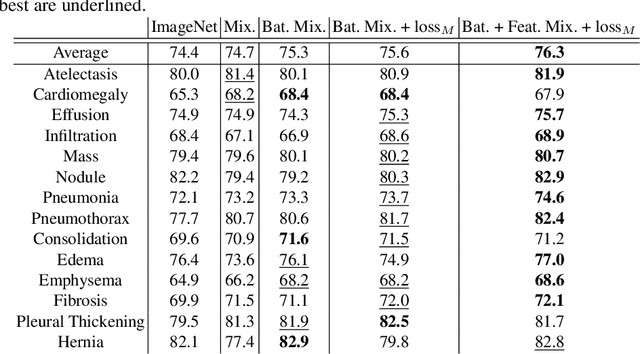

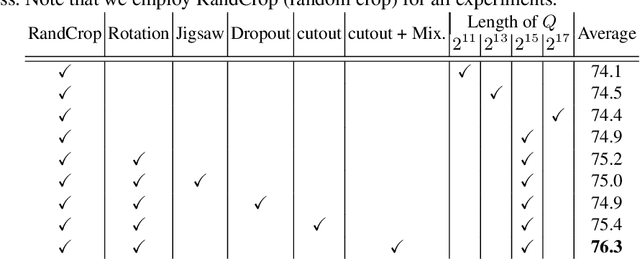

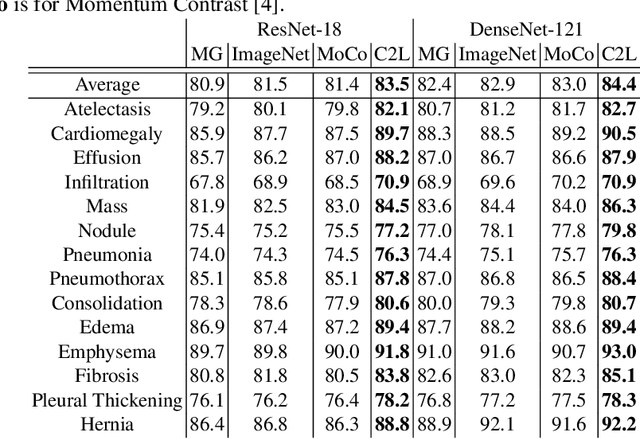

Abstract: In the deep learning era, pretrained models play an important role in medical image analysis, where ImageNet pretraining has been widely adopted as the best approach. However, there is an obvious domain gap between natural images and medical images. To bridge this gap, we propose a new pretraining method that learns from 700k radiographs without manual annotations. We call our method Comparing to Learn (C2L) because it learns robust features by comparing different image representations. To verify the effectiveness of C2L, we conduct comprehensive ablation studies and evaluate it on different tasks and datasets. The experimental results on radiographs show that C2L can significantly outperform ImageNet pretraining and previous state-of-the-art approaches. Code and models are available.

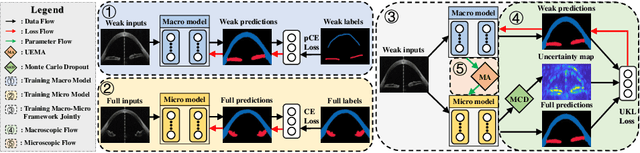

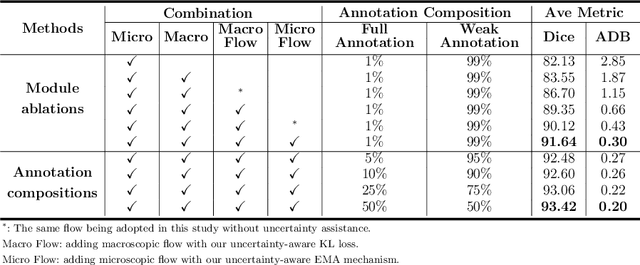

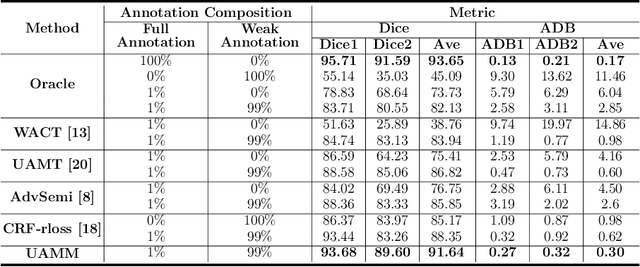

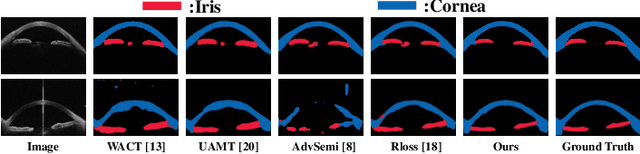

A Macro-Micro Weakly-supervised Framework for AS-OCT Tissue Segmentation

Jul 20, 2020

Abstract: Primary angle closure glaucoma (PACG) is the leading cause of irreversible blindness among Asian people. Early detection of PACG is essential to provide timely treatment and minimize vision loss. In clinical practice, PACG is diagnosed by analyzing the angle between the cornea and iris with anterior segment optical coherence tomography (AS-OCT). The rapid development of deep learning technologies makes it feasible to build a computer-aided system for fast and accurate segmentation of cornea and iris tissues. However, the application of deep learning methods in the medical imaging field is still restricted by the lack of sufficient fully-annotated samples. In this paper, we propose a novel framework to segment the target tissues accurately in AS-OCT images by combining weakly-annotated images (the majority) with fully-annotated images (the minority). The proposed framework consists of two models that provide reliable guidance for each other. In addition, uncertainty-guided strategies are adopted to increase the accuracy and stability of the guidance. Detailed experiments on the publicly available AGE dataset demonstrate that the proposed framework outperforms the state-of-the-art semi-/weakly-supervised methods and performs comparably to the fully-supervised method. The proposed method is therefore shown to be effective in exploiting the information contained in the weakly-annotated images and able to substantially relieve the annotation workload.

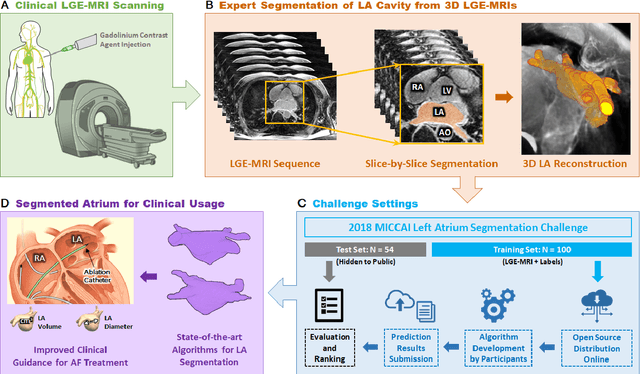

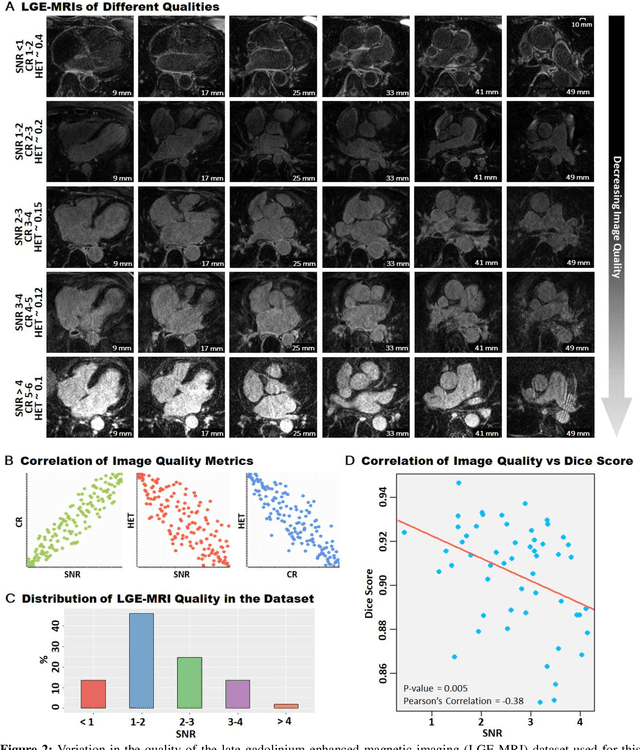

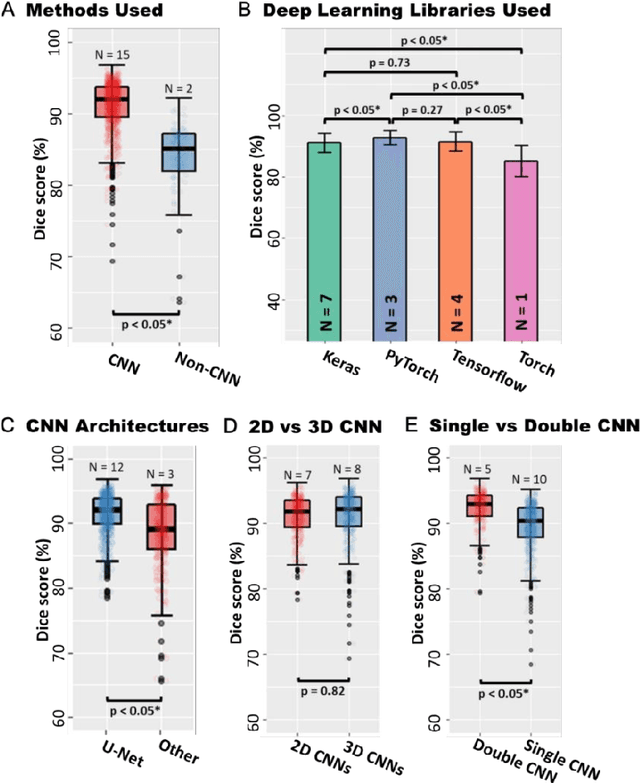

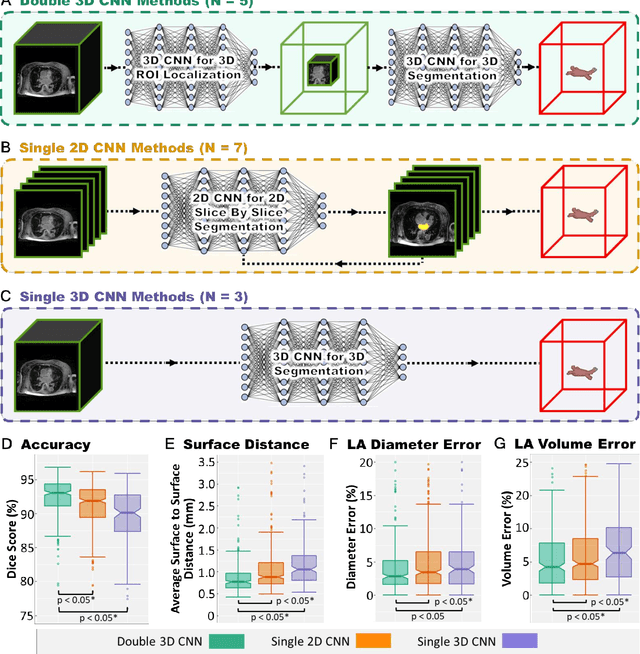

A Global Benchmark of Algorithms for Segmenting Late Gadolinium-Enhanced Cardiac Magnetic Resonance Imaging

May 07, 2020

Abstract: Segmentation of cardiac images, particularly late gadolinium-enhanced magnetic resonance imaging (LGE-MRI), which is widely used for visualizing diseased cardiac structures, is a crucial first step for clinical diagnosis and treatment. However, direct segmentation of LGE-MRIs is challenging due to their attenuated contrast. Since most clinical studies have relied on manual and labor-intensive approaches, automatic methods are of high interest, particularly optimized machine learning approaches. To address this, we organized the "2018 Left Atrium Segmentation Challenge" using 154 3D LGE-MRIs, currently the world's largest cardiac LGE-MRI dataset, with associated labels of the left atrium segmented by three medical experts, ultimately attracting the participation of 27 international teams. In this paper, we performed an extensive analysis of the submitted algorithms using technical and biological metrics, including subgroup analysis and hyper-parameter analysis, offering an overall picture of the major design choices of convolutional neural networks (CNNs) and practical considerations for achieving state-of-the-art left atrium segmentation. Results show that the top method achieved a Dice score of 93.2% and a mean surface-to-surface distance of 0.7 mm, significantly outperforming the prior state of the art. In particular, our analysis demonstrated that two sequentially used CNNs, in which a first CNN performs automatic region-of-interest localization and a subsequent CNN performs refined regional segmentation, achieved far better results than traditional methods and pipelines containing a single CNN. This large-scale benchmarking study makes a significant step towards much-improved segmentation methods for cardiac LGE-MRIs, and will serve as an important benchmark for evaluating and comparing future work in the field.
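The Dice score reported above is the standard overlap measure 2|A ∩ B| / (|A| + |B|) between a predicted mask A and a reference mask B. A minimal sketch follows, assuming flattened binary masks given as plain Python sequences; the function name and the convention for two empty masks are illustrative choices, not taken from the challenge's evaluation code.

```python
def dice_score(pred, target):
    """Dice coefficient between two binary masks given as flat sequences of 0/1."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of elements")
    # Voxels marked as foreground in both masks.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    # Total foreground voxels across the two masks.
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    # Convention (an assumption): two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


pred = [1, 1, 0, 0, 1, 0]
target = [1, 0, 0, 0, 1, 1]
print(round(dice_score(pred, target), 4))  # 2 shared voxels, 3 + 3 foreground voxels
```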

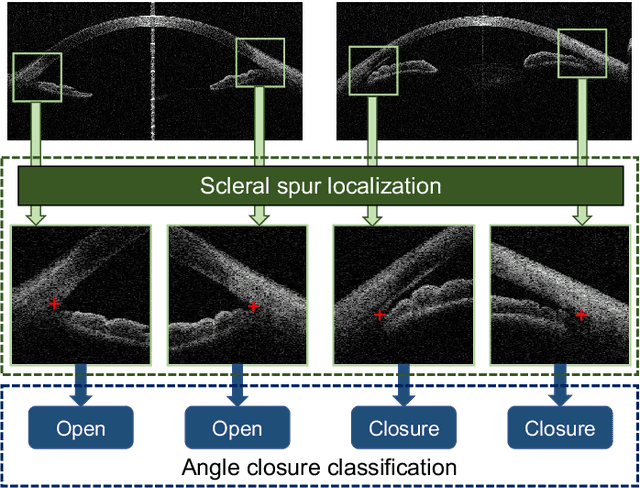

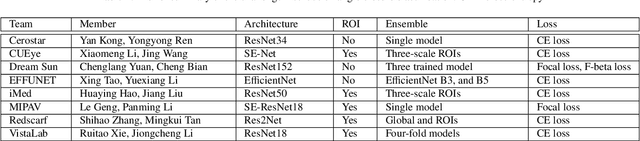

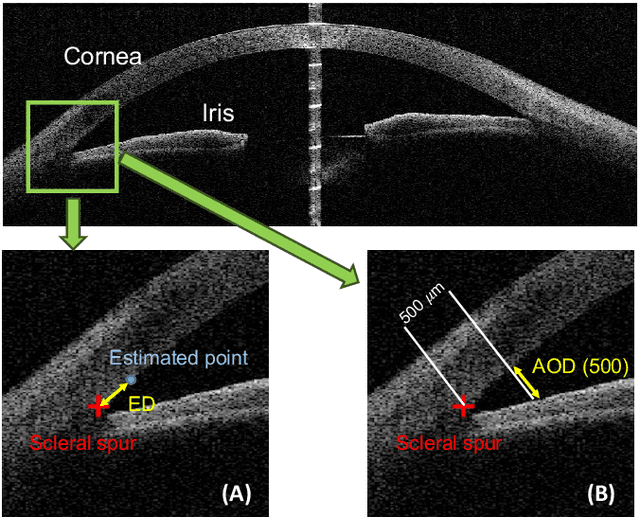

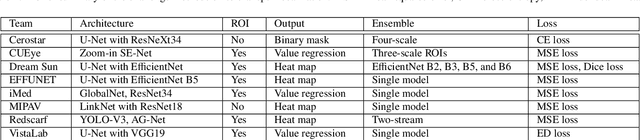

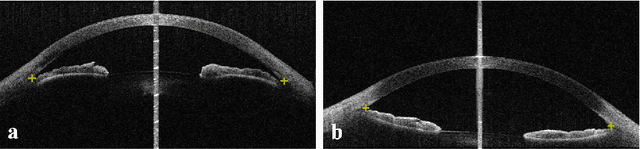

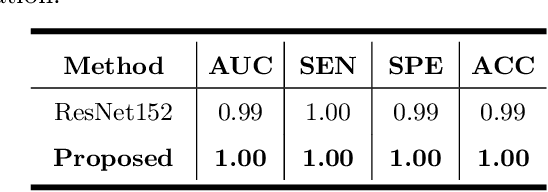

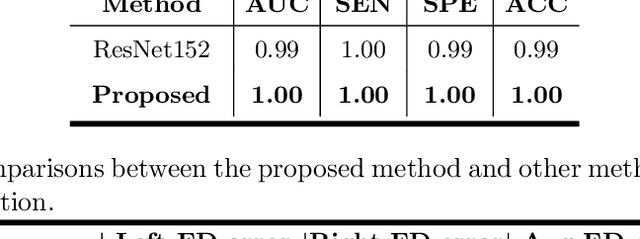

AGE Challenge: Angle Closure Glaucoma Evaluation in Anterior Segment Optical Coherence Tomography

May 05, 2020

Abstract: Angle closure glaucoma (ACG) is a more aggressive disease than open-angle glaucoma, where the abnormal anatomical structures of the anterior chamber angle (ACA) may cause elevated intraocular pressure and gradually lead to glaucomatous optic neuropathy and eventually to visual impairment and blindness. Anterior Segment Optical Coherence Tomography (AS-OCT) imaging provides a fast and contactless way to discriminate angle closure from open angle. Although many medical image analysis algorithms have been developed for glaucoma diagnosis, only a few studies have focused on AS-OCT imaging. In particular, there is no public AS-OCT dataset available for evaluating the existing methods in a uniform way, which limits progress in the development of automated techniques for angle closure detection and assessment. To address this, we organized the Angle closure Glaucoma Evaluation challenge (AGE), held in conjunction with MICCAI 2019. The AGE challenge consisted of two tasks: scleral spur localization and angle closure classification. For this challenge, we released a large dataset of 4800 annotated AS-OCT images from 199 patients, and also proposed an evaluation framework to benchmark and compare different models. During the AGE challenge, over 200 teams registered online, and more than 1100 results were submitted for online evaluation. Finally, eight teams participated in the onsite challenge. In this paper, we summarize these eight onsite challenge methods and analyze their corresponding results in the two tasks. We further discuss limitations and future directions. In the AGE challenge, the top-performing approach had an average Euclidean distance of 10 pixels in scleral spur localization, while in the task of angle closure classification, all the algorithms achieved satisfactory performance, with the top two reaching a 100% accuracy rate.
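The localization metric mentioned above, the average Euclidean distance between predicted and annotated scleral spur positions, can be sketched as follows. The function name and the sample coordinates are made up for illustration and do not come from the AGE evaluation framework.

```python
import math


def mean_euclidean_distance(predicted, reference):
    """Average pixel distance between paired (x, y) landmark coordinates."""
    if not predicted or len(predicted) != len(reference):
        raise ValueError("need two non-empty lists of equal length")
    distances = [
        math.hypot(px - rx, py - ry)
        for (px, py), (rx, ry) in zip(predicted, reference)
    ]
    return sum(distances) / len(distances)


# Hypothetical predictions and annotations for two scleral spur points.
predicted = [(103.0, 204.0), (50.0, 60.0)]
reference = [(100.0, 200.0), (50.0, 70.0)]
print(mean_euclidean_distance(predicted, reference))  # distances are 5 and 10 pixels
```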

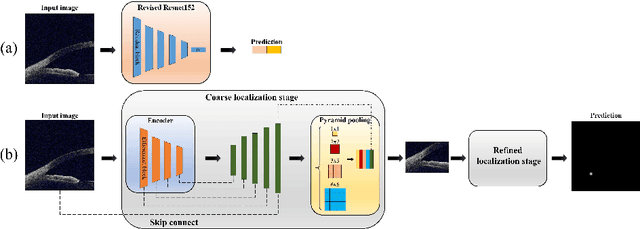

Identification of primary angle-closure on AS-OCT images with Convolutional Neural Networks

Oct 23, 2019

Abstract: Primary angle-closure disease (PACD) is a severe retinal disease, which might cause irreversible vision loss. In clinical practice, accurate identification of angle closure and localization of the scleral spur's position on anterior segment optical coherence tomography (AS-OCT) are essential for the diagnosis of PACD. However, manual delineation may suffer from low accuracy and low efficiency. In this paper, we propose an efficient and accurate end-to-end architecture for angle-closure classification and scleral spur localization. Specifically, we utilize a revised ResNet152 as our backbone to improve the accuracy of angle-closure identification. For scleral spur localization, we adopt EfficientNet as the encoder because of its powerful feature extraction potential. By combining the skip-connect module and pyramid pooling module, the network is able to collect semantic cues in feature maps from multiple dimensions and scales. Afterward, we propose a novel keypoint registration loss to constrain the model's attention to the intensity and location of the scleral spur area. Several experiments are conducted to evaluate our method on the angle-closure glaucoma evaluation (AGE) Challenge dataset. The results show that our proposed architecture ranks first in the classification task on the test dataset and achieves an average Euclidean distance error of 12.00 pixels in the scleral spur localization task.

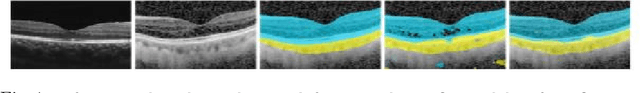

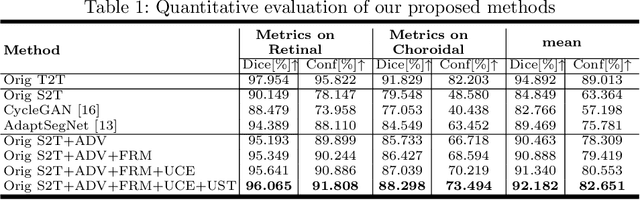

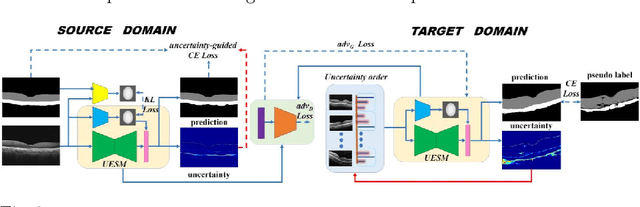

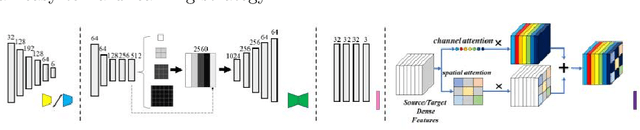

Uncertainty-Guided Domain Alignment for Layer Segmentation in OCT Images

Aug 30, 2019

Abstract: Automatic and accurate segmentation of retinal and choroidal layers in Optical Coherence Tomography (OCT) is crucial for the detection of various ocular diseases. However, because of variations between devices, OCT data obtained from different manufacturers may differ in appearance, which can lead to performance fluctuation in a deep neural network. In this paper, we propose an uncertainty-guided domain alignment method that aims to alleviate this problem by transferring discriminative knowledge across distinct domains. We design a novel uncertainty-guided cross-entropy loss for boosting the performance over areas with high uncertainty. An uncertainty-guided curriculum transfer strategy is developed for self-training (ST), which regards uncertainty as efficient and effective guidance to optimize the learning process in the target domain. Adversarial learning with a feature recalibration module (FRM) is applied to transfer informative knowledge from the domain feature spaces adaptively. The experiments on two OCT datasets show that the proposed methods can obtain significant segmentation improvements compared with the baseline models.
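One way to picture a cross-entropy loss that puts more weight on high-uncertainty areas is sketched below. This is an assumption-laden illustration rather than the loss defined in the paper: it uses normalized predictive entropy as the uncertainty estimate and a `1 + uncertainty` weight, both chosen here only for simplicity.

```python
import math


def entropy(probs):
    """Shannon entropy (natural log) of one pixel's class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def uncertainty_weighted_ce(pixel_probs, labels):
    """Mean cross-entropy where each pixel is weighted by 1 + normalized entropy.

    pixel_probs: list of per-pixel class probability lists (at least two classes).
    labels: list of integer class indices, one per pixel.
    """
    max_entropy = math.log(len(pixel_probs[0]))  # entropy of a uniform prediction
    total = 0.0
    for probs, label in zip(pixel_probs, labels):
        ce = -math.log(max(probs[label], 1e-12))  # clamp to avoid log(0)
        weight = 1.0 + entropy(probs) / max_entropy  # in [1, 2]
        total += weight * ce
    return total / len(labels)


# One confident pixel and one maximally uncertain pixel, both labeled class 0.
print(uncertainty_weighted_ce([[1.0, 0.0], [0.5, 0.5]], [0, 0]))
```

With this weighting, a pixel predicted with full confidence keeps its plain cross-entropy, while a pixel with a uniform prediction has its cross-entropy doubled.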

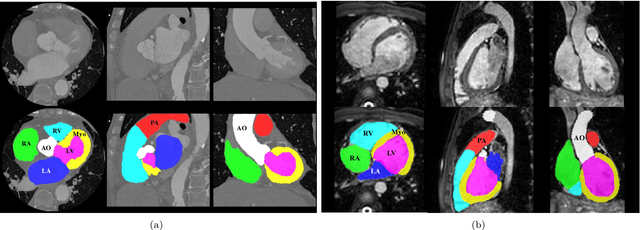

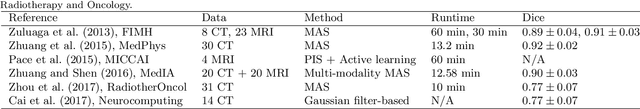

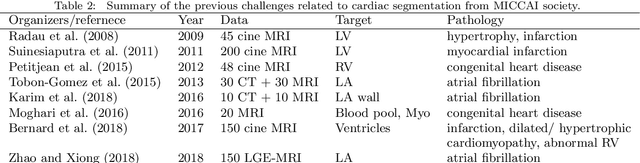

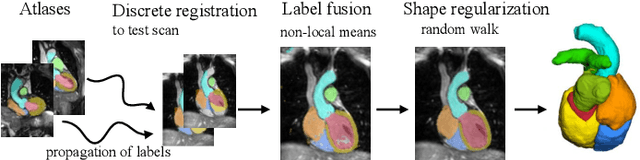

Evaluation of Algorithms for Multi-Modality Whole Heart Segmentation: An Open-Access Grand Challenge

Feb 21, 2019

Abstract: Knowledge of whole heart anatomy is a prerequisite for many clinical applications. Whole heart segmentation (WHS), which delineates substructures of the heart, can be very valuable for modeling and analysis of the anatomy and functions of the heart. However, automating this segmentation can be arduous due to the large variation of the heart shape, and different image qualities of the clinical data. To achieve this goal, a set of training data is generally needed for constructing priors or for training. In addition, it is difficult to perform comparisons between different methods, largely due to differences in the datasets and evaluation metrics used. This manuscript presents the methodologies and evaluation results for the WHS algorithms selected from the submissions to the Multi-Modality Whole Heart Segmentation (MM-WHS) challenge, in conjunction with MICCAI 2017. The challenge provides 120 three-dimensional cardiac images covering the whole heart, including 60 CT and 60 MRI volumes, all acquired in clinical environments with manual delineation. Ten algorithms for CT data and eleven algorithms for MRI data, submitted from twelve groups, have been evaluated. The results show that many of the deep learning (DL) based methods achieved high accuracy, even though the number of training datasets was limited. A number of them also reported poor results in the blinded evaluation, probably due to overfitting in their training. The conventional algorithms, mainly based on multi-atlas segmentation, demonstrated robust and stable performance, even though the accuracy is not as good as the best DL method in CT segmentation. The challenge, including the provision of the annotated training data and the blinded evaluation for submitted algorithms on the test data, continues as an ongoing benchmarking resource via its homepage (www.sdspeople.fudan.edu.cn/zhuangxiahai/0/mmwhs/).

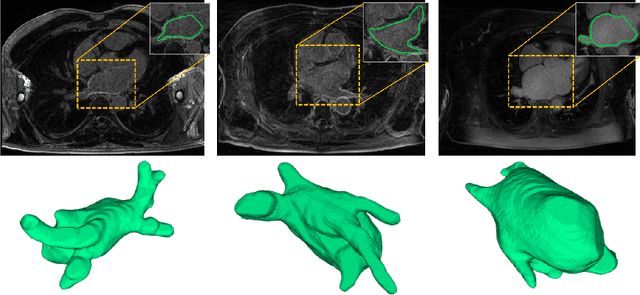

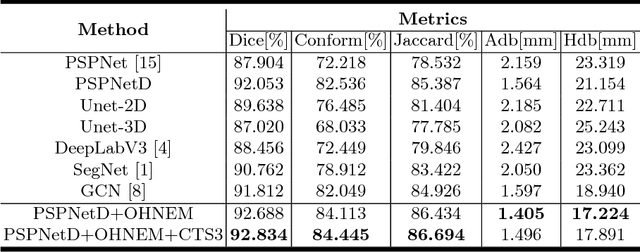

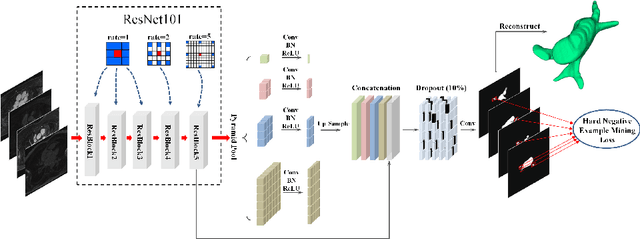

Pyramid Network with Online Hard Example Mining for Accurate Left Atrium Segmentation

Dec 14, 2018

Abstract: Accurately segmenting the left atrium in MR volumes can benefit the ablation procedure for atrial fibrillation. Traditional automated solutions often fail to relieve experts from labor-intensive manual labeling. In this paper, we propose a deep neural network based solution for automated left atrium segmentation in gadolinium-enhanced MR volumes with promising performance. We first argue that, for this volumetric segmentation task, 2D networks can offer substantial advantages in time efficiency and segmentation accuracy over 3D networks. Considering the highly varying shape of the atrium and the branchy structure of the associated pulmonary veins, we propose to adopt a pyramid module to collect semantic cues in feature maps from multiple scales for fine-grained segmentation. Also, to help our network classify the hard examples, we propose an Online Hard Negative Example Mining strategy to identify voxels in slices with low classification certainties and penalize the wrong predictions on them. Finally, we devise a competitive training scheme to further boost the generalization ability of the networks. Extensively verified on 20 testing volumes, our proposed framework achieves an average Dice of 92.83% in segmenting the left atria and pulmonary veins.
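The general idea behind online hard example mining, keeping only the highest-loss voxels when computing the training loss, can be sketched as follows. This is a generic illustration, not the paper's exact strategy: the function name, the binary cross-entropy loss, and the `keep_ratio` parameter are assumptions.

```python
import math


def ohem_loss(fg_probs, labels, keep_ratio=0.25):
    """Mean binary cross-entropy over the hardest fraction of voxels.

    fg_probs: predicted foreground probability per voxel.
    labels: 0/1 ground-truth label per voxel.
    keep_ratio: fraction of voxels (by highest loss) kept in the average.
    """
    if not fg_probs or len(fg_probs) != len(labels):
        raise ValueError("need two non-empty lists of equal length")
    losses = []
    for p, y in zip(fg_probs, labels):
        p_true = p if y == 1 else 1.0 - p  # probability assigned to the true class
        losses.append(-math.log(max(p_true, 1e-12)))  # clamp to avoid log(0)
    keep = max(1, int(len(losses) * keep_ratio))
    hardest = sorted(losses, reverse=True)[:keep]  # largest losses first
    return sum(hardest) / keep


# Four foreground voxels; only the worst-predicted one (p = 0.1) is kept.
print(ohem_loss([0.9, 0.8, 0.5, 0.1], [1, 1, 1, 1], keep_ratio=0.25))
```

Setting `keep_ratio=1.0` recovers the ordinary mean loss over all voxels, which makes the effect of mining easy to compare.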
