Chao Tao

Towards Accurate UAV Image Perception: Guiding Vision-Language Models with Stronger Task Prompts

Dec 08, 2025

Abstract:Existing image perception methods based on VLMs generally follow a paradigm wherein models extract and analyze image content based on user-provided textual task prompts. However, such methods face limitations when applied to UAV imagery, which presents challenges like target confusion, scale variations, and complex backgrounds. These challenges arise because VLMs' understanding of image content depends on the semantic alignment between visual and textual tokens. When the task prompt is simplistic and the image content is complex, achieving effective alignment becomes difficult, limiting the model's ability to focus on task-relevant information. To address this issue, we introduce AerialVP, the first agent framework for task prompt enhancement in UAV image perception. AerialVP proactively extracts multi-dimensional auxiliary information from UAV images to enhance task prompts, overcoming the limitations of traditional VLM-based approaches. Specifically, the enhancement process includes three stages: (1) analyzing the task prompt to identify the task type and enhancement needs, (2) selecting appropriate tools from the tool repository, and (3) generating enhanced task prompts based on the analysis and selected tools. To evaluate AerialVP, we introduce AerialSense, a comprehensive benchmark for UAV image perception that includes Aerial Visual Reasoning, Aerial Visual Question Answering, and Aerial Visual Grounding tasks. AerialSense provides a standardized basis for evaluating model generalization and performance across diverse resolutions, lighting conditions, and both urban and natural scenes. Experimental results demonstrate that AerialVP significantly enhances task prompt guidance, leading to stable and substantial performance improvements in both open-source and proprietary VLMs. Our work will be available at https://github.com/lostwolves/AerialVP.

Remote Sensing Image Intelligent Interpretation with the Language-Centered Perspective: Principles, Methods and Challenges

Aug 09, 2025

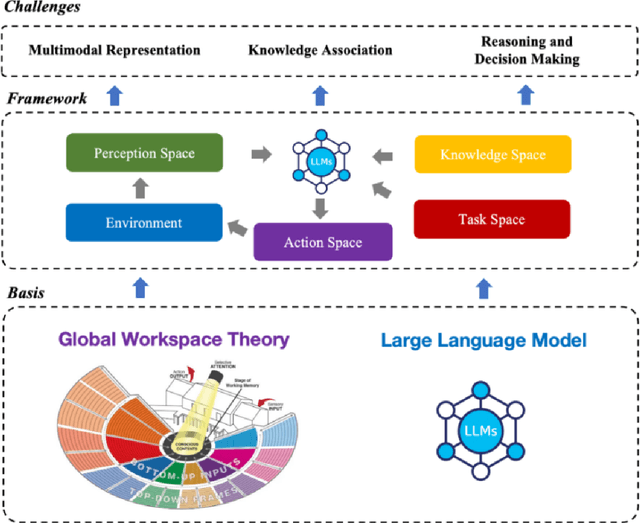

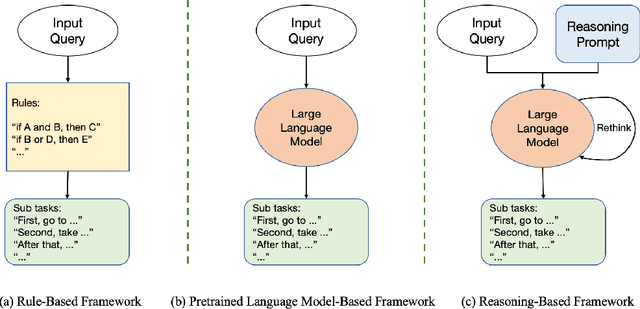

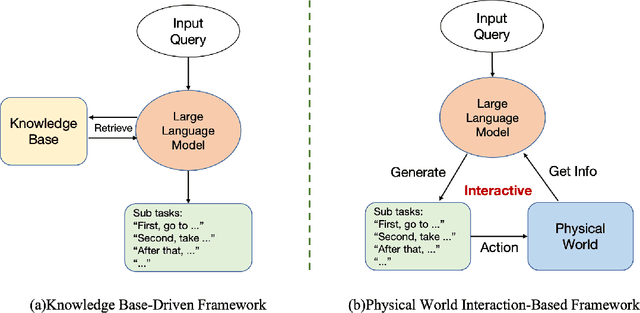

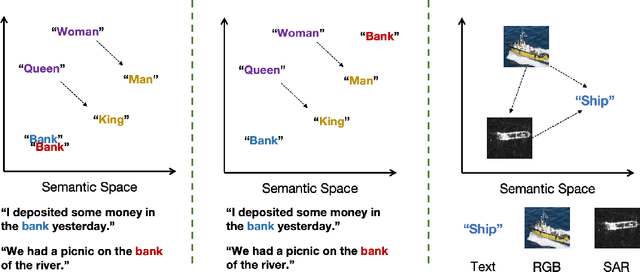

Abstract:The mainstream paradigm of remote sensing image interpretation has long been dominated by vision-centered models, which rely on visual features for semantic understanding. However, these models face inherent limitations in handling multi-modal reasoning, semantic abstraction, and interactive decision-making. While recent advances have introduced Large Language Models (LLMs) into remote sensing workflows, existing studies primarily focus on downstream applications, lacking a unified theoretical framework that explains the cognitive role of language. This review advocates a paradigm shift from vision-centered to language-centered remote sensing interpretation. Drawing inspiration from the Global Workspace Theory (GWT) of human cognition, we propose a language-centered framework for remote sensing interpretation that treats LLMs as the cognitive central hub integrating perceptual, task, knowledge, and action spaces to enable unified understanding, reasoning, and decision-making. We first explore the potential of LLMs as the central cognitive component in remote sensing interpretation, and then summarize core technical challenges, including unified multimodal representation, knowledge association, and reasoning and decision-making. Furthermore, we construct a global workspace-driven interpretation mechanism and review how language-centered solutions address each challenge. Finally, we outline future research directions from four perspectives: adaptive alignment of multimodal data, task understanding under dynamic knowledge constraints, trustworthy reasoning, and autonomous interaction. This work aims to provide a conceptual foundation for the next generation of remote sensing interpretation systems and establish a roadmap toward cognition-driven intelligent geospatial analysis.

A Gift from the Integration of Discriminative and Diffusion-based Generative Learning: Boundary Refinement Remote Sensing Semantic Segmentation

Jul 02, 2025

Abstract:Remote sensing semantic segmentation must address both what the ground objects are within an image and where they are located. Consequently, segmentation models must ensure not only the semantic correctness of large-scale patches (low-frequency information) but also the precise localization of boundaries between patches (high-frequency information). However, most existing approaches rely heavily on discriminative learning, which excels at capturing low-frequency features, while overlooking its inherent limitations in learning high-frequency features for semantic segmentation. Recent studies have revealed that diffusion generative models excel at generating high-frequency details. Our theoretical analysis confirms that the diffusion denoising process significantly enhances the model's ability to learn high-frequency features; however, we also observe that these models exhibit insufficient semantic inference for low-frequency features when guided solely by the original image. Therefore, we integrate the strengths of both discriminative and generative learning, proposing the Integration of Discriminative and diffusion-based Generative learning for Boundary Refinement (IDGBR) framework. The framework first generates a coarse segmentation map using a discriminative backbone model. This map and the original image are fed into a conditioning guidance network to jointly learn a guidance representation subsequently leveraged by an iterative denoising diffusion process refining the coarse segmentation. Extensive experiments across five remote sensing semantic segmentation datasets (binary and multi-class segmentation) confirm our framework's capability of consistent boundary refinement for coarse results from diverse discriminative architectures. The source code will be available at https://github.com/KeyanHu-git/IDGBR.

A Joint Learning Framework with Feature Reconstruction and Prediction for Incomplete Satellite Image Time Series in Agricultural Semantic Segmentation

May 25, 2025

Abstract:Satellite Image Time Series (SITS) is crucial for agricultural semantic segmentation. However, cloud contamination introduces time gaps in SITS, disrupting temporal dependencies and causing feature shifts, leading to degraded performance of models trained on complete SITS. Existing methods typically address this by reconstructing the entire SITS before prediction or using data augmentation to simulate missing data. Yet, full reconstruction may introduce noise and redundancy, while the data-augmented model can only handle limited missing patterns, leading to poor generalization. We propose a joint learning framework with feature reconstruction and prediction to address incomplete SITS more effectively. During training, we simulate data-missing scenarios using temporal masks. The two tasks are guided by both ground-truth labels and the teacher model trained on complete SITS. The prediction task constrains the model to selectively reconstruct, from masked inputs, the critical features that align with the teacher's temporal feature representations. This reduces unnecessary reconstruction and limits noise propagation. By integrating reconstructed features into the prediction task, the model avoids learning shortcuts and maintains its ability to handle varied missing patterns and complete SITS. Experiments on SITS from Hunan Province, Western France, and Catalonia show that our method improves mean F1-scores by 6.93% in cropland extraction and 7.09% in crop classification over baselines. It also generalizes well across satellite sensors, including Sentinel-2 and PlanetScope, under varying temporal missing rates and model backbones.

BEDI: A Comprehensive Benchmark for Evaluating Embodied Agents on UAVs

May 23, 2025

Abstract:With the rapid advancement of low-altitude remote sensing and Vision-Language Models (VLMs), Embodied Agents based on Unmanned Aerial Vehicles (UAVs) have shown significant potential in autonomous tasks. However, current evaluation methods for UAV-Embodied Agents (UAV-EAs) remain constrained by the lack of standardized benchmarks, diverse testing scenarios, and open system interfaces. To address these challenges, we propose BEDI (Benchmark for Embodied Drone Intelligence), a systematic and standardized benchmark designed for evaluating UAV-EAs. Specifically, we introduce a novel Dynamic Chain-of-Embodied-Task paradigm based on the perception-decision-action loop, which decomposes complex UAV tasks into standardized, measurable subtasks. Building on this paradigm, we design a unified evaluation framework encompassing five core sub-skills: semantic perception, spatial perception, motion control, tool utilization, and task planning. Furthermore, we construct a hybrid testing platform that integrates static real-world environments with dynamic virtual scenarios, enabling comprehensive performance assessment of UAV-EAs across varied contexts. The platform also offers open and standardized interfaces, allowing researchers to customize tasks and extend scenarios, thereby enhancing flexibility and scalability in the evaluation process. Finally, through empirical evaluations of several state-of-the-art (SOTA) VLMs, we reveal their limitations in embodied UAV tasks, underscoring the critical role of the BEDI benchmark in advancing embodied intelligence research and model optimization. By filling the gap in systematic and standardized evaluation within this field, BEDI facilitates objective model comparison and lays a robust foundation for future development. Our benchmark will be released at https://github.com/lostwolves/BEDI.

A large-scale image-text dataset benchmark for farmland segmentation

Mar 29, 2025

Abstract:The traditional deep learning paradigm that relies solely on labeled data has limitations in representing the spatial relationships between farmland elements and the surrounding environment. It struggles to effectively model the dynamic temporal evolution and spatial heterogeneity of farmland. Language, as a structured knowledge carrier, can explicitly express the spatiotemporal characteristics of farmland, such as its shape, distribution, and surrounding environmental information. Therefore, a language-driven learning paradigm can effectively alleviate the challenges posed by the spatiotemporal heterogeneity of farmland. However, in the field of remote sensing imagery of farmland, there is currently no comprehensive benchmark dataset to support this research direction. To fill this gap, we introduce language-based descriptions of farmland and develop the FarmSeg-VL dataset, the first fine-grained image-text dataset designed for spatiotemporal farmland segmentation. First, we propose a semi-automatic annotation method that can accurately assign a caption to each image, ensuring high data quality and semantic richness while improving the efficiency of dataset construction. Second, FarmSeg-VL exhibits significant spatiotemporal characteristics. In the temporal dimension, it covers all four seasons. In the spatial dimension, it covers eight typical agricultural regions across China. In addition, in terms of captions, FarmSeg-VL covers rich spatiotemporal characteristics of farmland, including its inherent properties, phenological characteristics, spatial distribution, topographic and geomorphic features, and the distribution of surrounding environments. Finally, we present a performance analysis of VLMs and of deep learning models that rely solely on labels, all trained on FarmSeg-VL, demonstrating its potential as a standard benchmark for farmland segmentation.

MEET: A Million-Scale Dataset for Fine-Grained Geospatial Scene Classification with Zoom-Free Remote Sensing Imagery

Mar 14, 2025

Abstract:Accurate fine-grained geospatial scene classification using remote sensing imagery is essential for a wide range of applications. However, existing approaches often rely on manually zooming remote sensing images at different scales to create typical scene samples. This approach fails to adequately support the fixed-resolution image interpretation requirements in real-world scenarios. To address this limitation, we introduce the Million-scale finE-grained geospatial scEne classification dataseT (MEET), which contains over 1.03 million zoom-free remote sensing scene samples, manually annotated into 80 fine-grained categories. In MEET, each scene sample follows a scene-in-scene layout, where the central scene serves as the reference, and auxiliary scenes provide crucial spatial context for fine-grained classification. Moreover, to tackle the emerging challenge of scene-in-scene classification, we present the Context-Aware Transformer (CAT), a model specifically designed for this task. CAT adaptively fuses spatial context to accurately classify the scene samples by learning attentional features that capture the relationships between the center and auxiliary scenes. Based on MEET, we establish a comprehensive benchmark for fine-grained geospatial scene classification, evaluating CAT against 11 competitive baselines. The results demonstrate that CAT significantly outperforms these baselines, achieving a 1.88% higher balanced accuracy (BA) with the Swin-Large backbone, and a notable 7.87% improvement with the Swin-Huge backbone. Further experiments validate the effectiveness of each module in CAT and show the practical applicability of CAT in urban functional zone mapping. The source code and dataset will be publicly available at https://jerrywyn.github.io/project/MEET.html.
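
As a reading aid for the balanced accuracy (BA) figures in this abstract: BA is conventionally defined as the unweighted mean of per-class recall, so rare classes count as much as frequent ones. The Python sketch below implements that standard definition only. The function name and the toy labels are illustrative assumptions and are not taken from the MEET code or data.

```python
from collections import defaultdict


def balanced_accuracy(y_true, y_pred):
    """Unweighted mean of per-class recall over the classes present in y_true."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("y_true is empty")
    total = defaultdict(int)    # number of samples per true class
    correct = defaultdict(int)  # number of correctly predicted samples per true class
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
    recalls = [correct[c] / total[c] for c in total]
    return sum(recalls) / len(recalls)


# Toy, made-up labels (hypothetical): class "a" has recall 3/4, class "b" has recall 1/2.
y_true = ["a", "a", "a", "a", "b", "b"]
y_pred = ["a", "a", "a", "b", "b", "a"]
score = balanced_accuracy(y_true, y_pred)  # (0.75 + 0.5) / 2 = 0.625
```

On these toy labels plain accuracy is 4/6, while balanced accuracy is 0.625, which shows why the two metrics diverge on imbalanced class distributions.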

Enhancing Scene Classification in Cloudy Image Scenarios: A Collaborative Transfer Method with Information Regulation Mechanism using Optical Cloud-Covered and SAR Remote Sensing Images

Jan 08, 2025

Abstract:In remote sensing scene classification, leveraging transfer methods with well-trained optical models is an efficient way to overcome label scarcity. However, cloud contamination leads to optical information loss and significant impacts on feature distribution, challenging the reliability and stability of transferred target models. Common solutions include cloud removal for optical data or directly using synthetic aperture radar (SAR) data in the target domain. However, cloud removal requires substantial auxiliary data for support and pre-training, while directly using SAR disregards the unobstructed portions of optical data. This study presents a scene classification transfer method that synergistically combines multi-modality data, which aims to transfer the source domain model trained on cloud-free optical data to the target domain that includes both cloudy optical and SAR data at low cost. Specifically, the framework incorporates two parts: (1) the collaborative transfer strategy, based on knowledge distillation, enables efficient prior knowledge transfer across heterogeneous data; (2) the information regulation mechanism (IRM) is proposed to address the modality imbalance issue during transfer. It employs auxiliary models to measure the contribution discrepancy of each modality, and automatically balances the information utilization of modalities at the sample level during the target model learning process. The transfer experiments were conducted on simulated and real cloud datasets, demonstrating the superior performance of the proposed method compared to other solutions in cloud-covered scenarios. We also verified the importance and limitations of IRM, and further discussed and visualized the modality imbalance problem during the model transfer. Code is available at https://github.com/wangyuze-csu/ESCCS

Weakly Supervised Framework Considering Multi-temporal Information for Large-scale Cropland Mapping with Satellite Imagery

Nov 27, 2024

Abstract:Accurately mapping large-scale cropland is crucial for agricultural production management and planning. Currently, the combination of remote sensing data and deep learning techniques has shown outstanding performance in cropland mapping. However, those approaches require massive precise labels, which are labor-intensive. To reduce the label cost, this study presented a weakly supervised framework considering multi-temporal information for large-scale cropland mapping. Specifically, we extract high-quality labels according to their consistency among global land cover (GLC) products to construct the supervised learning signal. On the one hand, to alleviate the overfitting problem caused by the model's over-trust of remaining errors in high-quality labels, we encode the similarity/aggregation of cropland in the visual/spatial domain to construct the unsupervised learning signal, and take it as the regularization term to constrain the supervised part. On the other hand, to sufficiently leverage the plentiful information in the samples without high-quality labels, we also incorporate the unsupervised learning signal in these samples, enriching the diversity of the feature space. After that, to capture the phenological features of croplands, we introduce dense satellite image time series (SITS) to extend the proposed framework in the temporal dimension. We also visualized the high dimensional phenological features to uncover how multi-temporal information benefits cropland extraction, and assessed the method's robustness under conditions of data scarcity. The proposed framework has been experimentally validated for strong adaptability across three study areas (Hunan Province, Southeast France, and Kansas) in large-scale cropland mapping, and the internal mechanism and temporal generalizability are also investigated.
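
The label-extraction step in this abstract (keeping labels that are consistent across GLC products) can be illustrated with a small Python sketch. The abstract does not state the exact consistency criterion, so the rule used here, unanimous per-pixel agreement with disagreeing pixels marked as unlabeled, is an assumption. The function name `consensus_labels`, the ignore value `-1`, and the toy grids are likewise hypothetical.

```python
def consensus_labels(products, ignore=-1):
    """Keep a pixel's label only where every product agrees (assumed rule).

    products: list of equally sized 2D label grids (lists of lists),
              e.g. 1 = cropland, 0 = non-cropland.
    Returns a grid of the same size; disagreeing pixels are set to `ignore`.
    """
    first = products[0]
    result = []
    for r, row in enumerate(first):
        out_row = []
        for c, value in enumerate(row):
            # A pixel is treated as "high quality" only under unanimous agreement.
            agree = all(p[r][c] == value for p in products[1:])
            out_row.append(value if agree else ignore)
        result.append(out_row)
    return result


# Three toy 2x2 land cover products (made-up values).
glc_a = [[1, 0], [1, 1]]
glc_b = [[1, 0], [0, 1]]
glc_c = [[1, 1], [0, 1]]
labels = consensus_labels([glc_a, glc_b, glc_c])
# labels == [[1, -1], [-1, 1]]
```

In this sketch the pixels marked `-1` correspond to the samples without high-quality labels, which the abstract says are handled through the unsupervised learning signal rather than discarded.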

IFShip: A Large Vision-Language Model for Interpretable Fine-grained Ship Classification via Domain Knowledge-Enhanced Instruction Tuning

Aug 13, 2024

Abstract:End-to-end interpretation is currently the prevailing paradigm for the remote sensing fine-grained ship classification (RS-FGSC) task. However, its inference process is uninterpretable, leading to criticism as a black box model. To address this issue, we propose a large vision-language model (LVLM) named IFShip for interpretable fine-grained ship classification. Unlike traditional methods, IFShip excels in interpretability by accurately conveying the reasoning process of FGSC in natural language. Specifically, we first design a domain knowledge-enhanced Chain-of-Thought (COT) prompt generation mechanism. This mechanism is used to semi-automatically construct a task-specific instruction-following dataset named TITANIC-FGS, which emulates human-like logical decision-making. We then train the IFShip model through task instruction tuning on the TITANIC-FGS dataset. Building on IFShip, we develop an FGSC visual chatbot that redefines the FGSC problem as a step-by-step reasoning task and conveys the reasoning process in natural language. Experimental results reveal that the proposed method surpasses state-of-the-art FGSC algorithms in both classification interpretability and accuracy. Moreover, compared to LVLMs like LLaVA and MiniGPT-4, our approach demonstrates superior expertise in the FGSC task. It provides an accurate chain of reasoning when fine-grained ship types are recognizable to the human eye and offers interpretable explanations when they are not.
