Bo Zhao

Inject Once Survive Later: Backdooring Vision-Language-Action Models to Persist Through Downstream Fine-tuning

Jan 31, 2026Abstract:Vision-Language-Action (VLA) models have become foundational to modern embodied AI systems. By integrating visual perception, language understanding, and action planning, they enable general-purpose task execution across diverse environments. Despite their importance, the security of VLA models remains underexplored -- particularly in the context of backdoor attacks, which pose realistic threats in physical-world deployments. While recent methods attempt to inject backdoors into VLA models, these backdoors are easily erased during downstream adaptation, as user-side fine-tuning with clean data significantly alters model parameters, rendering them impractical for real-world applications. To address these challenges, we propose INFUSE (INjection into Fine-tUne-inSensitive modulEs), the first backdoor attack framework for VLA base models that remains effective even with arbitrary user fine-tuning. INFUSE begins by analyzing parameter sensitivity across diverse fine-tuning scenarios to identify modules that remain largely unchanged -- the fine-tune-insensitive modules. It then injects backdoors into these stable modules while freezing the rest, ensuring malicious behavior persists after extensive user fine-tuning. Comprehensive experiments across multiple VLA architectures demonstrate INFUSE's effectiveness. After user-side fine-tuning, INFUSE maintains mean attack success rates of 91.0% on simulation environments and 79.8% on real-world robot tasks, substantially surpassing BadVLA (38.8% and 36.6%, respectively), while preserving clean-task performance comparable to standard models. These results uncover a critical threat: backdoors implanted before distribution can persist through fine-tuning and remain effective at deployment.

ConLA: Contrastive Latent Action Learning from Human Videos for Robotic Manipulation

Jan 31, 2026Abstract:Vision-Language-Action (VLA) models achieve preliminary generalization through pretraining on large scale robot teleoperation datasets. However, acquiring datasets that comprehensively cover diverse tasks and environments is extremely costly and difficult to scale. In contrast, human demonstration videos offer a rich and scalable source of diverse scenes and manipulation behaviors, yet their lack of explicit action supervision hinders direct utilization. Prior work leverages VQ-VAE based frameworks to learn latent actions from human videos in an unsupervised manner. Nevertheless, since the training objective primarily focuses on reconstructing visual appearances rather than capturing inter-frame dynamics, the learned representations tend to rely on spurious visual cues, leading to shortcut learning and entangled latent representations that hinder transferability. To address this, we propose ConLA, an unsupervised pretraining framework for learning robotic policies from human videos. ConLA introduces a contrastive disentanglement mechanism that leverages action category priors and temporal cues to isolate motion dynamics from visual content, effectively mitigating shortcut learning. Extensive experiments show that ConLA achieves strong performance across diverse benchmarks. Notably, by pretraining solely on human videos, our method for the first time surpasses the performance obtained with real robot trajectory pretraining, highlighting its ability to extract pure and semantically consistent latent action representations for scalable robot learning.

Scalable Analytic Classifiers with Associative Drift Compensation for Class-Incremental Learning of Vision Transformers

Jan 29, 2026Abstract:Class-incremental learning (CIL) with Vision Transformers (ViTs) faces a major computational bottleneck during the classifier reconstruction phase, where most existing methods rely on costly iterative stochastic gradient descent (SGD). We observe that analytic Regularized Gaussian Discriminant Analysis (RGDA) provides a Bayes-optimal alternative with accuracy comparable to SGD-based classifiers; however, its quadratic inference complexity limits its use in large-scale CIL scenarios. To overcome this, we propose Low-Rank Factorized RGDA (LR-RGDA), a scalable classifier that combines RGDA's expressivity with the efficiency of linear classifiers. By exploiting the low-rank structure of the covariance via the Woodbury matrix identity, LR-RGDA decomposes the discriminant function into a global affine term refined by a low-rank quadratic perturbation, reducing the inference complexity from $\mathcal{O}(Cd^2)$ to $\mathcal{O}(d^2 + Crd^2)$, where $C$ is the class number, $d$ the feature dimension, and $r \ll d$ the subspace rank. To mitigate representation drift caused by backbone updates, we further introduce Hopfield-based Distribution Compensator (HopDC), a training-free mechanism that uses modern continuous Hopfield Networks to recalibrate historical class statistics through associative memory dynamics on unlabeled anchors, accompanied by a theoretical bound on the estimation error. Extensive experiments on diverse CIL benchmarks demonstrate that our framework achieves state-of-the-art performance, providing a scalable solution for large-scale class-incremental learning with ViTs. Code: https://github.com/raoxuan98-hash/lr_rgda_hopdc.

Demystifying Mergeability: Interpretable Properties to Predict Model Merging Success

Jan 29, 2026Abstract:Model merging combines knowledge from separately fine-tuned models, yet success factors remain poorly understood. While recent work treats mergeability as an intrinsic property, we show with an architecture-agnostic framework that it fundamentally depends on both the merging method and the partner tasks. Using linear optimization over a set of interpretable pairwise metrics (e.g., gradient L2 distance), we uncover properties correlating with post-merge performance across four merging methods. We find substantial variation in success drivers (46.7% metric overlap; 55.3% sign agreement), revealing method-specific "fingerprints". Crucially, however, subspace overlap and gradient alignment metrics consistently emerge as foundational, method-agnostic prerequisites for compatibility. These findings provide a diagnostic foundation for understanding mergeability and motivate future fine-tuning strategies that explicitly encourage these properties.

The Script is All You Need: An Agentic Framework for Long-Horizon Dialogue-to-Cinematic Video Generation

Jan 27, 2026Abstract:Recent advances in video generation have produced models capable of synthesizing stunning visual content from simple text prompts. However, these models struggle to generate long-form, coherent narratives from high-level concepts like dialogue, revealing a ``semantic gap'' between a creative idea and its cinematic execution. To bridge this gap, we introduce a novel, end-to-end agentic framework for dialogue-to-cinematic-video generation. Central to our framework is ScripterAgent, a model trained to translate coarse dialogue into a fine-grained, executable cinematic script. To enable this, we construct ScriptBench, a new large-scale benchmark with rich multimodal context, annotated via an expert-guided pipeline. The generated script then guides DirectorAgent, which orchestrates state-of-the-art video models using a cross-scene continuous generation strategy to ensure long-horizon coherence. Our comprehensive evaluation, featuring an AI-powered CriticAgent and a new Visual-Script Alignment (VSA) metric, shows our framework significantly improves script faithfulness and temporal fidelity across all tested video models. Furthermore, our analysis uncovers a crucial trade-off in current SOTA models between visual spectacle and strict script adherence, providing valuable insights for the future of automated filmmaking.

EgoGrasp: World-Space Hand-Object Interaction Estimation from Egocentric Videos

Jan 03, 2026Abstract:We propose EgoGrasp, the first method to reconstruct world-space hand-object interactions (W-HOI) from egocentric monocular videos with dynamic cameras in the wild. Accurate W-HOI reconstruction is critical for understanding human behavior and enabling applications in embodied intelligence and virtual reality. However, existing hand-object interactions (HOI) methods are limited to single images or camera coordinates, failing to model temporal dynamics or consistent global trajectories. Some recent approaches attempt world-space hand estimation but overlook object poses and HOI constraints. Their performance also suffers under severe camera motion and frequent occlusions common in egocentric in-the-wild videos. To address these challenges, we introduce a multi-stage framework with a robust pre-process pipeline built on newly developed spatial intelligence models, a whole-body HOI prior model based on decoupled diffusion models, and a multi-objective test-time optimization paradigm. Our HOI prior model is template-free and scalable to multiple objects. In experiments, we prove our method achieving state-of-the-art performance in W-HOI reconstruction.

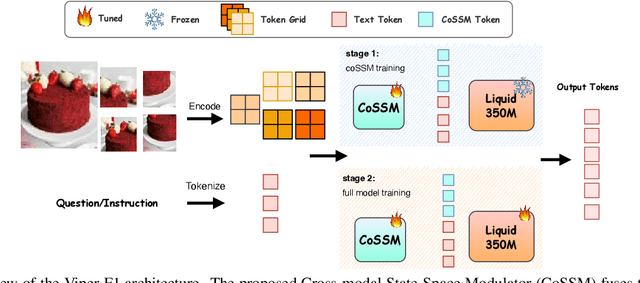

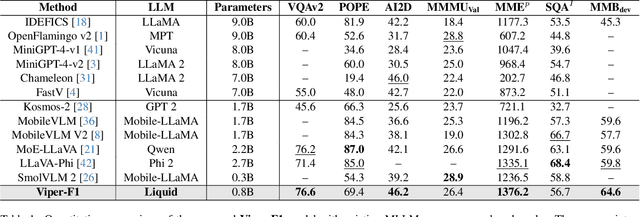

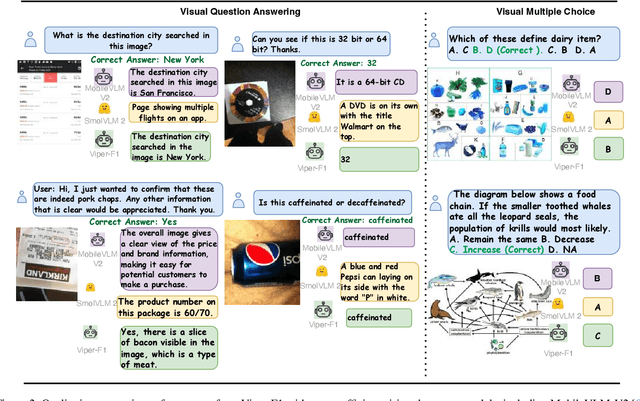

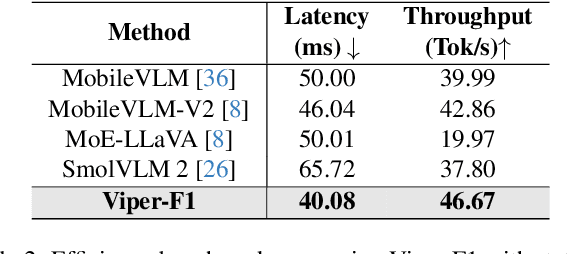

Viper-F1: Fast and Fine-Grained Multimodal Understanding with Cross-Modal State-Space Modulation

Nov 18, 2025

Abstract:Recent advances in multimodal large language models (MLLMs) have enabled impressive progress in vision-language understanding, yet their high computational cost limits deployment in resource-constrained scenarios such as robotic manipulation, personal assistants, and smart cameras. Most existing methods rely on Transformer-based cross-attention, whose quadratic complexity hinders efficiency. Moreover, small vision-language models often struggle to precisely capture fine-grained, task-relevant visual regions, leading to degraded performance on fine-grained reasoning tasks that limit their effectiveness in the real world. To address these issues, we introduce Viper-F1, a Hybrid State-Space Vision-Language Model that replaces attention with efficient Liquid State-Space Dynamics. To further enhance visual grounding, we propose a Token-Grid Correlation Module, which computes lightweight correlations between text tokens and image patches and modulates the state-space dynamics via FiLM conditioning. This enables the model to selectively emphasize visual regions relevant to the textual prompt while maintaining linear-time inference. Experimental results across multiple benchmarks demonstrate that Viper-F1 achieves accurate, fine-grained understanding with significantly improved efficiency.

Compensating Distribution Drifts in Class-incremental Learning of Pre-trained Vision Transformers

Nov 13, 2025

Abstract:Recent advances have shown that sequential fine-tuning (SeqFT) of pre-trained vision transformers (ViTs), followed by classifier refinement using approximate distributions of class features, can be an effective strategy for class-incremental learning (CIL). However, this approach is susceptible to distribution drift, caused by the sequential optimization of shared backbone parameters. This results in a mismatch between the distributions of the previously learned classes and that of the updater model, ultimately degrading the effectiveness of classifier performance over time. To address this issue, we introduce a latent space transition operator and propose Sequential Learning with Drift Compensation (SLDC). SLDC aims to align feature distributions across tasks to mitigate the impact of drift. First, we present a linear variant of SLDC, which learns a linear operator by solving a regularized least-squares problem that maps features before and after fine-tuning. Next, we extend this with a weakly nonlinear SLDC variant, which assumes that the ideal transition operator lies between purely linear and fully nonlinear transformations. This is implemented using learnable, weakly nonlinear mappings that balance flexibility and generalization. To further reduce representation drift, we apply knowledge distillation (KD) in both algorithmic variants. Extensive experiments on standard CIL benchmarks demonstrate that SLDC significantly improves the performance of SeqFT. Notably, by combining KD to address representation drift with SLDC to compensate distribution drift, SeqFT achieves performance comparable to joint training across all evaluated datasets. Code: https://github.com/raoxuan98-hash/sldc.git.

Evo-1: Lightweight Vision-Language-Action Model with Preserved Semantic Alignment

Nov 06, 2025Abstract:Vision-Language-Action (VLA) models have emerged as a powerful framework that unifies perception, language, and control, enabling robots to perform diverse tasks through multimodal understanding. However, current VLA models typically contain massive parameters and rely heavily on large-scale robot data pretraining, leading to high computational costs during training, as well as limited deployability for real-time inference. Moreover, most training paradigms often degrade the perceptual representations of the vision-language backbone, resulting in overfitting and poor generalization to downstream tasks. In this work, we present Evo-1, a lightweight VLA model that reduces computation and improves deployment efficiency, while maintaining strong performance without pretraining on robot data. Evo-1 builds on a native multimodal Vision-Language model (VLM), incorporating a novel cross-modulated diffusion transformer along with an optimized integration module, together forming an effective architecture. We further introduce a two-stage training paradigm that progressively aligns action with perception, preserving the representations of the VLM. Notably, with only 0.77 billion parameters, Evo-1 achieves state-of-the-art results on the Meta-World and RoboTwin suite, surpassing the previous best models by 12.4% and 6.9%, respectively, and also attains a competitive result of 94.8% on LIBERO. In real-world evaluations, Evo-1 attains a 78% success rate with high inference frequency and low memory overhead, outperforming all baseline methods. We release code, data, and model weights to facilitate future research on lightweight and efficient VLA models.

Twirlator: A Pipeline for Analyzing Subgroup Symmetry Effects in Quantum Machine Learning Ansatzes

Nov 06, 2025Abstract:Leveraging data symmetries has been a key driver of performance gains in geometric deep learning and geometric and equivariant quantum machine learning. While symmetrization appears to be a promising method, its practical overhead, such as additional gates, reduced expressibility, and other factors, is not well understood in quantum machine learning. In this work, we develop an automated pipeline to measure various characteristics of quantum machine learning ansatzes with respect to symmetries that can appear in the learning task. We define the degree of symmetry in the learning problem as the size of the subgroup it admits. Subgroups define partial symmetries, which have not been extensively studied in previous research, which has focused on symmetries defined by whole groups. Symmetrizing the 19 common ansatzes with respect to these varying-sized subgroup representations, we compute three classes of metrics that describe how the common ansatz structures behave under varying amounts of symmetries. The first metric is based on the norm of the difference between the original and symmetrized generators, while the second metric counts depth, size, and other characteristics from the symmetrized circuits. The third class of metrics includes expressibility and entangling capability. The results demonstrate varying gate overhead across the studied ansatzes and confirm that increased symmetry reduces expressibility of the circuits. In most cases, increased symmetry increases entanglement capability. These results help select sufficiently expressible and computationally efficient ansatze patterns for geometric quantum machine learning applications.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge