"Text Classification": models, code, and papers

Sparsify-then-Classify: From Internal Neurons of Large Language Models To Efficient Text Classifiers

Nov 27, 2023Among the many tasks that Large Language Models (LLMs) have revolutionized is text classification. However, existing approaches for applying pretrained LLMs to text classification predominantly rely on using single token outputs from only the last layer of hidden states. As a result, they suffer from limitations in efficiency, task-specificity, and interpretability. In our work, we contribute an approach that uses all internal representations by employing multiple pooling strategies on all activation and hidden states. Our novel lightweight strategy, Sparsify-then-Classify (STC) first sparsifies task-specific features layer-by-layer, then aggregates across layers for text classification. STC can be applied as a seamless plug-and-play module on top of existing LLMs. Our experiments on a comprehensive set of models and datasets demonstrate that STC not only consistently improves the classification performance of pretrained and fine-tuned models, but is also more efficient for both training and inference, and is more intrinsically interpretable.

CrisisKAN: Knowledge-infused and Explainable Multimodal Attention Network for Crisis Event Classification

Jan 11, 2024Pervasive use of social media has become the emerging source for real-time information (like images, text, or both) to identify various events. Despite the rapid growth of image and text-based event classification, the state-of-the-art (SOTA) models find it challenging to bridge the semantic gap between features of image and text modalities due to inconsistent encoding. Also, the black-box nature of models fails to explain the model's outcomes for building trust in high-stakes situations such as disasters, pandemic. Additionally, the word limit imposed on social media posts can potentially introduce bias towards specific events. To address these issues, we proposed CrisisKAN, a novel Knowledge-infused and Explainable Multimodal Attention Network that entails images and texts in conjunction with external knowledge from Wikipedia to classify crisis events. To enrich the context-specific understanding of textual information, we integrated Wikipedia knowledge using proposed wiki extraction algorithm. Along with this, a guided cross-attention module is implemented to fill the semantic gap in integrating visual and textual data. In order to ensure reliability, we employ a model-specific approach called Gradient-weighted Class Activation Mapping (Grad-CAM) that provides a robust explanation of the predictions of the proposed model. The comprehensive experiments conducted on the CrisisMMD dataset yield in-depth analysis across various crisis-specific tasks and settings. As a result, CrisisKAN outperforms existing SOTA methodologies and provides a novel view in the domain of explainable multimodal event classification.

Reliability Analysis of Psychological Concept Extraction and Classification in User-penned Text

Jan 12, 2024The social NLP research community witness a recent surge in the computational advancements of mental health analysis to build responsible AI models for a complex interplay between language use and self-perception. Such responsible AI models aid in quantifying the psychological concepts from user-penned texts on social media. On thinking beyond the low-level (classification) task, we advance the existing binary classification dataset, towards a higher-level task of reliability analysis through the lens of explanations, posing it as one of the safety measures. We annotate the LoST dataset to capture nuanced textual cues that suggest the presence of low self-esteem in the posts of Reddit users. We further state that the NLP models developed for determining the presence of low self-esteem, focus more on three types of textual cues: (i) Trigger: words that triggers mental disturbance, (ii) LoST indicators: text indicators emphasizing low self-esteem, and (iii) Consequences: words describing the consequences of mental disturbance. We implement existing classifiers to examine the attention mechanism in pre-trained language models (PLMs) for a domain-specific psychology-grounded task. Our findings suggest the need of shifting the focus of PLMs from Trigger and Consequences to a more comprehensive explanation, emphasizing LoST indicators while determining low self-esteem in Reddit posts.

Object Recognition from Scientific Document based on Compartment Refinement Framework

Dec 15, 2023With the rapid development of the internet in the past decade, it has become increasingly important to extract valuable information from vast resources efficiently, which is crucial for establishing a comprehensive digital ecosystem, particularly in the context of research surveys and comprehension. The foundation of these tasks focuses on accurate extraction and deep mining of data from scientific documents, which are essential for building a robust data infrastructure. However, parsing raw data or extracting data from complex scientific documents have been ongoing challenges. Current data extraction methods for scientific documents typically use rule-based (RB) or machine learning (ML) approaches. However, using rule-based methods can incur high coding costs for articles with intricate typesetting. Conversely, relying solely on machine learning methods necessitates annotation work for complex content types within the scientific document, which can be costly. Additionally, few studies have thoroughly defined and explored the hierarchical layout within scientific documents. The lack of a comprehensive definition of the internal structure and elements of the documents indirectly impacts the accuracy of text classification and object recognition tasks. From the perspective of analyzing the standard layout and typesetting used in the specified publication, we propose a new document layout analysis framework called CTBR(Compartment & Text Blocks Refinement). Firstly, we define scientific documents into hierarchical divisions: base domain, compartment, and text blocks. Next, we conduct an in-depth exploration and classification of the meanings of text blocks. Finally, we utilize the results of text block classification to implement object recognition within scientific documents based on rule-based compartment segmentation.

RankAug: Augmented data ranking for text classification

Nov 08, 2023

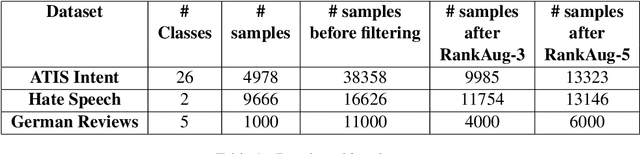

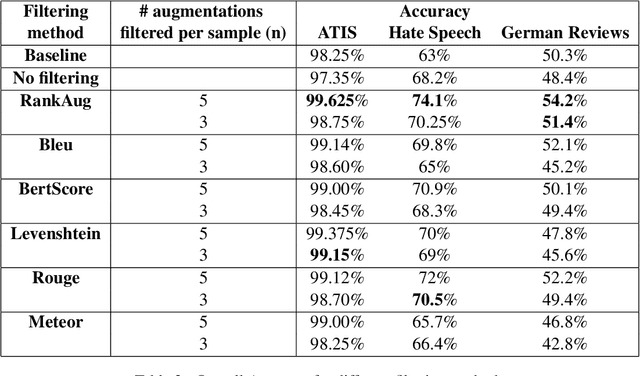

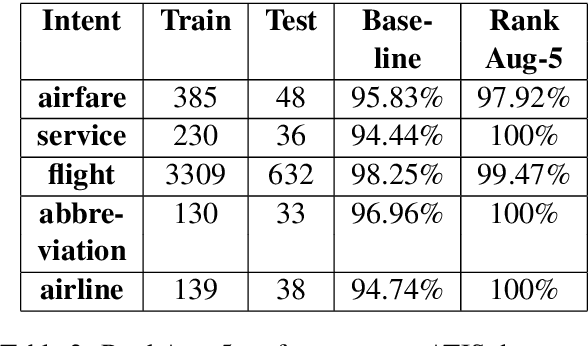

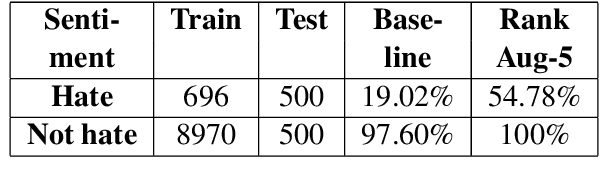

Research on data generation and augmentation has been focused majorly on enhancing generation models, leaving a notable gap in the exploration and refinement of methods for evaluating synthetic data. There are several text similarity metrics within the context of generated data filtering which can impact the performance of specific Natural Language Understanding (NLU) tasks, specifically focusing on intent and sentiment classification. In this study, we propose RankAug, a text-ranking approach that detects and filters out the top augmented texts in terms of being most similar in meaning with lexical and syntactical diversity. Through experiments conducted on multiple datasets, we demonstrate that the judicious selection of filtering techniques can yield a substantial improvement of up to 35% in classification accuracy for under-represented classes.

InternVL: Scaling up Vision Foundation Models and Aligning for Generic Visual-Linguistic Tasks

Jan 15, 2024The exponential growth of large language models (LLMs) has opened up numerous possibilities for multimodal AGI systems. However, the progress in vision and vision-language foundation models, which are also critical elements of multi-modal AGI, has not kept pace with LLMs. In this work, we design a large-scale vision-language foundation model (InternVL), which scales up the vision foundation model to 6 billion parameters and progressively aligns it with the LLM, using web-scale image-text data from various sources. This model can be broadly applied to and achieve state-of-the-art performance on 32 generic visual-linguistic benchmarks including visual perception tasks such as image-level or pixel-level recognition, vision-language tasks such as zero-shot image/video classification, zero-shot image/video-text retrieval, and link with LLMs to create multi-modal dialogue systems. It has powerful visual capabilities and can be a good alternative to the ViT-22B. We hope that our research could contribute to the development of multi-modal large models. Code and models are available at https://github.com/OpenGVLab/InternVL.

Synthetic Data Generation with Large Language Models for Text Classification: Potential and Limitations

Oct 13, 2023

The collection and curation of high-quality training data is crucial for developing text classification models with superior performance, but it is often associated with significant costs and time investment. Researchers have recently explored using large language models (LLMs) to generate synthetic datasets as an alternative approach. However, the effectiveness of the LLM-generated synthetic data in supporting model training is inconsistent across different classification tasks. To better understand factors that moderate the effectiveness of the LLM-generated synthetic data, in this study, we look into how the performance of models trained on these synthetic data may vary with the subjectivity of classification. Our results indicate that subjectivity, at both the task level and instance level, is negatively associated with the performance of the model trained on synthetic data. We conclude by discussing the implications of our work on the potential and limitations of leveraging LLM for synthetic data generation.

REDUCR: Robust Data Downsampling Using Class Priority Reweighting

Dec 01, 2023Modern machine learning models are becoming increasingly expensive to train for real-world image and text classification tasks, where massive web-scale data is collected in a streaming fashion. To reduce the training cost, online batch selection techniques have been developed to choose the most informative datapoints. However, these techniques can suffer from poor worst-class generalization performance due to class imbalance and distributional shifts. This work introduces REDUCR, a robust and efficient data downsampling method that uses class priority reweighting. REDUCR reduces the training data while preserving worst-class generalization performance. REDUCR assigns priority weights to datapoints in a class-aware manner using an online learning algorithm. We demonstrate the data efficiency and robust performance of REDUCR on vision and text classification tasks. On web-scraped datasets with imbalanced class distributions, REDUCR significantly improves worst-class test accuracy (and average accuracy), surpassing state-of-the-art methods by around 15%.

Self-supervised speech representation and contextual text embedding for match-mismatch classification with EEG recording

Jan 10, 2024Relating speech to EEG holds considerable importance but challenging. In this study, deep convolutional network was employed to extract spatiotemporal features from EEG data. Self-supervised speech representation and contextual text embedding were used as speech features. Contrastive learning was used to related EEG features to speech features. The experimental results demonstrate the benefits of using self-supervised speech representation and contextual text embedding. Through feature fusion and model ensemble, an accuracy of 60.29% was achieved, and the performance was ranked as No.2 in Task1 of the Auditory EEG Challenge (ICASSP 2024).

JointMatch: A Unified Approach for Diverse and Collaborative Pseudo-Labeling to Semi-Supervised Text Classification

Oct 23, 2023

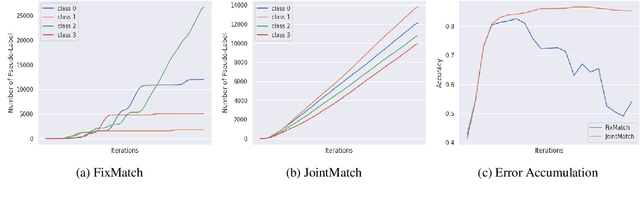

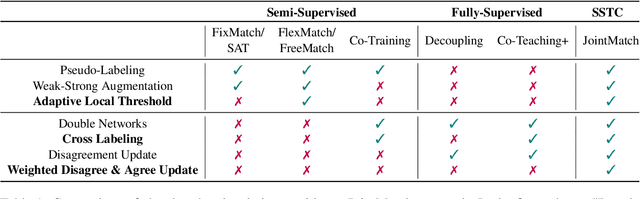

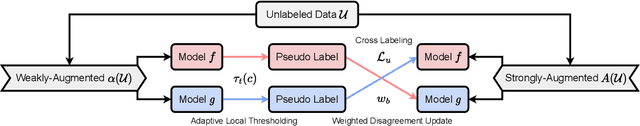

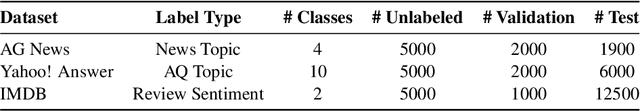

Semi-supervised text classification (SSTC) has gained increasing attention due to its ability to leverage unlabeled data. However, existing approaches based on pseudo-labeling suffer from the issues of pseudo-label bias and error accumulation. In this paper, we propose JointMatch, a holistic approach for SSTC that addresses these challenges by unifying ideas from recent semi-supervised learning and the task of learning with noise. JointMatch adaptively adjusts classwise thresholds based on the learning status of different classes to mitigate model bias towards current easy classes. Additionally, JointMatch alleviates error accumulation by utilizing two differently initialized networks to teach each other in a cross-labeling manner. To maintain divergence between the two networks for mutual learning, we introduce a strategy that weighs more disagreement data while also allowing the utilization of high-quality agreement data for training. Experimental results on benchmark datasets demonstrate the superior performance of JointMatch, achieving a significant 5.13% improvement on average. Notably, JointMatch delivers impressive results even in the extremely-scarce-label setting, obtaining 86% accuracy on AG News with only 5 labels per class. We make our code available at https://github.com/HenryPengZou/JointMatch.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge