autonomous cars

Autonomous cars are self-driving vehicles that use artificial intelligence (AI) and sensors to navigate and operate without human intervention, using high-resolution cameras and lidars that detect what happens in the car's immediate surroundings. They have the potential to revolutionize transportation by improving safety, efficiency, and accessibility.

Papers and Code

Analysis of the Motion Sickness and the Lack of Comfort in Car Passengers

Jan 29, 2025

Advanced driving assistance systems (ADAS) are primarily designed to increase driving safety and reduce traffic congestion, with little attention paid to passenger comfort or motion sickness. However, with autonomous cars in view, and given that lack of comfort and motion sickness are more pronounced in passengers, analysis from a comfort perspective is essential in future car research. The aim of this work is to study in detail how passenger comfort evaluation parameters vary depending on the driving style, car or road. The database used has been developed by compiling the accelerations experienced by passengers when three drivers cruise two different vehicles on different types of routes. In order to evaluate both comfort and motion sickness, first, the numerical values of the main comfort evaluation variables reported in the literature have been analyzed. Moreover, a complementary statistical analysis of probability density and a power spectral analysis are performed. Finally, quantitative results are compared with passenger qualitative feedback. The results show that the values of the comfort evaluation variables depend strongly on the road type. In addition, it has been demonstrated that the driving style and vehicle dynamics amplify or attenuate those values, and that contributions from longitudinal and lateral accelerations have a much greater effect on the lack of comfort than vertical ones. Finally, based on the concrete results obtained, a new experimental campaign is proposed.
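To make the idea of a comfort evaluation variable concrete, here is a minimal sketch that computes the root-mean-square (RMS) acceleration per axis from recorded samples. RMS acceleration is one widely used comfort indicator, but that the paper uses it is an assumption, as are the function names and the axis labels. Standards-based evaluations typically apply frequency weighting first, which this sketch omits.

```python
import math

def rms(samples):
    """Root-mean-square of a list of acceleration samples (m/s^2)."""
    return math.sqrt(sum(a * a for a in samples) / len(samples))

def comfort_summary(ax, ay, az):
    """Per-axis RMS plus a combined value (hypothetical helper).

    ax, ay, az: longitudinal, lateral and vertical acceleration samples.
    No frequency weighting is applied; this is a simplification.
    """
    per_axis = {
        "longitudinal": rms(ax),
        "lateral": rms(ay),
        "vertical": rms(az),
    }
    # Combine the axes as the root of the summed squares.
    combined = math.sqrt(sum(v * v for v in per_axis.values()))
    return per_axis, combined
```

Comparing the per-axis values across routes or drivers gives a rough view of which direction of motion dominates.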

Virtual Roads, Smarter Safety: A Digital Twin Framework for Mixed Autonomous Traffic Safety Analysis

Apr 24, 2025

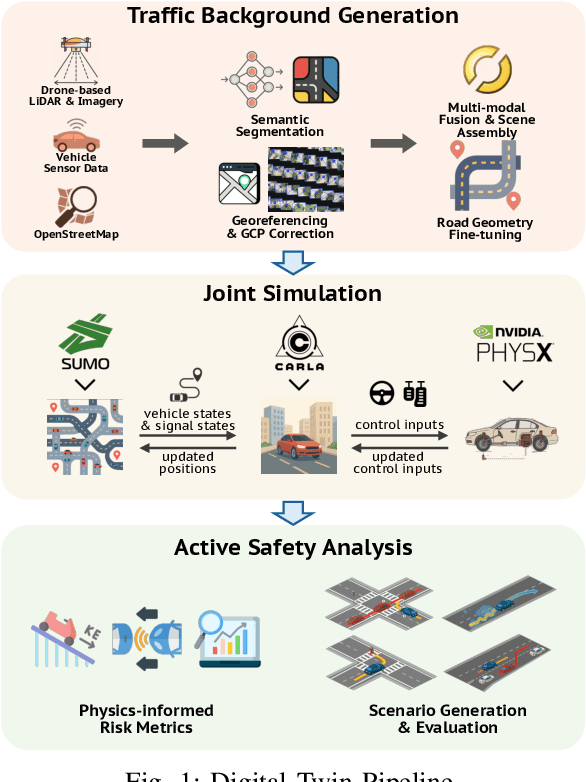

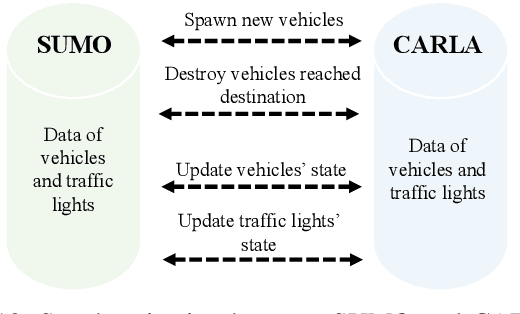

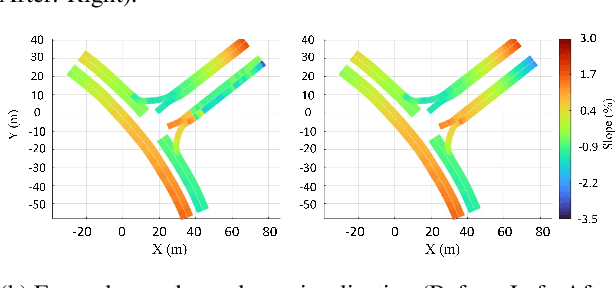

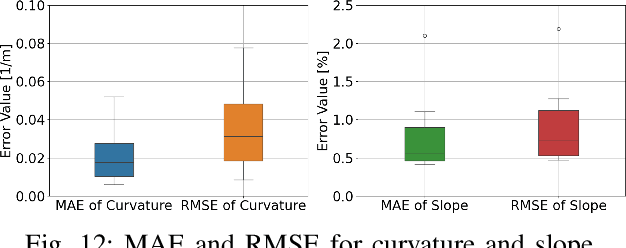

This paper presents a digital-twin platform for active safety analysis in mixed traffic environments. The platform is built using a multi-modal data-enabled traffic environment constructed from drone-based aerial LiDAR, OpenStreetMap, and vehicle sensor data (e.g., GPS and inclinometer readings). High-resolution 3D road geometries are generated through AI-powered semantic segmentation and georeferencing of aerial LiDAR data. To simulate real-world driving scenarios, the platform integrates the CAR Learning to Act (CARLA) simulator, Simulation of Urban MObility (SUMO) traffic model, and NVIDIA PhysX vehicle dynamics engine. CARLA provides detailed micro-level sensor and perception data, while SUMO manages macro-level traffic flow. NVIDIA PhysX enables accurate modeling of vehicle behaviors under diverse conditions, accounting for mass distribution, tire friction, and center of mass. This integrated system supports high-fidelity simulations that capture the complex interactions between autonomous and conventional vehicles. Experimental results demonstrate the platform's ability to reproduce realistic vehicle dynamics and traffic scenarios, enhancing the analysis of active safety measures. Overall, the proposed framework advances traffic safety research by enabling in-depth, physics-informed evaluation of vehicle behavior in dynamic and heterogeneous traffic environments.

Clip4Retrofit: Enabling Real-Time Image Labeling on Edge Devices via Cross-Architecture CLIP Distillation

May 23, 2025

Foundation models like CLIP (Contrastive Language-Image Pretraining) have revolutionized vision-language tasks by enabling zero-shot and few-shot learning through cross-modal alignment. However, their computational complexity and large memory footprint make them unsuitable for deployment on resource-constrained edge devices, such as in-car cameras used for image collection and real-time processing. To address this challenge, we propose Clip4Retrofit, an efficient model distillation framework that enables real-time image labeling on edge devices. The framework is deployed on the Retrofit camera, a cost-effective edge device retrofitted into thousands of vehicles, despite strict limitations on compute performance and memory. Our approach distills the knowledge of the CLIP model into a lightweight student model, combining EfficientNet-B3 with multi-layer perceptron (MLP) projection heads to preserve cross-modal alignment while significantly reducing computational requirements. We demonstrate that our distilled model achieves a balance between efficiency and performance, making it ideal for deployment in real-world scenarios. Experimental results show that Clip4Retrofit can perform real-time image labeling and object identification on edge devices with limited resources, offering a practical solution for applications such as autonomous driving and retrofitting existing systems. This work bridges the gap between state-of-the-art vision-language models and their deployment in resource-constrained environments, paving the way for broader adoption of foundation models in edge computing.
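One common way to distill an embedding model is to penalize the angle between student and teacher embeddings of the same image. The sketch below shows that idea with a cosine-distance loss on plain Python lists. The abstract does not state which loss Clip4Retrofit uses, so the loss choice and all names here are assumptions for illustration only.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def cosine_distill_loss(student_batch, teacher_batch):
    """Mean (1 - cosine similarity) over paired embeddings.

    student_batch / teacher_batch: lists of equal-length vectors, one
    pair per image. The loss is 0 when every student embedding points
    in the same direction as its teacher embedding.
    """
    total = 0.0
    for s, t in zip(student_batch, teacher_batch):
        s, t = l2_normalize(s), l2_normalize(t)
        total += 1.0 - sum(a * b for a, b in zip(s, t))
    return total / len(student_batch)
```

In a real training loop the same quantity would be computed on tensors and backpropagated through the student only, with the teacher frozen.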

On learning racing policies with reinforcement learning

Apr 03, 2025

Fully autonomous vehicles promise enhanced safety and efficiency. However, ensuring reliable operation in challenging corner cases requires control algorithms capable of performing at the vehicle limits. We address this requirement by considering the task of autonomous racing and propose solving it by learning a racing policy using Reinforcement Learning (RL). Our approach leverages domain randomization, actuator dynamics modeling, and policy architecture design to enable reliable and safe zero-shot deployment on a real platform. Evaluated on the F1TENTH race car, our RL policy not only surpasses a state-of-the-art Model Predictive Control (MPC), but, to the best of our knowledge, also represents the first instance of an RL policy outperforming expert human drivers in RC racing. This work identifies the key factors driving this performance improvement, providing critical insights for the design of robust RL-based control strategies for autonomous vehicles.

Deployable and Generalizable Motion Prediction: Taxonomy, Open Challenges and Future Directions

May 14, 2025

Motion prediction, the anticipation of future agent states or scene evolution, is rooted in human cognition, bridging perception and decision-making. It enables intelligent systems, such as robots and self-driving cars, to act safely in dynamic, human-involved environments, and informs broader time-series reasoning challenges. With advances in methods, representations, and datasets, the field has seen rapid progress, reflected in quickly evolving benchmark results. Yet, when state-of-the-art methods are deployed in the real world, they often struggle to generalize to open-world conditions and fall short of deployment standards. This reveals a gap between research benchmarks, which are often idealized or ill-posed, and real-world complexity. To address this gap, this survey revisits the generalization and deployability of motion prediction models, with an emphasis on the applications of robotics, autonomous driving, and human motion. We first offer a comprehensive taxonomy of motion prediction methods, covering representations, modeling strategies, application domains, and evaluation protocols. We then study two key challenges: (1) how to make motion prediction models meet realistic deployment standards, where motion prediction does not act in a vacuum but functions as one module of a closed-loop autonomy stack, taking input from localization and perception and informing downstream planning and control; and (2) how to generalize motion prediction models from limited seen scenarios and datasets to open-world settings. Throughout the paper, we highlight critical open challenges to guide future work, aiming to recalibrate the community's efforts and foster progress that is not only measurable but also meaningful for real-world applications.

Robusto-1 Dataset: Comparing Humans and VLMs on real out-of-distribution Autonomous Driving VQA from Peru

Mar 10, 2025

As multimodal foundational models start being deployed experimentally in self-driving cars, a reasonable question to ask is how similarly to humans these systems respond in certain driving situations, especially those that are out-of-distribution. To study this, we create the Robusto-1 dataset that uses dashcam video data from Peru, a country with some of the worst (most aggressive) drivers in the world, a high traffic index, and a high ratio of bizarre to non-bizarre street objects likely never seen in training. In particular, to preliminarily test at a cognitive level how well Foundational Visual Language Models (VLMs) compare to humans in driving, we move away from bounding boxes, segmentation maps, occupancy maps or trajectory estimation to multi-modal Visual Question Answering (VQA), comparing both humans and machines through a popular method in systems neuroscience known as Representational Similarity Analysis (RSA). Depending on the type of questions we ask and the answers these systems give, we show in which cases VLMs and humans converge or diverge, allowing us to probe their cognitive alignment. We find that the degree of alignment varies significantly depending on the type of questions asked to each type of system (humans vs. VLMs), highlighting a gap in their alignment.
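As a rough illustration of how Representational Similarity Analysis compares two systems, the sketch below builds a dissimilarity matrix over stimuli for each system and then correlates the two matrices. It assumes each answer has already been encoded as a numeric vector. The encoding, the Euclidean distance, and the Pearson correlation are all assumptions here (RSA studies often use correlation distance and rank correlation instead), and the function names are hypothetical.

```python
import math
from itertools import combinations

def rdm_upper(responses):
    """Upper triangle of the representational dissimilarity matrix.

    responses: one vector per stimulus (e.g. per video question).
    Returns pairwise Euclidean distances in a fixed pair order.
    """
    pairs = combinations(range(len(responses)), 2)
    return [math.dist(responses[i], responses[j]) for i, j in pairs]

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def rsa_score(responses_a, responses_b):
    """Similarity of two systems' representational geometry."""
    return pearson(rdm_upper(responses_a), rdm_upper(responses_b))
```

A score near 1 means both systems treat the same pairs of stimuli as similar or different, even if their raw answers live in different spaces.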

Bandwidth Allocation for Cloud-Augmented Autonomous Driving

Mar 26, 2025

Autonomous vehicle (AV) control systems increasingly rely on ML models for tasks such as perception and planning. Current practice is to run these models on the car's local hardware due to real-time latency constraints and reliability concerns, which limits model size and thus accuracy. Prior work has observed that we could augment current systems by running larger models in the cloud, relying on faster cloud runtimes to offset the cellular network latency. However, prior work does not account for an important practical constraint: limited cellular bandwidth. We show that, for typical bandwidth levels, proposed techniques for cloud-augmented AV models take too long to transfer data, thus mostly falling back to the on-car models and resulting in no accuracy improvement. In this work, we show that realizing cloud-augmented AV models requires intelligent use of this scarce bandwidth, i.e. carefully allocating bandwidth across tasks and providing multiple data compression and model options. We formulate this as a resource allocation problem to maximize car utility, and present a system that increases average model accuracy by up to 15 percentage points on driving scenarios from the Waymo Open Dataset.
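The resource allocation framing can be sketched as a small multiple-choice selection problem: each task picks one option (an on-car fallback costing no bandwidth, or a cloud option with some bandwidth cost), and the total cost must fit the budget. The brute-force search, the task names, and the numbers below are illustrative assumptions, not the paper's formulation or solver.

```python
from itertools import product

def allocate(tasks, budget):
    """Pick one option per task to maximize total utility within budget.

    tasks: {task_name: [(bandwidth_cost, utility), ...]}
    budget: total bandwidth available (same unit as the costs).
    Exhaustive search; only suitable for a handful of tasks and options.
    """
    names = list(tasks)
    best_choice, best_utility = None, float("-inf")
    for combo in product(*(tasks[n] for n in names)):
        used = sum(cost for cost, _ in combo)
        utility = sum(u for _, u in combo)
        if used <= budget and utility > best_utility:
            best_choice, best_utility = dict(zip(names, combo)), utility
    return best_choice, best_utility

# Hypothetical options: (bandwidth in Mbps, accuracy in percent).
# The zero-cost entry stands for the on-car fallback model.
example_tasks = {
    "detection": [(0, 70), (4, 80), (8, 85)],
    "planning": [(0, 60), (3, 72), (6, 75)],
}
choice, utility = allocate(example_tasks, budget=8)
print(choice, utility)
```

With a budget of 8, giving all bandwidth to one task is worse than splitting it across both, which is the kind of trade-off the allocation has to resolve.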

Enhancing Autonomous Driving Safety with Collision Scenario Integration

Mar 05, 2025

Autonomous vehicle safety is crucial for the successful deployment of self-driving cars. However, most existing planning methods rely heavily on imitation learning, which limits their ability to leverage collision data effectively. Moreover, collecting collision or near-collision data is inherently challenging, as it involves risks and raises ethical and practical concerns. In this paper, we propose SafeFusion, a training framework to learn from collision data. Instead of over-relying on imitation learning, SafeFusion integrates safety-oriented metrics during training to enable collision avoidance learning. In addition, to address the scarcity of collision data, we propose CollisionGen, a scalable data generation pipeline to generate diverse, high-quality scenarios using natural language prompts, generative models, and rule-based filtering. Experimental results show that our approach improves planning performance in collision-prone scenarios by 56% over previous state-of-the-art planners while maintaining effectiveness in regular driving situations. Our work provides a scalable and effective solution for advancing the safety of autonomous driving systems.

AUTHENTICATION: Identifying Rare Failure Modes in Autonomous Vehicle Perception Systems using Adversarially Guided Diffusion Models

Apr 24, 2025

Autonomous Vehicles (AVs) rely on artificial intelligence (AI) to accurately detect objects and interpret their surroundings. However, even when trained using millions of miles of real-world data, AVs are often unable to detect rare failure modes (RFMs). The problem of RFMs is commonly referred to as the "long-tail challenge", due to the distribution of data including many instances that are very rarely seen. In this paper, we present a novel approach that utilizes advanced generative and explainable AI techniques to aid in understanding RFMs. Our methods can be used to enhance the robustness and reliability of AVs when combined with both downstream model training and testing. We extract segmentation masks for objects of interest (e.g., cars) and invert them to create environmental masks. These masks, combined with carefully crafted text prompts, are fed into a custom diffusion model. We leverage the Stable Diffusion inpainting model guided by adversarial noise optimization to generate images containing diverse environments designed to evade object detection models and expose vulnerabilities in AI systems. Finally, we produce natural language descriptions of the generated RFMs that can guide developers and policymakers to improve the safety and reliability of AV systems.
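The mask inversion step can be pictured with a tiny binary example. This sketch assumes masks are 2D grids of 0 and 1, where 1 in the object mask marks object pixels, and where 1 in the resulting environmental mask marks the region an inpainting model would regenerate. Both conventions and the function name are assumptions, not details taken from the paper.

```python
def invert_mask(object_mask):
    """Turn an object mask into an environmental mask.

    object_mask: list of rows, each a list of 0/1 values
    (1 = pixel belongs to the object of interest).
    Returns a new grid where 1 marks everything except the object.
    """
    return [[1 - pixel for pixel in row] for row in object_mask]

# A 3x4 toy mask with a 1x2 "car" in the middle row.
car_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
environment_mask = invert_mask(car_mask)
```

Under these assumptions, inpainting over the environmental mask would leave the car pixels untouched and change only its surroundings.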

ViVa-SAFELAND: a New Freeware for Safe Validation of Vision-based Navigation in Aerial Vehicles

Mar 18, 2025

ViVa-SAFELAND is an open source software library aimed at testing and evaluating vision-based navigation strategies for aerial vehicles, with special interest in autonomous landing, while complying with legal regulations and people's safety. It consists of a collection of high definition aerial videos, focusing on real unstructured urban scenarios, recording moving obstacles of interest, such as cars and people. Then, an Emulated Aerial Vehicle (EAV) with a virtual moving camera is implemented in order to "navigate" inside the video, according to high-order commands. ViVa-SAFELAND provides a new, safe, simple and fair comparison baseline to evaluate and compare different visual navigation solutions under the same conditions, and to randomize variables along several trials. It also facilitates the development of autonomous landing and navigation strategies, as well as the generation of image datasets for different training tasks. Moreover, it is useful for training either human or autonomous pilots using deep learning. The effectiveness of the framework for validating vision algorithms is demonstrated through two case studies: detection of moving objects and risk assessment segmentation. To our knowledge, this is the first safe validation framework of its kind to test and compare visual navigation solutions for aerial vehicles, which is a crucial aspect for urban deployment in complex real scenarios.
