Image To Image Translation

Image-to-image translation is the process of converting an image from one domain to another using deep learning techniques.

Papers and Code

Deep Feedback Models

Sep 19, 2025

Deep Feedback Models (DFMs) are a new class of stateful neural networks that combine bottom up input with high level representations over time. This feedback mechanism introduces dynamics into otherwise static architectures, enabling DFMs to iteratively refine their internal state and mimic aspects of biological decision making. We model this process as a differential equation solved through a recurrent neural network, stabilized via exponential decay to ensure convergence. To evaluate their effectiveness, we measure DFMs under two key conditions: robustness to noise and generalization with limited data. In both object recognition and segmentation tasks, DFMs consistently outperform their feedforward counterparts, particularly in low data or high noise regimes. In addition, DFMs translate to medical imaging settings, while being robust against various types of noise corruption. These findings highlight the importance of feedback in achieving stable, robust, and generalizable learning. Code is available at https://github.com/DCalhas/deep_feedback_models.
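The abstract describes a state that is refined over time by a differential equation with exponential decay, unrolled as a recurrent network. A minimal scalar sketch of that idea follows. It is not the authors' implementation: the equation dh/dt = -decay * h + tanh(w_in * x + w_fb * h), the explicit Euler solver, and every name and parameter value are assumptions chosen for illustration.

```python
import math


def feedback_step(h, x, w_in=1.0, w_fb=0.5, decay=1.0, dt=0.1):
    """One explicit Euler step of dh/dt = -decay * h + tanh(w_in * x + w_fb * h).

    All weights are hypothetical scalars; a real model would use learned tensors.
    """
    # Bottom-up input x is mixed with the fed-back state h.
    drive = math.tanh(w_in * x + w_fb * h)
    # The -decay * h term pulls the state back, which keeps the dynamics stable.
    return h + dt * (-decay * h + drive)


def run_feedback(x, steps=300, h0=0.0, **kwargs):
    """Unroll the recurrence and return every intermediate state."""
    states = [h0]
    for _ in range(steps):
        states.append(feedback_step(states[-1], x, **kwargs))
    return states


trajectory = run_feedback(x=0.8)
final_state = trajectory[-1]
# At convergence the state satisfies h = tanh(w_in * x + w_fb * h) when decay = 1.
residual = abs(final_state - math.tanh(0.8 + 0.5 * final_state))
```

With these assumed values the feedback gain (0.5) is smaller than the decay rate (1.0), so each Euler step is a contraction and the state settles to a fixed point regardless of `h0`.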

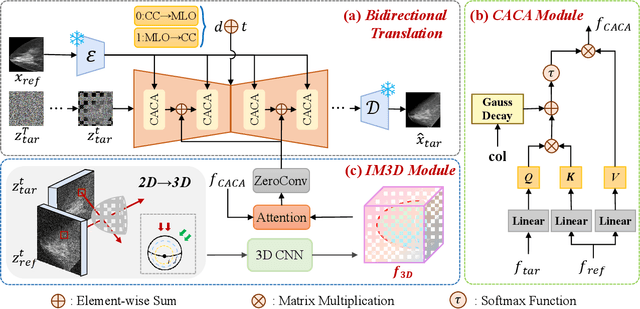

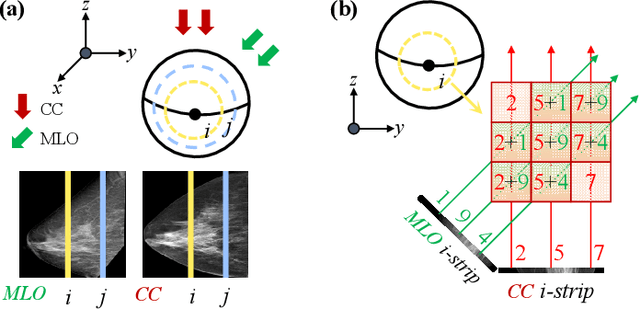

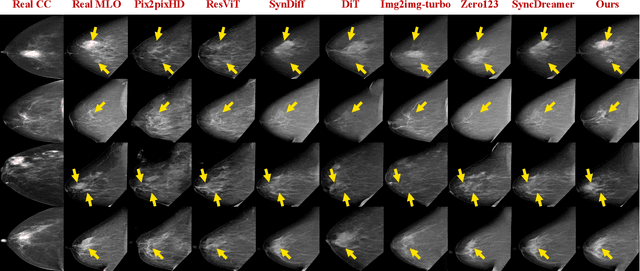

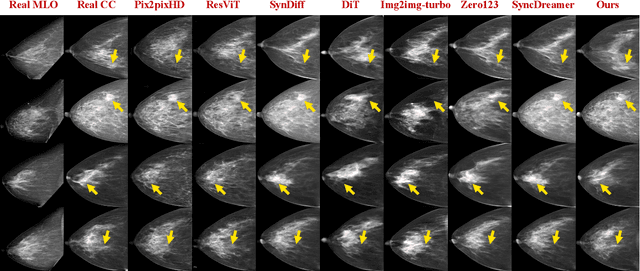

Bidirectional Mammogram View Translation with Column-Aware and Implicit 3D Conditional Diffusion

Oct 06, 2025

Dual-view mammography, including craniocaudal (CC) and mediolateral oblique (MLO) projections, offers complementary anatomical views crucial for breast cancer diagnosis. However, in real-world clinical workflows, one view may be missing, corrupted, or degraded due to acquisition errors or compression artifacts, limiting the effectiveness of downstream analysis. View-to-view translation can help recover missing views and improve lesion alignment. Unlike natural images, this task in mammography is highly challenging due to large non-rigid deformations and severe tissue overlap in X-ray projections, which obscure pixel-level correspondences. In this paper, we propose Column-Aware and Implicit 3D Diffusion (CA3D-Diff), a novel bidirectional mammogram view translation framework based on a conditional diffusion model. To address cross-view structural misalignment, we first design a column-aware cross-attention mechanism that leverages the geometric property that anatomically corresponding regions tend to lie in similar column positions across views. A Gaussian-decayed bias is applied to emphasize local column-wise correlations while suppressing distant mismatches. Furthermore, we introduce an implicit 3D structure reconstruction module that back-projects noisy 2D latents into a coarse 3D feature volume based on breast-view projection geometry. The reconstructed 3D structure is refined and injected into the denoising UNet to guide cross-view generation with enhanced anatomical awareness. Extensive experiments demonstrate that CA3D-Diff achieves superior performance in bidirectional tasks, outperforming state-of-the-art methods in visual fidelity and structural consistency. Furthermore, the synthesized views effectively improve single-view malignancy classification in screening settings, demonstrating the practical value of our method in real-world diagnostics.
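The Gaussian-decayed column bias mentioned above can be pictured as an additive term on the attention logits. The sketch below is one plausible reading, not the CA3D-Diff code: the bias form -(q - k)^2 / (2 * sigma^2), the `sigma` parameter, and all function names are assumptions.

```python
import math


def column_bias(query_cols, key_cols, sigma=2.0):
    """Log of an unnormalized Gaussian in column distance (assumed form).

    The bias is 0 when query and key share a column and grows more negative
    as their columns move apart.
    """
    return [[-((q - k) ** 2) / (2.0 * sigma ** 2) for k in key_cols]
            for q in query_cols]


def softmax(row):
    """Numerically stable softmax over one row of logits."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def biased_attention_weights(logits, query_cols, key_cols, sigma=2.0):
    """Add the column bias to raw attention logits, then normalize each row."""
    bias = column_bias(query_cols, key_cols, sigma)
    return [softmax([l + b for l, b in zip(logit_row, bias_row)])
            for logit_row, bias_row in zip(logits, bias)]


# Toy case: 6 columns and uninformative (all-zero) content logits, so the
# resulting weights reflect the column bias alone.
cols = list(range(6))
uniform_logits = [[0.0] * len(cols) for _ in cols]
weights = biased_attention_weights(uniform_logits, cols, cols, sigma=1.0)
```

In this toy case each query attends most strongly to the key in its own column, and the weight falls off with column distance. A smaller `sigma` makes the attention more local.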

Comparative Analysis of GAN and Diffusion for MRI-to-CT translation

Sep 26, 2025

Computed tomography (CT) is essential for treatment and diagnostics. When CT scans are missing or otherwise difficult to obtain, methods for generating synthetic CT (sCT) images from magnetic resonance imaging (MRI) are sought after. It is therefore valuable to establish a reference for which strategies are most effective for MRI-to-CT translation. In this paper, we compare the performance of two frequently used architectures for MRI-to-CT translation: a conditional generative adversarial network (cGAN) and a conditional denoising diffusion probabilistic model (cDDPM). We chose well-established implementations to represent each architecture: Pix2Pix for cGAN, and Palette for cDDPM. We separate the classical 3D translation problem into a sequence of 2D translations on the transverse plane, to investigate the viability of a strategy that reduces the computational cost. We also investigate the impact of conditioning the generative process on a single MRI slice versus multiple MRI slices. The performance is assessed using a thorough evaluation protocol, including a novel slice-wise metric, Similarity Of Slices (SIMOS), which measures the continuity between transverse slices when compiling the sCTs into 3D format. Our comparative analysis revealed that MRI-to-CT generative models benefit from multi-channel conditional input and from using cDDPM as the architecture.
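Conditioning each 2D translation on multiple MRI slices can be pictured as stacking a slice with its neighbors as input channels. The sketch below only illustrates that indexing. The symmetric window, the clamping at the volume edges, and the function names are assumptions, and the abstract does not say how the paper selects or orders the slices.

```python
def neighbor_indices(center, n_slices, radius):
    """Indices of the 2 * radius + 1 slices around `center`.

    Indices that would fall outside the volume are clamped to the first or
    last slice (an assumed edge policy).
    """
    return [min(max(center + offset, 0), n_slices - 1)
            for offset in range(-radius, radius + 1)]


def conditioning_stacks(volume, radius=1):
    """For every transverse slice, gather its neighbors as a list of channels.

    `volume` is any sequence of slices; radius=0 reproduces single-slice
    conditioning.
    """
    n_slices = len(volume)
    return [[volume[j] for j in neighbor_indices(i, n_slices, radius)]
            for i in range(n_slices)]


# Toy volume with labelled slices standing in for 2D arrays.
mri_volume = ["s0", "s1", "s2", "s3", "s4"]
stacks = conditioning_stacks(mri_volume, radius=1)
```

Each entry of `stacks` would be the multi-channel conditional input for translating one slice. The generated CT slices are then stacked back into a 3D volume in their original order.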

DistillMatch: Leveraging Knowledge Distillation from Vision Foundation Model for Multimodal Image Matching

Sep 19, 2025

Multimodal image matching seeks pixel-level correspondences between images of different modalities, crucial for cross-modal perception, fusion and analysis. However, the significant appearance differences between modalities make this task challenging. Due to the scarcity of high-quality annotated datasets, existing deep learning methods that extract modality-common features for matching perform poorly and lack adaptability to diverse scenarios. Vision Foundation Model (VFM), trained on large-scale data, yields generalizable and robust feature representations adapted to data and tasks of various modalities, including multimodal matching. Thus, we propose DistillMatch, a multimodal image matching method using knowledge distillation from VFM. DistillMatch employs knowledge distillation to build a lightweight student model that extracts high-level semantic features from VFM (including DINOv2 and DINOv3) to assist matching across modalities. To retain modality-specific information, it extracts and injects modality category information into the other modality's features, which enhances the model's understanding of cross-modal correlations. Furthermore, we design V2I-GAN to boost the model's generalization by translating visible to pseudo-infrared images for data augmentation. Experiments show that DistillMatch outperforms existing algorithms on public datasets.

Time-Correlated Video Bridge Matching

Oct 14, 2025

Diffusion models excel in noise-to-data generation tasks, providing a mapping from a Gaussian distribution to a more complex data distribution. However, they struggle to model translations between complex distributions, limiting their effectiveness in data-to-data tasks. While Bridge Matching (BM) models address this by finding the translation between data distributions, their application to time-correlated data sequences remains unexplored. This is a critical limitation for video generation and manipulation tasks, where maintaining temporal coherence is particularly important. To address this gap, we propose Time-Correlated Video Bridge Matching (TCVBM), a framework that extends BM to time-correlated data sequences in the video domain. TCVBM explicitly models inter-sequence dependencies within the diffusion bridge, directly incorporating temporal correlations into the sampling process. We compare our approach to classical methods based on bridge matching and diffusion models for three video-related tasks: frame interpolation, image-to-video generation, and video super-resolution. TCVBM achieves superior performance across multiple quantitative metrics, demonstrating enhanced generation quality and reconstruction fidelity.
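For context on the diffusion bridge, the sketch below samples an intermediate point of a standard Brownian bridge pinned at a source sample `x0` and a target sample `x1`. This is the generic, per-element bridge only. It does not reproduce TCVBM's time-correlated construction, and the function name and `sigma` parameter are assumptions.

```python
import math
import random


def brownian_bridge_sample(x0, x1, t, sigma=1.0, rng=random):
    """Draw x_t ~ N((1 - t) * x0 + t * x1, sigma^2 * t * (1 - t)) elementwise.

    x0 and x1 are equal-length sequences of floats (source and target
    samples); t lies in [0, 1]. Elements are treated as independent, so no
    correlation between frames is modelled here.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    # The variance vanishes at both endpoints, pinning the bridge to x0 and x1.
    std = sigma * math.sqrt(t * (1.0 - t))
    return [(1.0 - t) * a + t * b + std * rng.gauss(0.0, 1.0)
            for a, b in zip(x0, x1)]


source = [0.0, 2.0]
target = [1.0, 4.0]
midpoint = brownian_bridge_sample(source, target, t=0.5, rng=random.Random(0))
```

In a bridge matching setup, a network is typically trained on such intermediate samples to predict the drift toward the target. Passing a seeded `random.Random` instance as `rng` makes the draw reproducible.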

Continual Action Quality Assessment via Adaptive Manifold-Aligned Graph Regularization

Oct 08, 2025

Action Quality Assessment (AQA) quantifies human actions in videos, supporting applications in sports scoring, rehabilitation, and skill evaluation. A major challenge lies in the non-stationary nature of quality distributions in real-world scenarios, which limits the generalization ability of conventional methods. We introduce Continual AQA (CAQA), which equips AQA with Continual Learning (CL) capabilities to handle evolving distributions while mitigating catastrophic forgetting. Although parameter-efficient fine-tuning of pretrained models has shown promise in CL for image classification, we find it insufficient for CAQA. Our empirical and theoretical analyses reveal two insights: (i) Full-Parameter Fine-Tuning (FPFT) is necessary for effective representation learning; yet (ii) uncontrolled FPFT induces overfitting and feature manifold shift, thereby aggravating forgetting. To address this, we propose Adaptive Manifold-Aligned Graph Regularization (MAGR++), which couples backbone fine-tuning that stabilizes shallow layers while adapting deeper ones with a two-step feature rectification pipeline: a manifold projector to translate deviated historical features into the current representation space, and a graph regularizer to align local and global distributions. We construct four CAQA benchmarks from three datasets with tailored evaluation protocols and strong baselines, enabling systematic cross-dataset comparison. Extensive experiments show that MAGR++ achieves state-of-the-art performance, with average correlation gains of 3.6% offline and 12.2% online over the strongest baseline, confirming its robustness and effectiveness. Our code is available at https://github.com/ZhouKanglei/MAGRPP.

Multilingual Vision-Language Models, A Survey

Sep 26, 2025

This survey examines multilingual vision-language models that process text and images across languages. We review 31 models and 21 benchmarks, spanning encoder-only and generative architectures, and identify a key tension between language neutrality (consistent cross-lingual representations) and cultural awareness (adaptation to cultural contexts). Current training methods favor neutrality through contrastive learning, while cultural awareness depends on diverse data. Two-thirds of evaluation benchmarks use translation-based approaches prioritizing semantic consistency, though recent work incorporates culturally grounded content. We find discrepancies in cross-lingual capabilities and gaps between training objectives and evaluation goals.

Graph2Eval: Automatic Multimodal Task Generation for Agents via Knowledge Graphs

Oct 01, 2025

As multimodal LLM-driven agents continue to advance in autonomy and generalization, evaluation based on static datasets can no longer adequately assess their true capabilities in dynamic environments and diverse tasks. Existing LLM-based synthetic data methods are largely designed for LLM training and evaluation, and thus cannot be directly applied to agent tasks that require tool use and interactive capabilities. While recent studies have explored automatic agent task generation with LLMs, most efforts remain limited to text or image analysis, without systematically modeling multi-step interactions in web environments. To address these challenges, we propose Graph2Eval, a knowledge graph-based framework that automatically generates both multimodal document comprehension tasks and web interaction tasks, enabling comprehensive evaluation of agents' reasoning, collaboration, and interactive capabilities. In our approach, knowledge graphs constructed from multi-source external data serve as the task space, where we translate semantic relations into structured multimodal tasks using subgraph sampling, task templates, and meta-paths. A multi-stage filtering pipeline based on node reachability, LLM scoring, and similarity analysis is applied to guarantee the quality and executability of the generated tasks. Furthermore, Graph2Eval supports end-to-end evaluation of multiple agent types (Single-Agent, Multi-Agent, Web Agent) and measures reasoning, collaboration, and interaction capabilities. We instantiate the framework with Graph2Eval-Bench, a curated dataset of 1,319 tasks spanning document comprehension and web interaction scenarios. Experiments show that Graph2Eval efficiently generates tasks that differentiate agent and model performance, revealing gaps in reasoning, collaboration, and web interaction across different settings and offering a new perspective for agent evaluation.

GP3: A 3D Geometry-Aware Policy with Multi-View Images for Robotic Manipulation

Sep 19, 2025

Effective robotic manipulation relies on a precise understanding of 3D scene geometry, and one of the most straightforward ways to acquire such geometry is through multi-view observations. Motivated by this, we present GP3, a 3D geometry-aware robotic manipulation policy that leverages multi-view input. GP3 employs a spatial encoder to infer dense spatial features from RGB observations, which enable the estimation of depth and camera parameters, leading to a compact yet expressive 3D scene representation tailored for manipulation. This representation is fused with language instructions and translated into continuous actions via a lightweight policy head. Comprehensive experiments demonstrate that GP3 consistently outperforms state-of-the-art methods on simulated benchmarks. Furthermore, GP3 transfers effectively to real-world robots without depth sensors or pre-mapped environments, requiring only minimal fine-tuning. These results highlight GP3 as a practical, sensor-agnostic solution for geometry-aware robotic manipulation.

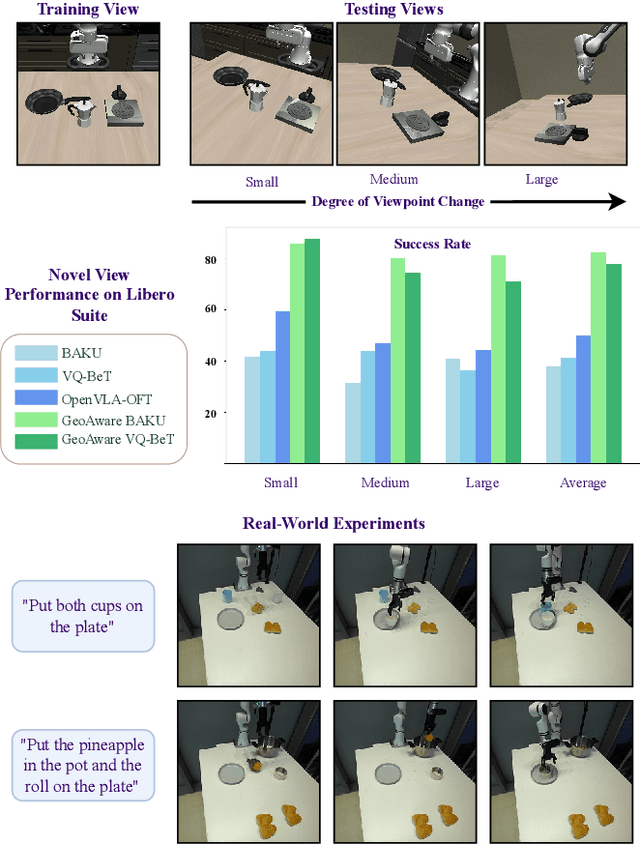

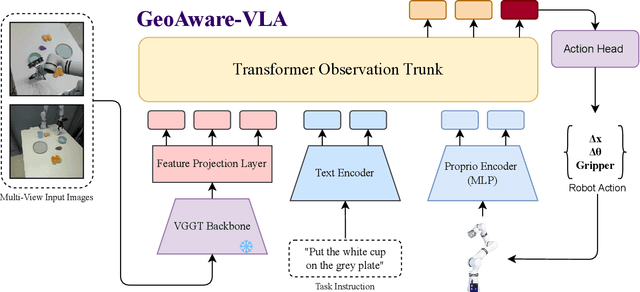

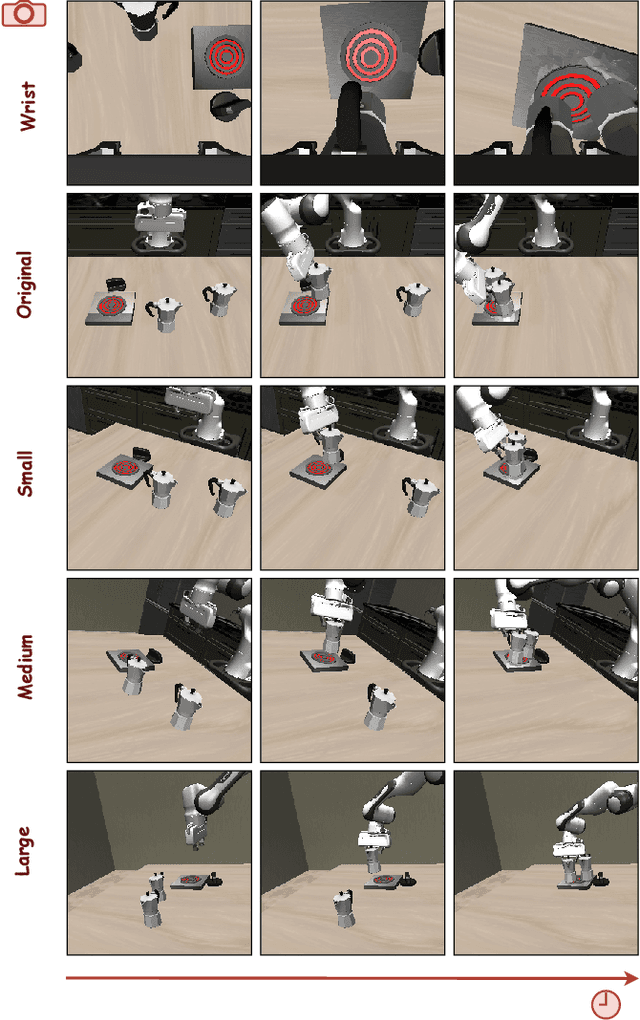

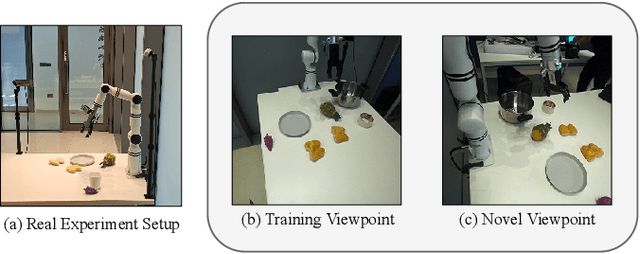

GeoAware-VLA: Implicit Geometry Aware Vision-Language-Action Model

Sep 17, 2025

Vision-Language-Action (VLA) models often fail to generalize to novel camera viewpoints, a limitation stemming from their difficulty in inferring robust 3D geometry from 2D images. We introduce GeoAware-VLA, a simple yet effective approach that enhances viewpoint invariance by integrating strong geometric priors into the vision backbone. Instead of training a visual encoder or relying on explicit 3D data, we leverage a frozen, pretrained geometric vision model as a feature extractor. A trainable projection layer then adapts these geometrically-rich features for the policy decoder, relieving it of the burden of learning 3D consistency from scratch. Through extensive evaluations on LIBERO benchmark subsets, we show GeoAware-VLA achieves substantial improvements in zero-shot generalization to novel camera poses, boosting success rates by over 2x in simulation. Crucially, these benefits translate to the physical world; our model shows a significant performance gain on a real robot, especially when evaluated from unseen camera angles. Our approach proves effective across both continuous and discrete action spaces, highlighting that robust geometric grounding is a key component for creating more generalizable robotic agents.
