Farshid Hajati

Smart Fault Detection in Nanosatellite Electrical Power System

Jan 01, 2026

Abstract: This paper presents a new method for detecting faults in the electrical power system of nanosatellites without an Attitude Determination and Control Subsystem (ADCS) in low Earth orbit (LEO). Each part of this system is at risk of fault due to pressure tolerance, launcher pressure, and environmental circumstances. Common faults are line-to-line faults and open circuits in the photovoltaic subsystem, short-circuit and open-circuit IGBT faults in the DC-to-DC converter, and regulator faults of the ground battery. The fault-free system is simulated with a neural network that takes solar radiation and the solar panel's surface temperature as inputs and produces current and load as outputs. Finally, a neural network classifier diagnoses the different faults by pattern and type. Other machine learning methods are also used for fault classification, such as PCA classification, decision tree, and KNN.

Early Prediction of Sepsis using Heart Rate Signals and Genetic Optimized LSTM Algorithm

Dec 30, 2025

Abstract: Sepsis, characterized by a dysregulated immune response to infection, results in significant mortality, morbidity, and healthcare costs. The timely prediction of sepsis progression is crucial for reducing adverse outcomes through early intervention. Despite the development of numerous models for Intensive Care Unit (ICU) patients, there remains a notable gap in approaches for the early detection of sepsis in non-ward settings. This research introduces and evaluates four novel machine learning algorithms designed for predicting the onset of sepsis on wearable devices by analyzing heart rate data. The architecture of these models was refined through a genetic algorithm, optimizing for performance, computational complexity, and memory requirements. Performance metrics were subsequently extracted for each model to evaluate their feasibility for implementation on wearable devices capable of accurate heart rate monitoring. The models were initially tailored for a prediction window of one hour, later extended to four hours through transfer learning. The encouraging outcomes of this study suggest the potential for wearable technology to facilitate early sepsis detection outside ICU and ward environments.

Medical Image Classification on Imbalanced Data Using ProGAN and SMA-Optimized ResNet: Application to COVID-19

Dec 30, 2025

Abstract: The challenge of imbalanced data is prominent in medical image classification. This challenge arises when there is a significant disparity in the number of images belonging to a particular class, such as the presence or absence of a specific disease, as compared to the number of images belonging to other classes. This issue is especially notable during pandemics, which may result in an even more significant imbalance in the dataset. Researchers have employed various approaches in recent years to detect COVID-19 infected individuals accurately and quickly, with artificial intelligence and machine learning algorithms at the forefront. However, the lack of sufficient and balanced data remains a significant obstacle to these methods. This study addresses the challenge by proposing a progressive generative adversarial network to generate synthetic data to supplement the real ones. The proposed method suggests a weighted approach to combine synthetic data with real ones before inputting it into a deep network classifier. A multi-objective meta-heuristic population-based optimization algorithm is employed to optimize the hyper-parameters of the classifier. The proposed model exhibits superior cross-validated metrics compared to existing methods when applied to a large and imbalanced chest X-ray image dataset of COVID-19. The proposed model achieves 95.5% and 98.5% accuracy for 4-class and 2-class imbalanced classification problems, respectively. The successful experimental outcomes demonstrate the effectiveness of the proposed model in classifying medical images using imbalanced data during pandemics.

Cryptocurrency Price Prediction Using Parallel Gated Recurrent Units

Dec 27, 2025

Abstract: With the advent of cryptocurrencies and Bitcoin, many investments and businesses are now conducted online through cryptocurrencies. Among them, Bitcoin uses blockchain technology to make transactions secure, transparent, traceable, and immutable. It also exhibits significant price fluctuations and performance, which have attracted substantial attention, especially in financial sectors. Consequently, a wide range of investors and individuals have turned to investing in the cryptocurrency market. One of the most important challenges in economics is price forecasting for future trades. Cryptocurrencies are no exception, and investors are looking for methods to predict prices; various theories and methods have been proposed in this field. This paper presents a new deep model, called Parallel Gated Recurrent Units (PGRU), for cryptocurrency price prediction. In this model, recurrent neural networks forecast prices in a parallel and independent way. The parallel networks utilize different inputs, each representing distinct price-related features. Finally, the outputs of the parallel networks are combined by a neural network to forecast the future price of cryptocurrencies. The experimental results indicate that the proposed model achieves mean absolute percentage errors (MAPE) of 3.243% and 2.641% for window lengths 20 and 15, respectively. Our method therefore attains higher accuracy and efficiency with fewer input data and lower computational cost compared to existing methods.
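
The MAPE figures quoted in the abstract above follow the standard definition of mean absolute percentage error. As a minimal sketch of that definition (the function name `mape` and the sample prices are hypothetical and are not taken from the paper):

```python
from typing import Sequence


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error, expressed in percent."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    # Average the relative errors, then scale to a percentage.
    total = sum(abs((a - p) / a) for a, p in zip(actual, predicted))
    return 100.0 * total / len(actual)


# Hypothetical prices, for illustration only (not data from the paper).
actual_prices = [100.0, 200.0, 400.0]
predicted_prices = [110.0, 190.0, 400.0]
example_mape = mape(actual_prices, predicted_prices)
```

Because each error is divided by the actual value, MAPE is scale-free, which makes it convenient for comparing forecasts across assets with very different price levels.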

Gold Price Prediction Using Long Short-Term Memory and Multi-Layer Perceptron with Gray Wolf Optimizer

Dec 27, 2025

Abstract: The global gold market, by its fundamentals, has long been home to many financial institutions, banks, governments, funds, and micro-investors. Due to the inherent complexity of, and relationships between, important economic and political components, accurate forecasting of financial markets has always been challenging. Therefore, providing a model that can accurately predict the future of the markets is very important and will be of great benefit to their developers. In this paper, an artificial intelligence-based algorithm for daily and monthly gold forecasting is presented. Two long short-term memory (LSTM) networks are responsible for daily and monthly forecasting; their results are fed into a multilayer perceptron (MLP) network, which provides the final forecast of the next day's prices. The algorithm forecasts the highest, lowest, and closing prices on the daily and monthly time frames. Based on these forecasts, a trading strategy for live market trading was developed, according to which the proposed model had a return of 171% in three months. Also, the number of internal neurons in each network is optimized by the Gray Wolf optimization (GWO) algorithm to minimize the RMSE. The dataset was collected between 2010 and 2021 and includes data on macroeconomics, energy markets, stocks, and the currency status of developed countries. Our proposed LSTM-MLP model predicted the daily closing price of gold with a mean absolute error (MAE) of $0.21 and the next month's price with $22.23.

Interpretable graph-based models on multimodal biomedical data integration: A technical review and benchmarking

May 03, 2025

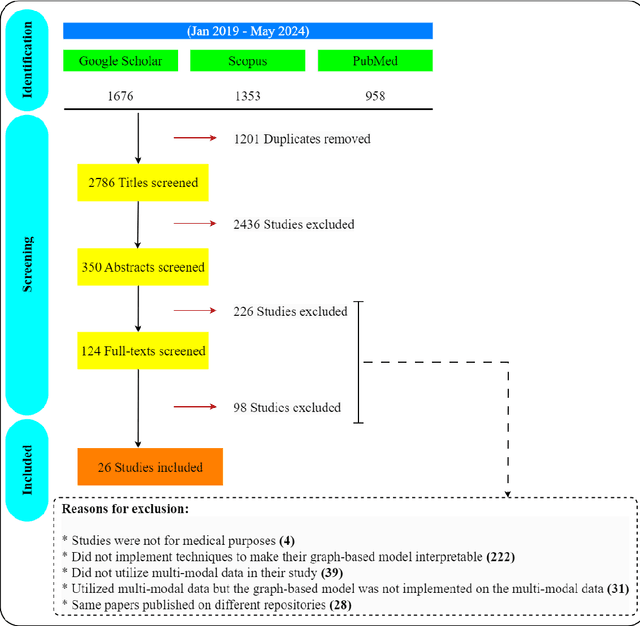

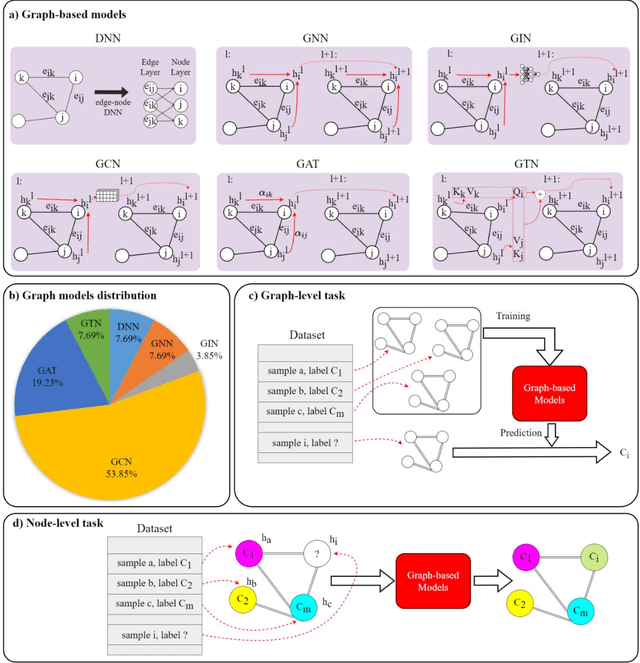

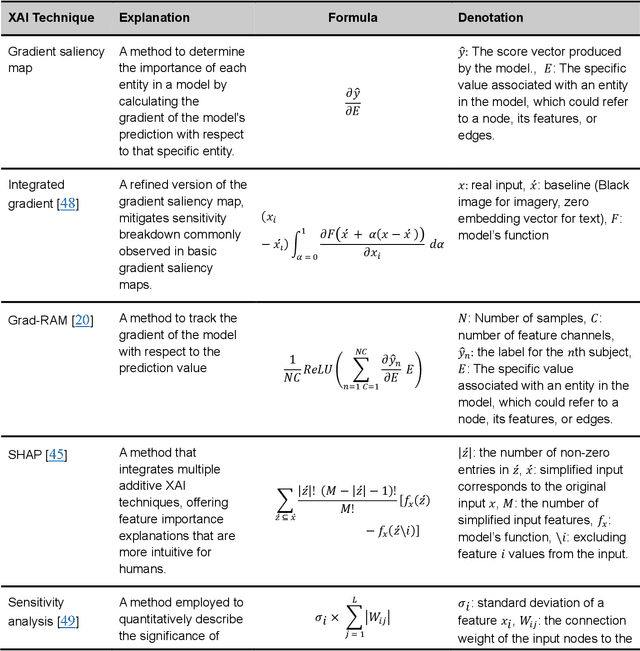

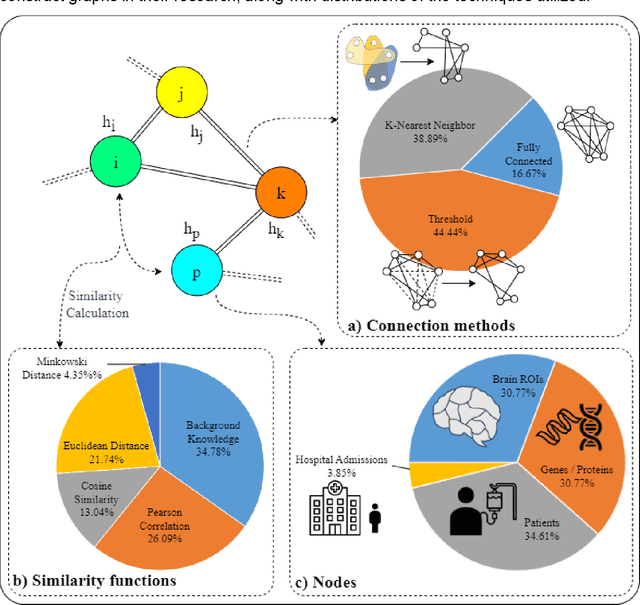

Abstract: Integrating heterogeneous biomedical data including imaging, omics, and clinical records supports accurate diagnosis and personalised care. Graph-based models fuse such non-Euclidean data by capturing spatial and relational structure, yet clinical uptake requires regulator-ready interpretability. We present the first technical survey of interpretable graph-based models for multimodal biomedical data, covering 26 studies published between Jan 2019 and Sep 2024. Most target disease classification, notably cancer, and rely on static graphs from simple similarity measures, while graph-native explainers are rare; post-hoc methods adapted from non-graph domains, such as gradient saliency and SHAP, predominate. We group existing approaches into four interpretability families, outline trends such as graph-in-graph hierarchies, knowledge-graph edges, and dynamic topology learning, and perform a practical benchmark. Using an Alzheimer disease cohort, we compare Sensitivity Analysis, Gradient Saliency, SHAP and Graph Masking. SHAP and Sensitivity Analysis recover the broadest set of known AD pathways and Gene-Ontology terms, whereas Gradient Saliency and Graph Masking surface complementary metabolic and transport signatures. Permutation tests show all four beat random gene sets, but with distinct trade-offs: SHAP and Graph Masking offer deeper biology at higher compute cost, while Gradient Saliency and Sensitivity Analysis are quicker though coarser. We also provide a step-by-step flowchart covering graph construction, explainer choice and resource budgeting to help researchers balance transparency and performance. This review synthesises the state of interpretable graph learning for multimodal medicine, benchmarks leading techniques, and charts future directions, from advanced XAI tools to under-studied diseases, serving as a concise reference for method developers and translational scientists.
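
The abstract above reports that permutation tests show each explainer beating random gene sets, but it does not give the test statistic. The sketch below illustrates one common form of such a test, assuming the score is simply the overlap between an explainer's top-ranked genes and a reference set of known genes. The function names, the overlap score, and the toy gene identifiers are all assumptions made for illustration, not the authors' procedure.

```python
import random
from typing import List, Set


def overlap_score(selected: List[str], known: Set[str]) -> int:
    """Count how many selected genes belong to the known reference set."""
    return sum(1 for gene in selected if gene in known)


def permutation_p_value(
    selected: List[str],
    universe: List[str],
    known: Set[str],
    n_permutations: int = 1000,
    seed: int = 0,
) -> float:
    """Empirical p-value: how often a random gene set of the same size
    scores at least as well as the explainer's selection."""
    rng = random.Random(seed)
    observed = overlap_score(selected, known)
    at_least_as_good = 0
    for _ in range(n_permutations):
        random_set = rng.sample(universe, len(selected))
        if overlap_score(random_set, known) >= observed:
            at_least_as_good += 1
    # The add-one correction keeps the estimate strictly positive.
    return (1 + at_least_as_good) / (1 + n_permutations)


# Toy example with hypothetical gene identifiers (not real data).
universe = [f"G{i}" for i in range(200)]
known_genes = set(universe[:10])
explainer_top_genes = universe[:8] + universe[100:102]  # 8 of 10 are known
p_value = permutation_p_value(explainer_top_genes, universe, known_genes)
```

Drawing random sets of the same size as the selection keeps the comparison fair, since larger gene sets overlap any reference set more often by chance.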

Meta-learning in healthcare: A survey

Aug 05, 2023

Abstract: As a subset of machine learning, meta-learning, or learning to learn, aims at improving the model's capabilities by employing prior knowledge and experience. A meta-learning paradigm can appropriately tackle the conventional challenges of traditional learning approaches, such as an insufficient number of samples, domain shifts, and generalization. These unique characteristics position meta-learning as a suitable choice for developing influential solutions in various healthcare contexts, where the available data is often insufficient and the data collection methodologies differ. This survey discusses the broad applications of meta-learning in the healthcare domain to provide insight into how and where it can address critical healthcare challenges. We first describe the theoretical foundations and pivotal methods of meta-learning. We then divide the meta-learning approaches employed in the healthcare domain into two main categories, multi/single-task learning and many/few-shot learning, and survey the studies. Finally, we highlight the current challenges in meta-learning research, discuss the potential solutions and provide future perspectives on meta-learning in healthcare.

RVD: A Handheld Device-Based Fundus Video Dataset for Retinal Vessel Segmentation

Jul 13, 2023

Abstract: Retinal vessel segmentation is generally grounded in image-based datasets collected with bench-top devices. The static images naturally lose the dynamic characteristics of retina fluctuation, resulting in diminished dataset richness, and the usage of bench-top devices further restricts dataset scalability due to their limited accessibility. Considering these limitations, we introduce the first video-based retinal dataset by employing handheld devices for data acquisition. The dataset comprises 635 smartphone-based fundus videos collected from four different clinics, involving 415 patients from 50 to 75 years old. It delivers comprehensive and precise annotations of retinal structures in both spatial and temporal dimensions, aiming to advance the landscape of vasculature segmentation. Specifically, the dataset provides three levels of spatial annotations: binary vessel masks for overall retinal structure delineation, general vein-artery masks for distinguishing the vein and artery, and fine-grained vein-artery masks for further characterizing the granularities of each artery and vein. In addition, the dataset offers temporal annotations that capture the vessel pulsation characteristics, assisting in detecting ocular diseases that require fine-grained recognition of hemodynamic fluctuation. In application, our dataset exhibits a significant domain shift with respect to data captured by bench-top devices, thus posing great challenges to existing methods. In the experiments, we provide evaluation metrics and benchmark results on our dataset, reflecting both the potential and challenges it offers for vessel segmentation tasks. We hope this challenging dataset will significantly contribute to the development of eye disease diagnosis and early prevention.

Deep conv-attention model for diagnosing left bundle branch block from 12-lead electrocardiograms

Dec 07, 2022

Abstract: Cardiac resynchronization therapy (CRT) is a treatment that is used to compensate for irregularities in the heartbeat. Studies have shown that this treatment is more effective in heart patients with left bundle branch block (LBBB) arrhythmia. Therefore, identifying this arrhythmia is an important initial step in determining whether or not to use CRT. On the other hand, traditional methods for detecting LBBB on electrocardiograms (ECG) are often associated with errors. Thus, there is a need for an accurate method to diagnose this arrhythmia from ECG data. Machine learning, as a new field of study, has helped to increase human systems' performance. Deep learning, as a newer subfield of machine learning, has more power to analyze data and increase system accuracy. This study presents a deep learning model for the detection of LBBB arrhythmia from 12-lead ECG data. The model consists of 1D dilated convolutional layers. An attention mechanism is also used to identify important input data features and classify inputs more accurately. The proposed model is trained and validated on a database containing 10344 12-lead ECG samples using the 10-fold cross-validation method. The final results obtained by the model on the 12-lead ECG data are as follows. Accuracy: 98.80 ± 0.08%, specificity: 99.33 ± 0.11%, F1 score: 73.97 ± 1.8%, and area under the receiver operating characteristics curve (AUC): 0.875 ± 0.0192. These results indicate that the proposed model in this study can effectively diagnose LBBB with good efficiency and, if used in medical centers, will greatly help in diagnosing this arrhythmia and in starting treatment early.
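
The metrics reported above combine very high accuracy and specificity with a noticeably lower F1 score. The abstract does not give the class distribution, but this pattern is what the standard definitions produce when the positive class is rare. The sketch below computes the metrics from a binary confusion matrix; the function name and the counts are hypothetical and chosen only to illustrate the effect, not to reproduce the paper's results.

```python
from typing import Dict


def _ratio(numerator: float, denominator: float) -> float:
    """Divide, returning 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Standard binary classification metrics from confusion-matrix counts."""
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)  # also called sensitivity
    return {
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        "specificity": _ratio(tn, tn + fp),
        "precision": precision,
        "recall": recall,
        "f1": _ratio(2 * precision * recall, precision + recall),
    }


# Hypothetical counts with a rare positive class (40 of 1000 samples).
metrics = binary_metrics(tp=30, fp=10, tn=950, fn=10)
```

With these illustrative counts, accuracy and specificity are dominated by the many true negatives, while F1 depends only on how the few positive cases are handled, so it can sit far below the other two.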

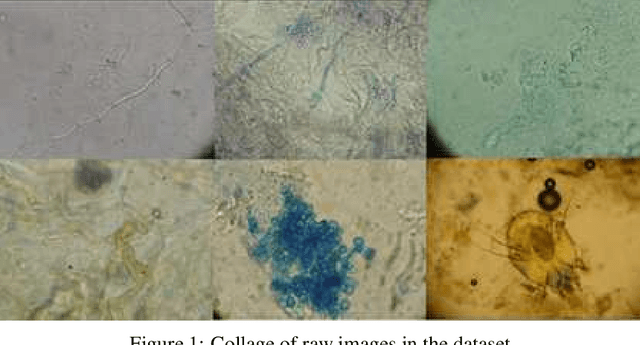

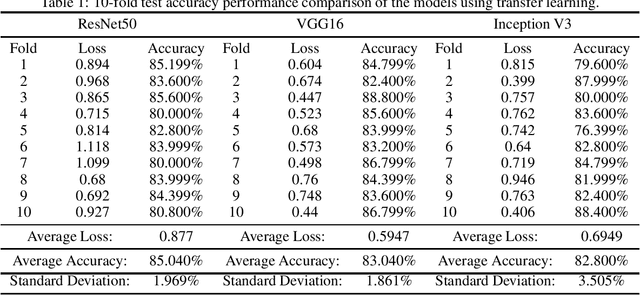

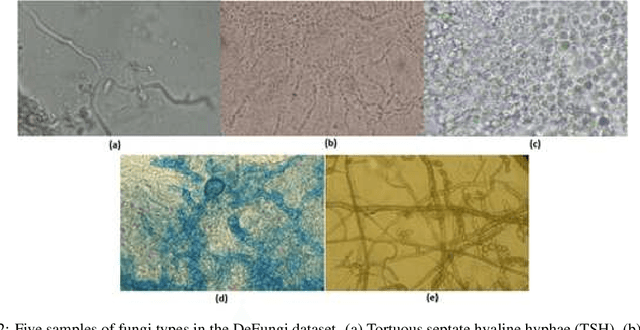

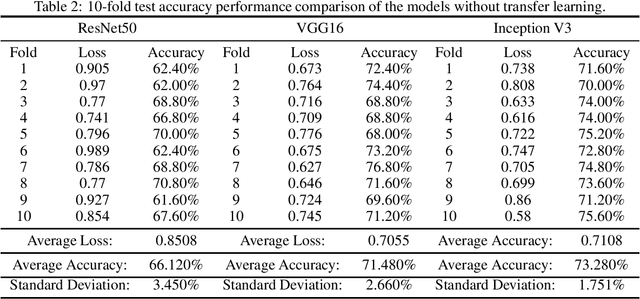

DeFungi: Direct Mycological Examination of Microscopic Fungi Images

Sep 15, 2021

Abstract: Traditionally, diagnosis and treatment of fungal infections in humans depend heavily on face-to-face consultations or examinations made by specialized laboratory scientists known as mycologists. In many cases, such as the recent mucormycosis spread in the COVID-19 pandemic, an initial treatment can be safely suggested to the patient during the earliest stage of the mycological diagnostic process by performing a direct examination of biopsies or samples through a microscope. Computer-aided diagnosis systems using deep learning models have been trained and used for the late mycological diagnostic stages. However, no reference works exist in the literature for the early stages. A mycological laboratory in Colombia donated the images used for the development of this research work. They were manually labelled into five classes and curated with the assistance of a subject matter expert. The images were later cropped and patched with automated code routines to produce the final dataset. This paper presents experimental results classifying five fungi types using two different deep learning approaches and three different convolutional neural network models: VGG16, Inception V3, and ResNet50. The first approach benchmarks the classification performance for the models trained from scratch, while the second approach benchmarks the classification performance using models pre-trained on the ImageNet dataset. Using k-fold cross-validation testing on the 5-class dataset, the best performing model trained from scratch was Inception V3, reporting 73.2% accuracy. The best performing model using transfer learning was VGG16, reporting 85.04%. The statistics provided by the two approaches create an initial point of reference to encourage future research works to improve classification performance. Furthermore, the dataset built is published on Kaggle and GitHub to foster future research.
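
The evaluation above relies on k-fold cross-validation, although the abstract does not state the value of k or how the folds were drawn. As a rough sketch of the general technique, the generator below shuffles sample indices once and yields k train/test splits. The function name, the seed, and the sample count in the example are assumptions for illustration.

```python
import random
from typing import Iterator, List, Tuple


def k_fold_indices(
    n_samples: int, k: int, seed: int = 0
) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [
        n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)
    ]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


# Example: 23 hypothetical samples split into 5 folds.
folds = list(k_fold_indices(n_samples=23, k=5))
```

Each sample appears in exactly one test fold, so averaging the per-fold accuracy gives an estimate that uses every image for testing once. For an image dataset with several classes, a stratified variant that preserves class proportions per fold is often preferred.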
