Multi-View Learning for Vision-and-Language Navigation

Mar 03, 2020 · Qiaolin Xia, Xiujun Li, Chunyuan Li, Yonatan Bisk, Zhifang Sui, Jianfeng Gao, Yejin Choi, Noah A. Smith

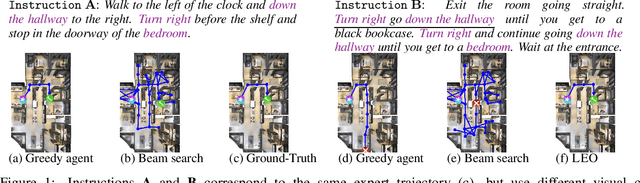

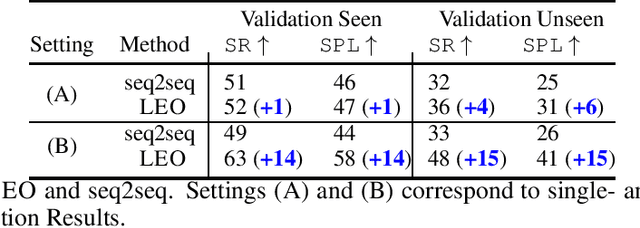

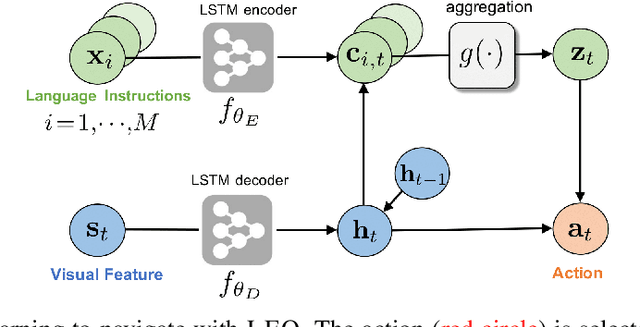

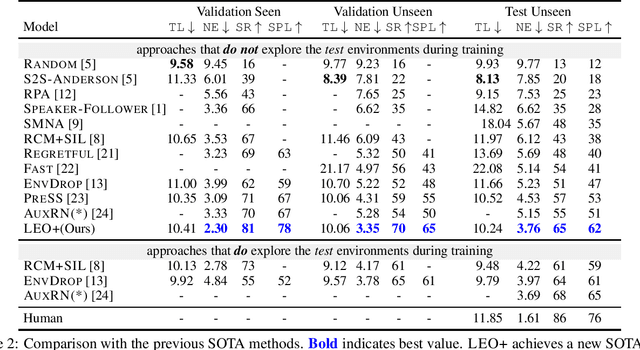

Learning to navigate in a visual environment following natural language instructions is a challenging task because natural language instructions are highly variable, ambiguous, and under-specified. In this paper, we present a novel training paradigm, Learn from EveryOne (LEO), which leverages multiple instructions (as different views) for the same trajectory to resolve language ambiguity and improve generalization. By sharing parameters across instructions, our approach learns more effectively from limited training data and generalizes better in unseen environments. On the recent Room-to-Room (R2R) benchmark dataset, LEO achieves a 16% absolute improvement over a greedy base agent (25.3% $\rightarrow$ 41.4%) in Success Rate weighted by Path Length (SPL). Further, LEO is complementary to most existing models for vision-and-language navigation, allowing easy integration with existing techniques. The resulting model, LEO+, sets a new state of the art, pushing the R2R benchmark to 62% (a 9% absolute improvement).
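The SPL metric quoted above can be made concrete with a short sketch. It assumes the commonly used definition, in which each successful episode contributes the ratio of the shortest-path length to the larger of the agent's path length and the shortest-path length, averaged over all episodes. The function name `spl` and its argument names are hypothetical and do not come from the paper's code.

```python
from typing import Sequence


def spl(
    successes: Sequence[bool],
    shortest_lengths: Sequence[float],
    path_lengths: Sequence[float],
) -> float:
    """Success weighted by Path Length, averaged over episodes.

    Assumes every shortest-path length is strictly positive.
    """
    n = len(successes)
    if n == 0 or n != len(shortest_lengths) or n != len(path_lengths):
        raise ValueError("inputs must be non-empty and of equal length")

    total = 0.0
    for success, shortest, taken in zip(successes, shortest_lengths, path_lengths):
        if shortest <= 0:
            raise ValueError("shortest-path lengths must be positive")
        if success:
            # A successful episode scores 1.0 only if the agent's path is
            # no longer than the shortest path; detours shrink the score.
            total += shortest / max(taken, shortest)
        # A failed episode contributes 0 regardless of path length.
    return total / n


# Three episodes: optimal success, success with a 2x detour, failure.
score = spl([True, True, False], [10.0, 10.0, 10.0], [10.0, 20.0, 5.0])
print(f"SPL = {score:.2f}")
```

Because failures score zero and detours are penalized, SPL is never higher than the plain success rate, which is why it is reported as a stricter measure.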

ALFRED: A Benchmark for Interpreting Grounded Instructions for Everyday Tasks

Dec 03, 2019 · Mohit Shridhar, Jesse Thomason, Daniel Gordon, Yonatan Bisk, Winson Han, Roozbeh Mottaghi, Luke Zettlemoyer, Dieter Fox

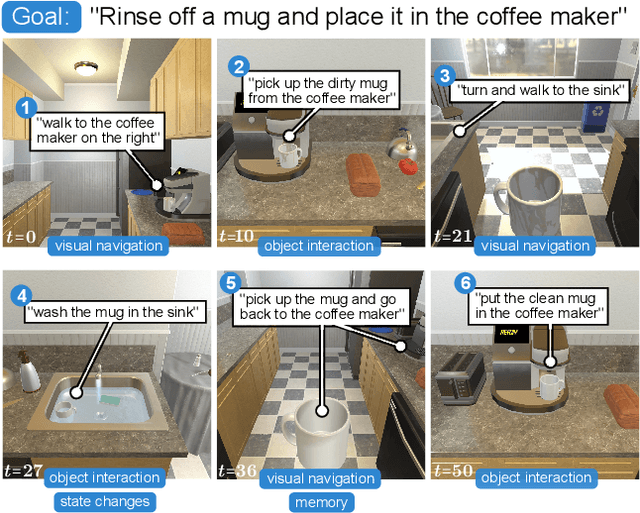

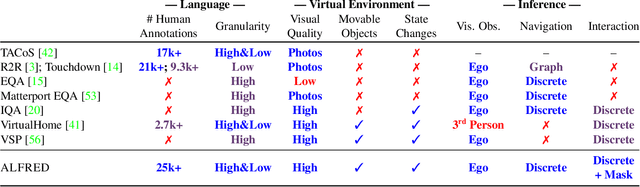

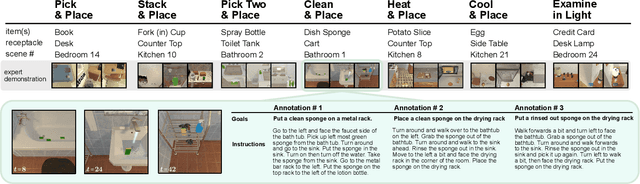

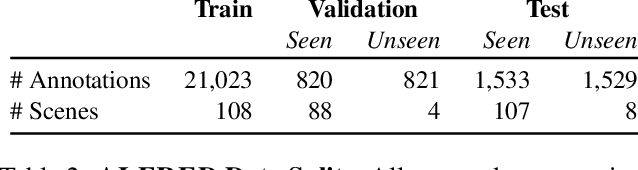

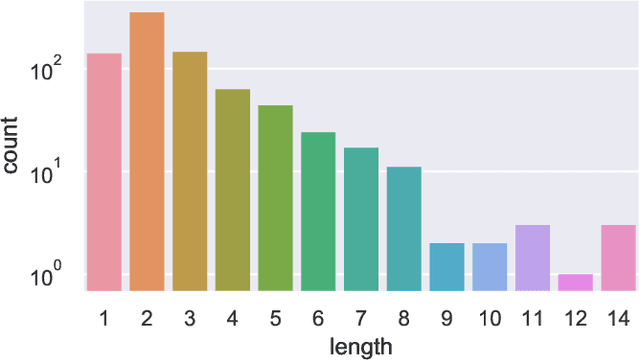

We present ALFRED (Action Learning From Realistic Environments and Directives), a benchmark for learning a mapping from natural language instructions and egocentric vision to sequences of actions for household tasks. Long composition rollouts with non-reversible state changes are among the phenomena we include to shrink the gap between research benchmarks and real-world applications. ALFRED consists of expert demonstrations in interactive visual environments for 25k natural language directives. These directives contain both high-level goals like "Rinse off a mug and place it in the coffee maker." and low-level language instructions like "Walk to the coffee maker on the right." ALFRED tasks are more complex in terms of sequence length, action space, and language than existing vision-and-language task datasets. We show that a baseline model designed for recent embodied vision-and-language tasks performs poorly on ALFRED, suggesting that there is significant room for developing innovative grounded visual language understanding models with this benchmark.

PIQA: Reasoning about Physical Commonsense in Natural Language

Nov 26, 2019 · Yonatan Bisk, Rowan Zellers, Ronan Le Bras, Jianfeng Gao, Yejin Choi

To apply eyeshadow without a brush, should I use a cotton swab or a toothpick? Questions requiring this kind of physical commonsense pose a challenge to today's natural language understanding systems. While recent pretrained models (such as BERT) have made progress on question answering over more abstract domains - such as news articles and encyclopedia entries, where text is plentiful - in more physical domains, text is inherently limited due to reporting bias. Can AI systems learn to reliably answer physical commonsense questions without experiencing the physical world? In this paper, we introduce the task of physical commonsense reasoning and a corresponding benchmark dataset, Physical Interaction: Question Answering (PIQA). Though humans find the dataset easy (95% accuracy), large pretrained models struggle (77%). We provide analysis of the dimensions of knowledge that existing models lack, which offers significant opportunities for future research.

Robust Navigation with Language Pretraining and Stochastic Sampling

Sep 05, 2019 · Xiujun Li, Chunyuan Li, Qiaolin Xia, Yonatan Bisk, Asli Celikyilmaz, Jianfeng Gao, Noah Smith, Yejin Choi

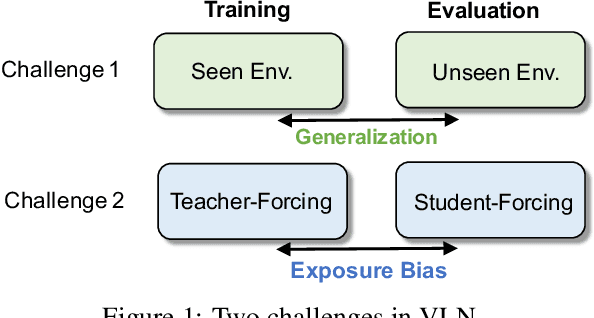

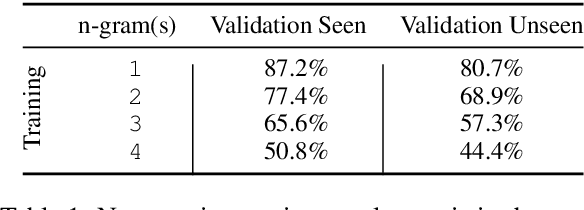

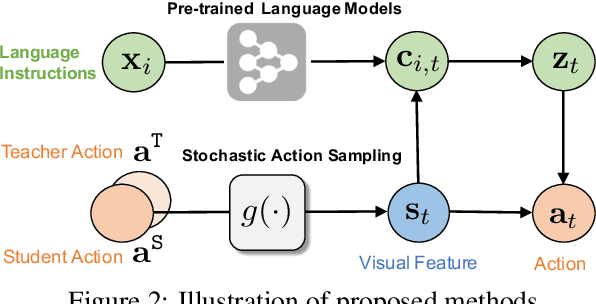

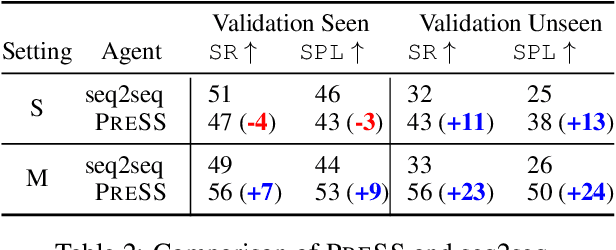

Core to the vision-and-language navigation (VLN) challenge is building robust instruction representations and action decoding schemes that generalize well to previously unseen instructions and environments. In this paper, we report two simple but highly effective methods that address these challenges and lead to new state-of-the-art performance. First, we adapt large-scale pretrained language models to learn text representations that generalize better to previously unseen instructions. Second, we propose a stochastic sampling scheme to reduce the considerable gap between the expert actions used in training and the sampled actions used at test time, so that the agent can learn to correct its own mistakes during long sequential action decoding. Combining the two techniques, we achieve a new state of the art on the Room-to-Room benchmark with a 6% absolute gain over the previous best result (47% $\rightarrow$ 53%) on the Success Rate weighted by Path Length metric.

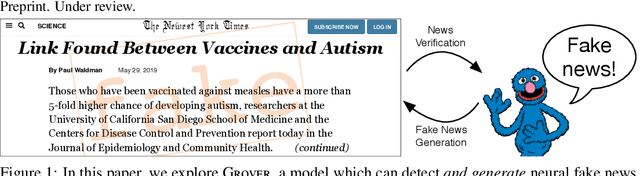

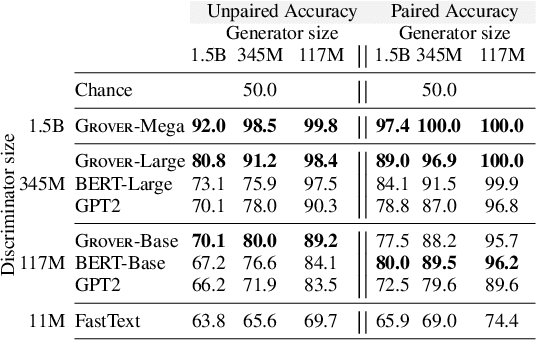

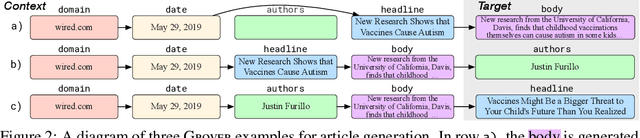

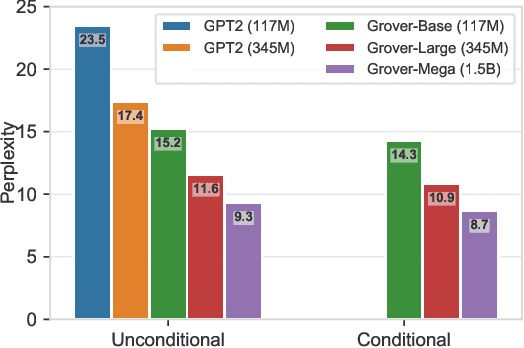

Defending Against Neural Fake News

May 29, 2019 · Rowan Zellers, Ari Holtzman, Hannah Rashkin, Yonatan Bisk, Ali Farhadi, Franziska Roesner, Yejin Choi

Recent progress in natural language generation has raised dual-use concerns. While applications like summarization and translation are positive, the underlying technology also might enable adversaries to generate neural fake news: targeted propaganda that closely mimics the style of real news. Modern computer security relies on careful threat modeling: identifying potential threats and vulnerabilities from an adversary's point of view, and exploring potential mitigations to these threats. Likewise, developing robust defenses against neural fake news requires us first to carefully investigate and characterize the risks of these models. We thus present a model for controllable text generation called Grover. Given a headline like 'Link Found Between Vaccines and Autism,' Grover can generate the rest of the article; humans find these generations to be more trustworthy than human-written disinformation. Developing robust verification techniques against generators like Grover is critical. We find that the best current discriminators can distinguish neural fake news from real, human-written news with 73% accuracy, assuming access to a moderate level of training data. Counterintuitively, the best defense against Grover turns out to be Grover itself, with 92% accuracy, demonstrating the importance of public release of strong generators. We investigate these results further, showing that exposure bias -- and sampling strategies that alleviate its effects -- both leave artifacts that similar discriminators can pick up on. We conclude by discussing ethical issues regarding the technology, and plan to release Grover publicly, helping pave the way for better detection of neural fake news.

HellaSwag: Can a Machine Really Finish Your Sentence?

May 19, 2019 · Rowan Zellers, Ari Holtzman, Yonatan Bisk, Ali Farhadi, Yejin Choi

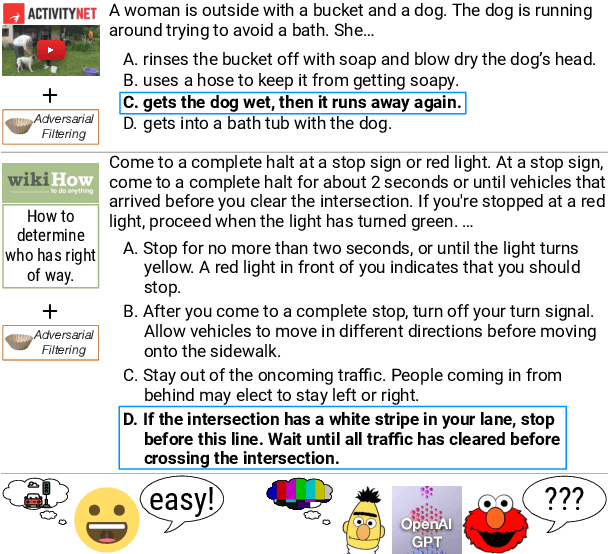

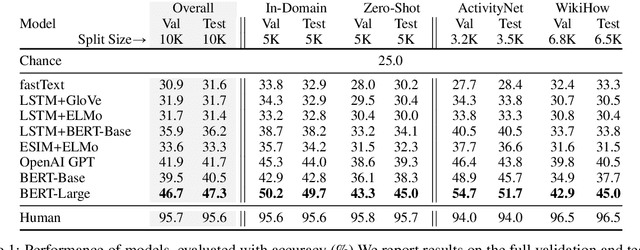

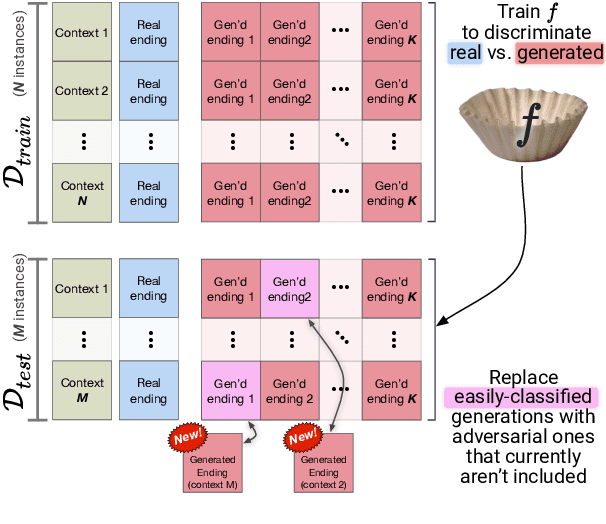

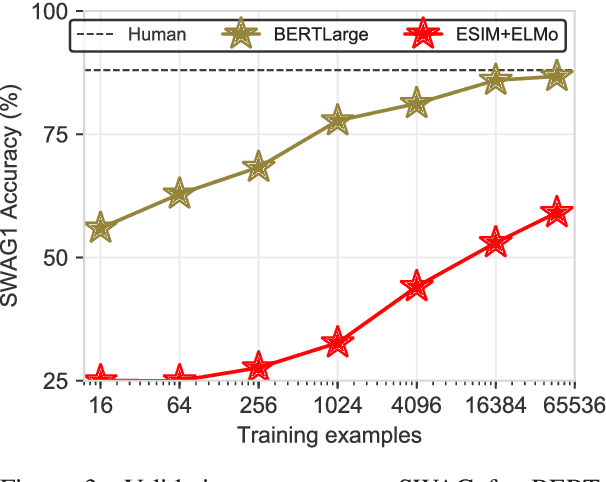

Recent work by Zellers et al. (2018) introduced a new task of commonsense natural language inference: given an event description such as "A woman sits at a piano," a machine must select the most likely followup: "She sets her fingers on the keys." With the introduction of BERT, near human-level performance was reached. Does this mean that machines can perform human-level commonsense inference? In this paper, we show that commonsense inference still proves difficult for even state-of-the-art models, by presenting HellaSwag, a new challenge dataset. Though its questions are trivial for humans (>95% accuracy), state-of-the-art models struggle (<48%). We achieve this via Adversarial Filtering (AF), a data collection paradigm wherein a series of discriminators iteratively select an adversarial set of machine-generated wrong answers. AF proves to be surprisingly robust. The key insight is to scale up the length and complexity of the dataset examples towards a critical 'Goldilocks' zone wherein generated text is ridiculous to humans, yet often misclassified by state-of-the-art models. Our construction of HellaSwag, and its resulting difficulty, sheds light on the inner workings of deep pretrained models. More broadly, it suggests a new path forward for NLP research, in which benchmarks co-evolve with the evolving state of the art in an adversarial way, so as to present ever-harder challenges.

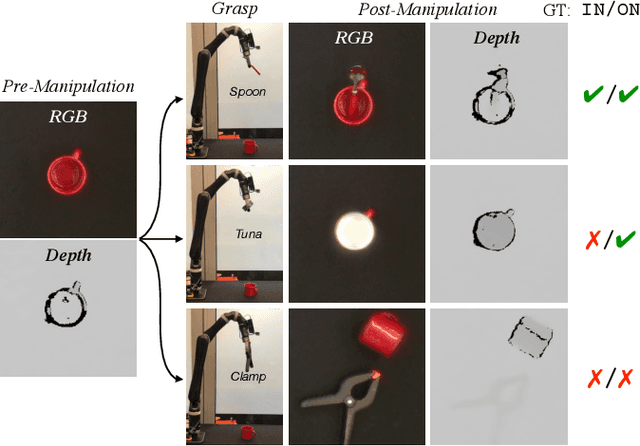

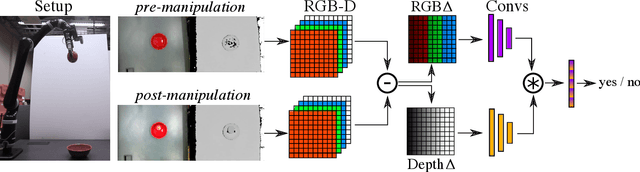

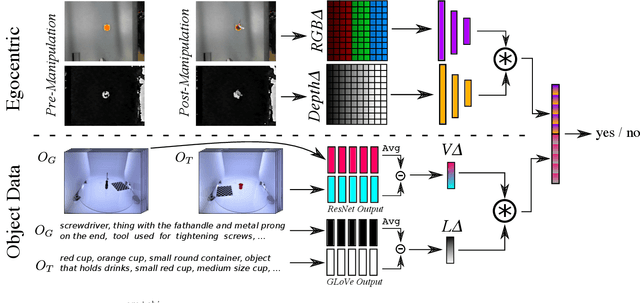

Improving Robot Success Detection using Static Object Data

Apr 02, 2019 · Rosario Scalise, Jesse Thomason, Yonatan Bisk, Siddhartha Srinivasa

We use static object data to improve success detection for stacking objects on and nesting objects in one another. Such actions are necessary for certain robotics tasks, e.g., clearing a dining table or packing a warehouse bin. However, using an RGB-D camera to detect success can be insufficient: same-colored objects can be difficult to differentiate, and reflective silverware causes noisy depth camera perception. We show that adding static data about the objects themselves improves the performance of an end-to-end pipeline for classifying action outcomes. Images of the objects, and language expressions describing them, encode prior geometry, shape, and size information that refines classification accuracy. We collect over 13 hours of egocentric manipulation data for training a model to reason about whether a robot successfully placed unseen objects in or on one another. The model achieves up to a 57% absolute gain over the task baseline on pairs of previously unseen objects.

Tactical Rewind: Self-Correction via Backtracking in Vision-and-Language Navigation

Apr 02, 2019 · Liyiming Ke, Xiujun Li, Yonatan Bisk, Ari Holtzman, Zhe Gan, Jingjing Liu, Jianfeng Gao, Yejin Choi, Siddhartha Srinivasa

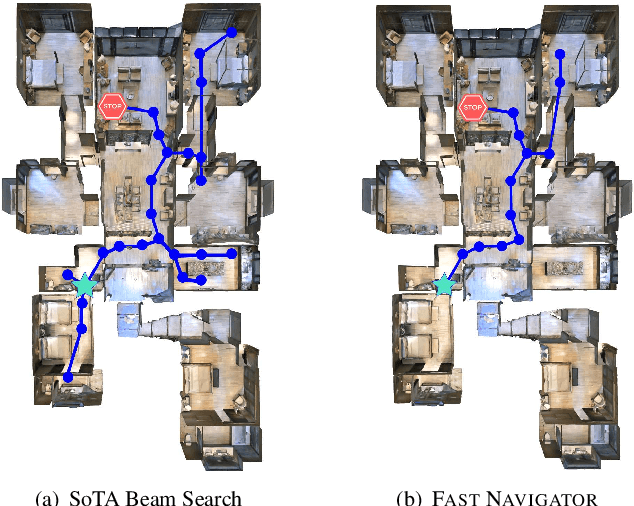

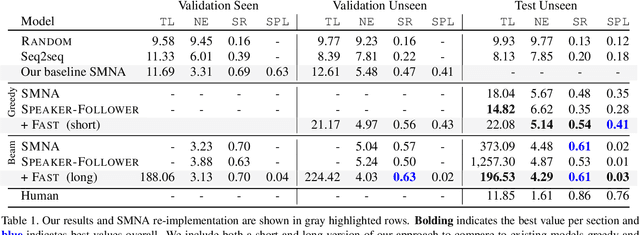

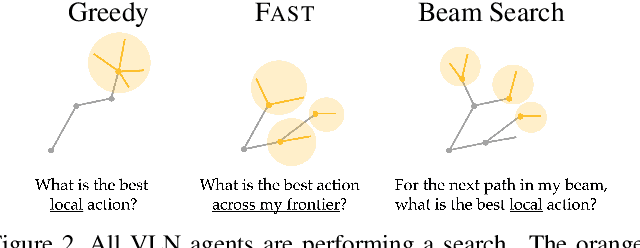

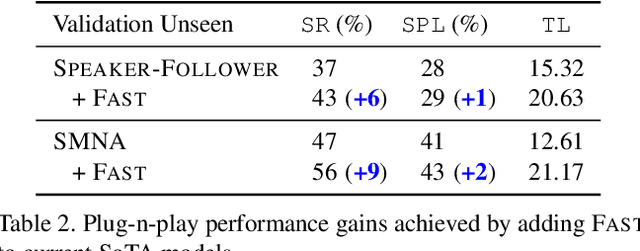

We present the Frontier Aware Search with backTracking (FAST) Navigator, a general framework for action decoding that achieves state-of-the-art results on the Room-to-Room (R2R) Vision-and-Language navigation challenge of Anderson et al. (2018). Given a natural language instruction and photo-realistic image views of a previously unseen environment, the agent is tasked with navigating from source to target location as quickly as possible. While all current approaches make local action decisions or score entire trajectories using beam search, ours balances local and global signals when exploring an unobserved environment. Importantly, this lets us act greedily but use global signals to backtrack when necessary. Applying the FAST framework to existing state-of-the-art models achieves a 17% relative gain, an absolute 6% gain, on Success Rate weighted by Path Length (SPL).

Prospection: Interpretable Plans From Language By Predicting the Future

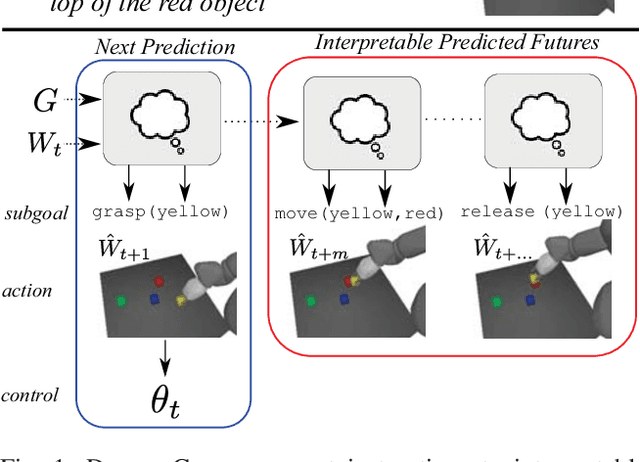

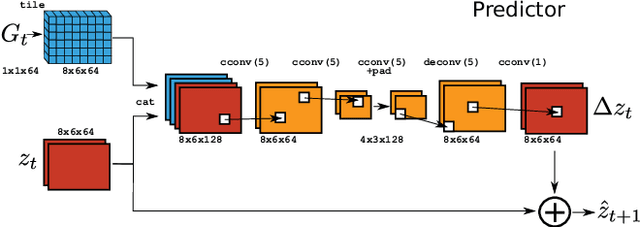

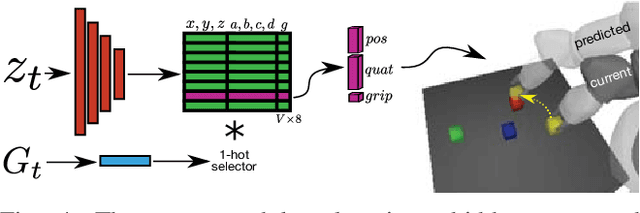

Mar 20, 2019 · Chris Paxton, Yonatan Bisk, Jesse Thomason, Arunkumar Byravan, Dieter Fox

High-level human instructions often correspond to behaviors with multiple implicit steps. In order for robots to be useful in the real world, they must be able to reason over both motions and intermediate goals implied by human instructions. In this work, we propose a framework for learning representations that convert a natural-language command into a sequence of intermediate goals for execution on a robot. A key feature of this framework is prospection: training an agent not just to correctly execute the prescribed command, but to predict a horizon of consequences of an action before taking it. We demonstrate the fidelity of plans generated by our framework when interpreting real, crowd-sourced natural language commands for a robot in simulated scenes.

Character-based Surprisal as a Model of Human Reading in the Presence of Errors

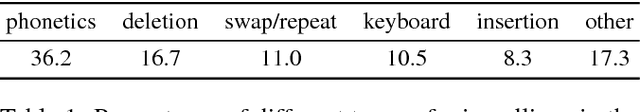

Feb 02, 2019 · Michael Hahn, Frank Keller, Yonatan Bisk, Yonatan Belinkov

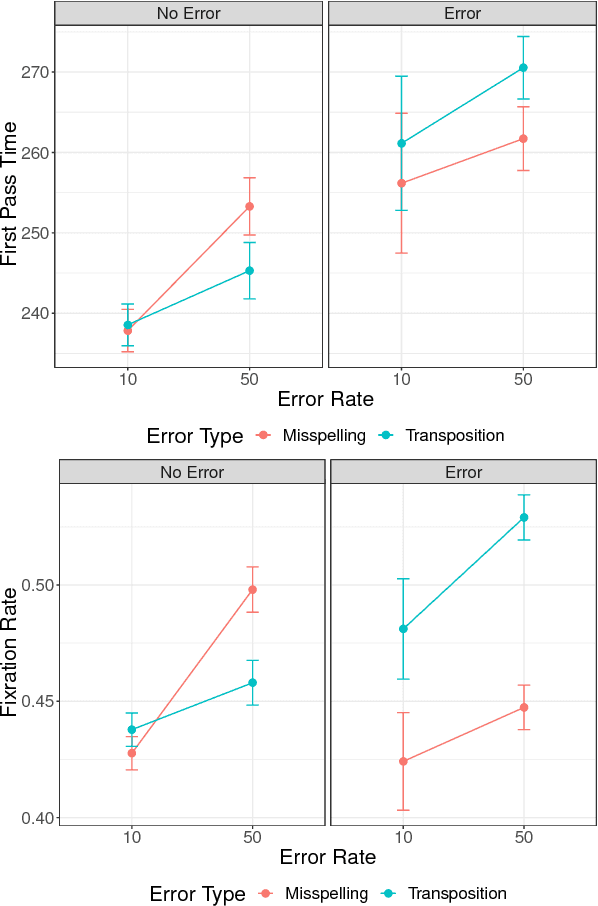

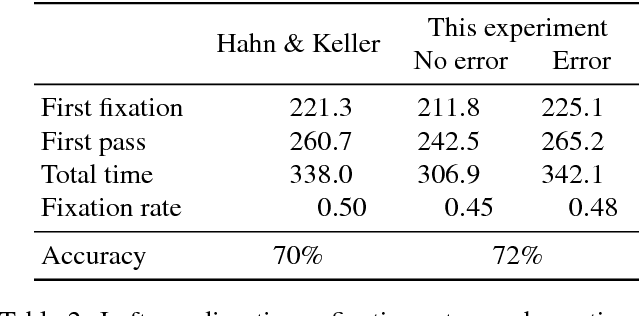

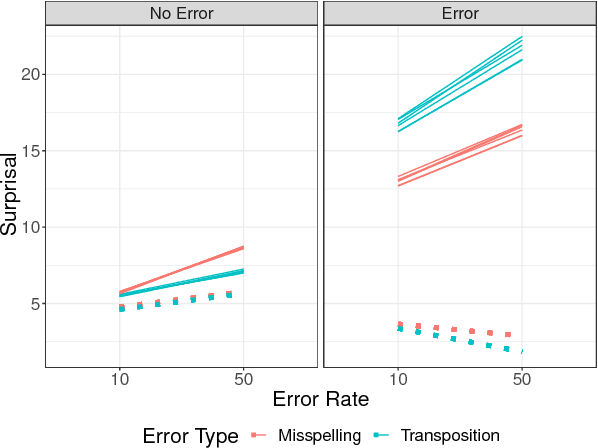

Intuitively, human readers cope easily with errors in text; typos, misspellings, word substitutions, etc. do not unduly disrupt natural reading. Previous work indicates that letter transpositions result in increased reading times, but it is unclear if this effect generalizes to more natural errors. In this paper, we report an eye-tracking study that compares two error types (letter transpositions and naturally occurring misspellings) and two error rates (10% or 50% of all words contain errors). We find that human readers show unimpaired comprehension in spite of these errors, but error words cause more reading difficulty than correct words. Also, transpositions are more difficult than misspellings, and a high error rate increases difficulty for all words, including correct ones. We then present a computational model that uses character-based (rather than traditional word-based) surprisal to account for these results. The model explains that transpositions are harder than misspellings because they contain unexpected letter combinations. It also explains the error rate effect: upcoming words are more difficult to predict when the context is degraded, leading to increased surprisal.
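To illustrate what character-based surprisal means, the sketch below sums $-\log_2 P(c_t \mid c_{t-1})$ over the characters of a word. It deliberately swaps in a toy character bigram model with add-one smoothing, trained on a tiny made-up corpus. This is an assumption for illustration only and is not the model used in the paper. The function names and the corpus are hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, Set, Tuple


def train_char_bigram(corpus: str) -> Tuple[Dict[str, Counter], Set[str]]:
    """Count how often each character follows each other character."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for prev, cur in zip(corpus, corpus[1:]):
        counts[prev][cur] += 1
    return counts, set(corpus)


def word_surprisal(word: str, counts: Dict[str, Counter], vocab: Set[str]) -> float:
    """Total surprisal of a word in bits, starting from a space context."""
    vocab_size = len(vocab) + 1  # one extra slot for unseen characters
    total = 0.0
    prev = " "
    for ch in word:
        following = counts.get(prev, Counter())
        # Add-one smoothing keeps unseen character pairs at non-zero probability.
        prob = (following[ch] + 1) / (sum(following.values()) + vocab_size)
        total += -math.log2(prob)
        prev = ch
    return total


# Toy corpus (hypothetical); a real study would use a large text collection.
counts, vocab = train_char_bigram("the cat sat on the mat the hat ")
correct = word_surprisal("the", counts, vocab)
transposed = word_surprisal("teh", counts, vocab)
print(f"the: {correct:.2f} bits, teh: {transposed:.2f} bits")
```

In this toy setting the transposed form "teh" receives higher surprisal than "the" because the pairs "te" and "eh" never occur in the corpus, which mirrors the abstract's explanation that transpositions contain unexpected letter combinations.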
