Ying Chen

AdaInt: Learning Adaptive Intervals for 3D Lookup Tables on Real-time Image Enhancement

Apr 29, 2022

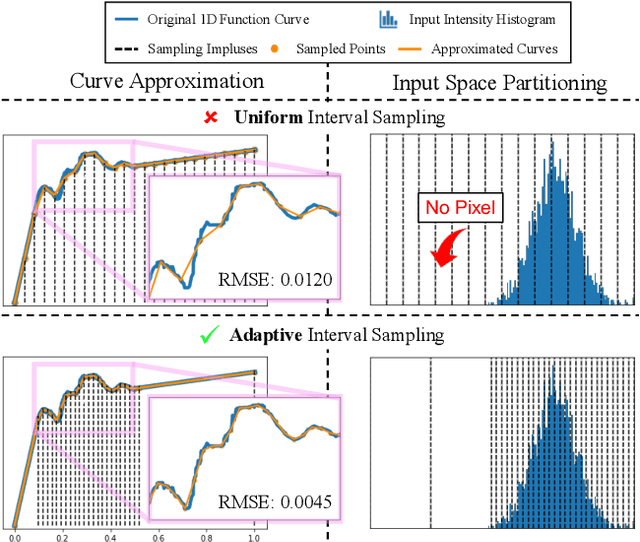

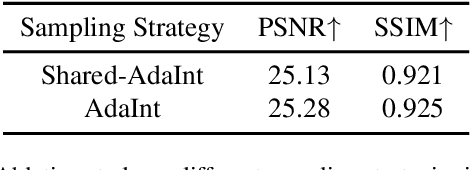

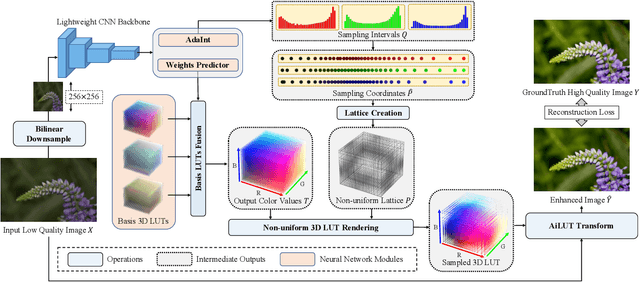

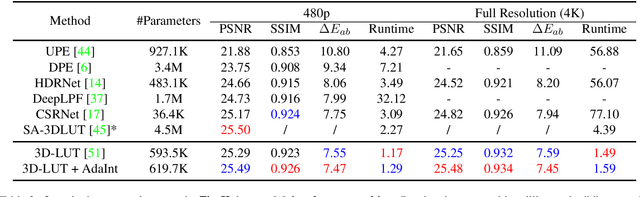

Abstract: The 3D Lookup Table (3D LUT) is a highly efficient tool for real-time image enhancement tasks, which models a non-linear 3D color transform by sparsely sampling it into a discretized 3D lattice. Previous works have made efforts to learn image-adaptive output color values of LUTs for flexible enhancement but neglect the importance of the sampling strategy. They adopt a sub-optimal uniform sampling point allocation, which limits the expressiveness of the learned LUTs because the (tri-)linear interpolation between uniform sampling points in the LUT transform might fail to model local non-linearities of the color transform. Focusing on this problem, we present AdaInt (Adaptive Intervals Learning), a novel mechanism to achieve a more flexible sampling point allocation by adaptively learning the non-uniform sampling intervals in the 3D color space. In this way, a 3D LUT can increase its capability by conducting dense sampling in color ranges requiring highly non-linear transforms and sparse sampling for near-linear transforms. The proposed AdaInt can be implemented as a compact and efficient plug-and-play module for a 3D LUT-based method. To enable the end-to-end learning of AdaInt, we design a novel differentiable operator called AiLUT-Transform (Adaptive Interval LUT Transform) to locate input colors in the non-uniform 3D LUT and provide gradients to the sampling intervals. Experiments demonstrate that methods equipped with AdaInt can achieve state-of-the-art performance on two public benchmark datasets with a negligible increase in overhead. Our source code is available at https://github.com/ImCharlesY/AdaInt.
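To make the idea of non-uniform sampling intervals concrete, the following is a minimal one-dimensional sketch, not the authors' implementation. It assumes that unnormalized interval scores are turned into positive interval lengths with a softmax and accumulated into sampling coordinates on [0, 1]; the function names `intervals_to_vertices` and `lut_lookup_1d` are hypothetical, and the real method operates on a 3D lattice with a differentiable operator.

```python
import bisect
import math


def intervals_to_vertices(raw_intervals):
    """Map unnormalized interval scores to increasing sampling coordinates in [0, 1].

    Assumption for illustration: softmax gives positive interval lengths that
    sum to 1, and a cumulative sum places the sampling points.
    """
    m = max(raw_intervals)
    exps = [math.exp(r - m) for r in raw_intervals]  # subtract max for stability
    total = sum(exps)
    vertices = [0.0]
    for e in exps:
        vertices.append(vertices[-1] + e / total)
    vertices[-1] = 1.0  # guard against floating-point drift at the upper end
    return vertices


def lut_lookup_1d(x, vertices, values):
    """Linearly interpolate `values` stored at non-uniform `vertices`."""
    x = min(max(x, vertices[0]), vertices[-1])  # clamp input to the LUT range
    # Locate the cell containing x: `hi` is the index of its right vertex.
    hi = bisect.bisect_right(vertices, x)
    hi = min(max(hi, 1), len(vertices) - 1)
    lo = hi - 1
    t = (x - vertices[lo]) / (vertices[hi] - vertices[lo])
    return (1.0 - t) * values[lo] + t * values[hi]
```

With equal scores the vertices fall back to uniform sampling; unequal scores place more sampling points where the intervals are short, which is where a more strongly non-linear transform could be represented.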

NTIRE 2022 Challenge on Super-Resolution and Quality Enhancement of Compressed Video: Dataset, Methods and Results

Apr 25, 2022

Abstract: This paper reviews the NTIRE 2022 Challenge on Super-Resolution and Quality Enhancement of Compressed Video. In this challenge, we proposed the LDV 2.0 dataset, which includes the LDV dataset (240 videos) and 95 additional videos. The challenge includes three tracks. Track 1 aims at enhancing videos compressed by HEVC at a fixed QP. Track 2 and Track 3 target both the super-resolution and quality enhancement of HEVC-compressed video; they require x2 and x4 super-resolution, respectively. In total, the three tracks attracted more than 600 registrations. In the test phase, 8 teams, 8 teams and 12 teams submitted final results to Tracks 1, 2 and 3, respectively. The proposed methods and solutions gauge the state of the art in super-resolution and quality enhancement of compressed video. The proposed LDV 2.0 dataset is available at https://github.com/RenYang-home/LDV_dataset. The homepage of this challenge (including open-sourced code) is at https://github.com/RenYang-home/NTIRE22_VEnh_SR.

Progressive Training of A Two-Stage Framework for Video Restoration

Apr 21, 2022

Abstract: As a widely studied task, video restoration aims to enhance the quality of videos with multiple potential degradations, such as noise, blur and compression artifacts. Among video restoration tasks, compressed video quality enhancement and video super-resolution are two of the main tasks with significant value in practical scenarios. Recently, recurrent neural networks and transformers have attracted increasing research interest in this field, due to their impressive capability in sequence-to-sequence modeling. However, training these models is not only costly but also relatively hard to converge, owing to exploding and vanishing gradients. To cope with these problems, we propose a two-stage framework comprising a multi-frame recurrent network and a single-frame transformer. In addition, multiple training strategies, such as transfer learning and progressive training, are developed to shorten the training time and improve model performance. Benefiting from these technical contributions, our solution won two first places and one runner-up place in the NTIRE 2022 super-resolution and quality enhancement of compressed video challenges.

Pyramid Frequency Network with Spatial Attention Residual Refinement Module for Monocular Depth Estimation

Apr 05, 2022

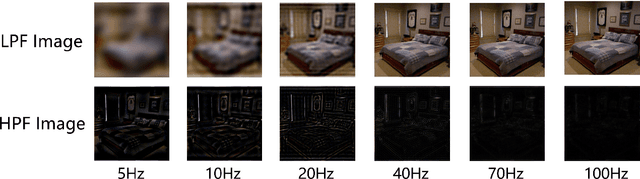

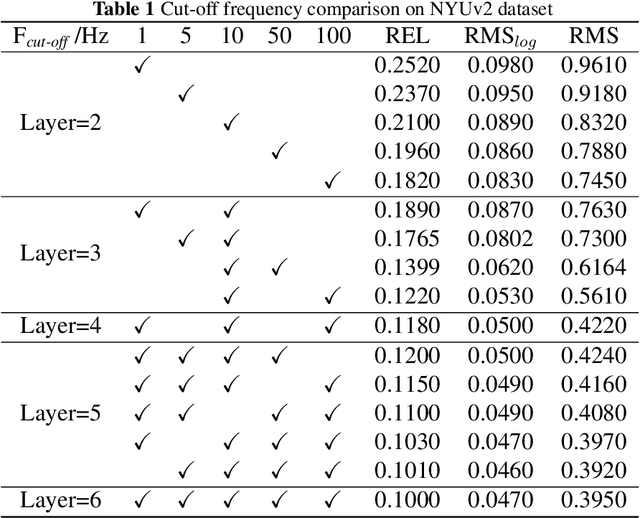

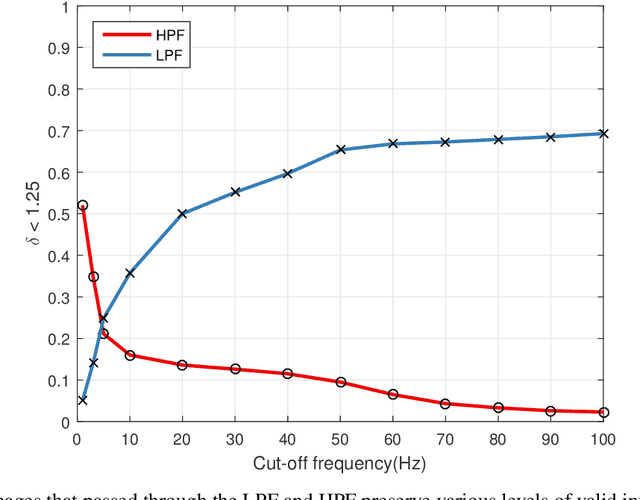

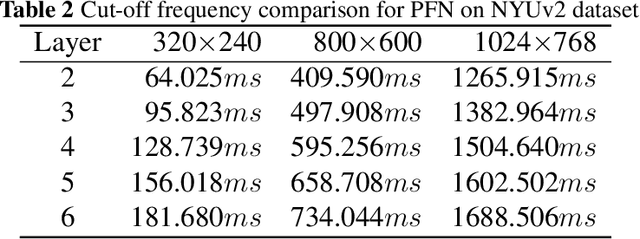

Abstract: Deep-learning-based approaches to depth estimation are rapidly advancing, offering superior performance over existing methods. To estimate depth in real-world scenarios, depth estimation models must be robust to various noise environments. In this work, a Pyramid Frequency Network (PFN) with a Spatial Attention Residual Refinement Module (SARRM) is proposed to deal with the weak robustness of existing deep-learning methods. To reconstruct depth maps with accurate details, the SARRM constructs a residual fusion method with an attention mechanism to refine blurry depth. A frequency division strategy is designed, and a frequency pyramid network is developed to extract features from multiple frequency bands. With the frequency strategy, PFN achieves better visual accuracy than state-of-the-art methods in both indoor and outdoor scenes on the Make3D, KITTI depth, and NYUv2 datasets. Additional experiments on the noisy NYUv2 dataset demonstrate that PFN is more reliable than existing deep-learning methods in high-noise scenes.

An Intelligent End-to-End Neural Architecture Search Framework for Electricity Forecasting Model Development

Mar 25, 2022

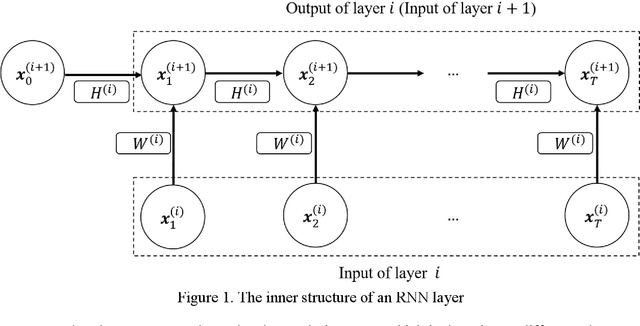

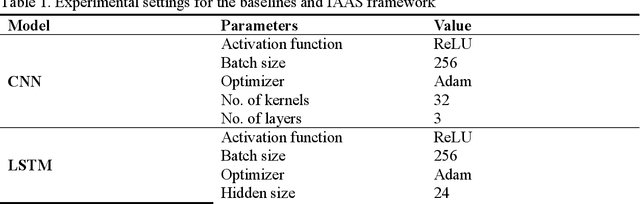

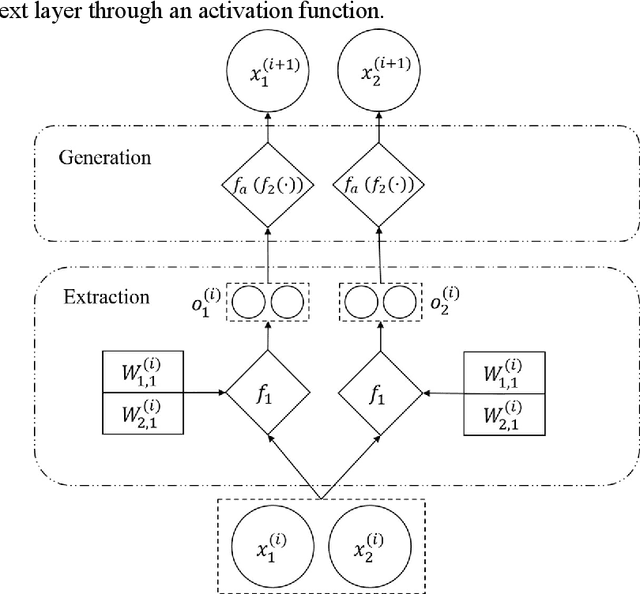

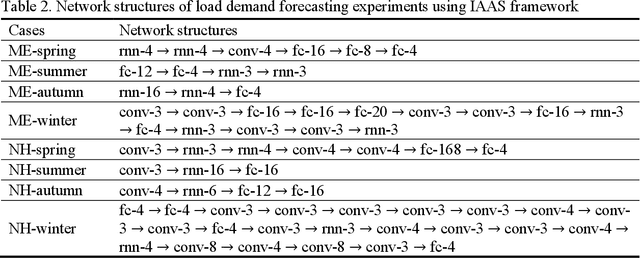

Abstract: Recent years have witnessed exponential growth in the development of deep learning (DL) models for time-series electricity forecasting in power systems. However, most of the proposed models are designed based on the designers' inherent knowledge and experience, without elaborating on the suitability of the proposed neural architectures. Moreover, these models cannot adjust themselves to dynamically changing data patterns because of the inflexible design of their structures. Even though several recent studies have considered applying the neural architecture search (NAS) technique to obtain a network with an optimized structure in the electricity forecasting sector, their training process is quite time-consuming, computationally expensive and not intelligent, indicating that the application of NAS to electricity forecasting is still in its infancy. In this research study, we propose an intelligent automated architecture search (IAAS) framework for the development of time-series electricity forecasting models. The proposed framework contains two primary components, i.e., a network function-preserving transformation operation and reinforcement learning (RL)-based network transformation control. In the first component, we introduce a theoretical function-preserving transformation of recurrent neural networks (RNNs) to the literature for capturing the hidden temporal patterns within the time-series data. In the second component, we develop three RL-based transformation actors and a net pool to intelligently and effectively search for a high-quality neural architecture. After conducting comprehensive experiments on two publicly available electricity load datasets and two wind power datasets, we demonstrate that the proposed IAAS framework significantly outperforms ten existing models or methods in terms of forecasting accuracy and stability.
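As a rough illustration of what "function-preserving transformation" means, the sketch below widens a hidden layer of a tiny feedforward network without changing its output. This is an assumption-laden stand-in: it uses a well-known duplicate-and-split widening trick on a two-layer ReLU network, not the RNN transformation introduced in the paper, and the names `forward` and `widen` are hypothetical.

```python
def forward(x, w1, w2):
    """Two-layer network: ReLU hidden layer followed by a linear scalar output."""
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w1]
    return sum(v * h for v, h in zip(w2, hidden))


def widen(w1, w2, unit):
    """Add one hidden unit while keeping the network's function unchanged.

    The new unit copies the incoming weights of `unit`, and the outgoing
    weight of `unit` is split evenly between the original and the copy.
    """
    new_w1 = [row[:] for row in w1] + [w1[unit][:]]
    new_w2 = w2[:]
    new_w2[unit] = w2[unit] / 2.0
    new_w2.append(w2[unit] / 2.0)
    return new_w1, new_w2


# Usage: the widened network has three hidden units but the same output.
x = [1.0, -2.0, 0.5]
w1 = [[0.5, -1.0, 2.0], [1.5, 0.25, -0.5]]
w2 = [2.0, -3.0]
wide_w1, wide_w2 = widen(w1, w2, unit=0)
same_output = abs(forward(x, w1, w2) - forward(x, wide_w1, wide_w2)) < 1e-12
```

Because the larger network starts from the same function as the smaller one, a search procedure can grow an architecture step by step without retraining from scratch after every change.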

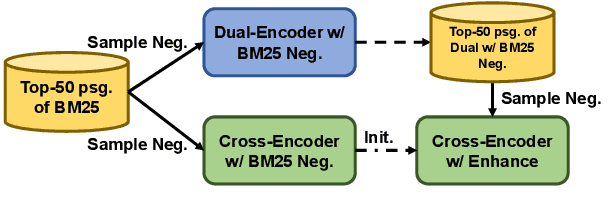

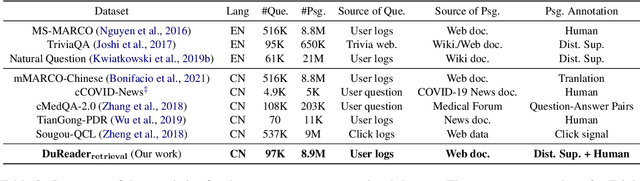

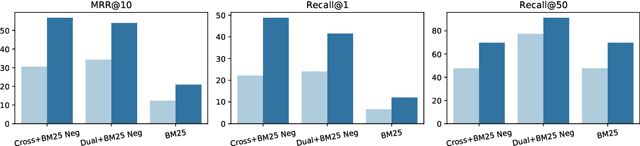

DuReader_retrieval: A Large-scale Chinese Benchmark for Passage Retrieval from Web Search Engine

Mar 19, 2022

Abstract: In this paper, we present DuReader_retrieval, a large-scale Chinese dataset for passage retrieval. DuReader_retrieval contains more than 90K queries and over 8M unique passages from Baidu search. To ensure the quality of our benchmark and address the shortcomings of other existing datasets, we (1) reduce the false negatives in the development and testing sets by pooling the results from multiple retrievers with human annotations, and (2) remove semantically similar questions shared between the training set and the development and testing sets. We further introduce two extra out-of-domain testing sets for benchmarking domain generalization capability. Our experimental results demonstrate that DuReader_retrieval is challenging and that there is still plenty of room for the community to improve, e.g., generalization across domains, salient phrase and syntax mismatch between query and paragraph, and robustness. DuReader_retrieval will be publicly available at https://github.com/baidu/DuReader/tree/master/DuReader-Retrieval

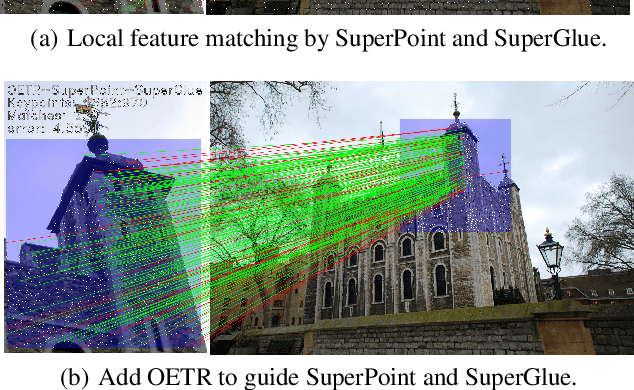

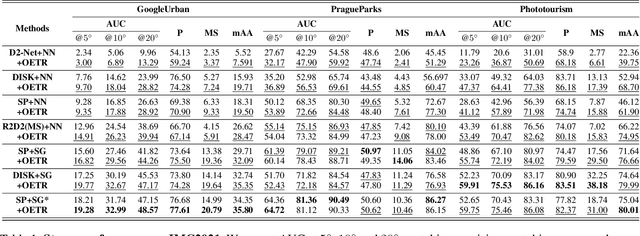

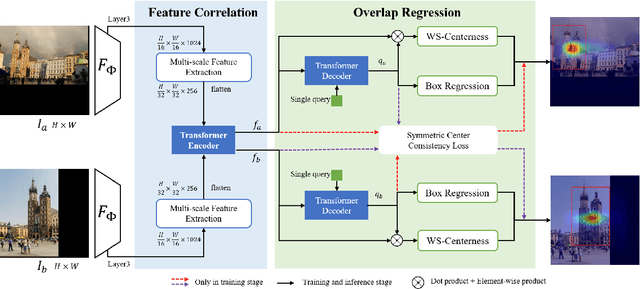

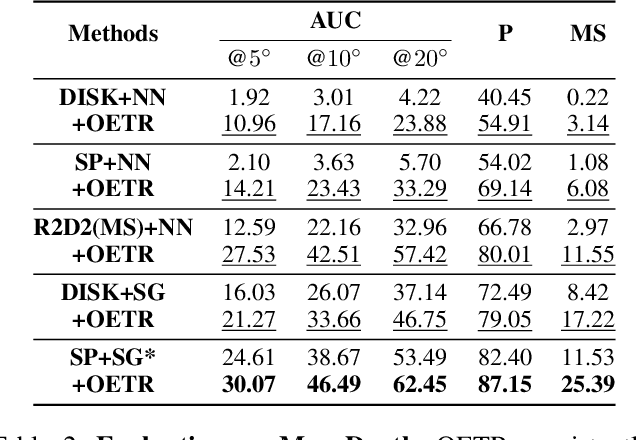

Guide Local Feature Matching by Overlap Estimation

Feb 23, 2022

Abstract: Local image feature matching under large appearance, viewpoint, and distance changes is challenging yet important. Conventional methods detect and match tentative local features across whole images, with heuristic consistency checks to guarantee reliable matches. In this paper, we introduce a novel Overlap Estimation method conditioned on image pairs with TRansformer, named OETR, to constrain local feature matching to the commonly visible region. OETR performs overlap estimation in a two-step process of feature correlation followed by overlap regression. As a preprocessing module, OETR can be plugged into any existing local feature detection and matching pipeline to mitigate potential view angle or scale variance. Extensive experiments show that OETR can substantially boost state-of-the-art local feature matching performance, especially for image pairs with small shared regions. The code will be publicly available at https://github.com/AbyssGaze/OETR.
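The preprocessing role described above can be pictured with a small sketch. It assumes the overlap estimate is available as an axis-aligned box `(x0, y0, x1, y1)` in pixel coordinates for each image; the box values, keypoints and the helper name `keep_in_box` are hypothetical and stand in for the output of an overlap estimator and a feature detector.

```python
def keep_in_box(keypoints, box):
    """Keep only the keypoints that fall inside the estimated overlap box."""
    x0, y0, x1, y1 = box
    return [(x, y) for (x, y) in keypoints if x0 <= x <= x1 and y0 <= y <= y1]


# Hypothetical detector output and overlap boxes for an image pair.
keypoints_a = [(12.0, 40.0), (200.0, 180.0), (310.0, 95.0)]
keypoints_b = [(5.0, 5.0), (150.0, 60.0), (90.0, 220.0)]
overlap_a = (100.0, 50.0, 400.0, 300.0)
overlap_b = (50.0, 20.0, 250.0, 240.0)

# Only features inside the commonly visible regions go on to matching.
candidates_a = keep_in_box(keypoints_a, overlap_a)
candidates_b = keep_in_box(keypoints_b, overlap_b)
```

Restricting candidates this way removes features that cannot have a counterpart in the other image before any descriptor matching is attempted.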

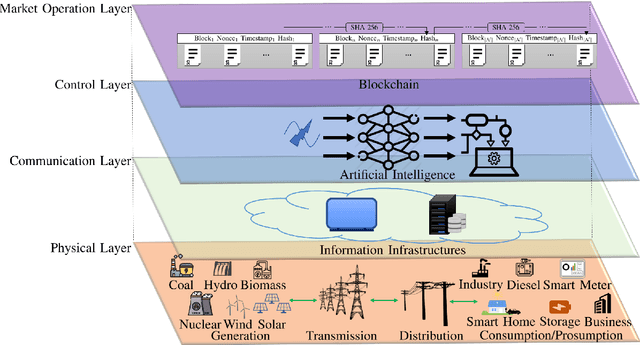

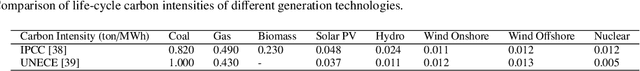

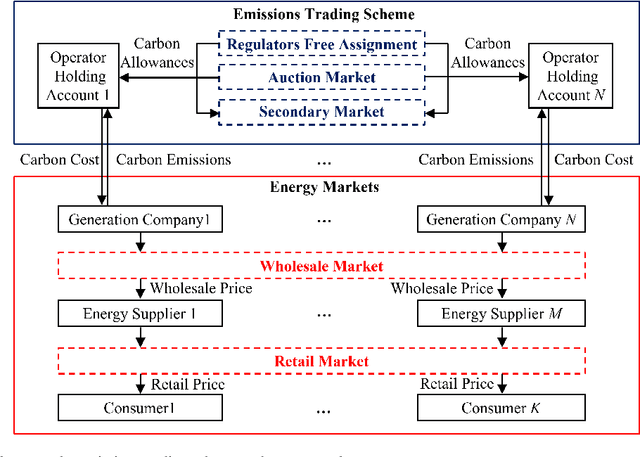

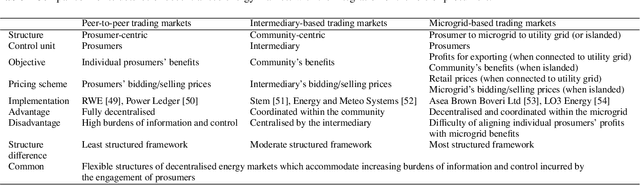

Applications of blockchain and artificial intelligence technologies for enabling prosumers in smart grids: A review

Feb 21, 2022

Abstract: Governments' net-zero emission target aims to increase the share of renewable energy sources as well as to influence the behaviours of consumers in support of the cost-effective balancing of energy supply and demand. These aims will be achieved through the advanced information and control infrastructures of smart grids, which allow interoperability among various stakeholders. Under these circumstances, an increasing number of consumers produce, store, and consume energy, giving them a new role as prosumers. The integration of prosumers and the accommodation of the resulting bidirectional flows of energy and information rely on two key factors: flexible structures of energy markets and intelligent operation of power systems. Blockchain and artificial intelligence (AI) are innovative technologies that address these two factors: blockchain provides decentralised trading platforms for energy markets, and AI supports the optimal operational control of power systems. This paper attempts to address how to incorporate blockchain and AI in smart grids to facilitate the participation of prosumers in energy markets. To achieve this objective, first, this paper reviews how policy designs price the carbon emissions caused by fossil-fuel-based generation so as to facilitate the integration of prosumers with renewable energy sources. Second, the potential structures of energy markets supported by blockchain technologies are discussed. Last, the application of AI to enhance state monitoring and decision making during the operation of power systems is introduced.

* Accepted by Renewable & Sustainable Energy Reviews on 21 Feb 2022

DuQM: A Chinese Dataset of Linguistically Perturbed Natural Questions for Evaluating the Robustness of Question Matching Models

Dec 16, 2021

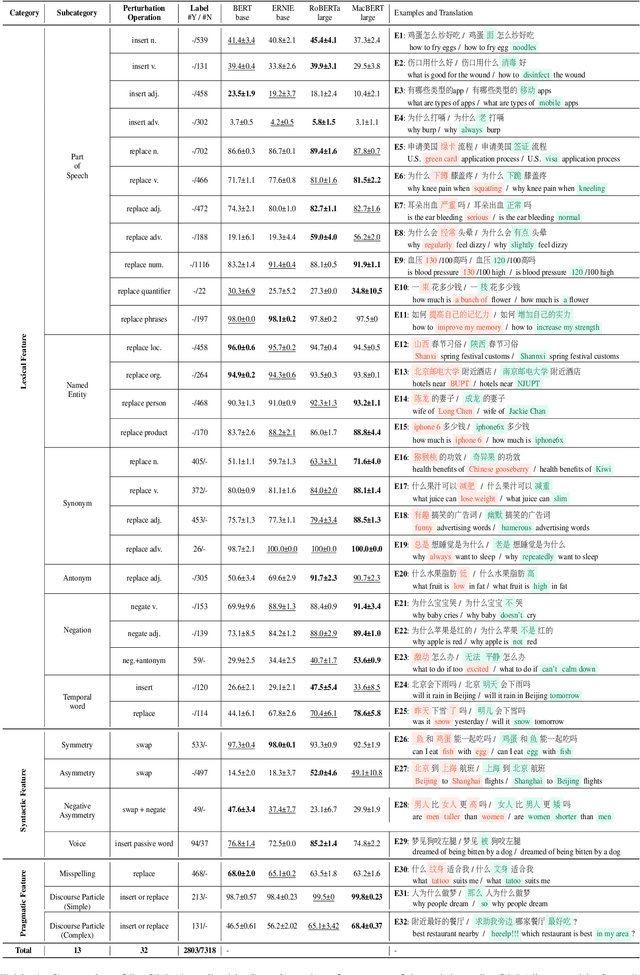

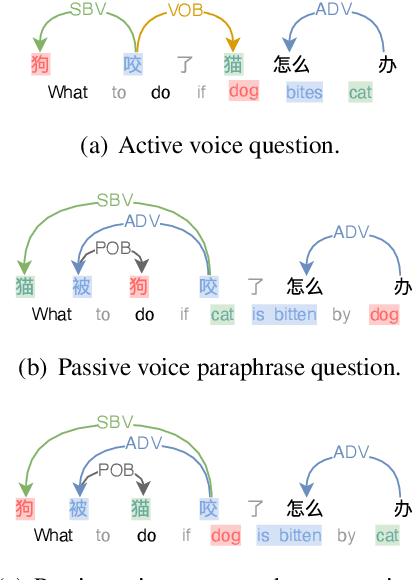

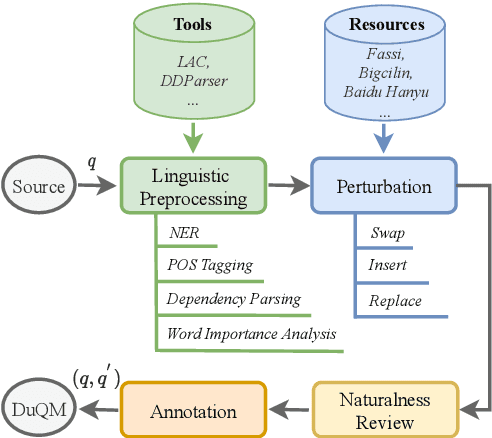

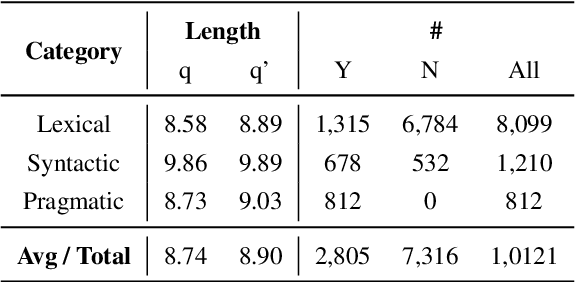

Abstract: In this paper, we focus on the robustness evaluation of Chinese question matching. Most previous work on analyzing robustness issues focuses on just one or a few types of artificial adversarial examples. Instead, we argue that it is necessary to formulate a comprehensive evaluation of the linguistic capabilities of models on natural texts. For this purpose, we create a Chinese dataset, namely DuQM, which contains natural questions with linguistic perturbations to evaluate the robustness of question matching models. DuQM contains 3 categories and 13 subcategories with 32 linguistic perturbations. Extensive experiments demonstrate that DuQM has a better ability to distinguish different models. Importantly, the detailed breakdown of evaluation by linguistic phenomenon in DuQM helps us easily diagnose the strengths and weaknesses of different models. Additionally, our experimental results show that the effect of artificial adversarial examples does not carry over to natural texts.

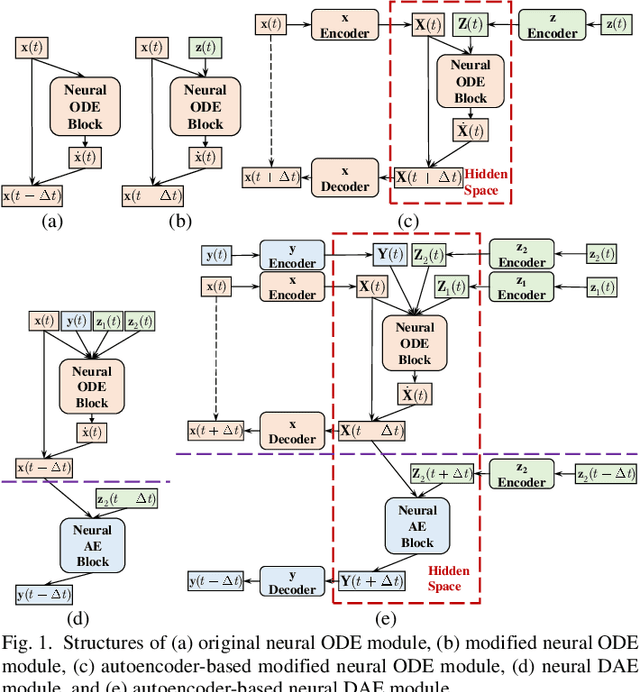

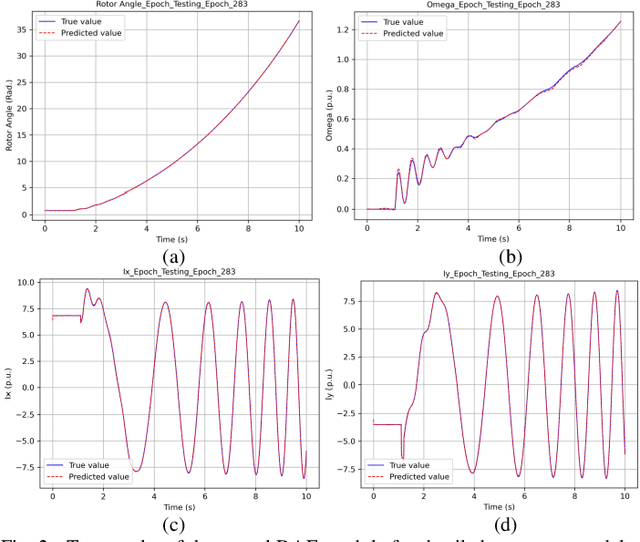

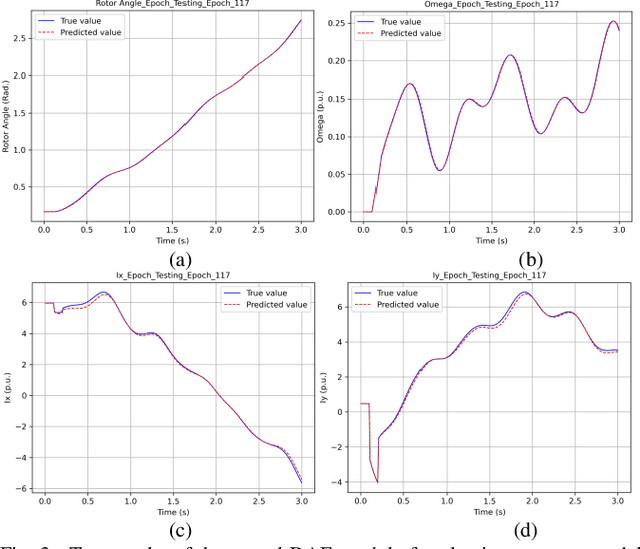

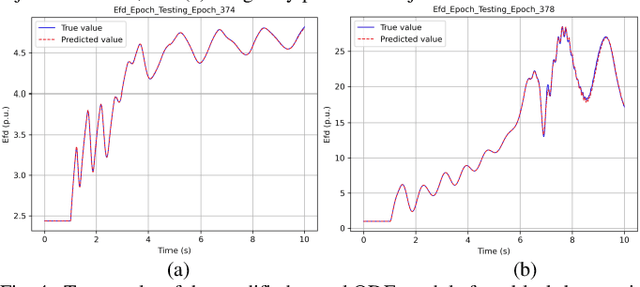

Neural ODE and DAE Modules for Power System Dynamic Modeling

Oct 25, 2021

Abstract: Time-domain simulation is the fundamental tool for power system transient stability analysis. Accurate and reliable simulations rely on accurate dynamic component modeling. In practical power systems, dynamic component modeling has long faced the challenges of model determination and model calibration, especially with the rapid development of renewable generation and power electronics. In this paper, based on the general framework of neural ordinary differential equations (ODEs), a modified neural ODE module and a neural differential-algebraic equations (DAEs) module for power system dynamic component modeling are proposed. The modules adopt an autoencoder to raise the dimension of state variables, model the dynamics of components with artificial neural networks (ANNs), and keep the numerical integration structure. In the neural DAE module, an additional ANN is used to calculate injection currents. With datasets consisting of sampled curves of input variables and output variables, the proposed modules can be used to fulfill the tasks of parameter inference, physics-data-integrated modeling, black-box modeling, etc., and can be easily integrated into power system time-domain dynamic simulations. Some simple numerical tests are carried out on the IEEE-39 system and demonstrate the validity and potential of the proposed modules.
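The phrase "keep the numerical integration structure" can be illustrated with a minimal sketch in which the state derivative comes from a small network and the trajectory is produced by an ordinary integration scheme. Everything here is an assumption for illustration: the classical Runge-Kutta step, the single tanh hidden layer, the untrained weights, and the names `rk4_step`, `make_mlp_derivative` and `simulate` are not taken from the paper, and the autoencoder and the injection-current network are not modeled.

```python
import math


def rk4_step(f, x, u, dt):
    """One classical Runge-Kutta step for dx/dt = f(x, u), with u held constant."""
    k1 = f(x, u)
    k2 = f([xi + 0.5 * dt * ki for xi, ki in zip(x, k1)], u)
    k3 = f([xi + 0.5 * dt * ki for xi, ki in zip(x, k2)], u)
    k4 = f([xi + dt * ki for xi, ki in zip(x, k3)], u)
    return [
        xi + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
        for xi, a, b, c, d in zip(x, k1, k2, k3, k4)
    ]


def make_mlp_derivative(w_x, w_u, w_out):
    """Build f(x, u) from fixed weights; a stand-in for a trained ANN."""
    def f(x, u):
        hidden = [
            math.tanh(sum(a * xi for a, xi in zip(row_x, x))
                      + sum(b * ui for b, ui in zip(row_u, u)))
            for row_x, row_u in zip(w_x, w_u)
        ]
        return [sum(c * h for c, h in zip(row, hidden)) for row in w_out]
    return f


def simulate(f, x0, inputs, dt):
    """Integrate the learned dynamics over a sequence of sampled inputs."""
    trajectory = [x0]
    for u in inputs:
        trajectory.append(rk4_step(f, trajectory[-1], u, dt))
    return trajectory


# Usage with illustrative, untrained weights: 1 state, 1 input, 2 hidden units.
f_net = make_mlp_derivative(w_x=[[0.3], [-0.7]], w_u=[[1.0], [0.5]], w_out=[[0.2, -0.4]])
states = simulate(f_net, x0=[0.1], inputs=[[1.0], [1.0], [0.5]], dt=0.01)
```

Because the integrator only needs a callable derivative, the same loop works whether `f` is a physical model, a network, or a mix of both, which is one way to read the claim that such modules slot into existing time-domain simulations.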
