Yi Wei

FashionBERT: Text and Image Matching with Adaptive Loss for Cross-modal Retrieval

May 29, 2020

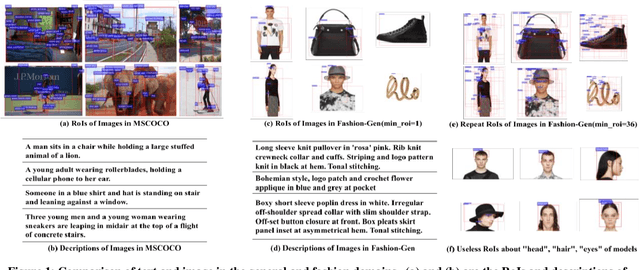

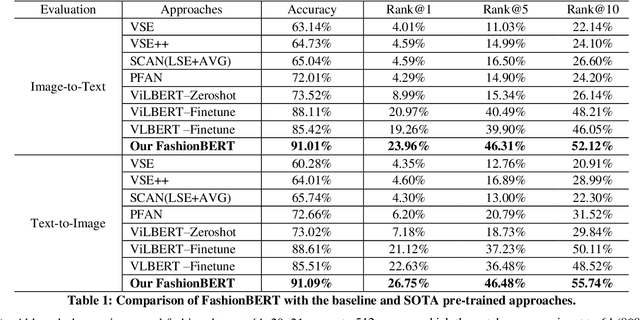

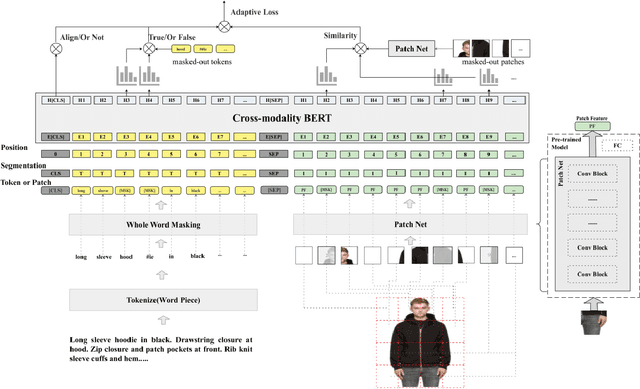

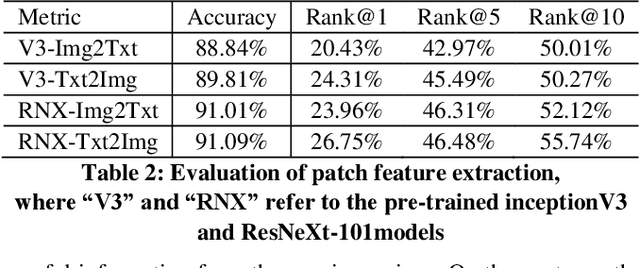

Abstract: In this paper, we address text and image matching for cross-modal retrieval in the fashion industry. Unlike matching in the general domain, fashion matching must pay much closer attention to fine-grained information in fashion images and texts. Pioneering approaches detect regions of interest (RoIs) in images and use the RoI embeddings as image representations. In general, RoIs tend to represent "object-level" information in fashion images, while fashion texts tend to describe finer details, e.g., styles and attributes. RoIs are thus not fine-grained enough for fashion text and image matching. To this end, we propose FashionBERT, which leverages patches as image features. With the pre-trained BERT model as the backbone network, FashionBERT learns high-level representations of texts and images. We also propose an adaptive loss to balance multitask learning in FashionBERT. Two tasks (text and image matching, and cross-modal retrieval) are used to evaluate FashionBERT. On the public dataset, experiments demonstrate that FashionBERT achieves significant performance improvements over the baseline and state-of-the-art approaches. In practice, FashionBERT is applied in a concrete cross-modal retrieval application, and we provide a detailed analysis of matching performance and inference efficiency.
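The idea of using patches rather than detected RoIs as image features can be illustrated with a minimal sketch. The function name `split_into_patches`, the grid size, and the toy 4x4 "image" are assumptions made for illustration; the abstract does not state how FashionBERT sizes or embeds its patches.

```python
def split_into_patches(image, rows, cols):
    """Split a 2-D grid (a list of equal-length rows) into rows x cols patches.

    Patches are returned in row-major order. This is a hypothetical helper,
    not the authors' preprocessing code.
    """
    height, width = len(image), len(image[0])
    if height % rows or width % cols:
        raise ValueError("image size must be divisible by the patch grid")
    patch_h, patch_w = height // rows, width // cols
    patches = []
    for r in range(rows):
        for c in range(cols):
            # Take the rows belonging to patch row r, then slice out column block c.
            block = image[r * patch_h:(r + 1) * patch_h]
            patches.append([row[c * patch_w:(c + 1) * patch_w] for row in block])
    return patches


# Toy 4x4 single-channel image whose pixel values are 0..15.
image = [[y * 4 + x for x in range(4)] for y in range(4)]
patches = split_into_patches(image, 2, 2)
```

Every patch covers a fixed part of the image regardless of what it contains, which is how a patch grid differs from RoIs produced by an object detector.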

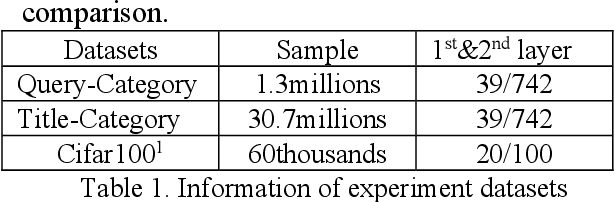

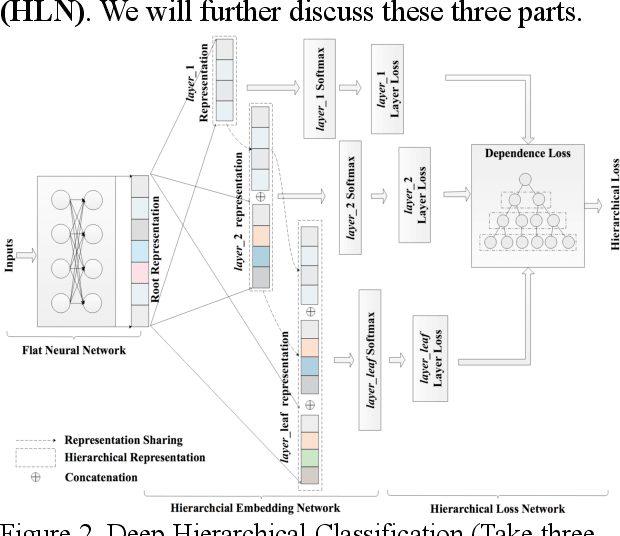

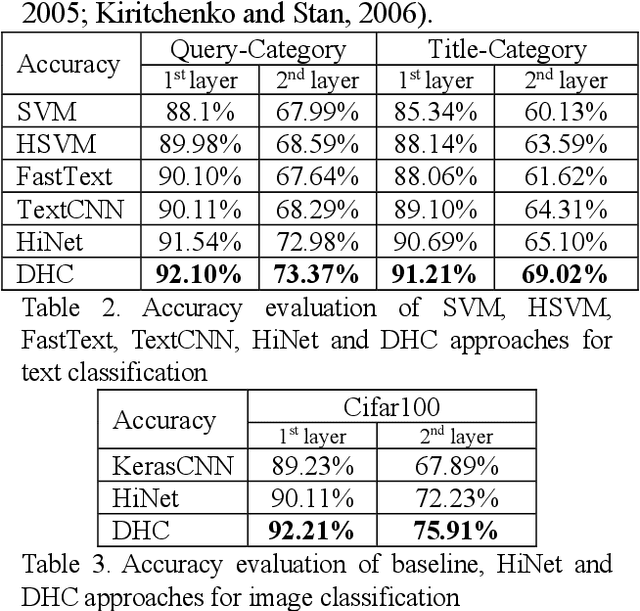

Deep Hierarchical Classification for Category Prediction in E-commerce System

May 14, 2020

Abstract: In e-commerce systems, category prediction is the task of automatically predicting the categories of given texts. Unlike traditional classification, where there are no relations between classes, category prediction is regarded as a standard hierarchical classification problem because categories are usually organized as a hierarchical tree. In this paper, we address hierarchical category prediction. We propose a Deep Hierarchical Classification framework, which incorporates multi-scale hierarchical information into neural networks and introduces a representation sharing strategy according to the category tree. We also define a novel combined loss function to penalize hierarchical prediction losses. The evaluation shows that the proposed approach outperforms existing approaches in accuracy.

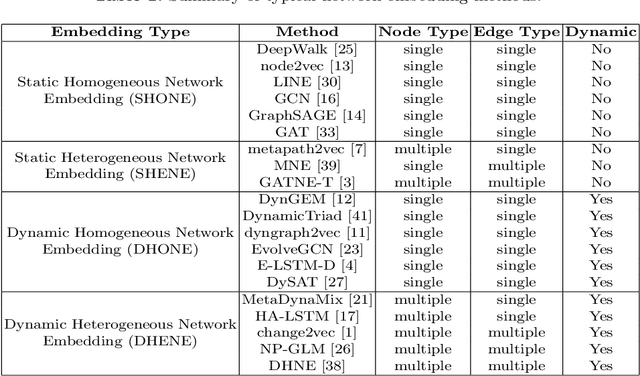

Modeling Dynamic Heterogeneous Network for Link Prediction using Hierarchical Attention with Temporal RNN

Apr 01, 2020

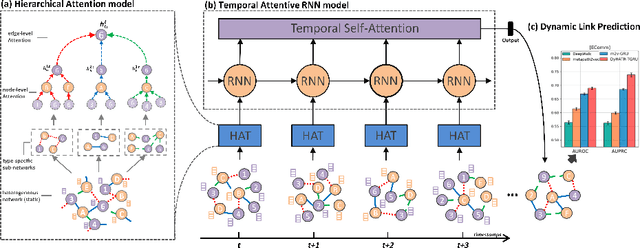

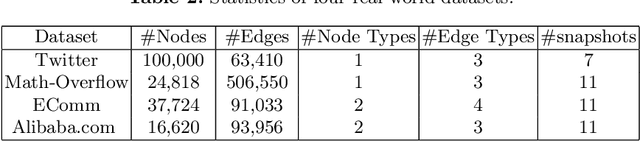

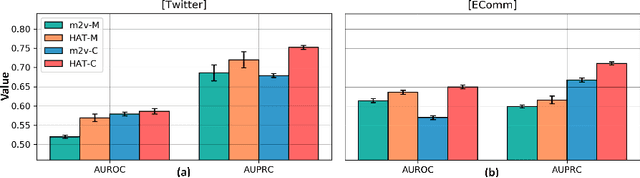

Abstract: Network embedding aims to learn low-dimensional representations of nodes while capturing the structural information of networks. It has achieved great success on many network analysis tasks such as link prediction and node classification. Most existing network embedding algorithms focus on how to learn static homogeneous networks effectively. However, networks in the real world are more complex, e.g., networks may consist of several types of nodes and edges (called heterogeneous information) and may vary over time in terms of dynamic nodes and edges (called evolutionary patterns). Limited work has been done on network embedding of dynamic heterogeneous networks, as it is challenging to learn both evolutionary and heterogeneous information simultaneously. In this paper, we propose a novel dynamic heterogeneous network embedding method, termed DyHATR, which uses hierarchical attention to learn heterogeneous information and incorporates recurrent neural networks with temporal attention to capture evolutionary patterns. We benchmark our method on four real-world datasets for the task of link prediction. Experimental results show that DyHATR significantly outperforms several state-of-the-art baselines.

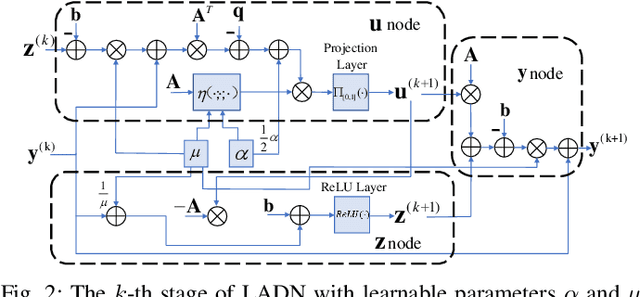

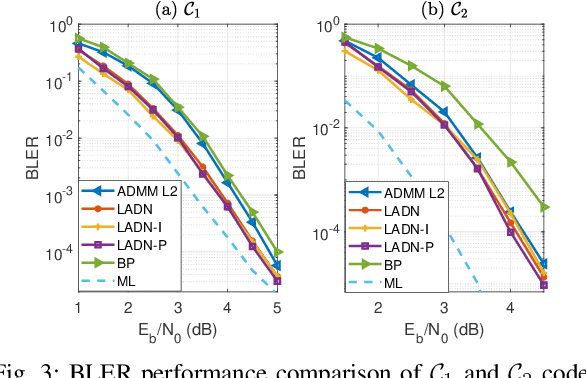

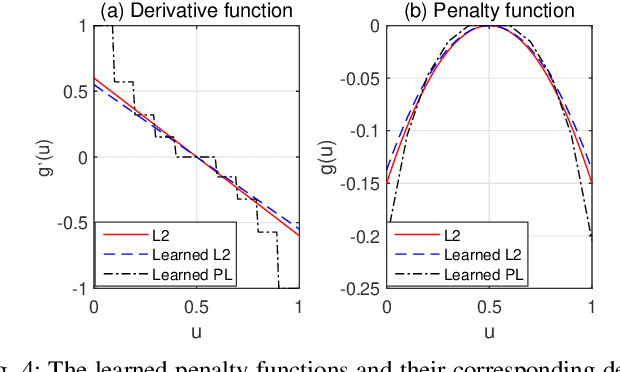

ADMM-based Decoder for Binary Linear Codes Aided by Deep Learning

Feb 14, 2020

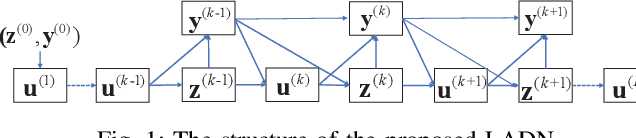

Abstract: Inspired by recent advances in deep learning (DL), this work presents a deep-neural-network-aided decoding algorithm for binary linear codes. Based on the concept of deep unfolding, we design a decoding network by unfolding the alternating direction method of multipliers (ADMM)-penalized decoder. In addition, we propose two improved versions of the proposed network. The first one transforms the penalty parameter into a set of iteration-dependent ones, and the second one adopts a specially designed penalty function, which is based on a piecewise linear function with adjustable slopes. Numerical results show that the resulting DL-aided decoders outperform the original ADMM-penalized decoder for various low-density parity-check (LDPC) codes with similar computational complexity.

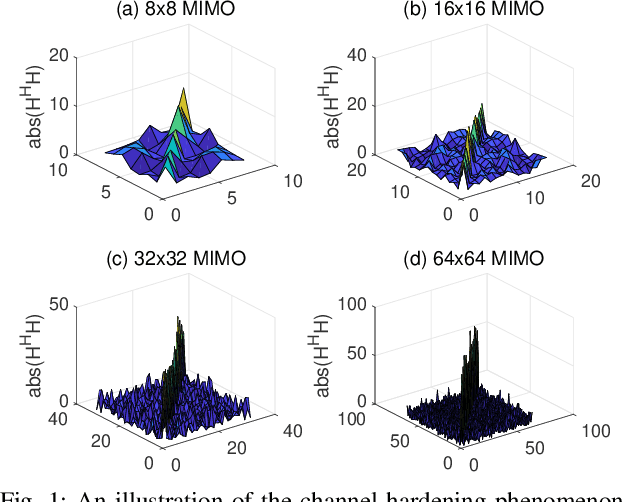

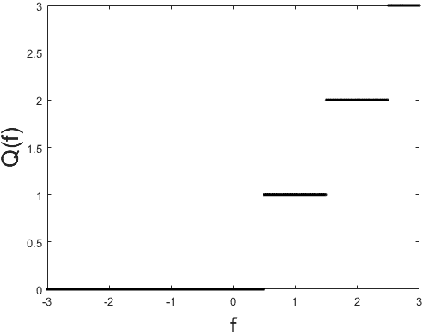

Learned Conjugate Gradient Descent Network for Massive MIMO Detection

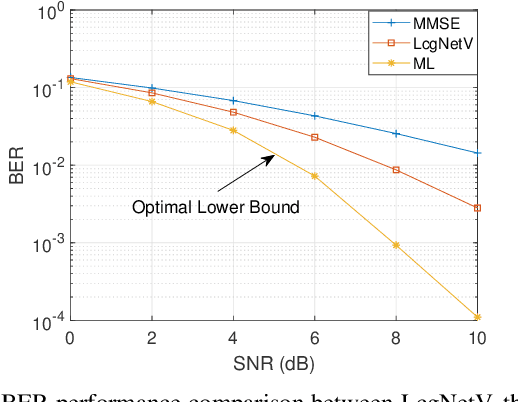

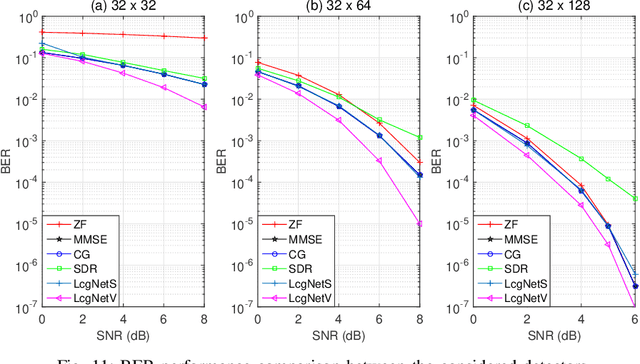

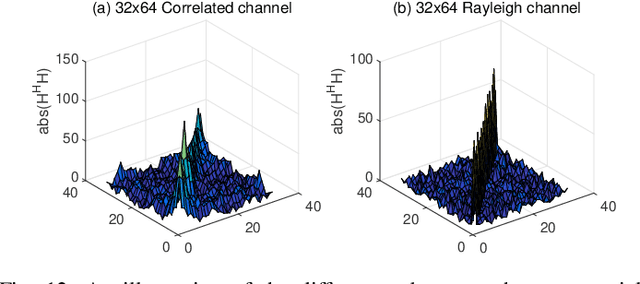

Jun 11, 2019

Abstract: In this work, we consider the use of model-driven deep learning techniques for massive multiple-input multiple-output (MIMO) detection. Compared with conventional MIMO systems, massive MIMO promises improved spectral efficiency, coverage, and range. Unfortunately, these benefits come at the cost of significantly increased computational complexity. To reduce the complexity of signal detection while guaranteeing performance, we present a learned conjugate gradient descent network (LcgNet), which is constructed by unfolding the iterative conjugate gradient descent (CG) detector. In the proposed network, instead of calculating the exact values of the scalar step-sizes, we explicitly learn their universal values. We can also enhance the proposed network by augmenting the dimensions of these step-sizes. Furthermore, to reduce memory costs, a novel quantized LcgNet is proposed, in which a low-resolution nonuniform quantizer is integrated into the LcgNet to quantize the aforementioned step-sizes. The quantizer is based on a specially designed soft staircase function with learnable parameters to adjust its shape. Because the number of learnable parameters is limited, the proposed networks are easy and fast to train. Numerical results demonstrate that the proposed network can achieve promising performance with much lower complexity.
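As background for the unfolding idea, here is a minimal sketch of the classical conjugate gradient iteration for a symmetric positive-definite system `A x = b`. This is the textbook algorithm, not the authors' network: the helper names and the 2x2 example system are assumptions. The scalars `alpha` and `beta` are the step-sizes computed exactly at each iteration, which, per the abstract, LcgNet replaces with learned values.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    return [dot(row, v) for row in A]


def conjugate_gradient(A, b, iterations):
    """Classical CG for a symmetric positive-definite matrix A (list of rows)."""
    x = [0.0] * len(b)
    r = list(b)          # residual b - A x, with x initialised to zero
    d = list(r)          # first search direction is the residual
    rs_old = dot(r, r)
    for _ in range(iterations):
        if rs_old < 1e-20:   # already converged; avoid dividing by zero
            break
        Ad = matvec(A, d)
        alpha = rs_old / dot(d, Ad)      # exact step-size along d
        x = [xi + alpha * di for xi, di in zip(x, d)]
        r = [ri - alpha * adi for ri, adi in zip(r, Ad)]
        rs_new = dot(r, r)
        beta = rs_new / rs_old           # weight for the next search direction
        d = [ri + beta * di for ri, di in zip(r, d)]
        rs_old = rs_new
    return x


# Hypothetical 2x2 example; in exact arithmetic CG solves an n x n system in n steps.
A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]
x = conjugate_gradient(A, b, iterations=2)
```

An unfolded network would fix the number of iterations as its depth and treat each layer's `alpha` and `beta` as trainable parameters, so neither needs to be computed from `A` at inference time.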

Conditional Single-view Shape Generation for Multi-view Stereo Reconstruction

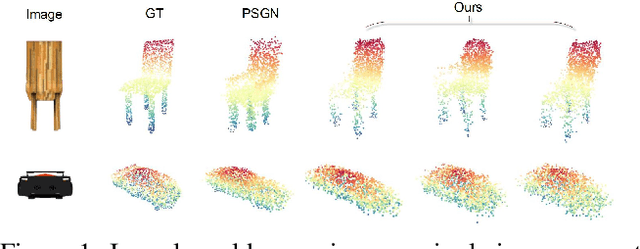

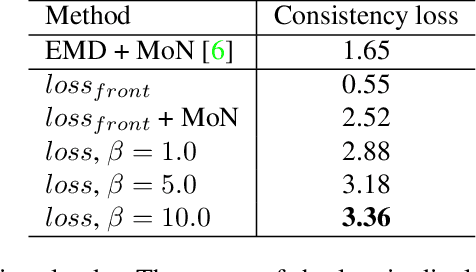

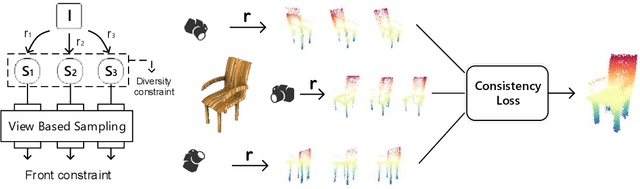

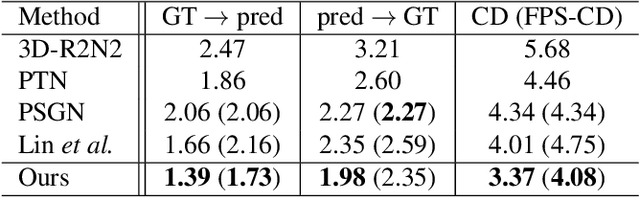

Apr 21, 2019

Abstract: In this paper, we present a new perspective on image-based shape generation. Most existing deep-learning-based shape reconstruction methods employ a single-view deterministic model, which is sometimes insufficient to determine a single ground-truth shape because the back part is occluded. In this work, we first introduce a conditional generative network to model the uncertainty in single-view reconstruction. Then, we formulate the task of multi-view reconstruction as taking the intersection of the predicted shape spaces of each single image. We design new differentiable guidance, including the front constraint, the diversity constraint, and the consistency loss, to enable effective single-view conditional generation and multi-view synthesis. Experimental results and ablation studies show that our proposed approach outperforms state-of-the-art methods on 3D reconstruction test error and demonstrate its generalization ability on real-world data.

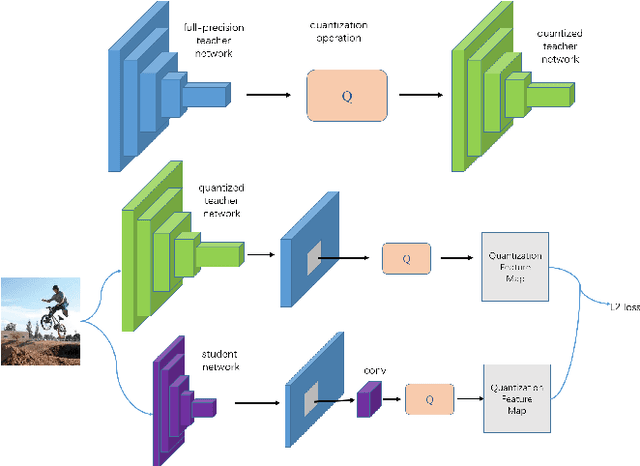

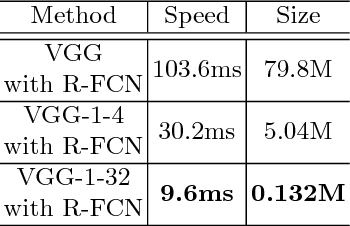

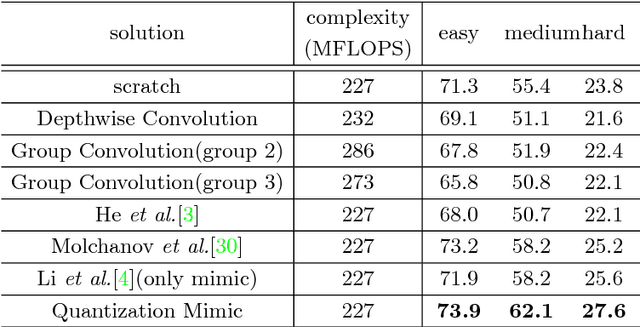

Quantization Mimic: Towards Very Tiny CNN for Object Detection

Sep 13, 2018

Abstract: In this paper, we propose a simple and general framework for training very tiny CNNs for object detection. Due to their limited representation ability, it is challenging to train very tiny networks for complicated tasks like detection. To the best of our knowledge, our method, called Quantization Mimic, is the first one focusing on very tiny networks. We utilize two types of acceleration methods: mimic and quantization. Mimic improves the performance of a student network by transferring knowledge from a teacher network. Quantization converts a full-precision network to a quantized one without large degradation of performance. If the teacher network is quantized, the search scope of the student network will be smaller. Using this property of quantization, we propose Quantization Mimic. It first quantizes the large network, then mimics it with a quantized small network. The quantization operation can help the student network better match the feature maps from the teacher network. To evaluate our approach, we carry out experiments on various popular CNNs including VGG and ResNet, as well as different detection frameworks including Faster R-CNN and R-FCN. Experiments on Pascal VOC and WIDER FACE verify that our Quantization Mimic algorithm can be applied in various settings and outperforms state-of-the-art model acceleration methods given limited computing resources.

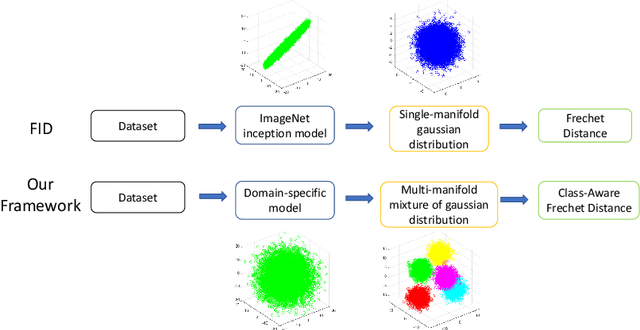

An Improved Evaluation Framework for Generative Adversarial Networks

Jul 19, 2018

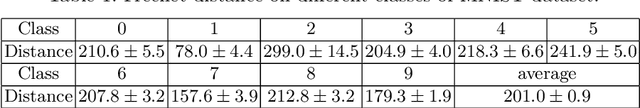

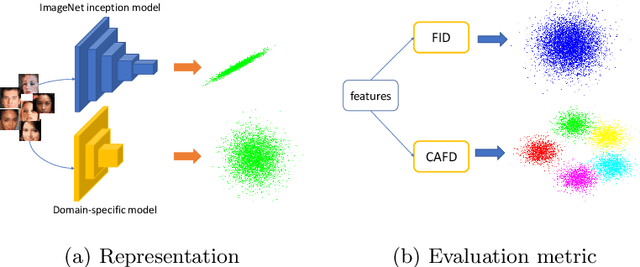

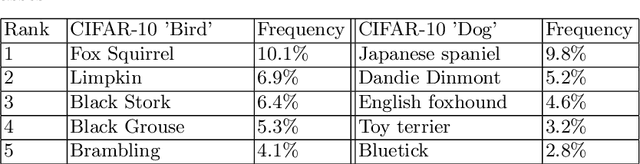

Abstract: In this paper, we propose an improved quantitative evaluation framework for Generative Adversarial Networks (GANs) on generating domain-specific images, where we improve conventional evaluation methods on two levels: the feature representation and the evaluation metric. Unlike most existing evaluation frameworks, which transfer the representation of the ImageNet Inception model to map images onto the feature space, our framework uses a specialized encoder to acquire fine-grained domain-specific representations. Moreover, for datasets with multiple classes, we propose the Class-Aware Fréchet Distance (CAFD), which employs a Gaussian mixture model on the feature space to better fit the multi-manifold feature distribution. Experiments and analysis on both the feature level and the image level were conducted to demonstrate the improvements of our proposed framework over the recently proposed state-of-the-art FID method. To the best of our knowledge, we are the first to provide counterexamples where FID gives results inconsistent with human judgments. The experiments show that our framework is able to overcome the shortcomings of FID and improves robustness. Code will be made available.
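For reference, the FID baseline mentioned in the abstract is commonly defined as the Fréchet distance between two Gaussians fitted to feature embeddings of real and generated images. The symbols below are notation chosen here, with $(\mu_r, \Sigma_r)$ and $(\mu_g, \Sigma_g)$ denoting the mean and covariance of the real and generated features. The abstract does not give the exact form of CAFD, so only the standard single-Gaussian distance is shown:

$$
d^2\big((\mu_r, \Sigma_r), (\mu_g, \Sigma_g)\big) = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left(\Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2}\right)
$$

Because this fits a single Gaussian to each feature set, it cannot represent several separated class clusters, which is the limitation a mixture model on the feature space is meant to address.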

Two-Stream Binocular Network: Accurate Near Field Finger Detection Based On Binocular Images

Apr 26, 2018

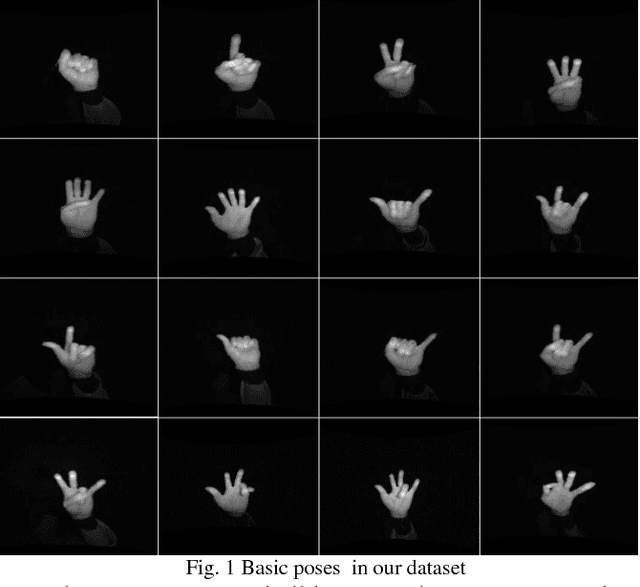

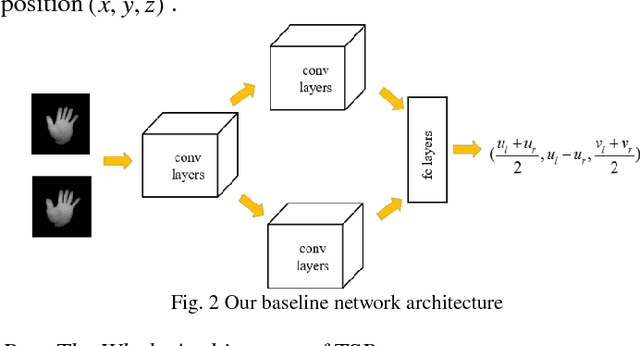

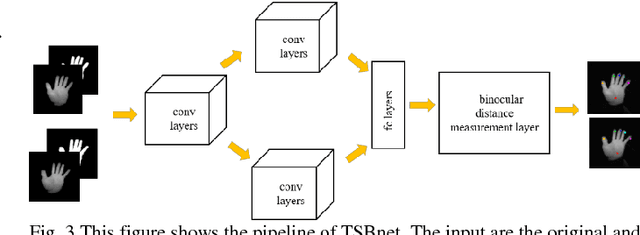

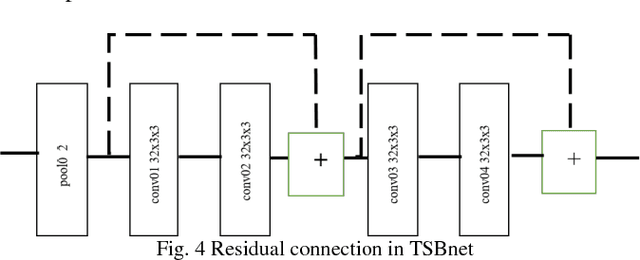

Abstract: Fingertip detection plays an important role in human-computer interaction. Previous works transform binocular images into depth images and then use depth-based hand pose estimation methods to predict the 3D positions of fingertips. In contrast, we propose a new framework, named Two-Stream Binocular Network (TSBnet), to detect fingertips directly from binocular images. TSBnet first shares convolutional layers for low-level features of the right and left images. It then extracts high-level features in two-stream convolutional networks separately. Further, we add a new layer, the binocular distance measurement layer, to improve the performance of our model. To verify our scheme, we build a binocular hand image dataset containing about 117k pairs of images in the training set and 10k pairs of images in the test set. Our method achieves an average error of 10.9 mm on our test set, outperforming previous work by 5.9 mm (a 35.1% relative improvement).

* Published in: Visual Communications and Image Processing (VCIP), 2017 IEEE. Original IEEE publication available at https://ieeexplore.ieee.org/abstract/document/8305146/. Dataset available at https://sites.google.com/view/thuhand17
