Scaling Pre-trained Language Models to Deeper via Parameter-efficient Architecture

Mar 27, 2023 · Peiyu Liu, Ze-Feng Gao, Wayne Xin Zhao, Ji-Rong Wen

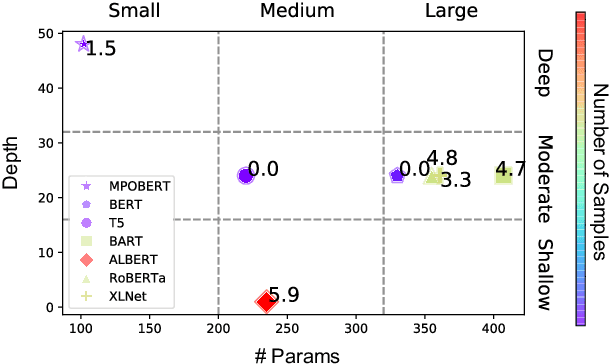

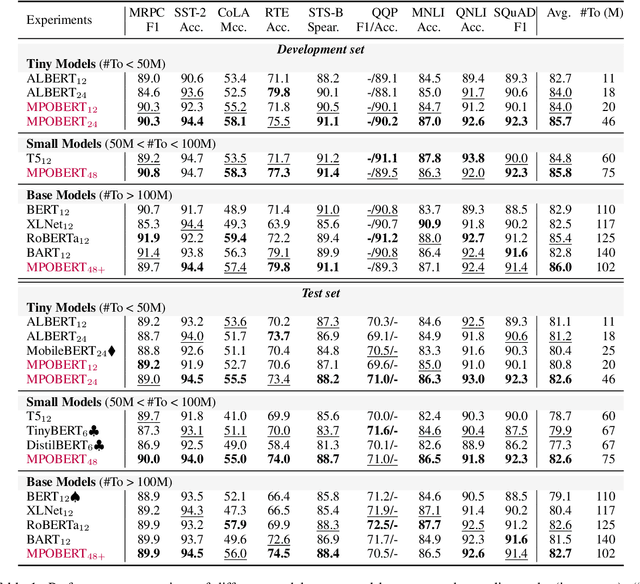

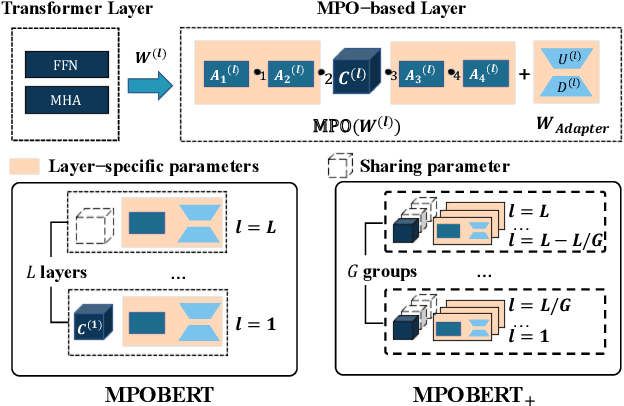

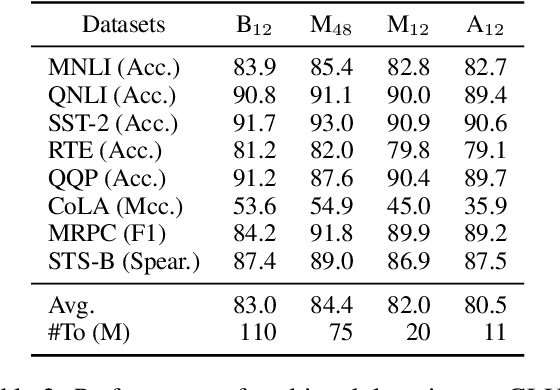

In this paper, we propose a highly parameter-efficient approach to scaling pre-trained language models (PLMs) to a deeper model depth. Unlike prior work that shares all parameters or uses extra blocks, we design a more capable parameter-sharing architecture based on matrix product operator (MPO). MPO decomposition can reorganize and factorize the information of a parameter matrix into two parts: the major part that contains the major information (central tensor) and the supplementary part that only has a small proportion of parameters (auxiliary tensors). Based on such a decomposition, our architecture shares the central tensor across all layers for reducing the model size and meanwhile keeps layer-specific auxiliary tensors (also using adapters) for enhancing the adaptation flexibility. To improve the model training, we further propose a stable initialization algorithm tailored for the MPO-based architecture. Extensive experiments have demonstrated the effectiveness of our proposed model in reducing the model size and achieving highly competitive performance.
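The saving from sharing the central tensor can be illustrated with simple parameter arithmetic. This is a minimal sketch, not the authors' implementation: the function names and all sizes are hypothetical, adapters are ignored, and the MPO decomposition itself is not performed.

```python
def shared_param_count(num_layers, central_params, auxiliary_params_per_layer):
    """Parameter count when one central tensor is shared by all layers
    and each layer keeps its own (small) auxiliary tensors."""
    return central_params + num_layers * auxiliary_params_per_layer


def unshared_param_count(num_layers, central_params, auxiliary_params_per_layer):
    """Parameter count when every layer stores its own central and auxiliary tensors."""
    return num_layers * (central_params + auxiliary_params_per_layer)


# Hypothetical sizes, chosen only for illustration (not taken from the paper).
layers = 24
central = 1_000_000
auxiliary = 50_000

shared = shared_param_count(layers, central, auxiliary)
unshared = unshared_param_count(layers, central, auxiliary)
print(f"shared: {shared:,}  unshared: {unshared:,}  ratio: {shared / unshared:.3f}")
```

Under these assumed sizes, adding a layer costs only the auxiliary parameters, which is why depth can grow without a proportional growth in model size.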

Diffusion Models for Non-autoregressive Text Generation: A Survey

Mar 12, 2023 · Yifan Li, Kun Zhou, Wayne Xin Zhao, Ji-Rong Wen

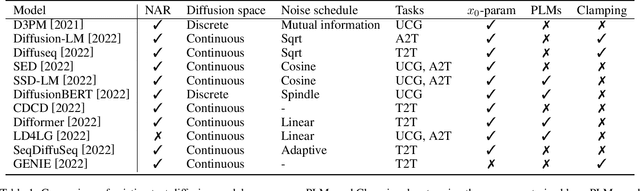

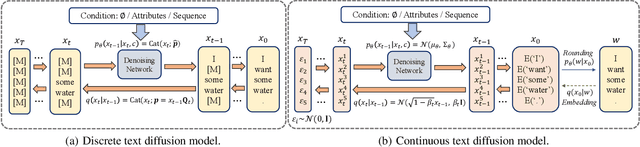

Non-autoregressive (NAR) text generation has attracted much attention in the field of natural language processing because it greatly reduces inference latency, though at the cost of generation accuracy. Recently, diffusion models, a class of latent variable generative models, have been introduced into NAR text generation, showing improved generation quality. In this survey, we review the recent progress in diffusion models for NAR text generation. As the background, we first present the general definition of diffusion models and the text diffusion models, and then discuss their merits for NAR generation. As the core content, we further introduce two mainstream diffusion models in existing text diffusion works, and review the key designs of the diffusion process. Moreover, we discuss the utilization of pre-trained language models (PLMs) for text diffusion models and introduce optimization techniques for text data. Finally, we discuss several promising directions and conclude this paper. Our survey aims to provide researchers with a systematic reference of related research on text diffusion models for NAR generation.
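For readers new to the topic, the forward (noising) half of a diffusion process can be sketched as follows. The abstract does not spell out a formulation, so this assumes the standard Gaussian closed form x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε applied to a continuous token embedding; the linear schedule, its default values, and all function names are illustrative assumptions.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T, linearly spaced (an assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def noise_embedding(x0, alpha_bar, rng):
    """Sample x_t given x_0: scale the signal and add Gaussian noise."""
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [signal_scale * v + noise_scale * rng.gauss(0.0, 1.0) for v in x0]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
embedding = [0.5, -1.0, 0.25]  # hypothetical token embedding
slightly_noisy = noise_embedding(embedding, alpha_bars[10], rng)
mostly_noise = noise_embedding(embedding, alpha_bars[-1], rng)
```

A generation model is then trained to reverse this corruption; that reverse step is model-specific and is not shown here.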

A Survey on Long Text Modeling with Transformers

Feb 28, 2023 · Zican Dong, Tianyi Tang, Lunyi Li, Wayne Xin Zhao

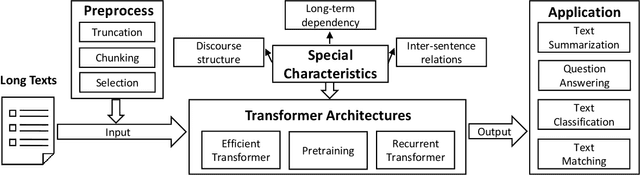

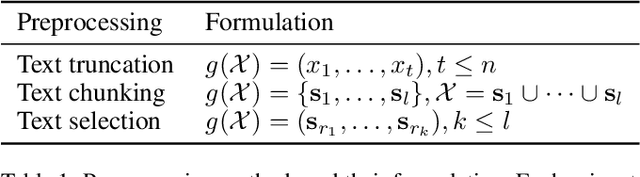

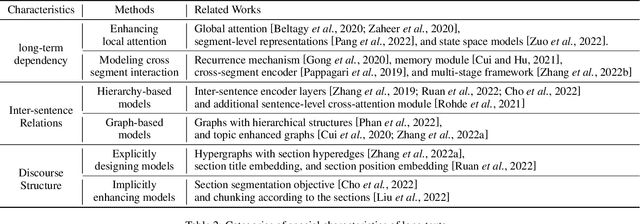

Modeling long texts has been an essential technique in the field of natural language processing (NLP). With the ever-growing number of long documents, it is important to develop effective modeling methods that can process and analyze such texts. However, long texts pose important research challenges for existing text models, with more complex semantics and special characteristics. In this paper, we provide an overview of the recent advances in long text modeling based on Transformer models. Firstly, we introduce the formal definition of long text modeling. Then, as the core content, we discuss how to process long input to satisfy the length limitation and design improved Transformer architectures to effectively extend the maximum context length. Following this, we discuss how to adapt Transformer models to capture the special characteristics of long texts. Finally, we describe four typical applications involving long text modeling and conclude this paper with a discussion of future directions. Our survey intends to provide researchers with a synthesis and pointer to related work on long text modeling.
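One common way to fit a long input into a fixed length limit is to split it into overlapping windows. The abstract does not name specific techniques, so this is only an assumed illustration; `chunk_tokens` and its parameters are hypothetical names.

```python
def chunk_tokens(tokens, max_length, stride):
    """Split a token list into windows of at most max_length tokens.

    Consecutive windows start `stride` tokens apart, so they overlap by
    max_length - stride tokens when stride < max_length.
    """
    if max_length <= 0 or not 0 < stride <= max_length:
        raise ValueError("need max_length > 0 and 0 < stride <= max_length")
    chunks = []
    start = 0
    while True:
        chunks.append(tokens[start:start + max_length])
        if start + max_length >= len(tokens):
            break  # this window already reaches the end of the input
        start += stride
    return chunks


tokens = list(range(10))  # stand-in for token ids
chunks = chunk_tokens(tokens, max_length=4, stride=3)
```

Each window can then be encoded separately; how the per-window outputs are aggregated depends on the task.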

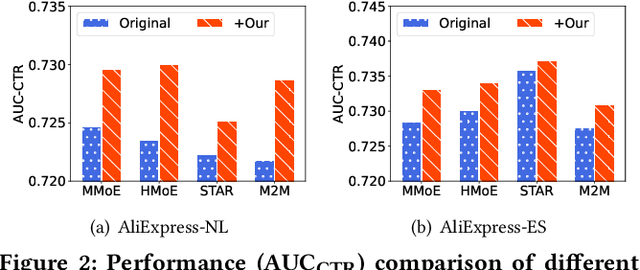

Hybrid Contrastive Constraints for Multi-Scenario Ad Ranking

Feb 06, 2023 · Shanlei Mu, Penghui Wei, Wayne Xin Zhao, Shaoguo Liu, Liang Wang, Bo Zheng

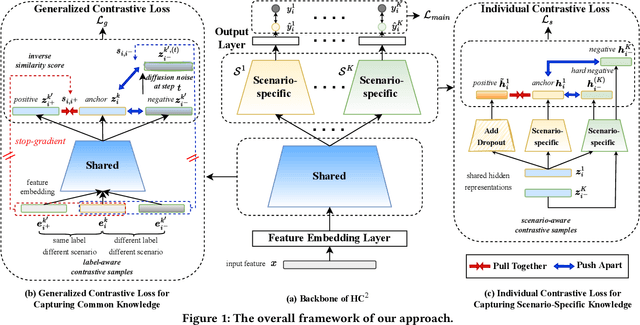

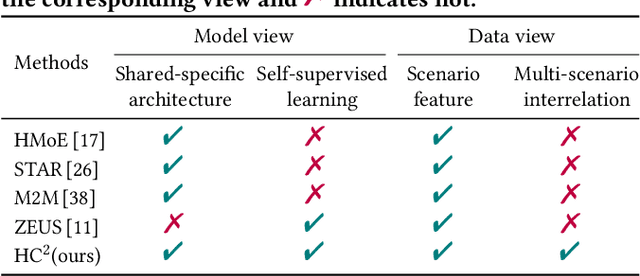

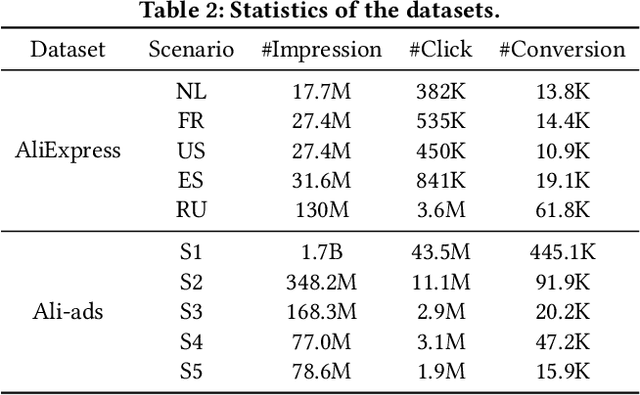

Multi-scenario ad ranking aims at leveraging the data from multiple domains or channels for training a unified ranking model to improve the performance at each individual scenario. Although the research on this task has made important progress, it still lacks the consideration of cross-scenario relations, which limits learning capability and makes interrelation modeling difficult. In this paper, we propose a Hybrid Contrastive Constrained approach (HC²) for multi-scenario ad ranking. To enhance the modeling of data interrelation, we design a hybrid contrastive learning approach to capture commonalities and differences among multiple scenarios. The core of our approach consists of two contrastive losses, namely generalized and individual contrastive loss, which aim at capturing common knowledge and scenario-specific knowledge, respectively. To adapt contrastive learning to the complex multi-scenario setting, we propose a series of important improvements. For generalized contrastive loss, we enhance contrastive learning by extending the contrastive samples (label-aware and diffusion noise enhanced contrastive samples) and reweighting the contrastive samples (reciprocal similarity weighting). For individual contrastive loss, we use the strategies of dropout-based augmentation and cross-scenario encoding for generating meaningful positive and negative contrastive samples, respectively. Extensive experiments on both offline evaluation and online tests have demonstrated the effectiveness of the proposed HC² by comparing it with a number of competitive baselines.
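As background for the two losses, a generic contrastive (InfoNCE-style) objective is sketched below. This is the textbook form, not the paper's generalized or individual loss: the sample construction and reweighting described above are omitted, and the temperature value and function names are assumptions.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))


def info_nce(anchor, positive, negatives, temperature=0.1):
    """Negative log-probability of picking the positive among all candidates."""
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))


anchor = [1.0, 0.0]
# Positive close to the anchor, negatives far away: low loss.
easy_loss = info_nce(anchor, [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]])
# Positive far from the anchor, a negative close to it: high loss.
hard_loss = info_nce(anchor, [0.0, 1.0], [[0.9, 0.1], [-1.0, 0.0]])
```

The loss is small when the anchor is closer to its positive than to any negative, which is the property both contrastive terms rely on.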

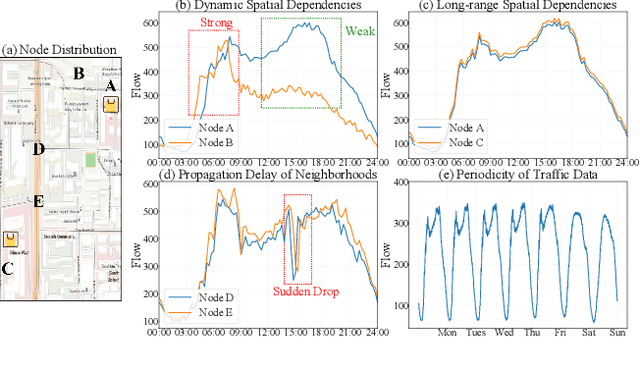

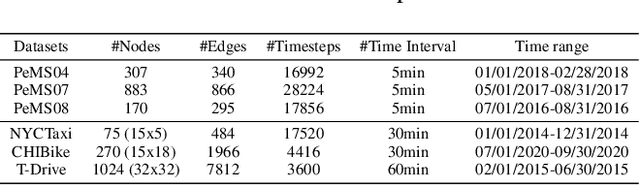

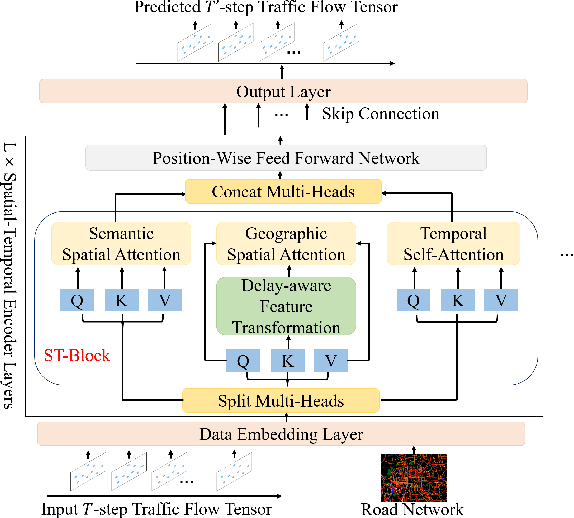

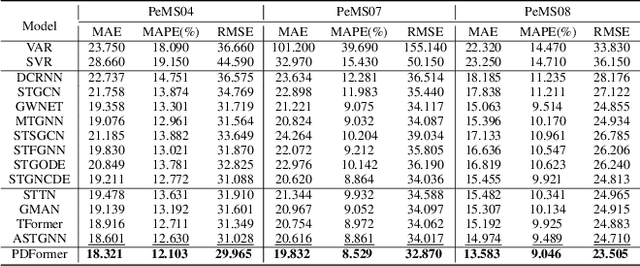

PDFormer: Propagation Delay-aware Dynamic Long-range Transformer for Traffic Flow Prediction

Jan 19, 2023 · Jiawei Jiang, Chengkai Han, Wayne Xin Zhao, Jingyuan Wang

As a core technology of Intelligent Transportation System, traffic flow prediction has a wide range of applications. The fundamental challenge in traffic flow prediction is to effectively model the complex spatial-temporal dependencies in traffic data. Spatial-temporal Graph Neural Network (GNN) models have emerged as one of the most promising methods to solve this problem. However, GNN-based models have three major limitations for traffic prediction: i) Most methods model spatial dependencies in a static manner, which limits the ability to learn dynamic urban traffic patterns; ii) Most methods only consider short-range spatial information and are unable to capture long-range spatial dependencies; iii) These methods ignore the fact that the propagation of traffic conditions between locations has a time delay in traffic systems. To this end, we propose a novel Propagation Delay-aware dynamic long-range transFormer, namely PDFormer, for accurate traffic flow prediction. Specifically, we design a spatial self-attention module to capture the dynamic spatial dependencies. Then, two graph masking matrices are introduced to highlight spatial dependencies from short- and long-range views. Moreover, a traffic delay-aware feature transformation module is proposed to empower PDFormer with the capability of explicitly modeling the time delay of spatial information propagation. Extensive experimental results on six real-world public traffic datasets show that our method can not only achieve state-of-the-art performance but also exhibit competitive computational efficiency. Moreover, we visualize the learned spatial-temporal attention map to make our model highly interpretable.
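The idea of restricting spatial attention with a graph mask can be sketched as a masked softmax. How the two masking matrices are actually built is not stated in the abstract; the hop-distance threshold used here is a stand-in assumption, and all names are hypothetical.

```python
import math


def hop_mask(hop_distances, max_hops):
    """1 where node j is within max_hops of node i, else 0 (assumed mask rule)."""
    return [[1 if h <= max_hops else 0 for h in row] for row in hop_distances]


def masked_attention_weights(scores, mask):
    """Row-wise softmax over attention scores, with masked-out pairs forced to zero."""
    weights = []
    for score_row, mask_row in zip(scores, mask):
        allowed = [s for s, m in zip(score_row, mask_row) if m]
        if not allowed:
            raise ValueError("each node needs at least one unmasked neighbor")
        peak = max(allowed)  # subtract the max for numerical stability
        exps = [math.exp(s - peak) if m else 0.0
                for s, m in zip(score_row, mask_row)]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Three sensors on a line: 0 - 1 - 2 (hypothetical road graph).
hops = [[0, 1, 2],
        [1, 0, 1],
        [2, 1, 0]]
scores = [[0.0, 0.0, 0.0] for _ in range(3)]  # uniform raw scores
short_range = masked_attention_weights(scores, hop_mask(hops, max_hops=1))
```

With uniform scores, sensor 0 splits its attention between itself and sensor 1 and assigns nothing to sensor 2, which lies outside the assumed short-range neighborhood.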

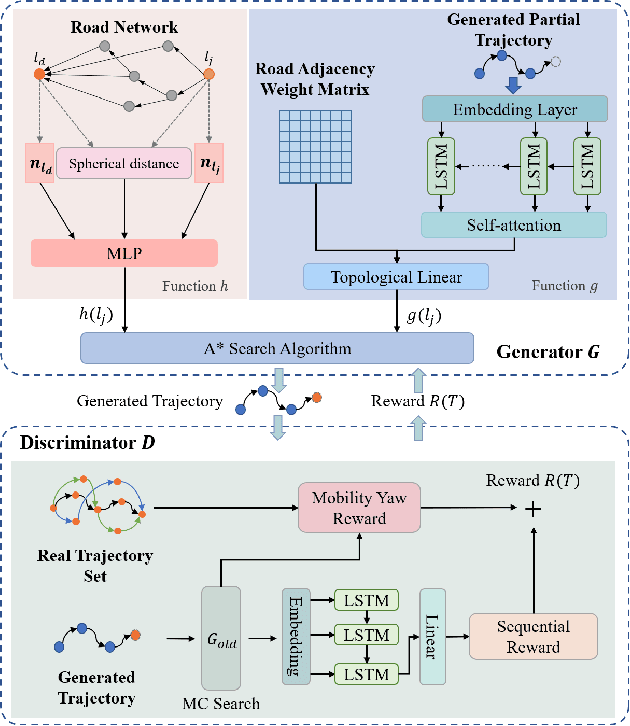

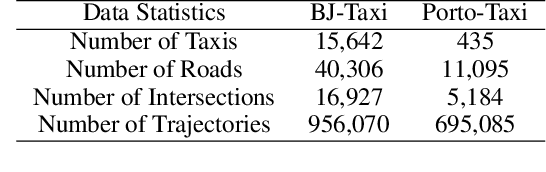

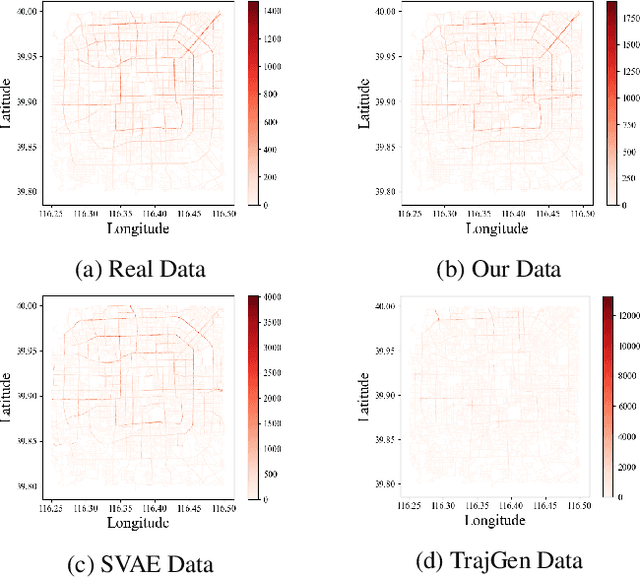

Continuous Trajectory Generation Based on Two-Stage GAN

Jan 16, 2023 · Wenjun Jiang, Wayne Xin Zhao, Jingyuan Wang, Jiawei Jiang

Simulating human mobility and generating large-scale trajectories are of great use in many real-world applications, such as urban planning, epidemic spreading analysis, and geographic privacy protection. Although many previous works have studied the problem of trajectory generation, the continuity of the generated trajectories has been neglected, which makes these methods useless for practical urban simulation scenarios. To solve this problem, we propose a novel two-stage generative adversarial framework to generate continuous trajectories on the road network, namely TS-TrajGen, which efficiently integrates prior domain knowledge of human mobility with a model-free learning paradigm. Specifically, we build the generator under the human mobility hypothesis of the A* algorithm to learn the human mobility behavior. For the discriminator, we combine the sequential reward with the mobility yaw reward to enhance the effectiveness of the generator. Finally, we propose a novel two-stage generation process to overcome the weak point of the existing stochastic generation process. Extensive experiments on two real-world datasets and two case studies demonstrate that our framework yields significant improvements over the state-of-the-art methods.
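For reference, the classical A* search that the generator's hypothesis builds on can be sketched as below. This is plain A* on a toy road graph with fixed edge costs and a caller-supplied heuristic; nothing here is learned, and it is not the TS-TrajGen generator. The graph and all names are hypothetical.

```python
import heapq


def a_star(graph, start, goal, heuristic):
    """Return a lowest-cost path from start to goal, or None if unreachable.

    graph maps each node to a list of (neighbor, edge_cost) pairs.
    heuristic(node, goal) must never overestimate the remaining cost.
    """
    frontier = [(heuristic(start, goal), 0.0, start, [start])]
    best_cost = {start: 0.0}
    while frontier:
        _, cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return path
        if cost > best_cost.get(node, float("inf")):
            continue  # stale entry: a cheaper route to this node was found
        for neighbor, edge_cost in graph.get(node, []):
            new_cost = cost + edge_cost
            if new_cost < best_cost.get(neighbor, float("inf")):
                best_cost[neighbor] = new_cost
                priority = new_cost + heuristic(neighbor, goal)
                heapq.heappush(frontier,
                               (priority, new_cost, neighbor, path + [neighbor]))
    return None


# Toy directed road network with travel costs.
roads = {
    "A": [("B", 1.0), ("C", 4.0)],
    "B": [("C", 1.0), ("D", 5.0)],
    "C": [("D", 1.0)],
    "D": [],
}
zero_heuristic = lambda node, goal: 0.0  # trivially admissible
route = a_star(roads, "A", "D", zero_heuristic)
```

Because every step follows an existing road segment, the returned route is continuous on the network, which is the property the abstract emphasizes.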

TikTalk: A Multi-Modal Dialogue Dataset for Real-World Chitchat

Jan 14, 2023 · Hongpeng Lin, Ludan Ruan, Wenke Xia, Peiyu Liu, Jingyuan Wen, Yixin Xu, Di Hu, Ruihua Song, Wayne Xin Zhao, Qin Jin, Zhiwu Lu

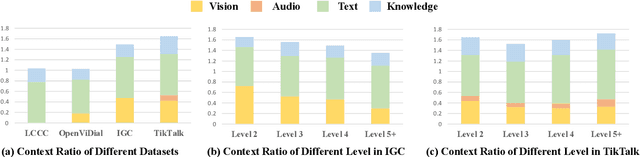

We present a novel multi-modal chitchat dialogue dataset, TikTalk, aimed at facilitating the research of intelligent chatbots. It consists of the videos and corresponding dialogues users generate on video social applications. In contrast to existing multi-modal dialogue datasets, we construct dialogue corpora based on video comment-reply pairs, which is more similar to chitchat in real-world dialogue scenarios. Our dialogue context includes three modalities: text, vision, and audio. Compared with previous image-based dialogue datasets, the richer sources of context in TikTalk lead to a greater diversity of conversations. TikTalk contains over 38K videos and 367K dialogues. Data analysis shows that responses in TikTalk are correlated with various contexts and external knowledge. It poses a great challenge for the deep understanding of multi-modal information and the generation of responses. We evaluate several baselines on three types of automatic metrics and conduct case studies. Experimental results demonstrate that there is still much room for future improvement on TikTalk. Our dataset is available at https://github.com/RUC-AIMind/TikTalk.

TextBox 2.0: A Text Generation Library with Pre-trained Language Models

Dec 26, 2022 · Tianyi Tang, Junyi Li, Zhipeng Chen, Yiwen Hu, Zhuohao Yu, Wenxun Dai, Zican Dong, Xiaoxue Cheng, Yuhao Wang, Wayne Xin Zhao, Jian-Yun Nie, Ji-Rong Wen

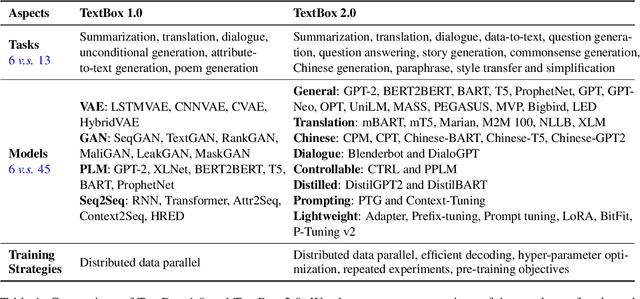

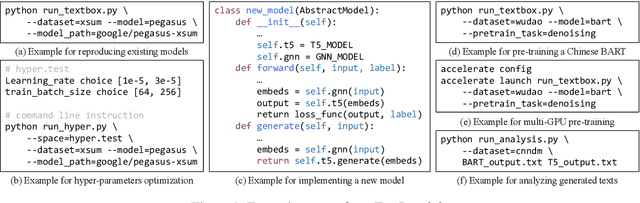

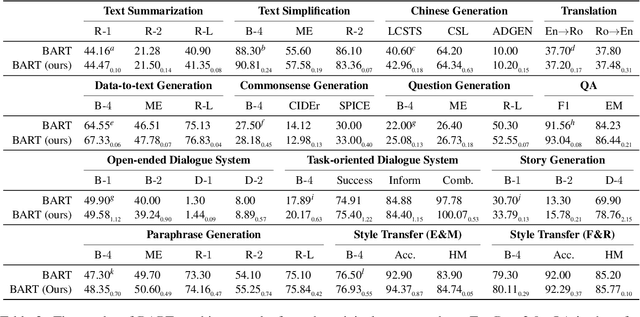

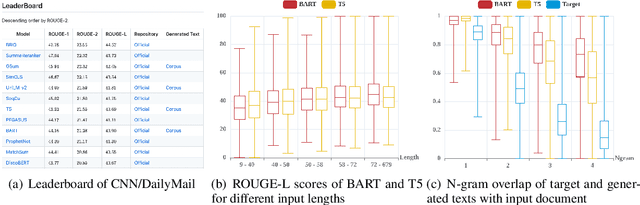

To facilitate research on text generation, this paper presents a comprehensive and unified library, TextBox 2.0, focusing on the use of pre-trained language models (PLMs). To be comprehensive, our library covers 13 common text generation tasks and their corresponding 83 datasets and further incorporates 45 PLMs covering general, translation, Chinese, dialogue, controllable, distilled, prompting, and lightweight PLMs. We also implement 4 efficient training strategies and provide 4 generation objectives for pre-training new PLMs from scratch. To be unified, we design the interfaces to support the entire research pipeline (from data loading to training and evaluation), ensuring that each step can be fulfilled in a unified way. Despite the rich functionality, it is easy to use our library, either through the friendly Python API or command line. To validate the effectiveness of our library, we conduct extensive experiments and exemplify four types of research scenarios. The project is released at the link: https://github.com/RUCAIBox/TextBox.

Visually-augmented pretrained language models for NLP tasks without images

Dec 15, 2022 · Hangyu Guo, Kun Zhou, Wayne Xin Zhao, Qinyu Zhang, Ji-Rong Wen

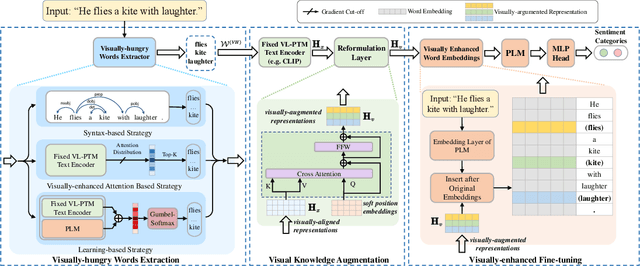

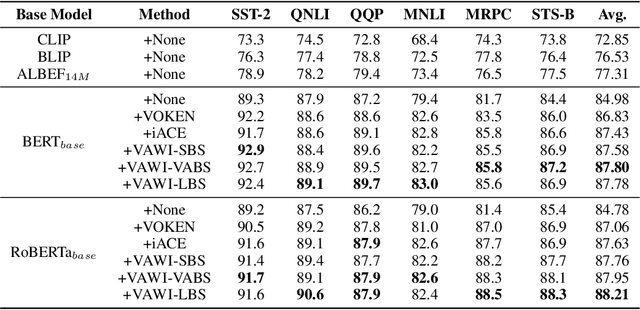

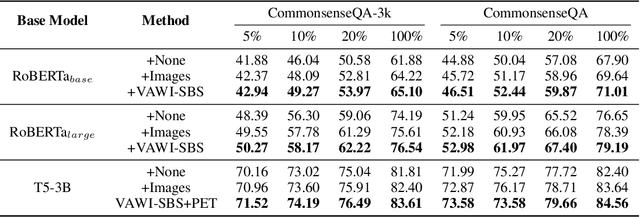

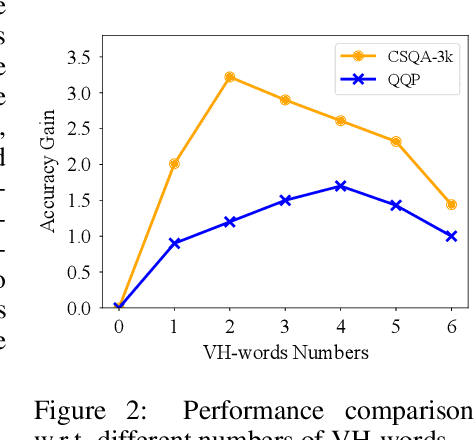

Although pre-trained language models (PLMs) have shown impressive performance by text-only self-supervised training, they have been found to lack visual semantics or commonsense, e.g., sizes, shapes, and colors of commonplace objects. Existing solutions often rely on explicit images for visual knowledge augmentation (requiring time-consuming retrieval or generation), and they also conduct the augmentation for the whole input text, without considering whether it is actually needed in specific inputs or tasks. To address these issues, we propose a novel visually-augmented fine-tuning approach, namely VAWI, that can be generally applied to various PLMs or NLP tasks without using any retrieved or generated images. Specifically, we first identify the visually-hungry words (VH-words) from input text via a token selector, where three different methods have been proposed, including syntax-, attention- and learning-based strategies. Then, we adopt a fixed CLIP text encoder to generate the visually-augmented representations of these VH-words. As it has been pre-trained by a vision-language alignment task on a large-scale corpus, it is capable of injecting visual semantics into the aligned text representations. Finally, the visually-augmented features will be fused and transformed into the pre-designed visual prompts based on VH-words, which can be inserted into PLMs to enrich the visual semantics in word representations. We conduct extensive experiments on ten NLP tasks, i.e., GLUE benchmark, CommonsenseQA, CommonGen, and SNLI-VE. Experimental results show that our approach can consistently improve the performance of BERT, RoBERTa, BART, and T5 at different scales, and outperform several competitive baselines significantly. Our code and data are publicly available at https://github.com/RUCAIBox/VAWI.

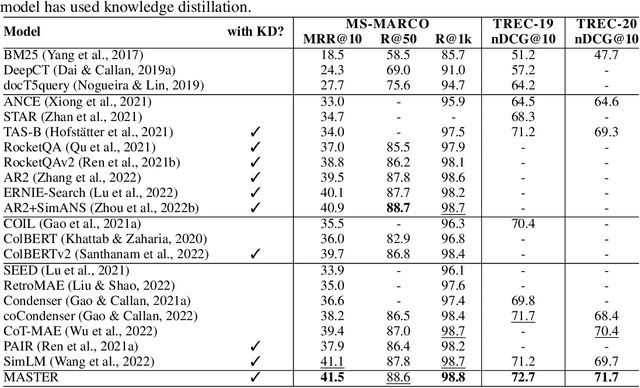

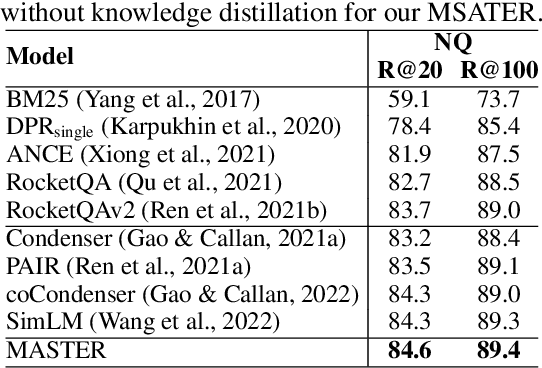

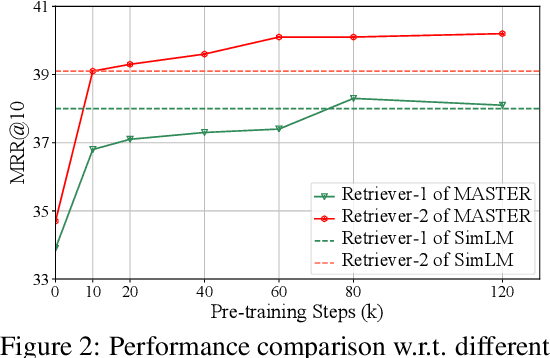

MASTER: Multi-task Pre-trained Bottlenecked Masked Autoencoders are Better Dense Retrievers

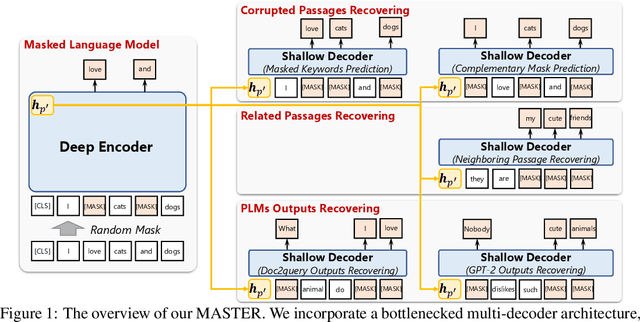

Dec 15, 2022 · Kun Zhou, Xiao Liu, Yeyun Gong, Wayne Xin Zhao, Daxin Jiang, Nan Duan, Ji-Rong Wen

Dense retrieval aims to map queries and passages into low-dimensional vector space for efficient similarity measuring, showing promising effectiveness in various large-scale retrieval tasks. Since most existing methods commonly adopt pre-trained Transformers (e.g. BERT) for parameter initialization, some work focuses on proposing new pre-training tasks for compressing the useful semantic information from passages into dense vectors, achieving remarkable performances. However, it is still challenging to effectively capture the rich semantic information and relations about passages into the dense vectors via one single particular pre-training task. In this work, we propose a multi-task pre-trained model, MASTER, that unifies and integrates multiple pre-training tasks with different learning objectives under the bottlenecked masked autoencoder architecture. Concretely, MASTER utilizes a multi-decoder architecture to integrate three types of pre-training tasks: corrupted passage recovery, related passage recovery, and PLM output recovery. By incorporating a shared deep encoder, we construct a representation bottleneck in our architecture, compressing the abundant semantic information across tasks into dense vectors. The first two types of tasks concentrate on capturing the semantic information of passages and relationships among them within the pre-training corpus. The third one can capture the knowledge beyond the corpus from external PLMs (e.g. GPT-2). Extensive experiments on several large-scale passage retrieval datasets have shown that our approach outperforms the previous state-of-the-art dense retrieval methods. Our code and data are publicly released at https://github.com/microsoft/SimXNS.
