Tony Q. S. Quek

Duality-Guided Graph Learning for Real-Time Joint Connectivity and Routing in LEO Mega-Constellations

Jan 29, 2026

Abstract: Laser inter-satellite links (LISLs) of low Earth orbit (LEO) mega-constellations enable high-capacity backbone connectivity in non-terrestrial networks, but their management is challenged by limited laser communication terminals, mechanical pointing constraints, and rapidly time-varying network topologies. This paper studies the joint problem of LISL connection establishment, traffic routing, and flow-rate allocation under heterogeneous global traffic demand and gateway availability. We formulate the problem as a mixed-integer optimization over large-scale, time-varying constellation graphs and develop a Lagrangian dual decomposition that interprets per-link dual variables as congestion prices coordinating connectivity and routing decisions. To overcome the prohibitive latency of iterative dual updates, we propose DeepLaDu, a Lagrangian duality-guided deep learning framework that trains a graph neural network (GNN) to directly infer per-link (edge-level) congestion prices from the constellation state in a single forward pass. We enable scalable and stable training using a subgradient-based edge-level loss in DeepLaDu. We analyze the convergence and computational complexity of the proposed approach and evaluate it using realistic Starlink-like constellations with optical and traffic constraints. Simulation results show that DeepLaDu achieves up to 20% higher network throughput than non-joint or heuristic baselines, while matching the performance of iterative dual optimization with orders-of-magnitude lower computation time, suitable for real-time operation in dynamic LEO networks.
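
The congestion-price reading of per-link dual variables can be illustrated with a small projected-subgradient loop. This is only a minimal sketch under simplifying assumptions that are not taken from the paper: routes are fixed, each flow has a logarithmic utility, and there are no integer connectivity decisions. The function name `dual_prices` and its parameters are hypothetical. It shows the kind of iterative dual update whose latency the abstract describes as prohibitive, not DeepLaDu itself.

```python
def dual_prices(paths, capacity, step=0.1, iters=500, x_max=10.0):
    """Projected subgradient ascent on the dual of the toy problem
        maximize   sum_f log(x_f)
        subject to sum_{f using link l} x_f <= capacity[l].
    `paths[f]` lists the links used by flow f (assumed fixed here)."""
    prices = {link: 1.0 for link in capacity}  # one dual variable per link
    rates = [0.0] * len(paths)
    for _ in range(iters):
        # Primal step: each flow reacts to the total price along its path.
        for f, path in enumerate(paths):
            path_price = sum(prices[link] for link in path)
            rates[f] = min(x_max, 1.0 / max(path_price, 1e-9))
        # Dual step: raise the price of overloaded links, lower it otherwise,
        # and project back onto the non-negative orthant.
        for link, cap in capacity.items():
            load = sum(rates[f] for f, path in enumerate(paths) if link in path)
            prices[link] = max(0.0, prices[link] + step * (load - cap))
    return prices, rates


# Two flows sharing one unit-capacity link settle at an even split.
prices, rates = dual_prices([["a"], ["a"]], {"a": 1.0})
```

The subgradient of the dual with respect to a link price is simply that link's excess load, which is why the update needs only local load information per link.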

Vision-Language-Model-Guided Differentiable Ray Tracing for Fast and Accurate Multi-Material RF Parameter Estimation

Jan 26, 2026

Abstract: Accurate radio-frequency (RF) material parameters are essential for electromagnetic digital twins in 6G systems, yet gradient-based inverse ray tracing (RT) remains sensitive to initialization and costly under limited measurements. This paper proposes a vision-language-model (VLM) guided framework that accelerates and stabilizes multi-material parameter estimation in a differentiable RT (DRT) engine. A VLM parses scene images to infer material categories and maps them to quantitative priors via an ITU-R material table, yielding informed conductivity initializations. The VLM further selects informative transmitter/receiver placements that promote diverse, material-discriminative paths. Starting from these priors, the DRT performs gradient-based refinement using measured received signal strengths. Experiments in NVIDIA Sionna on indoor scenes show 2-4× faster convergence and 10-100× lower final parameter error compared with uniform or random initialization and random placement baselines, achieving sub-0.1% mean relative error with only a few receivers. Complexity analyses indicate per-iteration time scales near-linearly with the number of materials and measurement setups, while VLM-guided placement reduces the measurements required for accurate recovery. Ablations over RT depth and ray counts confirm further accuracy gains without significant per-iteration overhead. Results demonstrate that semantic priors from VLMs effectively guide physics-based optimization for fast and reliable RF material estimation.

CoCo-Fed: A Unified Framework for Memory- and Communication-Efficient Federated Learning at the Wireless Edge

Jan 02, 2026

Abstract: The deployment of large-scale neural networks within the Open Radio Access Network (O-RAN) architecture is pivotal for enabling native edge intelligence. However, this paradigm faces two critical bottlenecks: the prohibitive memory footprint required for local training on resource-constrained gNBs, and the saturation of bandwidth-limited backhaul links during the global aggregation of high-dimensional model updates. To address these challenges, we propose CoCo-Fed, a novel Compression and Combination-based Federated learning framework that unifies local memory efficiency and global communication reduction. Locally, CoCo-Fed breaks the memory wall by performing a double-dimension down-projection of gradients, adapting the optimizer to operate on low-rank structures without introducing additional inference parameters/latency. Globally, we introduce a transmission protocol based on orthogonal subspace superposition, where layer-wise updates are projected and superimposed into a single consolidated matrix per gNB, drastically reducing the backhaul traffic. Beyond empirical designs, we establish a rigorous theoretical foundation, proving the convergence of CoCo-Fed even under unsupervised learning conditions suitable for wireless sensing tasks. Extensive simulations on an angle-of-arrival estimation task demonstrate that CoCo-Fed significantly outperforms state-of-the-art baselines in both memory and communication efficiency while maintaining robust convergence under non-IID settings.

OptiVote: Non-Coherent FSO Over-the-Air Majority Vote for Communication-Efficient Distributed Federated Learning in Space Data Centers

Dec 30, 2025

Abstract: The rapid deployment of mega-constellations is driving the long-term vision of space data centers (SDCs), where interconnected satellites form in-orbit distributed computing and learning infrastructures. Enabling distributed federated learning in such systems is challenging because iterative training requires frequent aggregation over inter-satellite links that are bandwidth- and energy-constrained, and the link conditions can be highly dynamic. In this work, we exploit over-the-air computation (AirComp) as an in-network aggregation primitive. However, conventional coherent AirComp relies on stringent phase alignment, which is difficult to maintain in space environments due to satellite jitter and Doppler effects. To overcome this limitation, we propose OptiVote, a robust and communication-efficient non-coherent free-space optical (FSO) AirComp framework for federated learning in SDCs. OptiVote integrates sign stochastic gradient descent (signSGD) with a majority-vote (MV) aggregation principle and pulse-position modulation (PPM), where each satellite conveys local gradient signs by activating orthogonal PPM time slots. The aggregation node performs MV detection via non-coherent energy accumulation, transforming phase-sensitive field superposition into phase-agnostic optical intensity combining, thereby eliminating the need for precise phase synchronization and improving resilience under dynamic impairments. To mitigate aggregation bias induced by heterogeneous FSO channels, we further develop an importance-aware, channel state information (CSI)-free dynamic power control scheme that balances received energies without additional signaling. We provide theoretical analysis by characterizing the aggregate error probability under statistical FSO channels and establishing convergence guarantees for non-convex objectives.
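
The combination of signSGD, majority vote, and two-slot PPM can be sketched in a few lines. The sketch below is a noise-free toy model and not the paper's system model: each satellite puts its pulse in a "plus" or "minus" slot per gradient coordinate, the receiver adds up received energies per slot, and the larger slot wins. The function names and the `gains` parameter (received energy per satellite) are hypothetical.

```python
def ppm_majority_vote(sign_vectors, gains=None):
    """Non-coherent majority vote over two-slot PPM (noise-free toy model).
    sign_vectors[k][i] is satellite k's gradient sign (+1 or -1) for
    coordinate i; gains[k] is its received pulse energy (assumed known
    here only for illustration)."""
    if gains is None:
        gains = [1.0] * len(sign_vectors)
    votes = []
    for i in range(len(sign_vectors[0])):
        # Energies add up per slot regardless of optical phase.
        e_plus = sum(g for s, g in zip(sign_vectors, gains) if s[i] > 0)
        e_minus = sum(g for s, g in zip(sign_vectors, gains) if s[i] < 0)
        votes.append(1 if e_plus >= e_minus else -1)
    return votes


def signsgd_step(weights, votes, lr):
    """signSGD update using the aggregated vote as the descent direction."""
    return [w - lr * v for w, v in zip(weights, votes)]


signs = [[1, -1], [1, 1], [-1, -1]]
balanced = ppm_majority_vote(signs)                 # equal received energies
skewed = ppm_majority_vote(signs, [0.1, 0.1, 1.0])  # one dominant channel
```

With equal received energies the result is the plain majority, while a single strong channel can outvote two weak ones on the first coordinate. That is the aggregation bias from heterogeneous channels that the abstract's power control scheme is meant to mitigate.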

Empower Low-Altitude Economy: A Reliability-Aware Dynamic Weighting Allocation for Multi-modal UAV Beam Prediction

Dec 30, 2025

Abstract: The low-altitude economy (LAE) is expanding rapidly, driven by urban air mobility, logistics drones, and aerial sensing, and fast, accurate beam prediction in uncrewed aerial vehicle (UAV) communications is crucial for reliable connectivity. Current research is shifting from single-signal to multi-modal collaborative approaches. However, existing multi-modal methods mostly employ fixed or empirical weights, assuming equal reliability across modalities at any given moment. In practice, the importance of different modalities fluctuates dramatically with UAV motion scenarios, and static weighting amplifies the negative impact of degraded modalities. Modal mismatch and weak alignment further undermine cross-scenario generalization. To this end, we propose a reliability-aware dynamic weighting scheme applied to a semantic-aware multi-modal beam prediction framework, named SaM2B. Specifically, SaM2B leverages lightweight cues such as environmental visuals, flight posture, and geospatial data to adaptively allocate contributions across modalities at different time points through reliability-aware dynamic weight updates. Moreover, by utilizing cross-modal contrastive learning, we align the "multi-source representation beam semantics" associated with specific beam information to a shared semantic space, thereby enhancing discriminative power and robustness under modal noise and distribution shifts. Experiments on real-world low-altitude UAV datasets show that SaM2B outperforms baseline methods.

Semantic Radio Access Networks: Architecture, State-of-the-Art, and Future Directions

Dec 24, 2025

Abstract: The radio access network (RAN) is the bridge between user devices and the core network in mobile communication systems, responsible for the transmission and reception of wireless signals and for air interface management. In recent years, semantic communication (SemCom) has emerged as a transformative communication paradigm that prioritizes meaning-level transmission over conventional bit-level delivery, thus providing improved spectrum efficiency, anti-interference ability in complex environments, flexible resource allocation, and enhanced user experience for RAN. However, there is still a lack of comprehensive reviews on the integration of SemCom into RAN. Motivated by this, we systematically explore recent advancements in Semantic RAN (SemRAN). We begin by introducing the fundamentals of RAN and SemCom, identifying the limitations of conventional RAN, and outlining the overall architecture of SemRAN. Subsequently, we review representative techniques of SemRAN across the physical layer, data link layer, network layer, and security plane. Furthermore, we envision future services and applications enabled by SemRAN, alongside its current standardization progress. Finally, we conclude by identifying critical research challenges and outlining forward-looking directions to guide subsequent investigations in this burgeoning field.

Advancing LLM-Based Security Automation with Customized Group Relative Policy Optimization for Zero-Touch Networks

Dec 10, 2025

Abstract: Zero-Touch Networks (ZTNs) represent a transformative paradigm toward fully automated and intelligent network management, providing the scalability and adaptability required for the complexity of sixth-generation (6G) networks. However, the distributed architecture, high openness, and deep heterogeneity of 6G networks expand the attack surface and pose unprecedented security challenges. To address this, security automation aims to enable intelligent security management across dynamic and complex environments, serving as a key capability for securing 6G ZTNs. Despite its promise, implementing security automation in 6G ZTNs presents two primary challenges: 1) automating the lifecycle from security strategy generation to validation and update under real-world, parallel, and adversarial conditions, and 2) adapting security strategies to evolving threats and dynamic environments. This motivates us to propose SecLoop and SA-GRPO. SecLoop constitutes the first fully automated framework that integrates large language models (LLMs) across the entire lifecycle of security strategy generation, orchestration, response, and feedback, enabling intelligent and adaptive defenses in dynamic network environments, thus tackling the first challenge. Furthermore, we propose SA-GRPO, a novel security-aware group relative policy optimization algorithm that iteratively refines security strategies by contrasting group feedback collected from parallel SecLoop executions, thereby addressing the second challenge. Extensive real-world experiments on five benchmarks, including 11 MITRE ATT&CK processes and over 20 types of attacks, demonstrate the superiority of the proposed SecLoop and SA-GRPO. We will release our platform to the community, facilitating the advancement of security automation toward next-generation communications.
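
The idea of contrasting feedback within a group of parallel executions can be sketched with the generic group-relative advantage used in group relative policy optimization: each reward is normalized by the mean and standard deviation of its own group. This is only the commonly used baseline form, with the population standard deviation chosen as an assumption. The security-aware customization in SA-GRPO is not described in the abstract and is not reproduced here, and the function name is hypothetical.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against its own group.
    `rewards` holds one scalar reward per parallel rollout of the same
    task; `eps` avoids division by zero when all rewards are equal."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std (an assumption)
    return [(r - mean) / (std + eps) for r in rewards]


# Four hypothetical parallel runs: two succeeded (1.0), two failed (0.0).
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

Because the baseline is the group mean, no separate value network is needed, and a group whose rollouts all receive the same reward contributes zero advantage.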

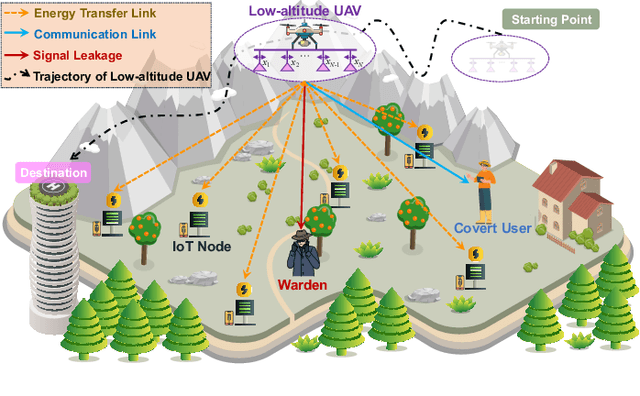

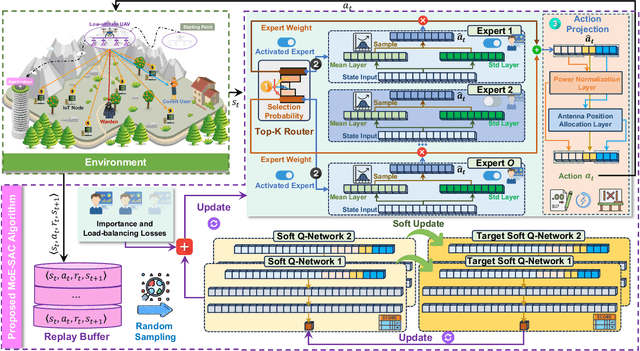

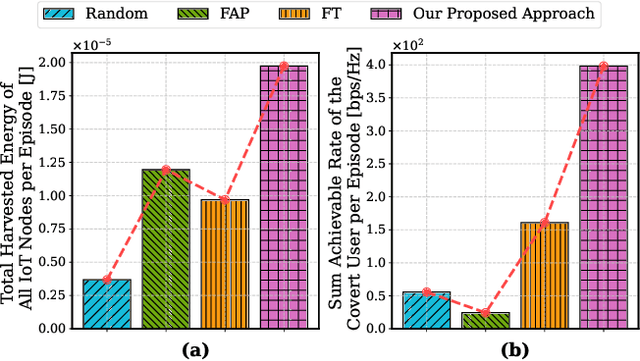

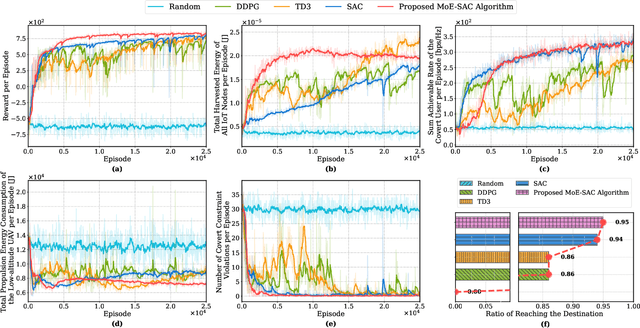

Low-Altitude UAV-Carried Movable Antenna for Joint Wireless Power Transfer and Covert Communications

Oct 30, 2025

Abstract: The proliferation of Internet of Things (IoT) networks has created an urgent need for sustainable energy solutions, particularly for battery-constrained, spatially distributed IoT nodes. While low-altitude uncrewed aerial vehicles (UAVs) equipped with wireless power transfer (WPT) capabilities offer a promising solution, the line-of-sight channels that facilitate efficient energy delivery also expose sensitive operational data to adversaries. This paper proposes a novel low-altitude UAV-carried movable antenna-enhanced transmission system for joint WPT and covert communications, which simultaneously supplies energy to IoT nodes and establishes a transmission link with a covert user by leveraging wireless energy signals as a natural cover. We then formulate a multi-objective optimization problem that jointly maximizes the total harvested energy of the IoT nodes and the sum achievable rate of the covert user, while minimizing the propulsion energy consumption of the low-altitude UAV. To address the non-convex and temporally coupled optimization problem, we propose a mixture-of-experts-augmented soft actor-critic (MoE-SAC) algorithm that employs a sparse Top-K gated mixture-of-shallow-experts architecture to represent multimodal policy distributions arising from the conflicting optimization objectives. We also incorporate an action projection module that explicitly enforces per-time-slot power budget constraints and antenna position constraints. Simulation results demonstrate that the proposed approach significantly outperforms several baseline approaches and other state-of-the-art deep reinforcement learning algorithms.
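
Two of the building blocks named above, sparse Top-K gating and an action projection step, can be sketched independently of the full MoE-SAC algorithm. This is a minimal sketch under stated assumptions: the gate applies a softmax over only the K largest logits, and the "projection" simply rescales transmit powers onto the budget and clips a scalar antenna position to its range, which yields a feasible action but is not necessarily the projection used in the paper. All function and parameter names are hypothetical.

```python
import math


def top_k_gate(logits, k):
    """Sparse gate: softmax over the k largest logits, zero elsewhere."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in top)  # subtract the max for stability
    exps = {i: math.exp(logits[i] - peak) for i in top}
    total = sum(exps.values())
    return [exps[i] / total if i in exps else 0.0 for i in range(len(logits))]


def mix_experts(expert_outputs, weights):
    """Weighted sum of expert outputs; experts with weight 0 are ignored."""
    dim = len(expert_outputs[0])
    return [sum(w * out[d] for w, out in zip(weights, expert_outputs))
            for d in range(dim)]


def project_action(powers, position, budget, pos_min, pos_max):
    """Map a raw action to a feasible one: non-negative powers whose sum
    stays within `budget`, and an antenna position inside its range."""
    powers = [max(0.0, p) for p in powers]
    total = sum(powers)
    if total > budget:
        powers = [p * budget / total for p in powers]
    return powers, min(max(position, pos_min), pos_max)


weights = top_k_gate([2.0, 0.0, 2.0, -1.0], k=2)
feasible = project_action([3.0, 1.0, -2.0], 1.7, budget=2.0,
                          pos_min=0.0, pos_max=1.0)
```

Enforcing constraints after the policy output, instead of through reward penalties, means every action sent to the environment is feasible by construction.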

SemSteDiff: Generative Diffusion Model-based Coverless Semantic Steganography Communication

Sep 05, 2025

Abstract: Semantic communication (SemCom), as a novel paradigm for future communication systems, has recently attracted much attention due to its superior communication efficiency. However, like traditional communication, it is vulnerable to eavesdropping. Intelligent eavesdroppers could apply advanced semantic analysis techniques to infer secret semantic information. Therefore, some researchers have designed Semantic Steganography Communication (SemSteCom) schemes to confuse semantic eavesdroppers. However, the state-of-the-art SemSteCom schemes for image transmission rely on a pre-selected cover image, which limits their universality. To address this issue, we propose a Generative Diffusion Model-based Coverless Semantic Steganography Communication (SemSteDiff) scheme that hides secret images in generated stego images. The semantics-related private and public keys enable the legitimate receiver to decode secret images correctly, while an eavesdropper without the complete, correct key pair fails to recover them. Simulation results demonstrate the effectiveness of the plug-and-play design in different Joint Source-Channel Coding (JSCC) frameworks. The comparison results under different eavesdropper threats show that, at a signal-to-noise ratio (SNR) of 0 dB, the peak signal-to-noise ratio (PSNR) of the legitimate receiver is 4.14 dB higher than that of the eavesdropper.
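
For readers unfamiliar with the metric behind the reported gap, PSNR follows the standard definition 10·log10(MAX² / MSE). The sketch below assumes 8-bit pixel values (MAX = 255) and flat lists of pixels. The sample values are made up for illustration and are not results from the paper.

```python
import math


def psnr(reference, reconstruction, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if not reference or len(reference) != len(reconstruction):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2
              for a, b in zip(reference, reconstruction)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical pixels: a closer reconstruction gives a higher PSNR.
secret = [100, 100, 100, 100]
gap_db = psnr(secret, [105, 95, 105, 95]) - psnr(secret, [110, 90, 110, 90])
```

A PSNR difference in dB corresponds to a ratio of mean squared errors, so the legitimate receiver's advantage can be read directly as a lower reconstruction error.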

Large AI Model-Enabled Secure Communications in Low-Altitude Wireless Networks: Concepts, Perspectives and Case Study

Aug 01, 2025

Abstract: Low-altitude wireless networks (LAWNs) have the potential to revolutionize communications by supporting a range of applications, including urban parcel delivery, aerial inspections, and air taxis. However, compared with traditional wireless networks, LAWNs face unique security challenges due to low-altitude operations, frequent mobility, and reliance on unlicensed spectrum, making them more vulnerable to malicious attacks. In this paper, we investigate large artificial intelligence model (LAM)-enabled solutions for secure communications in LAWNs. Specifically, we first explore the amplified security risks and important limitations of traditional AI methods in LAWNs. Then, we introduce the basic concepts of LAMs and delve into the role of LAMs in addressing these challenges. To demonstrate the practical benefits of LAMs for secure communications in LAWNs, we propose a novel LAM-based optimization framework that leverages large language models (LLMs) to generate enhanced state features on top of handcrafted representations, and to design intrinsic rewards accordingly, thereby improving reinforcement learning performance for secure communication tasks. Through a typical case study, simulation results validate the effectiveness of the proposed framework. Finally, we outline future directions for integrating LAMs into secure LAWN applications.
