Siddhartha S. Srinivasa

Generative Data Mining with Longtail-Guided Diffusion

Feb 04, 2025

Abstract:It is difficult to anticipate the myriad challenges that a predictive model will encounter once deployed. Common practice entails a reactive, cyclical approach: model deployment, data mining, and retraining. We instead develop a proactive longtail discovery process by imagining additional data during training. In particular, we develop general model-based longtail signals, including a differentiable, single forward pass formulation of epistemic uncertainty that does not impact model parameters or predictive performance but can flag rare or hard inputs. We leverage these signals as guidance to generate additional training data from a latent diffusion model in a process we call Longtail Guidance (LTG). Crucially, we can perform LTG without retraining the diffusion model or the predictive model, and we do not need to expose the predictive model to intermediate diffusion states. Data generated by LTG exhibit semantically meaningful variation, yield significant generalization improvements on image classification benchmarks, and can be analyzed to proactively discover, explain, and address conceptual gaps in a predictive model.

Data Efficient Behavior Cloning for Fine Manipulation via Continuity-based Corrective Labels

May 29, 2024

Abstract:We consider imitation learning with access only to expert demonstrations, whose real-world application is often limited by covariate shift due to compounding errors during execution. We investigate the effectiveness of the Continuity-based Corrective Labels for Imitation Learning (CCIL) framework in mitigating this issue for real-world fine manipulation tasks. CCIL generates corrective labels by learning a locally continuous dynamics model from demonstrations to guide the agent back toward expert states. Through extensive experiments on peg insertion and fine grasping, we provide the first empirical validation that CCIL can significantly improve imitation learning performance despite discontinuities present in contact-rich manipulation. We find that: (1) real-world manipulation exhibits sufficient local smoothness to apply CCIL, (2) generated corrective labels are most beneficial in low-data regimes, and (3) label filtering based on estimated dynamics model error enables performance gains. To effectively apply CCIL to robotic domains, we offer a practical instantiation of the framework and insights into design choices and hyperparameter selection. Our work demonstrates CCIL's practicality for alleviating compounding errors in imitation learning on physical robots.

Multiple Ways of Working with Users to Develop Physically Assistive Robots

Mar 07, 2024

Abstract:Despite the growth of physically assistive robotics (PAR) research over the last decade, nearly half of PAR user studies do not involve participants with the target disabilities. There are several reasons for this -- recruitment challenges, small sample sizes, and transportation logistics -- all influenced by systemic barriers that people with disabilities face. However, it is well-established that working with end-users results in technology that better addresses their needs and integrates with their lived circumstances. In this paper, we reflect on multiple approaches we have taken to working with people with motor impairments across the design, development, and evaluation of three PAR projects: (a) assistive feeding with a robot arm; (b) assistive teleoperation with a mobile manipulator; and (c) shared control with a robot arm. We discuss these approaches to working with users along three dimensions -- individual vs. community-level insight, logistic burden on end-users vs. researchers, and benefit to researchers vs. community -- and share recommendations for how other PAR researchers can incorporate users into their work.

HOUND: An Open-Source, Low-cost Research Platform for High-speed Off-road Underactuated Nonholonomic Driving

Nov 19, 2023

Abstract:Off-road vehicles are susceptible to rollovers in terrains with large elevation features, such as steep hills, ditches, and berms. One way to protect them against rollovers is ruggedization through the use of industrial-grade parts and physical modifications. However, this solution can be prohibitively expensive for academic research labs. Our key insight is that a software-based rollover-prevention system (RPS) enables the use of commercial-off-the-shelf hardware parts that are cheaper than their industrial counterparts, thus reducing overall cost. In this paper, we present HOUND, a small-scale, inexpensive, off-road autonomy platform that can handle challenging outdoor terrains at high speeds through the integration of an RPS. HOUND is integrated with a complete stack for perception and control, geared towards aggressive off-road driving. We deploy HOUND in the real world, at high speeds, on four different terrains covering 50 km of driving and highlight its utility in preventing rollovers and traversing difficult terrain. Additionally, through integration with BeamNG, a state-of-the-art driving simulator, we demonstrate a significant reduction in rollovers without compromising turning ability across a series of simulated experiments. Supplementary material can be found on our website, where we will also release all design documents for the platform: https://sites.google.com/view/prl-hound .

PuSHR: A Multirobot System for Nonprehensile Rearrangement

Mar 02, 2023

Abstract:We focus on the problem of rearranging a set of objects with a team of car-like robot pushers built using off-the-shelf components. Maintaining control of pushed objects while avoiding collisions in a tight space demands highly coordinated motion that is challenging to execute on constrained hardware. Centralized replanning approaches become intractable even for small-sized problems whereas decentralized approaches often get stuck in deadlocks. Our key insight is that by carefully assigning pushing tasks to robots, we could reduce the complexity of the rearrangement task, enabling robust performance via scalable decentralized control. Based on this insight, we built PuSHR, a system that optimally assigns pushing tasks and trajectories to robots offline, and performs trajectory tracking via decentralized control online. Through an ablation study in simulation, we demonstrate that PuSHR dominates baselines ranging from purely decentralized to fully decentralized in terms of success rate and time efficiency across challenging tasks with up to 4 robots. Hardware experiments demonstrate the transfer of our system to the real world and highlight its robustness to model inaccuracies. Our code can be found at https://github.com/prl-mushr/pushr, and videos from our experiments at https://youtu.be/DIWmZerF_O8.

From Crowd Motion Prediction to Robot Navigation in Crowds

Mar 02, 2023

Abstract:We focus on robot navigation in crowded environments. To navigate safely and efficiently within crowds, robots need models for crowd motion prediction. Building such models is hard due to the high dimensionality of multiagent domains and the challenge of collecting or simulating interaction-rich crowd-robot demonstrations. While there has been important progress on models for offline pedestrian motion forecasting, transferring their performance to real robots is nontrivial due to close interaction settings and novelty effects on users. In this paper, we investigate the utility of a recent state-of-the-art motion prediction model (S-GAN) for crowd navigation tasks. We incorporate this model into a model predictive controller (MPC) and deploy it on a self-balancing robot which we subject to a diverse range of crowd behaviors in the lab. We demonstrate that while S-GAN motion prediction accuracy transfers to the real world, its value is not reflected in navigation performance, measured with respect to safety and efficiency; in fact, the MPC performs indistinguishably even when using a simple constant-velocity prediction model, suggesting that substantial model improvements might be needed to yield significant gains for crowd navigation tasks. Footage from our experiments can be found at https://youtu.be/mzFiXg8KsZ0.
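For readers unfamiliar with the constant-velocity baseline mentioned in the abstract above, the following is a minimal sketch of what such a predictor typically looks like. It is not the authors' implementation: the function name `constant_velocity_predict`, the array layout, and the finite-difference velocity estimate are all assumptions made for illustration.

```python
import numpy as np


def constant_velocity_predict(positions, dt, horizon):
    """Extrapolate each agent along its most recent velocity (hypothetical helper).

    positions: array-like of shape (T, N, 2), the last T >= 2 observed
               planar positions of N agents, sampled every dt seconds.
    horizon:   number of future steps to predict.
    Returns an array of shape (horizon, N, 2).
    """
    positions = np.asarray(positions, dtype=float)
    # Assumption: velocity is estimated from the last two observations only.
    velocity = (positions[-1] - positions[-2]) / dt
    # Future step indices 1..horizon, shaped to broadcast over agents and axes.
    steps = np.arange(1, horizon + 1).reshape(-1, 1, 1)
    return positions[-1] + steps * dt * velocity


# One agent moving 1 m per step along x.
history = [[[0.0, 0.0]], [[1.0, 0.0]]]
prediction = constant_velocity_predict(history, dt=1.0, horizon=3)
```

A predictor like this has no learned parameters and ignores interactions between agents, which is what makes it a useful lower bound when judging whether a learned forecaster improves downstream navigation.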

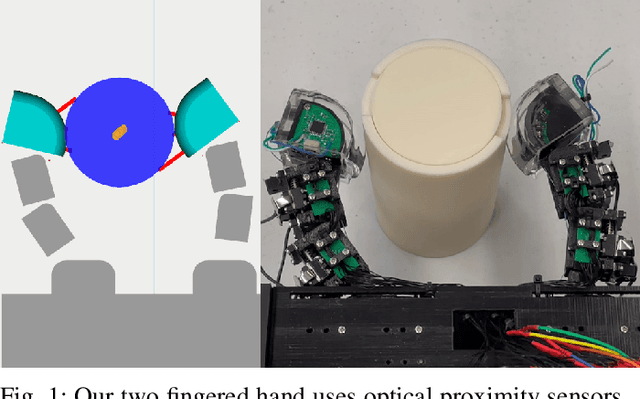

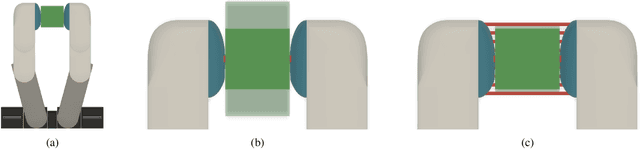

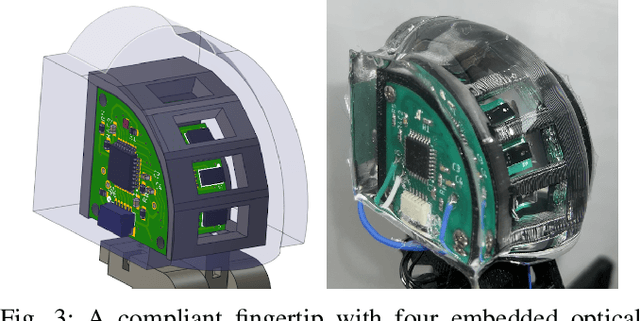

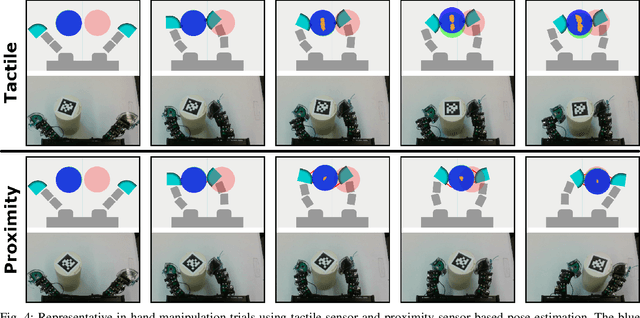

Optical Proximity Sensing for Pose Estimation During In-Hand Manipulation

Apr 05, 2022

Abstract:During in-hand manipulation, robots must be able to continuously estimate the pose of the object in order to generate appropriate control actions. The performance of algorithms for pose estimation hinges on the robot's sensors being able to detect discriminative geometric object features, but previous sensing modalities are unable to make such measurements robustly. The robot's fingers can occlude the view of environment- or robot-mounted image sensors, and tactile sensors can only measure at the local areas of contact. Motivated by fingertip-embedded proximity sensors' robustness to occlusion and ability to measure beyond the local areas of contact, we present the first evaluation of proximity-sensor-based pose estimation for in-hand manipulation. We develop a novel two-fingered hand with fingertip-embedded optical time-of-flight proximity sensors as a testbed for pose estimation during planar in-hand manipulation. Here, the in-hand manipulation task consists of the robot moving a cylindrical object from one end of its workspace to the other. We demonstrate, with statistical significance, that proximity-sensor-based pose estimation via particle filtering during in-hand manipulation: a) exhibits 50% lower average pose error than a tactile-sensor-based baseline; b) empowers a model predictive controller to achieve 30% lower final positioning error compared to when using tactile-sensor-based pose estimates.

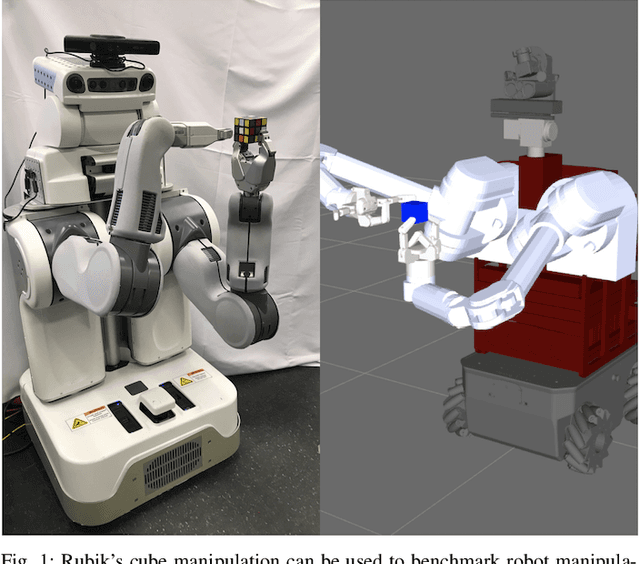

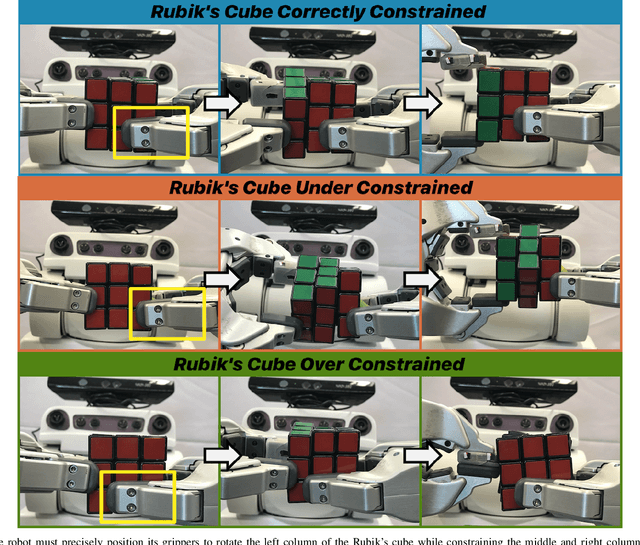

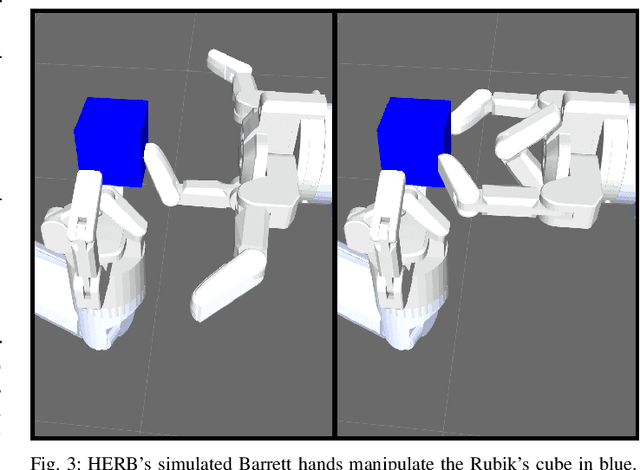

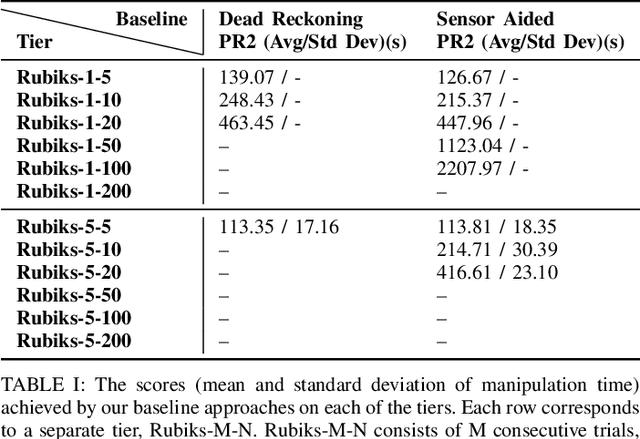

Benchmarking Robot Manipulation with the Rubik's Cube

Feb 14, 2022

Abstract:Benchmarks for robot manipulation are crucial to measuring progress in the field, yet there are few benchmarks that demonstrate critical manipulation skills, possess standardized metrics, and can be attempted by a wide array of robot platforms. To address a lack of such benchmarks, we propose Rubik's cube manipulation as a benchmark to measure simultaneous performance of precise manipulation and sequential manipulation. The sub-structure of the Rubik's cube demands precise positioning of the robot's end effectors, while its highly reconfigurable nature enables tasks that require the robot to manage pose uncertainty throughout long sequences of actions. We present a protocol for quantitatively measuring both the accuracy and speed of Rubik's cube manipulation. This protocol can be attempted by any general-purpose manipulator, and only requires a standard 3x3 Rubik's cube and a flat surface upon which the Rubik's cube initially rests (e.g. a table). We demonstrate this protocol for two distinct baseline approaches on a PR2 robot. The first baseline provides a fundamental approach for pose-based Rubik's cube manipulation. The second baseline demonstrates the benchmark's ability to quantify improved performance by the system, particularly that resulting from the integration of pre-touch sensing. To demonstrate the benchmark's applicability to other robot platforms and algorithmic approaches, we present the functional blocks required to enable the HERB robot to manipulate the Rubik's cube via push-grasping.

* IEEE RAL

Stein Variational Probabilistic Roadmaps

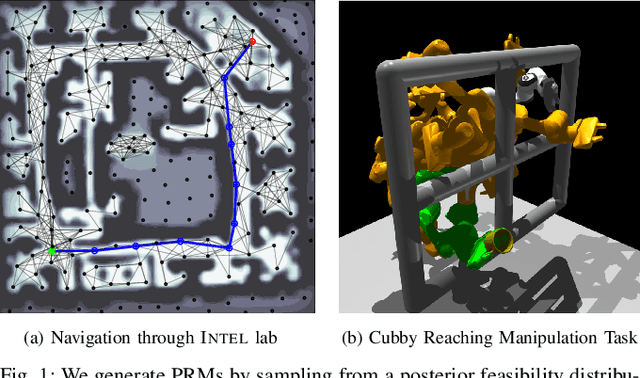

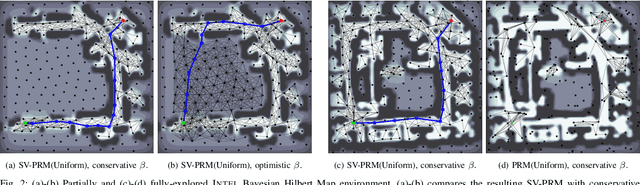

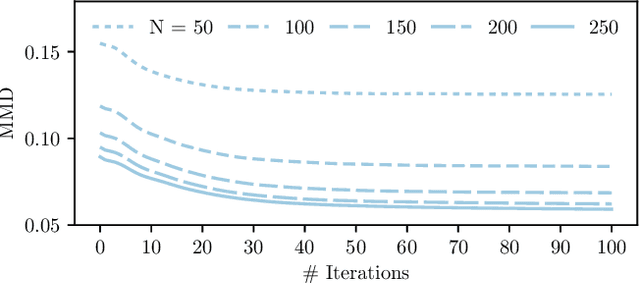

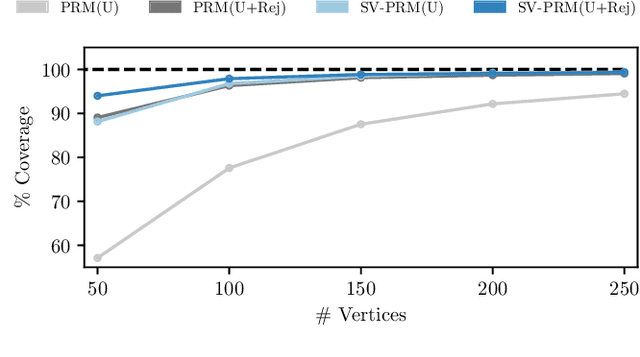

Nov 04, 2021

Abstract:Efficient and reliable generation of global path plans is necessary for safe execution and deployment of autonomous systems. In order to generate planning graphs which adequately resolve the topology of a given environment, many sampling-based motion planners resort to coarse, heuristically-driven strategies which often fail to generalize to new and varied surroundings. Further, many of these approaches are not designed to contend with partial observability. We posit that such uncertainty in environment geometry can, in fact, help drive the sampling process in generating feasible and probabilistically safe planning graphs. We propose a method for Probabilistic Roadmaps which relies on particle-based Variational Inference to efficiently cover the posterior distribution over feasible regions in configuration space. Our approach, Stein Variational Probabilistic Roadmap (SV-PRM), results in sample-efficient generation of planning graphs and large improvements over traditional sampling approaches. We demonstrate the approach on a variety of challenging planning problems, including real-world probabilistic occupancy maps and high-DoF manipulation problems common in robotics.

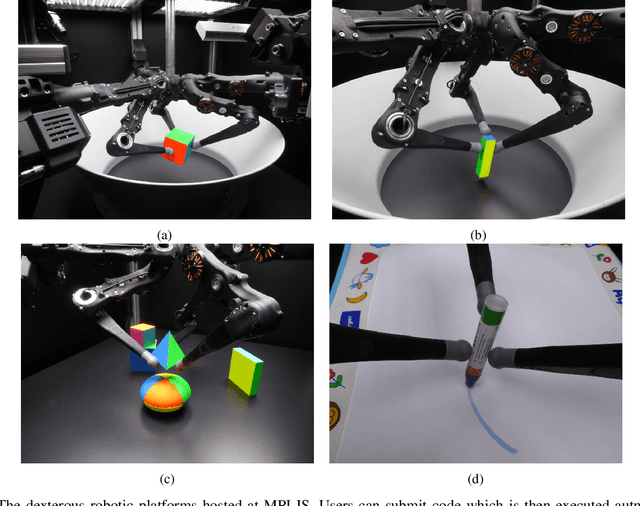

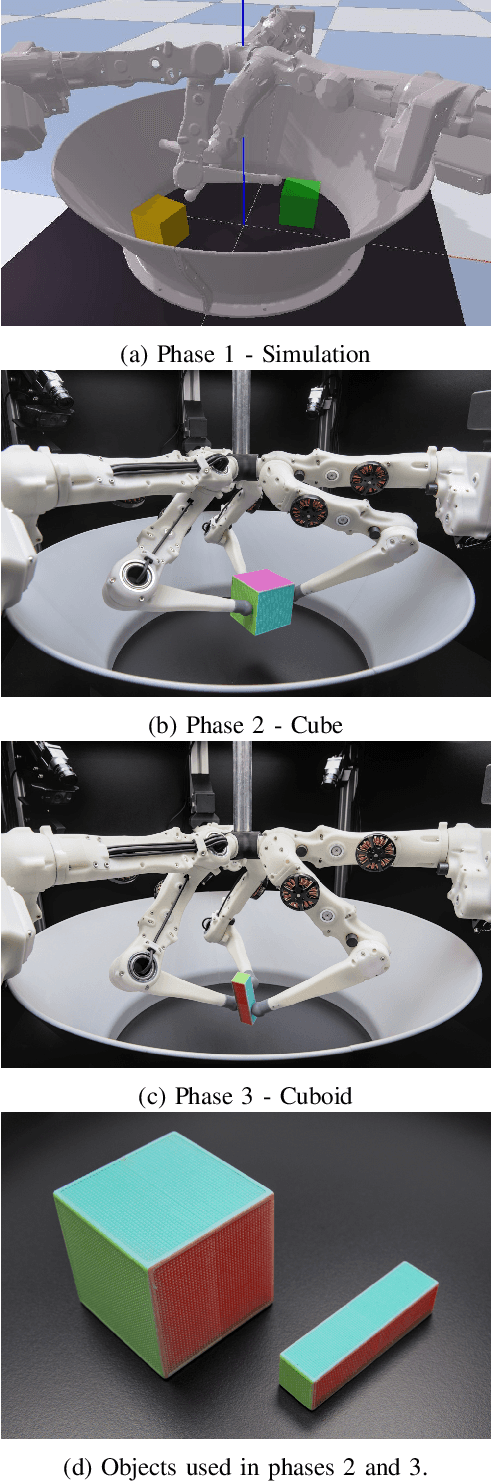

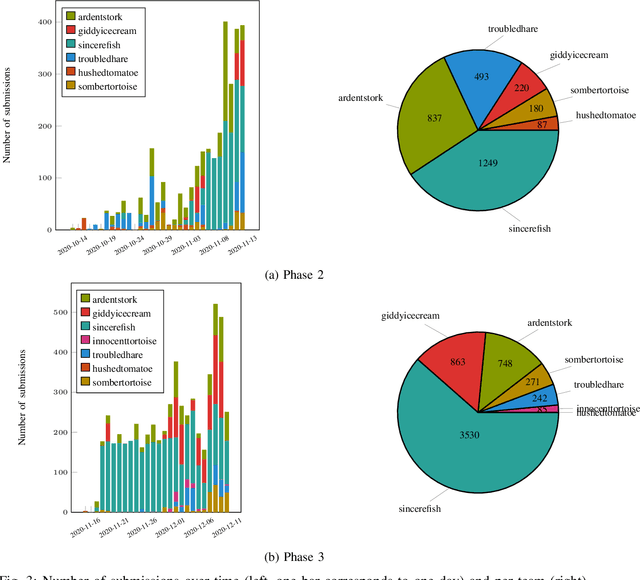

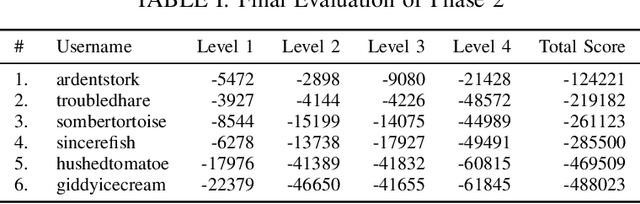

A Robot Cluster for Reproducible Research in Dexterous Manipulation

Sep 22, 2021

Abstract:Dexterous manipulation remains an open problem in robotics. To coordinate efforts of the research community towards tackling this problem, we propose a shared benchmark. We designed and built robotic platforms that are hosted at the MPI-IS and can be accessed remotely. Each platform consists of three robotic fingers that are capable of dexterous object manipulation. Users are able to control the platforms remotely by submitting code that is executed automatically, akin to a computational cluster. Using this setup, i) we host robotics competitions, where teams from anywhere in the world access our platforms to tackle challenging tasks, ii) we publish the datasets collected during these competitions (consisting of hundreds of robot hours), and iii) we give researchers access to these platforms for their own projects.
