The Elastic Lottery Ticket Hypothesis

Mar 30, 2021 Xiaohan Chen, Yu Cheng, Shuohang Wang, Zhe Gan, Jingjing Liu, Zhangyang Wang

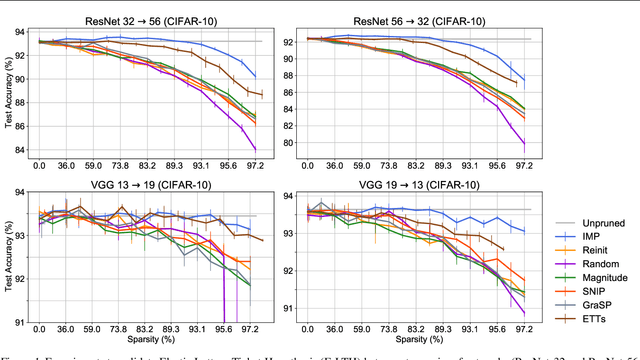

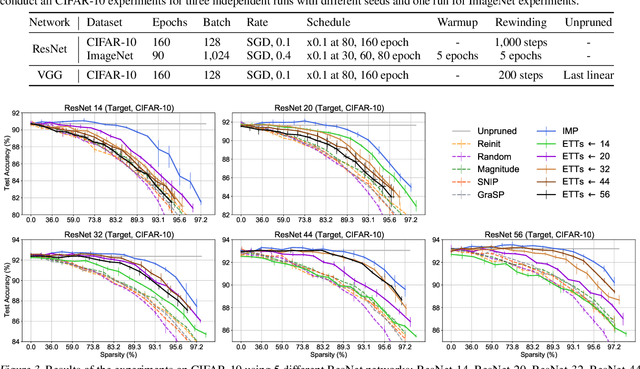

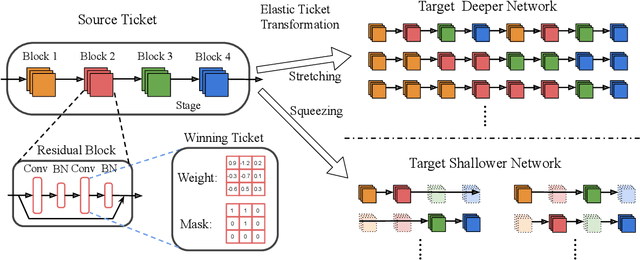

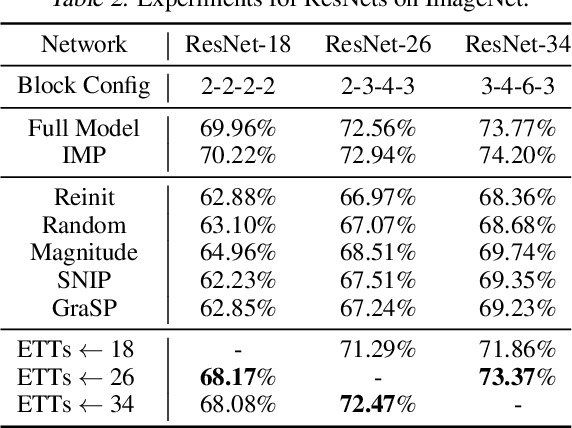

The Lottery Ticket Hypothesis has drawn keen attention to identifying sparse trainable subnetworks, or winning tickets, at initialization (or at an early stage) of training, which can be trained in isolation to achieve similar or even better performance compared to the full models. Despite many efforts, the most effective method to identify such winning tickets is still Iterative Magnitude-based Pruning (IMP), which is computationally expensive and has to be run in full for every different network. A natural question arises: can we "transform" the winning ticket found in one network to another with a different architecture, yielding a winning ticket for the latter at the beginning, without re-doing the expensive IMP? Answering this question is not only practically relevant for efficient "once-for-all" winning ticket finding, but also theoretically appealing for uncovering inherently scalable sparse patterns in networks. We conduct extensive experiments on CIFAR-10 and ImageNet, and propose a variety of strategies to tweak the winning tickets found from different networks of the same model family (e.g., ResNets). Based on these results, we articulate the Elastic Lottery Ticket Hypothesis (E-LTH): by mindfully replicating (or dropping) and re-ordering layers for one network, its corresponding winning ticket can be stretched (or squeezed) into a subnetwork for another deeper (or shallower) network from the same family, whose performance is nearly as competitive as the latter's winning ticket directly found by IMP. We also thoroughly compare E-LTH with pruning-at-initialization and dynamic sparse training methods, and discuss the generalizability of E-LTH to different model families, layer types, and even across datasets. Our code is publicly available at https://github.com/VITA-Group/ElasticLTH.
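To make the "stretch or squeeze" idea concrete, here is a minimal sketch of how per-block pruning masks from one network might be replicated or dropped to fit a different depth. The function name `resize_block_masks` and the proportional index mapping are illustrative assumptions, not the strategies evaluated in the paper; the sketch also assumes all blocks share the same shape so a mask can be reused as-is.

```python
import copy
from typing import List, Sequence, TypeVar

Mask = TypeVar("Mask")


def resize_block_masks(source_masks: Sequence[Mask], target_depth: int) -> List[Mask]:
    """Stretch or squeeze a list of per-block masks to `target_depth` blocks.

    Hypothetical helper: target block j reuses the mask of source block
    floor(j * n / target_depth), which replicates masks when the target is
    deeper and drops masks when it is shallower. Block order is preserved.
    """
    if not source_masks:
        raise ValueError("source_masks must not be empty")
    if target_depth < 1:
        raise ValueError("target_depth must be at least 1")
    n = len(source_masks)
    # Deep-copy so the new network's masks can be modified independently.
    return [copy.deepcopy(source_masks[(j * n) // target_depth])
            for j in range(target_depth)]


# Two source blocks stretched to four: each mask is replicated once.
shallow = [[1, 0, 1], [0, 1, 1]]
deep = resize_block_masks(shallow, 4)
```

Which layers to replicate and how to re-order them is exactly what the paper studies, so the uniform mapping above should be read only as a placeholder for those choices.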

LightningDOT: Pre-training Visual-Semantic Embeddings for Real-Time Image-Text Retrieval

Mar 16, 2021 Siqi Sun, Yen-Chun Chen, Linjie Li, Shuohang Wang, Yuwei Fang, Jingjing Liu

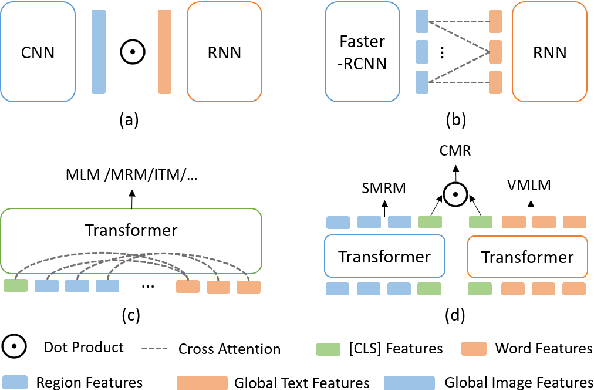

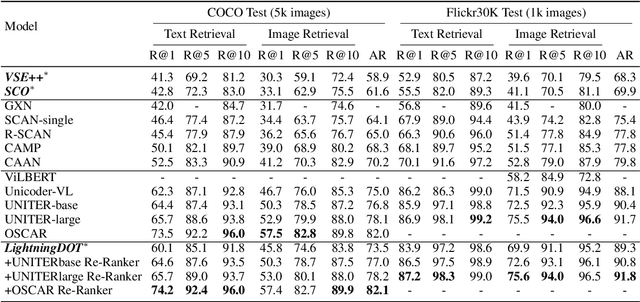

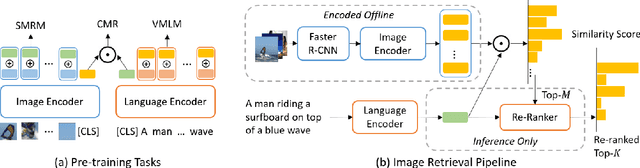

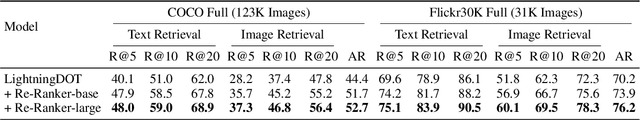

Multimodal pre-training has propelled great advancement in vision-and-language (V+L) research. These large-scale pre-trained models, although successful, inevitably suffer from slow inference speed due to the enormous computation cost, mainly from cross-modal attention in the Transformer architecture. When applied to real-life applications, such latency and computation demand severely deter the practical use of pre-trained models. In this paper, we study image-text retrieval (ITR), the most mature scenario of V+L application, which has been widely studied even prior to the emergence of recent pre-trained models. We propose a simple yet highly effective approach, LightningDOT, that speeds up ITR inference by thousands of times without sacrificing accuracy. LightningDOT removes the time-consuming cross-modal attention by pre-training on three novel learning objectives, extracting feature indexes offline, and employing instant dot-product matching with further re-ranking, which significantly speeds up the retrieval process. In fact, LightningDOT achieves new state of the art across multiple ITR benchmarks such as Flickr30k, COCO and Multi30K, outperforming existing pre-trained models that consume 1000x the computational hours. Code and pre-training checkpoints are available at https://github.com/intersun/LightningDOT.
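The retrieval pipeline described above (offline feature index, dot-product matching, then re-ranking) can be sketched as follows. This is a toy illustration with plain Python lists, not the LightningDOT code: the names `retrieve` and `reranker`, and the use of a callable that scores a candidate id, are assumptions made for the example.

```python
from typing import Callable, Dict, List, Optional, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def retrieve(
    query_vec: Sequence[float],
    index: Dict[str, Sequence[float]],
    top_k: int,
    reranker: Optional[Callable[[str], float]] = None,
) -> List[str]:
    """Return up to `top_k` item ids for a query.

    `index` holds embeddings computed offline, so query time only needs
    one dot product per item. `reranker` is a hypothetical, more expensive
    scorer that is applied to the short candidate list only.
    """
    ranked = sorted(index, key=lambda item: dot(query_vec, index[item]), reverse=True)
    candidates = ranked[:top_k]
    if reranker is not None:
        candidates = sorted(candidates, key=reranker, reverse=True)
    return candidates


# Toy offline index of three image embeddings.
image_index = {"img_a": [1.0, 0.0], "img_b": [0.8, 0.6], "img_c": [0.0, 1.0]}
first_pass = retrieve([1.0, 0.1], image_index, top_k=2)
```

The design point is that the expensive scorer sees only `top_k` candidates, while the full collection is searched with cheap dot products.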

EarlyBERT: Efficient BERT Training via Early-bird Lottery Tickets

Dec 31, 2020 Xiaohan Chen, Yu Cheng, Shuohang Wang, Zhe Gan, Zhangyang Wang, Jingjing Liu

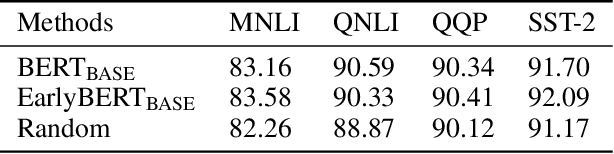

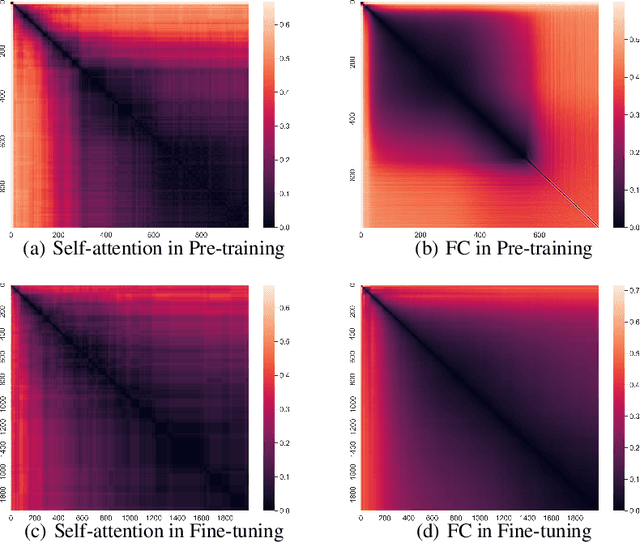

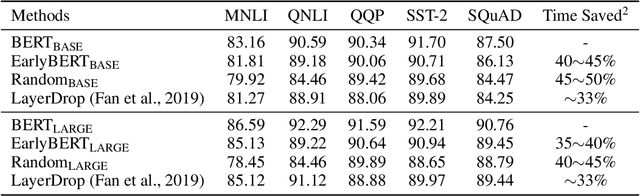

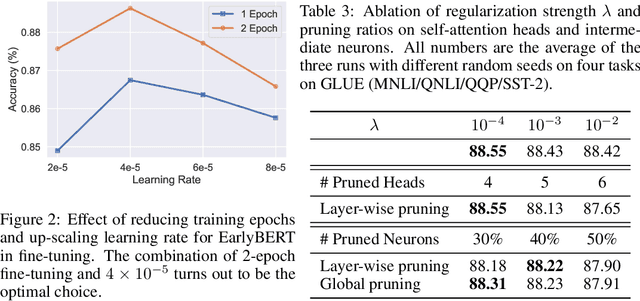

Deep, heavily overparameterized language models such as BERT, XLNet and T5 have achieved impressive success in many NLP tasks. However, their high model complexity requires enormous computation resources and extremely long training time for both pre-training and fine-tuning. Many works have studied model compression on large NLP models, but they focus only on reducing inference cost and time, while still requiring an expensive training process. Other works use extremely large batch sizes to shorten the pre-training time, at the expense of high demand for computation resources. In this paper, inspired by the Early-Bird Lottery Tickets studied for computer vision tasks, we propose EarlyBERT, a general computationally efficient training algorithm applicable to both pre-training and fine-tuning of large-scale language models. We are the first to identify structured winning tickets in the early stage of BERT training, and use them for efficient training. Comprehensive pre-training and fine-tuning experiments on GLUE and SQuAD downstream tasks show that EarlyBERT achieves performance comparable to standard BERT with 35-45% less training time.

InfoBERT: Improving Robustness of Language Models from An Information Theoretic Perspective

Oct 14, 2020 Boxin Wang, Shuohang Wang, Yu Cheng, Zhe Gan, Ruoxi Jia, Bo Li, Jingjing Liu

Large-scale language models such as BERT have achieved state-of-the-art performance across a wide range of NLP tasks. Recent studies, however, show that such BERT-based models are vulnerable to textual adversarial attacks. We aim to address this problem from an information-theoretic perspective, and propose InfoBERT, a novel learning framework for robust fine-tuning of pre-trained language models. InfoBERT contains two mutual-information-based regularizers for model training: (i) an Information Bottleneck regularizer, which suppresses noisy mutual information between the input and the feature representation; and (ii) a Robust Feature regularizer, which increases the mutual information between local robust features and global features. We provide a principled way to theoretically analyze and improve the robustness of representation learning for language models in both standard and adversarial training. Extensive experiments demonstrate that InfoBERT achieves state-of-the-art robust accuracy over several adversarial datasets on Natural Language Inference (NLI) and Question Answering (QA) tasks.

Counterfactual Variable Control for Robust and Interpretable Question Answering

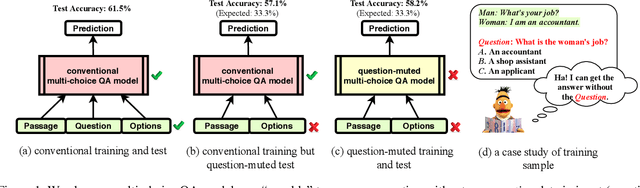

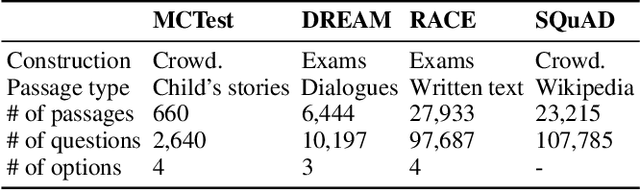

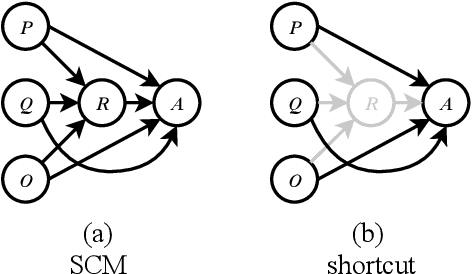

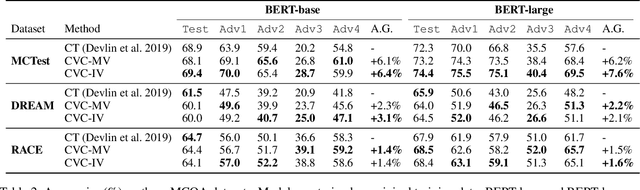

Oct 12, 2020 Sicheng Yu, Yulei Niu, Shuohang Wang, Jing Jiang, Qianru Sun

Deep neural network based question answering (QA) models are neither robust nor explainable in many cases. For example, a multiple-choice QA model, tested without the question as input, is surprisingly "capable" of predicting most of the correct options. In this paper, we inspect this spurious "capability" of QA models using causal inference. We find the crux is the shortcut correlation, e.g., non-robust word alignment between passage and options learned by the models. We propose a novel approach called Counterfactual Variable Control (CVC) that explicitly mitigates any shortcut correlation and preserves the comprehensive reasoning for robust QA. Specifically, we leverage a multi-branch architecture that allows us to disentangle robust and shortcut correlations in the training process of QA. We then apply two novel CVC inference methods (on trained models) to capture the effect of comprehensive reasoning as the final prediction. For evaluation, we conduct extensive experiments using two BERT backbones on both multi-choice and span-extraction QA benchmarks. The results show that CVC achieves high robustness against a variety of adversarial attacks in QA while maintaining good interpretability.

Cross-Thought for Sentence Encoder Pre-training

Oct 07, 2020 Shuohang Wang, Yuwei Fang, Siqi Sun, Zhe Gan, Yu Cheng, Jing Jiang, Jingjing Liu

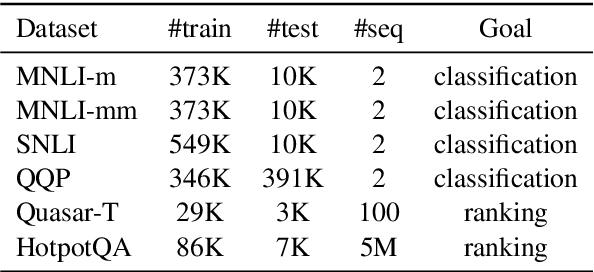

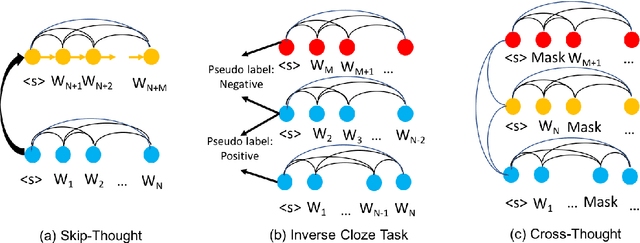

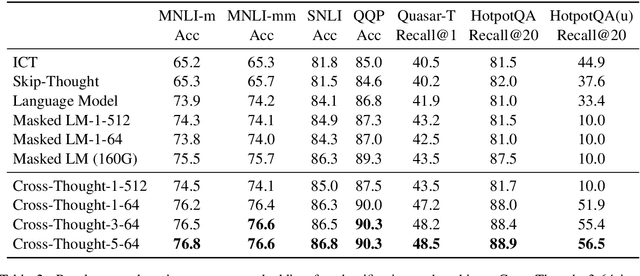

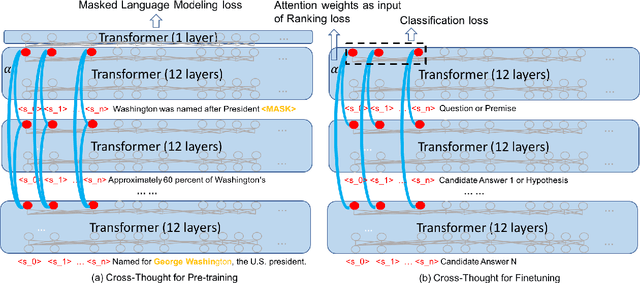

In this paper, we propose Cross-Thought, a novel approach to pre-training a sequence encoder, which is instrumental in building reusable sequence embeddings for large-scale NLP tasks such as question answering. Instead of using the original signals of full sentences, we train a Transformer-based sequence encoder over a large set of short sequences, which allows the model to automatically select the most useful information for predicting masked words. Experiments on question answering and textual entailment tasks demonstrate that our pre-trained encoder can outperform state-of-the-art encoders trained with continuous sentence signals as well as traditional masked language modeling baselines. Our proposed approach also achieves new state of the art on HotpotQA (full-wiki setting) by improving intermediate information retrieval performance.

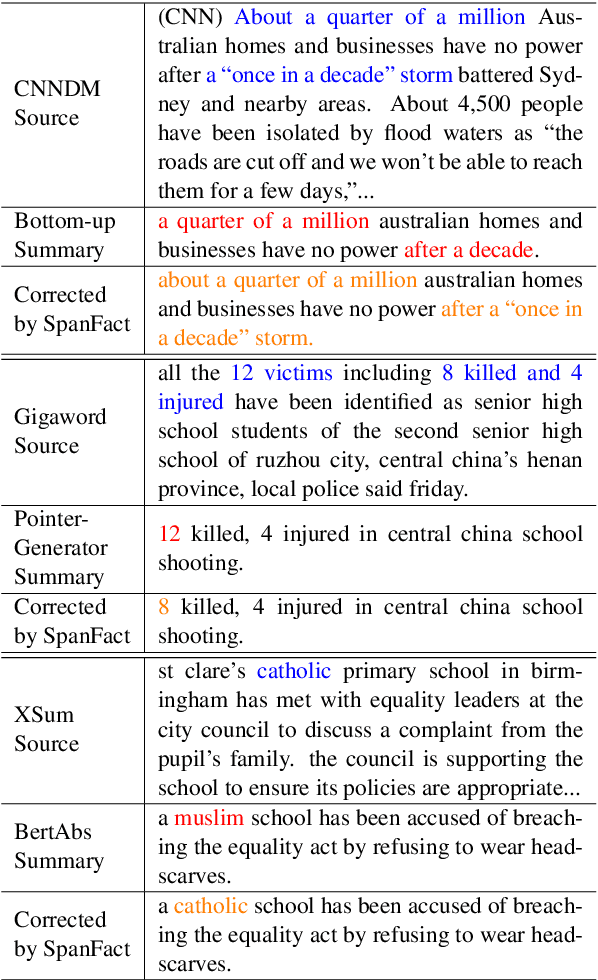

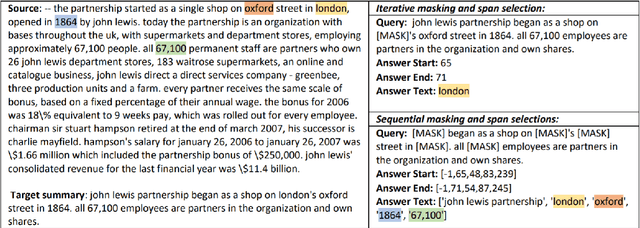

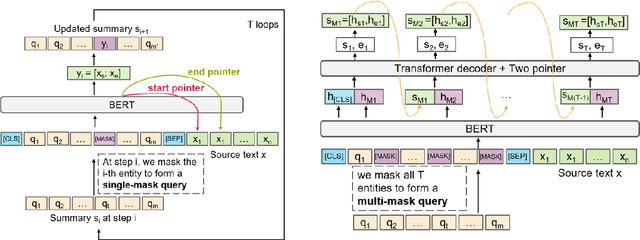

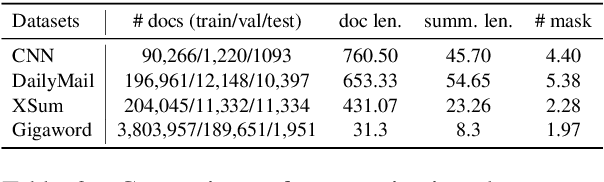

Multi-Fact Correction in Abstractive Text Summarization

Oct 06, 2020 Yue Dong, Shuohang Wang, Zhe Gan, Yu Cheng, Jackie Chi Kit Cheung, Jingjing Liu

Pre-trained neural abstractive summarization systems have dominated extractive strategies on news summarization performance, at least in terms of ROUGE. However, system-generated abstractive summaries often face the pitfall of factual inconsistency: generating incorrect facts with respect to the source text. To address this challenge, we propose Span-Fact, a suite of two factual correction models that leverages knowledge learned from question answering models to make corrections in system-generated summaries via span selection. Our models employ single or multi-masking strategies to either iteratively or auto-regressively replace entities in order to ensure semantic consistency w.r.t. the source text, while retaining the syntactic structure of summaries generated by abstractive summarization models. Experiments show that our models significantly boost the factual consistency of system-generated summaries without sacrificing summary quality in terms of both automatic metrics and human evaluation.

Contrastive Distillation on Intermediate Representations for Language Model Compression

Sep 29, 2020 Siqi Sun, Zhe Gan, Yu Cheng, Yuwei Fang, Shuohang Wang, Jingjing Liu

Existing language model compression methods mostly use a simple L2 loss to distill knowledge in the intermediate representations of a large BERT model to a smaller one. Although widely used, this objective by design assumes that all the dimensions of hidden representations are independent, failing to capture important structural knowledge in the intermediate layers of the teacher network. To achieve better distillation efficacy, we propose Contrastive Distillation on Intermediate Representations (CoDIR), a principled knowledge distillation framework where the student is trained to distill knowledge through intermediate layers of the teacher via a contrastive objective. By learning to distinguish a positive sample from a large set of negative samples, CoDIR facilitates the student's exploitation of rich information in the teacher's hidden layers. CoDIR can be readily applied to compress large-scale language models in both pre-training and finetuning stages, and achieves superb performance on the GLUE benchmark, outperforming state-of-the-art compression methods.
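A contrastive objective that separates one positive from many negatives is often written in an InfoNCE-style form. The sketch below assumes that form, with scalar similarity scores and a temperature `tau`; the abstract does not state the exact loss used by CoDIR, so the function `contrastive_loss` and its parameters are illustrative only.

```python
import math
from typing import Sequence


def contrastive_loss(pos_score: float, neg_scores: Sequence[float], tau: float = 1.0) -> float:
    """InfoNCE-style loss for one positive and several negative scores.

    Computes -log( exp(pos/tau) / (exp(pos/tau) + sum_k exp(neg_k/tau)) ),
    using a max-shifted log-sum-exp for numerical stability. The scores
    are assumed to be student-teacher similarities computed elsewhere.
    """
    if tau <= 0:
        raise ValueError("tau must be positive")
    logits = [pos_score / tau] + [s / tau for s in neg_scores]
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum_exp - logits[0]


# The loss shrinks as the positive pair scores higher than the negatives.
easy = contrastive_loss(5.0, [0.0, 0.0, 0.0])
hard = contrastive_loss(0.0, [0.0, 0.0, 0.0])
```

When the positive is indistinguishable from K negatives, the loss equals log(K + 1), which is a convenient sanity check for an implementation.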

FILTER: An Enhanced Fusion Method for Cross-lingual Language Understanding

Sep 17, 2020 Yuwei Fang, Shuohang Wang, Zhe Gan, Siqi Sun, Jingjing Liu

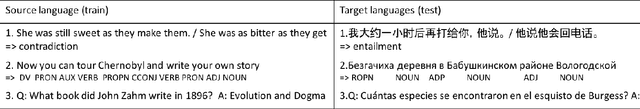

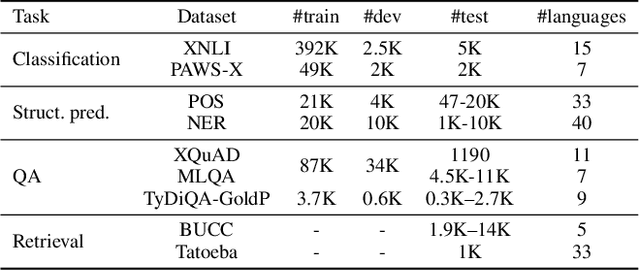

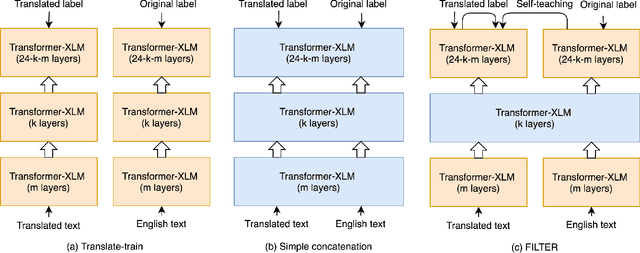

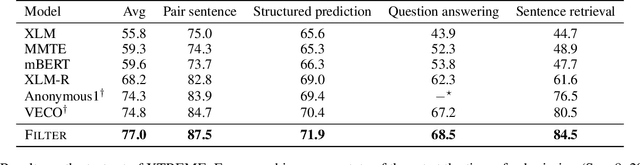

Large-scale cross-lingual language models (LM), such as mBERT, Unicoder and XLM, have achieved great success in cross-lingual representation learning. However, when applied to zero-shot cross-lingual transfer tasks, most existing methods use only single-language input for LM finetuning, without leveraging the intrinsic cross-lingual alignment between different languages that is essential for multilingual tasks. In this paper, we propose FILTER, an enhanced fusion method that takes cross-lingual data as input for XLM finetuning. Specifically, FILTER first encodes text input in the source language and its translation in the target language independently in the shallow layers, then performs cross-lingual fusion to extract multilingual knowledge in the intermediate layers, and finally performs further language-specific encoding. During inference, the model makes predictions based on the text input in the target language and its translation in the source language. For simple tasks such as classification, translated text in the target language shares the same label as the source language. However, this shared label becomes less accurate or even unavailable for more complex tasks such as question answering, NER and POS tagging. For better model scalability, we further propose an additional KL-divergence self-teaching loss for model training, based on auto-generated soft pseudo-labels for translated text in the target language. Extensive experiments demonstrate that FILTER achieves new state of the art on two challenging multilingual multi-task benchmarks, XTREME and XGLUE.
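The self-teaching loss mentioned above is a KL divergence against soft pseudo-labels. A minimal sketch, assuming the pseudo-labels act as a fixed teacher distribution and the loss is KL(teacher || student) over one example's label distribution; the direction of the divergence and the function name `kl_self_teaching_loss` are assumptions, since the abstract does not specify them.

```python
import math
from typing import Sequence


def kl_self_teaching_loss(
    teacher_probs: Sequence[float],
    student_probs: Sequence[float],
    eps: float = 1e-12,
) -> float:
    """KL(teacher || student) between two discrete label distributions.

    `teacher_probs` stands in for auto-generated soft pseudo-labels and
    `student_probs` for the model's prediction on the translated text.
    Terms with zero teacher probability contribute nothing; `eps` guards
    against log(0) when the student assigns zero probability.
    """
    if len(teacher_probs) != len(student_probs):
        raise ValueError("distributions must have the same length")
    return sum(
        p * math.log(p / max(q, eps))
        for p, q in zip(teacher_probs, student_probs)
        if p > 0.0
    )


# Soft pseudo-label vs. an over-confident student prediction.
loss = kl_self_teaching_loss([0.5, 0.5], [0.9, 0.1])
```

Using soft distributions rather than hard labels lets the loss stay informative for tasks where, as the abstract notes, the translated text's labels are unreliable or unavailable.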
