Qiegen Liu

Correlated and Multi-frequency Diffusion Modeling for Highly Under-sampled MRI Reconstruction

Sep 02, 2023

Abstract: Most existing MRI reconstruction methods perform targeted reconstruction of the entire MR image without taking specific tissue regions into consideration. This may fail to emphasize the reconstruction accuracy on tissues that are important for diagnosis. In this study, leveraging a combination of the properties of k-space data and the diffusion process, our novel scheme focuses on mining the multi-frequency prior with different strategies to preserve fine texture details in the reconstructed image. In addition, a diffusion process can converge more quickly if its target distribution closely resembles the noise distribution in the process. This can be accomplished through various high-frequency prior extractors. The finding further solidifies the effectiveness of the score-based generative model. Beyond these advantages, our method improves the accuracy of MRI reconstruction and accelerates the sampling process. Experimental results verify that the proposed method successfully obtains more accurate reconstruction and outperforms state-of-the-art methods.

Physics-Informed DeepMRI: Bridging the Gap from Heat Diffusion to k-Space Interpolation

Aug 30, 2023

Abstract: In the field of parallel imaging (PI), alongside image-domain regularization methods, substantial research has been dedicated to exploring $k$-space interpolation. However, the interpretability of these methods remains an unresolved issue. Furthermore, these approaches currently face acceleration limitations that are comparable to those experienced by image-domain methods. In order to enhance interpretability and overcome the acceleration limitations, this paper introduces an interpretable framework that unifies both $k$-space interpolation techniques and image-domain methods, grounded in the physical principles of heat diffusion equations. Building upon this foundational framework, a novel $k$-space interpolation method is proposed. Specifically, we model the process of high-frequency information attenuation in $k$-space as a heat diffusion equation, while the effort to reconstruct high-frequency information from low-frequency regions can be conceptualized as a reverse heat equation. However, solving the reverse heat equation poses a challenging inverse problem. To tackle this challenge, we modify the heat equation to align with the principles of magnetic resonance PI physics and employ the score-based generative method to precisely execute the modified reverse heat diffusion. Finally, experimental validation conducted on publicly available datasets demonstrates the superiority of the proposed approach over traditional $k$-space interpolation methods, deep learning-based $k$-space interpolation methods, and conventional diffusion models in terms of reconstruction accuracy, particularly in high-frequency regions.
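To illustrate why the reverse heat equation is a hard inverse problem, here is a minimal sketch using the textbook image-domain heat equation, whose Fourier-domain solution multiplies each frequency by a Gaussian factor. This is an assumption for illustration only: it is not the modified, PI-aligned heat equation the abstract describes, and the function name `heat_attenuate_kspace`, the diffusion time `t`, and the noise level are hypothetical.

```python
import numpy as np

def heat_attenuate_kspace(kspace, t):
    """Apply the Fourier-domain heat kernel exp(-4*pi^2*|k|^2*t) to k-space.

    Assumes `kspace` is in the (uncentered) ordering returned by np.fft.fft2.
    """
    ny, nx = kspace.shape
    ky = np.fft.fftfreq(ny)[:, None]   # cycles per sample along rows
    kx = np.fft.fftfreq(nx)[None, :]   # cycles per sample along columns
    kernel = np.exp(-4.0 * np.pi**2 * (kx**2 + ky**2) * t)
    return kspace * kernel, kernel

rng = np.random.default_rng(0)
image = rng.standard_normal((32, 32))
kspace = np.fft.fft2(image)

# Forward diffusion: high frequencies are strongly attenuated.
attenuated, kernel = heat_attenuate_kspace(kspace, t=2.0)

# Naive reversal: divide by the kernel. Exact on clean data (up to rounding),
# but a small perturbation is amplified enormously at high frequencies.
noise = 1e-3 * (rng.standard_normal(kspace.shape)
                + 1j * rng.standard_normal(kspace.shape))
error_clean = np.abs(attenuated / kernel - kspace).max()
error_noisy = np.abs((attenuated + noise) / kernel - kspace).max()
```

The gap between `error_clean` and `error_noisy` is the ill-posedness that motivates replacing naive inversion with a learned prior.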

Model-based Federated Learning for Accurate MR Image Reconstruction from Undersampled k-space Data

Apr 15, 2023

Abstract: Deep learning-based methods have achieved encouraging performance in the field of magnetic resonance (MR) image reconstruction. Nevertheless, to properly learn a powerful and robust model, these methods generally require large quantities of data, the collection of which from multiple centers may raise ethical and data privacy issues. Lately, federated learning has served as a promising solution to exploit multi-center data without transferring data between institutions. However, high heterogeneity exists in the data from different centers, and existing federated learning methods tend to use average aggregation methods to combine the clients' information, which limits the performance and generalization capability of the trained models. In this paper, we propose a Model-based Federated learning framework (ModFed). ModFed has three major contributions: 1) Different from the existing data-driven federated learning methods, model-driven neural networks are designed to relieve each client's dependency on large data; 2) An adaptive dynamic aggregation scheme is proposed to address the data heterogeneity issue and improve the generalization capability and robustness of the trained neural network models; 3) A spatial Laplacian attention mechanism and a personalized client-side loss regularization are introduced to capture the detailed information for accurate image reconstruction. ModFed is evaluated on three in-vivo datasets. Experimental results show that ModFed has strong capability in improving image reconstruction quality and enforcing model generalization capability when compared to the other five state-of-the-art federated learning approaches. Codes will be made available at https://github.com/ternencewu123/ModFed.
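For context, the "average aggregation" that the abstract contrasts against can be sketched as a sample-size-weighted mean of client parameters. This is only the baseline; it is not ModFed's adaptive dynamic aggregation, and the function name `average_aggregate` and the toy parameter dictionaries are hypothetical.

```python
import numpy as np

def average_aggregate(client_params, client_sizes):
    """Weighted average of client parameter dicts (baseline aggregation).

    client_params: list of dicts mapping parameter name -> np.ndarray
    client_sizes:  number of training samples held by each client
    """
    total = float(sum(client_sizes))
    keys = client_params[0].keys()
    return {
        k: sum((n / total) * p[k] for p, n in zip(client_params, client_sizes))
        for k in keys
    }

# Two toy clients; the second holds three times as much data.
clients = [{"w": np.array([1.0, 2.0])}, {"w": np.array([3.0, 6.0])}]
global_params = average_aggregate(clients, client_sizes=[1, 3])
```

Because the weights depend only on dataset size, a client whose data distribution differs strongly from the others is averaged in regardless, which is the heterogeneity issue the abstract raises.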

Universal Generative Modeling in Dual-domain for Dynamic MR Imaging

Dec 15, 2022

Abstract: Dynamic magnetic resonance image reconstruction from incomplete k-space data has generated great research interest due to its capability to reduce scan time. Nevertheless, the reconstruction problem is still challenging due to its ill-posed nature. Recently, diffusion models, especially score-based generative models, have exhibited great potential in algorithm robustness and usage flexibility. Moreover, the unified framework through the variance exploding stochastic differential equation (VE-SDE) is proposed to enable new sampling methods and further extend the capabilities of score-based generative models. Therefore, by taking advantage of the unified framework, we propose a k-space and image Dual-Domain collaborative Universal Generative Model (DD-UGM), which combines the score-based prior with a low-rank regularization penalty to reconstruct highly under-sampled measurements. More precisely, we extract prior components from both image and k-space domains via a universal generative model and adaptively handle these prior components for faster processing while maintaining good generation quality. Experimental comparisons demonstrated the noise reduction and detail preservation abilities of the proposed method. Moreover, DD-UGM can reconstruct data of different frames after training on only a single-frame image, which reflects the flexibility of the proposed model.

Low-rank Tensor Assisted K-space Generative Model for Parallel Imaging Reconstruction

Dec 11, 2022

Abstract: Although recent deep learning methods, especially generative models, have shown good performance in fast magnetic resonance imaging, there is still much room for improvement in high-dimensional generation. Considering that internal dimensions in score-based generative models have a critical impact on estimating the gradient of the data distribution, we present a new idea, low-rank tensor assisted k-space generative model (LR-KGM), for parallel imaging reconstruction. This means that we transform original prior information into high-dimensional prior information for learning. More specifically, the multi-channel data is constructed into a large Hankel matrix and the matrix is subsequently folded into a tensor for prior learning. In the testing phase, the low-rank rotation strategy is utilized to impose low-rank constraints on the tensor output of the generative network. Furthermore, we alternately use traditional generative iterations and low-rank high-dimensional tensor iterations for reconstruction. Experimental comparisons with state-of-the-art methods demonstrated that the proposed LR-KGM method achieved better performance.

One Sample Diffusion Model in Projection Domain for Low-Dose CT Imaging

Dec 07, 2022

Abstract: Low-dose computed tomography (CT) plays a significant role in reducing the radiation risk in clinical applications. However, lowering the radiation dose will significantly degrade the image quality. The rapid development and wide application of deep learning have brought new directions for the development of low-dose CT imaging algorithms. Therefore, we propose a fully unsupervised one sample diffusion model (OSDM) in the projection domain for low-dose CT reconstruction. To extract sufficient prior information from a single sample, the Hankel matrix formulation is employed. Besides, the penalized weighted least-squares and total variation are introduced to achieve superior image quality. Specifically, we first train a score-based generative model on one sinogram by extracting a great number of tensors from the structural-Hankel matrix as the network input to capture the prior distribution. Then, at the inference stage, the stochastic differential equation solver and data consistency step are performed iteratively to obtain the sinogram data. Finally, the final image is obtained through the filtered back-projection algorithm. The reconstructed results approach their normal-dose counterparts. The results show that OSDM is a practical and effective model for reducing artifacts and preserving image quality.

Generative Modeling in Sinogram Domain for Sparse-view CT Reconstruction

Nov 25, 2022

Abstract: The radiation dose in computed tomography (CT) examinations is harmful for patients but can be significantly reduced by intuitively decreasing the number of projection views. Reducing projection views usually leads to severe aliasing artifacts in reconstructed images. Previous deep learning (DL) techniques with sparse-view data require sparse-view/full-view CT image pairs to train the network in a supervised manner. When the number of projection views changes, the DL network should be retrained with updated sparse-view/full-view CT image pairs. To relieve this limitation, we present a fully unsupervised score-based generative model in the sinogram domain for sparse-view CT reconstruction. Specifically, we first train a score-based generative model on full-view sinogram data and use a multi-channel strategy to form a high-dimensional tensor as the network input to capture their prior distribution. Then, at the inference stage, the stochastic differential equation (SDE) solver and data-consistency step were performed iteratively to achieve full-view projection. The filtered back-projection (FBP) algorithm was used to achieve the final image reconstruction. Qualitative and quantitative studies were implemented to evaluate the presented method with several CT data. Experimental results demonstrated that our method achieved comparable or better performance than the supervised learning counterparts.
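The data-consistency step mentioned above can be pictured as re-imposing the measured projection views on the current full-view estimate after each solver update. The sketch below assumes hard replacement of measured views, which is one common choice and not necessarily the rule used in the paper; the function name `sinogram_data_consistency` and the toy array sizes are hypothetical.

```python
import numpy as np

def sinogram_data_consistency(estimate, measured, view_mask):
    """Overwrite the measured views of a full-view sinogram estimate.

    estimate:  (n_views, n_detectors) current full-view estimate
    measured:  (n_views, n_detectors) array, valid only where view_mask is True
    view_mask: (n_views,) boolean, True for views that were actually acquired
    """
    out = estimate.copy()
    out[view_mask] = measured[view_mask]
    return out

rng = np.random.default_rng(0)
n_views, n_detectors = 8, 4
measured = rng.standard_normal((n_views, n_detectors))
estimate = rng.standard_normal((n_views, n_detectors))

# Sparse-view acquisition: keep every fourth projection view.
view_mask = np.zeros(n_views, dtype=bool)
view_mask[::4] = True

consistent = sinogram_data_consistency(estimate, measured, view_mask)
```

Only the unmeasured views are left to the generative prior, so the same trained model can be reused when the number of acquired views changes.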

Generative Modeling in Structural-Hankel Domain for Color Image Inpainting

Nov 25, 2022

Abstract: In recent years, some researchers have focused on using a single image to obtain a large number of samples through multi-scale features. This study proposes a brand-new idea that requires only ten or even fewer samples to construct the low-rank structural-Hankel matrices-assisted score-based generative model (SHGM) for the color image inpainting task. During the prior learning process, a certain number of internal-middle patches are first extracted from several images, and then the structural-Hankel matrices are constructed from these patches. To better apply the score-based generative model to learn the internal statistical distribution within patches, the large-scale Hankel matrices are finally folded into higher-dimensional tensors for prior learning. During the iterative inpainting process, SHGM views the inpainting problem as a conditional generation procedure in a low-rank environment. As a result, the intermediate restored image is acquired by alternately performing the stochastic differential equation solver, alternating direction method of multipliers, and data consistency steps. Experimental results demonstrated the remarkable performance and diversity of SHGM.

One-shot Generative Prior Learned from Hankel-k-space for Parallel Imaging Reconstruction

Aug 28, 2022

Abstract: Magnetic resonance imaging serves as an essential tool for clinical diagnosis. However, it suffers from a long acquisition time. The utilization of deep learning, especially deep generative models, offers aggressive acceleration and better reconstruction in magnetic resonance imaging. Nevertheless, learning the data distribution as prior knowledge and reconstructing the image from limited data remains challenging. In this work, we propose a novel Hankel-k-space generative model (HKGM), which can generate samples from a training set of as little as a single k-space dataset. At the prior learning stage, we first construct a large Hankel matrix from k-space data, then extract multiple structured k-space patches from the large Hankel matrix to capture the internal distribution among different patches. Extracting patches from a Hankel matrix enables the generative model to be learned from a redundant and low-rank data space. At the iterative reconstruction stage, it is observed that the desired solution obeys the learned prior knowledge. The intermediate reconstruction solution is updated by taking it as the input of the generative model. The updated result is then alternately processed by imposing a low-rank penalty on its Hankel matrix and a data consistency constraint on the measurement data. Experimental results confirmed that the internal statistics of patches within a single k-space dataset carry enough information for learning a powerful generative model and provide state-of-the-art reconstruction.
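As a rough illustration of the Hankel construction and low-rank penalty described above, the sketch below builds a block-Hankel matrix by vectorizing every sliding window of a single-coil 2D k-space array, then truncates its singular values. The window size, the hard rank truncation, and the names `hankel_from_kspace` and `truncate_rank` are assumptions for illustration; the paper's multi-coil construction and penalty may differ.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def hankel_from_kspace(kspace, window=(4, 4)):
    """Stack every vectorized sliding window of `kspace` as one row."""
    patches = sliding_window_view(kspace, window)      # (H-p+1, W-q+1, p, q)
    return patches.reshape(-1, window[0] * window[1])  # block-Hankel matrix

def truncate_rank(matrix, rank):
    """Keep only the `rank` largest singular values (hard low-rank projection)."""
    u, s, vh = np.linalg.svd(matrix, full_matrices=False)
    s[rank:] = 0.0
    return (u * s) @ vh

# Toy k-space: a single 2D complex exponential, whose Hankel matrix has rank 1.
ky, kx = np.meshgrid(np.arange(8), np.arange(8), indexing="ij")
kspace = np.exp(2j * np.pi * (0.1 * ky + 0.2 * kx))
hankel = hankel_from_kspace(kspace)

# Perturb the matrix, then project back onto rank 1.
rng = np.random.default_rng(0)
noisy = hankel + 0.01 * rng.standard_normal(hankel.shape)
projected = truncate_rank(noisy, rank=1)
```

Each row of `hankel` is a small k-space patch, which is why a single k-space dataset can supply many training patches drawn from a redundant, low-rank space.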

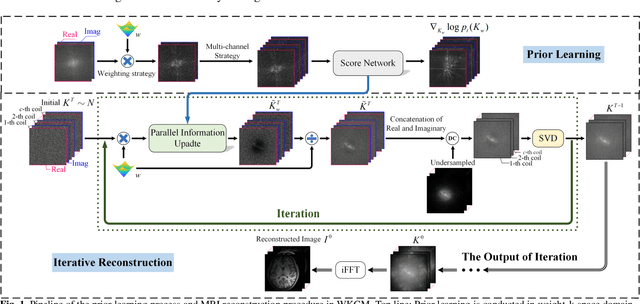

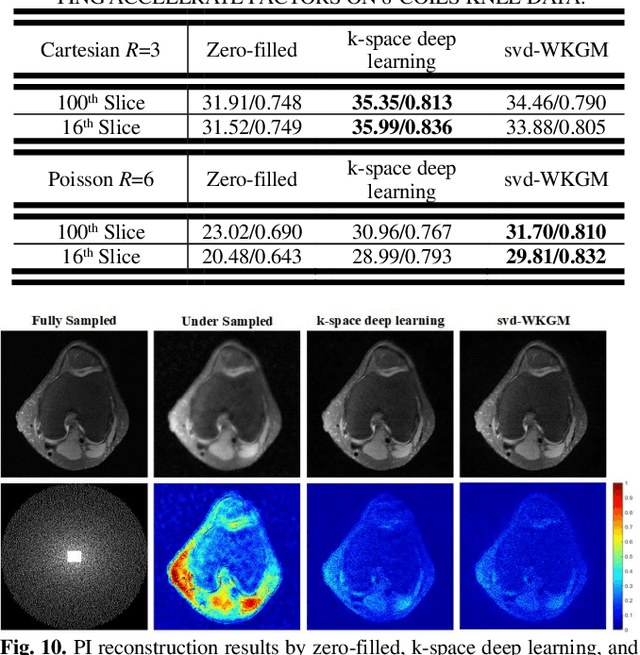

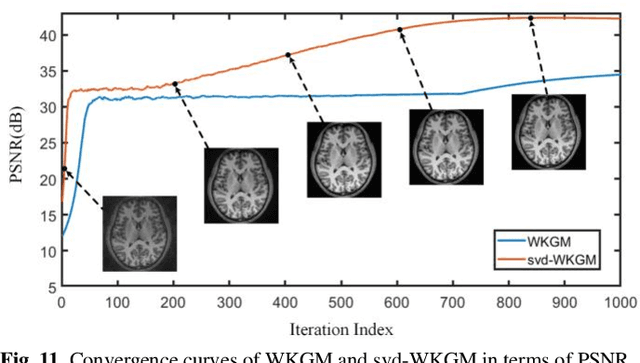

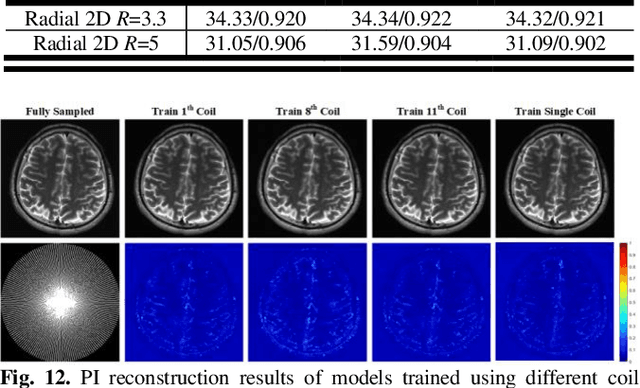

WKGM: Weight-K-space Generative Model for Parallel Imaging Reconstruction

May 08, 2022

Abstract: Parallel Imaging (PI) is one of the most important and successful developments in accelerating magnetic resonance imaging (MRI). Recently, deep learning-based PI has emerged as an effective technique to accelerate MRI. Nevertheless, most approaches have so far been based on the image domain. In this work, we propose to explore the k-space domain via robust generative modeling for flexible PI reconstruction, coined weight-k-space generative model (WKGM). Specifically, WKGM is a generalized k-space domain model, where the k-space weighting technology and high-dimensional space strategy are efficiently incorporated for score-based generative model training, resulting in good and robust reconstruction. In addition, WKGM is flexible and thus can synergistically combine various traditional k-space PI models, generating learning-based priors to produce high-fidelity reconstructions. Experimental results on datasets with varying sampling patterns and acceleration factors demonstrate that WKGM can attain state-of-the-art reconstruction results under the well-learned k-space generative prior.
