Hong Peng

Watching, Reasoning, and Searching: A Video Deep Research Benchmark on Open Web for Agentic Video Reasoning

Jan 11, 2026

Abstract: In real-world video question answering scenarios, videos often provide only localized visual cues, while verifiable answers are distributed across the open web; models therefore need to jointly perform cross-frame clue extraction, iterative retrieval, and multi-hop reasoning-based verification. To bridge this gap, we construct the first video deep research benchmark, VideoDR. VideoDR centers on video-conditioned open-domain video question answering, requiring cross-frame visual anchor extraction, interactive web retrieval, and multi-hop reasoning over joint video-web evidence; through rigorous human annotation and quality control, we obtain high-quality video deep research samples spanning six semantic domains. We evaluate multiple closed-source and open-source multimodal large language models under both the Workflow and Agentic paradigms, and the results show that Agentic is not consistently superior to Workflow: its gains depend on a model's ability to maintain the initial video anchors over long retrieval chains. Further analysis indicates that goal drift and long-horizon consistency are the core bottlenecks. In sum, VideoDR provides a systematic benchmark for studying video agents in open-web settings and reveals the key challenges for next-generation video deep research agents.

ADGSyn: Dual-Stream Learning for Efficient Anticancer Drug Synergy Prediction

May 25, 2025

Abstract: Drug combinations play a critical role in cancer therapy by significantly enhancing treatment efficacy and overcoming drug resistance. However, the combinatorial space of possible drug pairs grows exponentially, making experimental screening highly impractical. Therefore, developing efficient computational methods to predict promising drug combinations and guide experimental validation is of paramount importance. In this work, we propose ADGSyn, an innovative method for predicting drug synergy. The key components of our approach include: (1) shared projection matrices combined with attention mechanisms to enable cross-drug feature alignment; (2) automatic mixed precision (AMP)-optimized graph operations that reduce memory consumption by 40% while accelerating training speed threefold; and (3) residual pathways stabilized by LayerNorm to ensure stable gradient propagation during training. Evaluated on the O'Neil dataset containing 13,243 drug-cell line combinations, ADGSyn demonstrates superior performance over eight baseline methods. Moreover, the framework supports full-batch processing of up to 256 molecular graphs on a single GPU, setting a new standard for efficiency in drug synergy prediction within the field of computational oncology.

A MEMS-based terahertz broadband beam steering technique

Sep 06, 2024

Abstract: A multi-level tunable reflection array wide-angle beam scanning method is proposed to address the limited bandwidth and small scanning angle issues of current terahertz beam scanning technology. In this method, a focusing lens and its array are used to achieve terahertz wave spatial beam control, and MEMS mirrors and their arrays are used to achieve wide-angle beam scanning. The first- to third-order terahertz MEMS beam scanning system designed based on this method can extend the mechanical scanning angle of MEMS mirrors by 2 to 6 times, when tested and verified using an electromagnetic MEMS mirror with a 7 mm optical aperture and a scanning angle of 15° and a D-band terahertz signal source. The experiment shows that the operating bandwidth of the first-order terahertz MEMS beam scanning system is better than 40 GHz, the continuous beam scanning angle is about 30°, the continuous beam scanning cycle response time is about 1.1 ms, and the antenna gain is better than 15 dBi at 160 GHz. This method has been validated for its large bandwidth and scalable scanning angle, and has potential application prospects in terahertz dynamic communication, detection radar, scanning imaging, and other fields.
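The figures quoted above (a 15° mirror, a 2 to 6 times extension over orders one to three, and about 30° measured at first order) are consistent with a simple linear scaling. The sketch below only illustrates that arithmetic. The 2x-per-order rule and the function name `extended_scan_angle` are assumptions inferred from the abstract's numbers, not a formula stated by the authors.

```python
def extended_scan_angle(mechanical_angle_deg: float, order: int) -> float:
    """Beam scan angle under an ASSUMED linear rule: 2 * order * mechanical angle."""
    if order < 1:
        raise ValueError("order must be a positive integer")
    return 2 * order * mechanical_angle_deg


# Mechanical scanning angle of the MEMS mirror quoted in the abstract.
MIRROR_ANGLE_DEG = 15.0

for order in (1, 2, 3):
    angle = extended_scan_angle(MIRROR_ANGLE_DEG, order)
    print(f"order {order}: {angle:.0f} degrees")
```

Under this assumption the first-order value matches the roughly 30° reported in the experiment; the higher-order values are extrapolations only.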

Less is more: Ensemble Learning for Retinal Disease Recognition Under Limited Resources

Feb 15, 2024

Abstract: Retinal optical coherence tomography (OCT) images provide crucial insights into the health of the posterior ocular segment. Therefore, the advancement of automated image analysis methods is imperative to equip clinicians and researchers with quantitative data, thereby facilitating informed decision-making. The application of deep learning (DL)-based approaches has gained extensive traction for executing these analysis tasks, demonstrating remarkable performance compared to labor-intensive manual analyses. However, the acquisition of retinal OCT images often presents challenges stemming from privacy concerns and the resource-intensive labeling procedures, which contradicts the prevailing notion that DL models necessitate substantial data volumes for achieving superior performance. Moreover, limitations in available computational resources constrain the progress of high-performance medical artificial intelligence, particularly in less developed regions and countries. This paper introduces a novel ensemble learning mechanism designed for recognizing retinal diseases under limited resources (e.g., data, computation). The mechanism leverages insights from multiple pre-trained models, facilitating the transfer and adaptation of their knowledge to retinal OCT images. This approach establishes a robust model even when confronted with limited labeled data, eliminating the need for an extensive array of parameters, as required in learning from scratch. Comprehensive experimentation on real-world datasets demonstrates that the proposed approach can achieve superior performance in recognizing retinal OCT images, even when dealing with exceedingly restricted labeled datasets. Furthermore, this method obviates the necessity of learning extensive-scale parameters, making it well-suited for deployment in low-resource scenarios.

SLP-Net: An efficient lightweight network for segmentation of skin lesions

Jan 04, 2024

Abstract: Prompt treatment for melanoma is crucial. To assist physicians in identifying lesion areas precisely and quickly, we propose a novel skin lesion segmentation technique, SLP-Net, an ultra-lightweight segmentation network based on the spiking neural P (SNP) systems type mechanism. Most existing convolutional neural networks achieve high segmentation accuracy while neglecting the high hardware cost. SLP-Net, on the contrary, has a very small number of parameters and a high computation speed. We design a lightweight multi-scale feature extractor without the usual encoder-decoder structure. In place of a decoder, a feature adaptation module implements multi-scale information decoding. Experiments on the ISIC2018 challenge dataset demonstrate that the proposed model has the highest Acc and DSC among the state-of-the-art methods, while experiments on the PH2 dataset also demonstrate a favorable generalization ability. Finally, we compare the computational complexity as well as the computational speed of the models in experiments, where SLP-Net performs best overall.

Multi-stages attention Breast cancer classification based on nonlinear spiking neural P neurons with autapses

Jan 04, 2024

Abstract: Breast cancer (BC) is a prevalent type of malignant tumor in women. Early diagnosis and treatment are vital for enhancing patients' survival rate. Downsampling in deep networks may lead to loss of information, so to compensate for the lost detail and edge information and to help convolutional neural networks focus on the lesion region, we propose a multi-stages attention architecture based on nonlinear spiking neural P (NSNP) neurons with autapses. First, unlike existing single-scale attention acquisition methods, we set up spatial attention acquisition at each feature map scale of the convolutional network to obtain fused global information for attention guidance. Then we introduce a new NSNP variant called NSNP neurons with autapses. Specifically, NSNP systems are modularized as feature encoders that recode the features extracted by the convolutional neural network together with the fused attention information and preserve the key characteristic elements in the feature maps. This ensures the retention of valuable data while gradually transforming high-dimensional, complicated information into a low-dimensional representation. The proposed method is evaluated on the public dataset BreakHis at various magnifications and classification tasks. It achieves a classification accuracy of 96.32% at all magnification cases, outperforming state-of-the-art methods. Ablation studies are also performed, verifying the proposed model's efficacy. The source code is available at XhuBobYoung/Breast-cancer-Classification.

SAMN: A Sample Attention Memory Network Combining SVM and NN in One Architecture

Sep 25, 2023

Abstract: Support vector machines (SVM) and neural networks (NN) have strong complementarity. SVM focuses on the inner operation among samples while NN focuses on the operation among the features within samples. Thus, it is promising and attractive to combine SVM and NN, as it may provide a more powerful function than SVM or NN alone. However, current work on combining them lacks true integration. To address this, we propose a sample attention memory network (SAMN) that effectively combines SVM and NN by incorporating a sample attention module, class prototypes, and a memory block into the NN. SVM can be viewed as a sample attention machine, which allows us to add a sample attention module to the NN to implement the main function of SVM. Class prototypes are representatives of all classes, which can be viewed as alternatives to support vectors. The memory block is used for the storage and update of class prototypes. Class prototypes and the memory block effectively reduce the computational cost of sample attention and make SAMN suitable for multi-classification tasks. Extensive experiments show that SAMN achieves better classification performance than a single SVM or a single NN with similar parameter sizes, as well as the previous best model for combining SVM and NN. The sample attention mechanism is a flexible module that can be easily deepened and incorporated into neural networks that require it.
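The idea of attending over class prototypes held in a memory block can be illustrated with a toy sketch. Everything below is an assumption made for illustration: the squared-distance similarity, the softmax weighting, the moving-average update, and the names `sample_attention` and `update_prototype` are hypothetical and are not taken from the SAMN paper.

```python
import math


def sample_attention(query, prototypes, temperature=1.0):
    """Softmax weights over prototypes; closer prototypes get larger weights.

    Similarity is the negative squared Euclidean distance (an assumed choice).
    """
    scores = [
        -sum((q - p) ** 2 for q, p in zip(query, proto)) / temperature
        for proto in prototypes
    ]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def update_prototype(prototype, sample, momentum=0.9):
    """Moving-average update of a stored prototype (an assumed memory rule)."""
    return [momentum * p + (1.0 - momentum) * s for p, s in zip(prototype, sample)]


# Three hypothetical class prototypes in a 2-D feature space.
prototypes = [[0.0, 0.0], [1.0, 1.0], [3.0, 3.0]]
weights = sample_attention([0.9, 1.1], prototypes)
predicted_class = max(range(len(weights)), key=weights.__getitem__)
print(predicted_class, [round(w, 3) for w in weights])
```

Attending over a few prototypes rather than over every training sample is what keeps the cost of each query small, which is the motivation the abstract gives for the class prototypes and memory block.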

MaxMin-L2-SVC-NCH: A Novel Approach for Support Vector Classifier Training and Parameter Selection

Jul 25, 2023

Abstract: The selection of Gaussian kernel parameters plays an important role in the applications of support vector classification (SVC). A commonly used method is k-fold cross validation with grid search (CV), which is extremely time-consuming because it needs to train a large number of SVC models. In this paper, a new approach is proposed to train SVC and optimize the selection of Gaussian kernel parameters. We first formulate the training and parameter selection of SVC as a minimax optimization problem named MaxMin-L2-SVC-NCH, in which the minimization problem is an optimization problem of finding the closest points between two normal convex hulls (L2-SVC-NCH) while the maximization problem is an optimization problem of finding the optimal Gaussian kernel parameters. A lower time complexity can be expected in MaxMin-L2-SVC-NCH because CV is not needed. We then propose a projected gradient algorithm (PGA) for training L2-SVC-NCH. The well-known sequential minimal optimization (SMO) algorithm is a special case of the PGA. Thus, the PGA can provide more flexibility than the SMO. Furthermore, the maximization problem is solved by a gradient ascent algorithm with a dynamic learning rate. The comparative experiments between MaxMin-L2-SVC-NCH and the previous best approaches on public datasets show that MaxMin-L2-SVC-NCH greatly reduces the number of models to be trained while maintaining competitive test accuracy. These findings indicate that MaxMin-L2-SVC-NCH is a better choice for SVC tasks.
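The minimization half of the formulation, finding the closest points between two hulls with a projected gradient method, can be sketched on toy data. This is a simplified illustration and not the paper's L2-SVC-NCH: it uses ordinary convex hulls in input space with no kernel and no parameter search, and the step size and function names are assumptions chosen for this example.

```python
def project_to_simplex(v):
    """Euclidean projection of v onto {w : w_i >= 0, sum(w) = 1} (sort-based)."""
    theta, cumulative = 0.0, 0.0
    for k, u_k in enumerate(sorted(v, reverse=True), start=1):
        cumulative += u_k
        candidate = (cumulative - 1.0) / k
        if u_k - candidate > 0:
            theta = candidate  # keep the threshold of the last valid k
    return [max(x - theta, 0.0) for x in v]


def combine(weights, points):
    """Convex combination sum_i weights[i] * points[i]."""
    dim = len(points[0])
    return [sum(w * p[d] for w, p in zip(weights, points)) for d in range(dim)]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def closest_hull_points(pos, neg, step=0.01, iters=2000):
    """Minimize 0.5 * ||p - q||^2 over hull points p (of pos) and q (of neg).

    `step` is tuned for the toy data below; it must be small relative to the
    scale of the points for the iteration to converge.
    """
    a = [1.0 / len(pos)] * len(pos)
    b = [1.0 / len(neg)] * len(neg)
    for _ in range(iters):
        p, q = combine(a, pos), combine(b, neg)
        diff = [pi - qi for pi, qi in zip(p, q)]
        # Gradient step on each weight vector, then project back to the simplex.
        a = project_to_simplex([ai - step * dot(x, diff) for ai, x in zip(a, pos)])
        b = project_to_simplex([bj + step * dot(y, diff) for bj, y in zip(b, neg)])
    return combine(a, pos), combine(b, neg)


pos = [(2.0, 0.0), (3.0, 1.0), (3.0, -1.0)]
neg = [(-2.0, 0.0), (-3.0, 1.0), (-3.0, -1.0)]
p, q = closest_hull_points(pos, neg)
print(p, q)
```

On this toy data the two hulls are closest at their inner vertices, so `p` and `q` approach (2, 0) and (-2, 0). The separating direction `p - q` plays the role of the classifier's normal vector.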

One-shot Generative Prior Learned from Hankel-k-space for Parallel Imaging Reconstruction

Aug 28, 2022

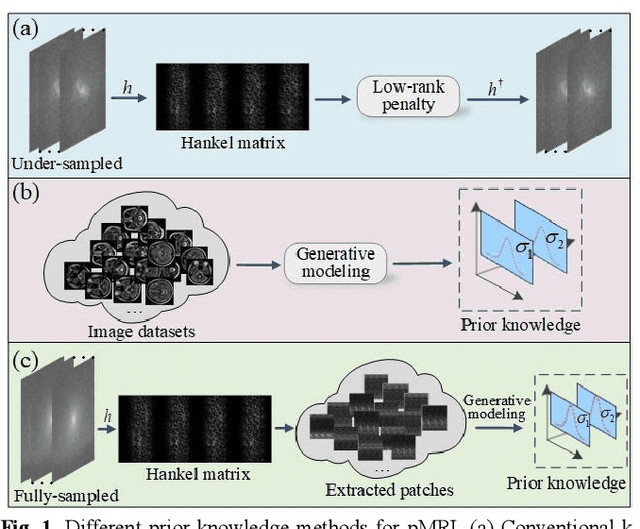

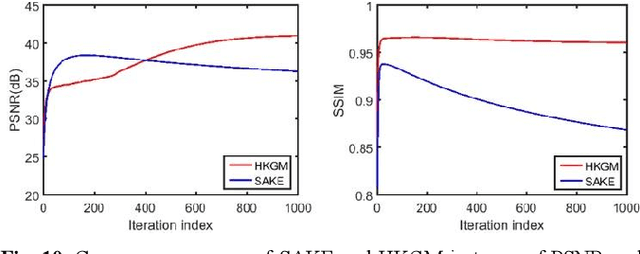

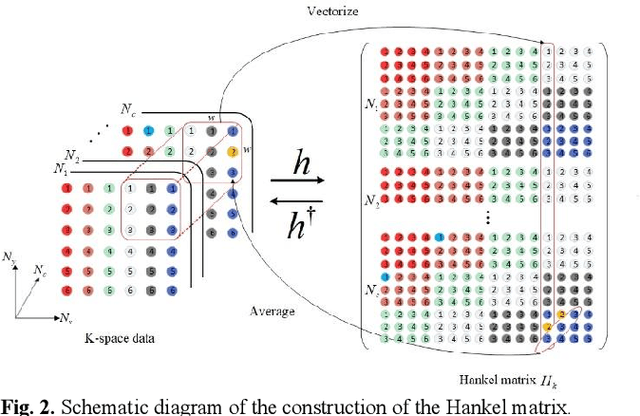

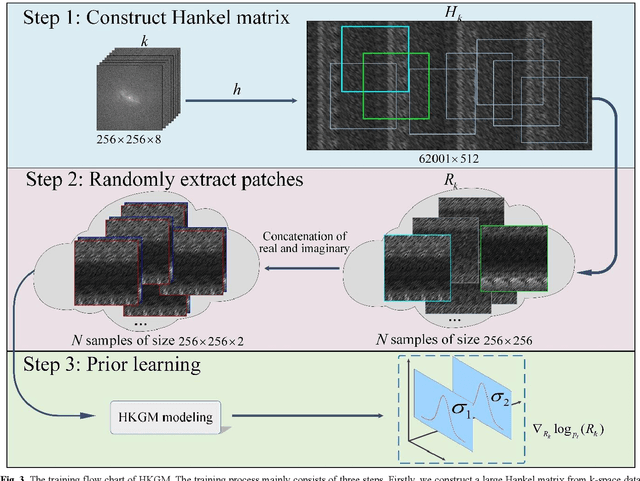

Abstract: Magnetic resonance imaging serves as an essential tool for clinical diagnosis. However, it suffers from a long acquisition time. The utilization of deep learning, especially deep generative models, offers aggressive acceleration and better reconstruction in magnetic resonance imaging. Nevertheless, learning the data distribution as prior knowledge and reconstructing the image from limited data remains challenging. In this work, we propose a novel Hankel-k-space generative model (HKGM), which can generate samples from a training set of as little as a single k-space dataset. At the prior learning stage, we first construct a large Hankel matrix from k-space data, then extract multiple structured k-space patches from the large Hankel matrix to capture the internal distribution among different patches. Extracting patches from a Hankel matrix enables the generative model to be learned from a redundant and low-rank data space. At the iterative reconstruction stage, it is observed that the desired solution obeys the learned prior knowledge. The intermediate reconstruction solution is updated by taking it as the input of the generative model. The updated result is then alternately processed by imposing a low-rank penalty on its Hankel matrix and a data consistency constraint on the measurement data. Experimental results confirmed that the internal statistics of patches within a single k-space dataset carry enough information for learning a powerful generative model and provide state-of-the-art reconstruction.
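The Hankel construction and patch extraction described above can be illustrated in one dimension. This is a toy sketch only: real k-space data is complex-valued, multi-dimensional, and multi-coil, and the function names `hankel_matrix` and `extract_patches` and the window and patch sizes are assumptions for illustration rather than the HKGM implementation.

```python
def hankel_matrix(signal, window):
    """Stack sliding windows of `signal` as rows; entry (i, j) is signal[i + j]."""
    rows = len(signal) - window + 1
    if rows < 1:
        raise ValueError("window must not exceed the signal length")
    return [list(signal[i:i + window]) for i in range(rows)]


def extract_patches(matrix, patch_rows, stride=1):
    """Cut overlapping row-blocks (patches) out of a matrix."""
    last_start = len(matrix) - patch_rows
    return [matrix[r:r + patch_rows] for r in range(0, last_start + 1, stride)]


# A hypothetical 1-D stand-in for one line of k-space samples.
signal = [1, 2, 3, 4, 5]
hankel = hankel_matrix(signal, window=3)
print(hankel)  # [[1, 2, 3], [2, 3, 4], [3, 4, 5]]

patches = extract_patches(hankel, patch_rows=2)
print(len(patches))  # 2
```

Each sample appears along a whole anti-diagonal of the Hankel matrix, so overlapping patches share many entries. That repetition is the kind of redundancy the abstract relies on when learning a prior from very little data.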

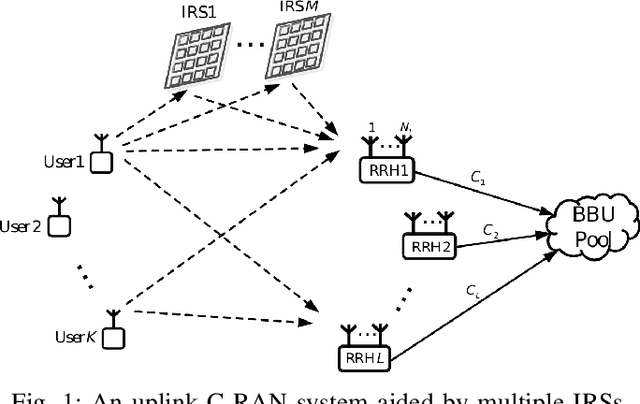

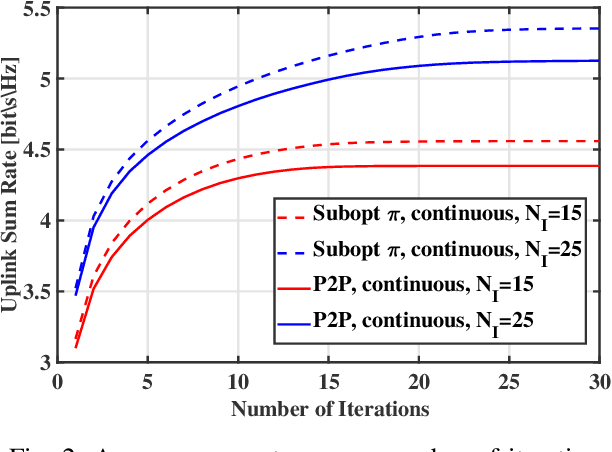

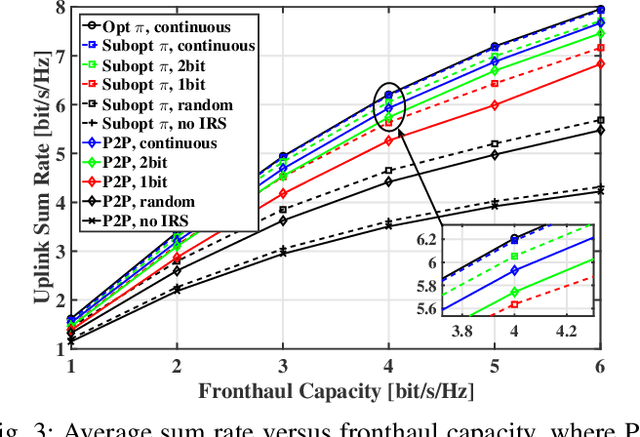

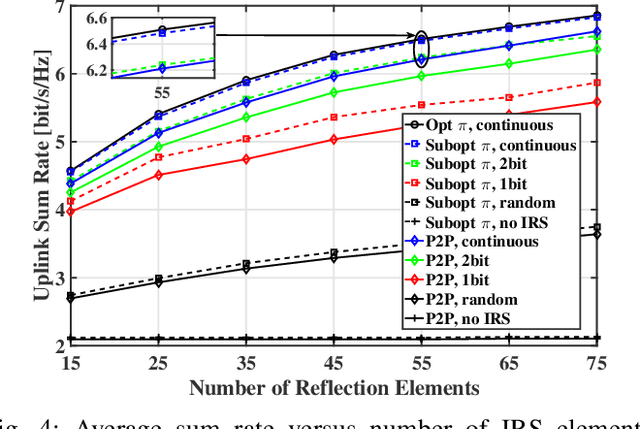

Fronthaul Compression and Passive Beamforming Design for Intelligent Reflecting Surface-aided Cloud Radio Access Networks

Feb 25, 2021

Abstract: This letter studies a cloud radio access network (C-RAN) with multiple intelligent reflecting surfaces (IRS) deployed between users and remote radio heads (RRH). Specifically, we consider the uplink transmission where each RRH quantizes the received signals from the users by either point-to-point compression or Wyner-Ziv compression and then transmits the quantization bits to the BBU pool through capacity-limited fronthaul links. To maximize the uplink sum rate, we jointly optimize the passive beamformers of IRSs and the quantization noise covariance matrices of fronthaul compression. A joint fronthaul compression and passive beamforming design is proposed by exploiting the Arimoto-Blahut algorithm and semidefinite relaxation (SDR). Numerical results show the performance gain achieved by the proposed algorithm.
