Learning Distributionally Robust Models at Scale via Composite Optimization

Mar 17, 2022 · Farzin Haddadpour, Mohammad Mahdi Kamani, Mehrdad Mahdavi, Amin Karbasi

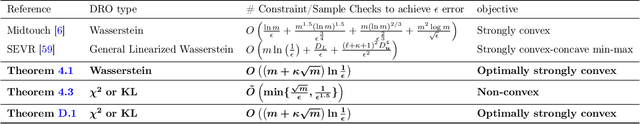

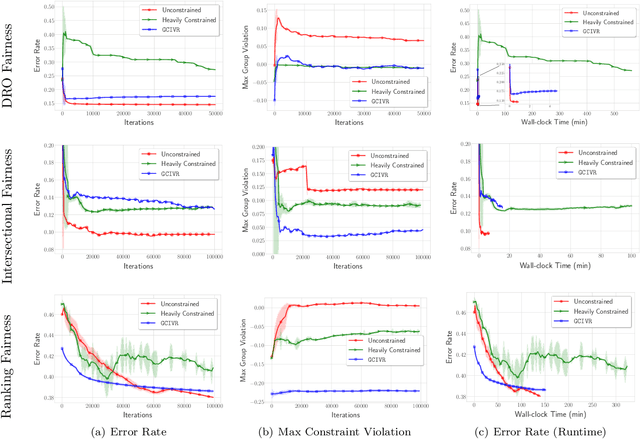

To train machine learning models that are robust to distribution shifts in the data, distributionally robust optimization (DRO) has proven very effective. However, the existing approaches to learning a distributionally robust model either require solving complex optimization problems such as semidefinite programs or rely on first-order methods whose convergence scales linearly with the number of data samples, which hinders their scalability to large datasets. In this paper, we show how different variants of DRO are simply instances of a finite-sum composite optimization for which we provide scalable methods. We also provide empirical results that demonstrate the effectiveness of our proposed algorithm, relative to the prior art, for learning robust models from very large datasets.
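As a hedged illustration of what "DRO as a finite-sum composite optimization" can look like (this is one standard variant, not necessarily the exact formulation used in the paper), consider KL-regularized DRO over $n$ samples with per-sample losses $\ell_i(w)$. The regularization weight $\lambda > 0$ and the symbols $f$ and $g$ are notation assumed here for the example. The inner maximization over the probability simplex $\Delta_n$ has a closed form:

$$
\min_{w}\ \max_{p \in \Delta_n}\ \sum_{i=1}^{n} p_i\,\ell_i(w) \;-\; \lambda \sum_{i=1}^{n} p_i \log(n\,p_i)
\;=\;
\min_{w}\ \lambda \log\!\Big(\frac{1}{n}\sum_{i=1}^{n} \exp\big(\ell_i(w)/\lambda\big)\Big).
$$

The right-hand side has the composite form $f(g(w))$, where $g(w) = \frac{1}{n}\sum_{i=1}^{n} \exp(\ell_i(w)/\lambda)$ is a finite sum over the data and $f(u) = \lambda \log u$ is a smooth outer function.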

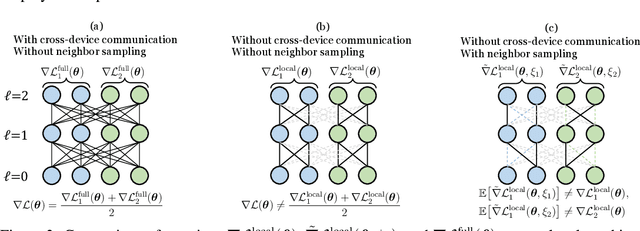

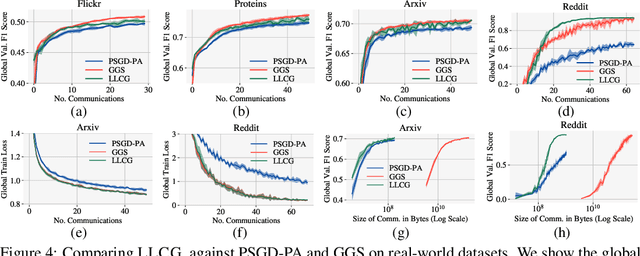

Learn Locally, Correct Globally: A Distributed Algorithm for Training Graph Neural Networks

Dec 07, 2021 · Morteza Ramezani, Weilin Cong, Mehrdad Mahdavi, Mahmut T. Kandemir, Anand Sivasubramaniam

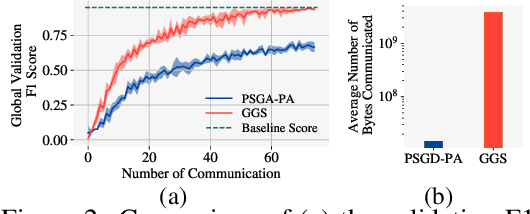

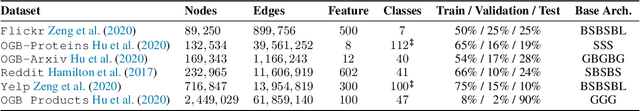

Despite the recent success of Graph Neural Networks (GNNs), training GNNs on large graphs remains challenging. The limited resource capacities of existing servers, the dependency between nodes in a graph, and the privacy concerns raised by centralized storage and model learning have spurred the need to design an effective distributed algorithm for GNN training. However, existing distributed GNN training methods impose either excessive communication costs or large memory overheads that hinder their scalability. To overcome these issues, we propose a communication-efficient distributed GNN training technique named Learn Locally, Correct Globally (LLCG). To reduce the communication and memory overhead, each local machine in LLCG first trains a GNN on its local data by ignoring the dependency between nodes on different machines, then sends the locally trained model to the server for periodic model averaging. However, ignoring node dependency could result in significant performance degradation. To address this degradation, we propose to apply Global Server Corrections on the server to refine the locally learned models. We rigorously analyze the convergence of distributed methods with periodic model averaging for training GNNs and show that naively applying periodic model averaging while ignoring the dependency between nodes suffers from an irreducible residual error. However, this residual error can be eliminated by the proposed global corrections, yielding a fast convergence rate. Extensive experiments on real-world datasets show that LLCG can significantly improve efficiency without hurting performance.

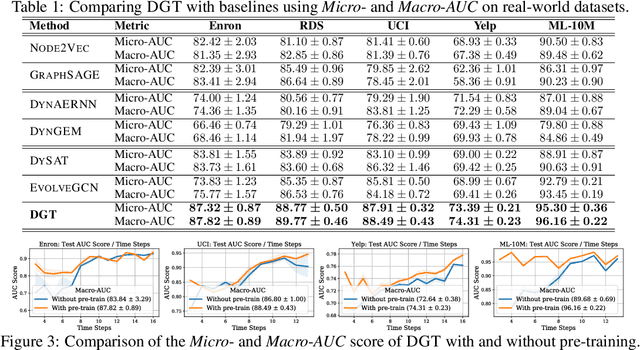

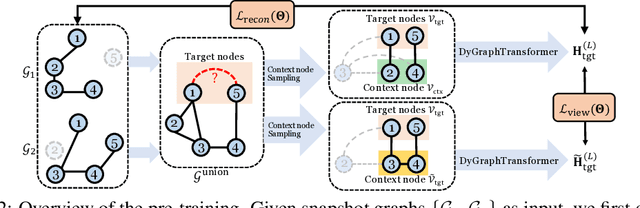

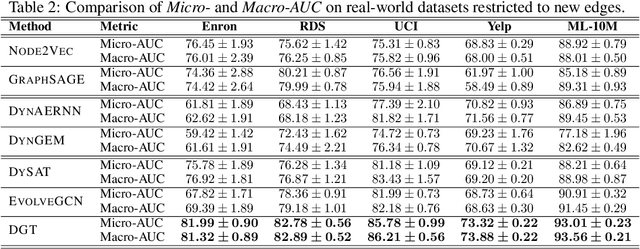

Dynamic Graph Representation Learning via Graph Transformer Networks

Nov 19, 2021 · Weilin Cong, Yanhong Wu, Yuandong Tian, Mengting Gu, Yinglong Xia, Mehrdad Mahdavi, Chun-cheng Jason Chen

Dynamic graph representation learning is an important task with widespread applications. Previous methods on dynamic graph learning are usually sensitive to noisy graph information such as missing or spurious connections, which can yield degenerated performance and generalization. To overcome this challenge, we propose a Transformer-based dynamic graph learning method named Dynamic Graph Transformer (DGT) with spatial-temporal encoding to effectively learn graph topology and capture implicit links. To improve the generalization ability, we introduce two complementary self-supervised pre-training tasks and show that jointly optimizing the two pre-training tasks results in a smaller Bayesian error rate via an information-theoretic analysis. We also propose a temporal-union graph structure and a target-context node sampling strategy for efficient and scalable training. Extensive experiments on real-world datasets illustrate that DGT presents superior performance compared with several state-of-the-art baselines.

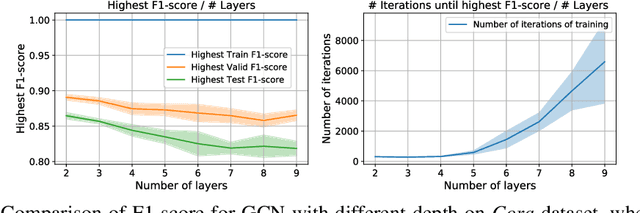

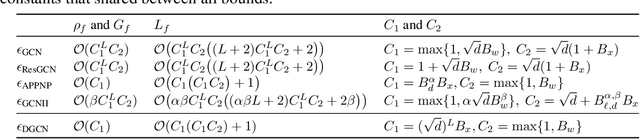

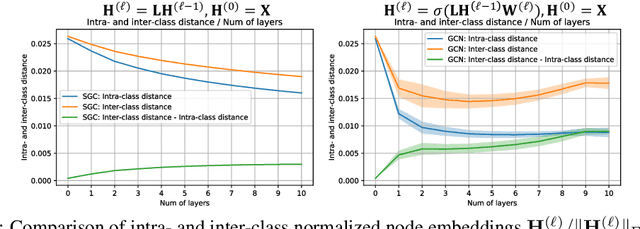

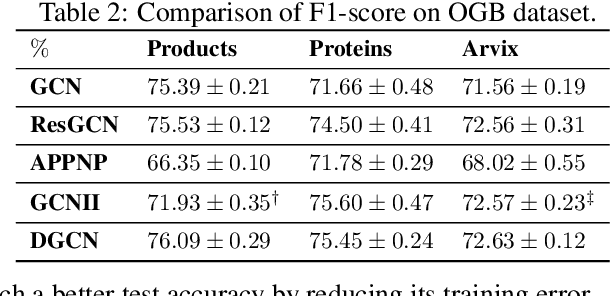

On Provable Benefits of Depth in Training Graph Convolutional Networks

Oct 28, 2021 · Weilin Cong, Morteza Ramezani, Mehrdad Mahdavi

Graph Convolutional Networks (GCNs) are known to suffer from performance degradation as the number of layers increases, which is usually attributed to over-smoothing. Despite the apparent consensus, we observe a discrepancy between the theoretical understanding of over-smoothing and the practical capabilities of GCNs. Specifically, we argue that over-smoothing does not necessarily happen in practice: a deeper model is provably expressive, can converge to a global optimum at a linear rate, and achieves very high training accuracy as long as it is properly trained. Although deeper models can achieve high training accuracy, empirical results show that they generalize poorly at test time, and a theoretical understanding of this behavior remains elusive. To better understand it, we carefully analyze the generalization capability of GCNs and show that the training strategies used to achieve high training accuracy significantly deteriorate the generalization capability of GCNs. Motivated by these findings, we propose a decoupled structure for GCNs that detaches weight matrices from feature propagation to preserve the expressive power and ensure good generalization performance. We conduct empirical evaluations on various synthetic and real-world datasets to validate the correctness of our theory.

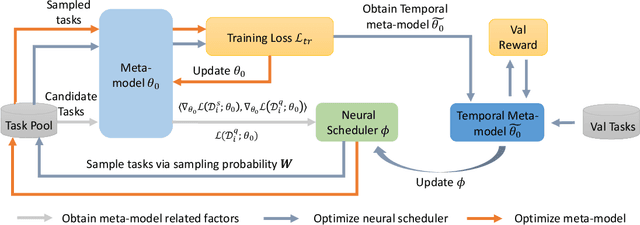

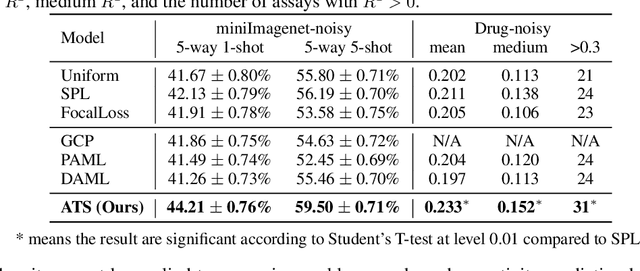

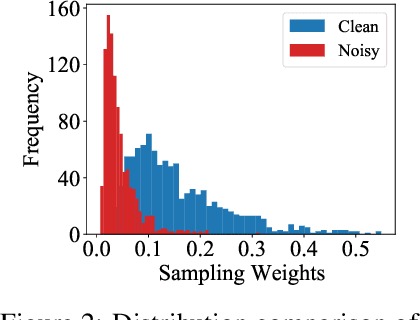

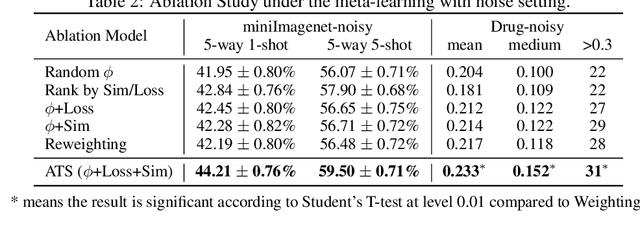

Meta-learning with an Adaptive Task Scheduler

Oct 26, 2021 · Huaxiu Yao, Yu Wang, Ying Wei, Peilin Zhao, Mehrdad Mahdavi, Defu Lian, Chelsea Finn

To benefit the learning of a new task, meta-learning has been proposed to transfer a well-generalized meta-model learned from various meta-training tasks. Existing meta-learning algorithms randomly sample meta-training tasks with a uniform probability, under the assumption that tasks are of equal importance. However, given a limited number of meta-training tasks, some tasks are likely to be detrimental because they are noisy or imbalanced. To prevent the meta-model from being corrupted by such detrimental tasks or dominated by tasks in the majority, in this paper, we propose an adaptive task scheduler (ATS) for the meta-training process. In ATS, for the first time, we design a neural scheduler that decides which meta-training tasks to use next by predicting the probability of each candidate task being sampled, and we train the scheduler to optimize the generalization capacity of the meta-model to unseen tasks. We identify two meta-model-related factors as the input of the neural scheduler, which characterize the difficulty of a candidate task for the meta-model. Theoretically, we show that a scheduler taking the two factors into account improves the meta-training loss and also the optimization landscape. Under the setting of meta-learning with noise and limited budgets, ATS improves the performance on both miniImageNet and a real-world drug discovery benchmark by up to 13% and 18%, respectively, compared to state-of-the-art task schedulers.

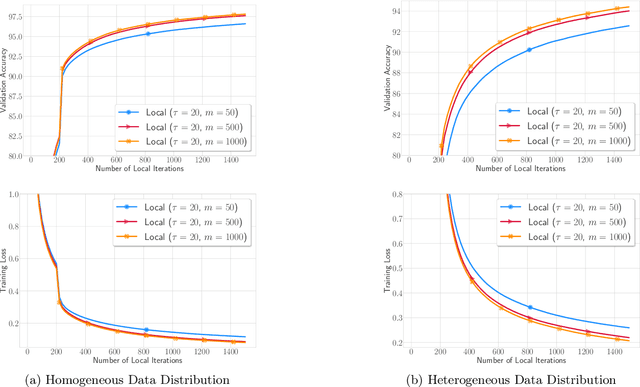

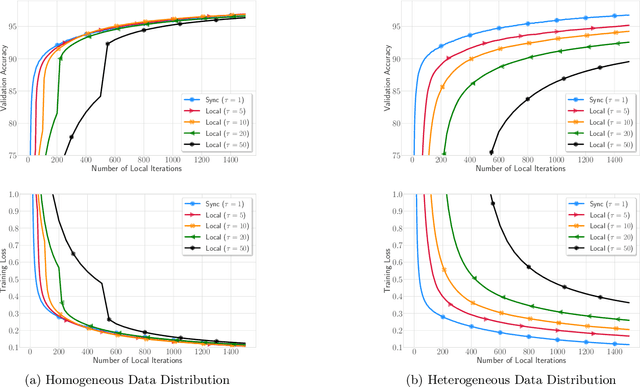

Local SGD Optimizes Overparameterized Neural Networks in Polynomial Time

Jul 22, 2021 · Yuyang Deng, Mehrdad Mahdavi

In this paper we prove that Local (S)GD (or FedAvg) can optimize two-layer neural networks with Rectified Linear Unit (ReLU) activation function in polynomial time. Despite the established convergence theory of Local SGD on optimizing general smooth functions in communication-efficient distributed optimization, its convergence on non-smooth ReLU networks still eludes full theoretical understanding. The key property used in many Local SGD analyses on smooth functions is gradient Lipschitzness, which ensures that the gradient on local models does not drift far from that on the averaged model. However, this desirable property does not hold in networks with the non-smooth ReLU activation function. We show that, even though ReLU networks do not admit the gradient Lipschitzness property, the difference between gradients on local models and on the averaged model does not change too much under the dynamics of Local SGD. We validate our theoretical results via extensive experiments. This work is the first to show the convergence of Local SGD on non-smooth functions, and will shed light on the optimization theory of federated training of deep neural networks.
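The Local SGD template referred to above (several local steps per worker, followed by periodic model averaging) can be sketched as follows. This is a minimal illustration on a smooth 1-D least-squares problem, not the two-layer ReLU setting analyzed in the paper; the function name `local_sgd`, its parameters, and the toy data are all assumptions made for the example.

```python
import random


def local_sgd(worker_data, rounds=50, local_steps=5, lr=0.05, seed=0):
    """Local SGD (periodic model averaging) on 1-D least squares.

    worker_data: list with one entry per worker, each a list of (x, y) pairs.
    Every round, each worker starts from the shared model, runs
    `local_steps` SGD steps on its own data, and the local models are
    then averaged (one communication per round).
    """
    rng = random.Random(seed)
    w_global = 0.0
    for _ in range(rounds):
        local_models = []
        for data in worker_data:
            w = w_global  # start from the last averaged model
            for _ in range(local_steps):
                x, y = rng.choice(data)        # sample one local example
                grad = 2.0 * (w * x - y) * x   # gradient of (w*x - y)^2
                w -= lr * grad
            local_models.append(w)
        # Periodic averaging: the only step that requires communication.
        w_global = sum(local_models) / len(local_models)
    return w_global


# Hypothetical toy data: both workers observe y = 3x without noise.
workers = [
    [(x, 3.0 * x) for x in (0.5, 1.0, 1.5)],
    [(x, 3.0 * x) for x in (-1.0, 2.0)],
]
w_hat = local_sgd(workers)
print(w_hat)
```

With `local_steps=1` this reduces to fully synchronized parallel SGD; larger values trade communication for potential drift between local models, which is the quantity the abstract says must be controlled.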

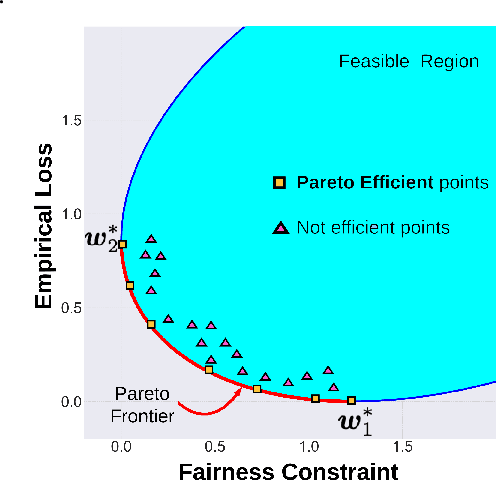

Pareto Efficient Fairness in Supervised Learning: From Extraction to Tracing

Apr 04, 2021 · Mohammad Mahdi Kamani, Rana Forsati, James Z. Wang, Mehrdad Mahdavi

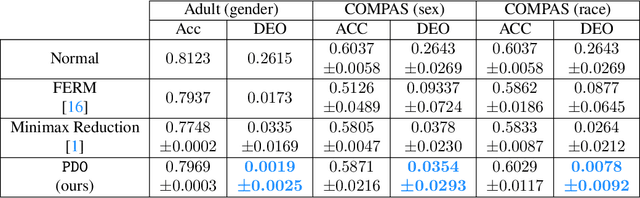

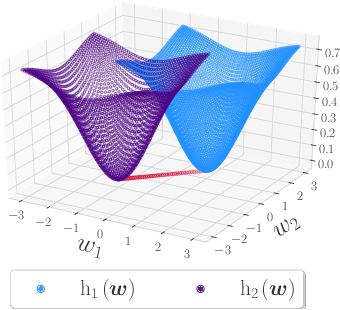

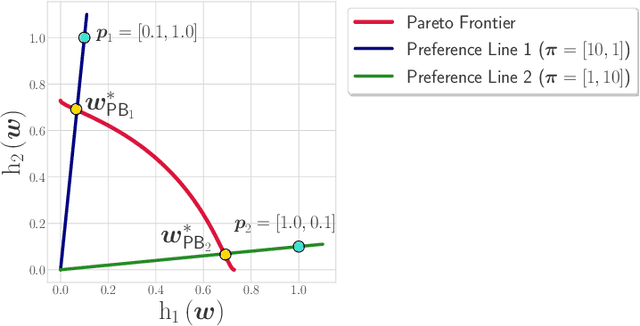

As algorithmic decision-making systems are becoming more pervasive, it is crucial to ensure such systems do not become mechanisms of unfair discrimination on the basis of gender, race, ethnicity, religion, etc. Moreover, due to the inherent trade-off between fairness measures and accuracy, it is desirable to learn fairness-enhanced models without significantly compromising the accuracy. In this paper, we propose Pareto efficient Fairness (PEF) as a suitable fairness notion for supervised learning that can ensure the optimal trade-off between overall loss and other fairness criteria. The proposed PEF notion is definition-agnostic, meaning that any well-defined notion of fairness can be reduced to the PEF notion. To efficiently find a PEF classifier, we cast fairness-enhanced classification as a bilevel optimization problem and propose a gradient-based method that provably returns a solution on the Pareto frontier for both convex and non-convex objectives. We also generalize the proposed algorithmic solution to extract and trace arbitrary solutions from the Pareto frontier for a given preference over accuracy and fairness measures. This approach is generic and can be generalized to any multicriteria optimization problem to trace points on the Pareto frontier curve, which is interesting in its own right. We empirically demonstrate the effectiveness of the PEF solution and the extracted Pareto frontier on real-world datasets compared to state-of-the-art methods.

On the Importance of Sampling in Learning Graph Convolutional Networks

Mar 03, 2021 · Weilin Cong, Morteza Ramezani, Mehrdad Mahdavi

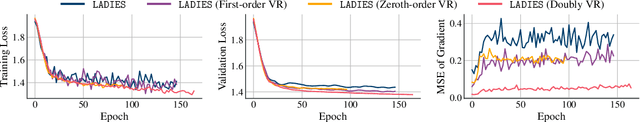

Graph Convolutional Networks (GCNs) have achieved impressive empirical advancement across a wide variety of graph-related applications. Despite their great success, training GCNs on large graphs suffers from computational and memory issues. A potential path to circumvent these obstacles is sampling-based methods, where at each layer a subset of nodes is sampled. Although recent studies have empirically demonstrated the effectiveness of sampling-based methods, these works lack theoretical convergence guarantees under realistic settings and cannot fully leverage the information of evolving parameters during optimization. In this paper, we describe and analyze a general ***doubly variance reduction*** schema that can accelerate any sampling method under the memory budget. The motivation for the proposed schema is a careful analysis of the variance of sampling methods, which shows that the induced variance can be decomposed into node embedding approximation variance (*zeroth-order variance*) during forward propagation and layerwise-gradient variance (*first-order variance*) during backward propagation. We theoretically analyze the convergence of the proposed schema and show that it enjoys an $\mathcal{O}(1/T)$ convergence rate. We complement our theoretical results by integrating the proposed schema in different sampling methods and applying them to different large real-world graphs. Code is publicly available at https://github.com/CongWeilin/SGCN.git.

Local Stochastic Gradient Descent Ascent: Convergence Analysis and Communication Efficiency

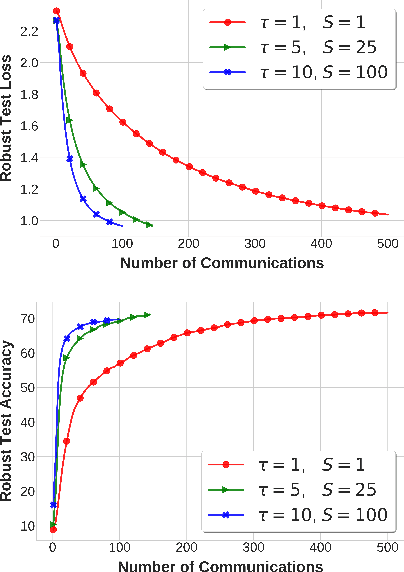

Feb 25, 2021 · Yuyang Deng, Mehrdad Mahdavi

Local SGD is a promising approach to overcome the communication overhead in distributed learning by reducing the synchronization frequency among worker nodes. Despite the recent theoretical advances of local SGD in empirical risk minimization, the efficiency of its counterpart in minimax optimization remains unexplored. Motivated by large-scale minimax learning problems, such as adversarially robust learning and training generative adversarial networks (GANs), we propose local Stochastic Gradient Descent Ascent (local SGDA), where the primal and dual variables can be trained locally and averaged periodically to significantly reduce the number of communications. We show that local SGDA can provably optimize distributed minimax problems on both homogeneous and heterogeneous data with a reduced number of communications, and we establish convergence rates under strongly-convex-strongly-concave and nonconvex-strongly-concave settings. In addition, we propose a novel variant, local SGDA+, to solve nonconvex-nonconcave problems. We give corroborating empirical evidence on different distributed minimax problems.
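The idea of training primal and dual variables locally and averaging them periodically can be sketched as below. This is a minimal illustration under assumed choices, not the paper's algorithm or analysis: the per-worker objective f_i(x, y) = 0.5(x - a_i)^2 + xy - 0.5(y - c_i)^2 (strongly convex in x, strongly concave in y), the Gaussian gradient noise, and the name `local_sgda` with its parameters are all hypothetical.

```python
import random


def local_sgda(params, rounds=40, local_steps=5, lr=0.1, noise_std=0.01, seed=0):
    """Local stochastic gradient descent ascent with periodic averaging.

    params: list of (a_i, c_i) pairs, one per worker, defining the toy
    objective f_i(x, y) = 0.5*(x - a_i)**2 + x*y - 0.5*(y - c_i)**2.
    Each worker takes `local_steps` descent steps in x and ascent steps
    in y, then both variables are averaged across workers.
    """
    rng = random.Random(seed)
    x_avg, y_avg = 0.0, 0.0
    for _ in range(rounds):
        xs, ys = [], []
        for a, c in params:
            x, y = x_avg, y_avg  # start from the averaged primal/dual pair
            for _ in range(local_steps):
                # Noisy partial derivatives of the local objective.
                gx = (x - a) + y + rng.gauss(0.0, noise_std)
                gy = x - (y - c) + rng.gauss(0.0, noise_std)
                x, y = x - lr * gx, y + lr * gy  # descend in x, ascend in y
            xs.append(x)
            ys.append(y)
        # Periodic averaging of both variables (one communication round).
        x_avg = sum(xs) / len(xs)
        y_avg = sum(ys) / len(ys)
    return x_avg, y_avg


# Heterogeneous toy workers: different (a_i, c_i) on each machine.
x_hat, y_hat = local_sgda([(1.0, 0.0), (3.0, 2.0)])
print(x_hat, y_hat)
```

For this toy problem the saddle point of the averaged objective is x = (ā - c̄)/2 and y = (ā + c̄)/2, where ā and c̄ are the means of a_i and c_i, so the example above should land near (0.5, 1.5) up to the injected noise.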
