Lionel Z. Wang

How Implicit Bias Accumulates and Propagates in LLM Long-term Memory

Feb 02, 2026

Abstract:Long-term memory mechanisms enable Large Language Models (LLMs) to maintain continuity and personalization across extended interaction lifecycles, but they also introduce new and underexplored risks related to fairness. In this work, we study how implicit bias, defined as subtle statistical prejudice, accumulates and propagates within LLMs equipped with long-term memory. To support systematic analysis, we introduce the Decision-based Implicit Bias (DIB) Benchmark, a large-scale dataset comprising 3,776 decision-making scenarios across nine social domains, designed to quantify implicit bias in long-term decision processes. Using a realistic long-horizon simulation framework, we evaluate six state-of-the-art LLMs integrated with three representative memory architectures on DIB and demonstrate that LLMs' implicit bias does not remain static but intensifies over time and propagates across unrelated domains. We further analyze mitigation strategies and show that a static system-level prompting baseline provides limited and short-lived debiasing effects. To address this limitation, we propose Dynamic Memory Tagging (DMT), an agentic intervention that enforces fairness constraints at memory write time. Extensive experimental results show that DMT substantially reduces bias accumulation and effectively curtails cross-domain bias propagation.

AppellateGen: A Benchmark for Appellate Legal Judgment Generation

Jan 08, 2026

Abstract:Legal judgment generation is a critical task in legal intelligence. However, existing research in legal judgment generation has predominantly focused on first-instance trials, relying on static fact-to-verdict mappings while neglecting the dialectical nature of appellate (second-instance) review. To address this, we introduce AppellateGen, a benchmark for second-instance legal judgment generation comprising 7,351 case pairs. The task requires models to draft legally binding judgments by reasoning over the initial verdict and evidentiary updates, thereby modeling the causal dependency between trial stages. We further propose a judicial Standard Operating Procedure (SOP)-based Legal Multi-Agent System (SLMAS) to simulate judicial workflows, which decomposes the generation process into discrete stages of issue identification, retrieval, and drafting. Experimental results indicate that while SLMAS improves logical consistency, the complexity of appellate reasoning remains a substantial challenge for current LLMs. The dataset and code are publicly available at: https://anonymous.4open.science/r/AppellateGen-5763.

DP-MGTD: Privacy-Preserving Machine-Generated Text Detection via Adaptive Differentially Private Entity Sanitization

Jan 08, 2026

Abstract:The deployment of Machine-Generated Text (MGT) detection systems necessitates processing sensitive user data, creating a fundamental conflict between authorship verification and privacy preservation. Standard anonymization techniques often disrupt linguistic fluency, while rigorous Differential Privacy (DP) mechanisms typically degrade the statistical signals required for accurate detection. To resolve this dilemma, we propose DP-MGTD, a framework incorporating an Adaptive Differentially Private Entity Sanitization algorithm. Our approach utilizes a two-stage mechanism that performs noisy frequency estimation and dynamically calibrates privacy budgets, applying Laplace and Exponential mechanisms to numerical and textual entities respectively. Crucially, we identify a counter-intuitive phenomenon where the application of DP noise amplifies the distinguishability between human and machine text by exposing distinct sensitivity patterns to perturbation. Extensive experiments on the MGTBench-2.0 dataset show that our method achieves near-perfect detection accuracy, significantly outperforming non-private baselines while satisfying strict privacy guarantees.
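The two standard DP primitives named in this abstract can be illustrated with a minimal sketch. This is not the paper's algorithm: the function names, the utility function, and the sensitivity values are hypothetical, and the noisy frequency estimation and adaptive budget calibration stages are not reproduced here.

```python
import math
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def privatize_number(value: float, sensitivity: float, epsilon: float,
                     rng: random.Random) -> float:
    """Laplace mechanism: add noise with scale sensitivity / epsilon."""
    return value + laplace_noise(sensitivity / epsilon, rng)


def privatize_text(candidates, utility, sensitivity: float, epsilon: float,
                   rng: random.Random):
    """Exponential mechanism: pick a replacement with probability
    proportional to exp(epsilon * utility / (2 * sensitivity))."""
    scores = [utility(c) for c in candidates]
    top = max(scores)  # shift scores so the largest exponent is 0 (avoids overflow)
    weights = [math.exp(epsilon * (s - top) / (2.0 * sensitivity)) for s in scores]
    return rng.choices(candidates, weights=weights, k=1)[0]
```

A smaller `epsilon` means more noise for numbers and a flatter choice distribution for text; a larger `epsilon` concentrates the choice on the highest-utility candidate.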

SugarTextNet: A Transformer-Based Framework for Detecting Sugar Dating-Related Content on Social Media with Context-Aware Focal Loss

Nov 11, 2025

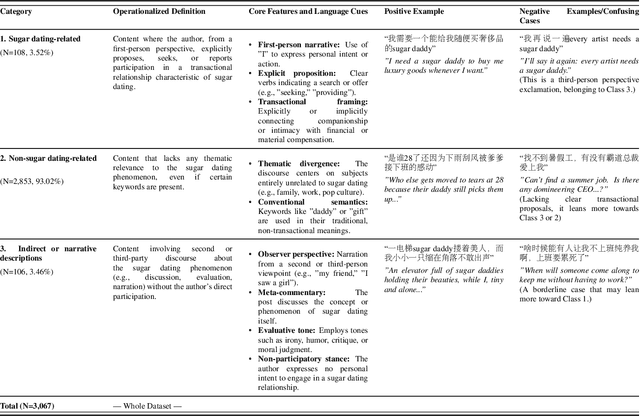

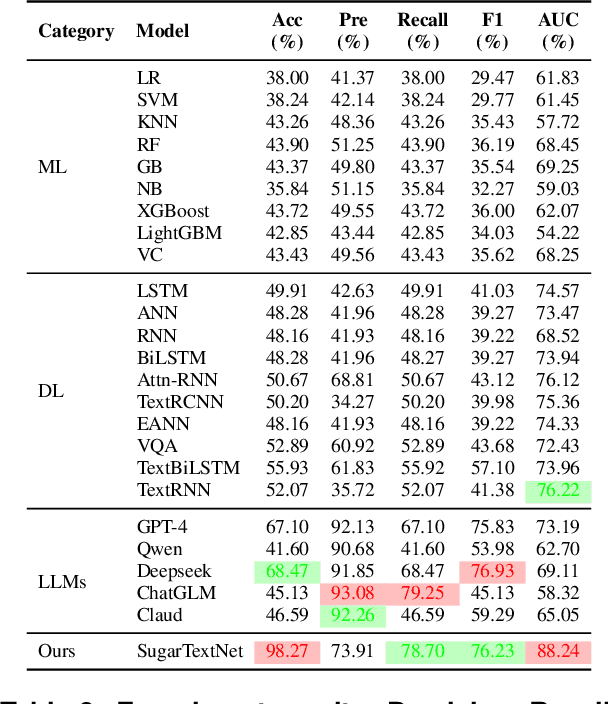

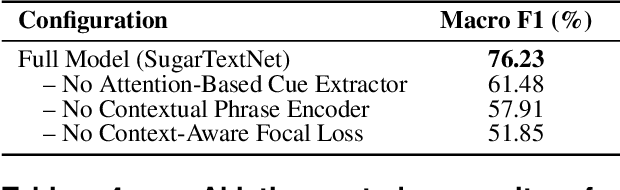

Abstract:Sugar dating-related content has rapidly proliferated on mainstream social media platforms, giving rise to serious societal and regulatory concerns, including commercialization of intimate relationships and the normalization of transactional relationships. Detecting such content is highly challenging due to the prevalence of subtle euphemisms, ambiguous linguistic cues, and extreme class imbalance in real-world data. In this work, we present SugarTextNet, a novel transformer-based framework specifically designed to identify sugar dating-related posts on social media. SugarTextNet integrates a pretrained transformer encoder, an attention-based cue extractor, and a contextual phrase encoder to capture both salient and nuanced features in user-generated text. To address class imbalance and enhance minority-class detection, we introduce Context-Aware Focal Loss, a tailored loss function that combines focal loss scaling with contextual weighting. We evaluate SugarTextNet on a newly curated, manually annotated dataset of 3,067 Chinese social media posts from Sina Weibo, demonstrating that our approach substantially outperforms traditional machine learning models, deep learning baselines, and large language models across multiple metrics. Comprehensive ablation studies confirm the indispensable role of each component. Our findings highlight the importance of domain-specific, context-aware modeling for sensitive content detection, and provide a robust solution for content moderation in complex, real-world scenarios.
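For readers unfamiliar with the focal loss scaling mentioned in this abstract, the following is a minimal sketch of the standard binary focal loss only. The contextual weighting that makes the paper's loss "Context-Aware" is not specified in the abstract and is not reproduced; the function name and the default `gamma` and `alpha` values are assumptions for illustration.

```python
import math


def focal_loss(p: float, y: int, gamma: float = 2.0, alpha: float = 0.25) -> float:
    """Standard binary focal loss for one example.

    p is the predicted probability of the positive class, y is the label (0 or 1).
    """
    p_t = p if y == 1 else 1.0 - p                # probability assigned to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha    # class-balancing weight
    p_t = min(max(p_t, 1e-12), 1.0)               # clamp to keep log() finite
    # (1 - p_t) ** gamma shrinks the loss of well-classified examples
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
```

With `gamma = 0` the modulating factor disappears and the expression reduces to class-weighted cross-entropy; larger `gamma` shifts the training signal toward hard, misclassified examples, which is why this family of losses is commonly used under class imbalance.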

Sub-Clustering for Class Distance Recalculation in Long-Tailed Drug Classification

Apr 07, 2025

Abstract:In the real world, long-tailed data distributions are prevalent, making it challenging for models to effectively learn and classify tail classes. However, we discover that in the field of drug chemistry, certain tail classes exhibit higher identifiability during training due to their unique molecular structural features, a finding that contrasts sharply with the conventional understanding that tail classes are generally difficult to identify. Existing imbalance learning methods, such as resampling and cost-sensitive reweighting, rely too heavily on sample quantity priors, causing models to focus excessively on tail classes at the expense of head class performance. To address this issue, we propose a novel method that breaks away from the traditional static evaluation paradigm based on sample size. Instead, we establish a dynamic inter-class separability metric using feature distances between different classes. Specifically, we employ a sub-clustering contrastive learning approach to thoroughly learn the embedding features of each class, and we dynamically compute the distances between class embeddings to capture the relative positional evolution of samples from different classes in the feature space, thereby rebalancing the weights of the classification loss function. We conducted experiments on multiple existing long-tailed drug datasets and achieved competitive results by improving the accuracy of tail classes without compromising the performance of dominant classes.

JiraiBench: A Bilingual Benchmark for Evaluating Large Language Models' Detection of Human Self-Destructive Behavior Content in Jirai Community

Mar 27, 2025

Abstract:This paper introduces JiraiBench, the first bilingual benchmark for evaluating large language models' effectiveness in detecting self-destructive content across Chinese and Japanese social media communities. Focusing on the transnational "Jirai" (landmine) online subculture that encompasses multiple forms of self-destructive behaviors including drug overdose, eating disorders, and self-harm, we present a comprehensive evaluation framework incorporating both linguistic and cultural dimensions. Our dataset comprises 10,419 Chinese posts and 5,000 Japanese posts with multidimensional annotation along three behavioral categories, achieving substantial inter-annotator agreement. Experimental evaluations across four state-of-the-art models reveal significant performance variations based on instructional language, with Japanese prompts unexpectedly outperforming Chinese prompts when processing Chinese content. This emergent cross-cultural transfer suggests that cultural proximity can sometimes outweigh linguistic similarity in detection tasks. Cross-lingual transfer experiments with fine-tuned models further demonstrate the potential for knowledge transfer between these language systems without explicit target language training. These findings highlight the need for culturally-informed approaches to multilingual content moderation and provide empirical evidence for the importance of cultural context in developing more effective detection systems for vulnerable online communities.

MegaFake: A Theory-Driven Dataset of Fake News Generated by Large Language Models

Aug 19, 2024

Abstract:The advent of large language models (LLMs) has revolutionized online content creation, making it much easier to generate high-quality fake news. This misuse threatens the integrity of our digital environment and ethical standards. Therefore, understanding the motivations and mechanisms behind LLM-generated fake news is crucial. In this study, we analyze the creation of fake news from a social psychology perspective and develop a comprehensive LLM-based theoretical framework, LLM-Fake Theory. We introduce a novel pipeline that automates the generation of fake news using LLMs, thereby eliminating the need for manual annotation. Utilizing this pipeline, we create a theoretically informed Machine-generated Fake news dataset, MegaFake, derived from the GossipCop dataset. We conduct comprehensive analyses to evaluate our MegaFake dataset. We believe that our dataset and insights will provide valuable contributions to future research focused on the detection and governance of fake news in the era of LLMs.

EllipBench: A Large-scale Benchmark for Machine-learning based Ellipsometry Modeling

Jul 25, 2024

Abstract:Ellipsometry is used to indirectly measure the optical properties and thickness of thin films. However, solving the inverse problem of ellipsometry is time-consuming because it requires human expertise to apply data-fitting techniques. Many studies use traditional machine learning-based methods to model the complex mathematical fitting process. In our work, we approach this problem from a deep learning perspective. First, we introduce a large-scale benchmark dataset to facilitate deep learning methods. The proposed dataset encompasses 98 types of thin film materials and 4 types of substrate materials, including metals, alloys, compounds, and polymers, among others. Additionally, we propose a deep learning framework that leverages residual connections and self-attention mechanisms to learn from the large number of data points. We also introduce a reconstruction loss to address the common challenge of multiple solutions in thin film thickness prediction. Compared to traditional machine learning methods, our framework achieves state-of-the-art (SOTA) performance on our proposed dataset. The dataset and code will be available upon acceptance.
