Liang Lin

Aerial Images Meet Crowdsourced Trajectories: A New Approach to Robust Road Extraction

Nov 30, 2021

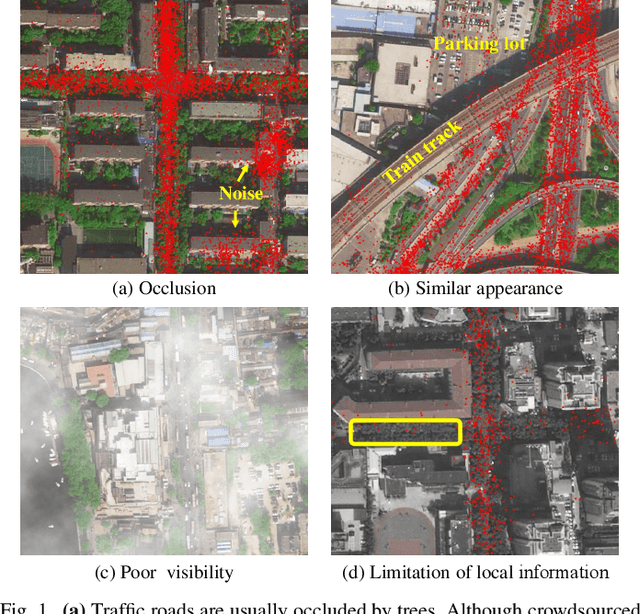

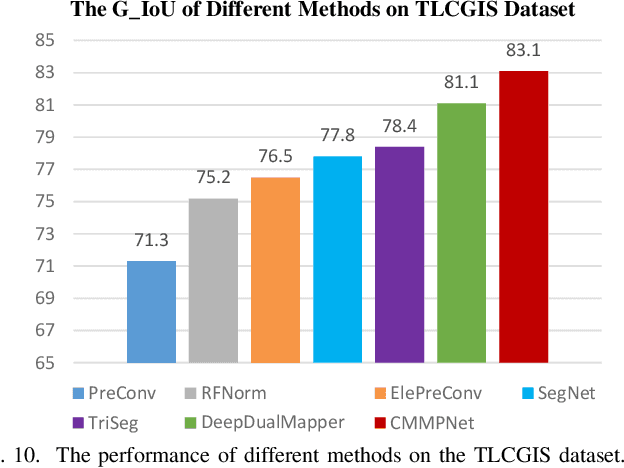

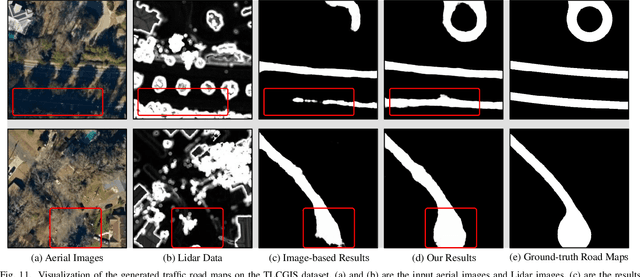

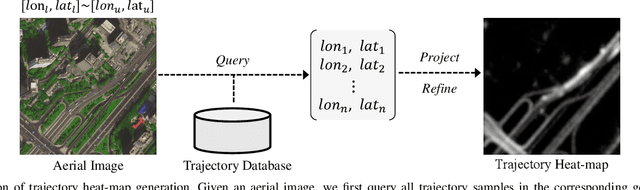

Abstract: Land remote sensing analysis is a crucial research area in earth science. In this work, we focus on a challenging land analysis task, i.e., automatic extraction of traffic roads from remote sensing data, which has widespread applications in urban development and expansion estimation. However, conventional methods either use only the limited information in aerial images or fuse multimodal information (e.g., vehicle trajectories) in a simple manner, and thus cannot reliably recognize unconstrained roads. To address this problem, we introduce a novel neural network framework termed Cross-Modal Message Propagation Network (CMMPNet), which fully exploits the complementary data of different modalities (i.e., aerial images and crowdsourced trajectories). Specifically, CMMPNet is composed of two deep Auto-Encoders for modality-specific representation learning and a tailor-designed Dual Enhancement Module for cross-modal representation refinement. In particular, the complementary information of each modality is comprehensively extracted and dynamically propagated to enhance the representation of the other modality. Extensive experiments on three real-world benchmarks demonstrate the effectiveness of CMMPNet for robust road extraction, which benefits from blending data of different modalities, using either image and trajectory data or image and Lidar data. The experimental results show that the proposed approach outperforms current state-of-the-art methods by large margins.

Enhancing Prototypical Few-Shot Learning by Leveraging the Local-Level Strategy

Nov 08, 2021

Abstract: Few-shot learning (FSL), which aims to recognize samples from novel categories given only a few reference samples, is a challenging problem. We found that existing works often build their few-shot models on image-level features obtained by mixing all local-level features, which leads to discriminative location bias and loss of information in local details. To tackle this problem, this paper returns to the local-level feature and proposes a series of local-level strategies. Specifically, we present (a) a local-agnostic training strategy to avoid the discriminative location bias between the base and novel categories, (b) a novel local-level similarity measure to capture the accurate comparison between local-level features, and (c) a local-level knowledge transfer that can synthesize different knowledge transfers from the base category according to different location features. Extensive experiments show that the proposed local-level strategies significantly boost performance, achieving 2.8%-7.2% improvements over the baseline across different benchmark datasets and reaching state-of-the-art accuracy.
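To make the idea of comparing local-level features concrete, here is a minimal sketch in plain Python. It is not the similarity measure proposed in the paper, whose details the abstract does not give. It assumes each image is represented as a list of per-location feature vectors and scores a query against a class by averaging, over query locations, the best cosine match among that class's support locations. The function names and the toy data are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def local_similarity(query_locals, support_locals):
    """Average, over query locations, of the best match among support locations.

    Assumption: this best-match rule is only an illustration of a local-level
    comparison, not the measure defined in the paper.
    """
    best = [max(cosine(q, s) for s in support_locals) for q in query_locals]
    return sum(best) / len(best)

def classify(query_locals, support_set):
    """Return the class whose support local features best match the query."""
    return max(support_set, key=lambda c: local_similarity(query_locals, support_set[c]))

# Toy 2-D local features: each image is a list of per-location vectors.
support_set = {
    "cat": [[1.0, 0.0], [0.9, 0.1]],
    "dog": [[0.0, 1.0], [0.1, 0.9]],
}
query = [[0.8, 0.2], [1.0, 0.1]]
predicted = classify(query, support_set)
```

Because no pooling into a single image-level vector takes place, each query location is matched on its own, which is the property the abstract contrasts with image-level features.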

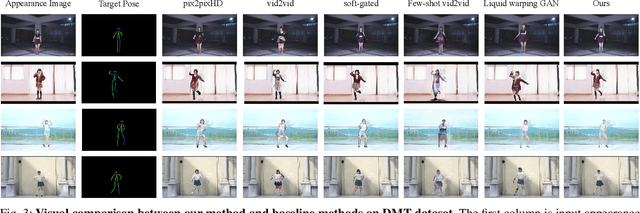

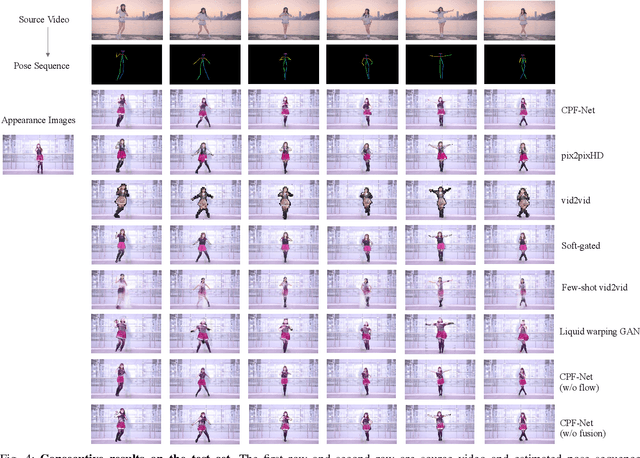

Image Comes Dancing with Collaborative Parsing-Flow Video Synthesis

Oct 28, 2021

Abstract: Transferring human motion from a source to a target person has great potential in computer vision and graphics applications. A crucial step is to manipulate sequential future motion while retaining the appearance characteristics. Previous work has either relied on crafted 3D human models or trained a separate model specifically for each target person, which is not scalable in practice. This work studies a more general setting, in which we aim to learn a single model, named Collaborative Parsing-Flow Network (CPF-Net), to parsimoniously transfer motion from a source video to any target person given only one image of the person. The paucity of information regarding the target person makes it particularly challenging to faithfully preserve the appearance in varying designated poses. To address this issue, CPF-Net integrates structured human parsing and appearance flow to guide realistic foreground synthesis, and the foreground is merged into the background by a spatio-temporal fusion module. In particular, CPF-Net decouples the problem into stages of human parsing sequence generation, foreground sequence generation and final video generation. The human parsing generation stage captures both the pose and the body structure of the target. The appearance flow helps to keep details in synthesized frames. The integration of human parsing and appearance flow effectively guides the generation of video frames with realistic appearance. Finally, the specially designed fusion network ensures temporal coherence. We further collect a large set of human dancing videos to push forward this research field. Both quantitative and qualitative results show that our method substantially improves over previous approaches and is able to generate appealing and photo-realistic target videos given any input person image. All source code and the dataset will be released at https://github.com/xiezhy6/CPF-Net.

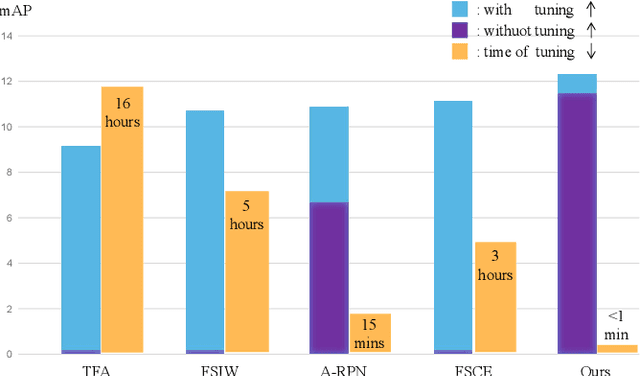

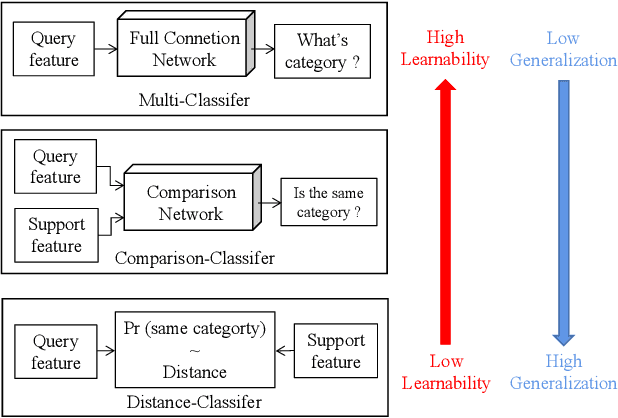

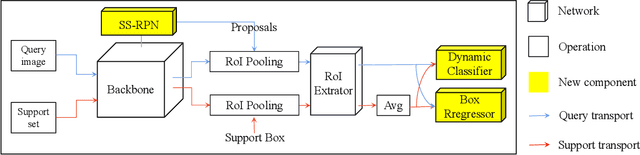

Plug-and-Play Few-shot Object Detection with Meta Strategy and Explicit Localization Inference

Oct 26, 2021

Abstract: Few-shot object detection, which aims to recognize and localize objects of novel categories from a few reference samples, is a challenging task. Previous works often depend on fine-tuning to transfer their models to the novel categories and rarely consider the defects of fine-tuning, resulting in many drawbacks. For example, these methods are far from satisfactory in low-shot or episode-based scenarios, since fine-tuning in object detection requires much time and high-shot support data. To this end, this paper proposes a plug-and-play few-shot object detection (PnP-FSOD) framework that can accurately and directly detect objects of novel categories without fine-tuning. To accomplish this objective, the PnP-FSOD framework contains two parallel techniques to address the core challenges in few-shot learning, i.e., the across-category task and few-annotation support. Concretely, we first propose two simple but effective meta strategies for the box classifier and RPN module to enable across-category object detection without fine-tuning. Then, we introduce two explicit inferences into the localization process to reduce its dependence on annotated data, namely explicit localization score and semi-explicit box regression. In addition to the PnP-FSOD framework, we propose a novel one-step tuning method that can avoid the defects of fine-tuning. It is noteworthy that the proposed techniques and tuning method are based on the general object detector without other prior methods, so they are easily compatible with existing FSOD methods. Extensive experiments show that the PnP-FSOD framework achieves state-of-the-art few-shot object detection performance without any tuning method. After applying the one-step tuning method, it further shows a significant lead in efficiency, precision, and recall under varied evaluation protocols.

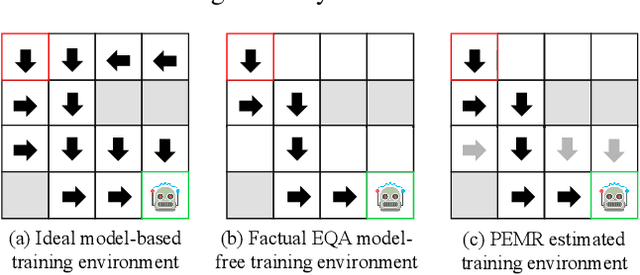

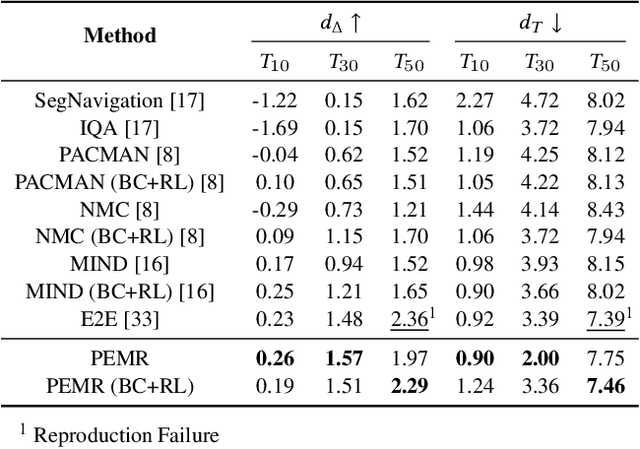

Explore before Moving: A Feasible Path Estimation and Memory Recalling Framework for Embodied Navigation

Oct 16, 2021

Abstract: An embodied task such as embodied question answering (EmbodiedQA) requires an agent to explore the environment and collect clues to answer a given question related to specific objects in the scene. The solution to such a task usually includes two stages, a navigator and a visual Q&A module. In this paper, we focus on navigation and address the lack of experience and common sense in existing navigation algorithms, which essentially results in failure to find the target when the robot is spawned in unknown environments. Inspired by the human ability to think twice before moving and conceive several feasible paths to seek a goal in unfamiliar scenes, we present a route planning method named the Path Estimation and Memory Recalling (PEMR) framework. PEMR includes a "looking ahead" process, i.e., a visual feature extractor module that estimates feasible paths for gathering 3D navigational information, mimicking the human sense of direction. PEMR also contains a "looking behind" process, a memory recall mechanism that aims at fully leveraging past experience collected by the feature extractor. Last but not least, to encourage the navigator to learn more accurate prior expert experience, we improve the original benchmark dataset and provide a family of evaluation metrics for diagnosing both navigation and question answering modules. We show strong experimental results of PEMR on the EmbodiedQA navigation task.

Wav-BERT: Cooperative Acoustic and Linguistic Representation Learning for Low-Resource Speech Recognition

Oct 09, 2021

Abstract: Unifying acoustic and linguistic representation learning has become increasingly crucial for transferring the knowledge learned from abundant high-resource language data to low-resource speech recognition. Existing approaches simply cascade pre-trained acoustic and language models to learn the transfer from speech to text. However, how to resolve the representation discrepancy between speech and text remains unexplored, which hinders the utilization of acoustic and linguistic information. Moreover, previous works simply replace the embedding layer of the pre-trained language model with the acoustic features, which may cause catastrophic forgetting. In this work, we introduce Wav-BERT, a cooperative acoustic and linguistic representation learning method to fuse and utilize the contextual information of speech and text. Specifically, we unify a pre-trained acoustic model (wav2vec 2.0) and a language model (BERT) into an end-to-end trainable framework. A Representation Aggregation Module is designed to aggregate acoustic and linguistic representations, and an Embedding Attention Module is introduced to incorporate acoustic information into BERT, which effectively facilitates the cooperation of the two pre-trained models and thus boosts representation learning. Extensive experiments show that Wav-BERT significantly outperforms existing approaches and achieves state-of-the-art performance on low-resource speech recognition.

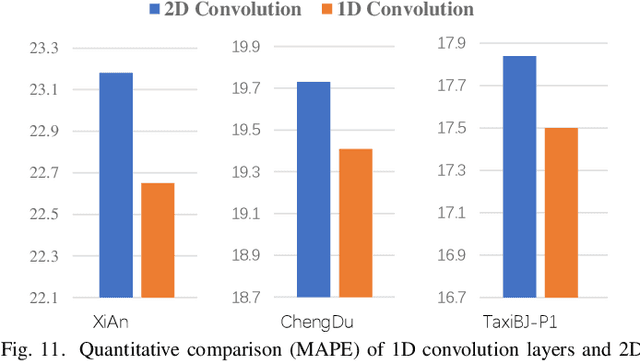

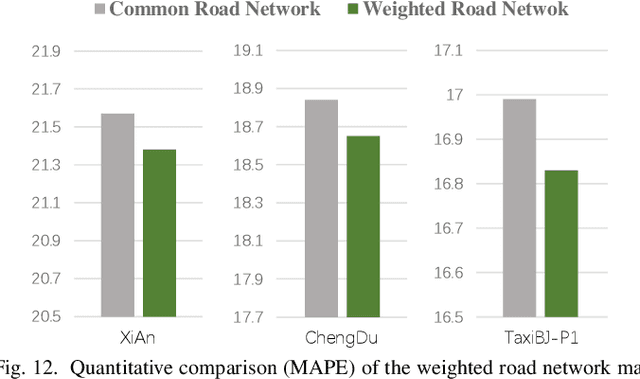

Road Network Guided Fine-Grained Urban Traffic Flow Inference

Sep 29, 2021

Abstract: Accurate inference of fine-grained traffic flow from coarse-grained traffic flow is an emerging yet crucial problem, which can help greatly reduce the number of traffic monitoring sensors for cost savings. In this work, we notice that traffic flow is highly correlated with the road network, which was either completely ignored or simply treated as an external factor in previous works. To address this problem, we propose a novel Road-Aware Traffic Flow Magnifier (RATFM) that explicitly exploits the prior knowledge of road networks to fully learn the road-aware spatial distribution of fine-grained traffic flow. Specifically, a multi-directional 1D convolutional layer is first introduced to extract the semantic feature of the road network. Subsequently, we incorporate the road network feature and coarse-grained flow feature to regularize the short-range spatial distribution modeling of road-relative traffic flow. Furthermore, we take the road network feature as a query to capture the long-range spatial distribution of traffic flow with a transformer architecture. Benefiting from the road-aware inference mechanism, our method can generate high-quality fine-grained traffic flow maps. Extensive experiments on three real-world datasets show that the proposed RATFM outperforms state-of-the-art models under various scenarios.

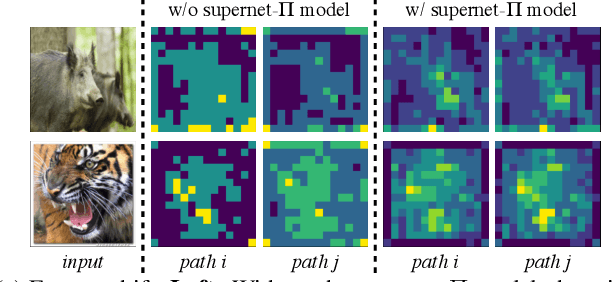

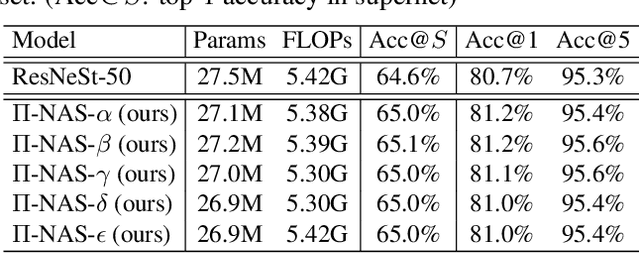

Pi-NAS: Improving Neural Architecture Search by Reducing Supernet Training Consistency Shift

Aug 22, 2021

Abstract: Recently proposed neural architecture search (NAS) methods co-train billions of architectures in a supernet and estimate their potential accuracy using the network weights detached from the supernet. However, the ranking correlation between the architectures' predicted accuracy and their actual capability is incorrect, which causes the dilemma of existing NAS methods. We attribute this ranking correlation problem to the supernet training consistency shift, including feature shift and parameter shift. Feature shift is identified as the dynamic input distributions of a hidden layer caused by random path sampling. These changing input distributions affect the loss descent and ultimately the architecture ranking. Parameter shift is identified as contradictory parameter updates for a shared layer that lies in different paths at different training steps. The rapidly changing parameters cannot preserve the architecture ranking. We address these two shifts simultaneously using a nontrivial supernet-Pi model, called Pi-NAS. Specifically, we employ a supernet-Pi model that contains cross-path learning to reduce the feature consistency shift between different paths. Meanwhile, we adopt a novel nontrivial mean teacher containing negative samples to overcome parameter shift and model collision. Furthermore, Pi-NAS runs in an unsupervised manner, which can search for more transferable architectures. Extensive experiments on ImageNet and a wide range of downstream tasks (e.g., COCO 2017, ADE20K, and Cityscapes) demonstrate the effectiveness and universality of Pi-NAS compared to supervised NAS. Code: https://github.com/Ernie1/Pi-NAS.
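The abstract relies on a mean teacher to counter rapidly changing shared parameters. As a minimal sketch of the generic mean-teacher idea only, the teacher's weights can be kept as an exponential moving average (EMA) of the student's weights. The negative samples and cross-path learning that the paper adds are not shown, and the function name, the `decay` value and the toy parameters below are assumptions made for illustration.

```python
def ema_update(teacher, student, decay=0.99):
    """Move each teacher parameter a small step toward the student parameter.

    teacher, student: dicts mapping a parameter name to a float (a stand-in
    for real weight tensors). Returns a new teacher dict.
    """
    return {
        name: decay * teacher[name] + (1.0 - decay) * student[name]
        for name in teacher
    }

# A student whose shared weight flips sign at every step, loosely mimicking
# contradictory updates coming from different sampled paths.
teacher = {"w": 0.0}
student_steps = [{"w": 1.0}, {"w": -1.0}, {"w": 1.0}, {"w": -1.0}]
for student in student_steps:
    teacher = ema_update(teacher, student, decay=0.9)
```

In this toy run the student oscillates between 1.0 and -1.0 while the averaged teacher weight stays close to zero, which illustrates why an averaged model gives a steadier target than the raw weights.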

Trash to Treasure: Harvesting OOD Data with Cross-Modal Matching for Open-Set Semi-Supervised Learning

Aug 12, 2021

Abstract: Open-set semi-supervised learning (open-set SSL) investigates a challenging but practical scenario where out-of-distribution (OOD) samples are contained in the unlabeled data. While the mainstream technique seeks to completely filter out the OOD samples for semi-supervised learning (SSL), we propose a novel training mechanism that can effectively exploit the presence of OOD data for enhanced feature learning while avoiding its adverse impact on SSL. We achieve this goal by first introducing a warm-up training that leverages all the unlabeled data, including both the in-distribution (ID) and OOD samples. Specifically, we perform a pretext task that forces our feature extractor to obtain a high-level semantic understanding of the training images, leading to more discriminative features that can benefit the downstream tasks. Since the OOD samples are inevitably detrimental to SSL, we propose a novel cross-modal matching strategy to detect OOD samples. Instead of directly applying binary classification, we train the network to predict whether the data sample is matched to an assigned one-hot class label. The appeal of the proposed cross-modal matching over binary classification is the ability to generate a compatible feature space that aligns with the core classification task. Extensive experiments show that our approach substantially lifts the performance on open-set SSL and outperforms the state-of-the-art by a large margin.
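The matching formulation described above can be sketched as a data-construction step: each labeled sample is paired once with its own one-hot label (target 1) and once with a different label (target 0), and a network would then be trained to predict the target. The sketch below is an illustration under assumptions, not the paper's implementation. The helper names, the rule of flagging a sample as OOD when no label matches, the threshold, and the toy `match_prob` scorer are all hypothetical.

```python
import random

def one_hot(label, num_classes):
    """One-hot encoding of an integer class label."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]

def make_matching_pairs(samples, labels, num_classes, rng):
    """Build (sample, one_hot_label, target) triples for matching training.

    Each labeled sample yields one matched pair (target 1) and one
    mismatched pair with a randomly chosen wrong label (target 0).
    """
    pairs = []
    for x, y in zip(samples, labels):
        pairs.append((x, one_hot(y, num_classes), 1))
        wrong = rng.choice([c for c in range(num_classes) if c != y])
        pairs.append((x, one_hot(wrong, num_classes), 0))
    return pairs

def is_ood(x, match_prob, num_classes, threshold=0.5):
    """Assumed decision rule: OOD when no class label is predicted to match."""
    best = max(match_prob(x, one_hot(c, num_classes)) for c in range(num_classes))
    return best < threshold

# Toy stand-in for a trained matching network: a dot product between a
# class-score vector and the one-hot label.
def match_prob(x, label_vec):
    return sum(a * b for a, b in zip(x, label_vec))

rng = random.Random(0)
pairs = make_matching_pairs([[0.9, 0.1, 0.0], [0.1, 0.8, 0.1]], [0, 1], 3, rng)
in_dist_flag = is_ood([0.9, 0.1, 0.0], match_prob, 3)
ood_flag = is_ood([0.3, 0.3, 0.3], match_prob, 3)
```

Because the matcher consumes class labels as one of its inputs, its feature space stays tied to the classification task, which is the advantage over a plain ID-versus-OOD binary classifier that the abstract points out.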

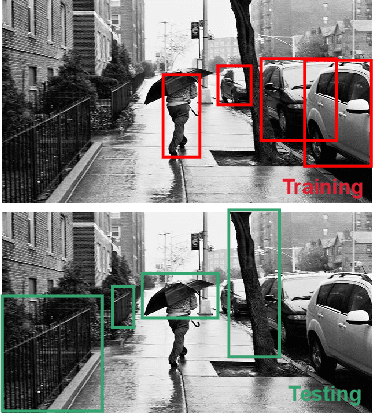

Weakly-Supervised Spatio-Temporal Anomaly Detection in Surveillance Video

Aug 09, 2021

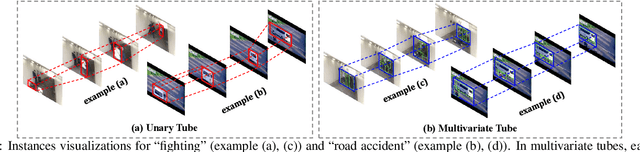

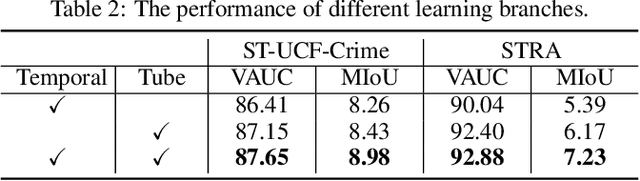

Abstract: In this paper, we introduce a novel task, referred to as Weakly-Supervised Spatio-Temporal Anomaly Detection (WSSTAD) in surveillance video. Specifically, given an untrimmed video, WSSTAD aims to localize a spatio-temporal tube (i.e., a sequence of bounding boxes at consecutive times) that encloses the abnormal event, with only coarse video-level annotations as supervision during training. To address this challenging task, we propose a dual-branch network which takes as input proposals at multiple granularities in both the spatial and temporal domains. Each branch employs a relationship reasoning module to capture the correlation between tubes/videolets, which can provide rich contextual information and complex entity relationships for the concept learning of abnormal behaviors. A Mutually-guided Progressive Refinement framework is set up to employ dual-path mutual guidance in a recurrent manner, iteratively sharing auxiliary supervision information across branches. It impels the learned concepts of each branch to serve as a guide for its counterpart, which progressively refines the corresponding branch and the whole framework. Furthermore, we contribute two datasets, i.e., ST-UCF-Crime and STRA, consisting of videos with spatio-temporal abnormal annotations, to serve as benchmarks for WSSTAD. We conduct extensive qualitative and quantitative evaluations to demonstrate the effectiveness of the proposed approach and analyze the key factors that contribute most to handling this task.
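Since the task output is a spatio-temporal tube, a small sketch of how such a tube can be represented and compared may help. The code below stores a tube as a mapping from frame index to a box `(x1, y1, x2, y2)` and computes one common form of spatio-temporal IoU: per-frame box IoU summed over the frames both tubes cover, divided by the number of frames either tube covers. The abstract does not state which evaluation metric the paper uses, so this metric, the representation and the names are assumptions.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def tube_iou(pred, gt):
    """Spatio-temporal IoU of two tubes stored as {frame_index: box}."""
    shared = pred.keys() & gt.keys()       # frames covered by both tubes
    all_frames = pred.keys() | gt.keys()   # frames covered by either tube
    if not all_frames:
        return 0.0
    return sum(box_iou(pred[t], gt[t]) for t in shared) / len(all_frames)

# Ground truth spans frames 0-2; the prediction spans frames 1-3 and
# covers only the top half of the ground-truth box.
gt_tube = {t: (0.0, 0.0, 10.0, 10.0) for t in range(0, 3)}
pred_tube = {t: (0.0, 0.0, 10.0, 5.0) for t in range(1, 4)}
score = tube_iou(pred_tube, gt_tube)
```

The score penalizes both spatial misalignment (box IoU below 1 on shared frames) and temporal misalignment (frames covered by only one tube add to the denominator but not the numerator).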
