Honghua Chen

GeoGCN: Geometric Dual-domain Graph Convolution Network for Point Cloud Denoising

Oct 28, 2022

Abstract: We propose GeoGCN, a novel geometric dual-domain graph convolution network for point cloud denoising (PCD). Going beyond traditional PCD approaches, and to fully exploit the geometric information of point clouds, we define two kinds of surface normals: the Real Normal (RN) and the Virtual Normal (VN). RN preserves the local details of noisy point clouds, while VN avoids global shape shrinkage during denoising. GeoGCN is a new PCD paradigm that 1) first regresses point positions with a spatial-based GCN with the help of VNs, 2) then estimates initial RNs by performing Principal Component Analysis on the regressed points, and 3) finally regresses fine RNs with a normal-based GCN. Unlike existing PCD methods, GeoGCN not only exploits two kinds of geometric expertise (i.e., RN and VN) but also benefits from training data. Experiments validate that GeoGCN outperforms state-of-the-art methods in terms of both noise robustness and local-and-global feature preservation.
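Step 2 of the GeoGCN abstract relies on Principal Component Analysis to obtain initial normals. The abstract does not give the details, so the following is only a minimal sketch of the textbook PCA normal estimate, assuming NumPy and a hypothetical helper name `pca_normal`; it is not the authors' implementation. The normal of a local patch is taken as the eigenvector of the patch covariance with the smallest eigenvalue.

```python
import numpy as np

def pca_normal(neighbors):
    """Estimate a unit normal for a local patch of 3D points via PCA.

    `neighbors` is an (N, 3) array-like of points around the query point.
    The helper name and interface are illustrative assumptions.
    """
    pts = np.asarray(neighbors, dtype=float)
    centered = pts - pts.mean(axis=0)
    cov = centered.T @ centered / len(pts)
    # eigh returns eigenvalues in ascending order, so column 0 is the
    # direction of least variance, which serves as the normal estimate.
    _, eigvecs = np.linalg.eigh(cov)
    return eigvecs[:, 0]

# A flat patch in the z = 0 plane: the normal should point along +z or -z.
patch = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [1, 1, 0], [0.5, 0.5, 0]])
normal = pca_normal(patch)
```

The sign of a PCA normal is ambiguous, so a real pipeline would still need a consistent orientation step.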

LBF: Learnable Bilateral Filter For Point Cloud Denoising

Oct 28, 2022

Abstract: The bilateral filter (BF) is a fast, lightweight, and effective tool for image denoising, and it has been successfully extended to point cloud denoising. However, it often requires continual manual parameter adjustment; this inconvenience reduces efficiency and hurts the user experience when trying to obtain satisfactory denoising results. We propose LBF, an end-to-end learnable bilateral filtering network for point cloud denoising; to our knowledge, it is the first of its kind. Unlike the conventional BF and its variants, which use the same parameters for a whole point cloud, LBF learns adaptive parameters for each point according to its geometric characteristic (e.g., corner, edge, plane), avoiding remnant noise, wrongly removed geometric details, and distorted shapes. Besides the learnable BF paradigm, LBF has two core components. First, unlike the local BF, LBF possesses a global-scale feature perception ability by exploiting multi-scale patches of each point. Second, LBF formulates a geometry-aware bi-directional projection loss, which keeps the denoising results faithful to their underlying surfaces. Users can apply LBF without any laborious parameter tuning to achieve the optimal denoising results. Experiments show clear improvements of LBF over its competitors on both synthetic and real-scanned datasets.
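For context, the conventional bilateral filter that LBF builds on can be sketched for a single point as below. This is one common formulation with two hand-set Gaussian widths, assuming NumPy and a known unit normal at the point; the function name and parameters (`sigma_s`, `sigma_r`) are illustrative and not taken from the paper. These two widths are the kind of parameters that would otherwise be tuned manually for a whole cloud.

```python
import numpy as np

def bilateral_filter_point(p, n, neighbors, sigma_s, sigma_r):
    """Move point p along its unit normal n by a bilateral-weighted offset.

    sigma_s weights neighbors by spatial distance to p; sigma_r weights
    them by their signed offset along the normal. Both are fixed here.
    """
    p = np.asarray(p, dtype=float)
    n = np.asarray(n, dtype=float)
    diff = np.asarray(neighbors, dtype=float) - p
    dist = np.linalg.norm(diff, axis=1)   # spatial distance to each neighbor
    height = diff @ n                     # signed offset along the normal
    w = np.exp(-dist**2 / (2 * sigma_s**2)) * np.exp(-height**2 / (2 * sigma_r**2))
    total = w.sum()
    if total == 0.0:
        return p                          # no usable neighbors: leave p as is
    return p + n * (w * height).sum() / total

# A noisy point hovering 0.1 above four neighbors that lie in the z = 0 plane.
neighbors = np.array([[1, 0, 0], [-1, 0, 0], [0, 1, 0], [0, -1, 0]], dtype=float)
filtered = bilateral_filter_point([0, 0, 0.1], [0, 0, 1], neighbors,
                                  sigma_s=1.0, sigma_r=0.5)
```

In this toy case every neighbor sits at the same offset along the normal, so the point is pulled back onto the plane regardless of the two widths.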

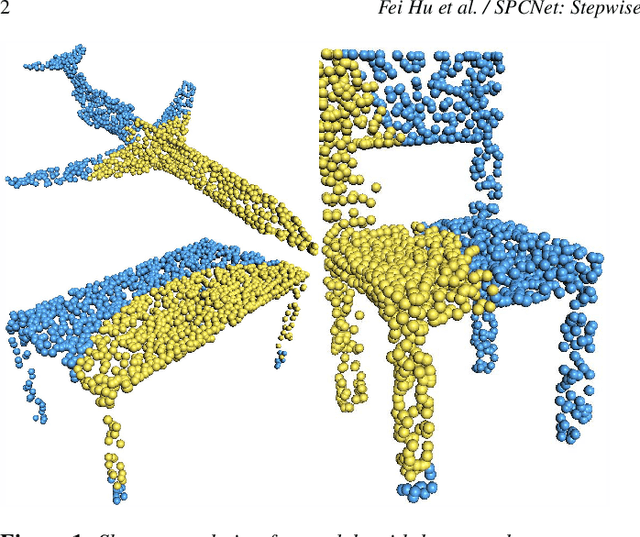

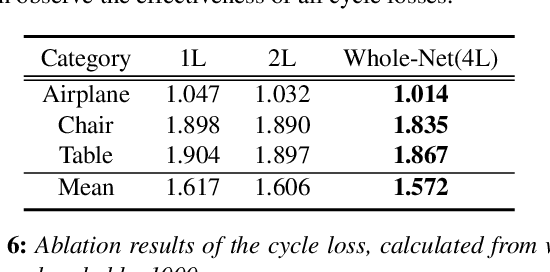

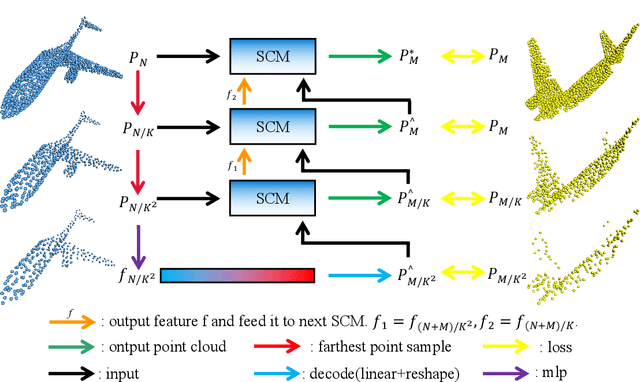

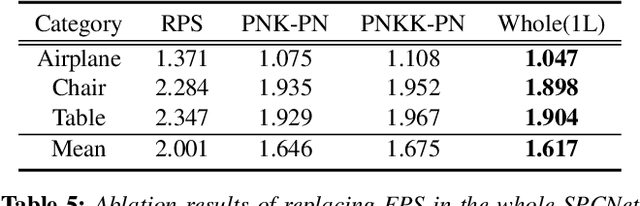

SPCNet: Stepwise Point Cloud Completion Network

Sep 05, 2022

Abstract: How would you repair a physical object with large missing regions? You might first recover its global yet coarse shape and then add local details step by step. We are motivated to imitate this physical repair procedure to address the point cloud completion task. We propose a novel stepwise point cloud completion network (SPCNet) for various 3D models with large missing regions. SPCNet has a hierarchical bottom-up network architecture. It fulfills shape completion in an iterative manner, which 1) first infers the global feature of the coarse result; 2) then infers the local feature with the aid of the global feature; and 3) finally infers the detailed result with the help of the local feature and the coarse result. Beyond simulating the physical repair procedure, we design a new cycle-loss-based training strategy to enhance the generalization and robustness of SPCNet. Extensive experiments clearly show the superiority of SPCNet over state-of-the-art methods on 3D point clouds with large missing regions.

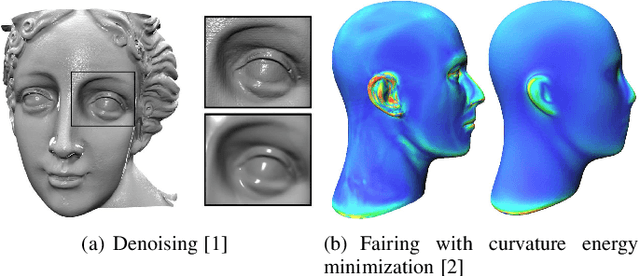

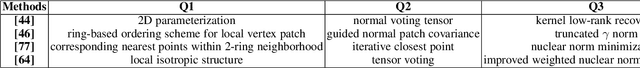

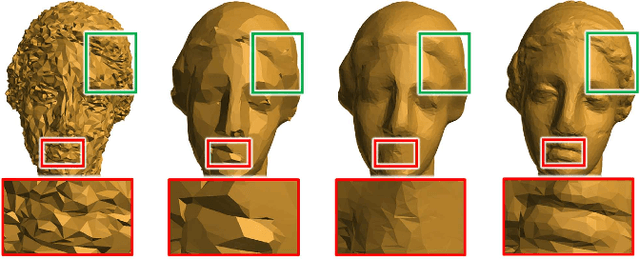

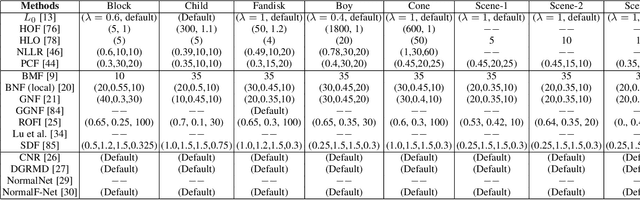

Geometric and Learning-based Mesh Denoising: A Comprehensive Survey

Sep 02, 2022

Abstract: Mesh denoising is a fundamental problem in digital geometry processing. It seeks to remove surface noise while preserving intrinsic surface signals as accurately as possible. While traditional approaches have been built upon specialized priors to smooth surfaces, learning-based approaches are making their debut with great success in generalization and automation. In this work, we provide a comprehensive review of the advances in mesh denoising, covering both traditional geometric approaches and recent learning-based methods. First, to familiarize readers with the denoising tasks, we summarize four common issues in mesh denoising. We then provide two categorizations of the existing denoising methods. Furthermore, three important categories, namely optimization-based, filter-based, and data-driven techniques, are introduced and analyzed in detail. Both qualitative and quantitative comparisons are presented to demonstrate the effectiveness of the state-of-the-art denoising methods. Finally, potential directions of future work are pointed out to solve the common problems of these approaches. A mesh denoising benchmark is also built in this work, so that future researchers can easily and conveniently evaluate their methods against state-of-the-art approaches.

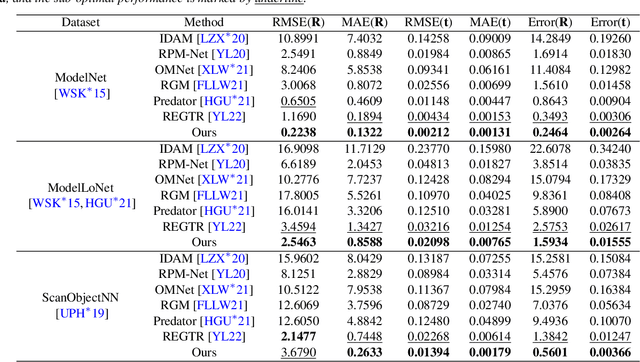

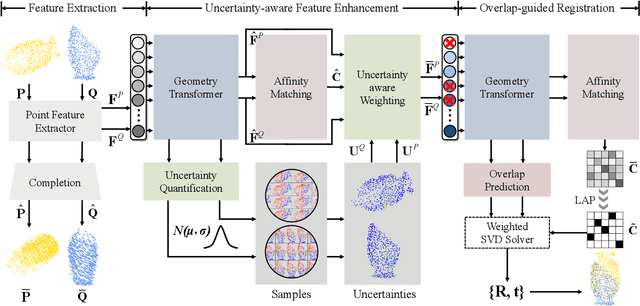

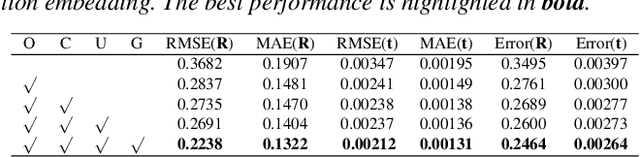

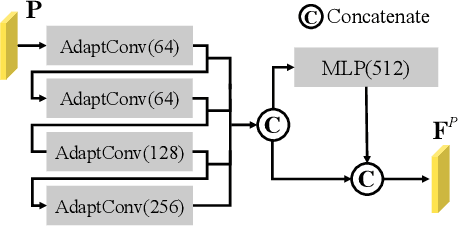

UTOPIC: Uncertainty-aware Overlap Prediction Network for Partial Point Cloud Registration

Aug 12, 2022

Abstract: High-confidence overlap prediction and accurate correspondences are critical for cutting-edge models to align paired point clouds in a partial-to-partial manner. However, there is inherent uncertainty between the overlapping and non-overlapping regions, which has always been neglected and significantly affects registration performance. Going beyond current approaches, we propose a novel uncertainty-aware overlap prediction network, dubbed UTOPIC, to tackle the ambiguous overlap prediction problem; to our knowledge, this is the first work to explicitly introduce overlap uncertainty to point cloud registration. Moreover, we induce the feature extractor to implicitly perceive shape knowledge through a completion decoder, and present a geometric relation embedding for the Transformer to obtain transformation-invariant, geometry-aware feature representations. With more reliable overlap scores and more precise dense correspondences, UTOPIC can achieve stable and accurate registration results, even for inputs with limited overlapping areas. Extensive quantitative and qualitative experiments on synthetic and real benchmarks demonstrate the superiority of our approach over state-of-the-art methods.

CSDN: Cross-modal Shape-transfer Dual-refinement Network for Point Cloud Completion

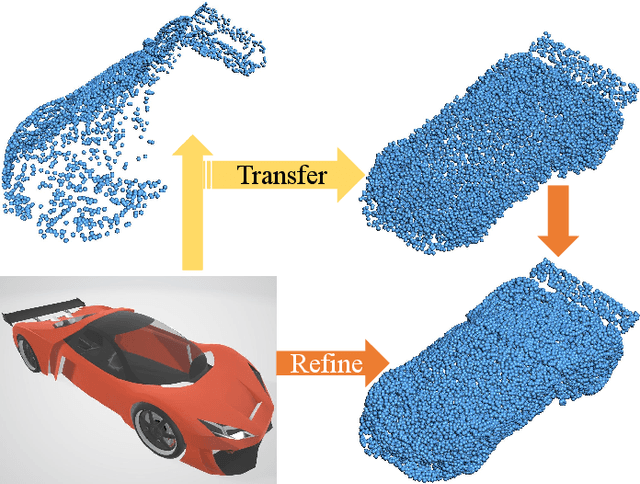

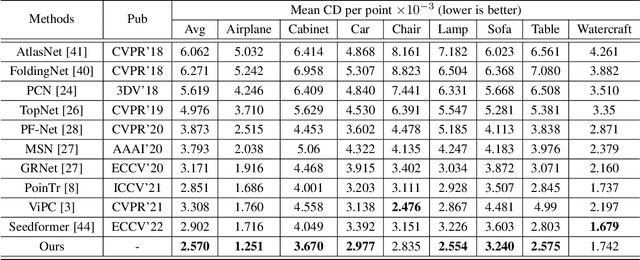

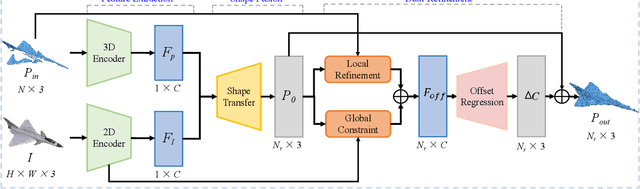

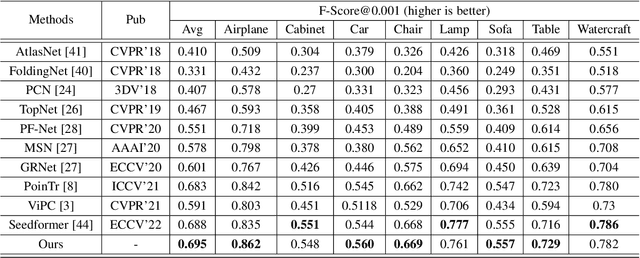

Aug 01, 2022

Abstract: How would you repair a physical object with some missing parts? You might imagine its original shape from previously captured images, recover its overall (global) but coarse shape first, and then refine its local details. We are motivated to imitate this physical repair procedure to address point cloud completion. To this end, we propose a cross-modal shape-transfer dual-refinement network (termed CSDN), a coarse-to-fine paradigm in which images participate throughout the full cycle, for high-quality point cloud completion. CSDN mainly consists of "shape fusion" and "dual-refinement" modules to tackle the cross-modal challenge. The first module transfers the intrinsic shape characteristics from single images to guide the geometry generation of the missing regions of point clouds, in which we propose IPAdaIN to embed the global features of both the image and the partial point cloud into completion. The second module refines the coarse output by adjusting the positions of the generated points, where the local refinement unit exploits the geometric relation between the novel and the input points by graph convolution, and the global constraint unit utilizes the input image to fine-tune the generated offset. Different from most existing approaches, CSDN not only explores the complementary information from images but also effectively exploits cross-modal data in the whole coarse-to-fine completion procedure. Experimental results indicate that CSDN performs favorably against ten competitors on the cross-modal benchmark.

GeoSegNet: Point Cloud Semantic Segmentation via Geometric Encoder-Decoder Modeling

Jul 14, 2022

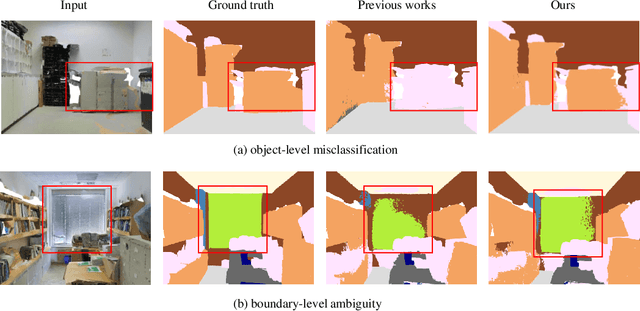

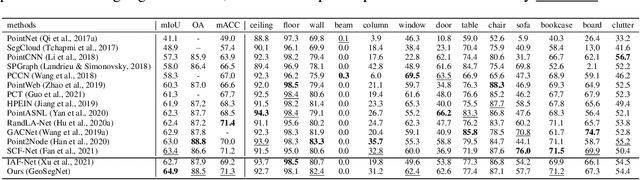

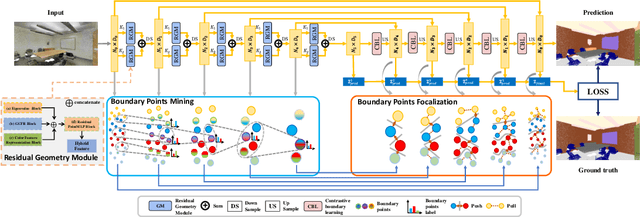

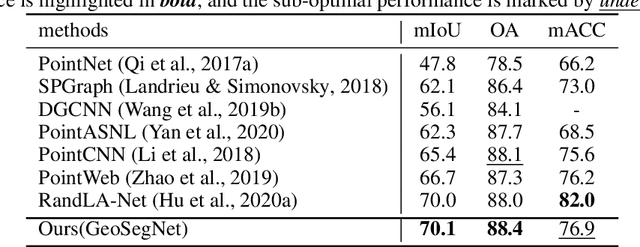

Abstract: Semantic segmentation of point clouds, which aims to assign each point a semantic category, is critical to 3D scene understanding. Despite significant advances in recent years, most existing methods still suffer from either object-level misclassification or boundary-level ambiguity. In this paper, we present a robust semantic segmentation network, dubbed GeoSegNet, that deeply explores the geometry of point clouds. GeoSegNet consists of a multi-geometry-based encoder and a boundary-guided decoder. In the encoder, we develop a new residual geometry module from multi-geometry perspectives to extract object-level features. In the decoder, we introduce a contrastive boundary learning module to enhance the geometric representation of boundary points. Benefiting from the geometric encoder-decoder modeling, GeoSegNet can infer the segmentation of objects effectively while making the intersections (boundaries) of two or more objects clear. Experiments show clear improvements of our method over its competitors in terms of overall segmentation accuracy and object boundary clarity. Code is available at https://github.com/Chen-yuiyui/GeoSegNet.

ImLoveNet: Misaligned Image-supported Registration Network for Low-overlap Point Cloud Pairs

Jul 02, 2022

Abstract: Low-overlap regions between paired point clouds make the captured features very low-confidence, causing cutting-edge models to produce poor-quality point cloud registrations. Going beyond traditional approaches, we raise an intriguing question: Is it possible to exploit an intermediate yet misaligned image between two low-overlap point clouds to enhance the performance of cutting-edge registration models? To answer it, we propose a misaligned image-supported registration network for low-overlap point cloud pairs, dubbed ImLoveNet. ImLoveNet first learns triple deep features across different modalities and then exports these features to a two-stage classifier to progressively obtain the high-confidence overlap region between the two point clouds. Soft correspondences are then well established on the predicted overlap region, resulting in accurate rigid transformations for registration. ImLoveNet is simple to implement yet effective, since 1) the misaligned image provides clearer overlap information for the two low-overlap point clouds to better locate overlap parts; 2) it contains certain geometry knowledge to extract better deep features; and 3) it does not require the extrinsic parameters of the imaging device with respect to the reference frame of the 3D point cloud. Extensive qualitative and quantitative evaluations on different kinds of benchmarks demonstrate the effectiveness and superiority of ImLoveNet over state-of-the-art approaches.
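The ImLoveNet abstract says that soft correspondences on the predicted overlap region yield a rigid transformation, but it does not say which solver is used. A standard choice for this last step is the weighted SVD (Kabsch) solution, sketched below as an assumption rather than as the paper's method; the function name and the use of per-correspondence confidence weights are illustrative.

```python
import numpy as np

def weighted_rigid_transform(src, dst, weights):
    """Find R, t minimizing sum_i w_i * ||R @ src_i + t - dst_i||^2.

    src and dst are (N, 3) arrays of corresponding points; weights are
    non-negative confidences (hypothetical stand-ins for overlap scores).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    src_c = w @ src                       # weighted centroids
    dst_c = w @ dst
    H = (src - src_c).T @ (w[:, None] * (dst - dst_c))
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

# Recover a known rotation about z (0.3 rad) and a known translation.
theta = 0.3
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta),  np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.5, -1.0, 2.0])
src = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]], dtype=float)
dst = src @ R_true.T + t_true
R, t = weighted_rigid_transform(src, dst, np.ones(len(src)))
```

Down-weighting correspondences outside the predicted overlap region reduces their influence on the estimated transformation.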

Semi-MoreGAN: A New Semi-supervised Generative Adversarial Network for Mixture of Rain Removal

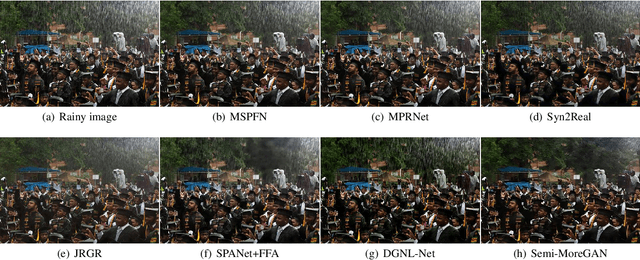

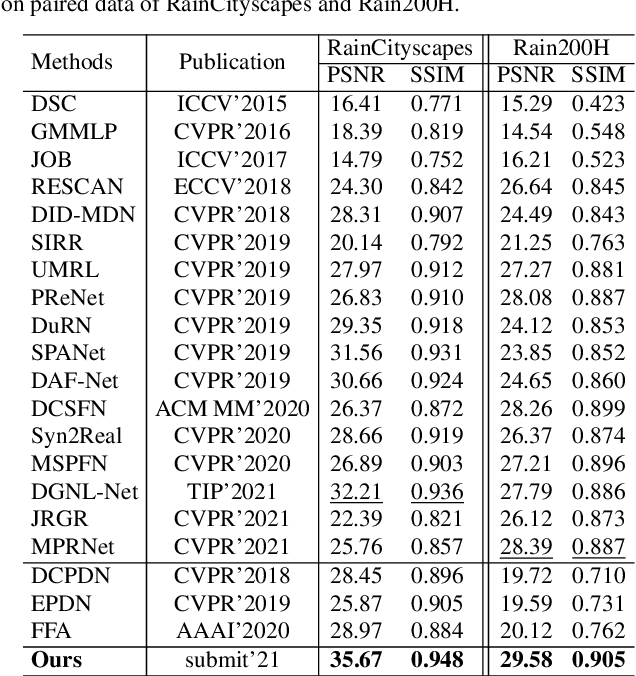

Apr 28, 2022

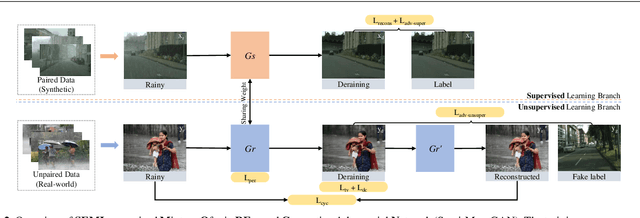

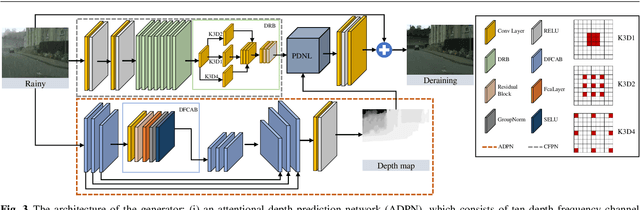

Abstract: Rain is one of the most common weather conditions; it can completely degrade image quality and interfere with the performance of many computer vision tasks, especially under heavy rain. We observe that: (i) rain is a mixture of rain streaks and rainy haze; (ii) the scene depth determines the intensity of rain streaks and their transformation into rainy haze; (iii) most existing deraining methods are trained only on synthetic rainy images, and hence generalize poorly to real-world scenes. Motivated by these observations, we propose a new SEMI-supervised Mixture Of rain REmoval Generative Adversarial Network (Semi-MoreGAN), which consists of four key modules: (i) a novel attentional depth prediction network to provide precise depth estimation; (ii) a context feature prediction network composed of several well-designed detailed residual blocks to produce detailed image context features; (iii) a pyramid depth-guided non-local network to effectively integrate the image context with the depth information and produce the final rain-free images; and (iv) a comprehensive semi-supervised loss function so that the model is not limited to synthetic datasets but generalizes smoothly to real-world heavy rainy scenes. Extensive experiments show clear improvements of our approach over twenty representative state-of-the-art methods on both synthetic and real-world rainy images.

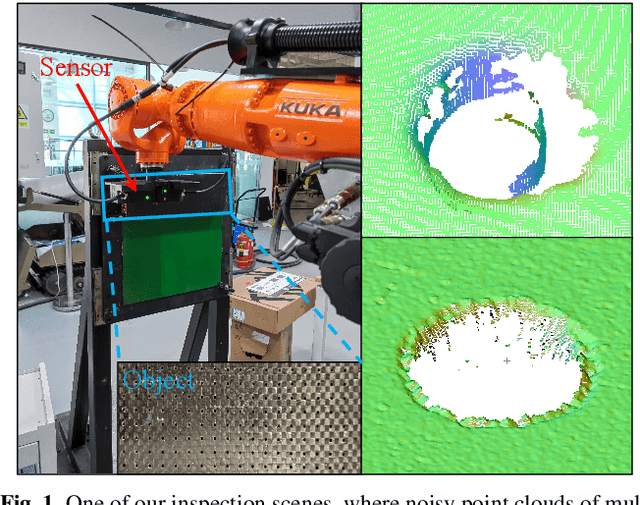

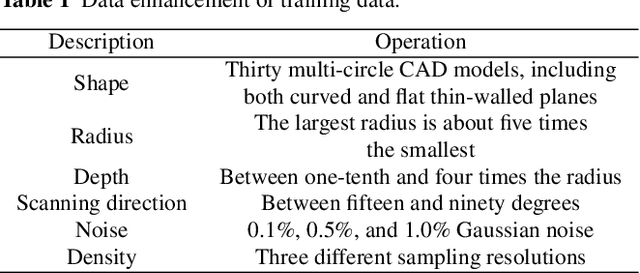

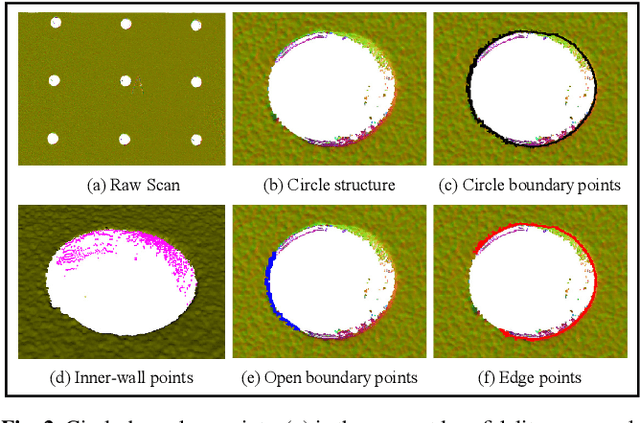

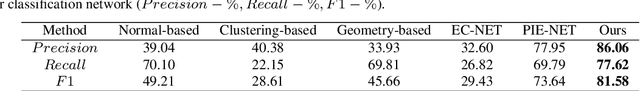

Deep Algebraic Fitting for Multiple Circle Primitives Extraction from Raw Point Clouds

Apr 02, 2022

Abstract: The circle is one of the fundamental geometric primitives of man-made engineering objects. Thus, extracting circles from scanned point clouds is an important task in 3D geometry data processing. However, existing circle extraction methods are either sensitive to the quality of raw point clouds when classifying circle-boundary points, or require well-designed fitting functions when regressing circle parameters. To relieve these challenges, we propose an end-to-end Point Cloud Circle Algebraic Fitting Network (Circle-Net) based on a synergy of deep circle-boundary point feature learning and weighted algebraic fitting. First, we design a circle-boundary learning module, which considers local and global neighboring contexts of each point, to detect all potential circle-boundary points. Second, we develop a deep-feature-based circle parameter learning module for weighted algebraic fitting, without designing any weight metric, to avoid the influence of outliers during fitting. Unlike most cutting-edge circle extraction approaches, the proposed classification-and-fitting modules are co-trained with a comprehensive loss to enhance the quality of extracted circles. Comparisons on the established dataset and real-scanned point clouds exhibit clear improvements of Circle-Net over state-of-the-art methods in terms of both noise robustness and extraction accuracy. We will release our code, model, and data for both training and evaluation on GitHub upon publication.
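To illustrate what weighted algebraic fitting means, here is a minimal 2D sketch, assuming NumPy and points already expressed in the plane of the circle. It solves the classic weighted linear least-squares problem for x^2 + y^2 + D*x + E*y + F = 0. In Circle-Net the weights are learned from deep features; here they are hand-set, hypothetical values, and the function name is illustrative.

```python
import numpy as np

def weighted_algebraic_circle_fit(points, weights):
    """Fit a circle by minimizing sum_i w_i * (x^2 + y^2 + D*x + E*y + F)^2.

    points is an (N, 2) array-like; weights are non-negative per-point
    weights (hand-set here, purely for illustration).
    """
    pts = np.asarray(points, dtype=float)
    sw = np.sqrt(np.asarray(weights, dtype=float))
    x, y = pts[:, 0], pts[:, 1]
    # Scaling each row by sqrt(w_i) turns this into ordinary least squares.
    A = np.column_stack([x, y, np.ones_like(x)]) * sw[:, None]
    b = -(x**2 + y**2) * sw
    (D, E, F), *_ = np.linalg.lstsq(A, b, rcond=None)
    center = np.array([-D / 2.0, -E / 2.0])
    radius = np.sqrt(center @ center - F)
    return center, radius

# Eight points on a circle (center (1, 2), radius 3) plus one outlier
# that receives zero weight and therefore does not affect the fit.
angles = np.linspace(0.0, 2.0 * np.pi, 8, endpoint=False)
circle_pts = np.column_stack([1 + 3 * np.cos(angles), 2 + 3 * np.sin(angles)])
points = np.vstack([circle_pts, [[10.0, 10.0]]])
weights = np.append(np.ones(8), 0.0)
center, radius = weighted_algebraic_circle_fit(points, weights)
```

Because the fit is a linear solve, it is differentiable with respect to the weights, which is what makes this kind of fitting attractive inside an end-to-end network.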
