Heng Tao Shen

Investigating and Mitigating the Side Effects of Noisy Views in Multi-view Clustering in Practical Scenarios

Mar 30, 2023

Abstract:Multi-view clustering (MvC) aims at exploring the category structure among multi-view data without label supervision. Multiple views provide more information than single views and thus existing MvC methods can achieve satisfactory performance. However, their performance might seriously degrade when the views are noisy in practical scenarios. In this paper, we first formally investigate the drawback of noisy views and then propose a theoretically grounded deep MvC method (namely MvCAN) to address this issue. Specifically, we propose a novel MvC objective that enables un-shared parameters and inconsistent clustering predictions across multiple views to reduce the side effects of noisy views. Furthermore, a non-parametric iterative process is designed to generate a robust learning target for mining multiple views' useful information. Theoretical analysis reveals that MvCAN works by achieving multi-view consistency, complementarity, and noise robustness. Finally, experiments on public datasets demonstrate that MvCAN outperforms state-of-the-art methods and is robust against the existence of noisy views.

ScanERU: Interactive 3D Visual Grounding based on Embodied Reference Understanding

Mar 23, 2023

Abstract:Aiming to link natural language descriptions to specific regions in a 3D scene represented as 3D point clouds, 3D visual grounding is a fundamental task for human-robot interaction. Recognition errors can significantly impact the overall accuracy and then degrade the operation of AI systems. Despite their effectiveness, existing methods suffer from low recognition accuracy in cases of multiple adjacent objects with similar appearances. To address this issue, this work introduces human-robot interaction as a cue to facilitate the development of 3D visual grounding. Specifically, a new task termed Embodied Reference Understanding (ERU) is first designed for this concern. Then a new dataset called ScanERU is constructed to evaluate the effectiveness of this idea. Different from existing datasets, our ScanERU is the first to cover semi-synthetic scene integration with textual, real-world visual, and synthetic gestural information. Additionally, this paper formulates a heuristic framework based on attention mechanisms and human body movements to inspire further research on ERU. Experimental results demonstrate the superiority of the proposed method, especially in the recognition of multiple identical objects. Our code and dataset will be made publicly available.

A Complete Survey on Generative AI: Is ChatGPT from GPT-4 to GPT-5 All You Need?

Mar 21, 2023

Abstract:As ChatGPT goes viral, generative AI (AIGC, a.k.a. AI-generated content) has made headlines everywhere because of its ability to analyze and create text, images, and beyond. With such overwhelming media coverage, it is almost impossible for us to miss the opportunity to glimpse AIGC from a certain angle. In the era of AI transitioning from pure analysis to creation, it is worth noting that ChatGPT, with its most recent language model GPT-4, is just one tool among numerous AIGC tasks. Impressed by the capability of ChatGPT, many people are wondering about its limits: can GPT-5 (or other future GPT variants) help ChatGPT unify all AIGC tasks for diversified content creation? Toward answering this question, a comprehensive review of existing AIGC tasks is needed. As such, our work comes to fill this gap promptly by offering a first look at AIGC, ranging from its techniques to applications. Modern generative AI relies on various technical foundations, ranging from model architecture and self-supervised pretraining to generative modeling methods (like GAN and diffusion models). After introducing the fundamental techniques, this work focuses on the technological development of various AIGC tasks based on their output type, including text, images, videos, 3D content, etc., which depicts the full potential of ChatGPT's future. Moreover, we summarize their significant applications in some mainstream industries, such as education and creative content. Finally, we discuss the challenges currently faced and present an outlook on how generative AI might evolve in the near future.

ICL-D3IE: In-Context Learning with Diverse Demonstrations Updating for Document Information Extraction

Mar 09, 2023

Abstract:Large language models (LLMs), such as GPT-3 and ChatGPT, have demonstrated remarkable results in various natural language processing (NLP) tasks with in-context learning, which involves inference based on a few demonstration examples. Despite their successes in NLP tasks, no investigation has been conducted to assess the ability of LLMs to perform document information extraction (DIE) using in-context learning. Applying LLMs to DIE poses two challenges: the modality gap and the task gap. To this end, we propose a simple but effective in-context learning framework called ICL-D3IE, which enables LLMs to perform DIE with different types of demonstration examples. Specifically, we extract the most difficult and distinct segments from hard training documents as hard demonstrations for benefiting all test instances. We design demonstrations describing relationships that enable LLMs to understand positional relationships. We introduce formatting demonstrations for easy answer extraction. Additionally, the framework improves diverse demonstrations by updating them iteratively. Our experiments on three widely used benchmark datasets demonstrate that the ICL-D3IE framework enables GPT-3/ChatGPT to achieve superior performance when compared to previous pre-trained methods fine-tuned with full training in both the in-distribution (ID) setting and the out-of-distribution (OOD) setting.

Imbalanced Open Set Domain Adaptation via Moving-threshold Estimation and Gradual Alignment

Mar 09, 2023

Abstract:Multimedia applications are often associated with cross-domain knowledge transfer, where Unsupervised Domain Adaptation (UDA) can be used to reduce the domain shifts. Open Set Domain Adaptation (OSDA) aims to transfer knowledge from a well-labeled source domain to an unlabeled target domain under the assumption that the target domain contains unknown classes. Existing OSDA methods consistently lay stress on the covariate shift, ignoring the potential label shift problem. The performance of OSDA methods degrades drastically under intra-domain class imbalance and inter-domain label shift. However, little attention has been paid to this issue in the community. In this paper, the Imbalanced Open Set Domain Adaptation (IOSDA) is explored where the covariate shift, label shift and category mismatch exist simultaneously. To alleviate the negative effects raised by label shift in OSDA, we propose Open-set Moving-threshold Estimation and Gradual Alignment (OMEGA), a novel architecture that improves existing OSDA methods on class-imbalanced data. Specifically, a novel unknown-aware target clustering scheme is proposed to form tight clusters in the target domain to reduce the negative effects of label shift and intra-domain class imbalance. Furthermore, moving-threshold estimation is designed to generate specific thresholds for each target sample rather than using one for all. Extensive experiments on IOSDA, OSDA and OPDA benchmarks demonstrate that our method could significantly outperform existing state-of-the-art methods. Code and data are available at https://github.com/mendicant04/OMEGA.

A Comprehensive Survey on Source-free Domain Adaptation

Feb 23, 2023

Abstract:Over the past decade, domain adaptation has become a widely studied branch of transfer learning that aims to improve performance on target domains by leveraging knowledge from the source domain. Conventional domain adaptation methods often assume access to both source and target domain data simultaneously, which may not be feasible in real-world scenarios due to privacy and confidentiality concerns. As a result, research on Source-Free Domain Adaptation (SFDA), which only utilizes the source-trained model and unlabeled target data to adapt to the target domain, has drawn growing attention in recent years. Despite the rapid growth of SFDA work, there has been no timely and comprehensive survey in the field. To fill this gap, we provide a comprehensive survey of recent advances in SFDA and organize them into a unified categorization scheme based on the framework of transfer learning. Instead of presenting each approach independently, we modularize several components of each method to more clearly illustrate their relationships and mechanics in light of the composite properties of each method. Furthermore, we compare the results of more than 30 representative SFDA methods on three popular classification benchmarks, namely Office-31, Office-home, and VisDA, to explore the effectiveness of various technical routes and the combination effects among them. Additionally, we briefly introduce the applications of SFDA and related fields. Drawing from our analysis of the challenges facing SFDA, we offer some insights into future research directions and potential settings.

Semantic Enhanced Knowledge Graph for Large-Scale Zero-Shot Learning

Dec 26, 2022

Abstract:Zero-Shot Learning has been a highlighted research topic in both vision and language areas. Recently, most existing methods adopt structured knowledge information to model explicit correlations among categories and use deep graph convolutional networks to propagate information between different categories. However, it is difficult to add new categories to an existing structured knowledge graph, and deep graph convolutional networks suffer from the over-smoothing problem. In this paper, we provide a new semantic enhanced knowledge graph that contains both expert knowledge and semantic correlations among categories. Our semantic enhanced knowledge graph can further enhance the correlations among categories and make it easy to absorb new categories. To propagate information on the knowledge graph, we propose a novel Residual Graph Convolutional Network (ResGCN), which can effectively alleviate the problem of over-smoothing. Experiments conducted on the widely used large-scale ImageNet-21K dataset and AWA2 dataset show the effectiveness of our method, and establish a new state-of-the-art on zero-shot learning. Moreover, our results on the large-scale ImageNet-21K with various feature extraction networks show that our method has better generalization and robustness.

Visual Commonsense-aware Representation Network for Video Captioning

Nov 17, 2022

Abstract:Generating consecutive descriptions for videos, i.e., Video Captioning, requires taking full advantage of visual representation along with the generation process. Existing video captioning methods focus on exploring spatial-temporal representations and their relationships to produce inferences. However, such methods only exploit the superficial association contained in the video itself without considering the intrinsic visual commonsense knowledge that exists in a video dataset, which may hinder their ability to reason toward accurate descriptions. To address this problem, we propose a simple yet effective method, called Visual Commonsense-aware Representation Network (VCRN), for video captioning. Specifically, we construct a Video Dictionary, a plug-and-play component, obtained by clustering all video features from the total dataset into multiple cluster centers without additional annotation. Each center implicitly represents a visual commonsense concept in the video domain, which is utilized in our proposed Visual Concept Selection (VCS) to obtain a video-related concept feature. Next, a Conceptual Integration Generation (CIG) is proposed to enhance the caption generation. Extensive experiments on three public video captioning benchmarks, MSVD, MSR-VTT, and VATEX, demonstrate that our method reaches state-of-the-art performance, indicating the effectiveness of our method. In addition, our approach is integrated into an existing method for video question answering and improves its performance, further showing the generalization of our method. Source code has been released at https://github.com/zchoi/VCRN.

A Lower Bound of Hash Codes' Performance

Oct 12, 2022

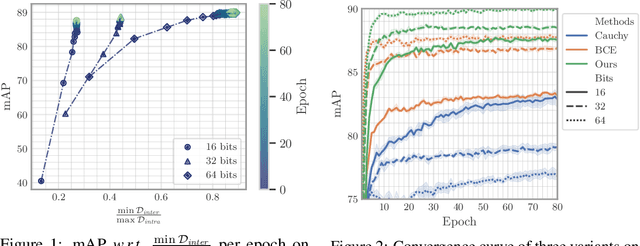

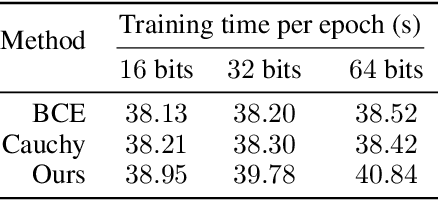

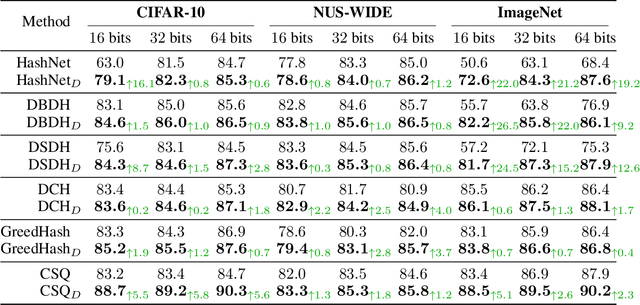

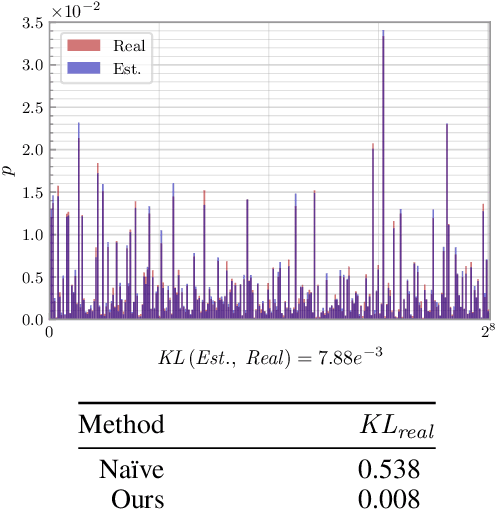

Abstract:As a crucial approach for compact representation learning, hashing has achieved great success in effectiveness and efficiency. Numerous heuristic Hamming space metric learning objectives are designed to obtain high-quality hash codes. Nevertheless, a theoretical analysis of criteria for learning good hash codes remains largely unexplored. In this paper, we prove that inter-class distinctiveness and intra-class compactness among hash codes determine the lower bound of hash codes' performance. Promoting these two characteristics could lift the bound and improve hash learning. We then propose a surrogate model to fully exploit the above objective by estimating the posterior of hash codes and controlling it, which results in a low-bias optimization. Extensive experiments reveal the effectiveness of the proposed method. By testing on a series of hashing models, we obtain performance improvements among all of them, with an up to 26.5% increase in mean Average Precision and an up to 20.5% increase in accuracy. Our code is publicly available at https://github.com/VL-Group/LBHash.

Dual-branch Hybrid Learning Network for Unbiased Scene Graph Generation

Jul 16, 2022

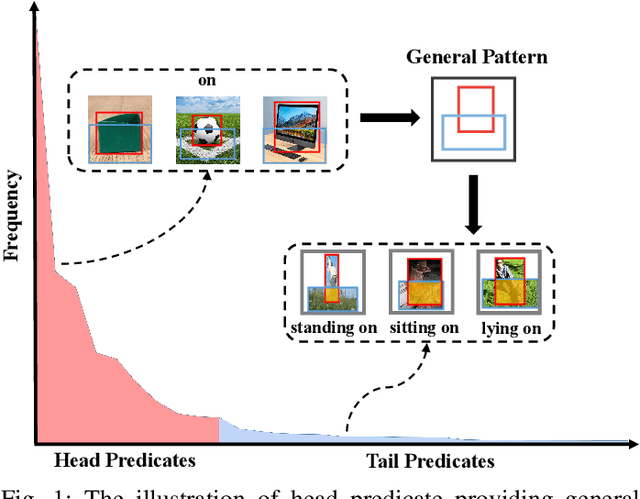

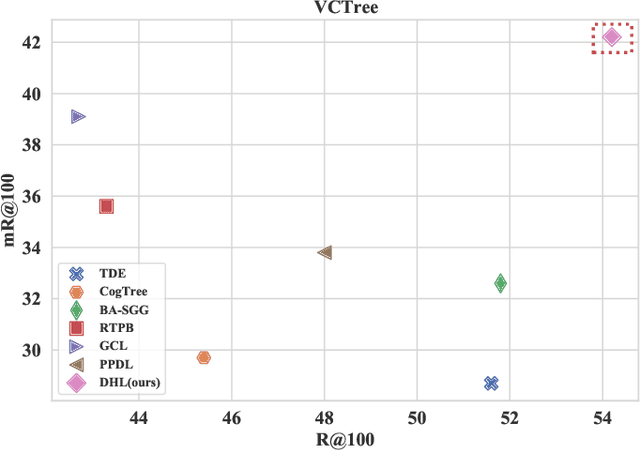

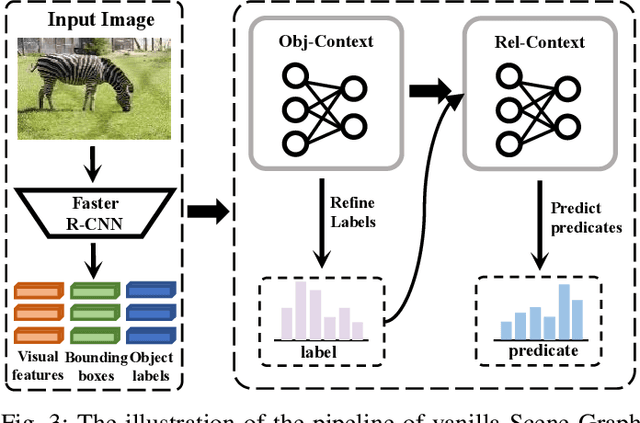

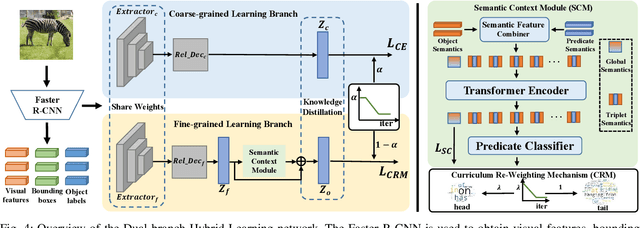

Abstract:Current studies of Scene Graph Generation (SGG) focus on solving the long-tailed problem for generating unbiased scene graphs. However, most de-biasing methods overemphasize the tail predicates and underestimate head ones throughout training, thereby wrecking the representation ability of head predicate features. Furthermore, these impaired features from head predicates harm the learning of tail predicates. In fact, the inference of tail predicates heavily depends on the general patterns learned from head ones, e.g., "standing on" depends on "on". Thus, these de-biasing SGG methods achieve neither excellent performance on tail predicates nor satisfactory behavior on head ones. To address this issue, we propose a Dual-branch Hybrid Learning network (DHL) to take care of both head predicates and tail ones for SGG, including a Coarse-grained Learning Branch (CLB) and a Fine-grained Learning Branch (FLB). Specifically, the CLB is responsible for learning expertise and robust features of head predicates, while the FLB is expected to predict informative tail predicates. Furthermore, DHL is equipped with a Branch Curriculum Schedule (BCS) to make the two branches work well together. Experiments show that our approach achieves a new state-of-the-art performance on VG and GQA datasets and makes a trade-off between the performance of tail predicates and head ones. Moreover, extensive experiments on two downstream tasks (i.e., Image Captioning and Sentence-to-Graph Retrieval) further verify the generalization and practicability of our method.
