Dongyu Zhang

Enhancing Textual Personality Detection toward Social Media: Integrating Long-term and Short-term Perspectives

Apr 23, 2024

Abstract: Textual personality detection aims to identify personality characteristics by analyzing user-generated content on social media platforms. Numerous psychological studies have highlighted that personality encompasses both long-term stable traits and short-term dynamic states. However, existing studies often concentrate on only long-term or only short-term personality representations, without effectively combining both aspects. This limitation hinders a comprehensive understanding of individuals' personalities, as both stable traits and dynamic states are vital. To bridge this gap, we propose a Dual Enhanced Network (DEN) to jointly model users' long-term and short-term personality for textual personality detection. In DEN, a Long-term Personality Encoding is devised to effectively model long-term stable personality traits. A Short-term Personality Encoding is presented to capture short-term dynamic personality states. The Bi-directional Interaction component facilitates the integration of both personality aspects, allowing for a comprehensive representation of the user's personality. Experimental results on two personality detection datasets demonstrate the effectiveness of the DEN model and the benefits of considering both the dynamic and stable nature of personality characteristics for textual personality detection.

CoLafier: Collaborative Noisy Label Purifier With Local Intrinsic Dimensionality Guidance

Jan 10, 2024

Abstract: Deep neural networks (DNNs) have advanced many machine learning tasks, but their performance is often harmed by noisy labels in real-world data. Addressing this, we introduce CoLafier, a novel approach that uses Local Intrinsic Dimensionality (LID) for learning with noisy labels. CoLafier consists of two subnets: LID-dis and LID-gen. LID-dis is a specialized classifier. Trained with our uniquely crafted scheme, LID-dis consumes both a sample's features and its label to predict the label, which allows it to produce an enhanced internal representation. We observe that LID scores computed from this representation effectively distinguish between correct and incorrect labels across various noise scenarios. In contrast to LID-dis, LID-gen, functioning as a regular classifier, operates solely on the sample's features. During training, CoLafier utilizes two augmented views per instance to feed both subnets. CoLafier considers the LID scores from the two views as produced by LID-dis to assign weights in an adapted loss function for both subnets. Concurrently, LID-gen, serving as a classifier, suggests pseudo-labels. LID-dis then processes these pseudo-labels along with the two views to derive LID scores. Finally, these LID scores along with the differences in predictions from the two subnets guide the label update decisions. This dual-view and dual-subnet approach enhances the overall reliability of the framework. Upon completion of the training, we deploy the LID-gen subnet of CoLafier as the final classification model. CoLafier demonstrates improved prediction accuracy, surpassing existing methods, particularly under severe label noise. For more details, see the code at https://github.com/zdy93/CoLafier.
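To make the notion of an LID score more concrete, the sketch below computes the commonly used maximum-likelihood LID estimate from a point's nearest-neighbour distances. The abstract does not say which estimator CoLafier uses, so the estimator choice and the function name `lid_mle` are assumptions made for illustration only.

```python
import math

def lid_mle(distances):
    """Maximum-likelihood LID estimate (assumed estimator, not confirmed by the abstract).

    `distances` holds the distances from one point to its k nearest neighbours.
    The estimate is -1 / mean(log(r_i / r_max)), where r_max is the largest distance.
    """
    r = sorted(d for d in distances if d > 0)
    if len(r) < 2:
        raise ValueError("need at least two positive neighbour distances")
    r_max = r[-1]
    mean_log_ratio = sum(math.log(d / r_max) for d in r) / len(r)
    if mean_log_ratio == 0.0:
        # All neighbours are equally far away; the estimate diverges.
        return float("inf")
    return -1.0 / mean_log_ratio

# Idealised neighbour distances for uniformly spread data in d dimensions:
# the i-th of k neighbours sits at radius (i / k) ** (1 / d).
k = 100
dists_2d = [(i / k) ** (1 / 2) for i in range(1, k + 1)]
dists_8d = [(i / k) ** (1 / 8) for i in range(1, k + 1)]
lid_2d = lid_mle(dists_2d)
lid_8d = lid_mle(dists_8d)
```

On these idealised inputs the estimate lands close to the true dimension and grows with it, which is the property that lets an LID score act as a signal about the local structure of a representation.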

Image Restoration Through Generalized Ornstein-Uhlenbeck Bridge

Dec 16, 2023

Abstract: Diffusion models possess powerful generative capabilities, enabling the mapping of noise to data using reverse stochastic differential equations. However, in image restoration tasks, the focus is on the mapping relationship from low-quality images to high-quality images. To address this, we introduce the Generalized Ornstein-Uhlenbeck Bridge (GOUB) model. By leveraging the natural mean-reverting property of the generalized OU process and further adjusting the variance of its steady-state distribution through Doob's h-transform, we achieve diffusion mappings from point to point with minimal cost. This allows for end-to-end training, enabling the recovery of high-quality images from low-quality ones. Additionally, we uncover the mathematical essence of some bridge models, all of which are special cases of GOUB, and empirically demonstrate the optimality of our proposed models. Furthermore, benefiting from our distinctive parameterization mechanism, we propose the Mean-ODE model, which is better at capturing pixel-level information and structural perceptions. Experimental results show that both models achieve state-of-the-art results in various tasks, including inpainting, deraining, and super-resolution. Code is available at https://github.com/Hammour-steak/GOUB.
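The mean-reverting property mentioned in the abstract can be illustrated with a plain scalar Ornstein-Uhlenbeck process, dx = theta (mu - x) dt + sigma dW. The sketch below is not the GOUB model: it uses constant coefficients, applies no Doob's h-transform, and all names (`simulate_ou`, `ou_mean`, `theta`, `mu`, `sigma`) are illustrative assumptions.

```python
import math
import random

def ou_mean(x0, mu, theta, t):
    """Closed-form expected value of the OU process at time t, started from x0."""
    return mu + (x0 - mu) * math.exp(-theta * t)

def simulate_ou(x0, mu, theta, sigma, dt, n_steps, seed=0):
    """Euler-Maruyama simulation of dx = theta * (mu - x) dt + sigma dW."""
    rng = random.Random(seed)
    x = x0
    path = [x]
    for _ in range(n_steps):
        noise = rng.gauss(0.0, 1.0)
        # The drift pulls x toward mu; the diffusion term adds Gaussian noise.
        x += theta * (mu - x) * dt + sigma * math.sqrt(dt) * noise
        path.append(x)
    return path

# With the noise switched off, the path decays toward mu exponentially.
noiseless = simulate_ou(x0=0.0, mu=1.0, theta=1.0, sigma=0.0, dt=0.01, n_steps=1000)
noisy = simulate_ou(x0=0.0, mu=1.0, theta=1.0, sigma=0.1, dt=0.01, n_steps=1000)
```

A plain OU process only approaches `mu` in distribution and keeps a non-zero steady-state variance. That is the gap a bridge construction addresses when an exact point-to-point mapping is needed.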

UCE-FID: Using Large Unlabeled, Medium Crowdsourced-Labeled, and Small Expert-Labeled Tweets for Foodborne Illness Detection

Dec 02, 2023

Abstract: Foodborne illnesses significantly impact public health. Deep learning surveillance applications using social media data aim to detect early warning signals. However, labeling foodborne illness-related tweets for model training requires extensive human resources, making it challenging to collect a sufficient number of high-quality labels for tweets within a limited budget. The severe class imbalance resulting from the scarcity of foodborne illness-related tweets among the vast volume of social media further exacerbates the problem. Classifiers trained on a class-imbalanced dataset are biased towards the majority class, making accurate detection difficult. To overcome these challenges, we propose EGAL, a deep learning framework for foodborne illness detection that uses a small set of expert-labeled tweets augmented by crowdsourced-labeled and massive unlabeled data. Specifically, by leveraging tweets labeled by experts as a reward set, EGAL learns to assign a weight of zero to incorrectly labeled tweets to mitigate their negative influence. Other tweets receive proportionate weights to counterbalance the unbalanced class distribution. Extensive experiments on real-world TWEET-FID data show that EGAL outperforms strong baseline models across different settings, including varying expert-labeled set sizes and class imbalance ratios. A case study on a multistate outbreak of Salmonella Typhimurium infection linked to packaged salad greens demonstrates how the trained model captures relevant tweets offering valuable outbreak insights. EGAL, funded by the U.S. Department of Agriculture (USDA), has the potential to be deployed for real-time analysis of tweet streams, contributing to foodborne illness outbreak surveillance efforts.

FATA-Trans: Field And Time-Aware Transformer for Sequential Tabular Data

Oct 20, 2023

Abstract: Sequential tabular data is one of the most commonly used data types in real-world applications. Different from conventional tabular data, where rows in a table are independent, sequential tabular data contains rich contextual and sequential information, where some fields are dynamically changing over time and others are static. Existing transformer-based approaches analyzing sequential tabular data overlook the differences between dynamic and static fields by replicating and filling static fields into each transformer, and ignore temporal information between rows, which leads to three major disadvantages: (1) computational overhead, (2) artificially simplified data for the masked language modeling pre-training task that may yield less meaningful representations, and (3) disregarding the temporal behavioral patterns implied by time intervals. In this work, we propose FATA-Trans, a model with two field transformers for modeling sequential tabular data, where each processes static and dynamic field information separately. FATA-Trans is field- and time-aware for sequential tabular data. The field-type embedding in the method enables FATA-Trans to capture differences between static and dynamic fields. The time-aware position embedding exploits both order and time interval information between rows, which helps the model detect underlying temporal behavior in a sequence. Our experiments on three benchmark datasets demonstrate that the learned representations from FATA-Trans consistently outperform state-of-the-art solutions in the downstream tasks. We also present visualization studies to highlight the insights captured by the learned representations, enhancing our understanding of the underlying data. Our code is available at https://github.com/zdy93/FATA-Trans.

EVE: Efficient Vision-Language Pre-training with Masked Prediction and Modality-Aware MoE

Aug 23, 2023

Abstract: Building scalable vision-language models to learn from diverse, multimodal data remains an open challenge. In this paper, we introduce an Efficient Vision-languagE foundation model, namely EVE, which is one unified multimodal Transformer pre-trained solely by one unified pre-training task. Specifically, EVE encodes both vision and language within a shared Transformer network integrated with modality-aware sparse Mixture-of-Experts (MoE) modules, which capture modality-specific information by selectively switching to different experts. To unify pre-training tasks of vision and language, EVE performs masked signal modeling on image-text pairs to reconstruct masked signals, i.e., image pixels and text tokens, given visible signals. This simple yet effective pre-training objective accelerates training by 3.5x compared to the model pre-trained with Image-Text Contrastive and Image-Text Matching losses. Owing to the combination of the unified architecture and pre-training task, EVE is easy to scale up, enabling better downstream performance with fewer resources and faster training speed. Despite its simplicity, EVE achieves state-of-the-art performance on various vision-language downstream tasks, including visual question answering, visual reasoning, and image-text retrieval.

TWEET-FID: An Annotated Dataset for Multiple Foodborne Illness Detection Tasks

May 22, 2022

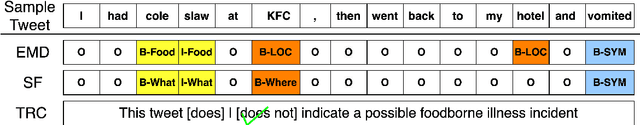

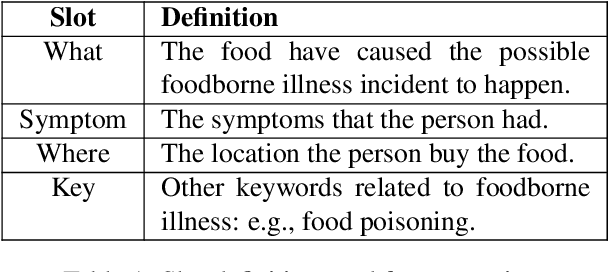

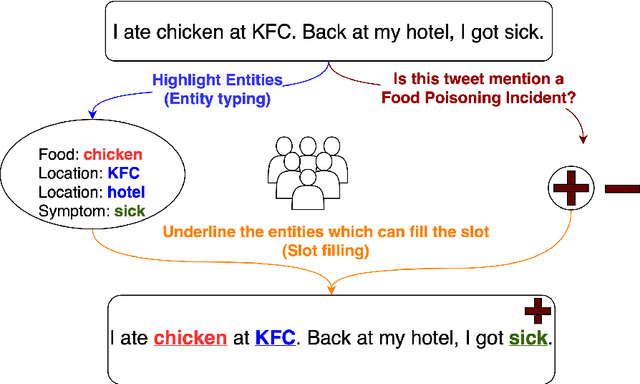

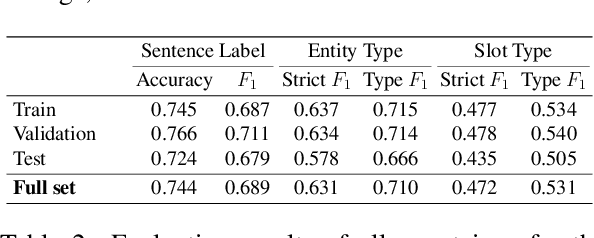

Abstract: Foodborne illness is a serious but preventable public health problem, with delays in detecting the associated outbreaks resulting in productivity loss, expensive recalls, public safety hazards, and even loss of life. While social media is a promising source for identifying unreported foodborne illnesses, there is a dearth of labeled datasets for developing effective outbreak detection models. To accelerate the development of machine learning-based models for foodborne outbreak detection, we thus present TWEET-FID (TWEET-Foodborne Illness Detection), the first publicly available annotated dataset for multiple foodborne illness incident detection tasks. TWEET-FID, collected from Twitter, is annotated with three facets: tweet class, entity type, and slot type, with labels produced by experts as well as by crowdsourced workers. We introduce several domain tasks leveraging these three facets: text relevance classification (TRC), entity mention detection (EMD), and slot filling (SF). We describe the end-to-end methodology for dataset design, creation, and labeling for supporting model development for these tasks. A comprehensive set of results for these tasks, leveraging state-of-the-art single- and multi-task deep learning methods on the TWEET-FID dataset, is provided. This dataset opens opportunities for future research in foodborne outbreak detection.

Surf or sleep? Understanding the influence of bedtime patterns on campus

Feb 18, 2022

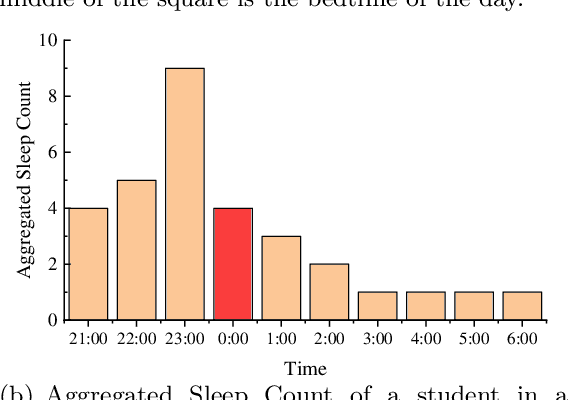

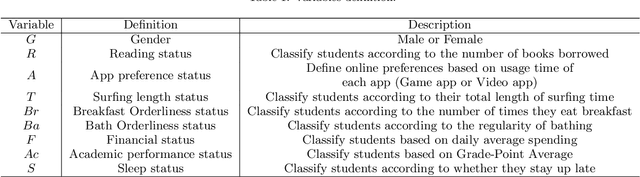

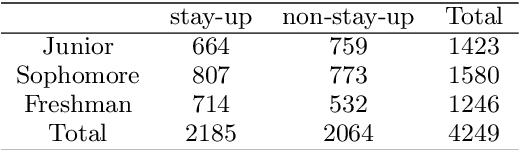

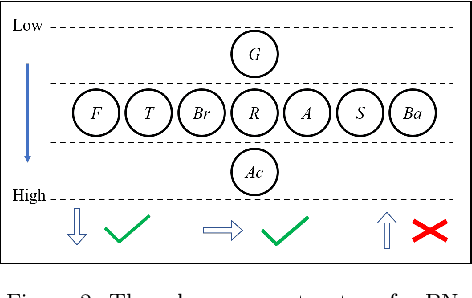

Abstract: Poor sleep habits may cause serious problems of mind and body, and they are commonly observed among college students due to study workload as well as peer and social influence. Understanding their impact and identifying students with poor sleep habits is important in educational management. Most current research either is based on self-reports and questionnaires, suffering from small sample sizes and social desirability bias, or uses methods that are not suitable for the education system. In this paper, we develop a general data-driven method for identifying students' sleep patterns according to their Internet access patterns stored in the education management system and explore their influence from various aspects. First, we design a Poisson-based probabilistic mixture model to cluster students according to the distribution of bedtime and identify students who are used to staying up late. Second, we profile students from five aspects (including eight dimensions) based on campus-behavior data and build Bayesian networks to explore the relationship between behavioral characteristics and sleeping habits. Finally, we test the predictability of sleeping habits. This paper not only contributes to the understanding of student sleep from a cognitive and behavioral perspective but also presents a new approach that provides an effective framework for various educational institutions to detect the sleeping patterns of students.
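As a rough illustration of clustering with a Poisson-based mixture model, the sketch below fits a two-component Poisson mixture with expectation-maximization. It is a generic textbook EM, not the paper's model. The input values are made up, and treating bedtime as an integer count (for example, time slots after a reference hour) is an assumption.

```python
import math

def poisson_logpmf(x, lam):
    """Log of the Poisson probability mass function."""
    return x * math.log(lam) - lam - math.lgamma(x + 1)

def fit_poisson_mixture(counts, init_rates, n_iter=100):
    """Fit a Poisson mixture by EM; returns (weights, rates, responsibilities)."""
    eps = 1e-12  # floor that keeps logarithms and divisions well defined
    k = len(init_rates)
    rates = [max(r, eps) for r in init_rates]
    weights = [1.0 / k] * k
    resp = []
    for _ in range(n_iter):
        # E-step: posterior probability of each component for each observation.
        resp = []
        for x in counts:
            logp = [math.log(weights[j]) + poisson_logpmf(x, rates[j]) for j in range(k)]
            top = max(logp)
            p = [math.exp(v - top) for v in logp]
            total = sum(p)
            resp.append([v / total for v in p])
        # M-step: re-estimate mixing weights and Poisson rates.
        for j in range(k):
            n_j = max(sum(r[j] for r in resp), eps)
            weights[j] = max(n_j / len(counts), eps)
            rates[j] = max(sum(r[j] * x for r, x in zip(resp, counts)) / n_j, eps)
    return weights, rates, resp

# Hypothetical data: eight early sleepers followed by eight late sleepers.
counts = [1, 2, 1, 0, 2, 1, 3, 1, 10, 12, 9, 11, 13, 10, 8, 11]
weights, rates, resp = fit_poisson_mixture(counts, init_rates=[1.0, 5.0])
labels = [0 if r[0] >= r[1] else 1 for r in resp]
```

Each observation is assigned to the component with the higher responsibility, so `labels` separates the low-count group from the high-count group in this toy input.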

Enhancing Prototypical Few-Shot Learning by Leveraging the Local-Level Strategy

Nov 08, 2021

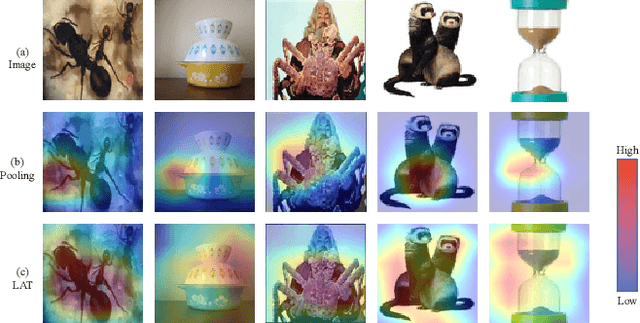

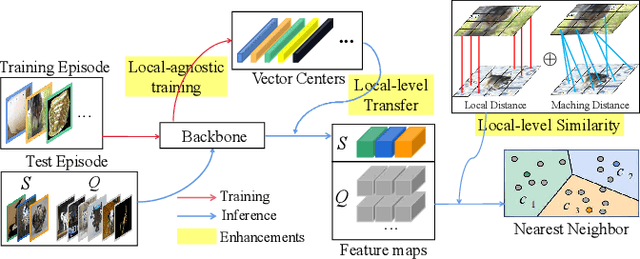

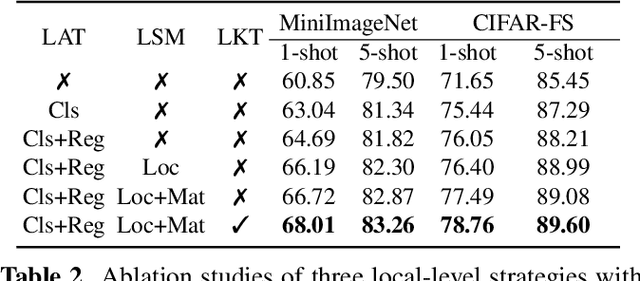

Abstract: Few-shot learning (FSL), which aims to recognize samples from novel categories using only a few reference samples, is a challenging problem. We find that existing works often build their few-shot models on image-level features obtained by mixing all local-level features, which leads to discriminative location bias and information loss in local details. To tackle the problem, this paper shifts the perspective back to local-level features and proposes a series of local-level strategies. Specifically, we present (a) a local-agnostic training strategy to avoid the discriminative location bias between the base and novel categories, (b) a novel local-level similarity measure to capture the accurate comparison between local-level features, and (c) a local-level knowledge transfer that can synthesize different knowledge transfers from the base category according to different location features. Extensive experiments show that our proposed local-level strategies significantly boost performance, achieving 2.8%-7.2% improvements over the baseline across different benchmark datasets as well as state-of-the-art accuracy.

Plug-and-Play Few-shot Object Detection with Meta Strategy and Explicit Localization Inference

Oct 26, 2021

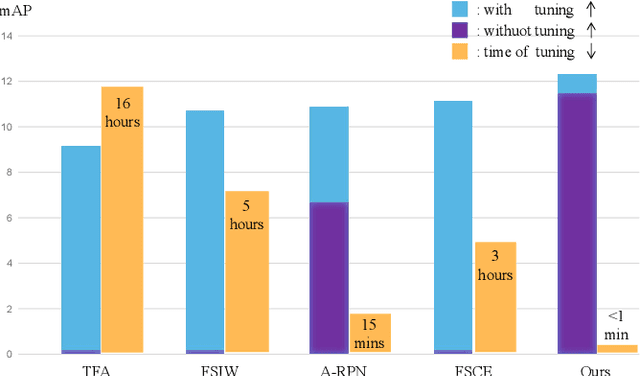

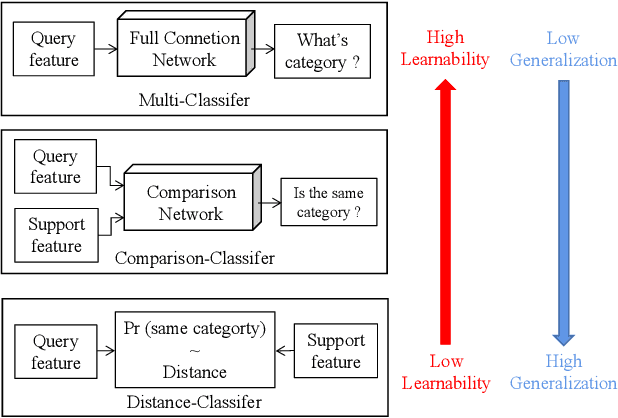

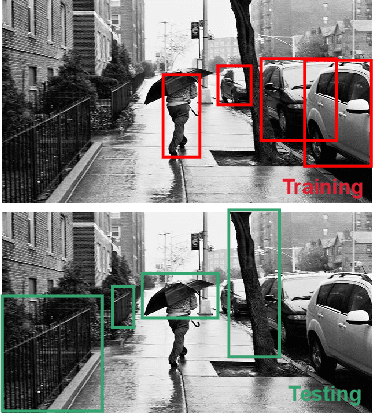

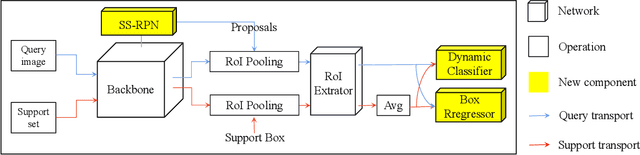

Abstract: Few-shot object detection, which aims to recognize and localize objects of novel categories from a few reference samples, is a challenging task. Previous works often depend on a fine-tuning process to transfer their model to the novel category and rarely consider the defects of fine-tuning, resulting in many drawbacks. For example, these methods are far from satisfactory in low-shot or episode-based scenarios, since the fine-tuning process in object detection requires much time and high-shot support data. To this end, this paper proposes a plug-and-play few-shot object detection (PnP-FSOD) framework that can accurately and directly detect the objects of novel categories without the fine-tuning process. To accomplish this objective, the PnP-FSOD framework contains two parallel techniques to address the core challenges in few-shot learning, i.e., the across-category task and few-annotation support. Concretely, we first propose two simple but effective meta strategies for the box classifier and RPN module to enable across-category object detection without fine-tuning. Then, we introduce two explicit inferences into the localization process to reduce its dependence on the annotated data, including explicit localization score and semi-explicit box regression. In addition to the PnP-FSOD framework, we propose a novel one-step tuning method that can avoid the defects in fine-tuning. It is noteworthy that the proposed techniques and tuning method are based on the general object detector without other prior methods, so they are easily compatible with existing FSOD methods. Extensive experiments show that the PnP-FSOD framework achieves state-of-the-art few-shot object detection performance without any tuning method. After applying the one-step tuning method, it further shows a significant lead in efficiency, precision, and recall under varied evaluation protocols.
