Davide Tateo

ROS-LLM: A ROS framework for embodied AI with task feedback and structured reasoning

Jun 28, 2024

Abstract:We present a framework for intuitive robot programming by non-experts, leveraging natural language prompts and contextual information from the Robot Operating System (ROS). Our system integrates large language models (LLMs), enabling non-experts to articulate task requirements to the system through a chat interface. Key features of the framework include: integration of ROS with an AI agent connected to a plethora of open-source and commercial LLMs, automatic extraction of a behavior from the LLM output and execution of ROS actions/services, support for three behavior modes (sequence, behavior tree, state machine), imitation learning for adding new robot actions to the library of possible actions, and LLM reflection via human and environment feedback. Extensive experiments validate the framework, showcasing robustness, scalability, and versatility in diverse scenarios, including long-horizon tasks, tabletop rearrangements, and remote supervisory control. To facilitate the adoption of our framework and support the reproduction of our results, we have made our code open-source. You can access it at: https://github.com/huawei-noah/HEBO/tree/master/ROSLLM.

Safe Reinforcement Learning on the Constraint Manifold: Theory and Applications

Apr 13, 2024

Abstract:Integrating learning-based techniques, especially reinforcement learning, into robotics is promising for solving complex problems in unstructured environments. However, most existing approaches are trained in well-tuned simulators and subsequently deployed on real robots without online fine-tuning. In this setting, the simulation's realism seriously impacts the deployment's success rate. Instead, learning with real-world interaction data offers a promising alternative: not only eliminates the need for a fine-tuned simulator but also applies to a broader range of tasks where accurate modeling is unfeasible. One major problem for on-robot reinforcement learning is ensuring safety, as uncontrolled exploration can cause catastrophic damage to the robot or the environment. Indeed, safety specifications, often represented as constraints, can be complex and non-linear, making safety challenging to guarantee in learning systems. In this paper, we show how we can impose complex safety constraints on learning-based robotics systems in a principled manner, both from theoretical and practical points of view. Our approach is based on the concept of the Constraint Manifold, representing the set of safe robot configurations. Exploiting differential geometry techniques, i.e., the tangent space, we can construct a safe action space, allowing learning agents to sample arbitrary actions while ensuring safety. We demonstrate the method's effectiveness in a real-world Robot Air Hockey task, showing that our method can handle high-dimensional tasks with complex constraints. Videos of the real robot experiments are available on the project website (https://puzeliu.github.io/TRO-ATACOM).

Sharing Knowledge in Multi-Task Deep Reinforcement Learning

Jan 17, 2024

Abstract:We study the benefit of sharing representations among tasks to enable the effective use of deep neural networks in Multi-Task Reinforcement Learning. We leverage the assumption that learning from different tasks, sharing common properties, is helpful to generalize the knowledge of them resulting in a more effective feature extraction compared to learning a single task. Intuitively, the resulting set of features offers performance benefits when used by Reinforcement Learning algorithms. We prove this by providing theoretical guarantees that highlight the conditions for which is convenient to share representations among tasks, extending the well-known finite-time bounds of Approximate Value-Iteration to the multi-task setting. In addition, we complement our analysis by proposing multi-task extensions of three Reinforcement Learning algorithms that we empirically evaluate on widely used Reinforcement Learning benchmarks showing significant improvements over the single-task counterparts in terms of sample efficiency and performance.

Towards Transferring Tactile-based Continuous Force Control Policies from Simulation to Robot

Nov 13, 2023

Abstract:The advent of tactile sensors in robotics has sparked many ideas on how robots can leverage direct contact measurements of their environment interactions to improve manipulation tasks. An important line of research in this regard is that of grasp force control, which aims to manipulate objects safely by limiting the amount of force exerted on the object. While prior works have either hand-modeled their force controllers, employed model-based approaches, or have not shown sim-to-real transfer, we propose a model-free deep reinforcement learning approach trained in simulation and then transferred to the robot without further fine-tuning. We therefore present a simulation environment that produces realistic normal forces, which we use to train continuous force control policies. An evaluation in which we compare against a baseline and perform an ablation study shows that our approach outperforms the hand-modeled baseline and that our proposed inductive bias and domain randomization facilitate sim-to-real transfer. Code, models, and supplementary videos are available on https://sites.google.com/view/rl-force-ctrl

Time-Efficient Reinforcement Learning with Stochastic Stateful Policies

Nov 07, 2023Abstract:Stateful policies play an important role in reinforcement learning, such as handling partially observable environments, enhancing robustness, or imposing an inductive bias directly into the policy structure. The conventional method for training stateful policies is Backpropagation Through Time (BPTT), which comes with significant drawbacks, such as slow training due to sequential gradient propagation and the occurrence of vanishing or exploding gradients. The gradient is often truncated to address these issues, resulting in a biased policy update. We present a novel approach for training stateful policies by decomposing the latter into a stochastic internal state kernel and a stateless policy, jointly optimized by following the stateful policy gradient. We introduce different versions of the stateful policy gradient theorem, enabling us to easily instantiate stateful variants of popular reinforcement learning and imitation learning algorithms. Furthermore, we provide a theoretical analysis of our new gradient estimator and compare it with BPTT. We evaluate our approach on complex continuous control tasks, e.g., humanoid locomotion, and demonstrate that our gradient estimator scales effectively with task complexity while offering a faster and simpler alternative to BPTT.

LocoMuJoCo: A Comprehensive Imitation Learning Benchmark for Locomotion

Nov 04, 2023

Abstract:Imitation Learning (IL) holds great promise for enabling agile locomotion in embodied agents. However, many existing locomotion benchmarks primarily focus on simplified toy tasks, often failing to capture the complexity of real-world scenarios and steering research toward unrealistic domains. To advance research in IL for locomotion, we present a novel benchmark designed to facilitate rigorous evaluation and comparison of IL algorithms. This benchmark encompasses a diverse set of environments, including quadrupeds, bipeds, and musculoskeletal human models, each accompanied by comprehensive datasets, such as real noisy motion capture data, ground truth expert data, and ground truth sub-optimal data, enabling evaluation across a spectrum of difficulty levels. To increase the robustness of learned agents, we provide an easy interface for dynamics randomization and offer a wide range of partially observable tasks to train agents across different embodiments. Finally, we provide handcrafted metrics for each task and ship our benchmark with state-of-the-art baseline algorithms to ease evaluation and enable fast benchmarking.

LS-IQ: Implicit Reward Regularization for Inverse Reinforcement Learning

Mar 01, 2023

Abstract:Recent methods for imitation learning directly learn a $Q$-function using an implicit reward formulation rather than an explicit reward function. However, these methods generally require implicit reward regularization to improve stability and often mistreat absorbing states. Previous works show that a squared norm regularization on the implicit reward function is effective, but do not provide a theoretical analysis of the resulting properties of the algorithms. In this work, we show that using this regularizer under a mixture distribution of the policy and the expert provides a particularly illuminating perspective: the original objective can be understood as squared Bellman error minimization, and the corresponding optimization problem minimizes a bounded $\chi^2$-Divergence between the expert and the mixture distribution. This perspective allows us to address instabilities and properly treat absorbing states. We show that our method, Least Squares Inverse Q-Learning (LS-IQ), outperforms state-of-the-art algorithms, particularly in environments with absorbing states. Finally, we propose to use an inverse dynamics model to learn from observations only. Using this approach, we retain performance in settings where no expert actions are available.

Fast Kinodynamic Planning on the Constraint Manifold with Deep Neural Networks

Jan 12, 2023

Abstract:Motion planning is a mature area of research in robotics with many well-established methods based on optimization or sampling the state space, suitable for solving kinematic motion planning. However, when dynamic motions under constraints are needed and computation time is limited, fast kinodynamic planning on the constraint manifold is indispensable. In recent years, learning-based solutions have become alternatives to classical approaches, but they still lack comprehensive handling of complex constraints, such as planning on a lower-dimensional manifold of the task space while considering the robot's dynamics. This paper introduces a novel learning-to-plan framework that exploits the concept of constraint manifold, including dynamics, and neural planning methods. Our approach generates plans satisfying an arbitrary set of constraints and computes them in a short constant time, namely the inference time of a neural network. This allows the robot to plan and replan reactively, making our approach suitable for dynamic environments. We validate our approach on two simulated tasks and in a demanding real-world scenario, where we use a Kuka LBR Iiwa 14 robotic arm to perform the hitting movement in robotic Air Hockey.

Learning-based Design and Control for Quadrupedal Robots with Parallel-Elastic Actuators

Jan 09, 2023Abstract:Parallel-elastic joints can improve the efficiency and strength of robots by assisting the actuators with additional torques. For these benefits to be realized, a spring needs to be carefully designed. However, designing robots is an iterative and tedious process, often relying on intuition and heuristics. We introduce a design optimization framework that allows us to co-optimize a parallel elastic knee joint and locomotion controller for quadrupedal robots with minimal human intuition. We design a parallel elastic joint and optimize its parameters with respect to the efficiency in a model-free fashion. In the first step, we train a design-conditioned policy using model-free Reinforcement Learning, capable of controlling the quadruped in the predefined range of design parameters. Afterwards, we use Bayesian Optimization to find the best design using the policy. We use this framework to optimize the parallel-elastic spring parameters for the knee of our quadrupedal robot ANYmal together with the optimal controller. We evaluate the optimized design and controller in real-world experiments over various terrains. Our results show that the new system improves the torque-square efficiency of the robot by 33% compared to the baseline and reduces maximum joint torque by 30% without compromising tracking performance. The improved design resulted in 11% longer operation time on flat terrain.

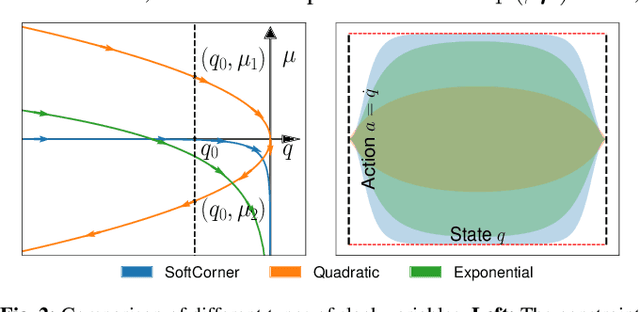

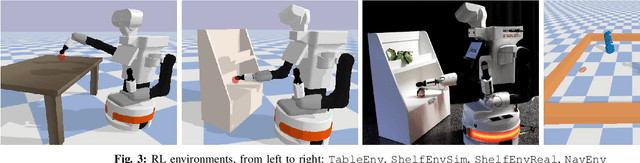

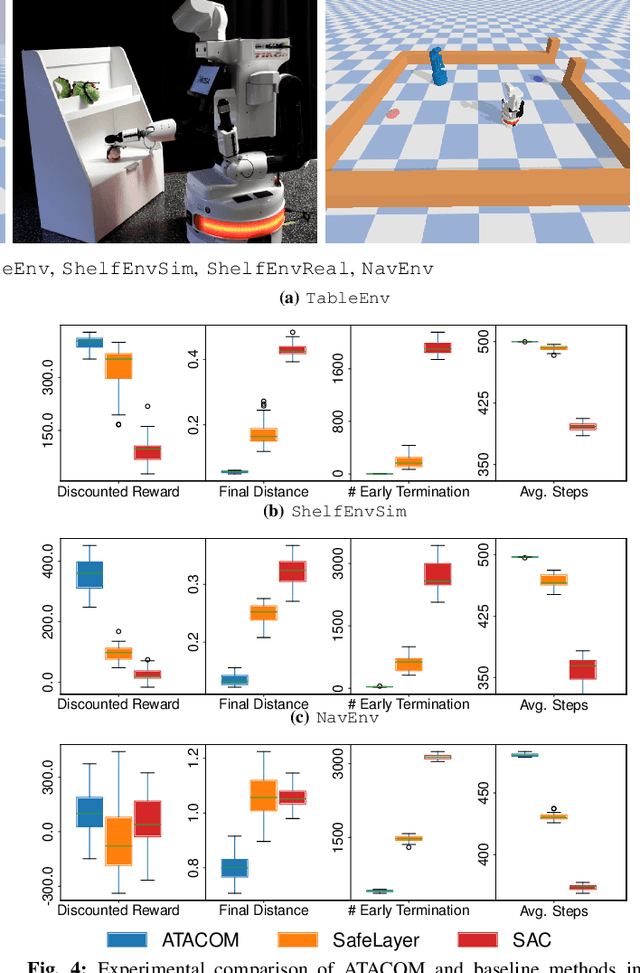

Safe reinforcement learning of dynamic high-dimensional robotic tasks: navigation, manipulation, interaction

Sep 27, 2022

Abstract:Safety is a crucial property of every robotic platform: any control policy should always comply with actuator limits and avoid collisions with the environment and humans. In reinforcement learning, safety is even more fundamental for exploring an environment without causing any damage. While there are many proposed solutions to the safe exploration problem, only a few of them can deal with the complexity of the real world. This paper introduces a new formulation of safe exploration for reinforcement learning of various robotic tasks. Our approach applies to a wide class of robotic platforms and enforces safety even under complex collision constraints learned from data by exploring the tangent space of the constraint manifold. Our proposed approach achieves state-of-the-art performance in simulated high-dimensional and dynamic tasks while avoiding collisions with the environment. We show safe real-world deployment of our learned controller on a TIAGo++ robot, achieving remarkable performance in manipulation and human-robot interaction tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge