Graph Ladling: Shockingly Simple Parallel GNN Training without Intermediate Communication

Jun 18, 2023 · Ajay Jaiswal, Shiwei Liu, Tianlong Chen, Ying Ding, Zhangyang Wang

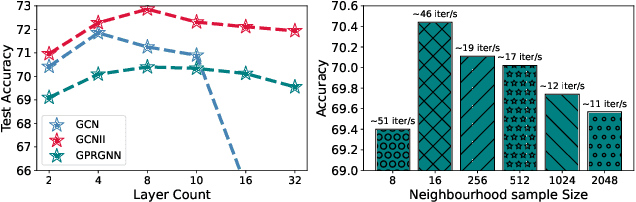

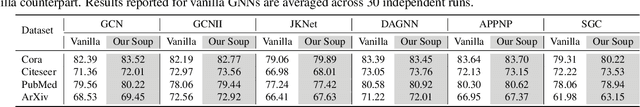

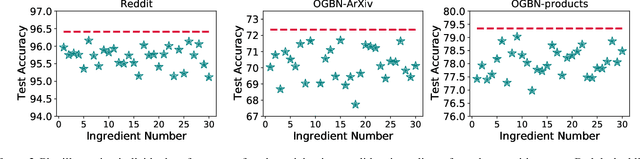

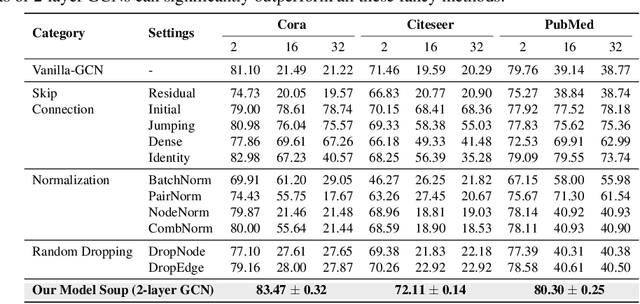

Graphs are omnipresent, and GNNs are a powerful family of neural networks for learning over graphs. Despite their popularity, scaling GNNs by either deepening or widening suffers from prevalent issues of unhealthy gradients, over-smoothing, and information squashing, which often lead to sub-standard performance. In this work, we are interested in exploring a principled way to scale GNN capacity without deepening or widening, which can improve performance across multiple small and large graphs. Motivated by the recent intriguing phenomenon of model soups, which suggests that the fine-tuned weights of multiple large pre-trained language models can be merged into a better minimum, we argue for exploiting the fundamentals of model soups to mitigate the aforementioned issues of memory bottleneck and trainability during GNN scaling. More specifically, we propose not to deepen or widen current GNNs, but instead present a data-centric perspective of model soups tailored for GNNs: build powerful GNNs by dividing giant graph data, training multiple comparatively weaker GNNs on the pieces independently and in parallel without any intermediate communication, and combining their strengths using a greedy interpolation soup procedure to achieve state-of-the-art performance. Moreover, we provide a wide variety of model soup preparation techniques by leveraging state-of-the-art graph sampling and graph partitioning approaches that can handle large graph data structures. Our extensive experiments across many real-world small and large graphs illustrate the effectiveness of our approach and point towards a promising orthogonal direction for GNN scaling. Codes are available at: https://github.com/VITA-Group/graph_ladling.
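To make the soup step in the abstract above concrete, here is a minimal sketch of a greedy soup over independently trained candidates. It is an illustration under stated assumptions, not the authors' implementation: the names `average_weights`, `greedy_soup`, and the `score` callback are hypothetical, weights are plain Python lists, and accepted ingredients are averaged uniformly rather than with searched interpolation ratios.

```python
from typing import Callable, Dict, List

# Assumption: a "model" is a dict mapping parameter names to flat lists of floats.
Weights = Dict[str, List[float]]


def average_weights(models: List[Weights]) -> Weights:
    """Uniformly average parameters that share the same names and shapes."""
    n = len(models)
    return {
        name: [sum(m[name][i] for m in models) / n for i in range(len(values))]
        for name, values in models[0].items()
    }


def greedy_soup(candidates: List[Weights], score: Callable[[Weights], float]) -> Weights:
    """Visit candidates from best to worst; keep one only if the soup's score does not drop.

    `score` is a hypothetical held-out metric (higher is better).
    """
    ranked = sorted(candidates, key=score, reverse=True)
    ingredients = [ranked[0]]
    best = score(ranked[0])
    for candidate in ranked[1:]:
        trial = average_weights(ingredients + [candidate])
        trial_score = score(trial)
        if trial_score >= best:
            ingredients.append(candidate)
            best = trial_score
    return average_weights(ingredients)
```

In this sketch each candidate would be trained on its own graph partition or sample with no communication between workers; only the final weights are brought together for the soup.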

Instant Soup: Cheap Pruning Ensembles in A Single Pass Can Draw Lottery Tickets from Large Models

Jun 18, 2023 · Ajay Jaiswal, Shiwei Liu, Tianlong Chen, Ying Ding, Zhangyang Wang

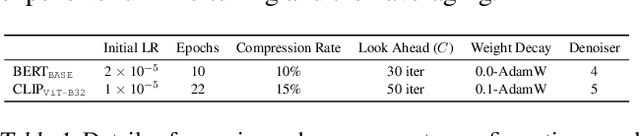

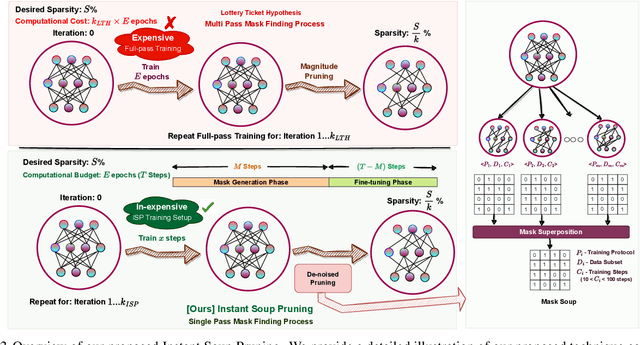

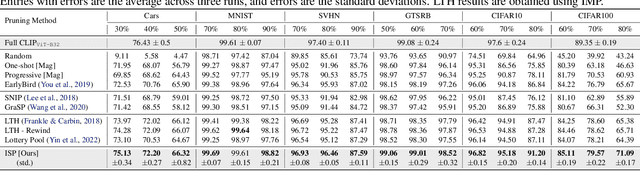

Large pre-trained transformers have received explosive attention in the past few years, due to their wide adaptability to numerous downstream applications via fine-tuning, but their exponentially increasing parameter counts are becoming a primary hurdle to even fine-tuning them without industry-standard hardware. Recently, the Lottery Ticket Hypothesis (LTH) and its variants have been exploited to prune these large pre-trained models, generating subnetworks that can achieve performance similar to their dense counterparts, but the practicality of LTH is severely limited by the repetitive full training and pruning routine of iterative magnitude pruning (IMP), which worsens with increasing model size. Motivated by recent observations on model soups, which suggest that the fine-tuned weights of multiple models can be merged into a better minimum, we propose Instant Soup Pruning (ISP) to generate lottery-ticket-quality subnetworks at a fraction of the original IMP cost, by replacing the expensive intermediate pruning stages of IMP with a computationally efficient weak mask generation and aggregation routine. More specifically, during the mask generation stage, ISP takes a small handful of iterations using varying training protocols and data subsets to generate many weak and noisy subnetworks, and superposes them to average out the noise, creating a high-quality denoised subnetwork. Our extensive experiments and ablations on two popular large-scale pre-trained models, CLIP (unexplored in pruning to date) and BERT, across multiple benchmark vision and language datasets validate the effectiveness of ISP compared to several state-of-the-art pruning methods. Codes are available at: https://github.com/VITA-Group/instant_soup
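The mask aggregation idea described above can be sketched as a simple vote over weak binary masks. This is only an illustration under assumptions: `aggregate_masks` is a hypothetical helper, masks are flat lists of 0/1 values, and the aggregation rule (average the masks, then keep the most-voted positions) is a stand-in for whatever rule the released code actually uses.

```python
from typing import List


def aggregate_masks(masks: List[List[int]], sparsity: float) -> List[int]:
    """Average several weak binary masks and keep the positions with the most votes.

    masks    : weak masks of equal length (1 = keep the weight, 0 = prune it)
    sparsity : fraction of positions to prune in the aggregated mask
    """
    n = len(masks[0])
    # Per-position vote: the fraction of weak masks that kept this weight.
    votes = [sum(mask[i] for mask in masks) / len(masks) for i in range(n)]
    n_keep = n - int(n * sparsity)
    # Ties are broken by position because Python's sort is stable.
    keep = set(sorted(range(n), key=lambda i: votes[i], reverse=True)[:n_keep])
    return [1 if i in keep else 0 for i in range(n)]
```

Averaging before thresholding is what lets noise in any single weak mask cancel out: a position survives only if most of the cheap runs agree that it matters.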

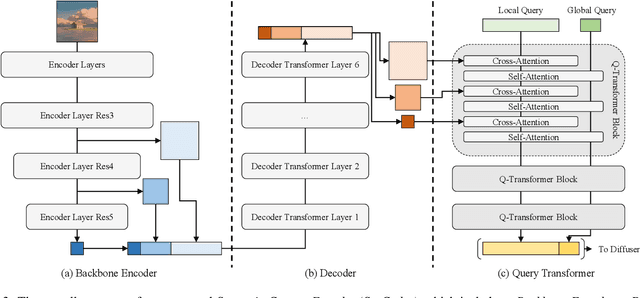

Learning to Estimate 6DoF Pose from Limited Data: A Few-Shot, Generalizable Approach using RGB Images

Jun 13, 2023 · Panwang Pan, Zhiwen Fan, Brandon Y. Feng, Peihao Wang, Chenxin Li, Zhangyang Wang

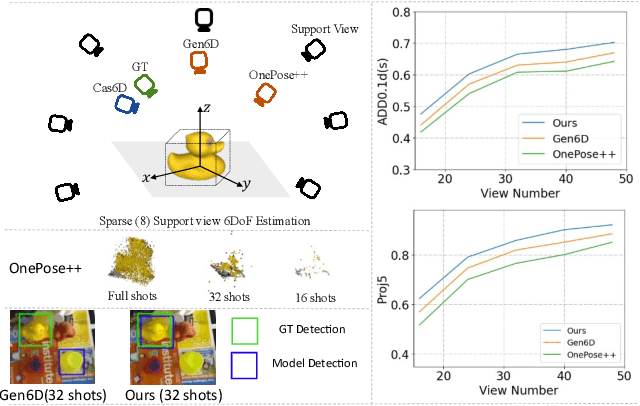

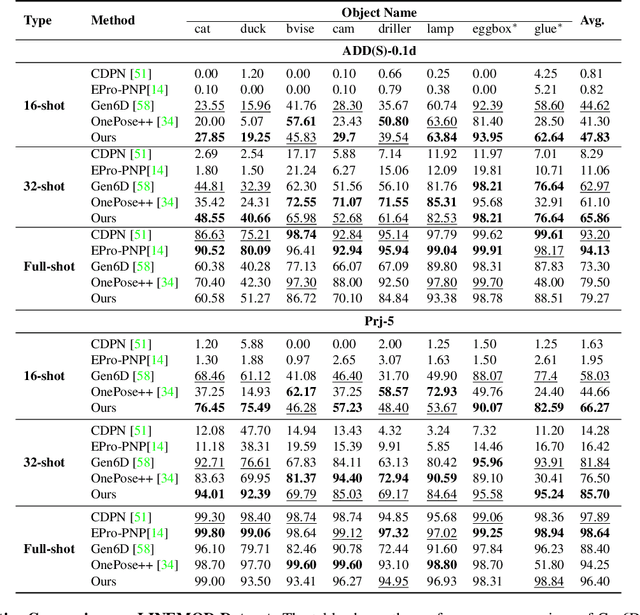

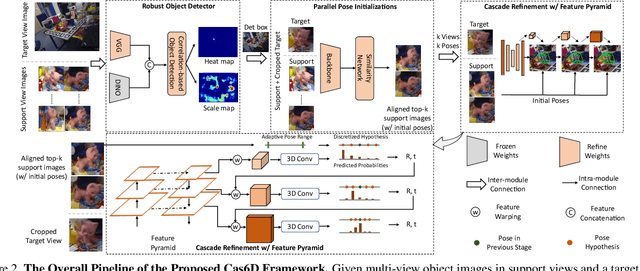

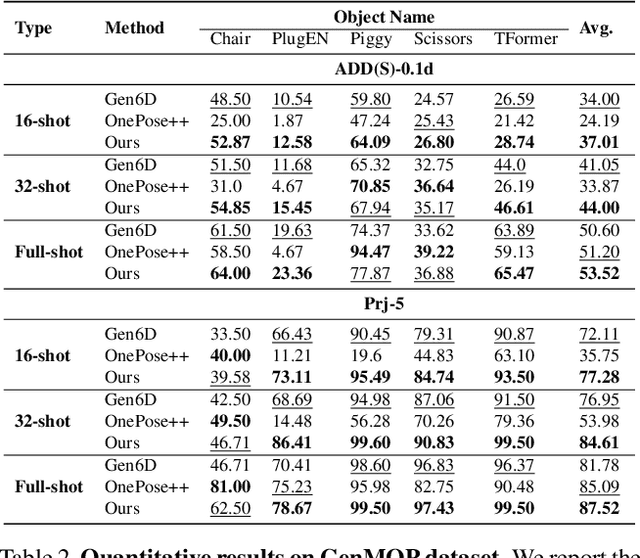

The accurate estimation of six degrees-of-freedom (6DoF) object poses is essential for many applications in robotics and augmented reality. However, existing methods for 6DoF pose estimation often depend on CAD templates or dense support views, restricting their usefulness in real-world situations. In this study, we present a new cascade framework named Cas6D for few-shot 6DoF pose estimation that is generalizable and uses only RGB images. To address the false positives of target object detection in the extreme few-shot setting, our framework utilizes a self-supervised pre-trained ViT to learn robust feature representations. Then, we initialize the nearest top-K pose candidates based on similarity score and refine the initial poses using feature pyramids to formulate and update the cascade warped feature volume, which encodes context at increasingly finer scales. By discretizing the pose search range using multiple pose bins and progressively narrowing the pose search range in each stage using predictions from the previous stage, Cas6D can overcome the large gap between pose candidates and ground truth poses, which is a common failure mode in sparse-view scenarios. Experimental results on the LINEMOD and GenMOP datasets demonstrate that Cas6D outperforms state-of-the-art methods by 9.2% and 3.8% accuracy (Proj-5) under the 32-shot setting compared to OnePose++ and Gen6D.
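The bin-based narrowing described above can be illustrated with a one-dimensional toy. The sketch below is an assumption-laden simplification, not Cas6D itself: it searches a single rotation angle instead of a full 6DoF pose, and `cascade_search` and its `score` callback are hypothetical stand-ins for the learned, feature-volume-based scoring.

```python
from typing import Callable


def cascade_search(score: Callable[[float], float], lo: float, hi: float,
                   n_bins: int = 8, n_stages: int = 3) -> float:
    """Coarse-to-fine search: split the range into bins, pick the best, then narrow.

    score : hypothetical function rating a candidate angle (higher is better)
    lo/hi : initial search range, e.g. -180 to 180 degrees
    """
    best = (lo + hi) / 2.0
    for _ in range(n_stages):
        width = (hi - lo) / n_bins
        centers = [lo + (k + 0.5) * width for k in range(n_bins)]
        best = max(centers, key=score)
        # The next stage searches one bin-width on either side of the current best.
        lo, hi = best - width, best + width
    return best
```

With 8 bins and 3 stages over a 360 degree range, the final bin width is under 3 degrees, which is how a cascade can close a large initial gap without evaluating a dense grid at the finest resolution.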

The Emergence of Essential Sparsity in Large Pre-trained Models: The Weights that Matter

Jun 06, 2023 · Ajay Jaiswal, Shiwei Liu, Tianlong Chen, Zhangyang Wang

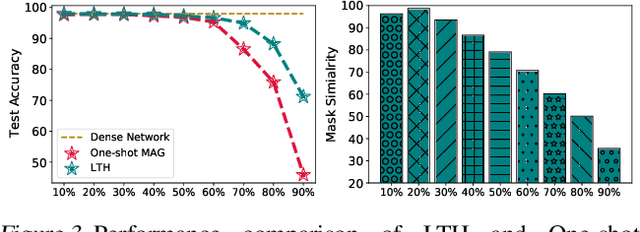

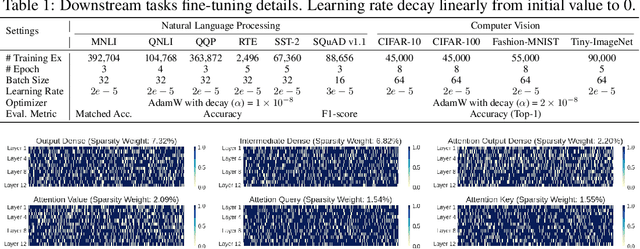

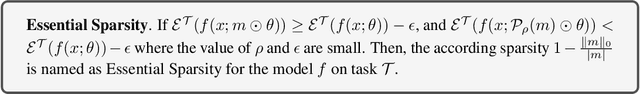

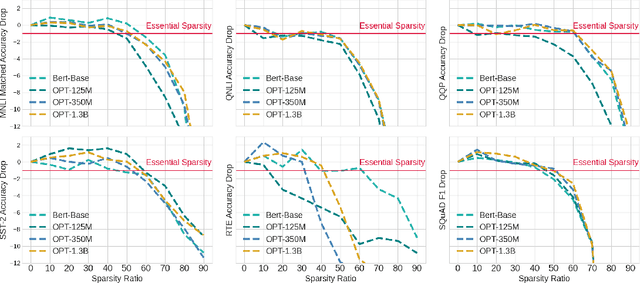

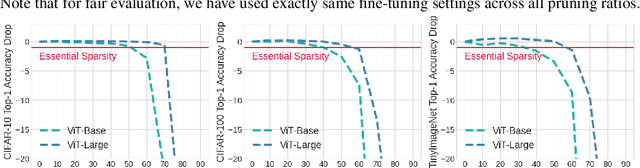

Large pre-trained transformers are the show-stealers of modern-day deep learning, and it becomes crucial to comprehend the parsimonious patterns that exist within them as they grow in scale. With exploding parameter counts, the Lottery Ticket Hypothesis (LTH) and its variants have lost their practicality for sparsifying them, due to the high computation and memory bottleneck of the repetitive train-prune-retrain routine of iterative magnitude pruning (IMP), which worsens with increasing model size. In this paper, we comprehensively study induced sparse patterns across multiple large pre-trained vision and language transformers. We propose the existence of "essential sparsity", defined by a sharp dropping point in the sparsity-performance curve beyond which performance declines much faster with respect to the rise of the sparsity level when we directly remove the weights with the smallest magnitudes in one shot. We also present an intriguing emerging phenomenon of abrupt sparsification during the pre-training of BERT, i.e., BERT suddenly becomes heavily sparse in pre-training after certain iterations. Moreover, our observations also indicate a counter-intuitive finding that BERT trained with a larger amount of pre-training data tends to have a better ability to condense knowledge into comparatively fewer parameters. Lastly, we investigate the effect of the pre-training loss on essential sparsity and discover that self-supervised learning (SSL) objectives trigger stronger emergent sparsification properties than supervised learning (SL). Our codes are available at https://github.com/VITA-Group/essential_sparsity.
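One-shot magnitude pruning, the operation behind the sparsity-performance curve discussed above, is simple enough to sketch directly. The helper name `one_shot_magnitude_prune` and the flat-list representation are illustrative assumptions; real models would prune tensors, typically layer by layer or globally.

```python
from typing import List


def one_shot_magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the smallest-magnitude weights in a single pass, with no retraining.

    sparsity : fraction of weights to remove, between 0.0 and 1.0
    """
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]
```

Sweeping `sparsity` over a grid and evaluating the pruned model at each level would trace out the curve in which the abstract locates the sharp dropping point.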

Dynamic Sparsity Is Channel-Level Sparsity Learner

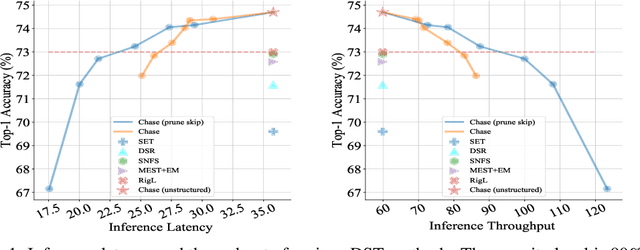

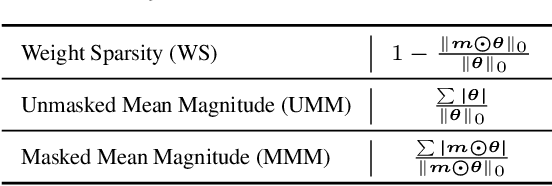

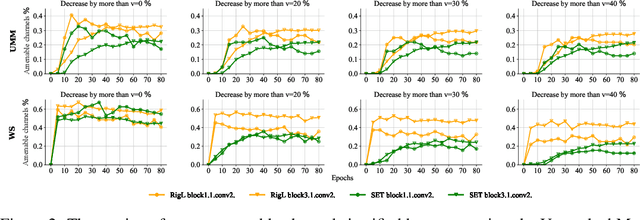

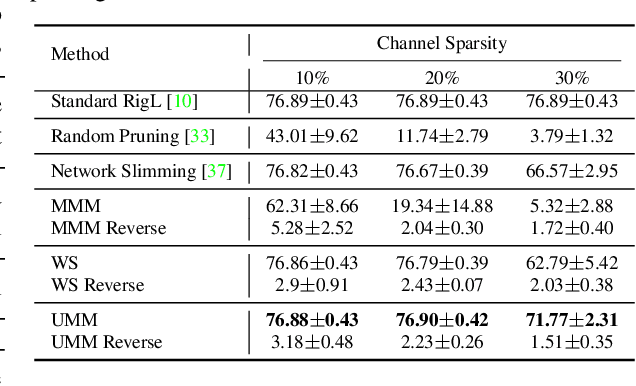

May 30, 2023 · Lu Yin, Gen Li, Meng Fang, Li Shen, Tianjin Huang, Zhangyang Wang, Vlado Menkovski, Xiaolong Ma, Mykola Pechenizkiy, Shiwei Liu

Sparse training has received surging interest in machine learning due to its tantalizing saving potential for the entire training process as well as inference. Dynamic sparse training (DST), as a leading sparse training approach, can train deep neural networks at high sparsity from scratch to match the performance of their dense counterparts. However, most if not all prior DST work demonstrates its effectiveness on unstructured sparsity with highly irregular sparse patterns, which receives limited support in common hardware. This limitation hinders the usage of DST in practice. In this paper, we propose Channel-aware dynamic sparse (Chase), which for the first time seamlessly translates the promise of unstructured dynamic sparsity to GPU-friendly channel-level sparsity (not fine-grained N:M or group sparsity) during one end-to-end training process, without any ad-hoc operations. The resulting small sparse networks can be directly accelerated by commodity hardware, without using any sparsity-aware hardware accelerators. This appealing outcome is partially motivated by a hidden phenomenon of dynamic sparsity: off-the-shelf unstructured DST implicitly involves biased parameter reallocation across channels, with a large fraction of channels (up to 60%) being sparser than others. By progressively identifying and removing these channels during training, our approach translates unstructured sparsity to channel-wise sparsity. Our experimental results demonstrate that Chase achieves a 1.7x inference throughput speedup on common GPU devices without compromising accuracy with ResNet-50 on ImageNet. Our code is released at https://github.com/luuyin/chase.
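The step of identifying and removing the sparser channels can be sketched as follows. This is a rough illustration under assumptions, not the Chase algorithm: `channel_density` and `drop_sparsest_channels` are hypothetical helpers, each channel is a flat row of 0/1 mask entries, and plain mask density is used as the selection criterion where the released code may use a different channel-importance measure.

```python
from typing import List


def channel_density(mask: List[List[int]]) -> List[float]:
    """Fraction of weights still active in each channel (one row per channel)."""
    return [sum(row) / len(row) for row in mask]


def drop_sparsest_channels(mask: List[List[int]], n_drop: int) -> List[int]:
    """Return the indices of the channels kept after removing the n_drop sparsest ones."""
    density = channel_density(mask)
    # Channels ordered from least to most dense; the first n_drop are removed.
    dropped = set(sorted(range(len(mask)), key=lambda c: density[c])[:n_drop])
    return [c for c in range(len(mask)) if c not in dropped]
```

Removing whole channels shrinks the layer to a smaller dense one, which is why the result can run faster on ordinary GPUs without sparse kernels.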

Are Large Kernels Better Teachers than Transformers for ConvNets?

May 30, 2023 · Tianjin Huang, Lu Yin, Zhenyu Zhang, Li Shen, Meng Fang, Mykola Pechenizkiy, Zhangyang Wang, Shiwei Liu

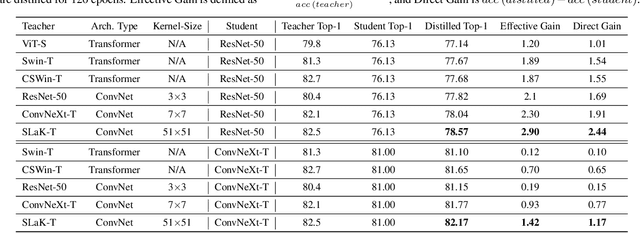

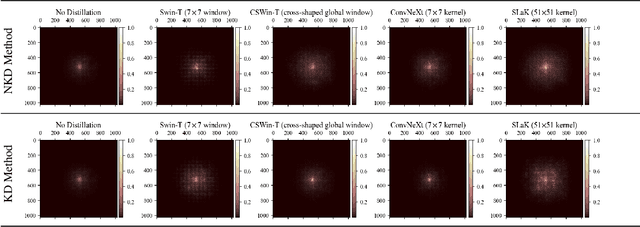

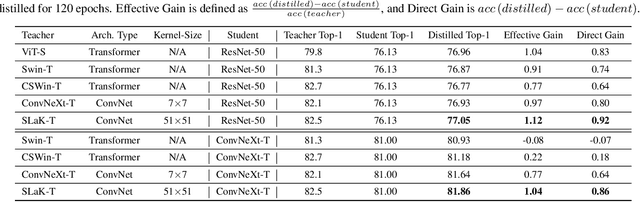

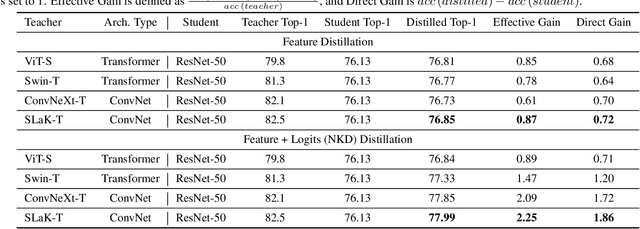

This paper reveals a new appeal of the recently emerged large-kernel Convolutional Neural Networks (ConvNets): as the teacher in Knowledge Distillation (KD) for small-kernel ConvNets. While Transformers have led state-of-the-art (SOTA) performance in various fields with ever-larger models and labeled data, small-kernel ConvNets are considered more suitable for resource-limited applications due to the efficient convolution operation and compact weight sharing. KD is widely used to boost the performance of small-kernel ConvNets. However, previous research shows that it is not quite effective to distill knowledge (e.g., global information) from Transformers to small-kernel ConvNets, presumably due to their disparate architectures. We hereby carry out a first-of-its-kind study unveiling that modern large-kernel ConvNets, a compelling competitor to Vision Transformers, are remarkably more effective teachers for small-kernel ConvNets, due to more similar architectures. Our findings are backed up by extensive experiments on both logit-level and feature-level KD "out of the box", with no dedicated architectural or training recipe modifications. Notably, we obtain the **best-ever pure ConvNet** under 30M parameters with **83.1%** top-1 accuracy on ImageNet, outperforming current SOTA methods including ConvNeXt V2 and Swin V2. We also find that beneficial characteristics of large-kernel ConvNets, e.g., larger effective receptive fields, can be seamlessly transferred to students through this large-to-small kernel distillation. Code is available at: https://github.com/VITA-Group/SLaK.

* Accepted by ICML 2023
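For readers unfamiliar with logit-level KD as mentioned in the abstract above, the following is a minimal sketch of the standard temperature-scaled distillation loss. It is the generic textbook formulation, assumed here for illustration and not taken from the paper's code; `softmax` and `kd_loss` are hypothetical helper names operating on plain lists of logits.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over temperature-scaled logits."""
    m = max(logits)
    exps = [math.exp((z - m) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits: List[float], teacher_logits: List[float],
            temperature: float = 1.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)
    return temperature * temperature * kl
```

In practice this term is usually combined with the ordinary cross-entropy loss on ground-truth labels; the weighting between the two is a training hyperparameter.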

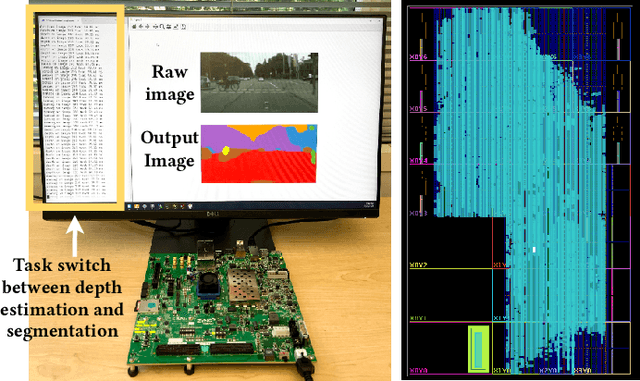

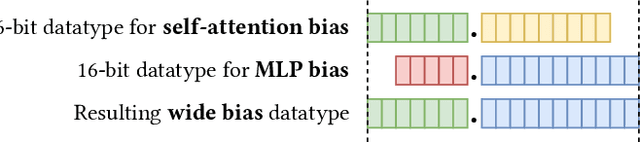

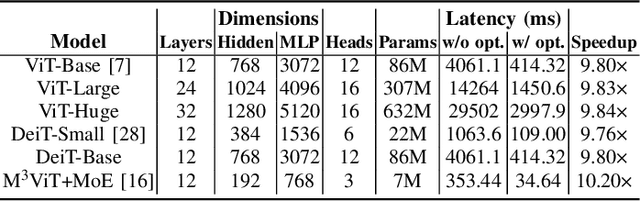

Edge-MoE: Memory-Efficient Multi-Task Vision Transformer Architecture with Task-level Sparsity via Mixture-of-Experts

May 30, 2023 · Rishov Sarkar, Hanxue Liang, Zhiwen Fan, Zhangyang Wang, Cong Hao

Computer vision researchers are embracing two promising paradigms: Vision Transformers (ViTs) and Multi-task Learning (MTL), which both show great performance but are computation-intensive, given the quadratic complexity of self-attention in ViT and the need to activate an entire large MTL model for one task. M$^3$ViT is the latest multi-task ViT model that introduces mixture-of-experts (MoE), where only a small portion of subnetworks ("experts") are sparsely and dynamically activated based on the current task. M$^3$ViT achieves better accuracy and over 80% computation reduction but leaves challenges for efficient deployment on FPGA. Our work, dubbed Edge-MoE, addresses these challenges and introduces the first end-to-end FPGA accelerator for multi-task ViT with a collection of architectural innovations, including (1) a novel reordering mechanism for self-attention, which requires only constant bandwidth regardless of the target parallelism; (2) a fast single-pass softmax approximation; (3) an accurate and low-cost GELU approximation; (4) a unified and flexible computing unit that is shared by almost all computational layers to maximally reduce resource usage; and (5) uniquely for M$^3$ViT, a novel patch reordering method to eliminate memory access overhead. Edge-MoE achieves 2.24x and 4.90x better energy efficiency compared with GPU and CPU, respectively. A real-time video demonstration is available online, along with our code written using High-Level Synthesis, which will be open-sourced.

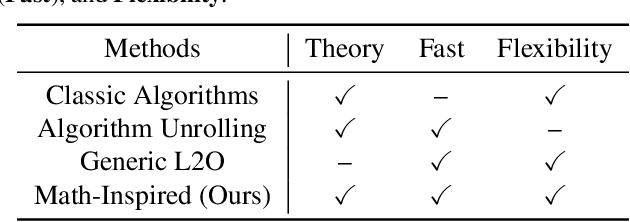

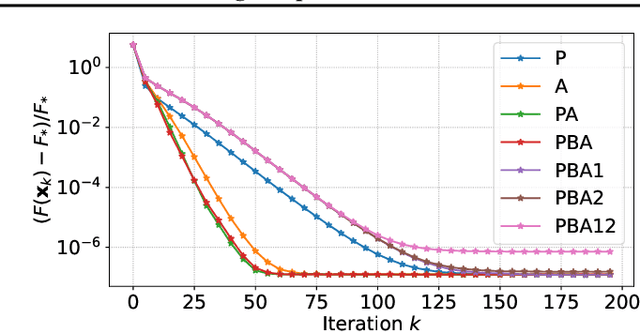

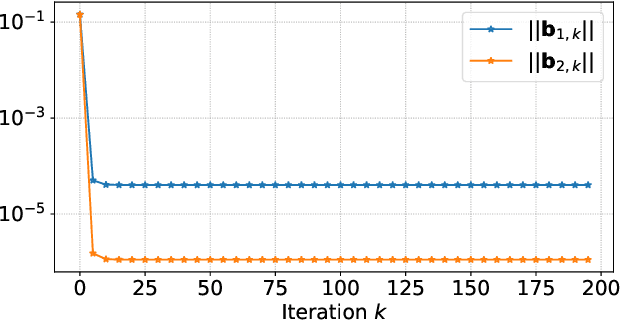

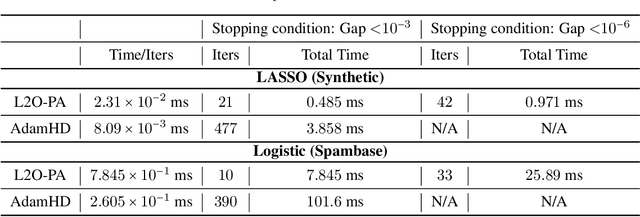

Towards Constituting Mathematical Structures for Learning to Optimize

May 29, 2023 · Jialin Liu, Xiaohan Chen, Zhangyang Wang, Wotao Yin, HanQin Cai

Learning to Optimize (L2O), a technique that utilizes machine learning to learn an optimization algorithm automatically from data, has gained increasing attention in recent years. A generic L2O approach parameterizes the iterative update rule and learns the update direction as a black-box network. While the generic approach is widely applicable, the learned model can overfit and may not generalize well to out-of-distribution test sets. In this paper, we derive the basic mathematical conditions that successful update rules commonly satisfy. Consequently, we propose a novel L2O model with a mathematics-inspired structure that is broadly applicable and generalizes well to out-of-distribution problems. Numerical simulations validate our theoretical findings and demonstrate the superior empirical performance of the proposed L2O model.

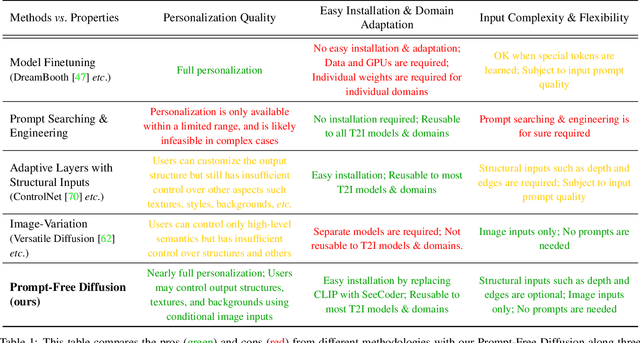

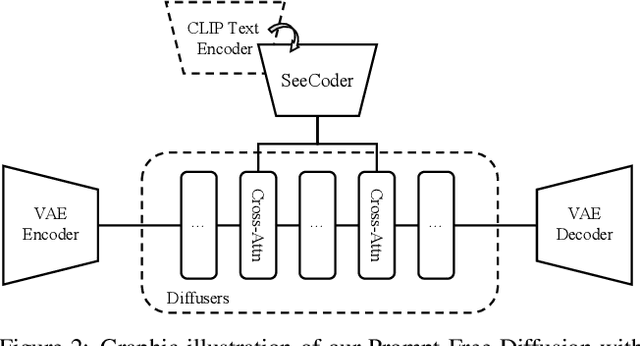

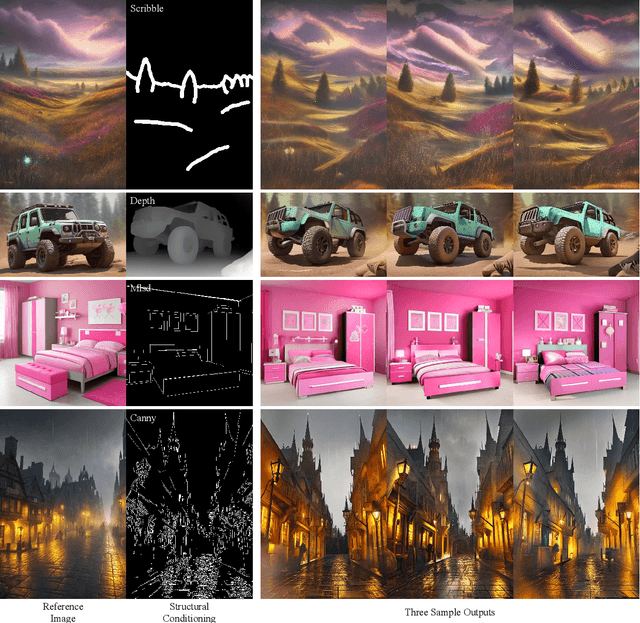

Prompt-Free Diffusion: Taking "Text" out of Text-to-Image Diffusion Models

May 25, 2023 · Xingqian Xu, Jiayi Guo, Zhangyang Wang, Gao Huang, Irfan Essa, Humphrey Shi

Text-to-image (T2I) research has grown explosively in the past year, owing to large-scale pre-trained diffusion models and many emerging personalization and editing approaches. Yet one pain point persists: text prompt engineering. Searching for high-quality text prompts for customized results is more art than science. Moreover, as commonly argued, "an image is worth a thousand words": the attempt to describe a desired image with text often ends up being ambiguous and cannot comprehensively cover delicate visual details, hence necessitating additional controls from the visual domain. In this paper, we take a bold step forward: taking "Text" out of a pre-trained T2I diffusion model, to reduce the burdensome prompt engineering efforts for users. Our proposed framework, Prompt-Free Diffusion, relies on only visual inputs to generate new images: it takes a reference image as "context", an optional image structural conditioning, and an initial noise, with absolutely no text prompt. The core architecture behind the scenes is the Semantic Context Encoder (SeeCoder), which substitutes for the commonly used CLIP-based or LLM-based text encoder. The reusability of SeeCoder also makes it a convenient drop-in component: one can pre-train a SeeCoder in one T2I model and reuse it for another. Through extensive experiments, Prompt-Free Diffusion is found to (i) outperform prior exemplar-based image synthesis approaches; (ii) perform on par with state-of-the-art T2I models using prompts following the best practice; and (iii) be naturally extensible to other downstream applications such as anime figure generation and virtual try-on, with promising quality. Our code and models are open-sourced at https://github.com/SHI-Labs/Prompt-Free-Diffusion.

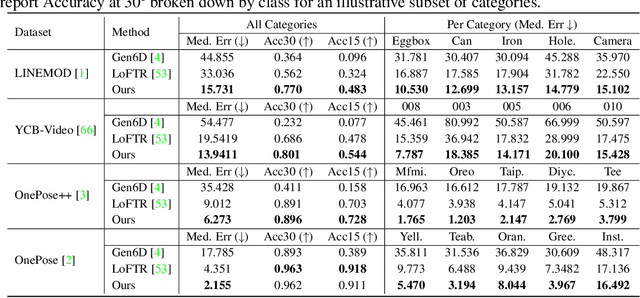

POPE: 6-DoF Promptable Pose Estimation of Any Object, in Any Scene, with One Reference

May 25, 2023 · Zhiwen Fan, Panwang Pan, Peihao Wang, Yifan Jiang, Dejia Xu, Hanwen Jiang, Zhangyang Wang

Despite the significant progress in six degrees-of-freedom (6DoF) object pose estimation, existing methods have limited applicability in real-world scenarios involving embodied agents and downstream 3D vision tasks. These limitations mainly come from the necessity of 3D models, closed-category detection, and a large number of densely annotated support views. To mitigate these issues, we propose a general paradigm for object pose estimation, called Promptable Object Pose Estimation (POPE). POPE enables zero-shot 6DoF object pose estimation for any target object in any scene, while only a single reference is adopted as the support view. To achieve this, POPE leverages the power of a pre-trained large-scale 2D foundation model and employs a framework with hierarchical feature representation and 3D geometry principles. Moreover, it estimates the relative camera pose between object prompts and the target object in new views, enabling both two-view and multi-view 6DoF pose estimation tasks. Comprehensive experimental results demonstrate that POPE exhibits unrivaled robust performance in zero-shot settings, achieving a significant reduction in the averaged Median Pose Error of 52.38% and 50.47% on the LINEMOD and OnePose datasets, respectively. We also conduct more challenging tests on casually captured images (see Figure 1), which further demonstrate the robustness of POPE. The project page can be found at https://paulpanwang.github.io/POPE/.
