Yong Xu

Contrastive Meta Learning with Behavior Multiplicity for Recommendation

Feb 17, 2022

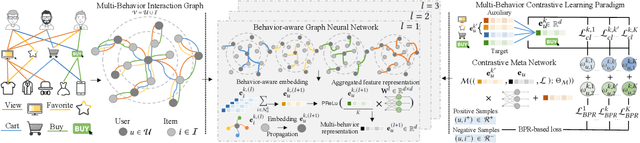

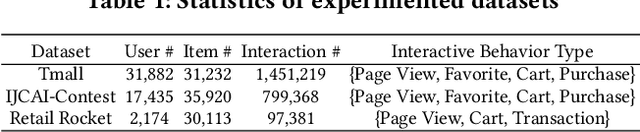

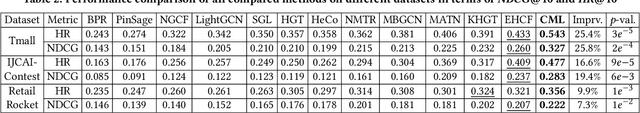

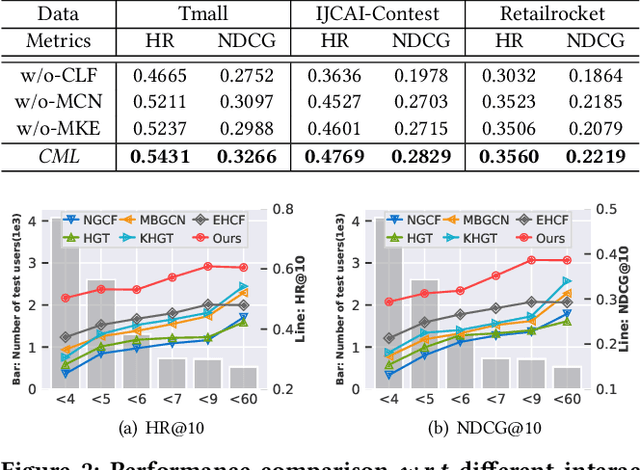

Abstract: A well-informed recommendation framework can not only help users identify items of interest, but also benefit the revenue of various online platforms (e.g., e-commerce, social media). Traditional recommendation models usually assume that only a single type of interaction exists between user and item, and fail to model the multiplex user-item relationships from multi-typed user behavior data, such as page view, add-to-favourite and purchase. While some recent studies propose to capture the dependencies across different types of behaviors, two important challenges have been less explored: i) Dealing with the sparse supervision signal under target behaviors (e.g., purchase). ii) Capturing the personalized multi-behavior patterns with customized dependency modeling. To tackle the above challenges, we devise a new model, Contrastive Meta Learning (CML), to maintain dedicated cross-type behavior dependency for different users. In particular, we propose a multi-behavior contrastive learning framework to distill transferable knowledge across different types of behaviors via the constructed contrastive loss. In addition, to capture the diverse multi-behavior patterns, we design a contrastive meta network to encode the customized behavior heterogeneity for different users. Extensive experiments on three real-world datasets indicate that our method consistently outperforms various state-of-the-art recommendation methods. Our empirical studies further suggest that the contrastive meta learning paradigm offers great potential for capturing the behavior multiplicity in recommendation. We release our model implementation at: https://github.com/weiwei1206/CML.git.
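
The abstract above does not give the form of its contrastive loss. As a rough illustration of the general idea only, the sketch below uses a standard InfoNCE-style loss, which is an assumption and not necessarily what CML implements. It treats a user's embeddings under two behavior types as a positive pair and other users' embeddings as negatives. The names `info_nce`, `target_views`, `auxiliary_views` and the `temperature` value are hypothetical.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))


def info_nce(target_views, auxiliary_views, temperature=0.5):
    """Average InfoNCE loss over users (illustrative, not the paper's code).

    target_views[i] and auxiliary_views[i] are embeddings of user i under
    two behavior types (e.g. purchase and page view). The same user's two
    embeddings form the positive pair; other users act as negatives.
    """
    total = 0.0
    for i, anchor in enumerate(target_views):
        logits = [cosine(anchor, other) / temperature for other in auxiliary_views]
        # Numerically stable log-sum-exp for the softmax denominator.
        max_logit = max(logits)
        log_denominator = max_logit + math.log(
            sum(math.exp(x - max_logit) for x in logits)
        )
        # Negative log-probability of picking the positive pair.
        total += log_denominator - logits[i]
    return total / len(target_views)


# Toy embeddings: each user's two behavior views point in similar directions.
purchase = [[1.0, 0.0], [0.0, 1.0]]
page_view = [[0.9, 0.1], [0.1, 0.9]]
aligned_loss = info_nce(purchase, page_view)
mismatched_loss = info_nce(purchase, page_view[::-1])
```

In this toy setup the loss is lower when each user's two behavior views agree than when the views are swapped between users, which is the signal such a loss would use to transfer knowledge from dense auxiliary behaviors to the sparse target behavior.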

Collaborative Reflection-Augmented Autoencoder Network for Recommender Systems

Jan 10, 2022

Abstract: As deep learning techniques have expanded to real-world recommendation tasks, many deep neural network based Collaborative Filtering (CF) models have been developed to project user-item interactions into latent feature space, based on various neural architectures, such as multi-layer perceptron, auto-encoder and graph neural networks. However, the majority of existing collaborative filtering systems are not well designed to handle missing data. In particular, to inject negative signals in the training phase, these solutions largely rely on negative sampling from unobserved user-item interactions and simply treat them as negative instances, which degrades recommendation performance. To address these issues, we develop a Collaborative Reflection-Augmented Autoencoder Network (CRANet) that is capable of exploring transferable knowledge from observed and unobserved user-item interactions. The network architecture of CRANet is formed of an integrative structure with a reflective receptor network and an information fusion autoencoder module, which endows our recommendation framework with the ability to encode users' implicit pairwise preferences on both interacted and non-interacted items. Additionally, a parametric regularization-based tied-weight scheme is designed to perform robust joint training of the two-stage CRANet model. Finally, we experimentally validate CRANet on four diverse benchmark datasets corresponding to two recommendation tasks, to show that debiasing the negative signals of user-item interactions improves the performance as compared to various state-of-the-art recommendation techniques. Our source code is available at https://github.com/akaxlh/CRANet.

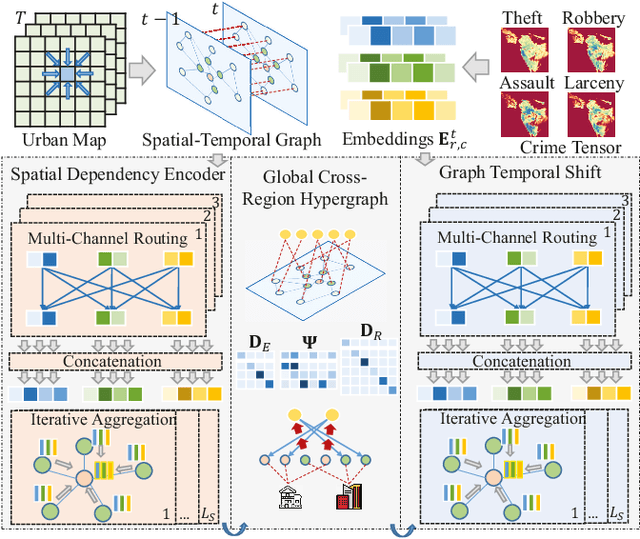

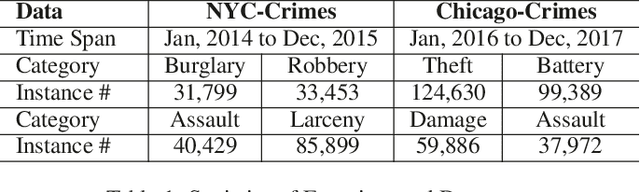

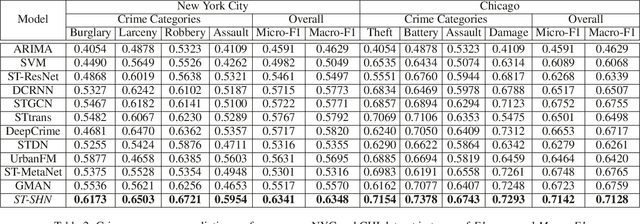

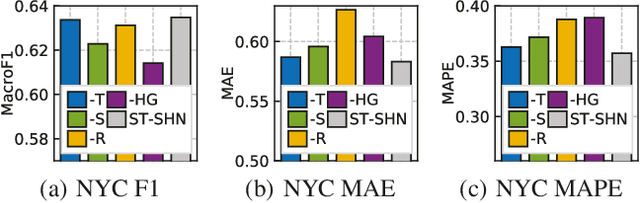

Spatial-Temporal Sequential Hypergraph Network for Crime Prediction

Jan 07, 2022

Abstract: Crime prediction is crucial for public safety and resource optimization, yet is very challenging due to two aspects: i) the dynamics of criminal patterns across time and space, since crime events are distributed unevenly across both spatial and temporal domains; ii) time-evolving dependencies between different types of crimes (e.g., Theft, Robbery, Assault, Damage) which reveal fine-grained semantics of crimes. To tackle these challenges, we propose Spatial-Temporal Sequential Hypergraph Network (ST-SHN) to collectively encode complex crime spatial-temporal patterns as well as the underlying category-wise crime semantic relationships. Specifically, to handle spatial-temporal dynamics under the long-range and global context, we design a graph-structured message passing architecture with the integration of the hypergraph learning paradigm. To capture category-wise crime heterogeneous relations in a dynamic environment, we introduce a multi-channel routing mechanism to learn the time-evolving structural dependency across crime types. We conduct extensive experiments on two real-world datasets, showing that our proposed ST-SHN framework can significantly improve the prediction performance as compared to various state-of-the-art baselines. The source code is available at: https://github.com/akaxlh/ST-SHN.

Multi-Behavior Enhanced Recommendation with Cross-Interaction Collaborative Relation Modeling

Jan 07, 2022

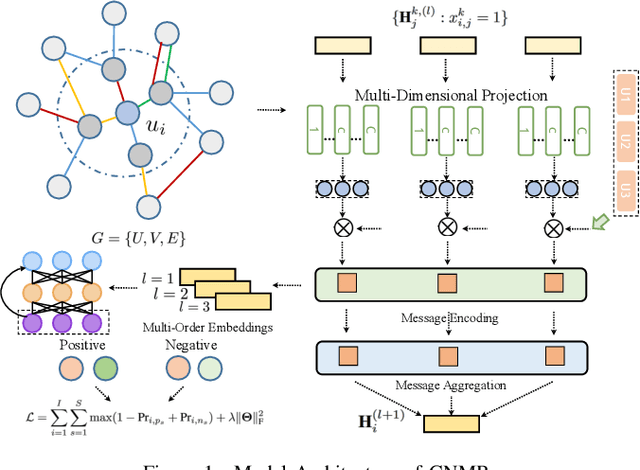

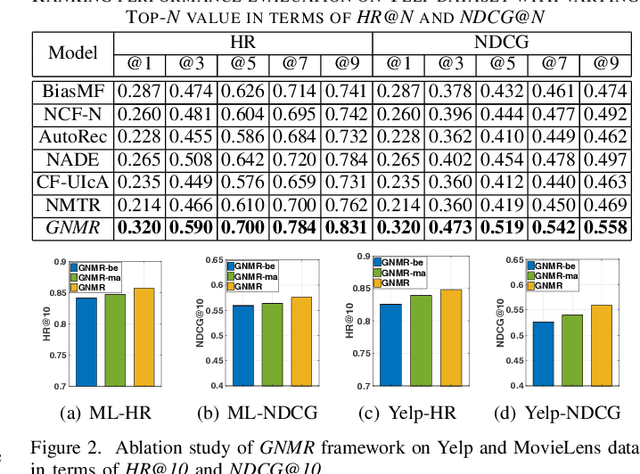

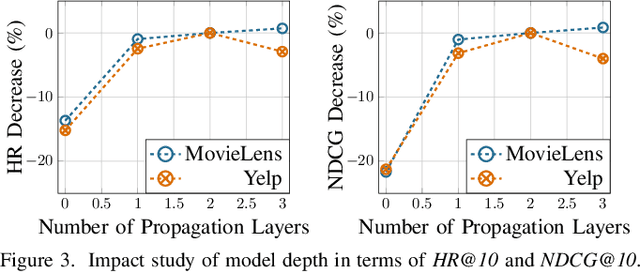

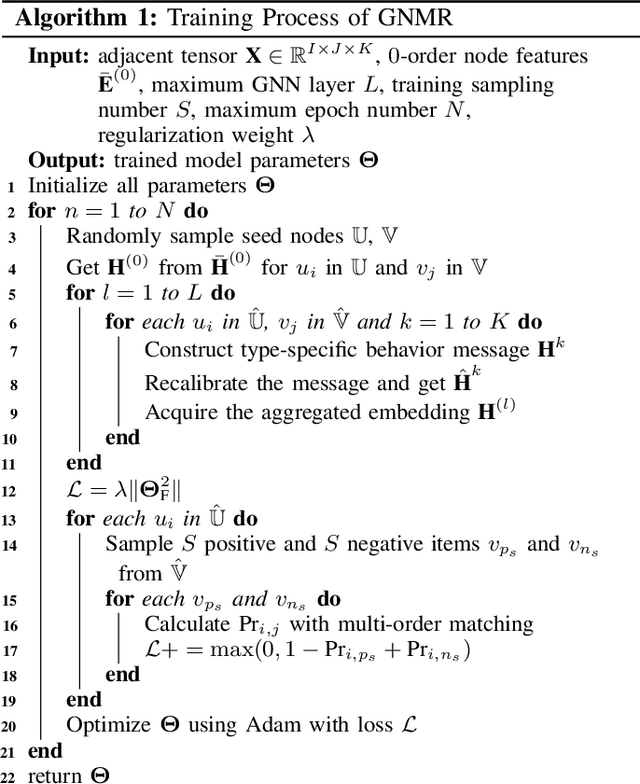

Abstract: Many previous studies aim to augment collaborative filtering with deep neural network techniques, so as to achieve better recommendation performance. However, most existing deep learning-based recommender systems are designed for modeling a single type of user-item interaction behavior, and can hardly distill the heterogeneous relations between user and item. In practical recommendation scenarios, there exist multi-typed user behaviors, such as browse and purchase. Because they overlook users' multi-behavioral patterns over different items, existing recommendation methods are insufficient to capture heterogeneous collaborative signals from user multi-behavior data. Inspired by the strength of graph neural networks for structured data modeling, this work proposes a Graph Neural Multi-Behavior Enhanced Recommendation (GNMR) framework which explicitly models the dependencies between different types of user-item interactions under a graph-based message passing architecture. GNMR devises a relation aggregation network to model interaction heterogeneity, and recursively performs embedding propagation between neighboring nodes over the user-item interaction graph. Experiments on real-world recommendation datasets show that our GNMR consistently outperforms state-of-the-art methods. The source code is available at https://github.com/akaxlh/GNMR.

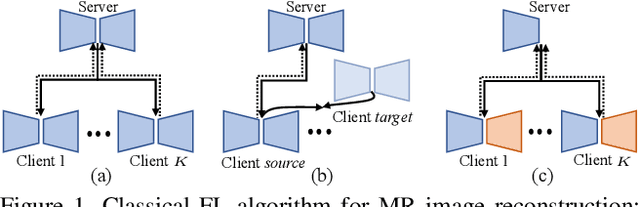

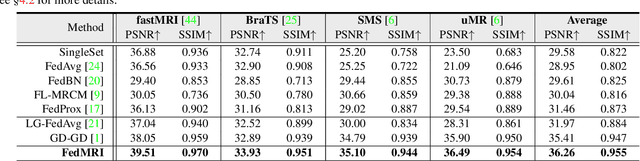

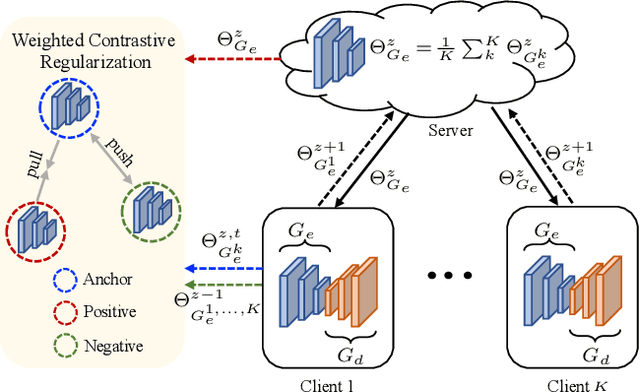

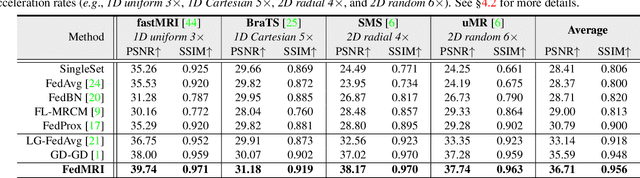

Specificity-Preserving Federated Learning for MR Image Reconstruction

Dec 09, 2021

Abstract: Federated learning (FL) can be used to improve data privacy and efficiency in magnetic resonance (MR) image reconstruction by enabling multiple institutions to collaborate without needing to aggregate local data. However, the domain shift caused by different MR imaging protocols can substantially degrade the performance of FL models. Recent FL techniques tend to solve this by enhancing the generalization of the global model, but they ignore the domain-specific features, which may contain important information about the device properties and be useful for local reconstruction. In this paper, we propose a specificity-preserving FL algorithm for MR image reconstruction (FedMRI). The core idea is to divide the MR reconstruction model into two parts: a globally shared encoder to obtain a generalized representation at the global level, and a client-specific decoder to preserve the domain-specific properties of each client, which is important for collaborative reconstruction when the clients have unique distributions. Moreover, to further boost the convergence of the globally shared encoder when a domain shift is present, a weighted contrastive regularization is introduced to directly correct any deviation between the client and server during optimization. Extensive experiments demonstrate that our FedMRI's reconstructed results are the closest to the ground-truth for multi-institutional data, and that it outperforms state-of-the-art FL methods.
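
The shared-encoder and client-specific-decoder split described above can be illustrated with a toy aggregation step. This is a minimal sketch under stated assumptions, not the FedMRI implementation: parameters are plain scalars in dictionaries, aggregation is a weighted average in the style of FedAvg (the abstract does not state the aggregation rule), and the weighted contrastive regularization is omitted. The function name `aggregate_shared_encoder` and the model layout are hypothetical.

```python
def aggregate_shared_encoder(client_models, client_weights):
    """Average only the encoder parameters across clients.

    Each model is a dict with an "encoder" and a "decoder" sub-dict of
    named scalar parameters (a stand-in for real tensors). Decoders are
    deliberately left untouched so each client keeps its own.
    """
    total_weight = sum(client_weights)
    parameter_names = client_models[0]["encoder"].keys()
    global_encoder = {
        name: sum(
            weight * model["encoder"][name]
            for model, weight in zip(client_models, client_weights)
        ) / total_weight
        for name in parameter_names
    }
    # Broadcast the shared encoder back to every client.
    for model in client_models:
        model["encoder"] = dict(global_encoder)
    return global_encoder


# Two hypothetical hospitals; weights could be local dataset sizes.
hospital_a = {"encoder": {"w": 1.0}, "decoder": {"w": 10.0}}
hospital_b = {"encoder": {"w": 3.0}, "decoder": {"w": 20.0}}
shared = aggregate_shared_encoder([hospital_a, hospital_b], [1, 3])
```

After the call both clients hold the same encoder parameters, while each decoder keeps its locally trained values, which is the property the abstract relies on to preserve domain-specific information.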

SwinTrack: A Simple and Strong Baseline for Transformer Tracking

Dec 08, 2021

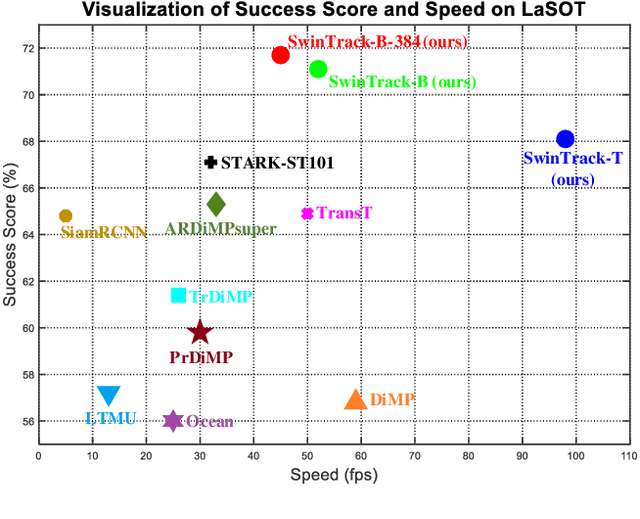

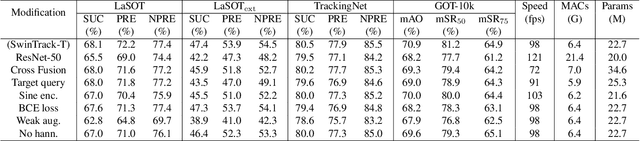

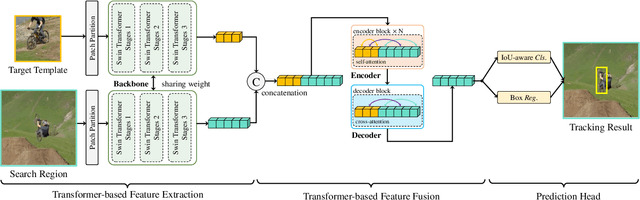

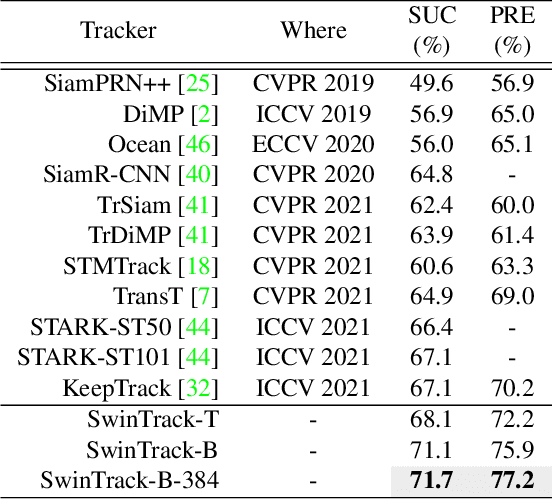

Abstract: Transformer has recently demonstrated clear potential in improving visual tracking algorithms. Nevertheless, existing transformer-based trackers mostly use Transformer to fuse and enhance the features generated by convolutional neural networks (CNNs). By contrast, in this paper, we propose a fully attention-based Transformer tracking algorithm, Swin-Transformer Tracker (SwinTrack). SwinTrack uses Transformer for both feature extraction and feature fusion, allowing full interactions between the target object and the search region for tracking. To further improve performance, we comprehensively investigate different strategies for feature fusion, position encoding, and training loss. All these efforts make SwinTrack a simple yet solid baseline. In our thorough experiments, SwinTrack sets a new record with 0.702 SUC on LaSOT, surpassing STARK by 3.1% while still running at 45 FPS. In addition, it achieves state-of-the-art performance with 0.476 SUC, 0.840 SUC and 0.694 AO on the other challenging datasets LaSOT$_{ext}$, TrackingNet, and GOT-10k, respectively. Our implementation and trained models are available at https://github.com/LitingLin/SwinTrack.

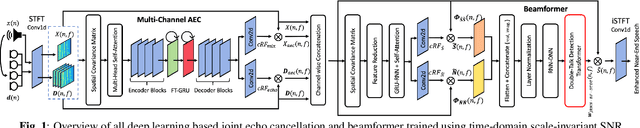

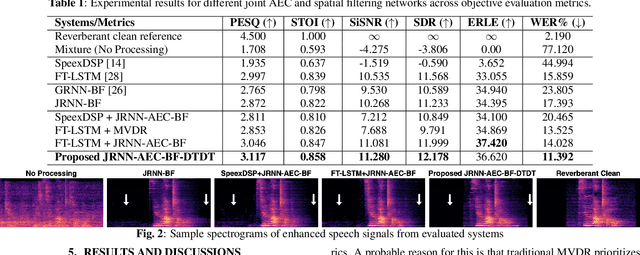

Joint AEC AND Beamforming with Double-Talk Detection using RNN-Transformer

Nov 09, 2021

Abstract: Acoustic echo cancellation (AEC) is a technique used in full-duplex communication systems to eliminate acoustic feedback of far-end speech. However, AEC performance degrades in naturalistic environments due to nonlinear distortions introduced by the speaker, as well as background noise, reverberation, and double-talk scenarios. To address nonlinear distortions and co-existing background noise, several deep neural network (DNN)-based joint AEC and denoising systems were developed. These systems are based on either purely "black-box" neural networks or "hybrid" systems that combine traditional AEC algorithms with neural networks. We propose an all-deep-learning framework that combines multi-channel AEC and our recently proposed self-attentive recurrent neural network (RNN) beamformer. Furthermore, we propose a double-talk detection transformer (DTDT) module based on the multi-head attention transformer structure that computes attention over time by leveraging frame-wise double-talk predictions. Experiments show that our proposed method outperforms other approaches in terms of improving speech quality and speech recognition rate of an ASR system.

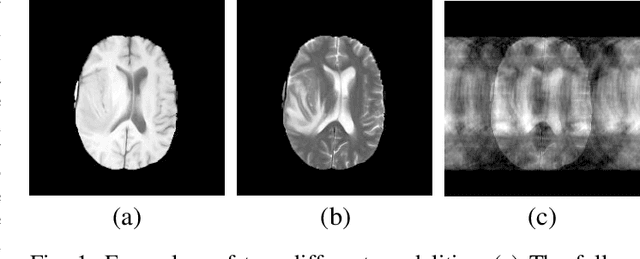

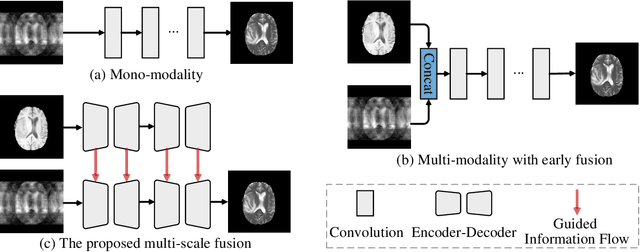

Multi-modal Aggregation Network for Fast MR Imaging

Oct 20, 2021

Abstract: Magnetic resonance (MR) imaging is a commonly used scanning technique for disease detection, diagnosis and treatment monitoring. Although it is able to produce detailed images of organs and tissues with better contrast, it suffers from a long acquisition time, which makes the image quality vulnerable to, for example, motion artifacts. Recently, many approaches have been developed to reconstruct full-sampled images from partially observed measurements in order to accelerate MR imaging. However, most of these efforts focus on reconstruction over a single modality or simple fusion of multiple modalities, neglecting the discovery of correlation knowledge at different feature levels. In this work, we propose a novel Multi-modal Aggregation Network, named MANet, which is capable of discovering complementary representations from a fully sampled auxiliary modality, with which to hierarchically guide the reconstruction of a given target modality. In our MANet, the representations from the fully sampled auxiliary and undersampled target modalities are learned independently through a specific network. Then, a guided attention module is introduced in each convolutional stage to selectively aggregate multi-modal features for better reconstruction, yielding comprehensive, multi-scale, multi-modal feature fusion. Moreover, our MANet follows a hybrid domain learning framework, which allows it to simultaneously recover the frequency signal in the $k$-space domain as well as restore the image details from the image domain. Extensive experiments demonstrate the superiority of the proposed approach over state-of-the-art MR image reconstruction methods.

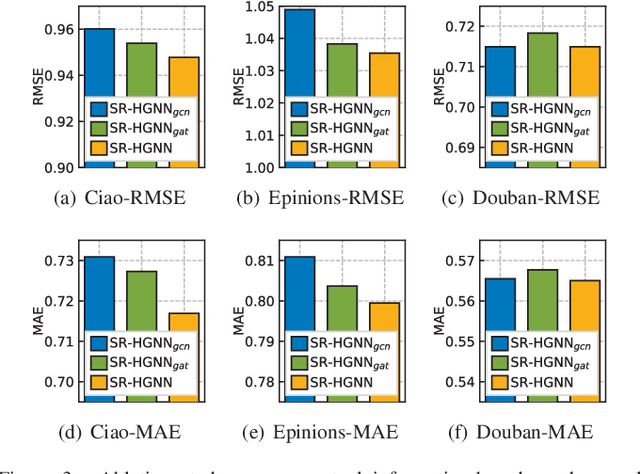

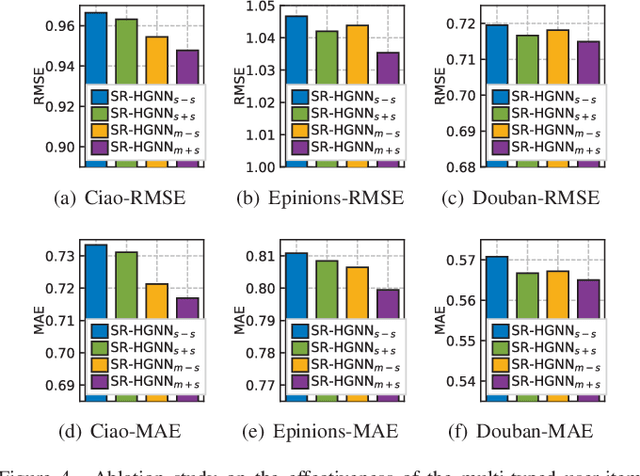

Global Context Enhanced Social Recommendation with Hierarchical Graph Neural Networks

Oct 08, 2021

Abstract: Social recommendation aims to leverage social connections among users to enhance recommendation performance. With the revival of deep learning techniques, many efforts have been devoted to developing various neural network-based social recommender systems, such as those based on attention mechanisms and graph-based message passing frameworks. However, two important challenges have not been well addressed yet: (i) Most existing social recommendation models fail to fully explore the multi-type user-item interactive behavior as well as the underlying cross-relational inter-dependencies. (ii) While the learned social state vector is able to model pair-wise user dependencies, it still has limited representation capacity in capturing the global social context across users. To tackle these limitations, we propose a new Social Recommendation framework with Hierarchical Graph Neural Networks (SR-HGNN). In particular, we first design a relation-aware reconstructed graph neural network to inject the cross-type collaborative semantics into the recommendation framework. In addition, we further augment SR-HGNN with a social relation encoder based on the mutual information learning paradigm between low-level user embeddings and high-level global representation, which endows SR-HGNN with the capability of capturing the global social contextual signals. Empirical results on three public benchmarks demonstrate that SR-HGNN significantly outperforms state-of-the-art recommendation methods. Source code is available at: https://github.com/xhcdream/SR-HGNN.

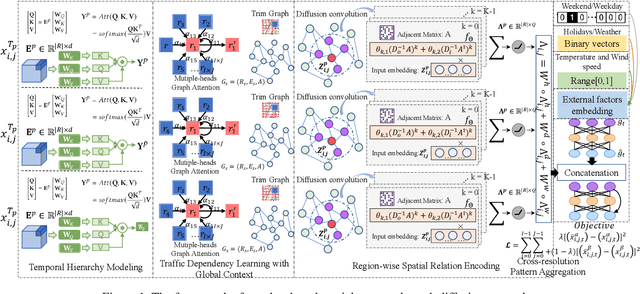

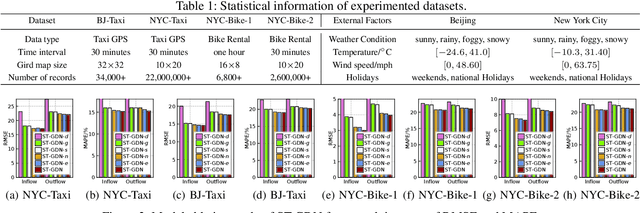

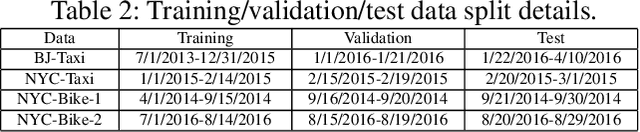

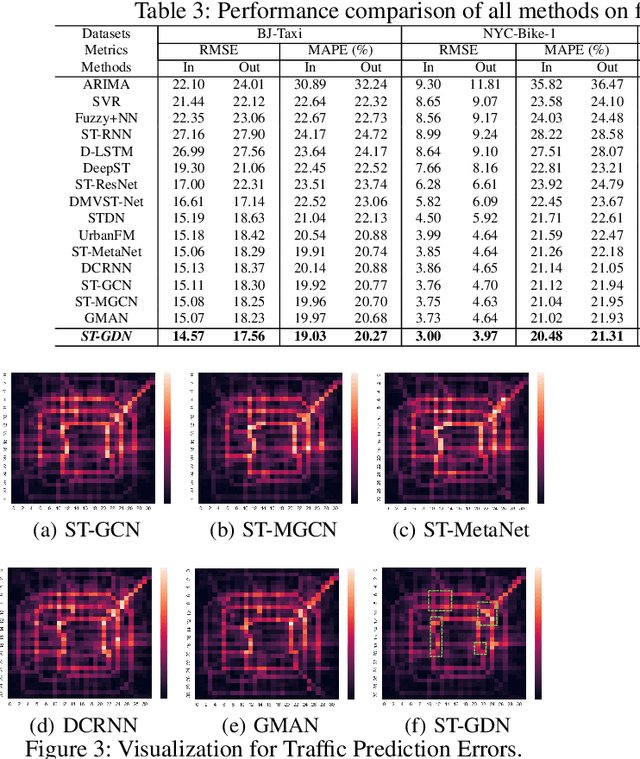

Traffic Flow Forecasting with Spatial-Temporal Graph Diffusion Network

Oct 08, 2021

Abstract: Accurate forecasting of citywide traffic flow plays a critical role in a variety of spatial-temporal mining applications, such as intelligent traffic control and public risk assessment. While previous work has made significant efforts to learn traffic temporal dynamics and spatial dependencies, two key limitations exist in current models. First, only the neighboring spatial correlations among adjacent regions are considered in most existing methods, and the global inter-region dependency is ignored. Additionally, these methods fail to encode the complex traffic transition regularities, which are time-dependent and multi-resolution in nature. To tackle these challenges, we develop a new traffic prediction framework, the Spatial-Temporal Graph Diffusion Network (ST-GDN). In particular, ST-GDN is a hierarchically structured graph neural architecture which learns not only the local region-wise geographical dependencies, but also the spatial semantics from a global perspective. Furthermore, a multi-scale attention network is developed to empower ST-GDN with the capability of capturing multi-level temporal dynamics. Experiments on several real-life traffic datasets demonstrate that ST-GDN outperforms different types of state-of-the-art baselines. Source code for the implementation is available at https://github.com/jill001/ST-GDN.
