Hao Xing

Understanding Human Activity with Uncertainty Measure for Novelty in Graph Convolutional Networks

Oct 10, 2024

Abstract: Understanding human activity is a crucial aspect of developing intelligent robots, particularly in the domain of human-robot collaboration. Nevertheless, existing systems encounter challenges such as over-segmentation, attributed to errors in the up-sampling process of the decoder. In response, we introduce a promising solution: the Temporal Fusion Graph Convolutional Network. This innovative approach aims to rectify the inadequate boundary estimation of individual actions within an activity stream and mitigate the issue of over-segmentation in the temporal dimension. Moreover, systems leveraging human activity recognition frameworks for decision-making necessitate more than just the identification of actions. They require a confidence value indicative of the certainty regarding the correspondence between observations and training examples. This is crucial to prevent overly confident responses to unforeseen scenarios that were not part of the training data and may have resulted in mismatches due to weak similarity measures within the system. To address this, we propose the incorporation of a Spectral Normalized Residual connection aimed at enhancing efficient estimation of novelty in observations. This innovative approach ensures the preservation of input distance within the feature space by imposing constraints on the maximum gradients of weight updates. By limiting these gradients, we promote a more robust handling of novel situations, thereby mitigating the risks associated with overconfidence. Our methodology involves the use of a Gaussian process to quantify the distance in feature space.
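The distance-preservation idea in this abstract can be illustrated with a small sketch. It is not the authors' implementation: it assumes the common recipe of capping the spectral norm (largest singular value) of a weight matrix so that a residual layer `x + tanh(W x)` can neither collapse nor blow up distances between inputs. The function names and the `bound` value of 0.95 are hypothetical, and the Gaussian process output layer mentioned in the abstract is not modeled here.

```python
import math
import random


def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def spectral_norm(W, n_iter=100, seed=0):
    """Estimate the largest singular value of W by power iteration on W^T W."""
    rng = random.Random(seed)
    v = [rng.gauss(0.0, 1.0) for _ in W[0]]
    Wt = [list(col) for col in zip(*W)]
    for _ in range(n_iter):
        v = matvec(Wt, matvec(W, v))
        norm = math.sqrt(sum(x * x for x in v))
        if norm == 0.0:
            return 0.0  # W maps the probe vector to zero
        v = [x / norm for x in v]
    return math.sqrt(sum(x * x for x in matvec(W, v)))


def spectral_normalize(W, bound=0.95):
    """Rescale W so its spectral norm is at most `bound` (assumed value)."""
    sigma = spectral_norm(W)
    scale = 1.0 if sigma == 0.0 else min(1.0, bound / sigma)
    return [[w * scale for w in row] for row in W]


def residual_layer(W, x):
    """Residual mapping x + tanh(W x); W must be square."""
    return [xi + math.tanh(hi) for xi, hi in zip(x, matvec(W, x))]
```

With the spectral norm capped at some `c < 1` and a 1-Lipschitz activation such as `tanh`, the output distance of the residual layer stays between `(1 - c)` and `(1 + c)` times the input distance, which is the property a distance-aware output layer relies on.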

Understanding Spatio-Temporal Relations in Human-Object Interaction using Pyramid Graph Convolutional Network

Oct 10, 2024

Abstract: Human activity recognition is an important task for an intelligent robot, especially in the field of human-robot collaboration, where it requires not only the labels of sub-activities but also the temporal structure of the activity. In order to automatically recognize both the labels and the temporal structure in sequences of human-object interaction, we propose a novel Pyramid Graph Convolutional Network (PGCN), which employs a pyramidal encoder-decoder architecture consisting of an attention-based graph convolutional network and a temporal pyramid pooling module for downsampling and upsampling the interaction sequence on the temporal axis, respectively. The system represents the 2D or 3D spatial relations of humans and objects from the detection results in video data as a graph. To learn the human-object relations, a new attention graph convolutional network is trained to extract condensed information from the graph representation. To segment actions into sub-actions, a novel temporal pyramid pooling module is proposed, which upsamples compressed features back to the original time scale and classifies actions per frame. We explore various attention layers, namely spatial attention, temporal attention and channel attention, and combine different upsampling decoders to test the performance on action recognition and segmentation. We evaluate our model on two challenging datasets in the field of human-object interaction recognition, i.e., the Bimanual Actions and IKEA Assembly datasets. We demonstrate that our classifier significantly improves both framewise action recognition and segmentation, e.g., the F1 micro and F1@50 scores on the Bimanual Actions dataset are improved by $4.3\%$ and $8.5\%$ respectively.

RCRN: Real-world Character Image Restoration Network via Skeleton Extraction

Jul 19, 2022

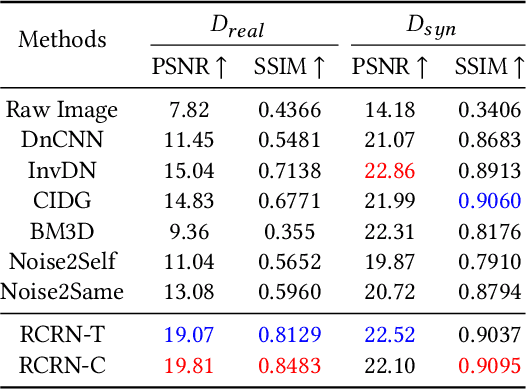

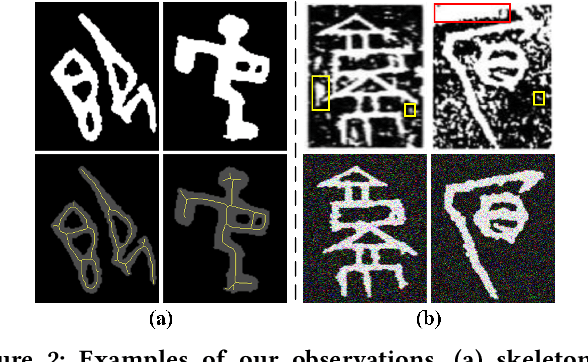

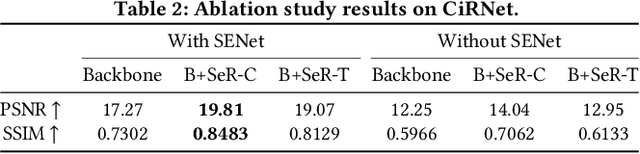

Abstract: Constructing high-quality character image datasets is challenging because real-world images are often affected by image degradation. There are limitations when applying current image restoration methods to such real-world character images, since (i) the categories of noise in character images are different from those in general images; (ii) real-world character images usually contain more complex image degradation, e.g., mixed noise at different noise levels. To address these problems, we propose a real-world character restoration network (RCRN) to effectively restore degraded character images, where character skeleton information and scale-ensemble feature extraction are utilized to obtain better restoration performance. The proposed method consists of a skeleton extractor (SENet) and a character image restorer (CiRNet). SENet aims to preserve the structural consistency of the character and normalize complex noise. Then, CiRNet reconstructs clean images from degraded character images and their skeletons. Due to the lack of benchmarks for real-world character image restoration, we constructed a dataset containing 1,606 character images with real-world degradation to evaluate the validity of the proposed method. The experimental results demonstrate that RCRN outperforms state-of-the-art methods quantitatively and qualitatively.

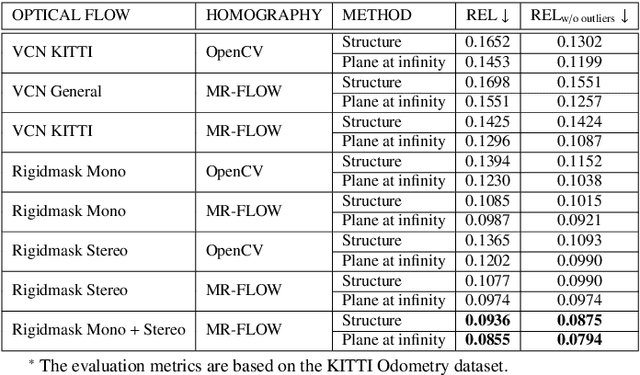

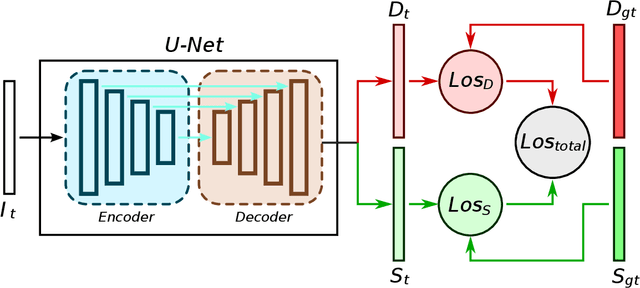

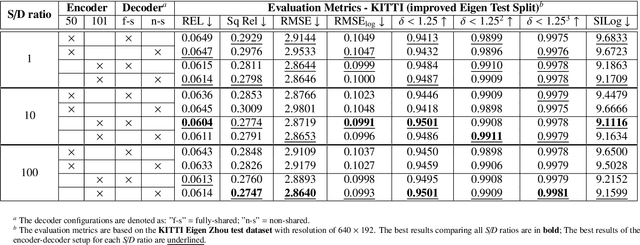

Joint Prediction of Monocular Depth and Structure using Planar and Parallax Geometry

Jul 13, 2022

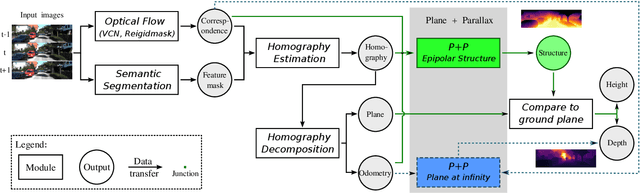

Abstract: Supervised depth estimation methods can achieve good performance when trained on high-quality ground truth, such as LiDAR data. However, LiDAR can only generate sparse 3D maps, which loses information, and high-quality per-pixel ground-truth depth data is difficult to acquire. To overcome this limitation, we propose a novel approach that combines structure information from a promising Plane and Parallax geometry pipeline with depth information in a U-Net supervised learning network, which results in quantitative and qualitative improvement compared to existing popular learning-based methods. In particular, the model is evaluated on two large-scale and challenging datasets, the KITTI Vision Benchmark and Cityscapes, and achieves the best performance in terms of relative error. Compared with pure depth supervision models, our model performs impressively on depth prediction of thin objects and edges, and compared to the structure prediction baseline, our model performs more robustly.

Skeletal Human Action Recognition using Hybrid Attention based Graph Convolutional Network

Jul 12, 2022

Abstract: In skeleton-based action recognition, Graph Convolutional Networks model human skeletal joints as vertices and connect them through an adjacency matrix, which can be seen as a local attention mask. However, in most existing Graph Convolutional Networks, the local attention mask is defined based on the natural connections of human skeleton joints and ignores dynamic relations, for example between head, hand and foot joints. In addition, the attention mechanism, which has proven effective in Natural Language Processing and image description, is rarely investigated in existing methods. In this work, we propose a new adaptive spatial attention layer that extends the local attention map to a global one based on relative distance and relative angle information. Moreover, we design a new initial graph adjacency matrix that connects head, hands and feet, which shows visible improvement in action recognition accuracy. The proposed model is evaluated on two large-scale and challenging datasets in the field of human activities in daily life: NTU-RGB+D and Kinetics skeleton. The results demonstrate that our model has strong performance on both datasets.
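To make the idea of an initial adjacency matrix that also links head, hands and feet more concrete, here is a minimal sketch on a toy skeleton. The seven-joint layout, the joint names, and the choice to connect every pair of end joints are assumptions made for illustration only; they do not reproduce the NTU-RGB+D or Kinetics joint sets or the authors' exact graph. The symmetric normalization `D^{-1/2} A D^{-1/2}` is the standard one used in many graph convolutional networks.

```python
import math
from itertools import combinations

# Hypothetical toy skeleton, not a real dataset layout.
JOINTS = ["head", "neck", "torso", "l_hand", "r_hand", "l_foot", "r_foot"]
BONES = [
    ("head", "neck"), ("neck", "torso"),
    ("neck", "l_hand"), ("neck", "r_hand"),
    ("torso", "l_foot"), ("torso", "r_foot"),
]
END_JOINTS = ["head", "l_hand", "r_hand", "l_foot", "r_foot"]


def build_adjacency(extra_links=True):
    """Adjacency with self-loops, natural bones, and optional end-joint links."""
    idx = {name: i for i, name in enumerate(JOINTS)}
    n = len(JOINTS)
    A = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    edges = list(BONES)
    if extra_links:
        # Assumed scheme: connect every pair of head, hands and feet.
        edges += list(combinations(END_JOINTS, 2))
    for a, b in edges:
        A[idx[a]][idx[b]] = 1.0
        A[idx[b]][idx[a]] = 1.0
    return A


def normalize(A):
    """Symmetric normalization D^{-1/2} A D^{-1/2}."""
    deg = [sum(row) for row in A]
    n = len(A)
    return [[A[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]
```

Calling `build_adjacency(False)` gives the purely anatomical graph, while `build_adjacency(True)` adds the long-range links, so a single graph convolution can pass information directly between, say, a hand and a foot.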

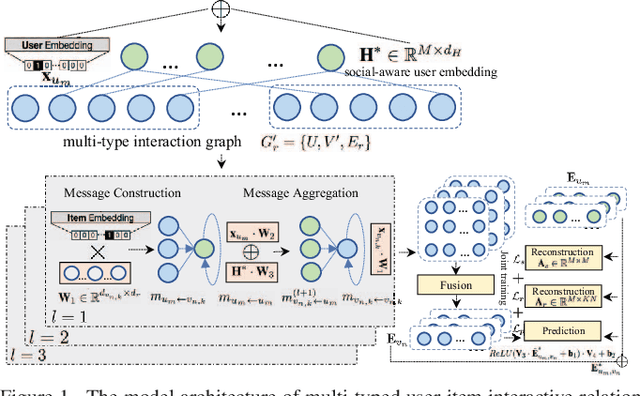

Global Context Enhanced Social Recommendation with Hierarchical Graph Neural Networks

Oct 08, 2021

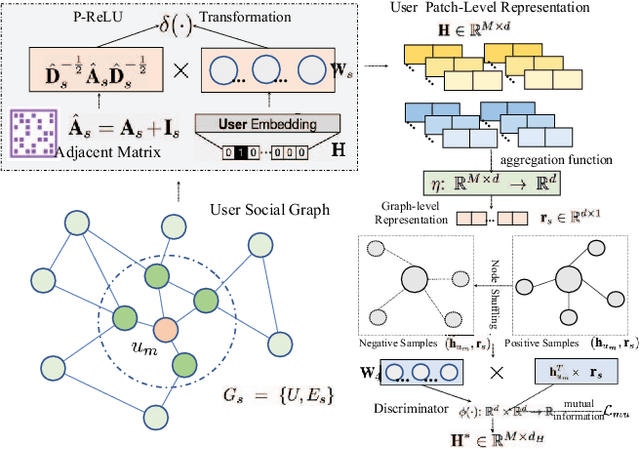

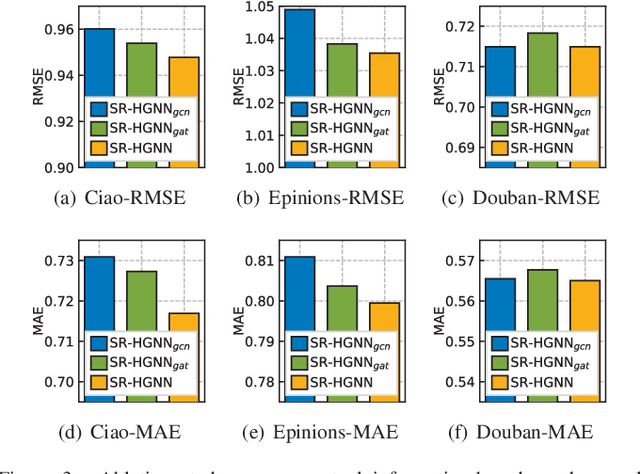

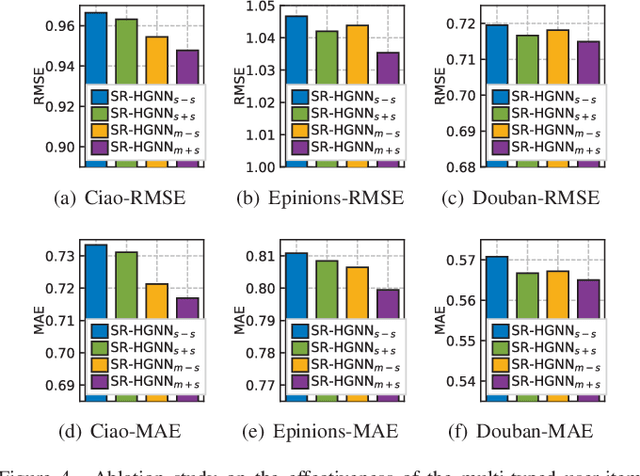

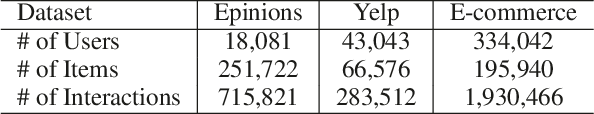

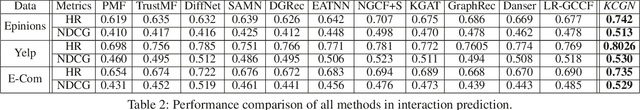

Abstract: Social recommendation aims to leverage social connections among users to enhance recommendation performance. With the revival of deep learning techniques, many efforts have been devoted to developing various neural network-based social recommender systems, such as attention mechanisms and graph-based message passing frameworks. However, two important challenges have not been well addressed yet: (i) Most existing social recommendation models fail to fully explore the multi-type user-item interactive behavior as well as the underlying cross-relational inter-dependencies. (ii) While the learned social state vector is able to model pair-wise user dependencies, it still has limited representation capacity in capturing the global social context across users. To tackle these limitations, we propose a new Social Recommendation framework with Hierarchical Graph Neural Networks (SR-HGNN). In particular, we first design a relation-aware reconstructed graph neural network to inject the cross-type collaborative semantics into the recommendation framework. In addition, we further augment SR-HGNN with a social relation encoder based on the mutual information learning paradigm between low-level user embeddings and high-level global representation, which endows SR-HGNN with the capability of capturing the global social contextual signals. Empirical results on three public benchmarks demonstrate that SR-HGNN significantly outperforms state-of-the-art recommendation methods. Source codes are available at: https://github.com/xhcdream/SR-HGNN.

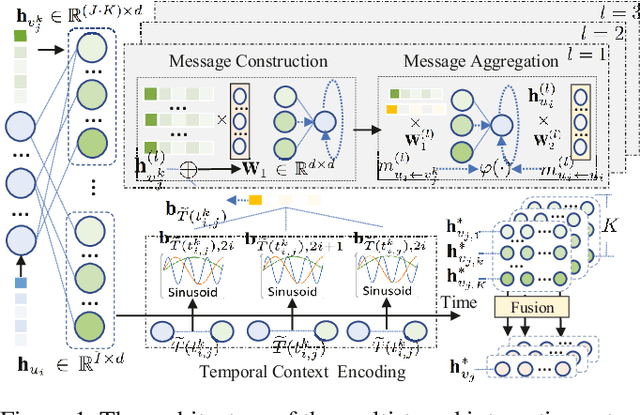

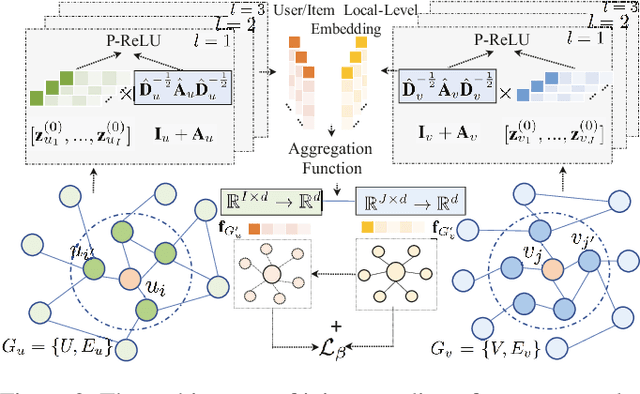

Knowledge-aware Coupled Graph Neural Network for Social Recommendation

Oct 08, 2021

Abstract: The social recommendation task aims to predict users' preferences over items by incorporating social connections among users, so as to alleviate the sparsity issue of collaborative filtering. While many recent efforts show the effectiveness of neural network-based social recommender systems, several important challenges have not been well addressed yet: (i) The majority of models only consider users' social connections, while ignoring the inter-dependent knowledge across items; (ii) Most existing solutions are designed for a single type of user-item interaction, making them unable to capture interaction heterogeneity; (iii) The dynamic nature of user-item interactions has been less explored in many social-aware recommendation techniques. To tackle the above challenges, this work proposes a Knowledge-aware Coupled Graph Neural Network (KCGN) that jointly injects the inter-dependent knowledge across items and users into the recommendation framework. KCGN enables high-order user- and item-wise relation encoding by exploiting mutual information for global graph structure awareness. Additionally, we further augment KCGN with the capability of capturing dynamic multi-typed user-item interactive patterns. Experimental studies on real-world datasets show the effectiveness of our method against many strong baselines in a variety of settings. Source codes are available at: https://github.com/xhcdream/KCGN.

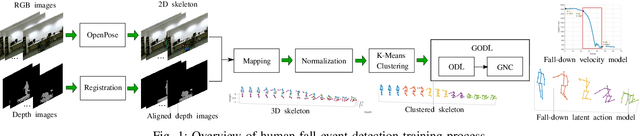

Robust Event Detection based on Spatio-Temporal Latent Action Unit using Skeletal Information

Oct 01, 2021

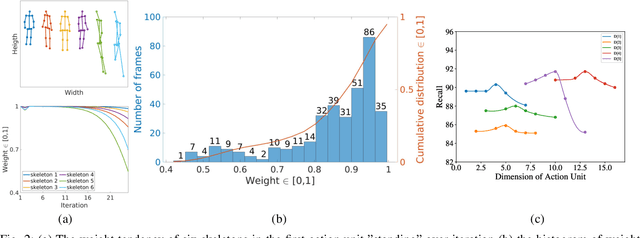

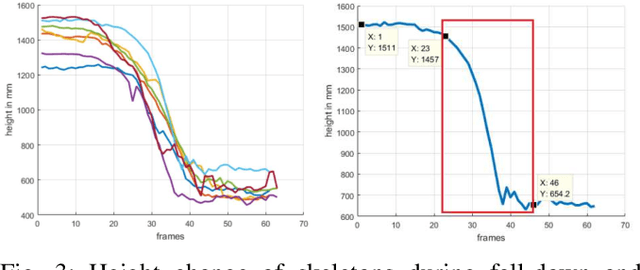

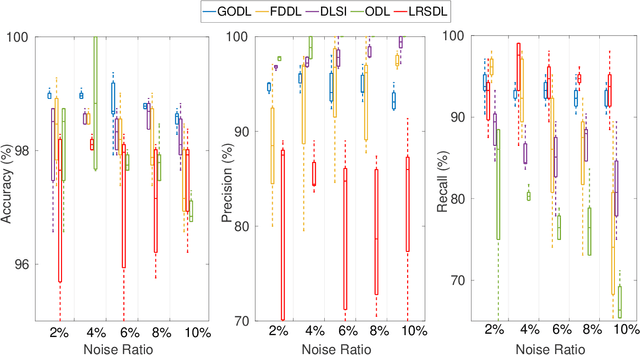

Abstract: This paper proposes a novel dictionary learning approach to detect event actions using skeletal information extracted from RGBD video. The event action is represented as several latent atoms and composed of latent spatial and temporal attributes. We demonstrate the method on the example of fall event detection. The skeleton frames are clustered by an initial K-means method. Each skeleton frame is assigned a varying weight parameter and fed into our Gradual Online Dictionary Learning (GODL) algorithm. During the training process, outlier frames are gradually filtered out by reducing the weight, which is inversely proportional to a cost. In order to strictly distinguish the event action from similar actions and robustly acquire its action unit, we build a latent unit temporal structure for each sub-action. We evaluate the proposed method on parts of the NTU RGB+D dataset, which include 209 fall videos, 405 ground-lift videos, 420 sit-down videos, and 280 videos of 46 other actions. We present the experimental validation of the achieved accuracy, recall and precision. Our approach achieves the best performance on precision and accuracy of human fall event detection, compared with other existing dictionary learning methods. With increasing noise ratio, our method retains the highest accuracy and the lowest variance.

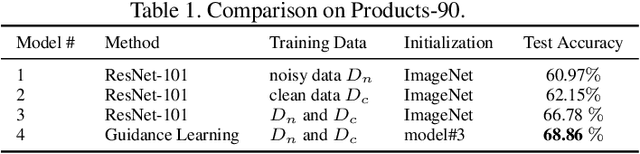

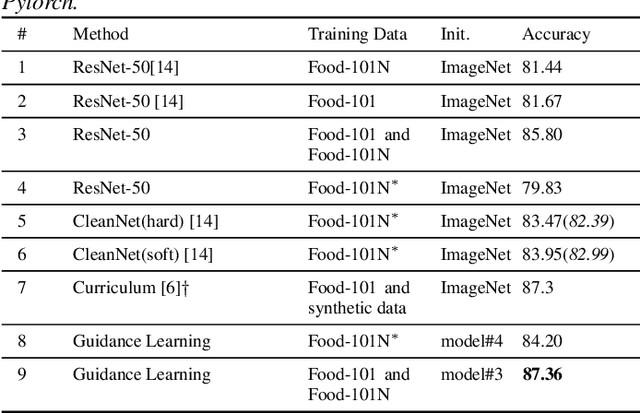

Product Image Recognition with Guidance Learning and Noisy Supervision

Jul 26, 2019

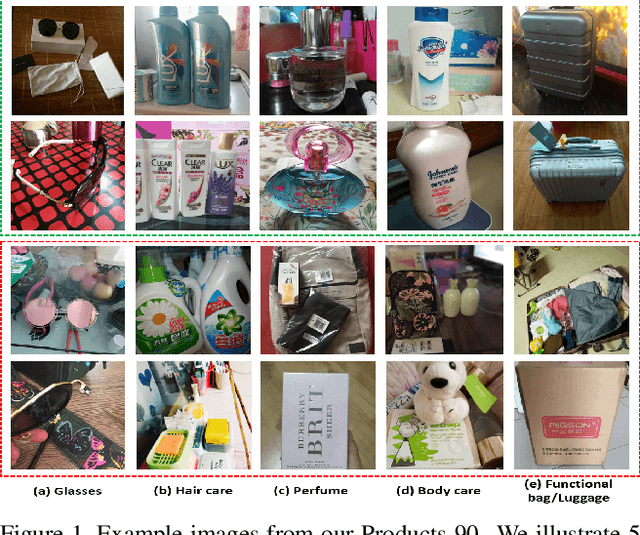

Abstract: This paper considers recognizing products from daily photos, which is an important problem in real-world applications but also challenging due to background clutter, category diversity, noisy labels, etc. We address this problem with two contributions. First, we introduce a novel large-scale product image dataset, termed Product-90. Instead of collecting product images by labor- and time-intensive image capturing, we take advantage of the web and download images from the reviews of several e-commerce websites where the images are casually captured by consumers. Labels are assigned automatically by the categories of e-commerce websites. In total, Product-90 consists of more than 140K images in 90 categories. Because consumers may upload unrelated images, Product-90 inevitably contains noisy labels. As the second contribution, we develop a simple yet efficient *guidance learning* (GL) method for training convolutional neural networks (CNNs) with noisy supervision. The GL method first trains an initial teacher network with the full noisy dataset, and then trains a target/student network with both the large-scale noisy set and a small manually verified clean set in a multi-task manner. Specifically, in the student network training stage, the large-scale noisy data is supervised by its guidance knowledge, which is the combination of its given noisy label and the softened label from the teacher network. We conduct extensive experiments on our Product-90 and public datasets, namely Food-101, Food-101N, and Clothing1M. Our guidance learning method achieves performance superior to state-of-the-art methods on these datasets.
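The "guidance knowledge" described in this abstract, a combination of the given noisy label and the teacher's softened label, can be sketched as follows. The abstract does not state how the two are combined, so the convex mixing weight `alpha`, the softmax `temperature`, and all function names below are assumptions for illustration rather than the authors' formulation.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def guidance_target(noisy_label, teacher_logits, alpha=0.5, temperature=2.0):
    """Mix the one-hot noisy label with the teacher's softened prediction.

    alpha and temperature are assumed hyperparameters, not values from the paper.
    """
    soft = softmax(teacher_logits, temperature)
    return [alpha * (1.0 if k == noisy_label else 0.0) + (1.0 - alpha) * p
            for k, p in enumerate(soft)]


def cross_entropy(target, student_logits):
    """Cross-entropy between a soft target and the student's prediction."""
    probs = softmax(student_logits)
    return -sum(t * math.log(p) for t, p in zip(target, probs) if t > 0.0)
```

In a multi-task setup like the one the abstract outlines, a loss of this kind would be applied to the noisy samples, while the small clean set would be trained with ordinary cross-entropy on its verified labels.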
