Yong Xu

Accelerated Multi-Modal MR Imaging with Transformers

Jun 29, 2021

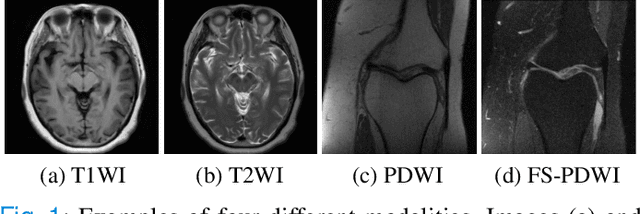

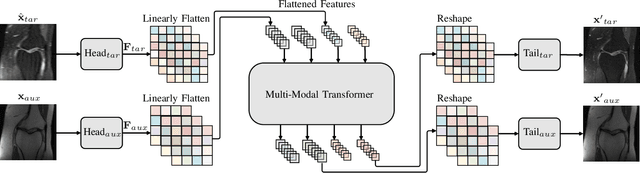

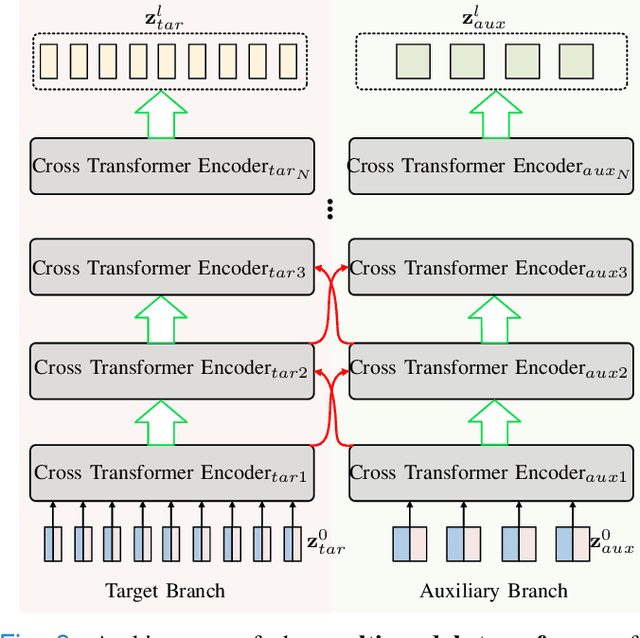

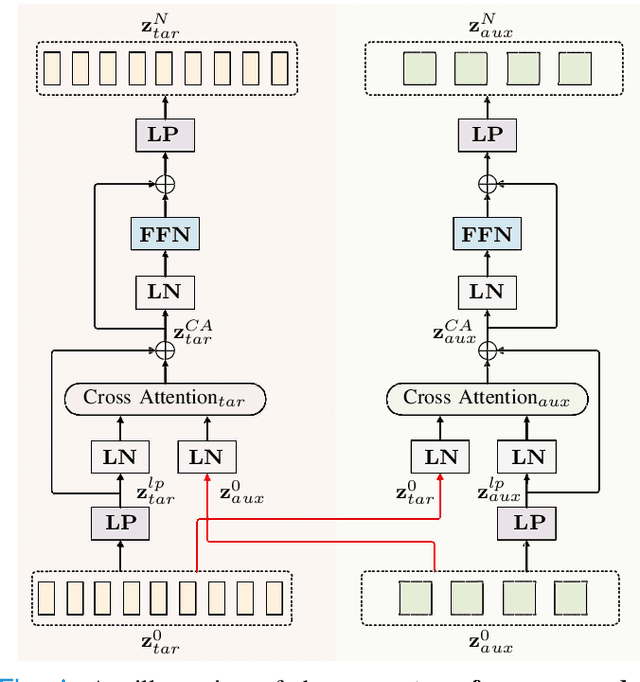

Abstract: Accelerating multi-modal magnetic resonance (MR) imaging is a new and effective solution for fast MR imaging, providing superior performance in restoring the target modality from its undersampled counterpart with guidance from an auxiliary modality. However, existing works simply introduce the auxiliary modality as prior information and lack in-depth investigation of the potential mechanisms for fusing the two modalities. Further, they usually rely on convolutional neural networks (CNNs), whose focus on local information prevents them from fully capturing long-distance dependencies and global knowledge. To this end, we propose a multi-modal transformer (MTrans), which is capable of transferring multi-scale features from the target modality to the auxiliary modality, for accelerated MR imaging. By restructuring the transformer architecture, our MTrans gains a powerful ability to capture deep multi-modal information. More specifically, the target modality and the auxiliary modality are first split into two branches and then fused using a multi-modal transformer module. This module is based on an improved multi-head attention mechanism, named the cross attention module, which absorbs features from the auxiliary modality that contribute to the target modality. Our framework provides two appealing benefits: (i) MTrans is the first attempt at using improved transformers for multi-modal MR imaging, affording more global information than CNN-based methods. (ii) A new cross attention module is proposed to exploit the useful information in each branch at different scales. It affords both distinct structural information and subtle pixel-level information, which supplement the target modality effectively.
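The cross attention idea described in this abstract can be illustrated with a minimal single-head sketch in plain Python. It assumes the standard scaled dot-product formulation, with queries taken from the target branch and keys and values taken from the auxiliary branch. The function names, the projection matrices `w_q`, `w_k`, `w_v`, and the toy token values are hypothetical; the abstract does not specify how the MTrans module is actually parameterized.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def cross_attention(target_tokens, aux_tokens, w_q, w_k, w_v):
    """Single-head cross attention (illustrative, not the MTrans module).

    Queries come from the target branch; keys and values come from the
    auxiliary branch, so each target token gathers auxiliary features.
    """
    q = matmul(target_tokens, w_q)  # (n_target, d)
    k = matmul(aux_tokens, w_k)     # (n_aux, d)
    v = matmul(aux_tokens, w_v)     # (n_aux, d)
    scale = math.sqrt(len(q[0]))
    scores = matmul(q, transpose(k))  # (n_target, n_aux)
    weights = [softmax([s / scale for s in row]) for row in scores]
    attended = matmul(weights, v)     # (n_target, d)
    return attended, weights


# Toy example: 2 target tokens, 3 auxiliary tokens, feature size 2.
identity = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical projection weights
target = [[1.0, 0.0], [0.0, 1.0]]
auxiliary = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended, weights = cross_attention(target, auxiliary, identity, identity, identity)
```

Each row of `weights` sums to one and says how strongly a target token draws on each auxiliary token. A multi-head, multi-scale version would repeat this per head and per feature resolution.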

Dual-Stream Reciprocal Disentanglement Learning for Domain Adaption Person Re-Identification

Jun 26, 2021

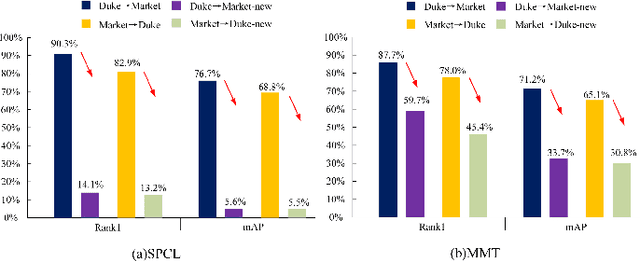

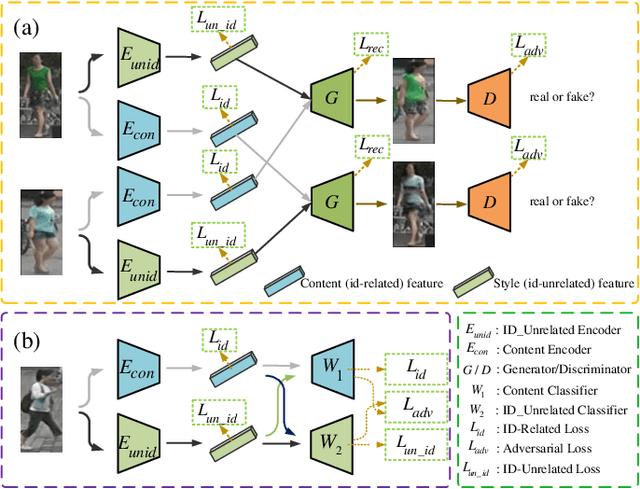

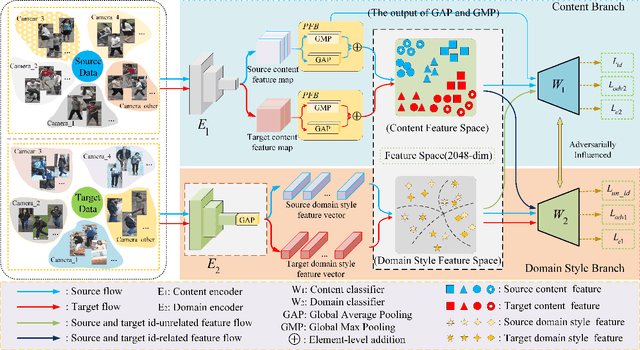

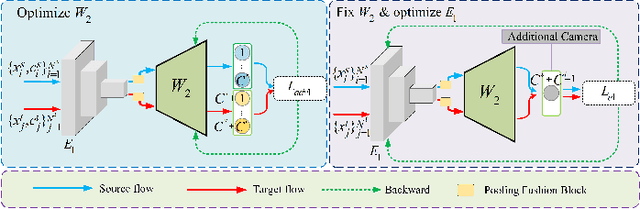

Abstract: Since it requires no human-labeled samples for the target set, unsupervised person re-identification (Re-ID), which additionally exploits the source set, has attracted much attention in recent years. However, due to differences in camera styles, illumination and backgrounds, there exists a large gap between the source domain and the target domain, which poses a great challenge for cross-domain matching. To tackle this problem, in this paper we propose a novel method named Dual-stream Reciprocal Disentanglement Learning (DRDL), which is efficient in learning domain-invariant features. In DRDL, two encoders are first constructed for id-related and id-unrelated feature extraction, and their outputs are respectively measured by their associated classifiers. Furthermore, guided by an adversarial learning strategy, both streams reciprocally and positively affect each other, so that the id-related features and id-unrelated features are completely disentangled from a given image, allowing the encoder to be powerful enough to obtain discriminative but domain-invariant features. In contrast to existing approaches, our proposed method is free from image generation, which not only reduces the computational complexity remarkably, but also removes redundant information from id-related features. Extensive experiments substantiate the superiority of our proposed method compared with state-of-the-art methods. The source code has been released at https://github.com/lhf12278/DRDL.

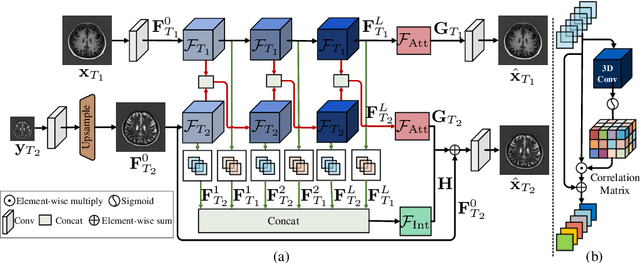

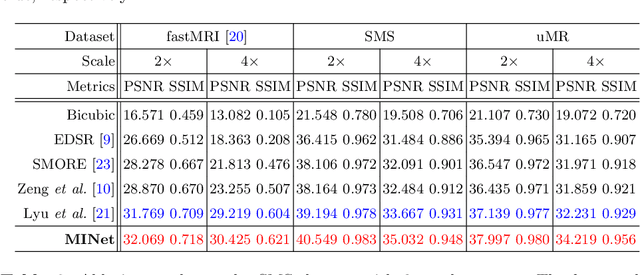

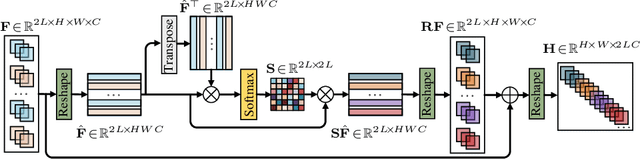

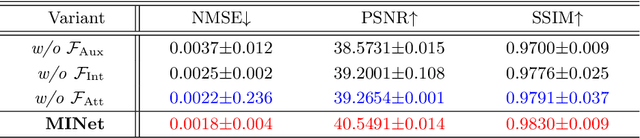

Multi-Contrast MRI Super-Resolution via a Multi-Stage Integration Network

May 19, 2021

Abstract: Super-resolution (SR) plays a crucial role in improving the image quality of magnetic resonance imaging (MRI). MRI produces multi-contrast images and can provide a clear display of soft tissues. However, current super-resolution methods only employ a single contrast, or use a simple multi-contrast fusion mechanism, ignoring the rich relations among different contrasts, which are valuable for improving SR. In this work, we propose a multi-stage integration network (i.e., MINet) for multi-contrast MRI SR, which explicitly models the dependencies between multi-contrast images at different stages to guide image SR. In particular, our MINet first learns a hierarchical feature representation from multiple convolutional stages for each of the different-contrast images. Subsequently, we introduce a multi-stage integration module to mine the comprehensive relations between the representations of the multi-contrast images. Specifically, the module matches each representation with all other features, which are integrated according to their similarities to obtain an enriched representation. Extensive experiments on fastMRI and real-world clinical datasets demonstrate that 1) our MINet outperforms state-of-the-art multi-contrast SR methods in terms of various metrics and 2) our multi-stage integration module is able to excavate complex interactions among multi-contrast features at different stages, leading to improved target-image quality.

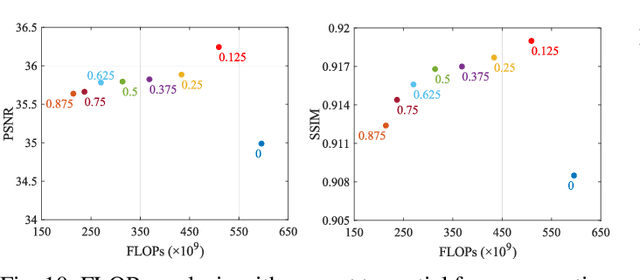

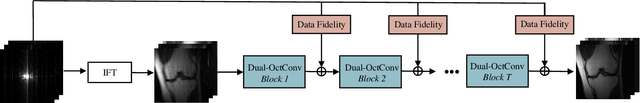

DONet: Dual-Octave Network for Fast MR Image Reconstruction

May 12, 2021

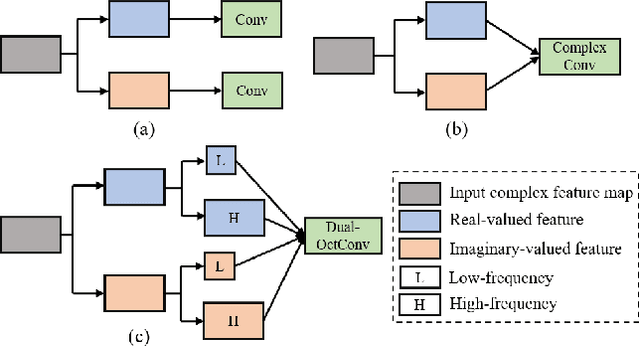

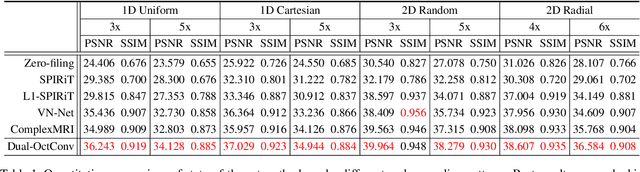

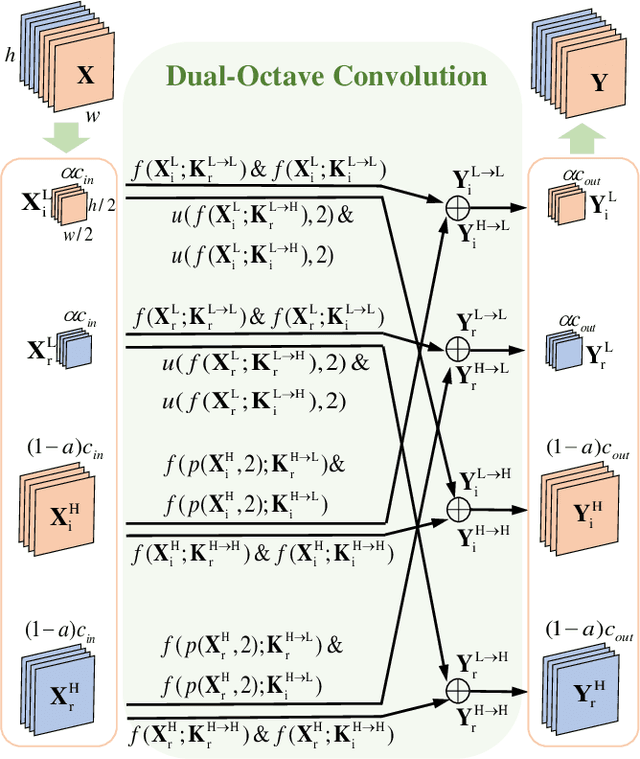

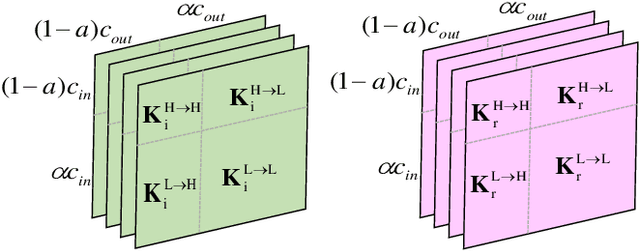

Abstract: Magnetic resonance (MR) image acquisition is an inherently prolonged process, whose acceleration has long been the subject of research. This is commonly achieved by obtaining multiple undersampled images simultaneously through parallel imaging. In this paper, we propose the Dual-Octave Network (DONet), which is capable of learning multi-scale spatial-frequency features from both the real and imaginary components of MR data, for fast parallel MR image reconstruction. More specifically, our DONet consists of a series of Dual-Octave convolutions (Dual-OctConv), which are connected in a dense manner for better reuse of features. In each Dual-OctConv, the input feature maps and convolutional kernels are first split into two components (i.e., real and imaginary), and then divided into four groups according to their spatial frequencies. Then, our Dual-OctConv conducts intra-group information updating and inter-group information exchange to aggregate the contextual information across different groups. Our framework provides three appealing benefits: (i) It encourages information interaction and fusion between the real and imaginary components at various spatial frequencies to achieve richer representational capacity. (ii) The dense connections between the real and imaginary groups in each Dual-OctConv make the propagation of features more efficient through feature reuse. (iii) DONet enlarges the receptive field by learning multiple spatial-frequency features of both the real and imaginary components. Extensive experiments on two popular datasets (i.e., clinical knee and fastMRI), under different undersampling patterns and acceleration factors, demonstrate the superiority of our model in accelerated parallel MR image reconstruction.

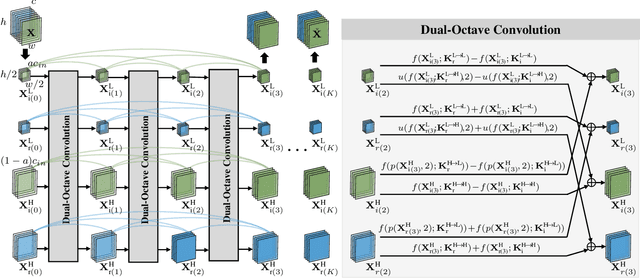

MIMO Self-attentive RNN Beamformer for Multi-speaker Speech Separation

Apr 26, 2021

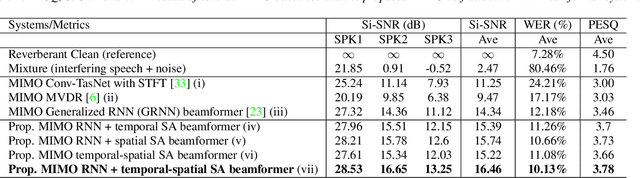

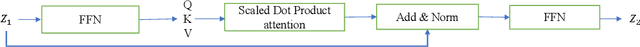

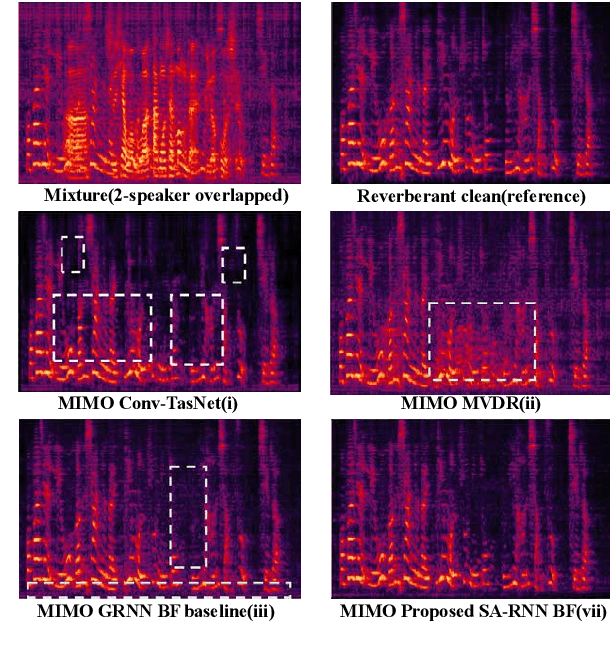

Abstract: Recently, our proposed recurrent neural network (RNN) based all deep learning minimum variance distortionless response (ADL-MVDR) beamformer method yielded superior performance over the conventional MVDR by replacing the matrix inversion and eigenvalue decomposition with two recurrent neural networks. In this work, we present a self-attentive RNN beamformer to further improve our previous RNN-based beamformer by leveraging the powerful modeling capability of self-attention. A temporal-spatial self-attention module is proposed to better learn the beamforming weights from the speech and noise spatial covariance matrices. The temporal self-attention module helps the RNN learn global statistics of the covariance matrices. The spatial self-attention module is designed to attend to the cross-channel correlation in the covariance matrices. Furthermore, a multi-channel input with multi-speaker directional features and multi-speaker speech separation outputs (MIMO) model is developed to improve the inference efficiency. The evaluations demonstrate that our proposed MIMO self-attentive RNN beamformer improves both the automatic speech recognition (ASR) accuracy and the perceptual evaluation of speech quality (PESQ) over prior art.
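For context, the matrix inversion and eigenvalue decomposition mentioned in this abstract appear in the textbook MVDR solution, sketched below. The notation is an assumption made for illustration: $\boldsymbol{\Phi}_{NN}(f)$ and $\boldsymbol{\Phi}_{SS}(f)$ denote the noise and speech spatial covariance matrices at frequency $f$, and $\mathbf{v}(f)$ is the steering vector. The abstract itself does not fix these symbols.

```latex
% Conventional MVDR beamforming weights (textbook form).
\mathbf{w}_{\mathrm{MVDR}}(f)
  = \frac{\boldsymbol{\Phi}_{NN}^{-1}(f)\,\mathbf{v}(f)}
         {\mathbf{v}^{\mathsf{H}}(f)\,\boldsymbol{\Phi}_{NN}^{-1}(f)\,\mathbf{v}(f)},
\qquad
\mathbf{v}(f) = \mathcal{P}\{\boldsymbol{\Phi}_{SS}(f)\}
% \mathcal{P}\{\cdot\}: principal eigenvector, obtained by eigenvalue decomposition.
```

The inverse $\boldsymbol{\Phi}_{NN}^{-1}$ and the principal eigenvector $\mathcal{P}\{\cdot\}$ are the two steps that, according to the abstract, the RNN-based beamformer replaces with learned networks.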

Dual-Octave Convolution for Accelerated Parallel MR Image Reconstruction

Apr 12, 2021

Abstract: Magnetic resonance (MR) image acquisition is an inherently prolonged process, whose acceleration by obtaining multiple undersampled images simultaneously through parallel imaging has long been a subject of research. In this paper, we propose the Dual-Octave Convolution (Dual-OctConv), which is capable of learning multi-scale spatial-frequency features from both real and imaginary components, for fast parallel MR image reconstruction. By reformulating the complex operations using octave convolutions, our model shows a strong ability to capture richer representations of MR images, while at the same time greatly reducing the spatial redundancy. More specifically, the input feature maps and convolutional kernels are first split into two components (i.e., real and imaginary), which are then divided into four groups according to their spatial frequencies. Then, our Dual-OctConv conducts intra-group information updating and inter-group information exchange to aggregate the contextual information across different groups. Our framework provides two appealing benefits: (i) it encourages interactions between real and imaginary components at various spatial frequencies to achieve richer representational capacity, and (ii) it enlarges the receptive field by learning multiple spatial-frequency features of both the real and imaginary components. We evaluate the performance of the proposed model on the acceleration of multi-coil MR image reconstruction. Extensive experiments are conducted on an in vivo knee dataset under different undersampling patterns and acceleration factors. The experimental results demonstrate the superiority of our model in accelerated parallel MR image reconstruction. Our code is available at: github.com/chunmeifeng/Dual-OctConv.

* Proceedings of the 35th AAAI Conference on Artificial Intelligence (AAAI) 2021
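
The split into real and imaginary components, followed by a split by spatial frequency, can be written out as a short worked equation. This is a sketch under assumptions: it uses the standard decomposition of a complex convolution into real-valued convolutions, and the superscripts $H$ and $L$ for high- and low-frequency groups are illustrative notation, not taken from the abstract.

```latex
% Complex convolution of kernel W = W_r + i W_i with features X = X_r + i X_i.
W * X = \left( W_r * X_r - W_i * X_i \right)
      + i \left( W_r * X_i + W_i * X_r \right)

% Each component is further divided by spatial frequency, giving four groups.
X_r = \{ X_r^{H},\, X_r^{L} \}, \qquad X_i = \{ X_i^{H},\, X_i^{L} \}
```

Under this reading, intra-group updating corresponds to terms that stay within one group (for example $X_r^{H} \to Y_r^{H}$), and inter-group exchange corresponds to terms that cross between real and imaginary parts or between frequency bands.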

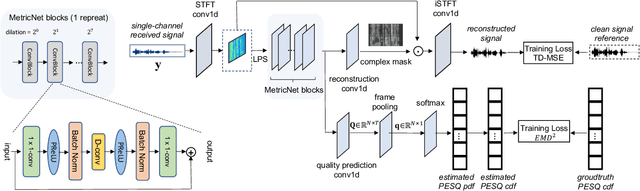

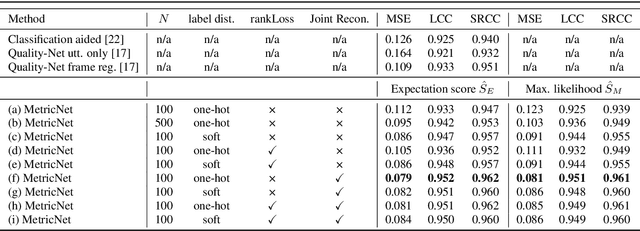

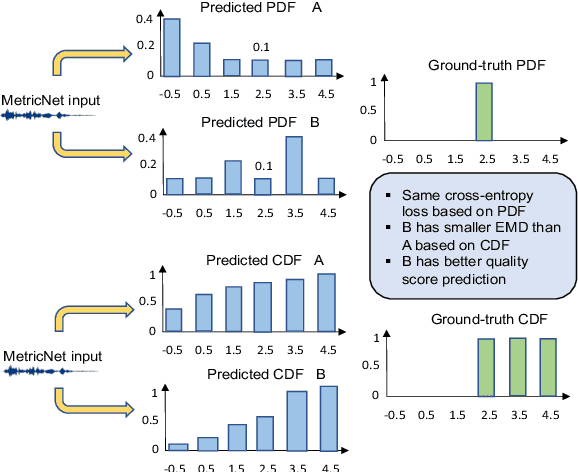

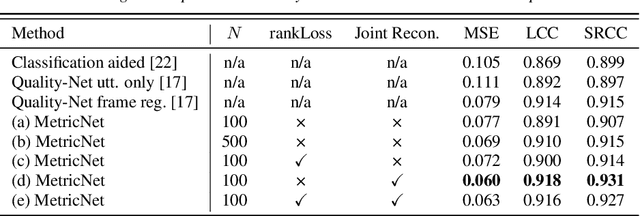

MetricNet: Towards Improved Modeling For Non-Intrusive Speech Quality Assessment

Apr 02, 2021

Abstract: Objective speech quality assessment is usually conducted by comparing the received speech signal with its clean reference, whereas human beings are capable of evaluating speech quality without any reference, as in mean opinion score (MOS) tests. Non-intrusive speech quality assessment has attracted much attention recently due to the lack of access to clean reference signals for objective evaluations in real scenarios. In this paper, we propose a novel non-intrusive speech quality measurement model, MetricNet, which leverages label distribution learning and joint speech reconstruction learning to achieve significantly improved performance compared to existing non-intrusive speech quality measurement models. We demonstrate that the proposed approach yields a promisingly high correlation with the intrusive objective evaluation of speech quality on clean, noisy and processed speech data.
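Label distribution learning, as named in this abstract, generally replaces a single scalar target with a probability distribution over discretized score bins. The sketch below illustrates that general idea only. The bin centers, the Gaussian smoothing width `sigma`, and all function names are hypothetical; the abstract does not say how MetricNet constructs or decodes its label distributions.

```python
import math


def score_to_distribution(score, bin_centers, sigma=0.25):
    """Turn a scalar quality score into a soft label over score bins.

    A Gaussian bump centered on the score is an assumed smoothing choice.
    """
    weights = [math.exp(-0.5 * ((c - score) / sigma) ** 2) for c in bin_centers]
    total = sum(weights)
    return [w / total for w in weights]


def distribution_to_score(probs, bin_centers):
    """Decode a predicted distribution back to a scalar via its expectation."""
    return sum(p * c for p, c in zip(probs, bin_centers))


def cross_entropy(target, predicted, eps=1e-12):
    """Training loss between the soft label and a predicted distribution."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, predicted))


# Hypothetical bins covering a quality scale from 1.0 to 4.5 in steps of 0.5.
bins = [1.0 + 0.5 * i for i in range(8)]
soft_label = score_to_distribution(3.0, bins)
decoded = distribution_to_score(soft_label, bins)
```

Compared with regressing the score directly, the soft label lets neighbouring bins share credit, so a prediction that is slightly off is penalized less than one that is far off.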

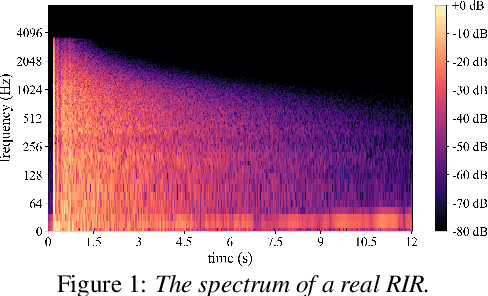

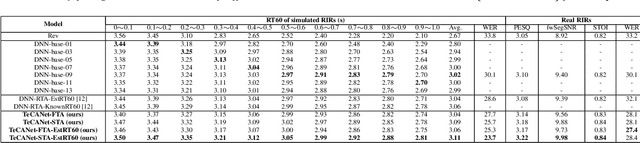

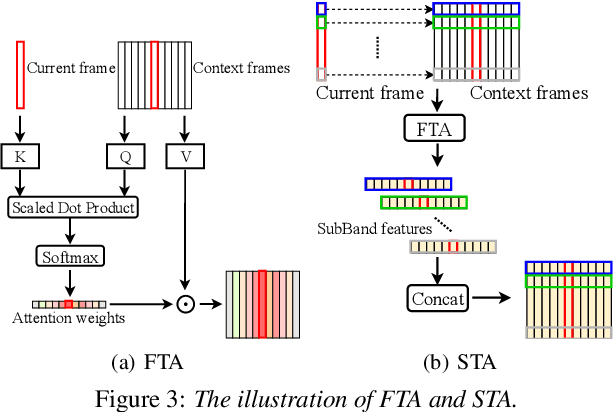

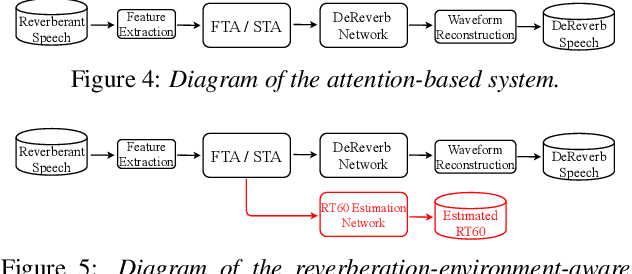

TeCANet: Temporal-Contextual Attention Network for Environment-Aware Speech Dereverberation

Mar 31, 2021

Abstract: In this paper, we explore an effective way to leverage contextual information to improve speech dereverberation performance in real-world reverberant environments. We propose a temporal-contextual attention approach on the deep neural network (DNN) for environment-aware speech dereverberation, which can adaptively attend to the contextual information. More specifically, a FullBand based Temporal Attention approach (FTA) is proposed, which models the correlations between the fullband information of the context frames. In addition, considering the difference between the attenuation of high frequency bands and low frequency bands (high frequency bands attenuate faster than low frequency bands) in the room impulse response (RIR), we also propose a SubBand based Temporal Attention approach (STA). In order to guide the network to be more aware of the reverberant environments, we jointly optimize the dereverberation network and the reverberation time (RT60) estimator in a multi-task manner. Our experimental results indicate that the proposed method outperforms our previously proposed reverberation-time-aware DNN and that the learned attention weights are physically consistent. We also report a preliminary yet promising dereverberation and recognition experiment on real test data.

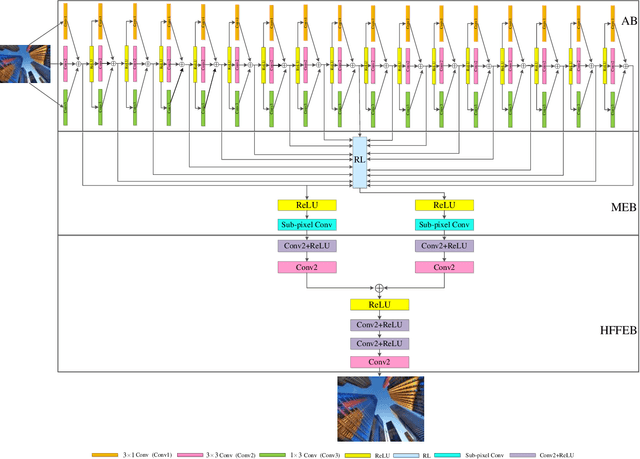

Asymmetric CNN for image super-resolution

Mar 30, 2021

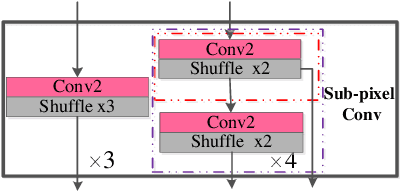

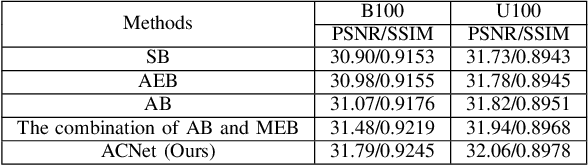

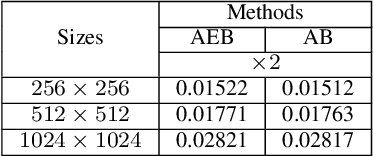

Abstract: Deep convolutional neural networks (CNNs) have been widely applied to low-level vision over the past five years, with CNN architectures designed to suit the nature of each application. However, these customized architectures gather features by treating all pixels equally to improve the performance of a given application, which ignores the effects of locally salient pixels and results in low training efficiency. In this paper, we propose an asymmetric CNN (ACNet) comprising an asymmetric block (AB), a memory enhancement block (MEB) and a high-frequency feature enhancement block (HFFEB) for image super-resolution. The AB utilizes one-dimensional asymmetric convolutions to intensify the square convolution kernels in the horizontal and vertical directions, promoting the influence of local salient features for SISR. The MEB fuses all hierarchical low-frequency features from the AB via a residual learning (RL) technique to resolve the long-term dependency problem, and transforms the obtained low-frequency features into high-frequency features. The HFFEB exploits low- and high-frequency features to obtain more robust super-resolution features and to address the excessive feature enhancement problem. Additionally, it is responsible for reconstructing a high-resolution (HR) image. Extensive experiments show that our ACNet can effectively address single image super-resolution (SISR), blind SISR and blind SISR with blind noise. The code of the ACNet is available at https://github.com/hellloxiaotian/ACNet.
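One way to picture how one-dimensional asymmetric convolutions can intensify a square kernel is the additive three-branch arrangement sketched below: a 3x3 branch, a 1x3 branch and a 3x1 branch whose outputs are summed. Because convolution is linear, this sum equals a single 3x3 convolution whose middle row and middle column have been strengthened. The additive fusion, the helper names and the toy integer values are assumptions made for illustration; the abstract does not state how the AB combines its branches.

```python
def correlate2d(image, kernel):
    """Valid-mode 2D cross-correlation on nested lists."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def embed_horizontal(h):
    """Place a 1x3 kernel on the middle row of a zero 3x3 kernel."""
    return [[0, 0, 0], list(h), [0, 0, 0]]


def embed_vertical(v):
    """Place a 3x1 kernel on the middle column of a zero 3x3 kernel."""
    return [[0, v[0], 0], [0, v[1], 0], [0, v[2], 0]]


def fuse_kernels(square, h, v):
    """Add the 1x3 and 3x1 kernels onto the 3x3 kernel's central cross."""
    eh, ev = embed_horizontal(h), embed_vertical(v)
    return [[square[a][b] + eh[a][b] + ev[a][b] for b in range(3)] for a in range(3)]


def three_branch_output(image, square, h, v):
    """Sum the outputs of the 3x3, 1x3 and 3x1 branches."""
    branches = [
        correlate2d(image, square),
        correlate2d(image, embed_horizontal(h)),
        correlate2d(image, embed_vertical(v)),
    ]
    return [[sum(vals) for vals in zip(*rows)] for rows in zip(*branches)]


# Toy integer data so the equivalence can be checked exactly.
image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 8, 7, 6], [5, 4, 3, 2]]
square = [[1, 0, -1], [2, 1, 0], [0, 1, 1]]
h, v = [1, 2, 1], [-1, 3, -1]
fused_output = correlate2d(image, fuse_kernels(square, h, v))
```

With these toy values, `three_branch_output(image, square, h, v)` and `fused_output` are identical, which shows that the one-dimensional branches act as extra weight on the horizontal and vertical skeleton of the square kernel.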

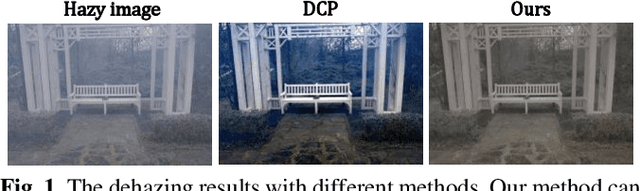

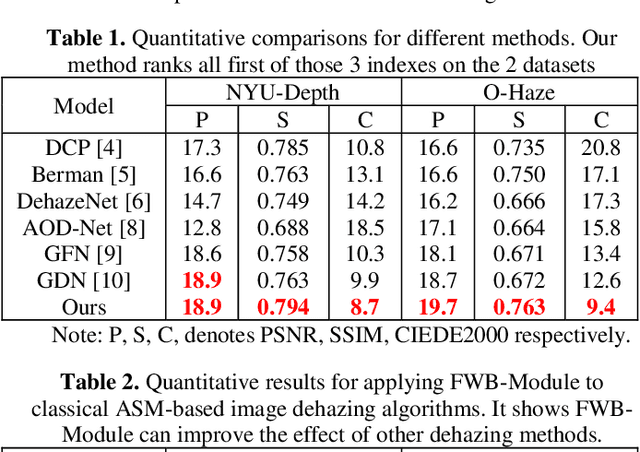

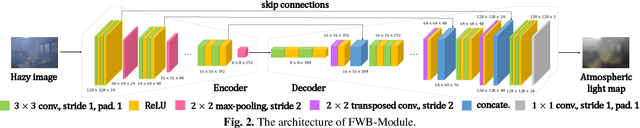

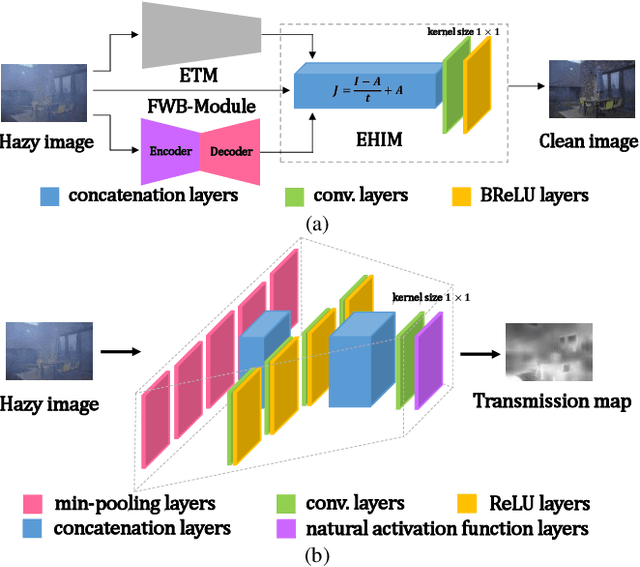

FWB-Net: Front White Balance Network for Color Shift Correction in Single Image Dehazing via Atmospheric Light Estimation

Jan 21, 2021

Abstract: In recent years, deep single image dehazing models based on the Atmospheric Scattering Model (ASM) have achieved remarkable results, but their dehazing outputs suffer from color shift. Analyzing the ASM shows that the atmospheric light factor (ALF) is set as a scalar, which implies that the ALF is constant over the whole image. However, for images taken in the real world, the illumination is not uniformly distributed over the whole image, which causes model mismatch and may result in color shift in deep models using the ASM. Bearing this in mind, in this study, first, a new non-homogeneous atmospheric scattering model (NH-ASM) is proposed to improve the modeling of hazy images taken under complex illumination conditions. Second, a new U-Net based front white balance module (FWB-Module) is designed to correct color shift, via atmospheric light estimation, before the dehazing result is generated. Third, a new FWB loss, which penalizes color shift, is developed for training the FWB-Module. Finally, based on the NH-ASM and the front white balance technique, an end-to-end CNN-based color-shift-restraining dehazing network, termed FWB-Net, is developed. Experimental results demonstrate the effectiveness and superiority of our proposed FWB-Net for dehazing on both synthetic and real-world images.
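For reference, the widely used form of the atmospheric scattering model makes the scalar assumption discussed in this abstract explicit. In the sketch below, $I$ is the hazy image, $J$ the haze-free scene, $t$ the transmission map, $\beta$ the scattering coefficient, $d$ the scene depth and $A$ the atmospheric light. The second equation is only an assumed illustration of what a non-homogeneous variant could look like, with $A$ made spatially varying; the abstract does not give the exact NH-ASM formulation.

```latex
% Standard ASM: a single scalar atmospheric light A for the whole image.
I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr), \qquad t(x) = e^{-\beta d(x)}

% Assumed non-homogeneous variant: atmospheric light varies with position x.
I(x) = J(x)\,t(x) + A(x)\,\bigl(1 - t(x)\bigr)
```

If the true illumination follows the second form but a model inverts the first, the error in $A$ propagates into the recovered $J(x)$, which is consistent with the color shift the abstract describes.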
