Huafeng Li

Hierarchical Prompt Learning for Image- and Text-Based Person Re-Identification

Nov 17, 2025

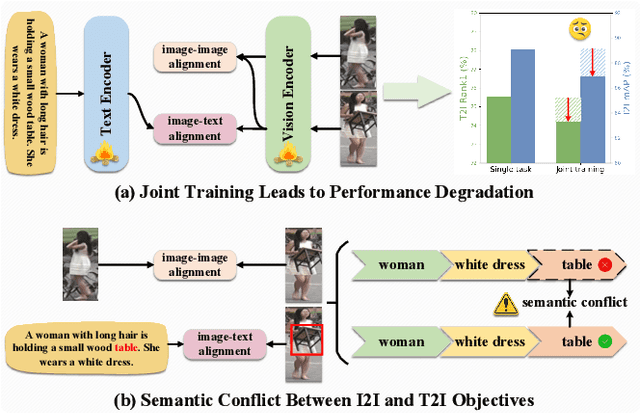

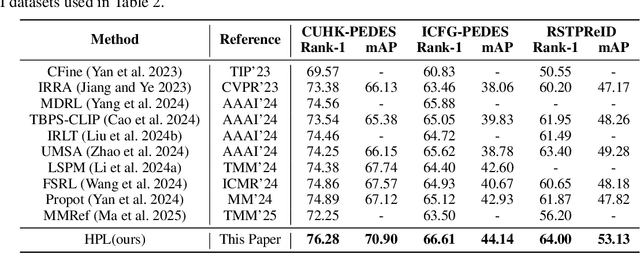

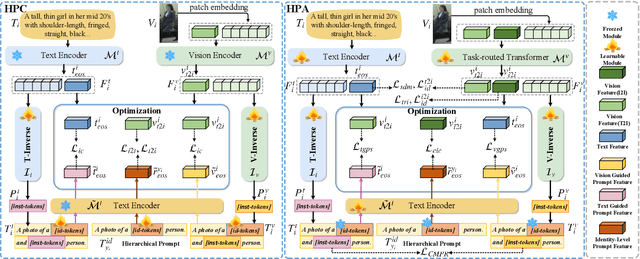

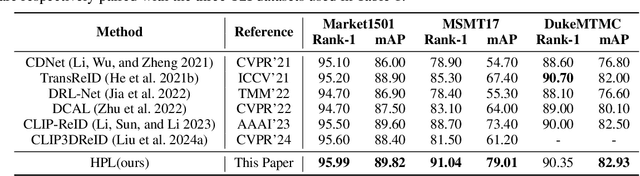

Abstract: Person re-identification (ReID) aims to retrieve target pedestrian images given either visual queries (image-to-image, I2I) or textual descriptions (text-to-image, T2I). Although both tasks share a common retrieval objective, they pose distinct challenges: I2I emphasizes discriminative identity learning, while T2I requires accurate cross-modal semantic alignment. Existing methods often treat these tasks separately, which may lead to representation entanglement and suboptimal performance. To address this, we propose a unified framework named Hierarchical Prompt Learning (HPL), which leverages task-aware prompt modeling to jointly optimize both tasks. Specifically, we first introduce a Task-Routed Transformer, which incorporates dual classification tokens into a shared visual encoder to route features for I2I and T2I branches respectively. On top of this, we develop a hierarchical prompt generation scheme that integrates identity-level learnable tokens with instance-level pseudo-text tokens. These pseudo-tokens are derived from image or text features via modality-specific inversion networks, injecting fine-grained, instance-specific semantics into the prompts. Furthermore, we propose a Cross-Modal Prompt Regularization strategy to enforce semantic alignment in the prompt token space, ensuring that pseudo-prompts preserve source-modality characteristics while enhancing cross-modal transferability. Extensive experiments on multiple ReID benchmarks validate the effectiveness of our method, achieving state-of-the-art performance on both I2I and T2I tasks.

DINOv2 Driven Gait Representation Learning for Video-Based Visible-Infrared Person Re-identification

Nov 06, 2025

Abstract: Video-based Visible-Infrared person re-identification (VVI-ReID) aims to retrieve the same pedestrian across visible and infrared modalities from video sequences. Existing methods tend to exploit modality-invariant visual features but largely overlook gait features, which are not only modality-invariant but also rich in temporal dynamics, thus limiting their ability to model the spatiotemporal consistency essential for cross-modal video matching. To address these challenges, we propose a DINOv2-Driven Gait Representation Learning (DinoGRL) framework that leverages the rich visual priors of DINOv2 to learn gait features complementary to appearance cues, facilitating robust sequence-level representations for cross-modal retrieval. Specifically, we introduce a Semantic-Aware Silhouette and Gait Learning (SASGL) model, which generates and enhances silhouette representations with general-purpose semantic priors from DINOv2 and jointly optimizes them with the ReID objective to achieve semantically enriched and task-adaptive gait feature learning. Furthermore, we develop a Progressive Bidirectional Multi-Granularity Enhancement (PBMGE) module, which progressively refines feature representations by enabling bidirectional interactions between gait and appearance streams across multiple spatial granularities, fully leveraging their complementarity to enhance global representations with rich local details and produce highly discriminative features. Extensive experiments on HITSZ-VCM and BUPT datasets demonstrate the superiority of our approach, significantly outperforming existing state-of-the-art methods.

FS-Diff: Semantic guidance and clarity-aware simultaneous multimodal image fusion and super-resolution

Sep 11, 2025

Abstract: As an influential information fusion and low-level vision technique, image fusion integrates complementary information from source images to yield an informative fused image. A few attempts have been made in recent years to jointly realize image fusion and super-resolution. However, in real-world applications such as military reconnaissance and long-range detection missions, the target and background structures in multimodal images are easily corrupted, with low resolution and weak semantic information, which leads to suboptimal results in current fusion techniques. In response, we propose FS-Diff, a semantic guidance and clarity-aware joint image fusion and super-resolution method. FS-Diff unifies image fusion and super-resolution as a conditional generation problem. It leverages semantic guidance from the proposed clarity sensing mechanism for adaptive low-resolution perception and cross-modal feature extraction. Specifically, we initialize the desired fused result as pure Gaussian noise and introduce the bidirectional feature Mamba to extract the global features of the multimodal images. Moreover, utilizing the source images and semantics as conditions, we implement a random iterative denoising process via a modified U-Net network. This network is trained for denoising at multiple noise levels to produce high-resolution fusion results with cross-modal features and abundant semantic information. We also construct a powerful aerial view multiscene (AVMS) benchmark covering 600 pairs of images. Extensive joint image fusion and super-resolution experiments on six public and our AVMS datasets demonstrated that FS-Diff outperforms the state-of-the-art methods at multiple magnifications and can recover richer details and semantics in the fused images. The code is available at https://github.com/XylonXu01/FS-Diff.

FlexiD-Fuse: Flexible number of inputs multi-modal medical image fusion based on diffusion model

Sep 11, 2025

Abstract: Different modalities of medical images provide unique physiological and anatomical information for diseases. Multi-modal medical image fusion integrates useful information from different complementary medical images with different modalities, producing a fused image that comprehensively and objectively reflects lesion characteristics to assist doctors in clinical diagnosis. However, existing fusion methods can only handle a fixed number of modality inputs, such as accepting only two-modal or tri-modal inputs, and cannot directly process varying input quantities, which hinders their application in clinical settings. To tackle this issue, we introduce FlexiD-Fuse, a diffusion-based image fusion network designed to accommodate flexible quantities of input modalities. It can process two-modal and tri-modal medical image fusion end-to-end with the same weights. FlexiD-Fuse transforms the diffusion fusion problem, which supports only fixed-condition inputs, into a maximum likelihood estimation problem based on the diffusion process and hierarchical Bayesian modeling. By incorporating the Expectation-Maximization algorithm into the diffusion sampling iteration process, FlexiD-Fuse can generate high-quality fused images with cross-modal information from source images, independently of the number of input images. We compared the latest two-modal and tri-modal medical image fusion methods, tested them on Harvard datasets, and evaluated them using nine popular metrics. The experimental results show that our method achieves the best performance in medical image fusion with varying inputs. Meanwhile, we conducted extensive extension experiments on infrared-visible, multi-exposure, and multi-focus image fusion tasks with arbitrary numbers of inputs, and compared them with the respective SOTA methods. The results of the extension experiments consistently demonstrate the effectiveness and superiority of our method.

AWM-Fuse: Multi-Modality Image Fusion for Adverse Weather via Global and Local Text Perception

Aug 23, 2025

Abstract: Multi-modality image fusion (MMIF) in adverse weather aims to address the loss of visual information caused by weather-related degradations, providing clearer scene representations. Although a few studies have attempted to incorporate textual information to improve semantic perception, they often lack effective categorization and thorough analysis of textual content. In response, we propose AWM-Fuse, a novel fusion method for adverse weather conditions, designed to handle multiple degradations through global and local text perception within a unified, shared-weight architecture. In particular, a global feature perception module leverages BLIP-produced captions to extract overall scene features and identify primary degradation types, thus promoting generalization across various adverse weather conditions. Complementing this, the local module employs detailed scene descriptions produced by ChatGPT to concentrate on specific degradation effects through concrete textual cues, thereby capturing finer details. Furthermore, textual descriptions are used to constrain the generation of fusion images, effectively steering the network learning process toward better alignment with real semantic labels, thereby promoting the learning of more meaningful visual features. Extensive experiments demonstrate that AWM-Fuse outperforms current state-of-the-art methods in complex weather conditions and downstream tasks. Our code is available at https://github.com/Feecuin/AWM-Fuse.

MambaTrans: Multimodal Fusion Image Translation via Large Language Model Priors for Downstream Visual Tasks

Aug 11, 2025

Abstract: The goal of multimodal image fusion is to integrate complementary information from infrared and visible images, generating multimodal fused images for downstream tasks. Existing downstream pre-training models are typically trained on visible images. However, the significant pixel distribution differences between visible and multimodal fusion images can degrade downstream task performance, sometimes even below that of using only visible images. This paper explores adapting multimodal fused images with significant modality differences to object detection and semantic segmentation models trained on visible images. To address this, we propose MambaTrans, a novel multimodal fusion image modality translator. MambaTrans uses descriptions from a multimodal large language model and masks from semantic segmentation models as input. Its core component, the Multi-Model State Space Block, combines mask-image-text cross-attention and a 3D-Selective Scan Module, enhancing pure visual capabilities. By leveraging object detection prior knowledge, MambaTrans minimizes detection loss during training and captures long-term dependencies among text, masks, and images. This enables favorable results in pre-trained models without adjusting their parameters. Experiments on public datasets show that MambaTrans effectively improves multimodal image performance in downstream tasks.

Dual-Granularity Cross-Modal Identity Association for Weakly-Supervised Text-to-Person Image Matching

Jul 09, 2025

Abstract: Weakly supervised text-to-person image matching, as a crucial approach to reducing models' reliance on large-scale manually labeled samples, holds significant research value. However, existing methods struggle to predict complex one-to-many identity relationships, severely limiting performance improvements. To address this challenge, we propose a local-and-global dual-granularity identity association mechanism. Specifically, at the local level, we explicitly establish cross-modal identity relationships within a batch, reinforcing identity constraints across different modalities and enabling the model to better capture subtle differences and correlations. At the global level, we construct a dynamic cross-modal identity association network with the visual modality as the anchor and introduce a confidence-based dynamic adjustment mechanism, effectively enhancing the model's ability to identify weakly associated samples while improving overall sensitivity. Additionally, we propose an information-asymmetric sample pair construction method combined with consistency learning to tackle hard sample mining and enhance model robustness. Experimental results demonstrate that the proposed method substantially boosts cross-modal matching accuracy, providing an efficient and practical solution for text-to-person image matching.

OTFusion: Bridging Vision-only and Vision-Language Models via Optimal Transport for Transductive Zero-Shot Learning

Jun 16, 2025

Abstract: Transductive zero-shot learning (ZSL) aims to classify unseen categories by leveraging both semantic class descriptions and the distribution of unlabeled test data. While Vision-Language Models (VLMs) such as CLIP excel at aligning visual inputs with textual semantics, they often rely too heavily on class-level priors and fail to capture fine-grained visual cues. In contrast, Vision-only Foundation Models (VFMs) like DINOv2 provide rich perceptual features but lack semantic alignment. To exploit the complementary strengths of these models, we propose OTFusion, a simple yet effective training-free framework that bridges VLMs and VFMs via Optimal Transport. Specifically, OTFusion aims to learn a shared probabilistic representation that aligns visual and semantic information by minimizing the transport cost between their respective distributions. This unified distribution enables coherent class predictions that are both semantically meaningful and visually grounded. Extensive experiments on 11 benchmark datasets demonstrate that OTFusion consistently outperforms the original CLIP model, achieving an average accuracy improvement of nearly $10\%$, all without any fine-tuning or additional annotations. The code will be publicly released after the paper is accepted.
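
The transport-cost idea in the abstract above can be illustrated with a generic entropic optimal transport solver. The sketch below is not the OTFusion algorithm, which the abstract does not specify in detail. It is a plain Sinkhorn iteration in pure Python, and the cost values, the uniform marginals, the `epsilon` setting, and the names `sinkhorn`, `cost`, `plan`, and `predictions` are all assumptions made for illustration. Each row stands for an unlabeled test sample and each column for a class; a low cost means the sample looks similar to that class.

```python
import math


def sinkhorn(cost, row_marginal, col_marginal, epsilon=0.5, n_iters=200):
    """Return an entropic-regularized transport plan between two discrete
    distributions, computed with Sinkhorn row/column scaling."""
    n, m = len(cost), len(cost[0])
    # Gibbs kernel: a low cost gives a large kernel entry.
    kernel = [[math.exp(-cost[i][j] / epsilon) for j in range(m)] for i in range(n)]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(n_iters):
        # Rescale rows so each row of the plan matches its target mass.
        u = [row_marginal[i] / sum(kernel[i][j] * v[j] for j in range(m))
             for i in range(n)]
        # Rescale columns so each column of the plan matches its target mass.
        v = [col_marginal[j] / sum(kernel[i][j] * u[i] for i in range(n))
             for j in range(m)]
    return [[u[i] * kernel[i][j] * v[j] for j in range(m)] for i in range(n)]


# Hypothetical costs (for example, 1 - similarity) for 4 samples and 2 classes.
cost = [
    [0.1, 0.9],
    [0.2, 0.8],
    [0.7, 0.3],
    [0.9, 0.2],
]
row_marginal = [0.25] * 4   # every sample carries equal mass (assumption)
col_marginal = [0.5, 0.5]   # classes assumed balanced (assumption)

plan = sinkhorn(cost, row_marginal, col_marginal)

# Read each sample's class off the plan: the column that receives most of its mass.
predictions = [max(range(len(row)), key=lambda j: row[j]) for row in plan]
print(predictions)
```

The column marginal acts as a transductive prior over the whole test set: unlike a per-sample argmin over costs, the plan has to distribute the total mass across classes according to `col_marginal`, so the assignment of one sample depends on the others.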

BSAFusion: A Bidirectional Stepwise Feature Alignment Network for Unaligned Medical Image Fusion

Dec 11, 2024

Abstract: If unaligned multimodal medical images can be simultaneously aligned and fused using a single-stage approach within a unified processing framework, it will not only achieve mutual promotion of dual tasks but also help reduce the complexity of the model. However, the design of this model faces the challenge of incompatible requirements for feature fusion and alignment; specifically, feature alignment requires consistency among corresponding features, whereas feature fusion requires the features to be complementary to each other. To address this challenge, this paper proposes an unaligned medical image fusion method called the Bidirectional Stepwise Feature Alignment and Fusion (BSFA-F) strategy. To reduce the negative impact of modality differences on cross-modal feature matching, we incorporate the Modal Discrepancy-Free Feature Representation (MDF-FR) method into BSFA-F. MDF-FR utilizes a Modality Feature Representation Head (MFRH) to integrate the global information of the input image. By injecting the information contained in MFRH of the current image into other modality images, it effectively reduces the impact of modality differences on feature alignment while preserving the complementary information carried by different images. In terms of feature alignment, BSFA-F employs a bidirectional stepwise alignment deformation field prediction strategy based on the path independence of vector displacement between two points. This strategy solves the problem of large spans and inaccurate deformation field prediction in single-step alignment. Finally, a Multi-Modal Feature Fusion block fuses the aligned features. The experimental results across multiple datasets demonstrate the effectiveness of our method. The source code is available at https://github.com/slrl123/BSAFusion.

PP-SSL: Priority-Perception Self-Supervised Learning for Fine-Grained Recognition

Nov 28, 2024

Abstract: Self-supervised learning is emerging in fine-grained visual recognition with promising results. However, existing self-supervised learning methods are often susceptible to irrelevant patterns in self-supervised tasks and lack the capability to represent the subtle differences inherent in fine-grained visual recognition (FGVR), resulting in generally poorer performance. To address this, we propose a novel Priority-Perception Self-Supervised Learning framework, denoted as PP-SSL, which can effectively filter out irrelevant feature interference and extract more subtle discriminative features throughout the training process. Specifically, it comprises two main parts: the Anti-Interference Strategy (AIS) and the Image-Aided Distinction Module (IADM). In AIS, a fine-grained textual description corpus is established, and a knowledge distillation strategy is devised to guide the model in eliminating irrelevant features while enhancing the learning of more discriminative and high-quality features. IADM shows that extracting GradCAM from the original image effectively reveals subtle differences between fine-grained categories. Compared to features extracted from intermediate or output layers, the original image retains more detail, allowing for a deeper exploration of the subtle distinctions among fine-grained classes. Extensive experimental results indicate that PP-SSL significantly outperforms existing methods across various datasets, highlighting its effectiveness in fine-grained recognition tasks. Our code will be made publicly available upon publication.
