Xindong Wu

OPP-Miner: Order-preserving sequential pattern mining

Feb 09, 2022

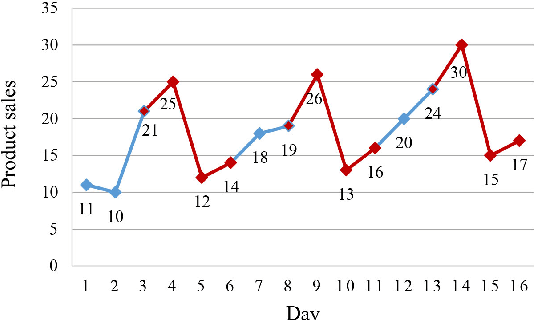

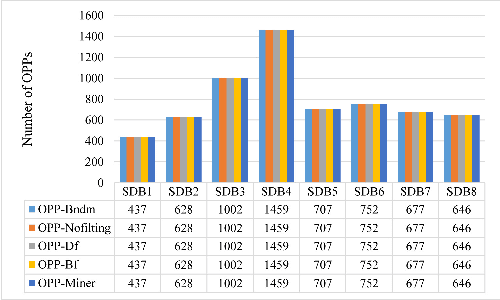

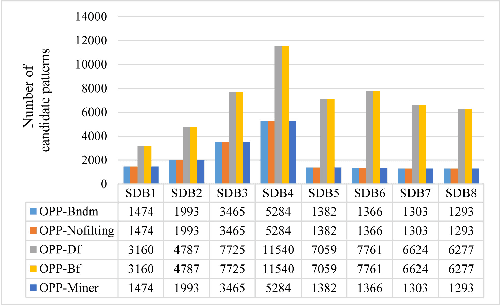

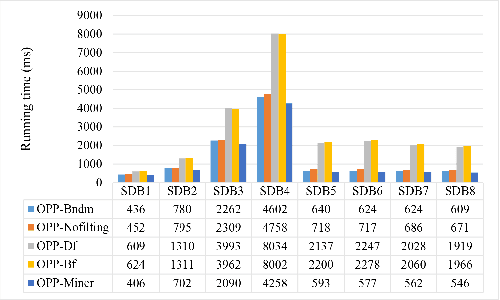

Abstract: A time series is a collection of measurements in chronological order. Discovering patterns from time series is useful in many domains, such as stock analysis, disease detection, and weather forecasting. To discover patterns, existing methods often convert time series data into another form, such as a nominal/symbolic format, to reduce dimensionality, which inevitably distorts the data values. Moreover, existing methods mainly neglect the order relationships between time series values. To tackle these issues, inspired by order-preserving matching, this paper proposes an Order-Preserving sequential Pattern (OPP) mining method, which represents patterns based on the order relationships of the time series data. An inherent advantage of such a representation is that the trend of a time series can be represented by the relative order of its underlying values. To obtain frequent trends in time series, we propose the OPP-Miner algorithm to mine patterns with the same trend (sub-sequences with the same relative order). OPP-Miner employs filtration and verification strategies to calculate the support and uses a pattern fusion strategy to generate candidate patterns. To compress the result set, we also study finding the maximal OPPs. Experiments validate that OPP-Miner is not only efficient and scalable but can also discover similar sub-sequences in time series. In addition, case studies show that our algorithms have high utility in analyzing the COVID-19 epidemic by identifying critical trends and improving clustering performance.
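The notion of "sub-sequences with the same relative order" can be illustrated with a minimal sketch. This is a brute-force support count, not the filtration, verification, or pattern fusion strategies of OPP-Miner; the function names `relative_order` and `support` are hypothetical, and the sketch assumes all values within a window are distinct.

```python
from typing import Sequence, Tuple


def relative_order(window: Sequence[float]) -> Tuple[int, ...]:
    """Return the rank of each value in the window (1 = smallest).

    Assumes the values in the window are distinct.
    """
    # Indices of the window sorted by their values.
    order = sorted(range(len(window)), key=lambda i: window[i])
    ranks = [0] * len(window)
    for rank, idx in enumerate(order, start=1):
        ranks[idx] = rank
    return tuple(ranks)


def support(series: Sequence[float], pattern: Sequence[int]) -> int:
    """Count the windows of `series` whose relative order equals `pattern`.

    Brute force: every window of length len(pattern) is ranked and compared.
    """
    m = len(pattern)
    target = tuple(pattern)
    return sum(
        1
        for start in range(len(series) - m + 1)
        if relative_order(series[start:start + m]) == target
    )


if __name__ == "__main__":
    series = [1, 5, 3, 8, 6, 9, 2]
    # Pattern (1, 3, 2) means "low, high, middle": a rise followed by a partial fall.
    print(support(series, (1, 3, 2)))
```

Here the windows `[1, 5, 3]` and `[3, 8, 6]` have different values but the same relative order `(1, 3, 2)`, so both count as occurrences of the same trend.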

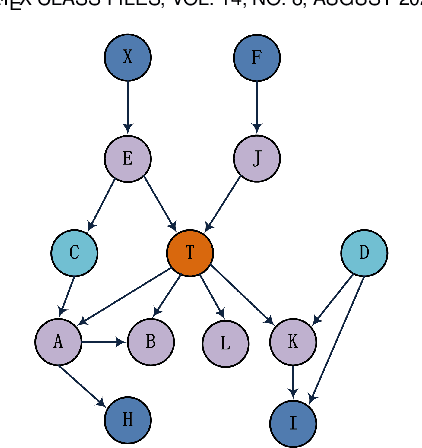

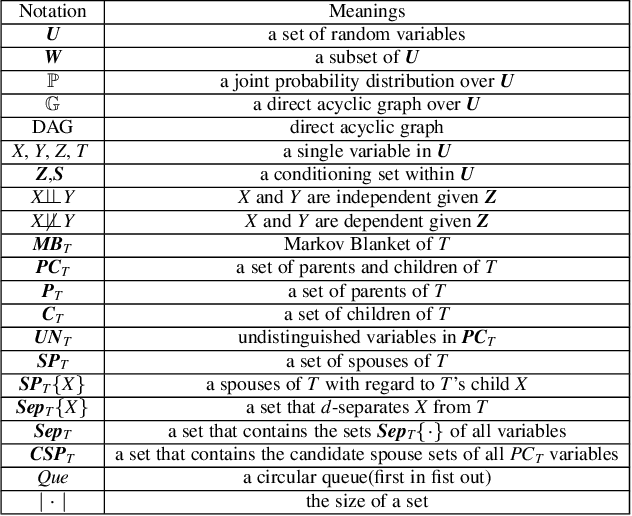

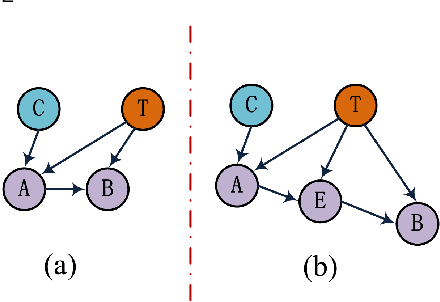

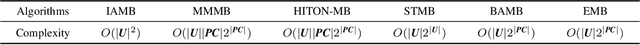

Towards Efficient Local Causal Structure Learning

Feb 28, 2021

Abstract: Local causal structure learning aims to discover and distinguish direct causes (parents) and direct effects (children) of a variable of interest from data. Although progress has been made, existing methods need to search a large space to distinguish direct causes from direct effects of a target variable T. To tackle this issue, we propose a novel Efficient Local Causal Structure learning algorithm, named ELCS. Specifically, we first propose the concept of N-structures, then design an efficient Markov Blanket (MB) discovery subroutine that integrates MB learning with N-structures to learn the MB of T and simultaneously distinguish direct causes from direct effects of T. With the proposed MB subroutine, ELCS starts from the target variable, sequentially finds the MBs of variables connected to the target variable, and simultaneously constructs local causal structures over the MBs until the direct causes and direct effects of the target variable have been distinguished. Extensive experiments on eight Bayesian networks validate that ELCS achieves better accuracy and efficiency than state-of-the-art algorithms.
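For readers unfamiliar with the term, the Markov blanket of a variable in a Bayesian network consists of its parents, its children, and the other parents of its children (spouses). The sketch below reads the MB off a known directed graph; it is not ELCS, which learns this structure from data, and the function name `markov_blanket` and the example graph are hypothetical.

```python
from typing import Iterable, Set, Tuple


def markov_blanket(edges: Iterable[Tuple[str, str]], target: str) -> Set[str]:
    """Return the Markov blanket of `target` in a DAG given as (parent, child) edges."""
    edges = list(edges)
    # Direct causes: nodes with an edge into the target.
    parents = {u for u, v in edges if v == target}
    # Direct effects: nodes the target has an edge into.
    children = {v for u, v in edges if u == target}
    # Spouses: other parents of the target's children.
    spouses = {u for u, v in edges if v in children and u != target}
    return parents | children | spouses


if __name__ == "__main__":
    # Hypothetical graph: D -> A -> T -> C <- B
    dag = [("D", "A"), ("A", "T"), ("T", "C"), ("B", "C")]
    print(sorted(markov_blanket(dag, "T")))
```

In this example, `A` is a direct cause of `T`, `C` is a direct effect, and `B` enters the blanket as a spouse; `D` is excluded because it is separated from `T` by `A`.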

Trustworthy Preference Completion in Social Choice

Dec 14, 2020

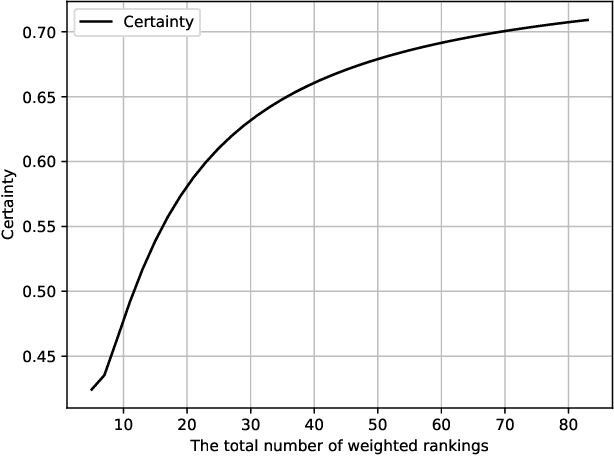

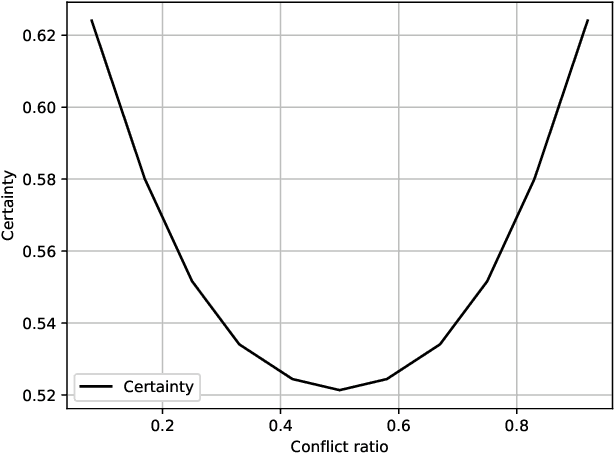

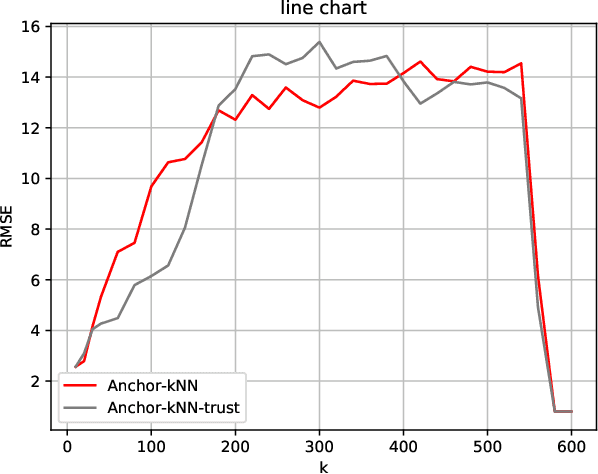

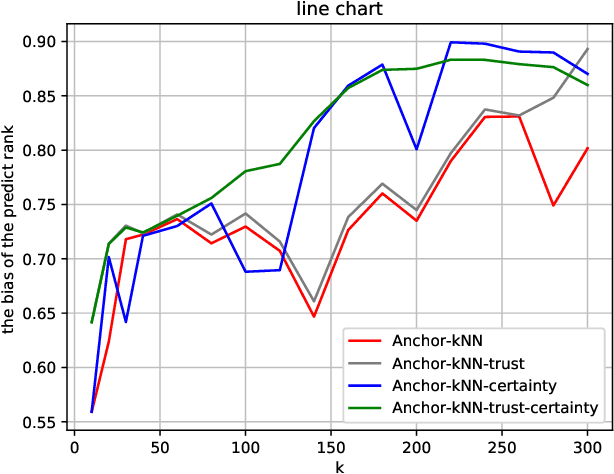

Abstract: Because it is often impractical to ask agents to provide linear orders over all alternatives, preference completion is needed for the resulting partial rankings. Specifically, the personalized preference of each agent over all the alternatives can be estimated from the partial rankings of neighboring agents over subsets of alternatives. However, since agents' rankings are nondeterministic and may contain noise, it is important to conduct trustworthy preference completion. In this paper, a trust-based anchor-kNN algorithm is first proposed to find the $k$-nearest trustworthy neighbors of an agent using trust-oriented Kendall-Tau distances, which handles cases where an agent exhibits irrational behavior or provides only noisy rankings. Then, for alternative pairs, a bijection can be built from the ranking space to the preference space, and its certainty and conflict can be evaluated using a well-built statistical measurement, the Probability-Certainty Density Function. A common voting rule over the first $k$ trustworthy neighboring agents, based on certainty and conflict, can then be applied to conduct trustworthy preference completion. The properties of the proposed certainty and conflict have been studied empirically, and the proposed approach has been experimentally validated against state-of-the-art approaches on several data sets.
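As background for the distance mentioned above, the standard Kendall-Tau distance between two rankings counts the pairs of alternatives that the rankings order differently. The sketch below implements only this standard form, assuming both rankings are full orders over the same alternatives; the trust-oriented variant used by the paper is not reproduced here, and the function name is hypothetical.

```python
from itertools import combinations
from typing import Hashable, Sequence


def kendall_tau_distance(rank_a: Sequence[Hashable], rank_b: Sequence[Hashable]) -> int:
    """Count the pairs of alternatives ordered differently by the two rankings.

    Each ranking lists the same alternatives from most to least preferred.
    """
    pos_a = {item: i for i, item in enumerate(rank_a)}
    pos_b = {item: i for i, item in enumerate(rank_b)}
    return sum(
        1
        for x, y in combinations(rank_a, 2)
        # A pair is discordant when the two rankings disagree on its order.
        if (pos_a[x] - pos_a[y]) * (pos_b[x] - pos_b[y]) < 0
    )


if __name__ == "__main__":
    print(kendall_tau_distance(["a", "b", "c"], ["a", "c", "b"]))
```

A distance of zero means the two agents rank every pair identically, while a fully reversed ranking over n alternatives yields the maximum of n(n-1)/2.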

Chinese Lexical Simplification

Oct 14, 2020

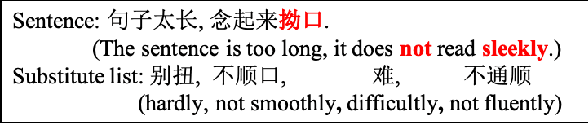

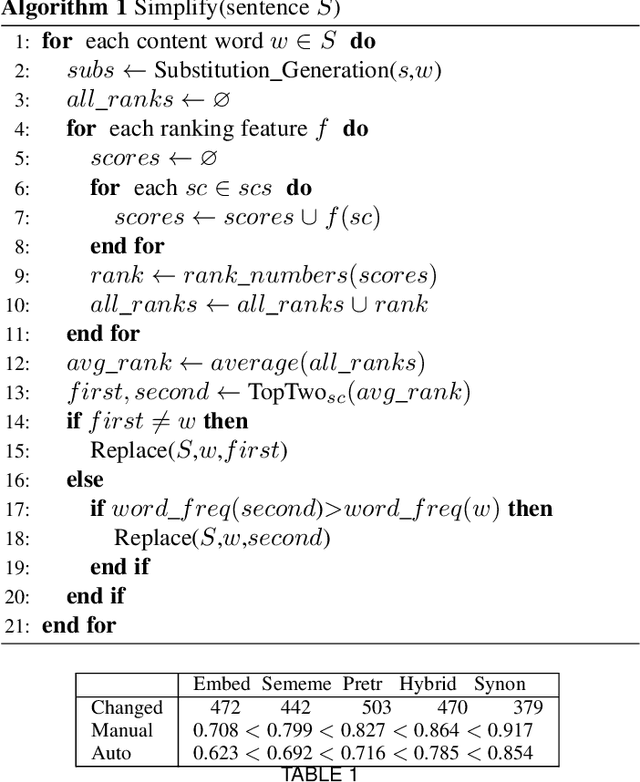

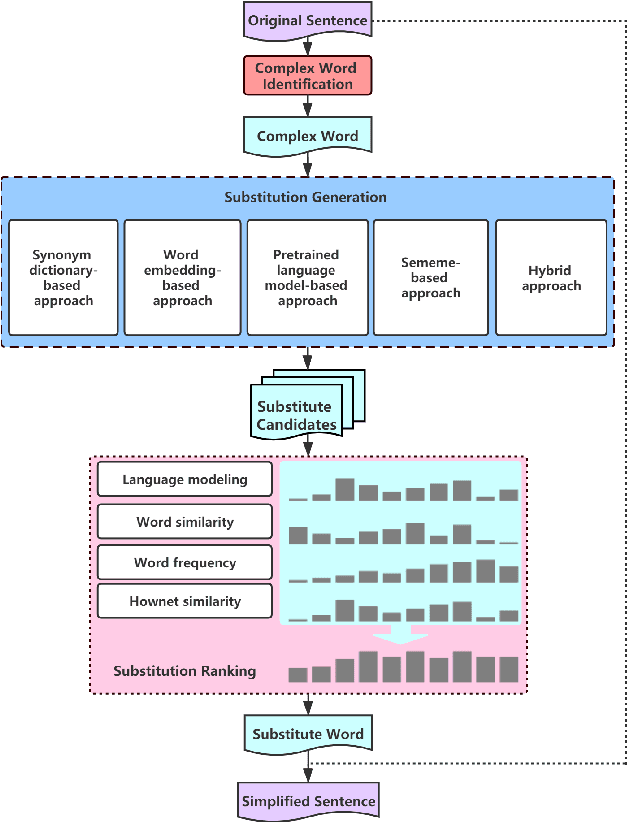

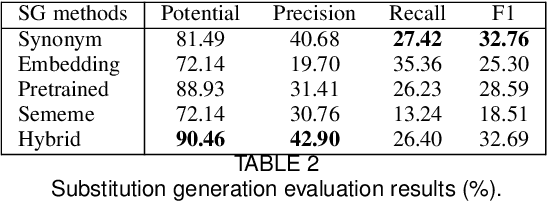

Abstract: Lexical simplification, the process of replacing complex words in a given sentence with simpler alternatives of equivalent meaning, has attracted much attention in many languages. Although the richness of the Chinese vocabulary makes text very difficult to read for children and non-native speakers, there has been no research on the Chinese lexical simplification (CLS) task. To circumvent difficulties in acquiring annotations, we manually create the first benchmark dataset for CLS, which can be used to evaluate lexical simplification systems automatically. To enable a more thorough comparison, we present five different types of methods as baselines for generating substitute candidates for a complex word: a synonym-based approach, a word embedding-based approach, a pretrained language model-based approach, a sememe-based approach, and a hybrid approach. Finally, we design an experimental evaluation of these baselines and discuss their advantages and disadvantages. To the best of our knowledge, this is the first study of the CLS task.

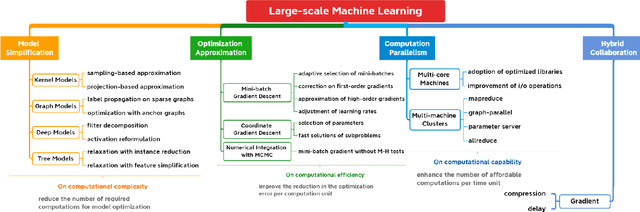

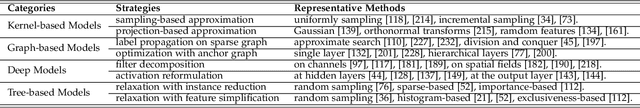

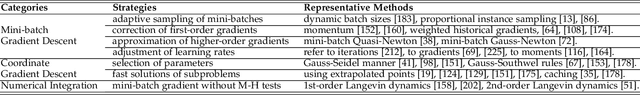

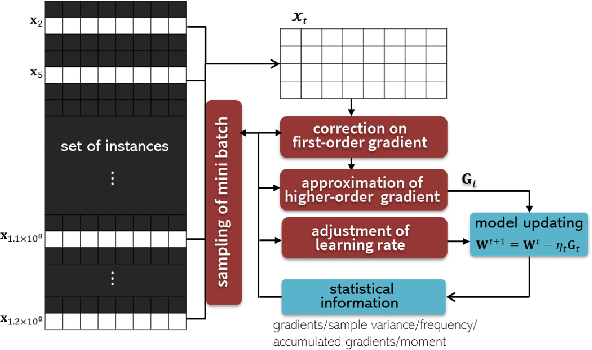

A Survey on Large-scale Machine Learning

Aug 10, 2020

Abstract: Machine learning can provide deep insights into data, allowing machines to make high-quality predictions, and has been widely used in real-world applications such as text mining, visual classification, and recommender systems. However, most sophisticated machine learning approaches suffer from huge time costs when operating on large-scale data. This issue calls for Large-scale Machine Learning (LML), which aims to learn patterns from big data efficiently and with comparable performance. In this paper, we offer a systematic survey of existing LML methods to provide a blueprint for future developments in this area. We first divide these LML methods according to the ways in which they improve scalability: 1) model simplification on computational complexities, 2) optimization approximation on computational efficiency, and 3) computation parallelism on computational capabilities. Then we categorize the methods in each perspective according to their targeted scenarios and introduce representative methods in line with their intrinsic strategies. Lastly, we analyze their limitations and discuss potential directions as well as open issues that are promising to address in the future.

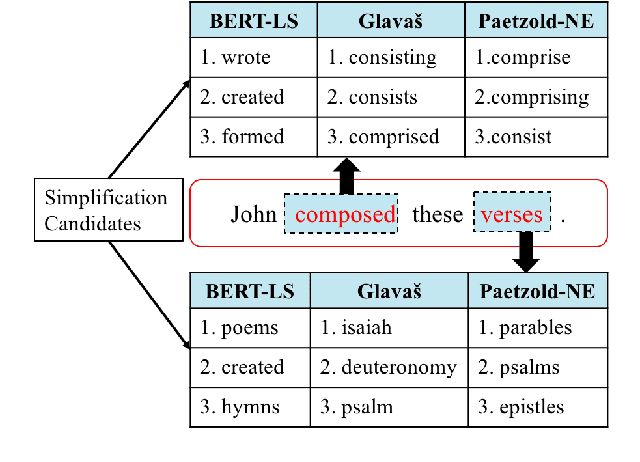

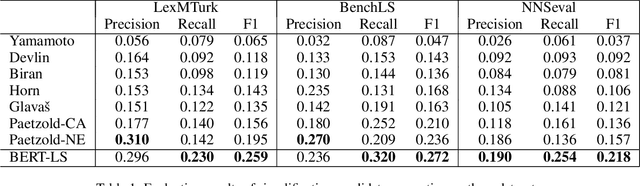

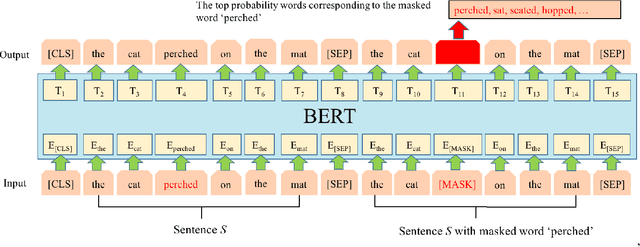

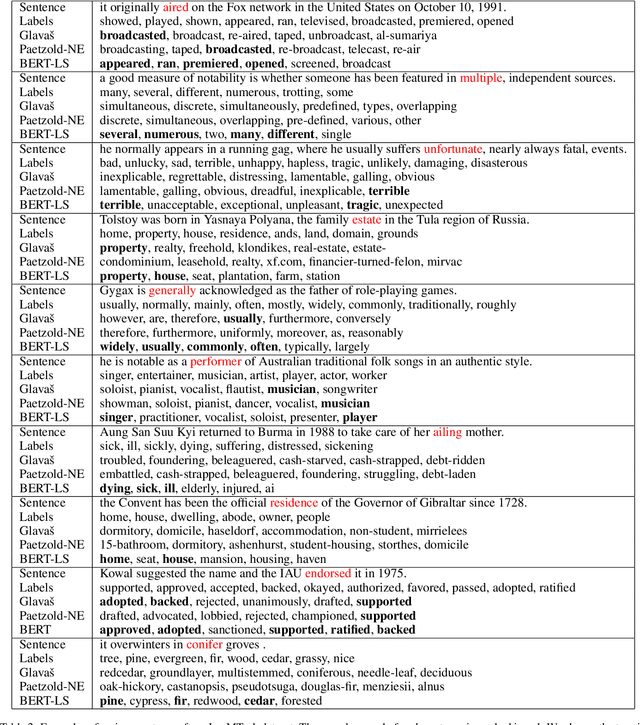

LSBert: A Simple Framework for Lexical Simplification

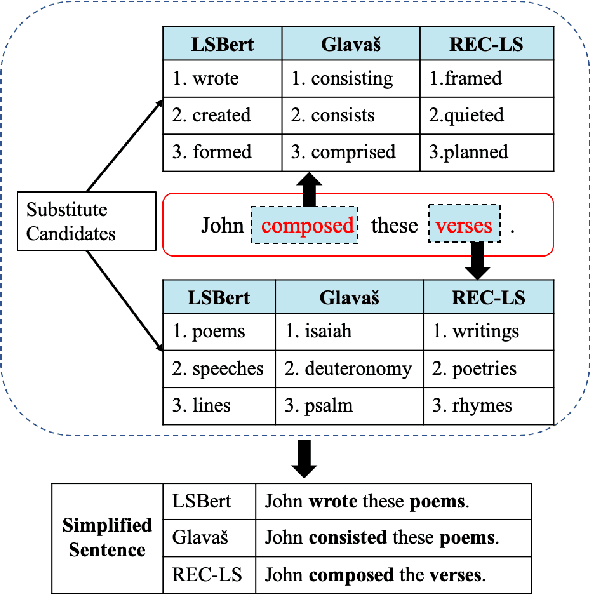

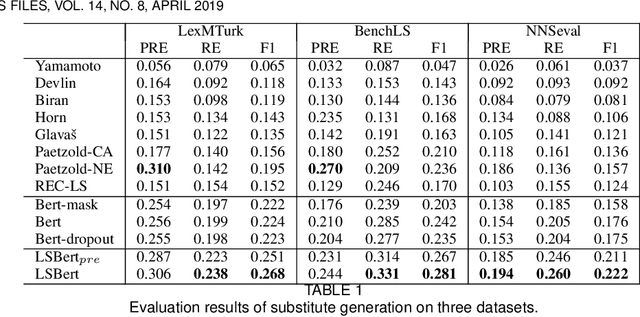

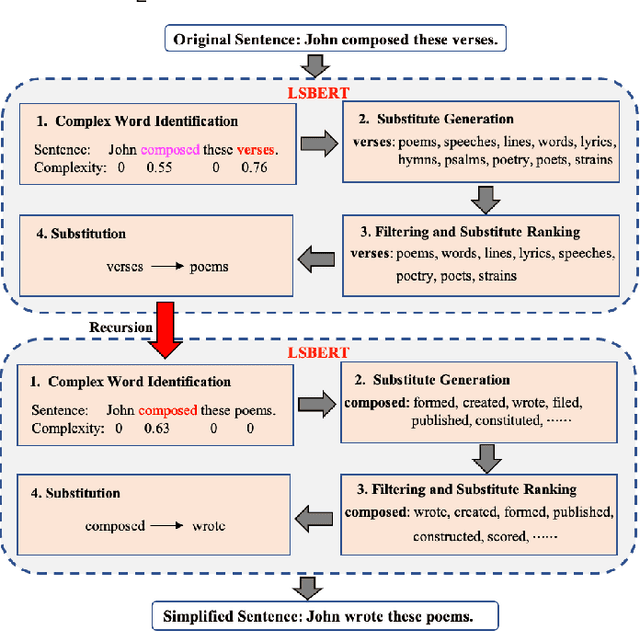

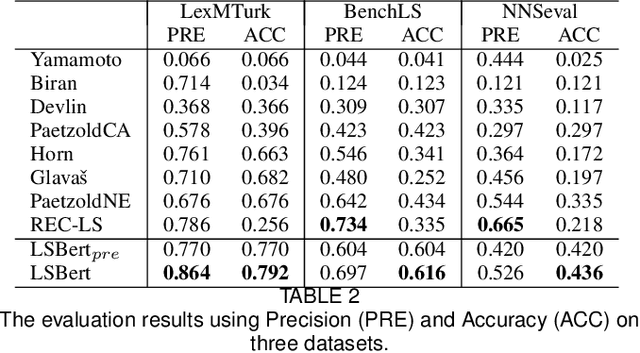

Jun 25, 2020

Abstract: Lexical simplification (LS) aims to replace complex words in a given sentence with simpler alternatives of equivalent meaning, in order to simplify the sentence. Recent unsupervised lexical simplification approaches rely only on the complex word itself, regardless of the given sentence, to generate candidate substitutions, which inevitably produces a large number of spurious candidates. In this paper, we propose LSBert, a lexical simplification framework based on the pretrained representation model Bert, which is capable of (1) making use of the wider context both when detecting the words in need of simplification and when generating substitute candidates, and (2) taking five high-quality features into account for ranking candidates, including Bert prediction order, a Bert-based language model, and the paraphrase database PPDB, in addition to the word frequency and word similarity commonly used in other LS methods. We show that our system outputs lexical simplifications that are grammatically correct and semantically appropriate, and obtains a clear improvement over the baselines, outperforming the state-of-the-art by 29.8 Accuracy points on three well-known benchmarks.

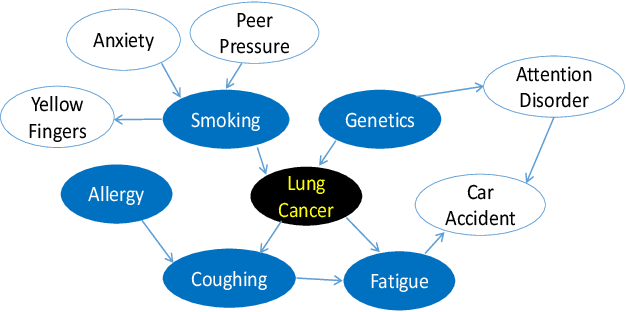

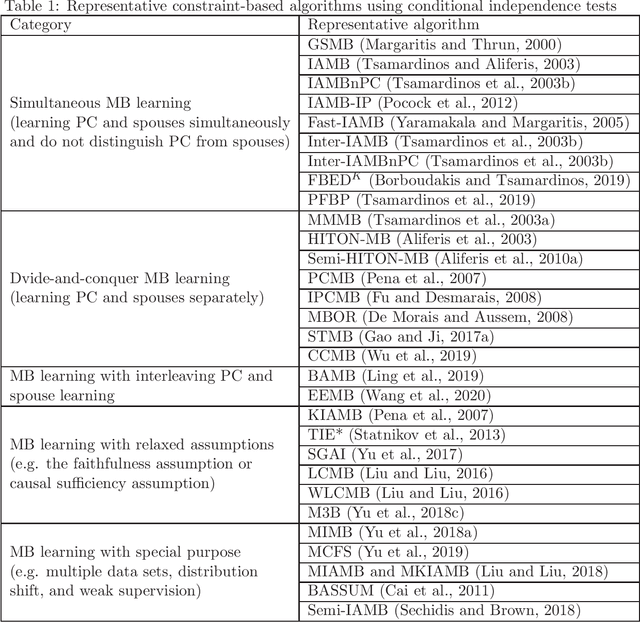

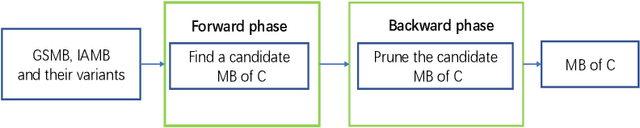

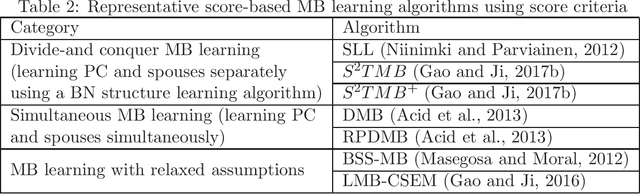

Causality-based Feature Selection: Methods and Evaluations

Nov 17, 2019

Abstract: Feature selection is a crucial preprocessing step in data analytics and machine learning. Classical feature selection algorithms select features based on the correlations between predictive features and the class variable and do not attempt to capture causal relationships between them. It has been shown that knowledge about the causal relationships between features and the class variable has potential benefits for building interpretable and robust prediction models, since causal relationships imply the underlying mechanism of a system. Consequently, causality-based feature selection has gradually attracted increasing attention, and many algorithms have been proposed. In this paper, we present a comprehensive review of recent advances in causality-based feature selection. To facilitate the development of new algorithms in this research area and make it easy to compare new methods with existing ones, we develop the first open-source package, called CausalFS, which consists of most of the representative causality-based feature selection algorithms (available at https://github.com/kuiy/CausalFS). Using CausalFS, we conduct extensive experiments to compare the representative algorithms on both synthetic and real-world data sets. Finally, we discuss some challenging problems to be tackled in future causality-based feature selection research.

A Simple BERT-Based Approach for Lexical Simplification

Aug 16, 2019

Abstract: Lexical simplification (LS) aims to replace complex words in a given sentence with simpler alternatives of equivalent meaning. Recent unsupervised lexical simplification approaches rely only on the complex word itself, regardless of the given sentence, to generate candidate substitutions, which inevitably produces a large number of spurious candidates. We present a simple BERT-based LS approach that makes use of the pre-trained unsupervised deep bidirectional representations of BERT. Despite being entirely unsupervised, our approach obtains a clear improvement in experiments over baselines that leverage linguistic databases and parallel corpora, outperforming the state-of-the-art by more than 11 Accuracy points on three well-known benchmarks.

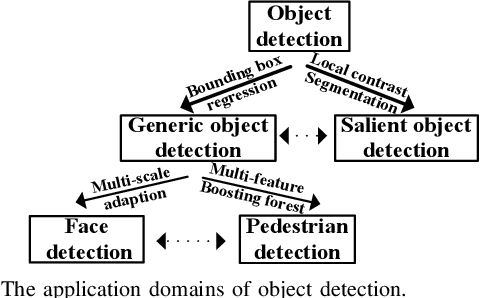

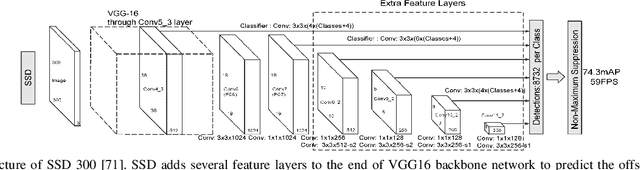

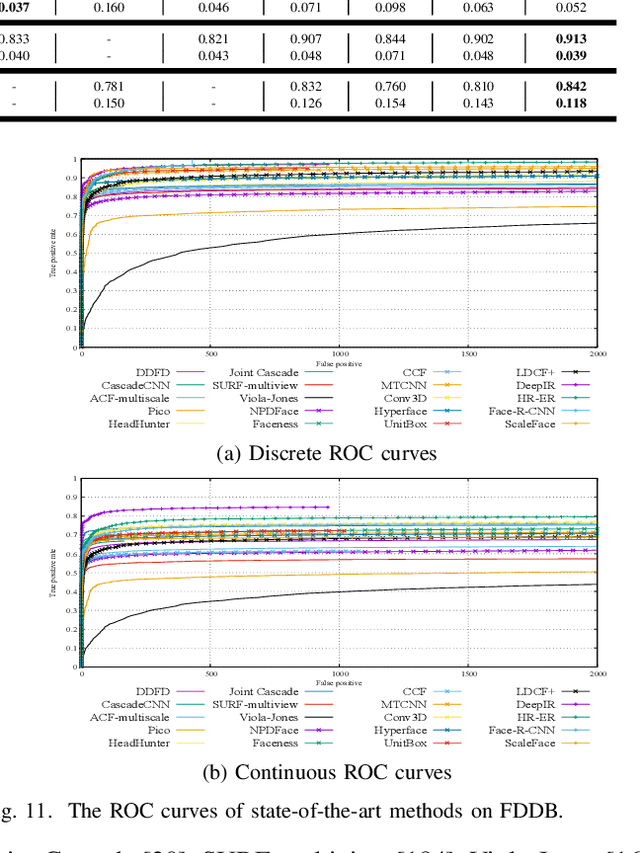

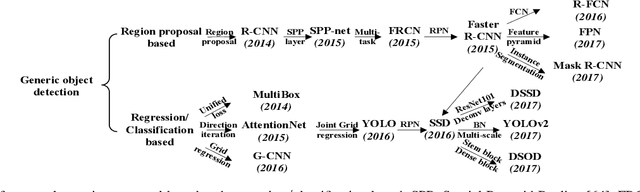

Object Detection with Deep Learning: A Review

Jul 15, 2018

Abstract: Due to object detection's close relationship with video analysis and image understanding, it has attracted much research attention in recent years. Traditional object detection methods are built on handcrafted features and shallow trainable architectures. Their performance easily stagnates when constructing complex ensembles that combine multiple low-level image features with high-level context from object detectors and scene classifiers. With the rapid development of deep learning, more powerful tools, which are able to learn semantic, high-level, deeper features, have been introduced to address the problems existing in traditional architectures. These models differ in network architecture, training strategy, optimization function, and so on. In this paper, we provide a review of deep learning based object detection frameworks. Our review begins with a brief introduction to the history of deep learning and its representative tool, namely the Convolutional Neural Network (CNN). Then we focus on typical generic object detection architectures, along with some modifications and useful tricks to further improve detection performance. As distinct specific detection tasks exhibit different characteristics, we also briefly survey several specific tasks, including salient object detection, face detection, and pedestrian detection. Experimental analyses are also provided to compare various methods and draw some meaningful conclusions. Finally, several promising directions and tasks are provided to serve as guidelines for future work in both object detection and relevant neural network based learning systems.

AAANE: Attention-based Adversarial Autoencoder for Multi-scale Network Embedding

Mar 24, 2018

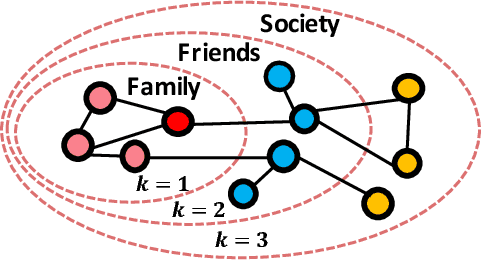

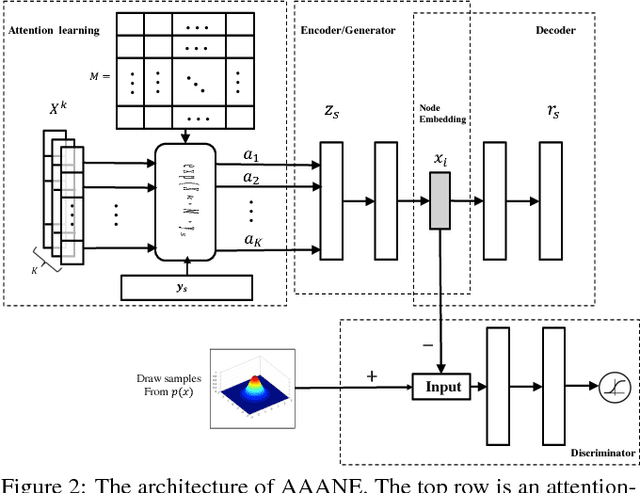

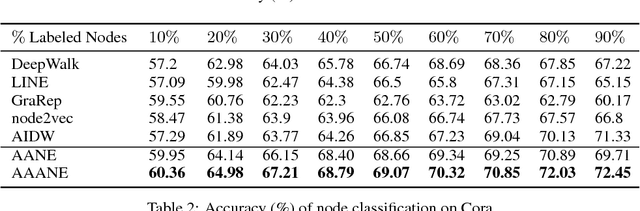

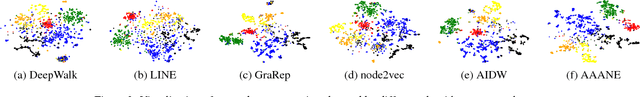

Abstract: Network embedding represents nodes in a continuous vector space and preserves structure information from the network. Existing methods usually adopt a "one-size-fits-all" approach to multi-scale structure information, such as first- and second-order proximity of nodes, ignoring the fact that different scales play different roles in embedding learning. In this paper, we propose an Attention-based Adversarial Autoencoder Network Embedding (AAANE) framework, which promotes the collaboration of different scales and lets them vote for robust representations. The proposed AAANE consists of two components: 1) an attention-based autoencoder that effectively captures the highly non-linear network structure and can de-emphasize irrelevant scales during training, and 2) an adversarial regularization that guides the autoencoder to learn robust representations by matching the posterior distribution of the latent embeddings to a given prior distribution. This is the first attempt to introduce attention mechanisms to multi-scale network embedding. Experimental results on real-world networks show that our learned attention parameters are different for every network and that the proposed approach outperforms existing state-of-the-art approaches for network embedding.
