Xin Hu

Human Emotion Knowledge Representation Emerges in Large Language Model and Supports Discrete Emotion Inference

Feb 21, 2023

Abstract: How humans infer discrete emotions is a fundamental research question in psychology. While conceptual knowledge about emotions (emotion knowledge) has been suggested to be essential for emotion inference, evidence to date is mostly indirect and inconclusive. As large language models (LLMs) have been shown to support effective representations of various kinds of human conceptual knowledge, the present study employed artificial neurons in LLMs to investigate the mechanism of human emotion inference. With artificial neurons activated by prompts, the LLM (RoBERTa) demonstrated a conceptual structure of 27 discrete emotions similar to that observed in human behavior. Furthermore, the LLM-based conceptual structure revealed a human-like reliance on 14 underlying conceptual attributes of emotions for emotion inference. Most importantly, by manipulating attribute-specific neurons, we found that the LLM's emotion inference performance deteriorated, and that the deterioration correlated with how effectively the corresponding conceptual attributes are represented on the human side. Our findings provide direct evidence for the emergence of emotion knowledge representation in large language models and suggest its causal support for discrete emotion inference.

GPTR: Gestalt-Perception Transformer for Diagram Object Detection

Dec 29, 2022

Abstract: Diagram object detection is the basis of practical applications such as textbook question answering. Because a diagram mainly consists of simple lines and color blocks, its visual features are sparser than those of natural images. In addition, diagrams usually express diverse knowledge, so they contain many low-frequency object categories. As a result, traditional data-driven detection models are not well suited to diagrams. In this work, we propose a gestalt-perception transformer model for diagram object detection, which is based on an encoder-decoder architecture. Gestalt perception comprises a series of laws that explain human perception: the human visual system tends to perceive patches in an image that are similar, close, or connected without abrupt directional changes as a single perceptual object. Inspired by these laws, we build a gestalt-perception graph in the transformer encoder, with diagram patches as nodes and the relationships between patches as edges. This graph aims to group the patches into objects via the laws of similarity, proximity, and smoothness implied in these edges, so that meaningful objects can be effectively detected. The experimental results demonstrate that the proposed GPTR achieves the best results in the diagram object detection task. Our model also obtains results comparable to those of competitors in natural image object detection.
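The grouping idea in the abstract above can be illustrated with a minimal sketch. It is not the GPTR model: it assumes hand-picked thresholds, treats each patch as a hypothetical `(x, y, feature_vector)` tuple, links two patches only when they satisfy both the proximity and the similarity law, and omits the smoothness law entirely. The function name `group_patches` and its parameters are illustrative assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity of two vectors; 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def group_patches(patches, max_dist=1.5, min_sim=0.9):
    """Group patches into objects via a proximity/similarity graph.

    patches: list of (x, y, feature_vector) tuples (hypothetical format).
    Returns one group label per patch; patches joined by a chain of
    edges share a label (connected components via union-find).
    """
    n = len(patches)
    parent = list(range(n))

    def find(i):
        # Follow parent links to the root, halving the path on the way.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            xi, yi, fi = patches[i]
            xj, yj, fj = patches[j]
            close = math.hypot(xi - xj, yi - yj) <= max_dist  # proximity law
            similar = cosine(fi, fj) >= min_sim               # similarity law
            if close and similar:
                parent[find(i)] = find(j)  # add an edge: merge the two groups

    return [find(i) for i in range(n)]


# Toy example: two nearby, similar patches per object, objects far apart.
toy_patches = [
    (0.0, 0.0, [1.0, 0.0]),
    (1.0, 0.0, [1.0, 0.05]),
    (5.0, 5.0, [0.0, 1.0]),
    (6.0, 5.0, [0.1, 1.0]),
]
labels = group_patches(toy_patches)
```

In this toy input the first two patches end up in one group and the last two in another, because only those pairs are both close and similar.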

Dynamic Event-Triggered Discrete-Time Linear Time-Varying System with Privacy-Preservation

Oct 28, 2022

Abstract: This paper focuses on discrete-time wireless sensor networks with privacy preservation. In practical applications, information exchange between sensors is subject to attacks. To counter the information leakage caused by attacks during transmission, privacy preservation is introduced for the system states. To use communication resources more effectively, a dynamic event-triggered set-membership estimator is designed. Moreover, the privacy of the system is analyzed to ensure the security of the real data. The set-membership estimator with differential privacy is then analyzed using recursive convex optimization, and the steady-state performance of the system is studied. Finally, an example is presented to demonstrate the feasibility of the proposed distributed filter with privacy-preserving analysis.

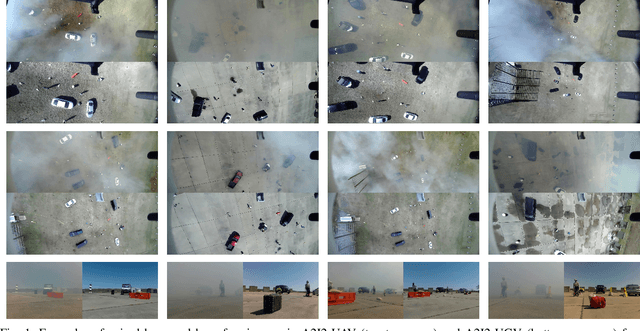

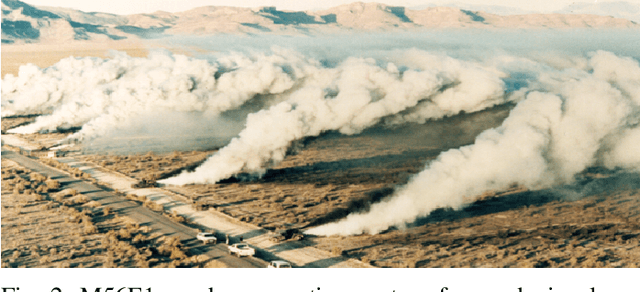

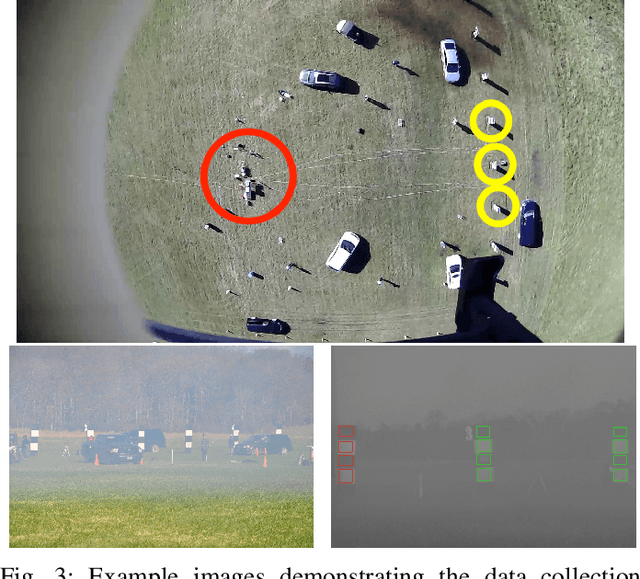

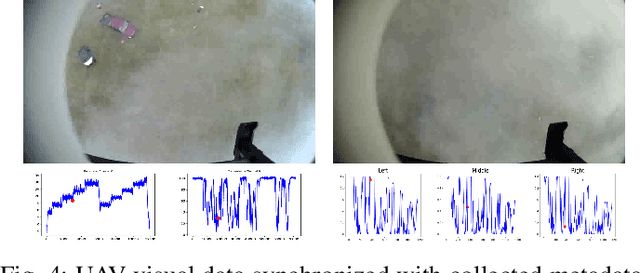

A Multi-purpose Real Haze Benchmark with Quantifiable Haze Levels and Ground Truth

Jun 13, 2022

Abstract: Imagery collected from outdoor visual environments is often degraded by the presence of dense smoke or haze. A key challenge for research on scene understanding in these degraded visual environments (DVE) is the lack of representative benchmark datasets. These datasets are required to evaluate state-of-the-art object recognition and other computer vision algorithms in degraded settings. In this paper, we address some of these limitations by introducing the first paired real image benchmark dataset with hazy and haze-free images and in-situ haze density measurements. This dataset was produced in a controlled environment with professional smoke-generating machines that covered the entire scene, and consists of images captured from the perspective of both an unmanned aerial vehicle (UAV) and an unmanned ground vehicle (UGV). We also evaluate a set of representative state-of-the-art dehazing approaches as well as object detectors on the dataset. The full dataset presented in this paper, including the ground truth object classification bounding boxes and haze density measurements, is provided for the community to evaluate their algorithms at: https://a2i2-archangel.vision. A subset of this dataset has been used for the Object Detection in Haze Track of CVPR UG2 2022 challenge.

E^2TAD: An Energy-Efficient Tracking-based Action Detector

Apr 09, 2022

Abstract: Video action detection (spatio-temporal action localization) is usually the starting point for human-centric intelligent analysis of videos. It has high practical impact on many applications across robotics, security, healthcare, etc. The two-stage paradigm of Faster R-CNN in object detection inspires a standard paradigm for video action detection, i.e., first generating person proposals and then classifying their actions. However, none of the existing solutions can provide fine-grained action detection at the "who-when-where-what" level. This paper presents a tracking-based solution to accurately and efficiently localize predefined key actions spatially (by predicting the associated target IDs and locations) and temporally (by predicting the time in exact frame indices). This solution won first place in the UAV-Video Track of the 2021 Low-Power Computer Vision Challenge (LPCVC).

Learning First-Order Rules with Relational Path Contrast for Inductive Relation Reasoning

Oct 17, 2021

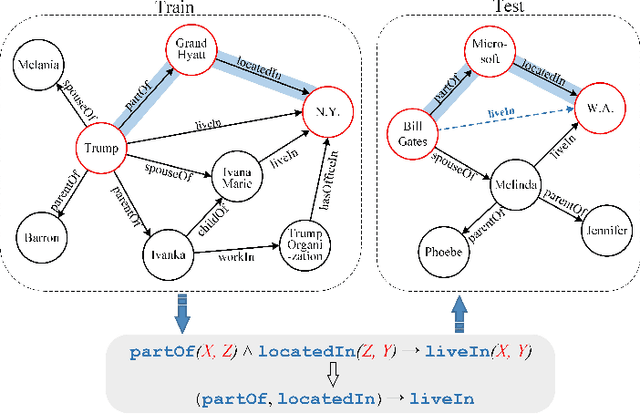

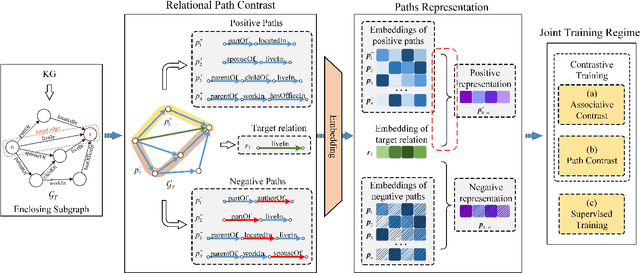

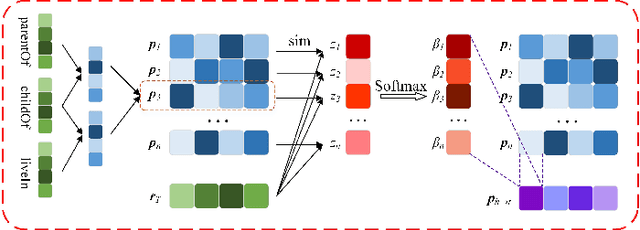

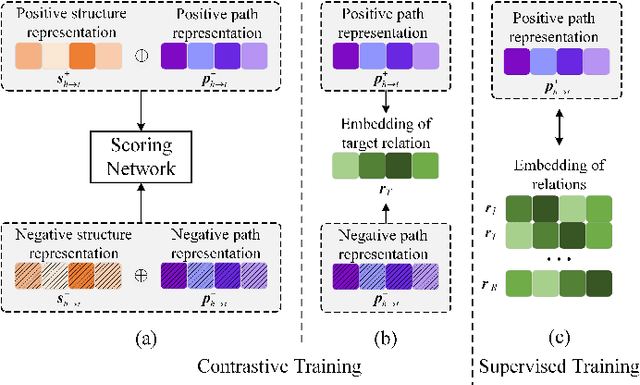

Abstract: Relation reasoning in knowledge graphs (KGs) aims at predicting missing relations in incomplete triples. The dominant paradigm learns embeddings of relations and entities, which is limited to a transductive setting and is restricted in processing unseen entities in an inductive setting. Previous inductive methods are scalable and consume fewer resources. They utilize the structure of entities and triples in subgraphs to achieve inductive ability. However, to obtain better reasoning results, the model should acquire entity-independent relational semantics in latent rules and address the deficient supervision caused by the scarcity of rules in subgraphs. To address these issues, we propose a novel graph convolutional network (GCN)-based approach for interpretable inductive reasoning with relational path contrast, named RPC-IR. RPC-IR first extracts relational paths between two entities and learns their representations, and then introduces a contrastive strategy by constructing positive and negative relational paths. A joint training strategy considering both supervised and contrastive information is also proposed. Comprehensive experiments on three inductive datasets show that RPC-IR achieves outstanding performance compared with the latest inductive reasoning methods and can explicitly represent logical rules for interpretability.

Contrastive Learning of Subject-Invariant EEG Representations for Cross-Subject Emotion Recognition

Sep 20, 2021

Abstract: Emotion recognition plays a vital role in human-machine interactions and daily healthcare. EEG signals have been reported to be informative and reliable for emotion recognition in recent years. However, the inter-subject variability of emotion-related EEG signals poses a great challenge for the practical use of EEG-based emotion recognition. Inspired by recent neuroscience studies on inter-subject correlation, we proposed a Contrastive Learning method for Inter-Subject Alignment (CLISA) for reliable cross-subject emotion recognition. Contrastive learning was employed to minimize the inter-subject differences by maximizing the similarity in EEG signals across subjects when they received the same stimuli in contrast to different ones. Specifically, a convolutional neural network with depthwise spatial convolution and temporal convolution layers was applied to learn inter-subject aligned spatiotemporal representations from raw EEG signals. Then the aligned representations were used to extract differential entropy features for emotion classification. The performance of the proposed method was evaluated on our THU-EP dataset with 80 subjects and the publicly available SEED dataset with 15 subjects. Comparable or better cross-subject emotion recognition accuracy (i.e., 72.1% and 47.0% for binary and nine-class classification, respectively, on the THU-EP dataset and 86.3% on the SEED dataset for three-class classification) was achieved as compared to the state-of-the-art methods. The proposed method also generalized well to unseen emotional stimuli. The CLISA method is therefore expected to considerably increase the practicality of EEG-based emotion recognition by operating in a "plug-and-play" manner. Furthermore, the spatiotemporal representations learned by CLISA could provide insights into the neural mechanisms of human emotion processing.
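The contrastive objective described in the abstract above can be illustrated with a minimal sketch. It assumes an InfoNCE-style loss over cosine similarities, a form the abstract does not specify; the function names, the temperature value, and the toy embedding vectors are hypothetical and are not taken from the CLISA implementation.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, candidates, positive_index, temperature=0.1):
    """InfoNCE-style loss (assumed form, not the paper's exact objective).

    anchor: embedding of subject A's EEG segment for some stimulus.
    candidates: embeddings of subject B's segments for several stimuli.
    positive_index: which candidate corresponds to the same stimulus.
    The loss is the cross-entropy of picking the positive candidate
    from a softmax over temperature-scaled cosine similarities.
    """
    logits = [cosine(anchor, c) / temperature for c in candidates]
    peak = max(logits)  # subtract the max for a numerically stable log-sum-exp
    log_sum_exp = peak + math.log(sum(math.exp(l - peak) for l in logits))
    return log_sum_exp - logits[positive_index]


# Toy embeddings: subject B's first segment matches the anchor's stimulus.
anchor = [1.0, 0.0]
candidates = [[0.9, 0.1], [0.0, 1.0]]

loss_aligned = info_nce(anchor, candidates, positive_index=0)
loss_misaligned = info_nce(anchor, candidates, positive_index=1)
```

Here `loss_aligned` is much smaller than `loss_misaligned`, so minimizing this loss pushes representations of the same stimulus from different subjects toward each other and away from representations of other stimuli.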

A Feature Fusion-Net Using Deep Spatial Context Encoder and Nonstationary Joint Statistical Model for High Resolution SAR Image Classification

May 11, 2021

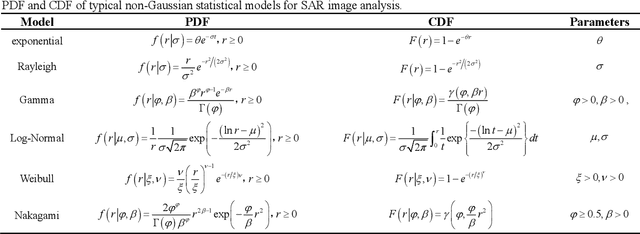

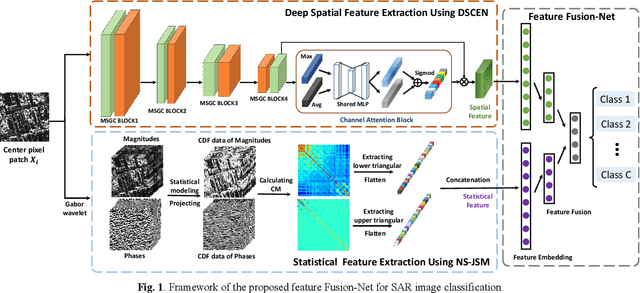

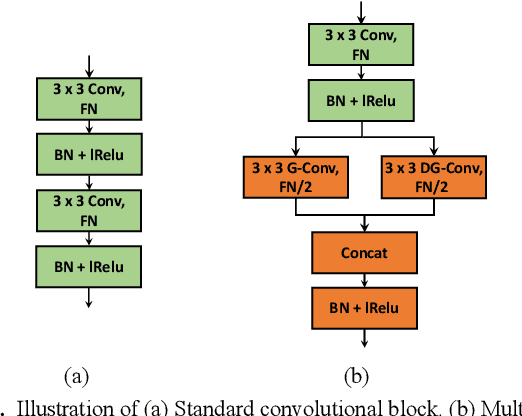

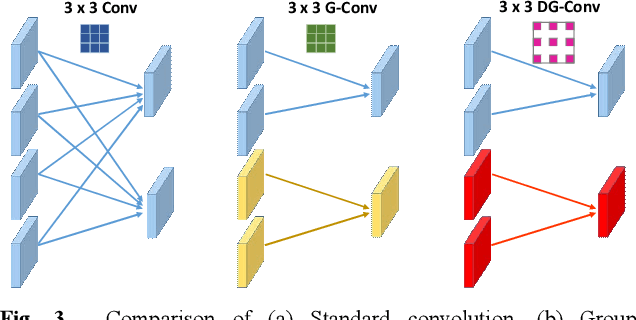

Abstract: Convolutional neural networks (CNNs) have been applied to learn spatial features for high-resolution (HR) synthetic aperture radar (SAR) image classification. However, there has been little work on integrating the unique statistical distributions of SAR images, which can reveal physical properties of terrain objects, into CNNs in a supervised feature learning framework. To address this problem, a novel end-to-end supervised classification method is proposed for HR SAR images by considering both spatial context and statistical features. First, to extract more effective spatial features from SAR images, a new deep spatial context encoder network (DSCEN) is proposed, which has a lightweight structure and can be effectively trained with a small number of samples. Meanwhile, to enhance the diversity of statistics, the nonstationary joint statistical model (NS-JSM) is adopted to form the global statistical features. Specifically, SAR images are transformed into the Gabor wavelet domain, and the magnitudes and phases of the resulting subbands are modeled by the log-normal and uniform distributions, respectively. The covariance matrix is further utilized to capture the inter-scale and intra-scale nonstationary correlation between the statistical subbands and make the joint statistical features more compact and distinguishable. Considering their complementary advantages, a feature fusion network (Fusion-Net) based on group compression and smooth normalization is constructed to embed the statistical features into the spatial features and optimize the fused feature representation. As a result, our model can learn discriminative features and improve the final classification performance. Experiments on four HR SAR images validate the superiority of the proposed method over other related algorithms.
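As a small illustration of the statistical modeling step mentioned in the abstract above, the sketch below fits a log-normal distribution to a set of positive magnitudes by maximum likelihood. It assumes the standard closed-form estimates (mean and standard deviation of the log-values); the function name `fit_lognormal` and the toy data are hypothetical, and the Gabor transform, the covariance step, and the rest of NS-JSM are not shown.

```python
import math


def fit_lognormal(samples):
    """Maximum-likelihood log-normal fit for strictly positive samples.

    Returns (mu, sigma), the mean and standard deviation of log(samples).
    The variance uses the 1/n (maximum-likelihood) normalization.
    """
    logs = [math.log(x) for x in samples]
    n = len(logs)
    mu = sum(logs) / n
    variance = sum((v - mu) ** 2 for v in logs) / n
    return mu, math.sqrt(variance)


# Toy magnitudes whose logarithms are exactly 0 and 2.
toy_magnitudes = [1.0, math.exp(2.0)]
mu, sigma = fit_lognormal(toy_magnitudes)
```

For this toy input both `mu` and `sigma` are 1 up to floating-point error, since the log-values 0 and 2 have mean 1 and a maximum-likelihood standard deviation of 1.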

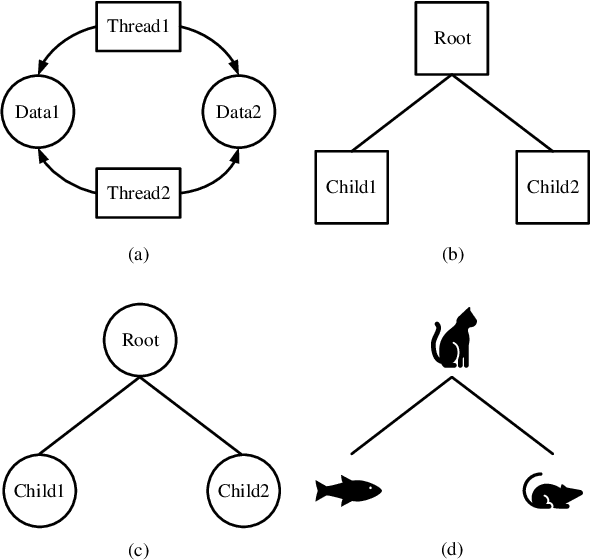

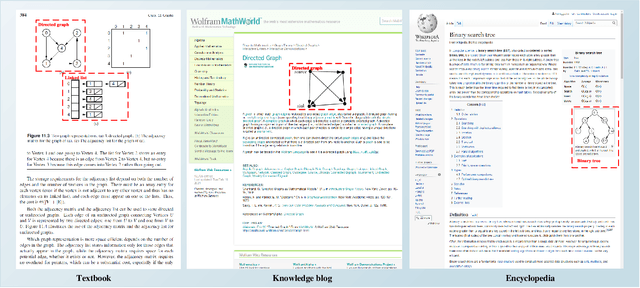

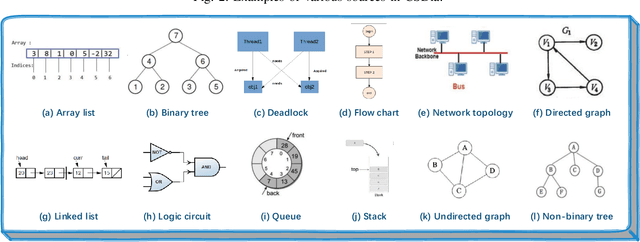

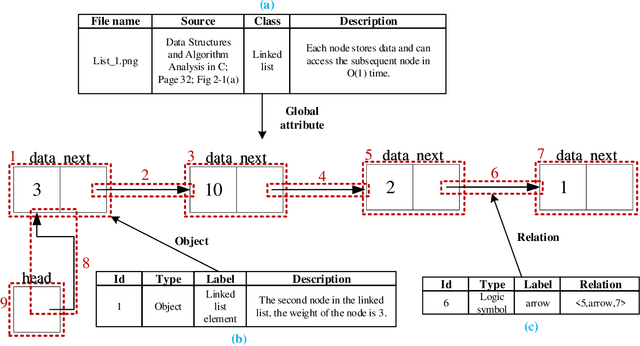

RL-CSDia: Representation Learning of Computer Science Diagrams

Mar 10, 2021

Abstract: Recent studies in computer vision mainly focus on natural images that depict real-world scenes. They achieve outstanding performance on diverse tasks such as visual question answering. A diagram is a special form of visual expression that frequently appears in education and is of great significance for learners to understand multimodal knowledge. Current research on diagrams primarily focuses on natural disciplines such as Biology and Geography, whose diagrams are still similar to natural images. Another type of diagram, such as those from Computer Science, is composed of graphics containing complex topologies and relations, and this type of diagram remains unexplored. The main challenges of understanding graphic diagrams are the scarcity of data and the ambiguity of semantics, which are mainly reflected in the diversity of expressions. In this paper, we construct a novel dataset of graphic diagrams named Computer Science Diagrams (CSDia). It contains more than 1,200 diagrams with exhaustive annotations of objects and relations. Considering the visual noise caused by the varied expressions in diagrams, we introduce the topology of diagrams to parse their topological structure. We then propose Diagram Parsing Net (DPN) to represent a diagram through three branches (topology, visual feature, and text) and apply the model to the diagram classification task to evaluate its ability to understand diagrams. The results show the effectiveness of the proposed DPN for diagram understanding.

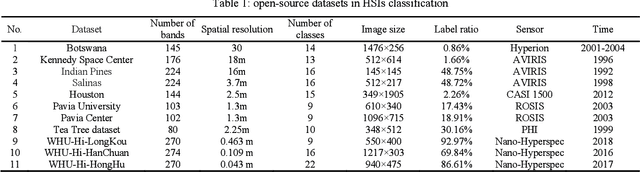

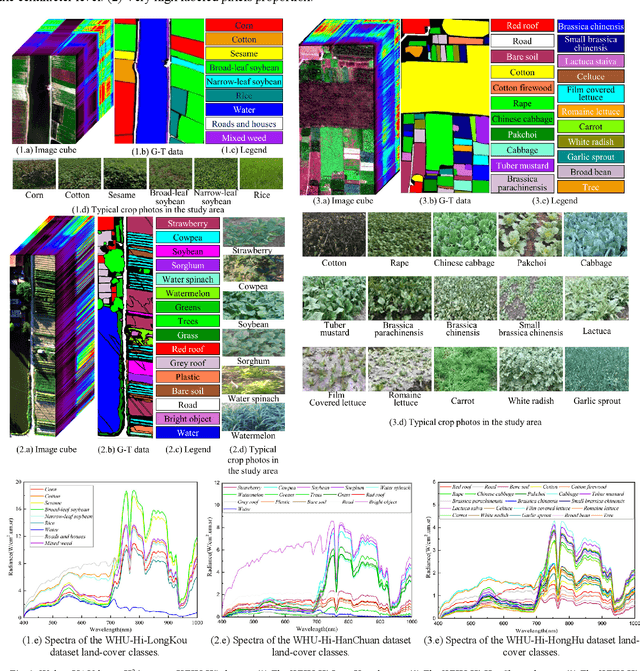

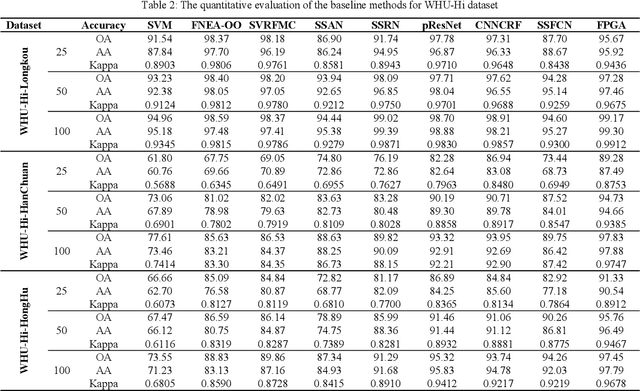

WHU-Hi: UAV-borne hyperspectral with high spatial resolution benchmark datasets for hyperspectral image classification

Dec 27, 2020

Abstract: Classification is an important aspect of hyperspectral image processing and application. At present, researchers mostly use classic airborne hyperspectral imagery as the benchmark dataset. However, existing datasets suffer from three bottlenecks: (1) low spatial resolution; (2) a low proportion of labeled pixels; (3) a low degree of subclass distinction. In this paper, a new benchmark dataset named the Wuhan UAV-borne hyperspectral image (WHU-Hi) dataset was built for hyperspectral image classification. The WHU-Hi dataset has a high spectral resolution (nm level) and a very high spatial resolution (cm level), which we refer to here as H2 imager. In addition, the WHU-Hi dataset has a higher pixel labeling ratio and finer subclasses. Some state-of-the-art hyperspectral image classification methods were benchmarked on the WHU-Hi dataset, and the experimental results show that WHU-Hi is a challenging dataset. We hope the WHU-Hi dataset can become a strong benchmark to accelerate future research.
