Weiping Wang

Stability and Generalization of Differentially Private Minimax Problems

Apr 11, 2022

Abstract: In machine learning, many problems can be formulated as minimax problems, including reinforcement learning and generative adversarial networks, to name just a few. The minimax problem has therefore attracted a great deal of attention from researchers in recent decades. However, there is relatively little work on the privacy of the general minimax paradigm. In this paper, we focus on the privacy of the general minimax setting, combining differential privacy with the minimax optimization paradigm. In addition, via algorithmic stability theory, we theoretically analyze the high-probability generalization performance of the differentially private minimax algorithm under the strongly-convex-strongly-concave condition. To the best of our knowledge, this is the first analysis of the generalization performance of the general minimax paradigm that takes differential privacy into account.
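As a rough illustration of the setting in this abstract, the sketch below runs gradient descent ascent with clipped, Gaussian-perturbed gradients on a toy strongly-convex-strongly-concave objective. It is not the paper's algorithm: the objective `f(x, y) = 0.5*mu*x^2 + x*y - 0.5*mu*y^2`, the function name `noisy_gda`, and all parameter values are assumptions chosen for illustration, and `sigma` is not calibrated to any particular privacy budget.

```python
import random

def clip(g, bound):
    """Clip a scalar gradient to [-bound, bound] to limit its sensitivity."""
    return max(-bound, min(bound, g))

def noisy_gda(x0, y0, steps=200, lr=0.1, mu=1.0,
              clip_bound=5.0, sigma=0.0, seed=0):
    """Gradient descent ascent on f(x, y) = 0.5*mu*x^2 + x*y - 0.5*mu*y^2.

    f is strongly convex in x and strongly concave in y, with its unique
    saddle point at (0, 0). Gaussian noise with standard deviation sigma
    is added to each clipped gradient (illustrative, uncalibrated).
    """
    rng = random.Random(seed)
    x, y = x0, y0
    for _ in range(steps):
        gx = mu * x + y   # partial derivative of f with respect to x
        gy = x - mu * y   # partial derivative of f with respect to y
        gx = clip(gx, clip_bound) + rng.gauss(0.0, sigma)
        gy = clip(gy, clip_bound) + rng.gauss(0.0, sigma)
        # Descend in x (the min player), ascend in y (the max player).
        x, y = x - lr * gx, y + lr * gy
    return x, y

# Without noise the iterates contract toward the saddle point (0, 0).
x_clean, y_clean = noisy_gda(2.0, -1.0)
# With noise the final iterate is a random point near the saddle point.
x_noisy, y_noisy = noisy_gda(2.0, -1.0, sigma=0.1, seed=1)
```

Larger `sigma` gives stronger perturbation and, in a real differentially private method, a tighter privacy guarantee at the cost of accuracy; quantifying that trade-off is what a generalization analysis of this kind addresses.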

Towards Escaping from Language Bias and OCR Error: Semantics-Centered Text Visual Question Answering

Mar 24, 2022

Abstract: Texts in scene images convey critical information for scene understanding and reasoning. The abilities to read and to reason both matter for a model in the text-based visual question answering (TextVQA) process. However, current TextVQA models do not center on the text and suffer from several limitations. Such a model is easily dominated by language biases and optical character recognition (OCR) errors due to the absence of semantic guidance in the answer prediction process. In this paper, we propose a novel Semantics-Centered Network (SC-Net) that consists of an instance-level contrastive semantic prediction module (ICSP) and a semantics-centered transformer module (SCT). Equipped with the two modules, the semantics-centered model can resist language biases and the accumulated errors from OCR. Extensive experiments on the TextVQA and ST-VQA datasets show the effectiveness of our model. SC-Net surpasses previous works by a noticeable margin and is more reasonable for the TextVQA task.

Imagine by Reasoning: A Reasoning-Based Implicit Semantic Data Augmentation for Long-Tailed Classification

Dec 15, 2021

Abstract: Real-world data often follows a long-tailed distribution, which heavily degrades the performance of existing classification algorithms. A key issue is that samples in tail categories fail to depict their intra-class diversity. Humans can imagine a sample in new poses, scenes, and view angles using their prior knowledge, even when seeing the category for the first time. Inspired by this, we propose a novel reasoning-based implicit semantic data augmentation method that borrows transformation directions from other classes. Since the covariance matrix of each category represents the feature transformation directions, we can sample new directions from similar categories to generate definitely different instances. Specifically, the long-tailed data is first used to train a backbone and a classifier. Then, a covariance matrix is estimated for each category, and a knowledge graph is constructed to store the relations between any two categories. Finally, tail samples are adaptively enhanced by propagating information from all the similar categories in the knowledge graph. Experimental results on CIFAR-100-LT, ImageNet-LT, and iNaturalist 2018 demonstrate the effectiveness of our proposed method compared with state-of-the-art methods.
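The idea of borrowing transformation directions from similar categories can be sketched as follows. This is a simplified illustration, not the paper's method: it samples perturbations explicitly, whereas the abstract describes an implicit augmentation, and the function names (`mix_covariances`, `cholesky`, `augment`), the similarity weights, and the toy 2-D covariance matrices are all assumptions.

```python
import math
import random

def mix_covariances(covs, weights):
    """Weighted average of same-sized covariance matrices (lists of lists)."""
    d = len(covs[0])
    total = sum(weights)
    return [[sum(w * c[i][j] for c, w in zip(covs, weights)) / total
             for j in range(d)] for i in range(d)]

def cholesky(a):
    """Lower-triangular L with L L^T = a, for symmetric positive-definite a."""
    d = len(a)
    L = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(a[i][i] - s)
            else:
                L[i][j] = (a[i][j] - s) / L[j][j]
    return L

def augment(feature, cov, rng, strength=1.0):
    """Return feature + strength * L z with z ~ N(0, I).

    The added perturbation is Gaussian with covariance strength^2 * cov.
    """
    L = cholesky(cov)
    z = [rng.gauss(0.0, 1.0) for _ in feature]
    return [f + strength * sum(L[i][k] * z[k] for k in range(i + 1))
            for i, f in enumerate(feature)]

# Hypothetical covariances of two categories similar to a tail category,
# with hypothetical similarity weights taken from a category graph.
similar_a = [[1.0, 0.5], [0.5, 2.0]]
similar_b = [[3.0, 0.0], [0.0, 1.0]]
borrowed = mix_covariances([similar_a, similar_b], weights=[0.75, 0.25])

tail_feature = [0.2, -0.4]
new_feature = augment(tail_feature, borrowed, random.Random(0), strength=0.5)
```

Here the weights play the role of the relations stored in the knowledge graph: the more similar a category, the more its covariance shapes the directions along which the tail sample is perturbed.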

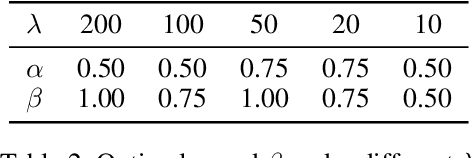

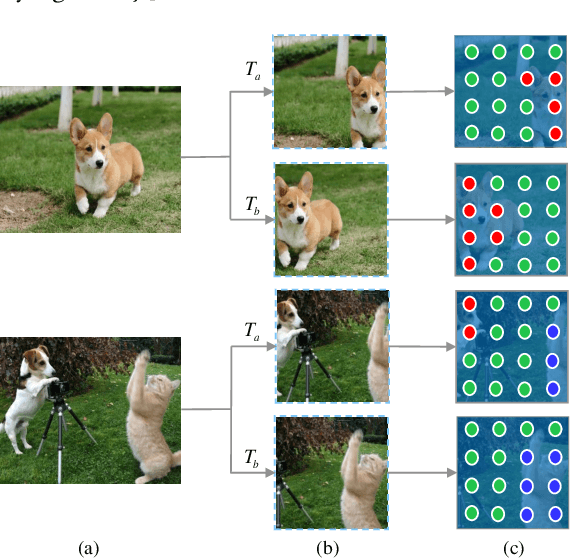

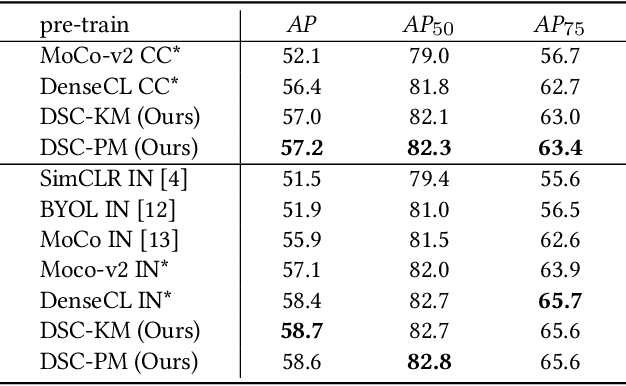

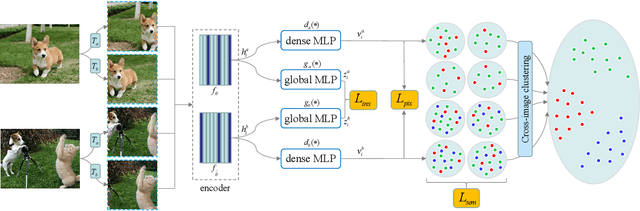

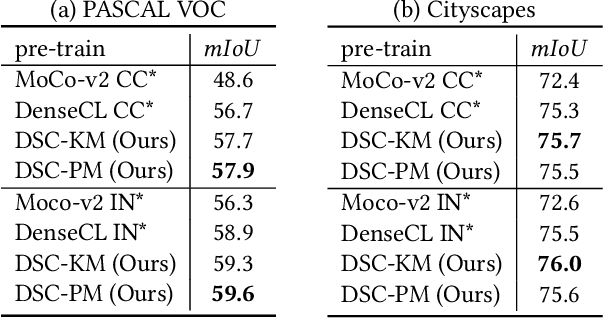

Dense Semantic Contrast for Self-Supervised Visual Representation Learning

Sep 16, 2021

Abstract: Self-supervised representation learning for visual pre-training has achieved remarkable success with sample (instance or pixel) discrimination and instance-level semantics discovery, yet a non-negligible gap remains between pre-trained models and downstream dense prediction tasks. Concretely, these downstream tasks require more accurate representations; in other words, pixels from the same object must belong to a shared semantic category, which previous methods do not ensure. In this work, we present Dense Semantic Contrast (DSC) for modeling semantic category decision boundaries at a dense level to meet the requirement of these tasks. Furthermore, we propose a dense cross-image semantic contrastive learning framework for multi-granularity representation learning. Specifically, we explicitly explore the semantic structure of the dataset by mining relations among pixels from different perspectives. For intra-image relation modeling, we discover pixel neighbors from multiple views. For inter-image relations, we enforce pixel representations from the same semantic class to be more similar than representations from different classes within one mini-batch. Experimental results show that our DSC model outperforms state-of-the-art methods when transferring to downstream dense prediction tasks, including object detection, semantic segmentation, and instance segmentation. Code will be made available.

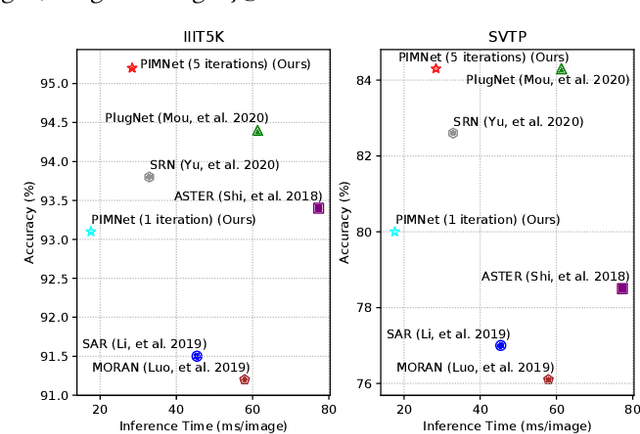

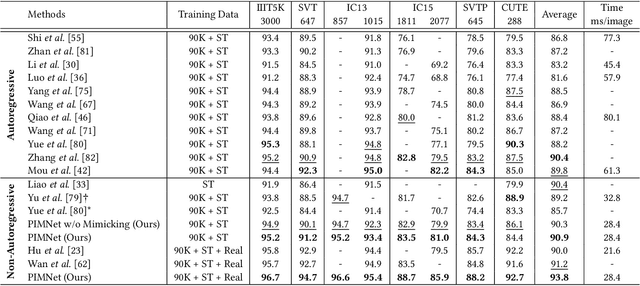

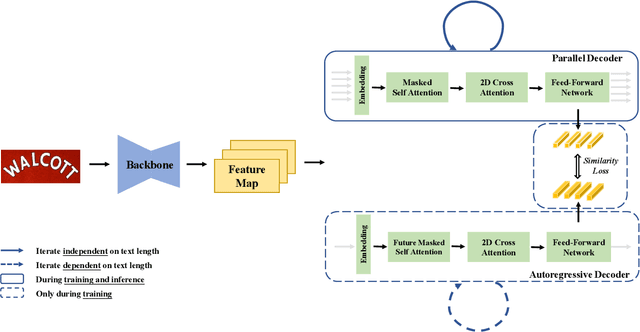

PIMNet: A Parallel, Iterative and Mimicking Network for Scene Text Recognition

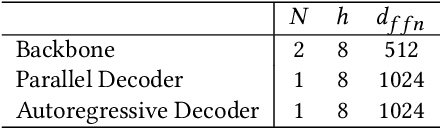

Sep 09, 2021

Abstract: Scene text recognition has attracted increasing attention due to its various applications. Most state-of-the-art methods adopt an encoder-decoder framework with an attention mechanism, which generates text autoregressively from left to right. Despite the convincing performance, the speed is limited because of the one-by-one decoding strategy. In contrast, non-autoregressive models predict the results in parallel with a much shorter inference time, but their accuracy falls considerably behind that of their autoregressive counterparts. In this paper, we propose a Parallel, Iterative and Mimicking Network (PIMNet) to balance accuracy and efficiency. Specifically, PIMNet adopts a parallel attention mechanism to predict the text faster and an iterative generation mechanism to make the predictions more accurate. In each iteration, the context information is fully explored. To improve learning of the hidden layer, we exploit mimicking learning in the training phase, where an additional autoregressive decoder is adopted and the parallel decoder mimics the autoregressive decoder by fitting the outputs of its hidden layer. With the backbone shared between the two decoders, the proposed PIMNet can be trained end-to-end without pre-training. During inference, the autoregressive decoder branch is removed for faster speed. Extensive experiments on public benchmarks demonstrate the effectiveness and efficiency of PIMNet. Our code will be available at https://github.com/Pay20Y/PIMNet.

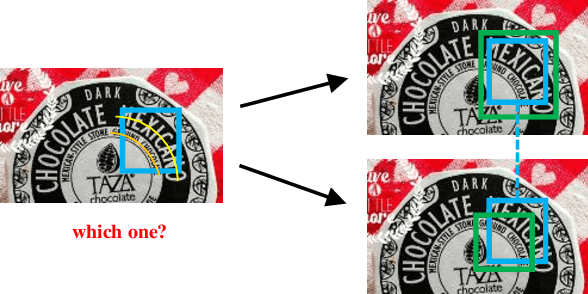

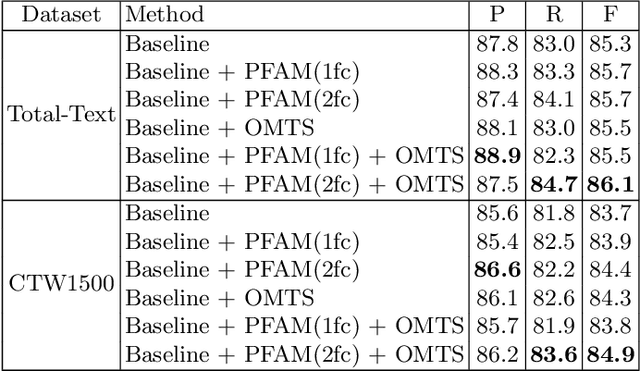

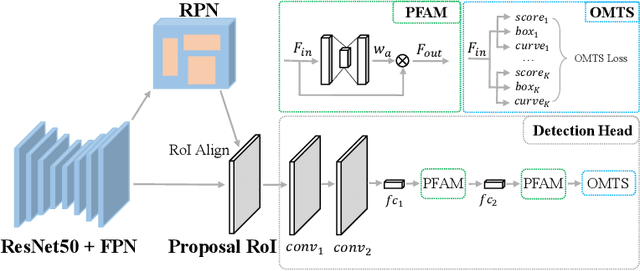

Which and Where to Focus: A Simple yet Accurate Framework for Arbitrary-Shaped Nearby Text Detection in Scene Images

Sep 08, 2021

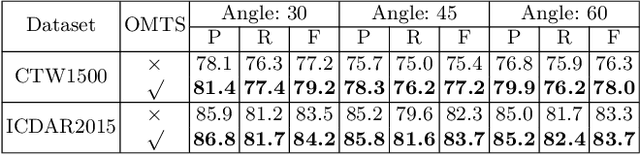

Abstract: Scene text detection has drawn close attention from researchers. Though many methods have been proposed for horizontal and oriented texts, previous methods may not perform well when dealing with arbitrary-shaped texts such as curved texts. In particular, a confusion problem arises in the case of nearby text instances. In this paper, we propose a simple yet effective method for accurate arbitrary-shaped nearby scene text detection. First, a One-to-Many Training Scheme (OMTS) is designed to eliminate confusion and enable the proposals to learn more appropriate ground truths in the case of nearby text instances. Second, we propose a Proposal Feature Attention Module (PFAM) to exploit more effective features for each proposal, which can better adapt to arbitrary-shaped text instances. Finally, we propose a baseline that is based on Faster R-CNN and outputs the curve representation directly. Equipped with PFAM and OMTS, the detector achieves state-of-the-art or competitive performance on several challenging benchmarks.

Mask is All You Need: Rethinking Mask R-CNN for Dense and Arbitrary-Shaped Scene Text Detection

Sep 08, 2021

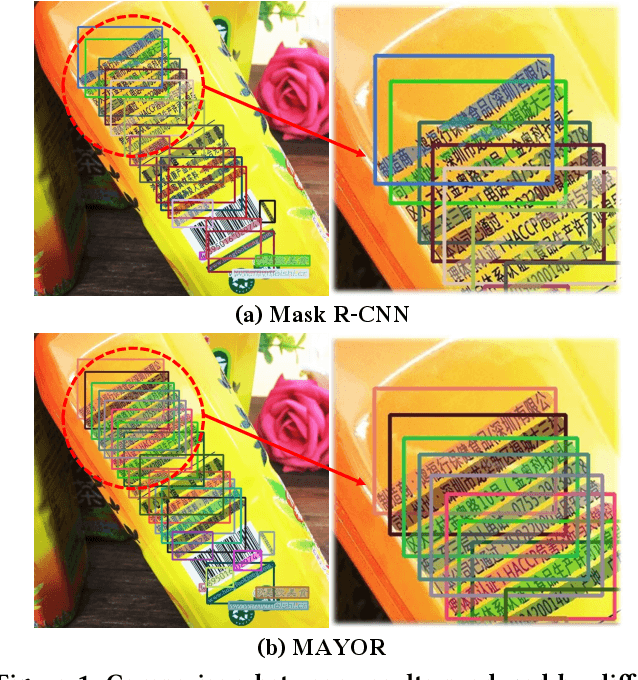

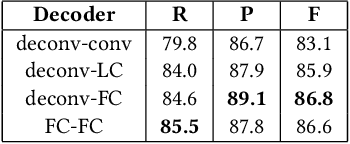

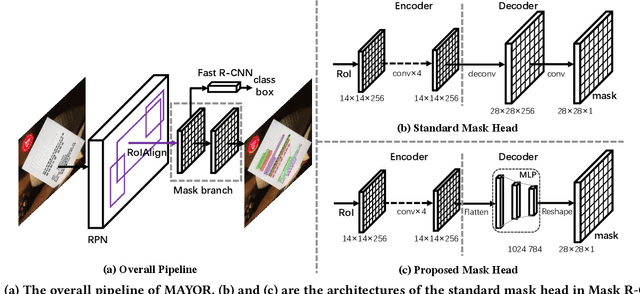

Abstract: Due to its great success in object detection and instance segmentation, Mask R-CNN has attracted much attention and is widely adopted as a strong baseline for arbitrary-shaped scene text detection and spotting. However, two issues remain to be settled. The first is the dense text case, which is easily neglected but quite practical. Multiple instances may exist in one proposal, which makes it difficult for the mask head to distinguish different instances and degrades the performance. In this work, we argue that the performance degradation results from a learning confusion issue in the mask head. We propose to use an MLP decoder instead of the "deconv-conv" decoder in the mask head, which alleviates the issue and improves robustness significantly. We also propose instance-aware mask learning, in which the mask head learns to predict the shape of the whole instance rather than classify each pixel as text or non-text. With instance-aware mask learning, the mask branch can learn separated and compact masks. The second issue is that, due to large variations in scale and aspect ratio, the RPN needs complicated anchor settings, making it hard to maintain and to transfer across different datasets. To settle this issue, we propose an adaptive label assignment in which all instances, especially those with extreme aspect ratios, are guaranteed to be associated with enough anchors. Equipped with these components, the proposed method, named MAYOR, achieves state-of-the-art performance on five benchmarks: DAST1500, MSRA-TD500, ICDAR2015, CTW1500, and Total-Text.

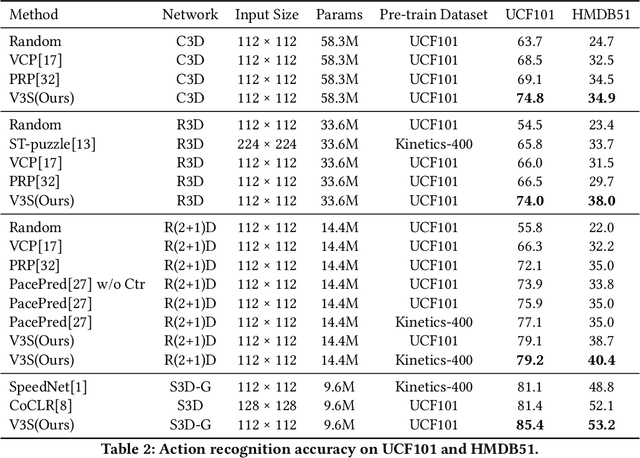

Video 3D Sampling for Self-supervised Representation Learning

Jul 08, 2021

Abstract: Most existing video self-supervised methods mainly leverage the temporal signals of videos, ignoring that the semantics of moving objects and environmental information are both critical for video-related tasks. In this paper, we propose a novel self-supervised method for video representation learning, referred to as Video 3D Sampling (V3S). To fully utilize the spatial and temporal information provided in videos, we pre-process a video along three dimensions (width, height, time). As a result, we can leverage spatial information (the size of objects) and temporal information (the direction and magnitude of motions) as our learning targets. In our implementation, we combine the sampling of the three dimensions and propose scale and projection transformations in space and time, respectively. The experimental results show that, when applied to action recognition, video retrieval, and action similarity labeling, our approach improves on the state of the art by significant margins.

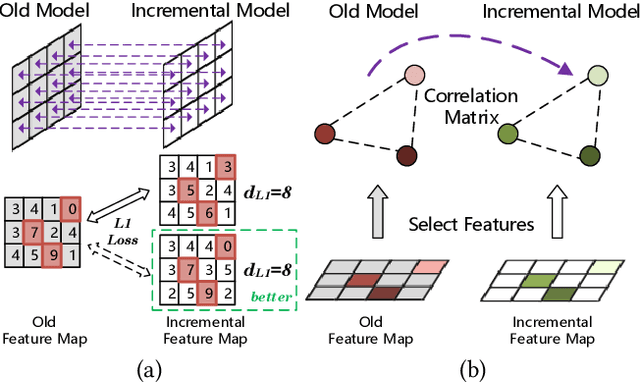

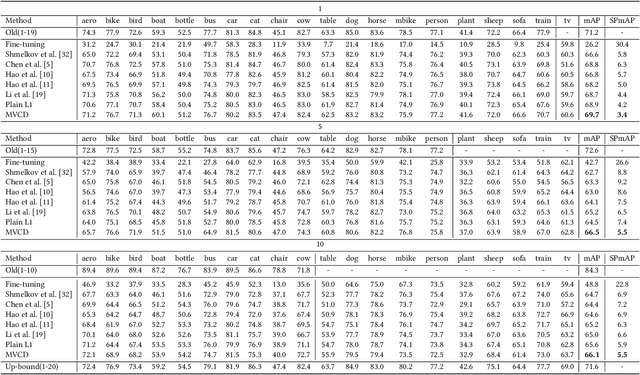

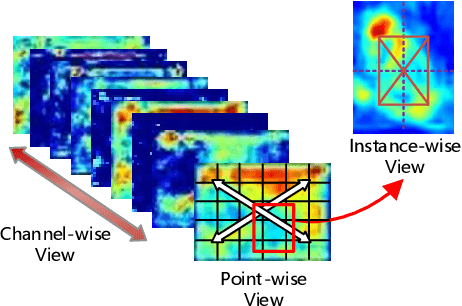

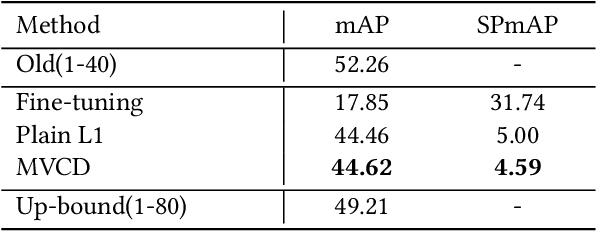

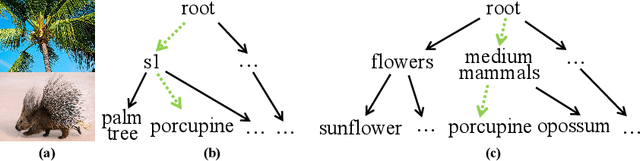

Multi-View Correlation Distillation for Incremental Object Detection

Jul 05, 2021

Abstract: In real applications, new object classes often emerge after the detection model has been trained on a prepared dataset with fixed classes. Due to the storage burden and the privacy of old data, it is sometimes impractical to train the model from scratch with both old and new data. Fine-tuning the old model with only new data leads to the well-known phenomenon of catastrophic forgetting, which severely degrades the performance of modern object detectors. In this paper, we propose a novel Multi-View Correlation Distillation (MVCD) based incremental object detection method, which explores the correlations in the feature space of the two-stage object detector (Faster R-CNN). To better transfer the knowledge learned from the old classes and maintain the ability to learn new classes, we design correlation distillation losses from channel-wise, point-wise, and instance-wise views to regularize the learning of the incremental model. A new metric named Stability-Plasticity-mAP is proposed to better evaluate both the stability for old classes and the plasticity for new classes in incremental object detection. Extensive experiments conducted on VOC2007 and COCO demonstrate that MVCD can effectively learn to detect objects of new classes and mitigate the problem of catastrophic forgetting.

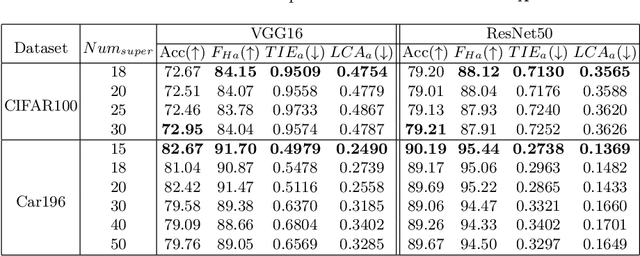

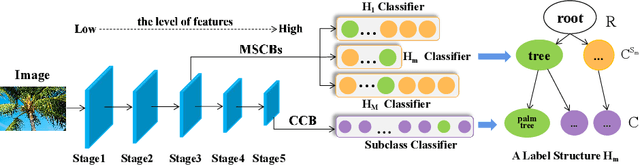

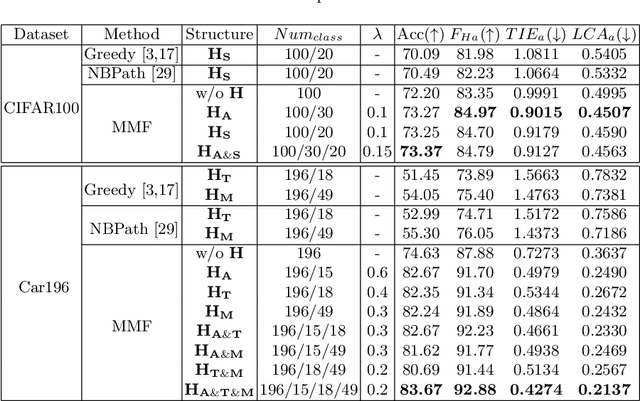

MMF: Multi-Task Multi-Structure Fusion for Hierarchical Image Classification

Jul 02, 2021

Abstract: Hierarchical classification is significant for complex tasks because it provides multi-granular predictions and encourages better mistakes. As the label structure determines its performance, many existing approaches attempt to construct an excellent label structure to improve the classification results. In this paper, we consider that different label structures provide a variety of prior knowledge for category recognition, so fusing them helps achieve better hierarchical classification results. Furthermore, we propose a multi-task multi-structure fusion model to integrate different label structures. It contains two kinds of branches: one is the traditional classification branch, which classifies the common subclasses; the other is responsible for identifying the heterogeneous superclasses defined by different label structures. Besides the effect of multiple label structures, we also explore the architecture of the deep model for better hierarchical classification and adjust the hierarchical evaluation metrics for multiple label structures. Experimental results on CIFAR100 and Car196 show that our method obtains significantly better results than a flat classifier or a hierarchical classifier with any single label structure.
