Weifeng Liu

MDFM: Multi-Decision Fusing Model for Few-Shot Learning

Dec 03, 2021

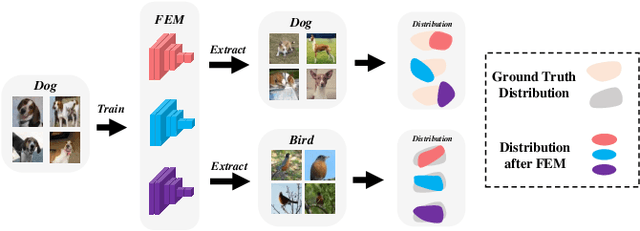

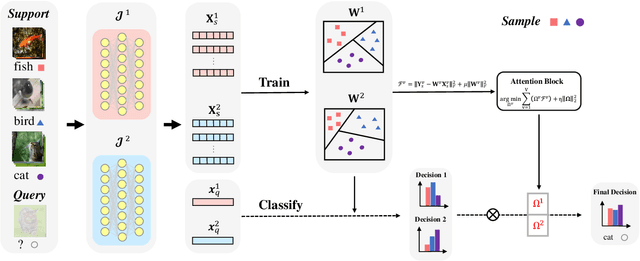

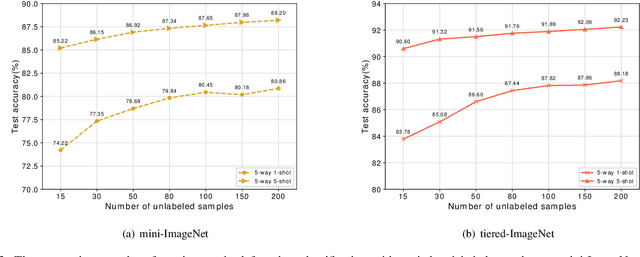

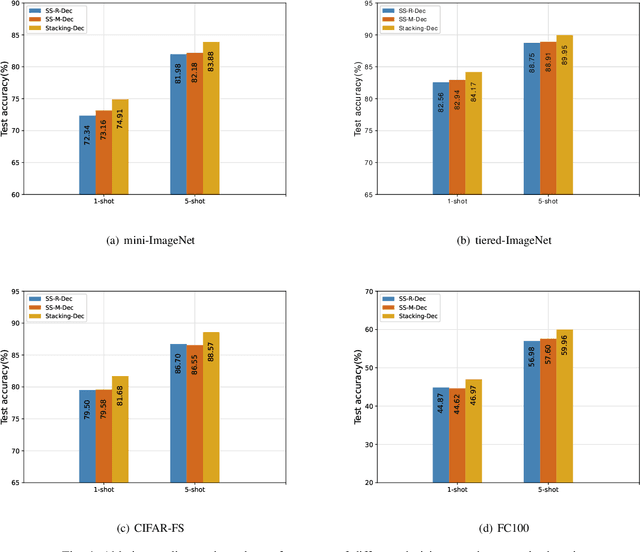

Abstract: In recent years, researchers have paid growing attention to the few-shot learning (FSL) task to address the data-scarcity problem. A standard FSL framework is composed of two components: i) Pre-train. Employ the base data to generate a CNN-based feature extraction model (FEM). ii) Meta-test. Apply the trained FEM to the novel data (whose categories differ from those of the base data) to acquire the feature embeddings and recognize them. Although researchers have made remarkable breakthroughs in FSL, a fundamental problem remains. Since an FEM trained on base data usually cannot adapt to the novel classes flawlessly, the features of the novel data may suffer from distribution shift. To address this challenge, we hypothesize that even if most of the decisions based on different FEMs are viewed as weak decisions, which are not reliable for all classes, they still perform decently in some specific categories. Inspired by this assumption, we propose a novel method, the Multi-Decision Fusing Model (MDFM), which comprehensively considers the decisions based on multiple FEMs to enhance the efficacy and robustness of the model. MDFM is a simple, flexible, non-parametric method that can be applied directly to existing FEMs. Besides, we extend the proposed MDFM to two FSL settings (i.e., supervised and semi-supervised settings). We evaluate the proposed method on five benchmark datasets and achieve significant improvements of 3.4%-7.3% compared with state-of-the-art methods.
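The idea of fusing decisions from several FEMs can be illustrated with a minimal sketch. The abstract does not state the fusing rule, so this example assumes the simplest possible one: each extractor yields nearest-prototype class probabilities, and the fused decision is their plain average. The function names `prototype_probs` and `fuse_decisions` are hypothetical and are not taken from the paper.

```python
import numpy as np

def prototype_probs(support, support_labels, query, n_classes):
    """Nearest-prototype class probabilities for ONE feature extractor.

    support: (n_support, dim) embeddings, support_labels: (n_support,) ints,
    query: (n_query, dim) embeddings produced by the same extractor.
    """
    # Class prototype = mean support embedding of that class.
    protos = np.stack(
        [support[support_labels == c].mean(axis=0) for c in range(n_classes)]
    )
    # Squared Euclidean distance from every query to every prototype.
    dist = ((query[:, None, :] - protos[None, :, :]) ** 2).sum(axis=-1)
    # Softmax over negative distances (shifted for numerical stability).
    logits = -dist
    logits = logits - logits.max(axis=1, keepdims=True)
    probs = np.exp(logits)
    return probs / probs.sum(axis=1, keepdims=True)

def fuse_decisions(prob_list):
    """Assumed fusing rule: average the per-extractor probabilities."""
    fused = np.mean(np.stack(prob_list), axis=0)
    return fused.argmax(axis=1), fused
```

Averaging is only one choice; a weighted vote or a per-class selection among extractors would fit the same interface.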

Semantic-Preserving Adversarial Text Attacks

Aug 23, 2021

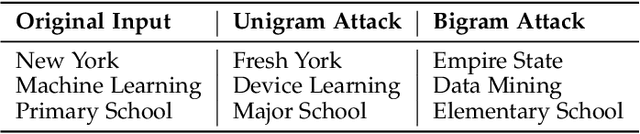

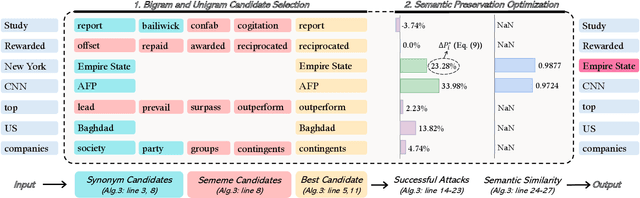

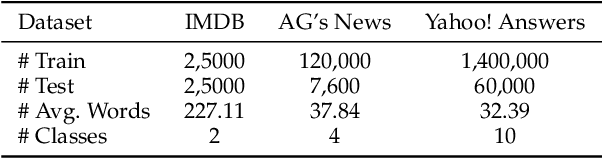

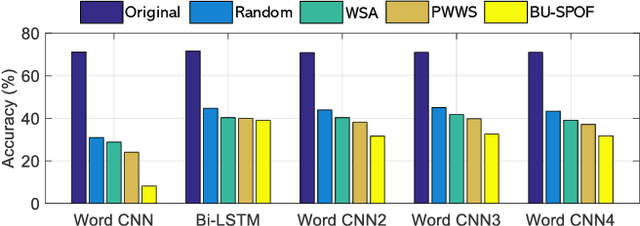

Abstract: Deep neural networks (DNNs) are known to be vulnerable to adversarial images, while their robustness in text classification is rarely studied. Several lines of text attack methods have been proposed in the literature, including character-level, word-level, and sentence-level attacks. However, it is still a challenge to minimize the number of word changes necessary to induce misclassification, while simultaneously ensuring lexical correctness, syntactic soundness, and semantic similarity. In this paper, we propose a Bigram and Unigram based adaptive Semantic Preservation Optimization (BU-SPO) method to examine the vulnerability of deep models. Our method has four major merits. Firstly, we propose to attack text documents not only at the unigram word level but also at the bigram level, which better preserves semantics and avoids producing meaningless outputs. Secondly, we propose a hybrid method to replace the input words with options among both their synonym candidates and sememe candidates, which greatly enriches the potential substitutions compared to only using synonyms. Thirdly, we design an optimization algorithm, i.e., Semantic Preservation Optimization (SPO), to determine the priority of word replacements, aiming to reduce the modification cost. Finally, we further improve the SPO with a semantic Filter (named SPOF) to find the adversarial example with the highest semantic similarity. We evaluate the effectiveness of our BU-SPO and BU-SPOF on the IMDB, AG's News, and Yahoo! Answers text datasets by attacking four popular DNN models. Results show that our methods achieve the highest attack success rates and semantics rates by changing the smallest number of words compared with existing methods.

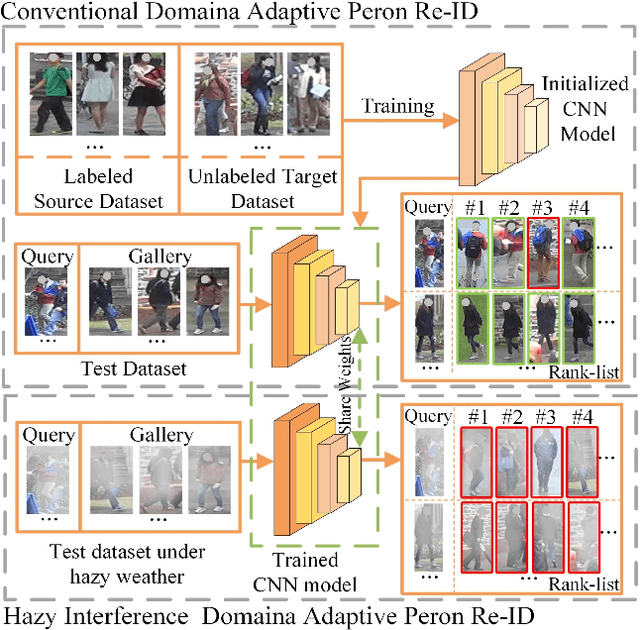

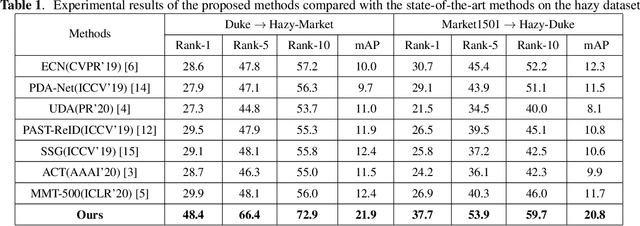

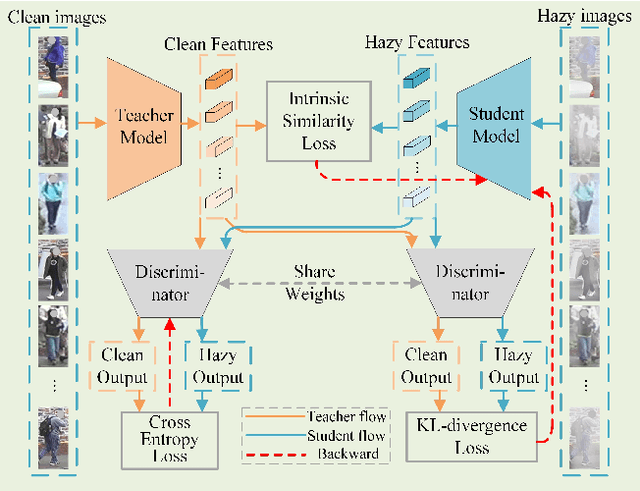

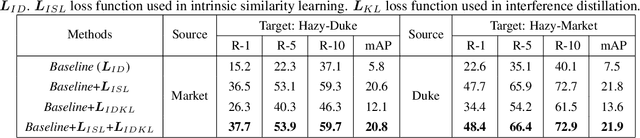

Hazy Re-ID: An Interference Suppression Model For Domain Adaptation Person Re-identification Under Inclement Weather Condition

Apr 22, 2021

Abstract: In a conventional domain adaptation person re-identification (Re-ID) task, both the training and test images in the target domain are collected in sunny weather. However, in reality, the pedestrians to be retrieved may be captured under severe weather conditions such as haze, dust, and snow. This paper proposes a novel Interference Suppression Model (ISM) to deal with the interference caused by hazy weather in domain adaptation person Re-ID. A teacher-student model is used in the ISM to distill the interference information at the feature level by reducing the discrepancy between the clear and the hazy intrinsic similarity matrices. Furthermore, at the distribution level, an extra discriminator is introduced to help the student model make the interference feature distribution clearer. The experimental results show that the proposed method outperforms the state-of-the-art methods on two synthetic datasets. The related code will be released online at https://github.com/pangjian123/ISM-ReID.

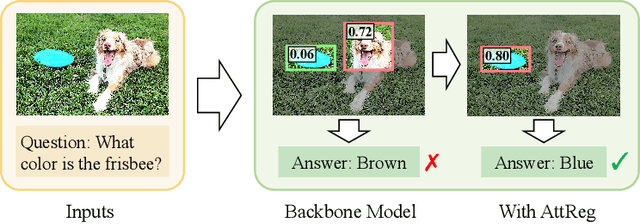

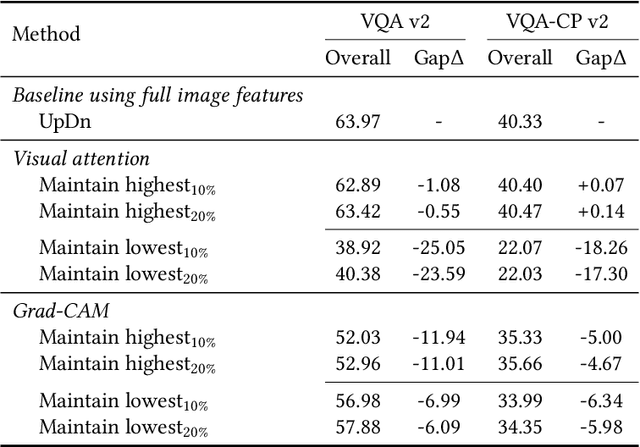

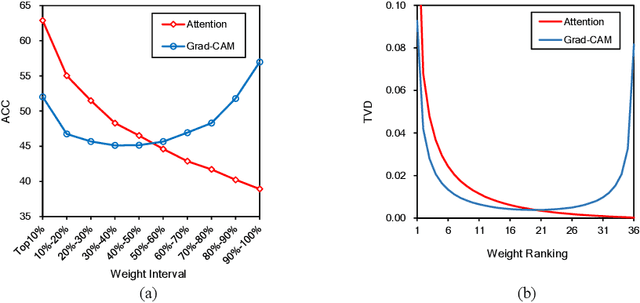

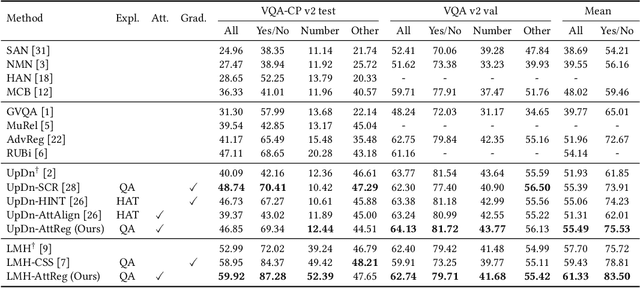

Answer Questions with Right Image Regions: A Visual Attention Regularization Approach

Feb 03, 2021

Abstract: Visual attention in Visual Question Answering (VQA) aims to locate the right image regions for answer prediction. However, recent studies have pointed out that the image regions highlighted by visual attention are often irrelevant to the given question and answer, which confuses the model and hinders correct visual reasoning. To tackle this problem, existing methods mostly resort to aligning the visual attention weights with human attention. Nevertheless, gathering such human data is laborious and expensive, making it burdensome to adapt well-developed models across datasets. To address this issue, in this paper, we devise a novel visual attention regularization approach, namely AttReg, for better visual grounding in VQA. Specifically, AttReg first identifies the image regions which are essential for question answering yet unexpectedly ignored (i.e., assigned low attention weights) by the backbone model. Then, a mask-guided learning scheme is leveraged to regularize the visual attention to focus more on these ignored key regions. The proposed method is very flexible and model-agnostic: it can be integrated into most visual attention-based VQA models and requires no human attention supervision. Extensive experiments over three benchmark datasets, i.e., VQA-CP v2, VQA-CP v1, and VQA v2, have been conducted to evaluate the effectiveness of AttReg. As a by-product, when incorporating AttReg into the strong baseline LMH, our approach can achieve a new state-of-the-art accuracy of 59.92% with an absolute performance gain of 6.93% on the VQA-CP v2 benchmark dataset. In addition to the effectiveness validation, we recognize that the faithfulness of the visual attention in VQA has not been well explored in the literature. In light of this, we propose to empirically validate this property of visual attention and compare it with the prevalent gradient-based approaches.

SAHDL: Sparse Attention Hypergraph Regularized Dictionary Learning

Oct 23, 2020

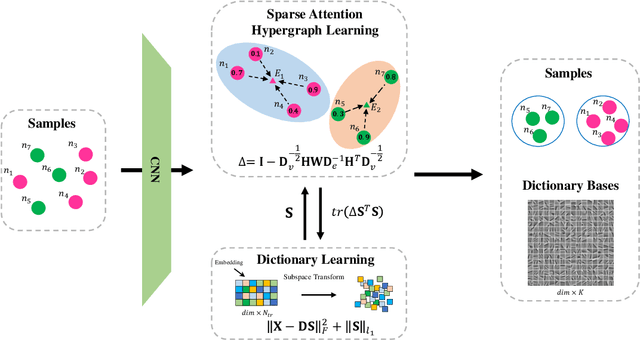

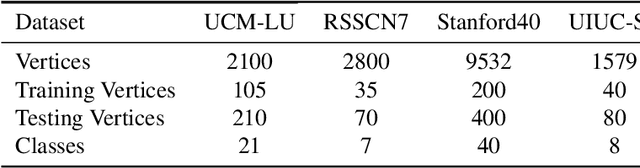

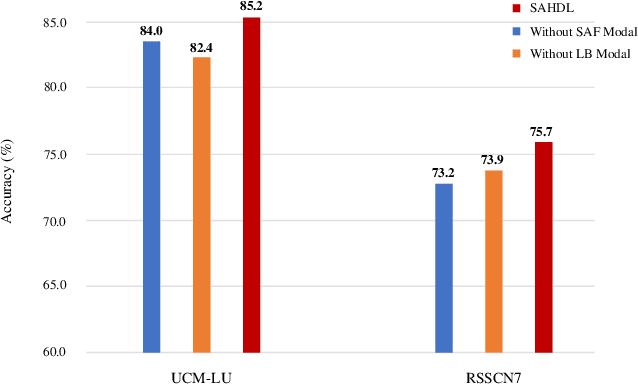

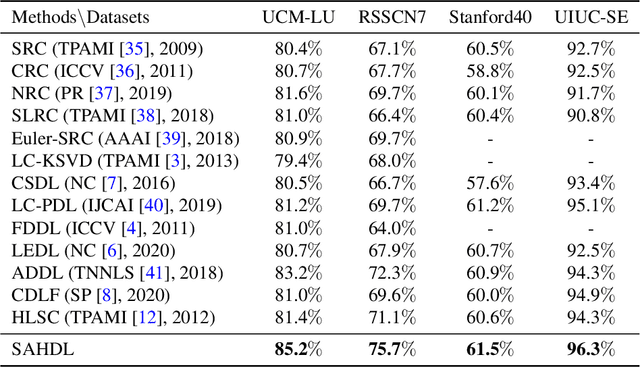

Abstract: In recent years, the attention mechanism has contributed significantly to hypergraph based neural networks. However, these methods update the attention weights as the network propagates. That is to say, this type of attention mechanism is only suitable for deep learning-based methods and is not applicable to traditional machine learning approaches. In this paper, we propose a hypergraph based sparse attention mechanism to tackle this issue and embed it into dictionary learning. More specifically, we first construct a sparse attention hypergraph, assigning attention weights to samples by employing $\ell_1$-norm sparse regularization to mine the high-order relationships among sample features. Then, we introduce the hypergraph Laplacian operator to preserve the local structure for subspace transformation in dictionary learning. Besides, we incorporate the discriminative information into the hypergraph as guidance for aggregating samples. Unlike previous works, our method updates attention weights independently and does not rely on a deep network. We demonstrate the efficacy of our approach on four benchmark datasets.
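For readers unfamiliar with the hypergraph Laplacian operator mentioned above, the following is a minimal sketch of the widely used normalized form, $L = I - D_v^{-1/2} H W D_e^{-1} H^\top D_v^{-1/2}$. It is an assumption that SAHDL uses this form, and the paper's sparse attention weights are not reproduced here: the incidence matrix `H` is taken as given, and the function name `hypergraph_laplacian` is hypothetical.

```python
import numpy as np

def hypergraph_laplacian(H, w=None):
    """Normalized hypergraph Laplacian from an incidence matrix.

    H: (n_vertices, n_edges) incidence matrix, H[v, e] > 0 if vertex v is in
       hyperedge e. Assumes every vertex lies in at least one hyperedge and
       no hyperedge is empty (otherwise a degree would be zero).
    w: optional (n_edges,) hyperedge weights; defaults to all ones.
    """
    H = np.asarray(H, dtype=float)
    n_vertices, n_edges = H.shape
    w = np.ones(n_edges) if w is None else np.asarray(w, dtype=float)

    dv = H @ w          # weighted vertex degrees
    de = H.sum(axis=0)  # hyperedge degrees
    dv_inv_sqrt = 1.0 / np.sqrt(dv)

    # Theta = Dv^{-1/2} H W De^{-1} H^T Dv^{-1/2}
    theta = (dv_inv_sqrt[:, None] * H) @ np.diag(w / de) @ (H.T * dv_inv_sqrt[None, :])
    return np.eye(n_vertices) - theta
```

A regularizer of the form $\operatorname{tr}(X L X^\top)$ built from this matrix penalizes representations that differ strongly between samples sharing a hyperedge, which is one common way to preserve local structure.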

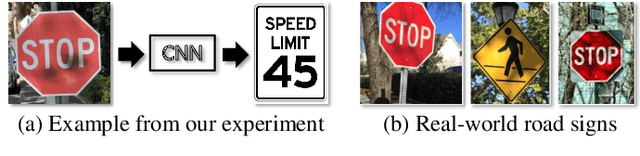

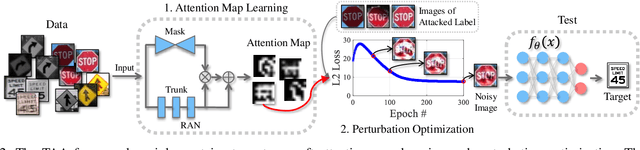

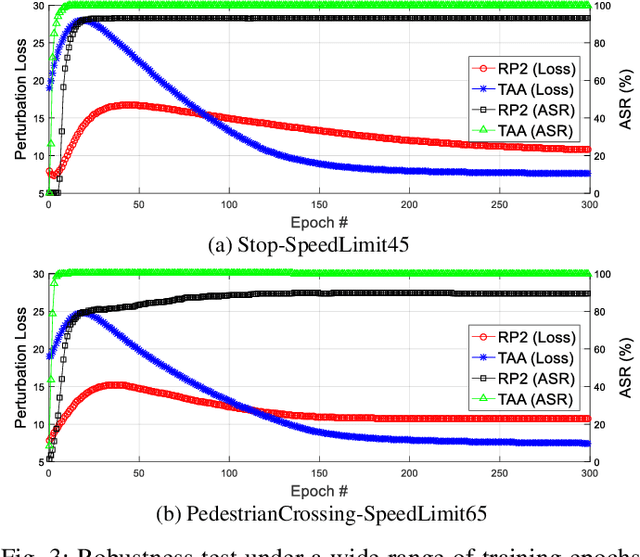

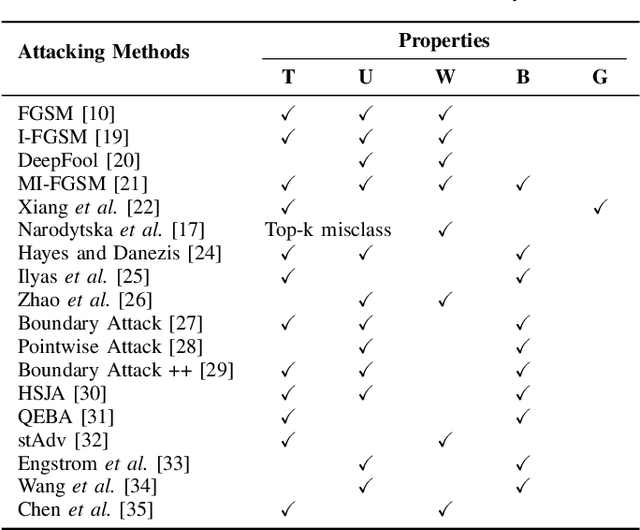

Targeted Attention Attack on Deep Learning Models in Road Sign Recognition

Oct 09, 2020

Abstract: Real-world traffic sign recognition is an important step towards building autonomous vehicles, most of which are highly dependent on Deep Neural Networks (DNNs). Recent studies have demonstrated that DNNs are surprisingly susceptible to adversarial examples. Many attack methods have been proposed to understand and generate adversarial examples, such as gradient-based, score-based, decision-based, and transfer-based attacks. However, most of these algorithms are ineffective in real-world road sign attacks, because (1) iteratively learning perturbations for each frame is not realistic for a fast-moving car and (2) most optimization algorithms traverse all pixels equally without considering their diverse contributions. To alleviate these problems, this paper proposes the targeted attention attack (TAA) method for real-world road sign attacks. Specifically, we make the following contributions: (1) we leverage the soft attention map to highlight the important pixels and skip the zero-contribution areas, which also helps to generate natural perturbations; (2) we design an efficient universal attack that optimizes a single perturbation/noise based on a set of training images under the guidance of the pre-trained attention map; (3) we design a simple objective function that can be easily optimized; (4) we evaluate the effectiveness of TAA on real-world data sets. Experimental results validate that the TAA method improves the attack success rate (by nearly 10%) and reduces the perturbation loss (by about a quarter) compared with the popular RP2 method. Additionally, our TAA also provides good properties, e.g., transferability and generalization capability. We provide code and data to ensure reproducibility: https://github.com/AdvAttack/RoadSignAttack.

Learning Domain-invariant Graph for Adaptive Semi-supervised Domain Adaptation with Few Labeled Source Samples

Aug 21, 2020

Abstract: Domain adaptation aims to generalize a model from a source domain to tackle tasks in a related but different target domain. Traditional domain adaptation algorithms assume that enough labeled data, which are treated as prior knowledge, are available in the source domain. However, these algorithms become infeasible when only a few labeled data exist in the source domain, and thus the performance decreases significantly. To address this challenge, we propose a Domain-invariant Graph Learning (DGL) approach for domain adaptation with only a few labeled source samples. Firstly, DGL introduces the Nyström method to construct a plastic graph that shares similar geometric properties with the target domain. Then, DGL flexibly employs the Nyström approximation error to measure the divergence between the plastic graph and the source graph, formalizing the distribution mismatch from a geometric perspective. By minimizing the approximation error, DGL learns a domain-invariant geometric graph to bridge the source and target domains. Finally, we integrate the learned domain-invariant graph with semi-supervised learning and further propose an adaptive semi-supervised model to handle cross-domain problems. The results of extensive experiments on popular datasets verify the superiority of DGL, especially when only a few labeled source samples are available.
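The Nyström approximation error referred to above can be made concrete with a short sketch. It shows only the generic Nyström method on a kernel matrix, $K \approx C W^{+} C^\top$, and its Frobenius-norm error; how DGL builds its plastic graph and source graph is not described in the abstract and is not reproduced here. The RBF kernel, the landmark choice, and all function names are assumptions made for illustration.

```python
import numpy as np

def rbf_kernel(X, Y, gamma=1.0):
    """Gaussian (RBF) kernel matrix between the rows of X and the rows of Y."""
    sq = (X ** 2).sum(axis=1)[:, None] + (Y ** 2).sum(axis=1)[None, :] - 2.0 * X @ Y.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def nystrom_approximation(K, landmark_idx):
    """Nystrom approximation K ~ C W^+ C^T from a subset of landmark columns."""
    C = K[:, landmark_idx]                      # (n, m) sampled columns
    W = K[np.ix_(landmark_idx, landmark_idx)]   # (m, m) landmark block
    return C @ np.linalg.pinv(W) @ C.T

def nystrom_error(K, landmark_idx):
    """Frobenius norm of the gap between K and its Nystrom approximation."""
    return np.linalg.norm(K - nystrom_approximation(K, landmark_idx), ord="fro")
```

The approximation reproduces the landmark block exactly, and the error shrinks as the landmarks better span the full matrix, which is what makes the error usable as a divergence measure between two graphs.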

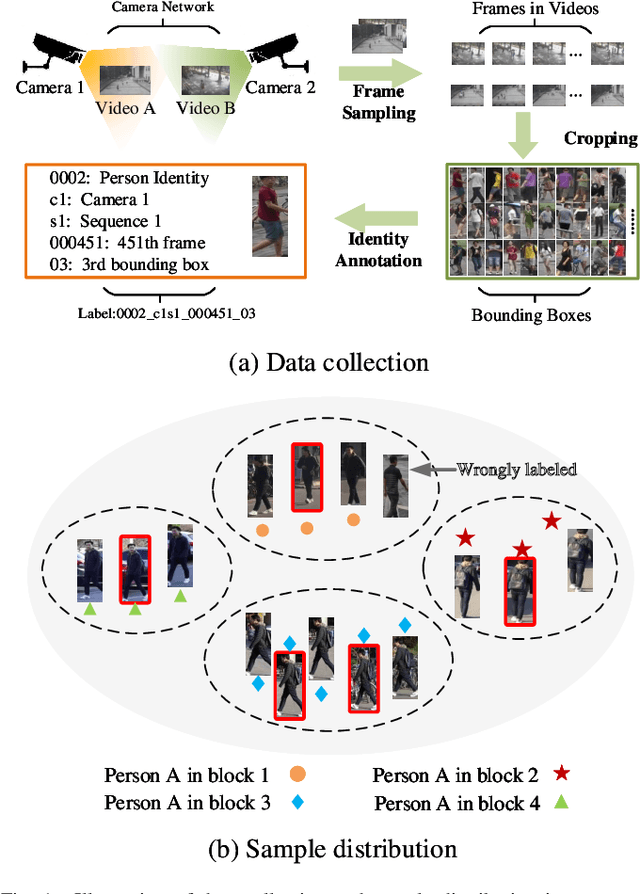

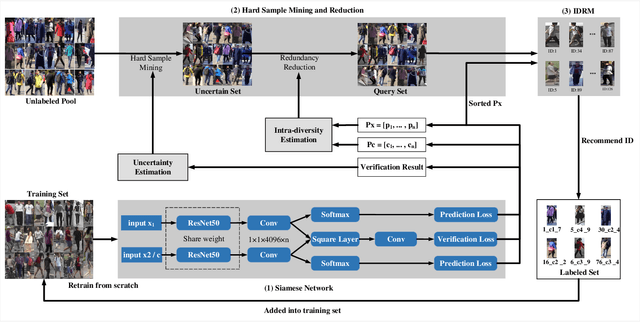

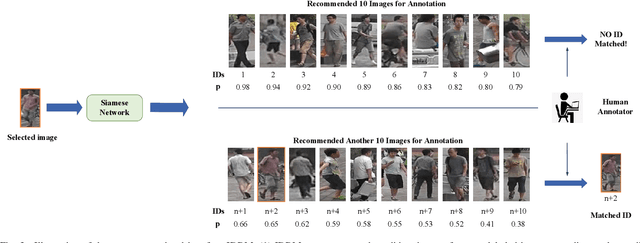

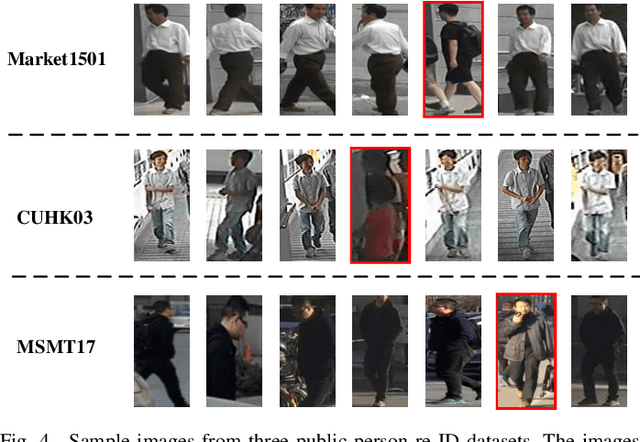

Person Re-Identification via Active Hard Sample Mining

Apr 14, 2020

Abstract: Annotating a large-scale image dataset is very tedious, yet necessary for training person re-identification models. To alleviate this problem, we present an active hard sample mining framework that trains an effective re-ID model with the least labeling effort. Considering that hard samples can provide informative patterns, we first formulate an uncertainty estimation to actively select hard samples to iteratively train a re-ID model from scratch. Then, an intra-diversity estimation is designed to reduce redundant hard samples by maximizing their diversity. Moreover, we propose a computer-assisted identity recommendation module embedded in the active hard sample mining framework to help human annotators rapidly and accurately label the selected samples. Extensive experiments were carried out to demonstrate the effectiveness of our method on several public datasets. Experimental results indicate that our method can reduce annotation effort by 57%, 63%, and 49% on Market1501, MSMT17, and CUHK03, respectively, while maximizing the performance of the re-ID model.

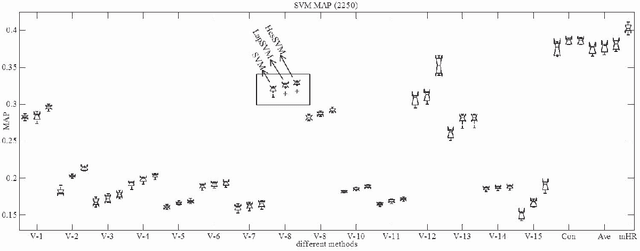

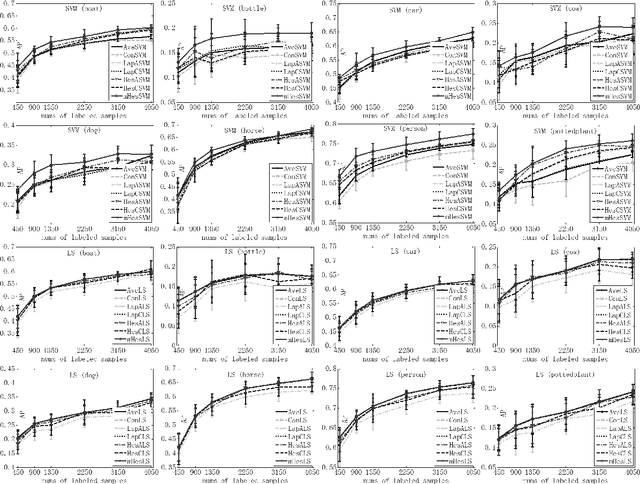

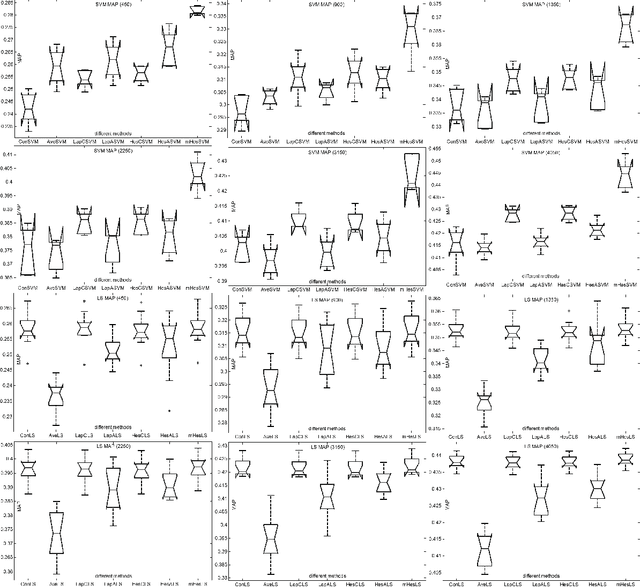

Multiview Hessian Regularization for Image Annotation

Apr 23, 2019

Abstract: The rapid development of computer hardware and Internet technology makes large-scale data-dependent models computationally tractable, and opens a bright avenue for annotating images through innovative machine learning algorithms. Semi-supervised learning (SSL) has consequently received intensive attention in recent years and has been successfully deployed in image annotation. One representative work in SSL is Laplacian regularization (LR), which smooths the conditional distribution for classification along the manifold encoded in the graph Laplacian. However, it has been observed that LR biases the classification function towards a constant function, which possibly results in poor generalization. In addition, LR is developed to handle uniformly distributed data (or single-view data), although instances or objects, such as images and videos, are usually represented by multiview features, such as color, shape and texture. In this paper, we present multiview Hessian regularization (mHR) to address the above two problems in LR-based image annotation. In particular, mHR optimally combines multiple Hessian regularizations, each of which is obtained from a particular view of instances, and steers the classification function to vary linearly along the data manifold. We apply mHR to kernel least squares and support vector machines as two examples for image annotation. Extensive experiments on the PASCAL VOC'07 dataset validate the effectiveness of mHR by comparing it with baseline algorithms, including LR and HR.

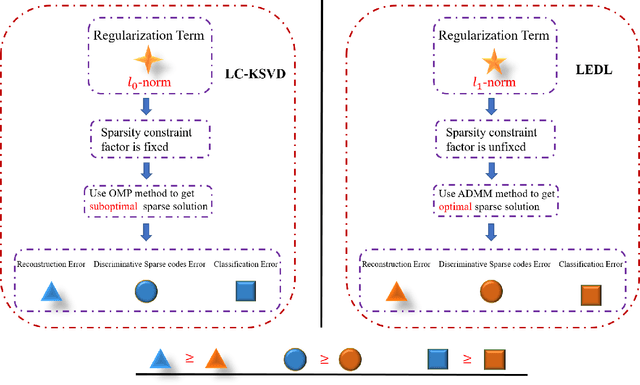

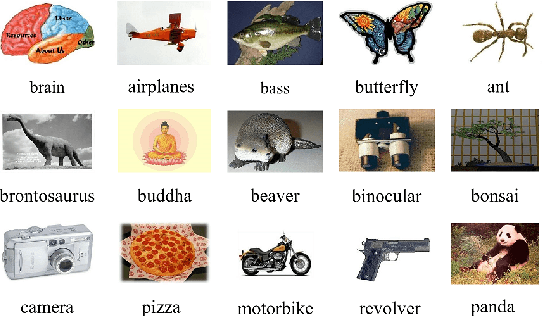

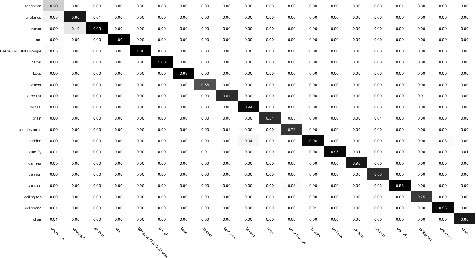

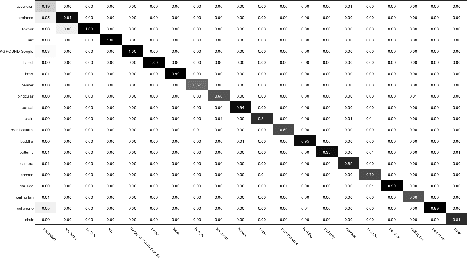

Label Embedded Dictionary Learning for Image Classification

Apr 17, 2019

Abstract: Recently, the label consistent K-SVD (LC-KSVD) algorithm has been successfully applied in image classification. The objective function of LC-KSVD consists of reconstruction error, classification error and discriminative sparse codes error, with an L0-norm sparse regularization term. The L0-norm, however, leads to an NP-hard problem. Although some methods such as orthogonal matching pursuit can help solve this problem to some extent, it is quite difficult to find the optimum sparse solution. To overcome this limitation, we propose a label embedded dictionary learning (LEDL) method that utilises the L1-norm as the sparse regularization term, so that we can avoid the hard-to-optimize problem by solving a convex optimization problem. The alternating direction method of multipliers and a blockwise coordinate descent algorithm are then exploited to optimize the corresponding objective function. Extensive experimental results on six benchmark datasets illustrate that the proposed algorithm achieves superior performance compared to some conventional classification algorithms.
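To illustrate why replacing the L0-norm with the L1-norm yields a tractable problem, here is a minimal sketch of the alternating direction method of multipliers applied to L1-regularized sparse coding with a fixed dictionary, $\min_x \tfrac{1}{2}\|y - Dx\|_2^2 + \lambda\|x\|_1$. This covers only a single sparse-coding subproblem; the full LEDL objective, with its classification and label-embedding terms and its dictionary update, is not reproduced. The function names and the default values of `rho` and `n_iter` are assumptions.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (elementwise shrinkage toward zero)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def lasso_admm(D, y, lam, rho=1.0, n_iter=200):
    """Solve min_x 0.5 * ||y - D x||^2 + lam * ||x||_1 with standard ADMM."""
    n_atoms = D.shape[1]
    x = np.zeros(n_atoms)
    z = np.zeros(n_atoms)   # sparse copy of x
    u = np.zeros(n_atoms)   # scaled dual variable
    A = D.T @ D + rho * np.eye(n_atoms)
    Dty = D.T @ y
    for _ in range(n_iter):
        x = np.linalg.solve(A, Dty + rho * (z - u))  # quadratic (ridge-like) step
        z = soft_threshold(x + u, lam / rho)         # L1 proximal step
        u = u + x - z                                # dual update
    return z
```

Each step has a closed form, in contrast to the combinatorial search implied by an L0 constraint. With an identity dictionary the solution reduces to soft-thresholding `y` by `lam`, which gives a simple sanity check.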
