Peng Cui

Beijing Institute of Technology

Generating Risky Samples with Conformity Constraints via Diffusion Models

Dec 21, 2025

Abstract: Although neural networks achieve promising performance in many tasks, they may still fail on some examples and introduce risks to applications. To discover risky samples, previous work searches for patterns of risky samples within existing datasets or injects perturbations into them. In this way, however, the diversity of risky samples is limited by the coverage of existing datasets. To overcome this limitation, recent works adopt diffusion models to produce new risky samples beyond the coverage of existing datasets. However, these methods struggle to maintain conformity between generated samples and expected categories, which can introduce label noise and severely limit their effectiveness in applications. To address this issue, we propose RiskyDiff, which incorporates the embeddings of both texts and images as implicit constraints on category conformity. We also design a conformity score to explicitly strengthen category conformity further, and introduce embedding screening and risky gradient guidance mechanisms to boost the risk of generated samples. Extensive experiments reveal that RiskyDiff greatly outperforms existing methods in terms of degree of risk, generation quality, and conformity with conditioned categories. We also show empirically that the generalization ability of models can be enhanced by augmenting training data with generated samples of high conformity.

ODP-Bench: Benchmarking Out-of-Distribution Performance Prediction

Oct 31, 2025

Abstract: Out-of-Distribution (OOD) performance prediction has recently attracted growing attention. Its goal is to predict the performance of trained models on unlabeled OOD test datasets, so that off-the-shelf trained models can be better leveraged and deployed in risk-sensitive scenarios. Although progress has been made in this area, evaluation protocols in previous literature are inconsistent, and most works cover only a limited number of real-world OOD datasets and types of distribution shifts. To provide convenient and fair comparisons for various algorithms, we propose the Out-of-Distribution Performance Prediction Benchmark (ODP-Bench), a comprehensive benchmark that includes the most commonly used OOD datasets and existing practical performance prediction algorithms. We provide our trained models as a testbench for future researchers, thus guaranteeing the consistency of comparison and avoiding the burden of repeating the model training process. Furthermore, we conduct in-depth experimental analyses to better understand the capability boundaries of these algorithms.

LimiX: Unleashing Structured-Data Modeling Capability for Generalist Intelligence

Sep 03, 2025

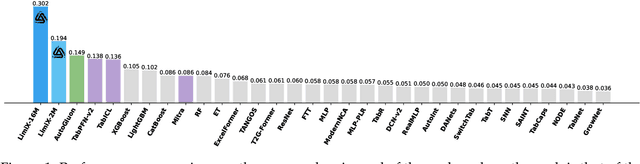

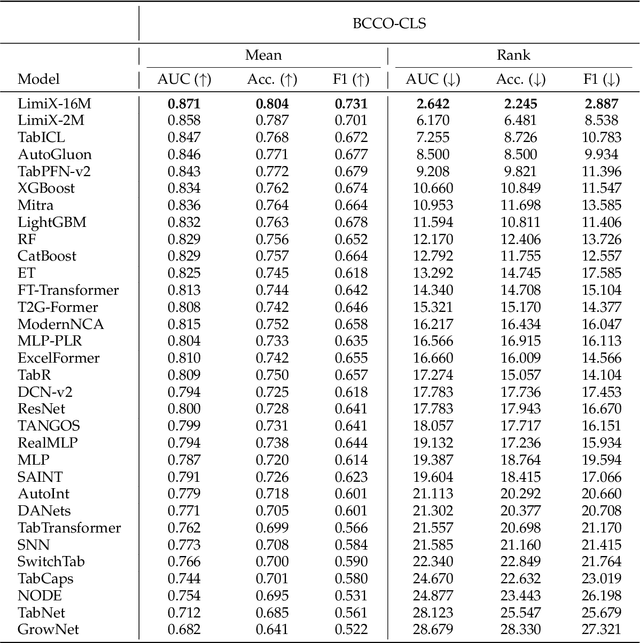

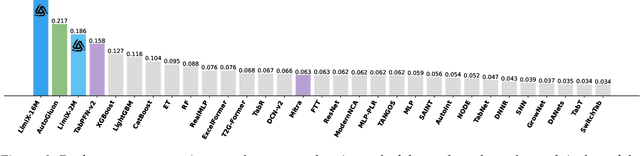

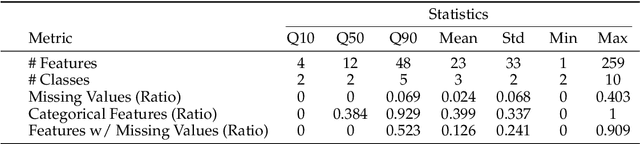

Abstract: We argue that progress toward general intelligence requires complementary foundation models grounded in language, the physical world, and structured data. This report presents LimiX, the first installment of our large structured-data models (LDMs). LimiX treats structured data as a joint distribution over variables and missingness, and is thus capable of addressing a wide range of tabular tasks through query-based conditional prediction via a single model. LimiX is pretrained using masked joint-distribution modeling with an episodic, context-conditional objective, where the model predicts for query subsets conditioned on dataset-specific contexts, supporting rapid, training-free adaptation at inference. We evaluate LimiX across 10 large structured-data benchmarks with broad regimes of sample size, feature dimensionality, class number, categorical-to-numerical feature ratio, missingness, and sample-to-feature ratios. With a single model and a unified interface, LimiX consistently surpasses strong baselines including gradient-boosting trees, deep tabular networks, recent tabular foundation models, and automated ensembles, as shown in Figure 1 and Figure 2. The superiority holds across a wide range of tasks, such as classification, regression, missing value imputation, and data generation, often by substantial margins, while avoiding task-specific architectures or bespoke training per task. All LimiX models are publicly accessible under Apache 2.0.

COUNTS: Benchmarking Object Detectors and Multimodal Large Language Models under Distribution Shifts

Apr 14, 2025

Abstract: Current object detectors often suffer significant performance degradation in real-world applications when encountering distributional shifts. Consequently, the out-of-distribution (OOD) generalization capability of object detectors has garnered increasing attention from researchers. Despite this growing interest, there remains a lack of a large-scale, comprehensive dataset and evaluation benchmark with fine-grained annotations tailored to assess the OOD generalization on more intricate tasks like object detection and grounding. To address this gap, we introduce COUNTS, a large-scale OOD dataset with object-level annotations. COUNTS encompasses 14 natural distributional shifts, over 222K samples, and more than 1,196K labeled bounding boxes. Leveraging COUNTS, we introduce two novel benchmarks: O(OD)2 and OODG. O(OD)2 is designed to comprehensively evaluate the OOD generalization capabilities of object detectors by utilizing controlled distribution shifts between training and testing data. OODG, on the other hand, aims to assess the OOD generalization of grounding abilities in multimodal large language models (MLLMs). Our findings reveal that, while large models and extensive pre-training data substantially enhance performance in in-distribution (IID) scenarios, significant limitations and opportunities for improvement persist in OOD contexts for both object detectors and MLLMs. In visual grounding tasks, even the advanced GPT-4o and Gemini-1.5 only achieve 56.7% and 28.0% accuracy, respectively. We hope COUNTS facilitates advancements in the development and assessment of robust object detectors and MLLMs capable of maintaining high performance under distributional shifts.

Understanding the Generalization of In-Context Learning in Transformers: An Empirical Study

Mar 19, 2025

Abstract: Large language models (LLMs) like GPT-4 and LLaMA-3 utilize the powerful in-context learning (ICL) capability of the Transformer architecture to learn on the fly from limited examples. While ICL underpins many LLM applications, its full potential remains hindered by a limited understanding of its generalization boundaries and vulnerabilities. We present a systematic investigation of transformers' generalization capability with ICL relative to training data coverage by defining a task-centric framework along three dimensions: inter-problem, intra-problem, and intra-task generalization. Through extensive simulation and real-world experiments, encompassing tasks such as function fitting, API calling, and translation, we find that transformers lack inter-problem generalization with ICL, but excel in intra-task and intra-problem generalization. When the training data includes a greater variety of mixed tasks, it significantly enhances the generalization ability of ICL on unseen tasks and even on known simple tasks. This guides us in designing training data to maximize the diversity of tasks covered and to combine different tasks whenever possible, rather than solely focusing on the target task for testing.

Grammar Control in Dialogue Response Generation for Language Learning Chatbots

Feb 11, 2025

Abstract: Chatbots based on large language models offer cheap conversation practice opportunities for language learners. However, they are hard to control for linguistic forms that correspond to learners' current needs, such as grammar. We control grammar in chatbot conversation practice by grounding a dialogue response generation model in a pedagogical repository of grammar skills. We also explore how this control helps learners to produce specific grammar. We comprehensively evaluate prompting, fine-tuning, and decoding strategies for grammar-controlled dialogue response generation. Strategically decoding Llama3 outperforms GPT-3.5 when tolerating minor response quality losses. Our simulation predicts grammar-controlled responses to support grammar acquisition adapted to learner proficiency. Existing language learning chatbots and research on second language acquisition benefit from these affordances. Code available on GitHub.

Sample Weight Averaging for Stable Prediction

Feb 11, 2025

Abstract: The challenge of Out-of-Distribution (OOD) generalization poses a foundational concern for the application of machine learning algorithms to risk-sensitive areas. Inspired by traditional importance weighting and propensity weighting methods, prior approaches employ an independence-based sample reweighting procedure. They aim at decorrelating covariates to counteract the bias introduced by spurious correlations between unstable variables and the outcome, thus enhancing generalization and fulfilling stable prediction under covariate shift. Nonetheless, these methods are prone to experiencing an inflation of variance, primarily attributable to the reduced efficacy in utilizing training samples during the reweighting process. Existing remedies necessitate either environmental labels or substantially higher time costs along with additional assumptions and supervised information. To mitigate this issue, we propose SAmple Weight Averaging (SAWA), a simple yet efficacious strategy that can be universally integrated into various sample reweighting algorithms to decrease the variance and coefficient estimation error, thus boosting the covariate-shift generalization and achieving stable prediction across different environments. We prove its rationality and benefits theoretically. Experiments across synthetic datasets and real-world datasets consistently underscore its superiority against covariate shift.

Investigating the Zone of Proximal Development of Language Models for In-Context Learning

Feb 10, 2025

Abstract: In this paper, we introduce a learning analytics framework to analyze the in-context learning (ICL) behavior of large language models (LLMs) through the lens of the Zone of Proximal Development (ZPD), an established theory in educational psychology. ZPD delineates the space between what a learner is capable of doing unsupported and what the learner cannot do even with support. We adapt this concept to ICL, measuring the ZPD of LLMs based on model performance on individual examples with and without ICL. Furthermore, we propose an item response theory (IRT) model to predict the distribution of zones for LLMs. Our findings reveal a series of intricate and multifaceted behaviors of ICL, providing new insights into understanding and leveraging this technique. Finally, we demonstrate how our framework can enhance LLMs in both inference and fine-tuning scenarios: (1) By predicting a model's zone of proximal development, we selectively apply ICL to queries that are most likely to benefit from demonstrations, achieving a better balance between inference cost and performance; (2) We propose a human-like curriculum for fine-tuning, which prioritizes examples within the model's ZPD. The curriculum results in improved performance, and we explain its effectiveness through an analysis of the training dynamics of LLMs.
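
The zone definition in this abstract can be illustrated with a minimal sketch. It assumes each example comes with two booleans, whether the model answers correctly without ICL and whether it answers correctly with ICL. The function names and zone labels below are hypothetical and are not taken from the paper.

```python
from collections import Counter
from typing import Iterable, Tuple


def assign_zone(correct_without_icl: bool, correct_with_icl: bool) -> str:
    """Map one example to a zone (labels are illustrative assumptions)."""
    if correct_without_icl:
        # The model already succeeds unsupported.
        return "solved_unsupported"
    if correct_with_icl:
        # Fails alone but succeeds with demonstrations: inside the ZPD.
        return "zpd"
    # Fails even with demonstrations.
    return "beyond_reach"


def zone_distribution(records: Iterable[Tuple[bool, bool]]) -> Counter:
    """Count how many examples fall into each zone."""
    return Counter(assign_zone(direct, icl) for direct, icl in records)


if __name__ == "__main__":
    # Hypothetical per-example outcomes: (correct without ICL, correct with ICL).
    outcomes = [(True, True), (False, True), (False, False), (False, True)]
    print(zone_distribution(outcomes))
```

Under this reading, demonstrations would be spent only on examples predicted to fall in the `zpd` bucket, which is the selective use of ICL the abstract describes.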

Error Slice Discovery via Manifold Compactness

Jan 31, 2025

Abstract: Despite the great performance of deep learning models in many areas, they still make mistakes and underperform on certain subsets of data, i.e., error slices. Given a trained model, it is important to identify its semantically coherent error slices that are easy to interpret, which is referred to as the error slice discovery problem. However, there is no proper metric of slice coherence without relying on extra information like predefined slice labels. Current evaluation of slice coherence requires access to predefined slices formulated by metadata like attributes or subclasses. Its validity heavily relies on the quality and abundance of metadata, where some possible patterns could be ignored. Besides, current algorithms cannot directly incorporate the constraint of coherence into their optimization objective due to the absence of an explicit coherence metric, which could potentially hinder their effectiveness. In this paper, we propose manifold compactness, a coherence metric without reliance on extra information by incorporating the data geometry property into its design, and experiments on typical datasets empirically validate the rationality of the metric. Then we develop Manifold Compactness based error Slice Discovery (MCSD), a novel algorithm that directly treats risk and coherence as the optimization objective, and is flexible to be applied to models of various tasks. Extensive experiments on the benchmark and case studies on other typical datasets demonstrate the superiority of MCSD.

How to Select Datapoints for Efficient Human Evaluation of NLG Models?

Jan 30, 2025

Abstract: Human evaluation is the gold standard for evaluating text generation models. It is also expensive, and to fit budgetary constraints, a random subset of the test data is often chosen in practice. The randomly selected data may not accurately represent test performance, making this approach economically inefficient for model comparison. Thus, in this work, we develop a suite of selectors to get the most informative datapoints for human evaluation while taking the evaluation costs into account. We show that selectors based on variance in automated metric scores, diversity in model outputs, or Item Response Theory outperform random selection. We further develop an approach to distill these selectors to the scenario where the model outputs are not yet available. In particular, we introduce source-based estimators, which predict item usefulness for human evaluation just based on the source texts. We demonstrate the efficacy of our selectors in two common NLG tasks, machine translation and summarization, and show that no more than ~50% of the test data is needed to produce the same evaluation result as the entire data. Our implementations are published in the subset2evaluate package.
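
One of the selector ideas named in this abstract, ranking items by the variance of automated metric scores across models, can be sketched as follows. This is an illustrative sketch only: it does not use or reproduce the subset2evaluate API, and the function name, input layout, and scores are assumptions made for the example.

```python
from statistics import pvariance
from typing import Dict, List


def select_by_metric_variance(
    scores: Dict[str, List[float]], budget: int
) -> List[str]:
    """Return the `budget` item ids whose metric scores vary most across models.

    `scores` maps an item id to one automated metric score per model
    (a hypothetical layout chosen for this sketch).
    """
    ranked = sorted(
        scores,
        key=lambda item_id: pvariance(scores[item_id]),
        reverse=True,  # highest-variance items first
    )
    return ranked[:budget]


if __name__ == "__main__":
    # Made-up scores for three test items, each scored for three models.
    metric_scores = {
        "item_a": [0.5, 0.5, 0.5],  # all models agree: little to learn
        "item_b": [0.1, 0.9, 0.5],  # models differ strongly
        "item_c": [0.4, 0.6, 0.5],  # models differ slightly
    }
    print(select_by_metric_variance(metric_scores, budget=2))
```

The intuition is that items on which all models score alike contribute little to telling the models apart, so a limited human-evaluation budget is better spent where the automated scores disagree.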
