Lu Fang

Zoom in to the details of human-centric videos

May 27, 2020

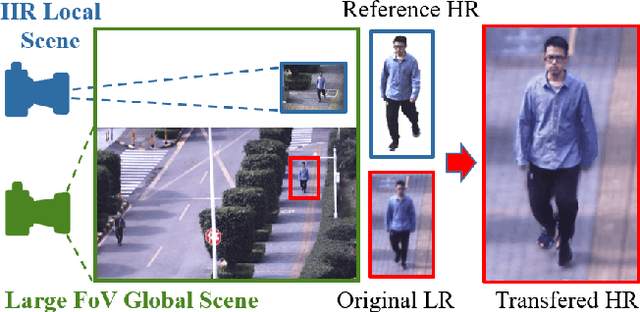

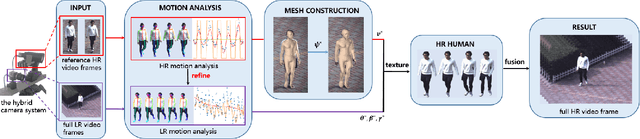

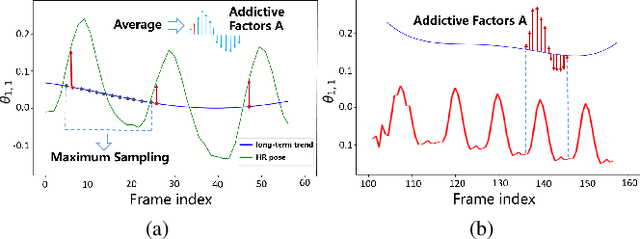

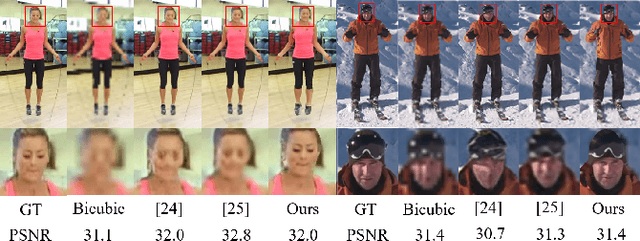

Abstract: Presenting high-resolution (HR) human appearance is always critical for human-centric videos. However, current imaging equipment can hardly capture HR details all the time. Existing super-resolution algorithms barely mitigate the problem because they consider only universal, low-level priors of image patches. In contrast, our algorithm is biased towards human body super-resolution by taking advantage of a high-level prior defined by HR human appearance. First, a motion analysis module extracts the inherent motion pattern from the HR reference video to refine the pose estimation of the low-resolution (LR) sequence. Next, a human body reconstruction module maps the HR texture in the reference frames onto a 3D mesh model. Consequently, super-resolved HR human sequences are generated conditioned on the original LR videos as well as a few HR reference frames. Experiments on an existing dataset and on real-world data captured by hybrid cameras show that our approach generates superior visual quality of the human body compared with the traditional method.

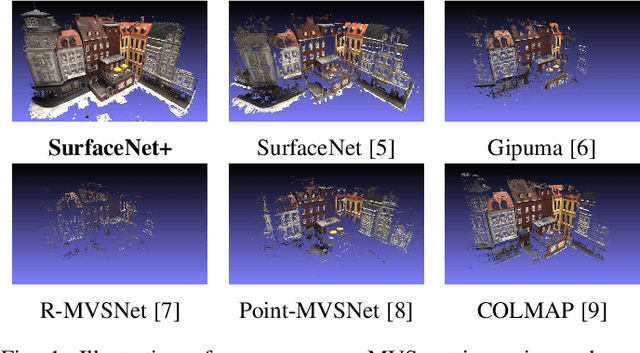

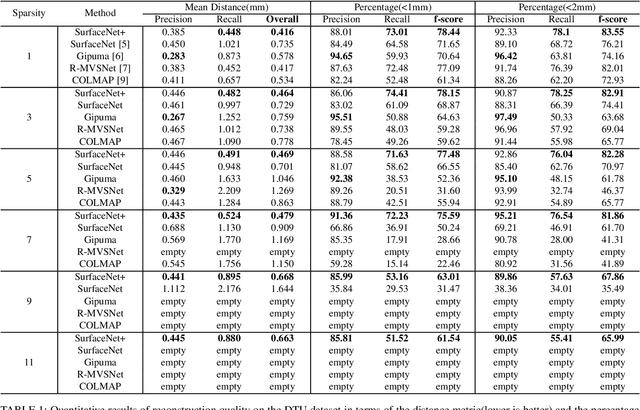

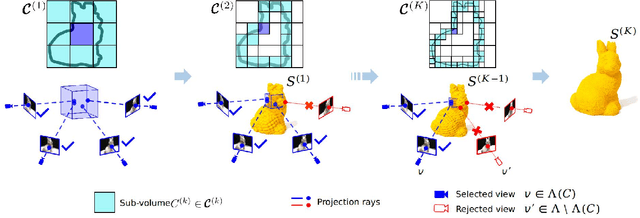

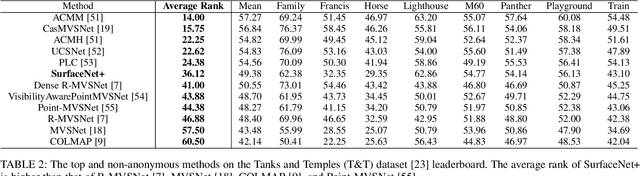

SurfaceNet+: An End-to-end 3D Neural Network for Very Sparse Multi-view Stereopsis

May 26, 2020

Abstract: Multi-view stereopsis (MVS) tries to recover the 3D model from 2D images. As the observations become sparser, the significant loss of 3D information makes the MVS problem more challenging. Instead of focusing only on densely sampled conditions, we investigate sparse-MVS with large baseline angles, since sparser sensing is more practical and more cost-efficient. By investigating various observation sparsities, we show that the classical depth-fusion pipeline becomes powerless for larger baseline angles, which worsen the photo-consistency check. As an alternative line of solution, we present SurfaceNet+, a volumetric method to handle the 'incompleteness' and 'inaccuracy' problems induced by a very sparse MVS setup. Specifically, the former problem is handled by a novel volume-wise view selection approach, which excels at selecting valid views while discarding invalid occluded views by considering the geometric prior. The latter problem is handled via a multi-scale strategy that refines the recovered geometry around regions with repeating patterns. The experiments demonstrate the tremendous performance gap between SurfaceNet+ and state-of-the-art methods in terms of precision and recall. Under the extreme sparse-MVS settings in two datasets, where existing methods can only return very few points, SurfaceNet+ still works as well as in the dense MVS setting. The benchmark and the implementation are publicly available at https://github.com/mjiUST/SurfaceNet-plus.

* Accepted by IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), May 2020

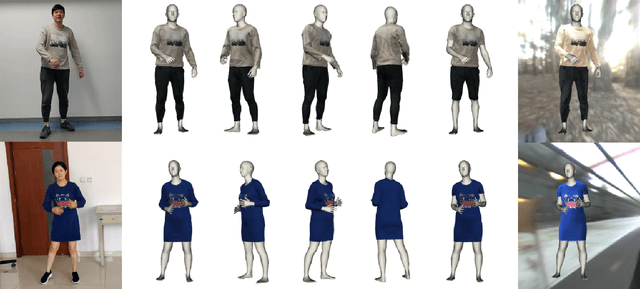

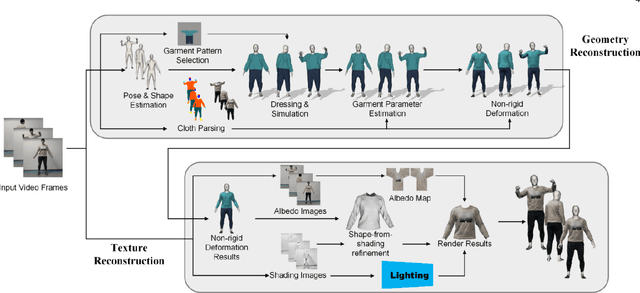

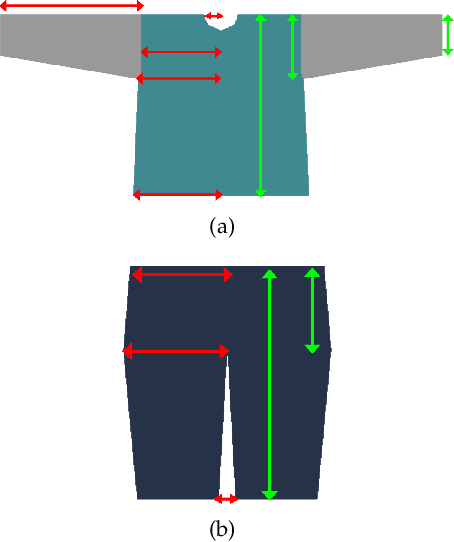

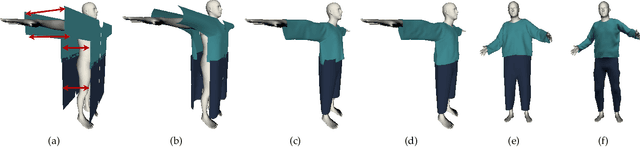

MulayCap: Multi-layer Human Performance Capture Using A Monocular Video Camera

Apr 19, 2020

Abstract: We introduce MulayCap, a novel human performance capture method using a monocular video camera without the need for pre-scanning. The method uses "multi-layer" representations for geometry reconstruction and texture rendering, respectively. For geometry reconstruction, we decompose the clothed human into multiple geometry layers, namely a body mesh layer and a garment piece layer. The key technique behind it is a Garment-from-Video (GfV) method for optimizing the garment shape and reconstructing the dynamic cloth to fit the input video sequence, based on a cloth simulation model that is effectively solved with gradient descent. For texture rendering, we decompose each input image frame into a shading layer and an albedo layer, and propose a method for fusing a fixed albedo map and solving for detailed garment geometry using the shading layer. Compared with existing single-view human performance capture systems, our "multi-layer" approach bypasses the tedious and time-consuming scanning step for obtaining a human-specific mesh template. Experimental results demonstrate that MulayCap produces realistic rendering of dynamically changing details that has not been achieved by any previous monocular video camera system. Benefiting from its fully semantic modeling, MulayCap can be applied to various important editing applications, such as cloth editing, re-targeting, relighting, and AR applications.

OccuSeg: Occupancy-aware 3D Instance Segmentation

Apr 08, 2020

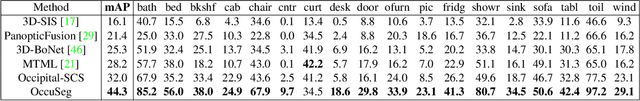

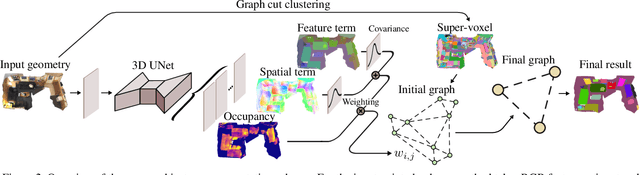

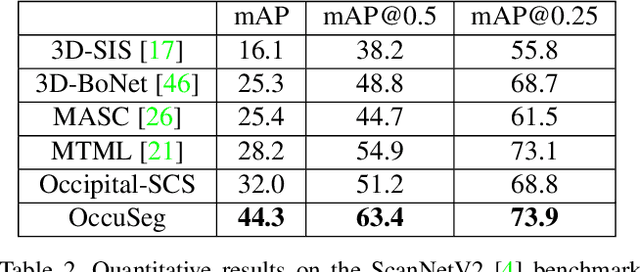

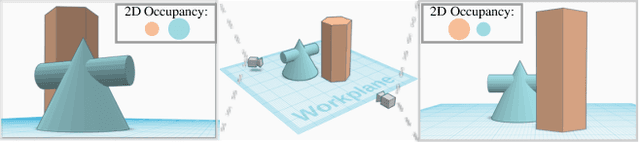

Abstract: 3D instance segmentation, with a variety of applications in robotics and augmented reality, is in great demand these days. Unlike 2D images, which are projective observations of the environment, 3D models provide metric reconstruction of the scenes without occlusion or scale ambiguity. In this paper, we define "3D occupancy size" as the number of voxels occupied by each instance. It can be predicted robustly, and on this basis we propose OccuSeg, an occupancy-aware 3D instance segmentation scheme. Our multi-task learning produces both an occupancy signal and embedding representations, where the training of the spatial and feature embeddings differs according to their difference in scale-awareness. Our clustering scheme benefits from the reliable comparison between the predicted occupancy size and the clustered occupancy size, which encourages hard samples to be correctly clustered and avoids over-segmentation. The proposed approach achieves state-of-the-art performance on three real-world datasets, i.e., ScanNetV2, S3DIS and SceneNN, while maintaining high efficiency.
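
The "3D occupancy size" defined in the abstract, the number of voxels occupied by each instance, can be illustrated with a minimal sketch. It only shows the counting step on labelled points; the function name `occupancy_sizes`, the `voxel_size` parameter and its default value are assumptions made for illustration and are not taken from OccuSeg itself.

```python
import math
from collections import defaultdict


def occupancy_sizes(points, instance_ids, voxel_size=0.02):
    """Count the distinct voxels occupied by each instance.

    points: iterable of (x, y, z) coordinates in metric units.
    instance_ids: one instance label per point.
    voxel_size: edge length of a cubic voxel (hypothetical default).
    """
    voxels = defaultdict(set)
    for (x, y, z), inst in zip(points, instance_ids):
        # Quantize the point to the integer index of the voxel containing it.
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        voxels[inst].add(key)
    # The occupancy size is the number of unique voxels per instance.
    return {inst: len(keys) for inst, keys in voxels.items()}


if __name__ == "__main__":
    pts = [(0.1, 0.2, 0.3), (0.4, 0.5, 0.6), (1.5, 0.0, 0.0), (0.1, 0.1, 0.1)]
    ids = [0, 0, 0, 1]
    print(occupancy_sizes(pts, ids, voxel_size=1.0))
```

In a clustering stage, a count of this kind computed for a candidate cluster could be compared with the occupancy size predicted by the network, which is the comparison the abstract refers to.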

PANDA: A Gigapixel-level Human-centric Video Dataset

Mar 10, 2020

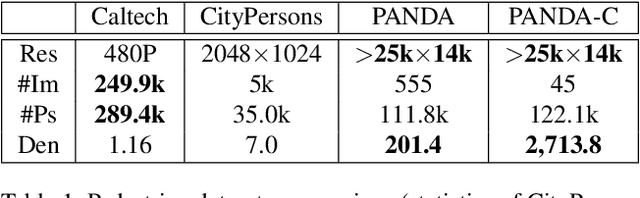

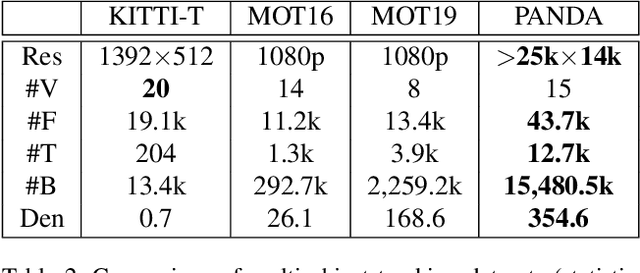

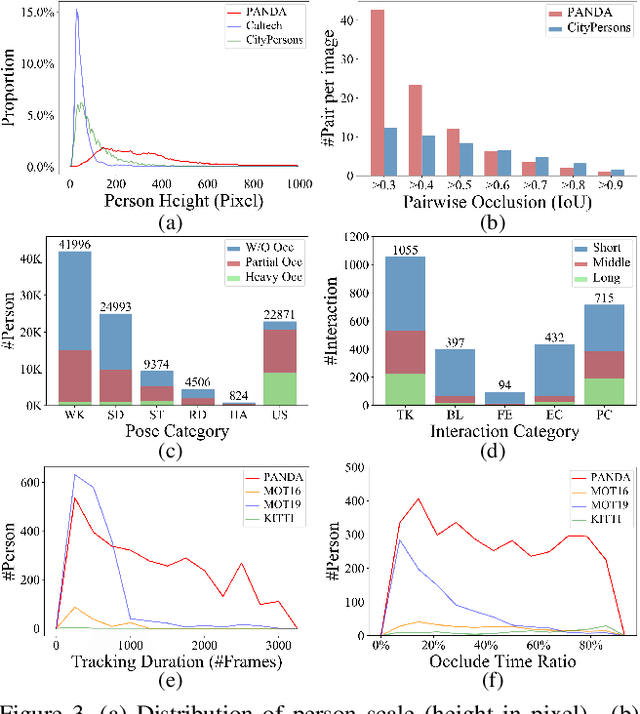

Abstract: We present PANDA, the first gigaPixel-level humAN-centric viDeo dAtaset, for large-scale, long-term, and multi-object visual analysis. The videos in PANDA were captured by a gigapixel camera and cover real-world scenes with both wide field-of-view (~1 square kilometer area) and high-resolution details (~gigapixel-level/frame). The scenes may contain 4k head counts with over 100x scale variation. PANDA provides enriched and hierarchical ground-truth annotations, including 15,974.6k bounding boxes, 111.8k fine-grained attribute labels, 12.7k trajectories, 2.2k groups and 2.9k interactions. We benchmark the human detection and tracking tasks. Due to the vast variance of pedestrian pose, scale, occlusion and trajectory, existing approaches are challenged in both accuracy and efficiency. Given the uniqueness of PANDA, with both wide FoV and high resolution, a new task of interaction-aware group detection is introduced. We design a 'global-to-local zoom-in' framework, where global trajectories and local interactions are simultaneously encoded, yielding promising results. We believe PANDA will contribute to the community of artificial intelligence and praxeology by understanding human behaviors and interactions in large-scale real-world scenes. PANDA Website: http://www.panda-dataset.com.

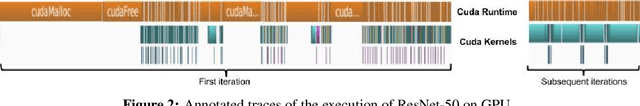

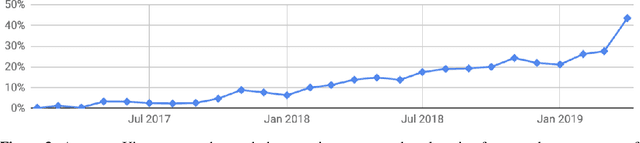

PyTorch: An Imperative Style, High-Performance Deep Learning Library

Dec 03, 2019

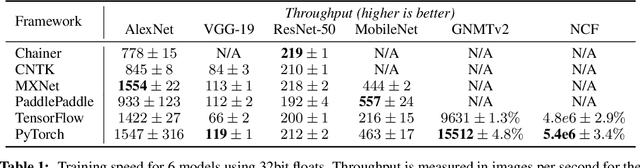

Abstract: Deep learning frameworks have often focused on either usability or speed, but not both. PyTorch is a machine learning library that shows that these two goals are in fact compatible: it provides an imperative and Pythonic programming style that supports code as a model, makes debugging easy and is consistent with other popular scientific computing libraries, while remaining efficient and supporting hardware accelerators such as GPUs. In this paper, we detail the principles that drove the implementation of PyTorch and how they are reflected in its architecture. We emphasize that every aspect of PyTorch is a regular Python program under the full control of its user. We also explain how the careful and pragmatic implementation of the key components of its runtime enables them to work together to achieve compelling performance. We demonstrate the efficiency of individual subsystems, as well as the overall speed of PyTorch on several common benchmarks.

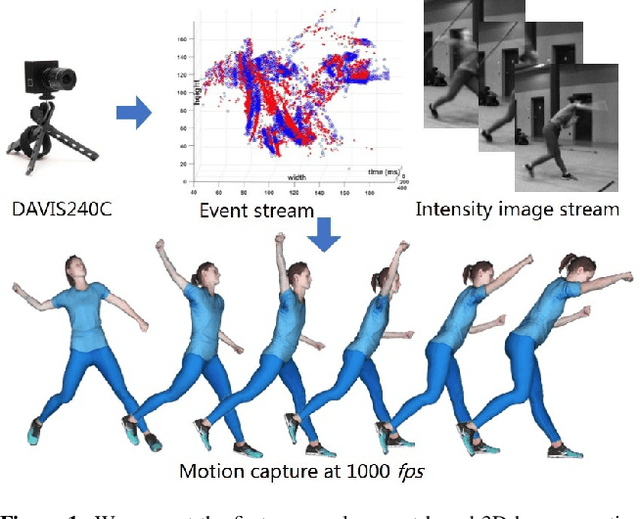

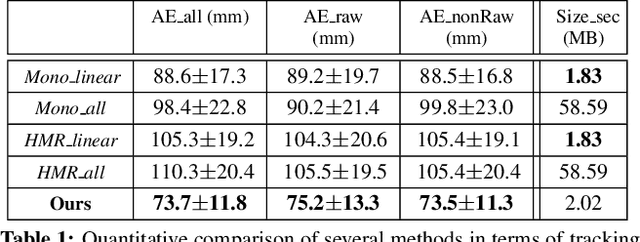

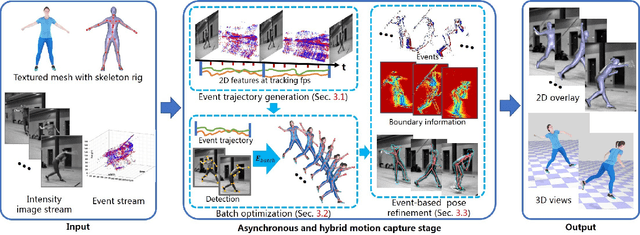

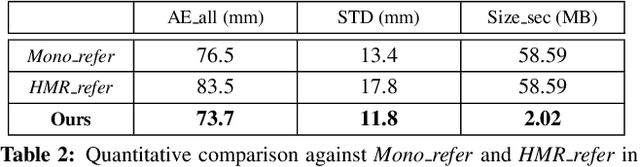

EventCap: Monocular 3D Capture of High-Speed Human Motions using an Event Camera

Aug 30, 2019

Abstract: A high frame rate is a critical requirement for capturing fast human motions. In this setting, existing markerless image-based methods are constrained by the lighting requirement, the high data bandwidth and the consequent high computation overhead. In this paper, we propose EventCap, the first approach for 3D capture of high-speed human motions using a single event camera. Our method combines model-based optimization and CNN-based human pose detection to capture high-frequency motion details and to reduce drift in the tracking. As a result, we can capture fast motions at millisecond resolution with significantly higher data efficiency than using high frame rate videos. Experiments on our new event-based fast human motion dataset demonstrate the effectiveness and accuracy of our method, as well as its robustness to challenging lighting conditions.

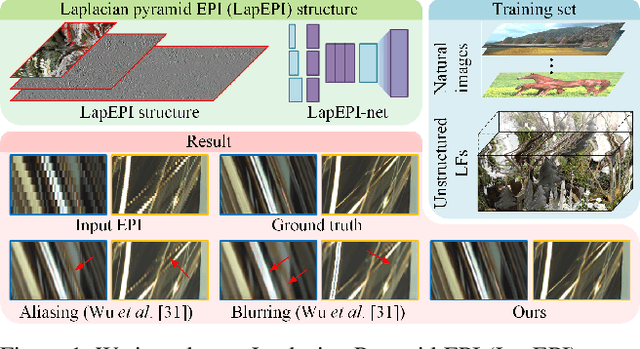

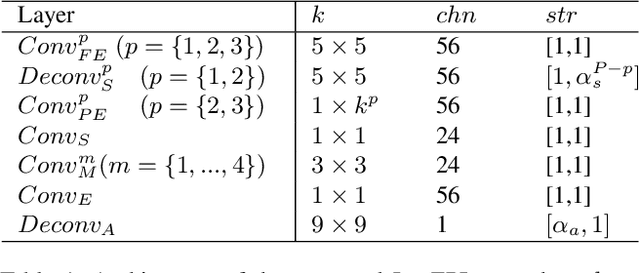

LapEPI-Net: A Laplacian Pyramid EPI structure for Learning-based Dense Light Field Reconstruction

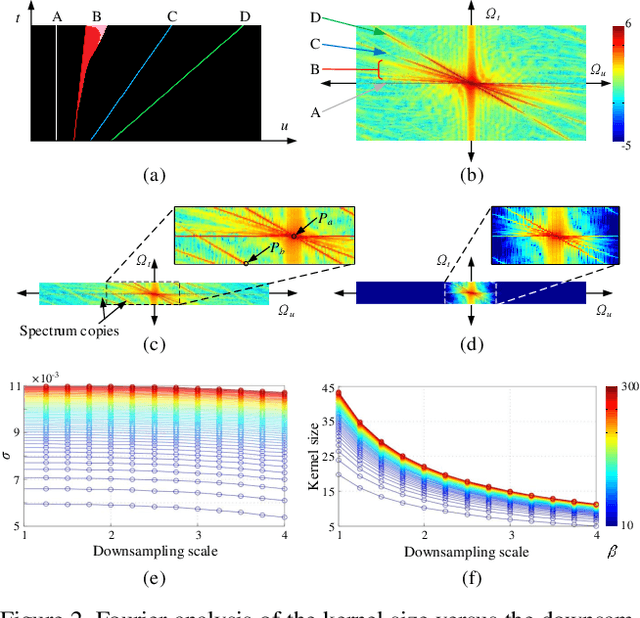

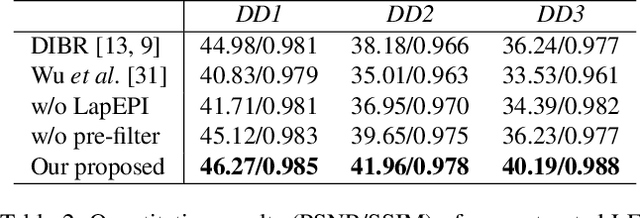

Feb 17, 2019

Abstract: For the densely sampled light field (LF) reconstruction problem, existing approaches focus on a depth-free framework to achieve non-Lambertian performance. However, they are trapped in the "either aliasing or blurring" trade-off, i.e., pre-filtering the aliasing components (caused by the angular sparsity of the input LF) always leads to a blurry result. In this paper, we intend to solve this challenge by introducing an elaborately designed epipolar plane image (EPI) structure within a learning-based framework. Specifically, we start by analytically showing that decreasing the spatial scale of an EPI addresses the aliasing problem more efficiently than simply adopting pre-filtering. Accordingly, we design a Laplacian Pyramid EPI (LapEPI) structure that contains both a low spatial scale EPI (for aliasing) and high-frequency residuals (for blurring) to solve the trade-off problem. We then propose a novel network architecture for the LapEPI structure, termed LapEPI-net. To ensure the non-Lambertian performance, we adopt a transfer-learning strategy by first pre-training the network with natural images and then fine-tuning it with unstructured LFs. Extensive experiments demonstrate the high performance and robustness of the proposed approach for tackling the aliasing-or-blurring problem as well as the non-Lambertian reconstruction.
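
The idea of keeping a low-scale signal together with high-frequency residuals can be illustrated with a generic Laplacian pyramid. The following is a toy 1D sketch, not the LapEPI structure or network from the paper: pair averaging as the low-pass filter, upsampling by repetition, and the function names are all assumptions chosen to keep the example short.

```python
def build_laplacian_pyramid(signal, levels):
    """Split a 1D signal into a coarse base plus per-level residuals.

    The length of `signal` must be divisible by 2 ** levels.
    """
    if len(signal) % (2 ** levels) != 0:
        raise ValueError("signal length must be divisible by 2 ** levels")
    residuals = []
    current = list(signal)
    for _ in range(levels):
        # Downsample by averaging neighbouring pairs (a crude low-pass filter).
        coarse = [(current[i] + current[i + 1]) / 2
                  for i in range(0, len(current), 2)]
        # Upsample by repetition and keep what the coarse level cannot represent.
        upsampled = [c for c in coarse for _ in range(2)]
        residuals.append([a - b for a, b in zip(current, upsampled)])
        current = coarse
    return current, residuals


def reconstruct(base, residuals):
    """Invert build_laplacian_pyramid by adding residuals back, coarse to fine."""
    current = list(base)
    for residual in reversed(residuals):
        upsampled = [c for c in current for _ in range(2)]
        current = [u + r for u, r in zip(upsampled, residual)]
    return current


if __name__ == "__main__":
    row = [1, 3, 5, 7, 2, 2, 4, 8]
    base, res = build_laplacian_pyramid(row, levels=2)
    print(base, res)
    print(reconstruct(base, res))
```

The coarse base plays the role of the low spatial scale signal, while the residuals carry the fine detail that would otherwise be lost to blurring; adding them back recovers the input.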

SPI-Optimizer: an integral-Separated PI Controller for Stochastic Optimization

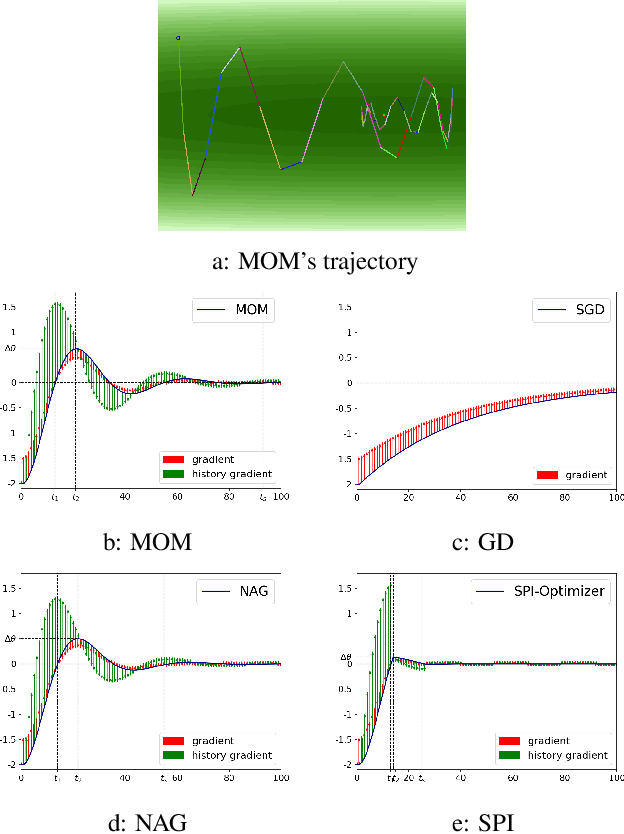

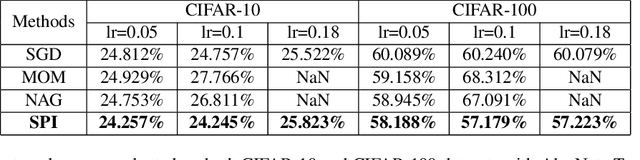

Dec 29, 2018

Abstract: To overcome the oscillation problem in the classical momentum-based optimizer, recent work associates it with the proportional-integral (PI) controller and artificially adds a D term, producing a PID controller. It suppresses oscillation at the cost of introducing an extra hyperparameter. In this paper, we start by asking why momentum-based methods oscillate about the optimal point, and answer that the fluctuation problem relates to the lag effect of the integral (I) term. Inspired by the conditional integration idea in the classical control community, we propose SPI-Optimizer, an integral-Separated PI controller based optimizer that does not introduce any extra hyperparameter. It separates the momentum term adaptively when the current and historical gradient directions are inconsistent. Extensive experiments demonstrate that SPI-Optimizer generalizes well on popular network architectures to eliminate the oscillation, and achieves competitive performance with faster convergence speed (up to 40% epochs reduction ratio) and more accurate classification results on MNIST, CIFAR10, and CIFAR100 (up to 27.5% error reduction ratio) than the state-of-the-art methods.
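
The separation rule described in the abstract can be sketched as a small change to a plain momentum update. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the check is applied per parameter, that "inconsistency" means the accumulated momentum and the current gradient have opposite signs, and that the momentum term is then dropped for that step. The function name `spi_step` and the default values of `lr` and `momentum` are hypothetical.

```python
def spi_step(params, grads, velocity, lr=0.1, momentum=0.9):
    """One integral-separated momentum step on lists of floats.

    Returns the updated parameters and the updated velocity.
    """
    new_params, new_velocity = [], []
    for p, g, v in zip(params, grads, velocity):
        # Keep the accumulated (integral) term only when it agrees in sign
        # with the current gradient; otherwise separate it for this step.
        consistent = g * v > 0
        v_next = (momentum * v if consistent else 0.0) + lr * g
        new_velocity.append(v_next)
        new_params.append(p - v_next)
    return new_params, new_velocity


if __name__ == "__main__":
    # First parameter: history agrees with the gradient, momentum is kept.
    # Second parameter: history disagrees, so only the gradient term is used.
    params, velocity = spi_step([1.0, 1.0], [2.0, 2.0], [0.5, -0.5])
    print(params, velocity)
```

Note that the sketch reuses the two hyperparameters of ordinary momentum SGD and adds none, which matches the claim in the abstract.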

RegNet: Learning the Optimization of Direct Image-to-Image Pose Registration

Dec 26, 2018

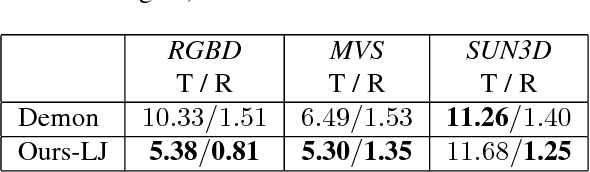

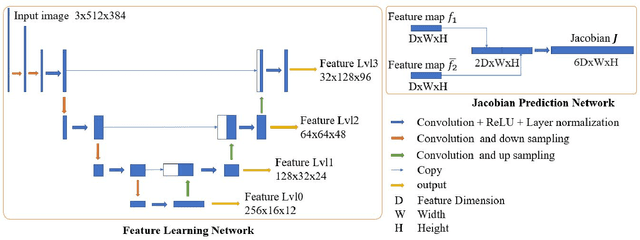

Abstract: Direct image-to-image alignment that relies on the optimization of photometric error metrics suffers from a limited convergence range and sensitivity to lighting conditions. Deep learning approaches have been applied to address this problem by learning better feature representations using convolutional neural networks, yet they still require a good initialization. In this paper, we demonstrate that the inaccurate numerical Jacobian limits the convergence range, which could be improved greatly using learned approaches. Based on this observation, we propose a novel end-to-end network, RegNet, to learn the optimization of image-to-image pose registration. By jointly learning a feature representation for each pixel and partial derivatives that replace handcrafted ones (e.g., numerical differentiation) in the optimization step, the neural network facilitates end-to-end optimization. The energy landscape is constrained by both the feature representation and the learned Jacobian, hence providing more flexibility for the optimization and, as a consequence, leading to more robust and faster convergence. In a series of experiments, including a broad ablation study, we demonstrate that RegNet is able to converge for large-baseline image pairs with fewer iterations.
