Ling Shao

Terminus Group, Beijing, China

Hierarchical Variational Memory for Few-shot Learning Across Domains

Dec 15, 2021

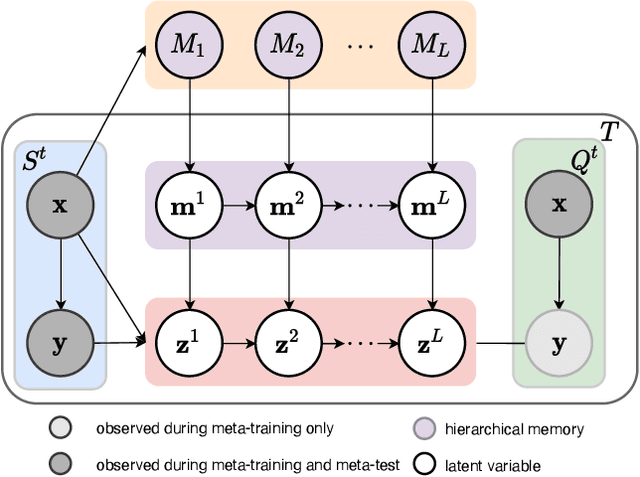

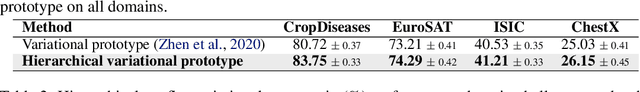

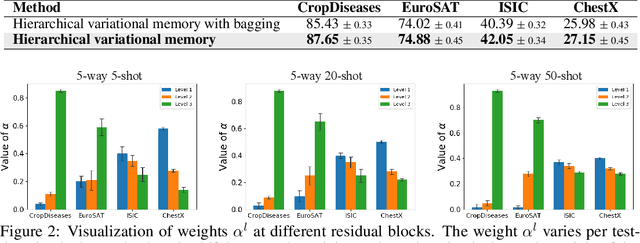

Abstract:Neural memory enables fast adaptation to new tasks with just a few training samples. Existing memory models store features only from the single last layer, which does not generalize well in the presence of a domain shift between training and test distributions. Rather than relying on a flat memory, we propose a hierarchical alternative that stores features at different semantic levels. We introduce a hierarchical prototype model, where each level of the prototype fetches corresponding information from the hierarchical memory. The model is endowed with the ability to flexibly rely on features at different semantic levels if the domain shift circumstances so demand. We meta-learn the model by a newly derived hierarchical variational inference framework, where hierarchical memory and prototypes are jointly optimized. To explore and exploit the importance of different semantic levels, we further propose to learn the weights associated with the prototype at each level in a data-driven way, which enables the model to adaptively choose the most generalizable features. We conduct thorough ablation studies to demonstrate the effectiveness of each component in our model. The new state-of-the-art performance on cross-domain few-shot classification and the competitive performance on traditional few-shot classification further substantiate the benefit of hierarchical variational memory.
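The idea of weighting prototypes at several semantic levels can be illustrated with a minimal sketch. It is a deterministic simplification, not the variational model described in the abstract: prototypes are plain per-class feature means, the level weights are fixed by hand instead of learned, and there is no memory. All names (`mean_vector`, `classify`, `level_weights`) and the toy features are assumptions made for illustration.

```python
from typing import Dict, List

Vector = List[float]


def mean_vector(vectors: List[Vector]) -> Vector:
    """Element-wise mean of a list of equally sized vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(query_levels: List[Vector],
             prototypes: List[Dict[str, Vector]],
             level_weights: List[float]) -> str:
    """Pick the class whose weighted sum of per-level distances is smallest.

    query_levels[l] is the query feature at level l, and
    prototypes[l][label] is the class prototype at level l.
    """
    scores = {}
    for label in prototypes[0]:
        scores[label] = -sum(
            w * sq_dist(q, protos[label])
            for q, protos, w in zip(query_levels, prototypes, level_weights)
        )
    return max(scores, key=scores.get)


# Toy support set: level 0 is a shallow 2-D feature, level 1 a deep 1-D feature.
prototypes = [
    {"a": mean_vector([[0.0, 0.0], [0.0, 2.0]]),
     "b": mean_vector([[4.0, 0.0], [4.0, 2.0]])},
    {"a": mean_vector([[1.0]]),
     "b": mean_vector([[1.2]])},
]
query = [[0.5, 1.0], [1.2]]

shallow_only = classify(query, prototypes, level_weights=[1.0, 0.0])
deep_only = classify(query, prototypes, level_weights=[0.0, 1.0])
```

In this toy case the two levels disagree about the query, so the prediction depends entirely on which level the weights favour. That is the situation in which learned level weights would matter.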

Specificity-Preserving Federated Learning for MR Image Reconstruction

Dec 09, 2021

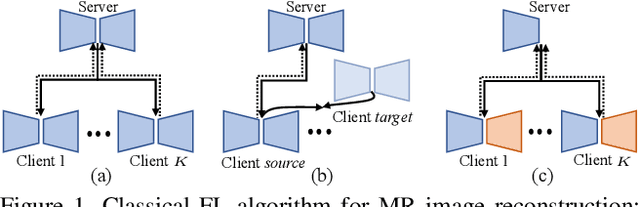

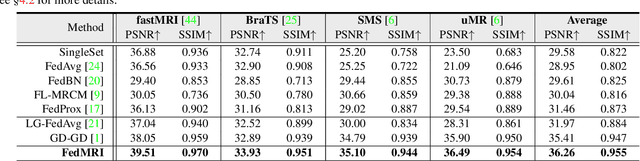

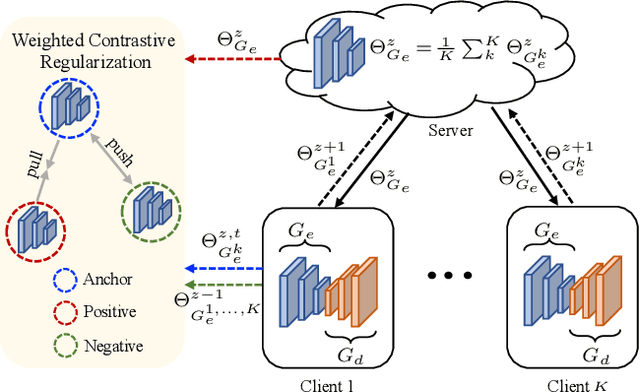

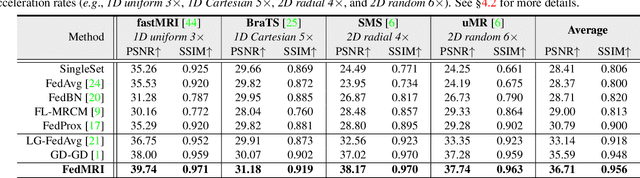

Abstract:Federated learning (FL) can be used to improve data privacy and efficiency in magnetic resonance (MR) image reconstruction by enabling multiple institutions to collaborate without needing to aggregate local data. However, the domain shift caused by different MR imaging protocols can substantially degrade the performance of FL models. Recent FL techniques tend to solve this by enhancing the generalization of the global model, but they ignore the domain-specific features, which may contain important information about the device properties and be useful for local reconstruction. In this paper, we propose a specificity-preserving FL algorithm for MR image reconstruction (FedMRI). The core idea is to divide the MR reconstruction model into two parts: a globally shared encoder to obtain a generalized representation at the global level, and a client-specific decoder to preserve the domain-specific properties of each client, which is important for collaborative reconstruction when the clients have unique distributions. Moreover, to further boost the convergence of the globally shared encoder when a domain shift is present, a weighted contrastive regularization is introduced to directly correct any deviation between the client and server during optimization. Extensive experiments demonstrate that our FedMRI's reconstructed results are the closest to the ground-truth for multi-institutional data, and that it outperforms state-of-the-art FL methods.
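The split between a shared encoder and client-specific decoders can be sketched as a server-side aggregation step that averages only the encoder parameters. This is a minimal illustration under stated assumptions, not the FedMRI implementation: parameters are plain lists of floats, the `encoder.` and `decoder.` name prefixes are hypothetical, the averaging is an ordinary weighted mean, and the weighted contrastive regularization is omitted.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def aggregate_shared_encoder(clients: List[Params],
                             weights: List[float]) -> Params:
    """Weighted average of the parameters whose names start with 'encoder.'."""
    total = sum(weights)
    shared: Params = {}
    for name in clients[0]:
        if not name.startswith("encoder."):
            continue  # decoder parameters never leave the client
        size = len(clients[0][name])
        shared[name] = [
            sum(w * c[name][i] for c, w in zip(clients, weights)) / total
            for i in range(size)
        ]
    return shared


def broadcast(clients: List[Params], shared: Params) -> None:
    """Overwrite each client's encoder with the aggregated one, in place."""
    for client in clients:
        for name, value in shared.items():
            client[name] = list(value)


# Two toy clients with one encoder tensor and one decoder tensor each.
clients = [
    {"encoder.w": [1.0, 2.0], "decoder.w": [10.0]},
    {"encoder.w": [3.0, 6.0], "decoder.w": [20.0]},
]
shared = aggregate_shared_encoder(clients, weights=[1.0, 1.0])
broadcast(clients, shared)
```

After one round both clients hold the same encoder values, while each decoder keeps its local values.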

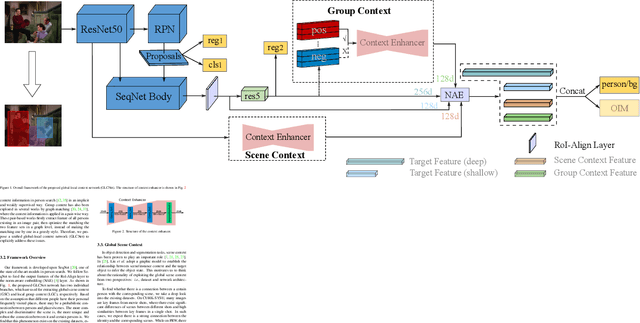

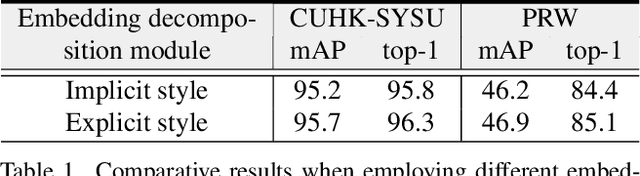

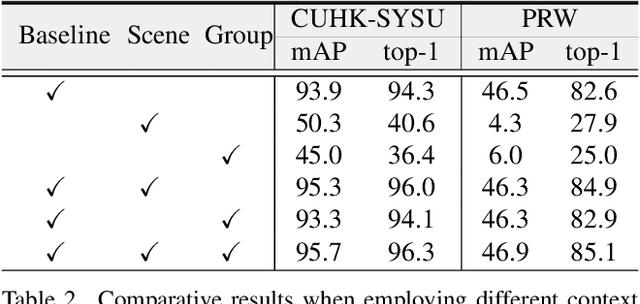

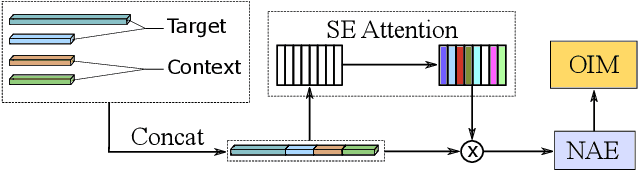

Global-Local Context Network for Person Search

Dec 05, 2021

Abstract:Person search aims to jointly localize and identify a query person from natural, uncropped images, which has been actively studied in the computer vision community over the past few years. In this paper, we delve into the rich context information globally and locally surrounding the target person, which we refer to as scene and group context, respectively. Unlike previous works that treat the two types of context individually, we exploit them in a unified global-local context network (GLCNet) with the intuitive aim of feature enhancement. Specifically, re-ID embeddings and context features are enhanced simultaneously in a multi-stage fashion, ultimately leading to enhanced, discriminative features for person search. We conduct experiments on two person search benchmarks (i.e., CUHK-SYSU and PRW) and extend our approach to a more challenging setting (i.e., character search on MovieNet). Extensive experimental results demonstrate the consistent improvement of the proposed GLCNet over the state-of-the-art methods on the three datasets. Our source code, pre-trained models, and the new setting for character search are available at: https://github.com/ZhengPeng7/GLCNet.

Multi-Task Neural Processes

Dec 02, 2021

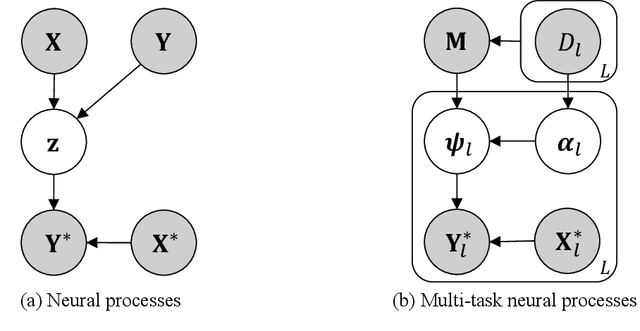

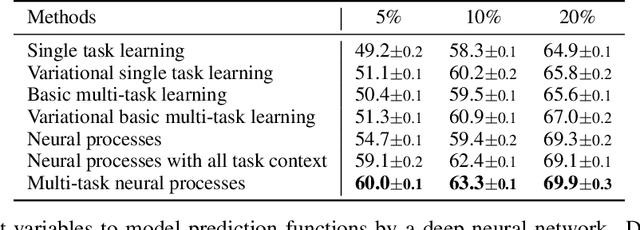

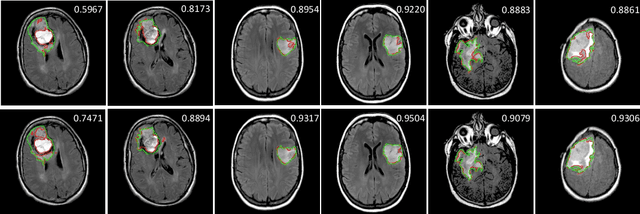

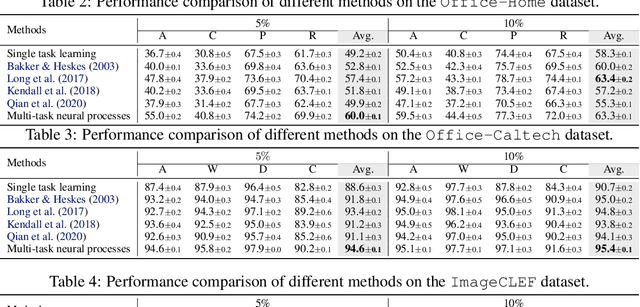

Abstract:Neural processes have recently emerged as a class of powerful neural latent variable models that combine the strengths of neural networks and stochastic processes. As they can encode contextual data in the network's function space, they offer a new way to model task relatedness in multi-task learning. To study their potential, we develop multi-task neural processes, a new variant of neural processes for multi-task learning. In particular, we propose to explore transferable knowledge from related tasks in the function space to provide inductive bias for improving each individual task. To do so, we derive the function priors in a hierarchical Bayesian inference framework, which enables each task to incorporate the shared knowledge provided by related tasks into its context of the prediction function. Our multi-task neural processes methodologically expand the scope of vanilla neural processes and provide a new way of exploring task relatedness in function spaces for multi-task learning. The proposed multi-task neural processes are capable of learning multiple tasks with limited labeled data and in the presence of domain shift. We perform extensive experimental evaluations on several benchmarks for the multi-task regression and classification tasks. The results demonstrate the effectiveness of multi-task neural processes in transferring useful knowledge among tasks for multi-task learning and superior performance in multi-task classification and brain image segmentation.

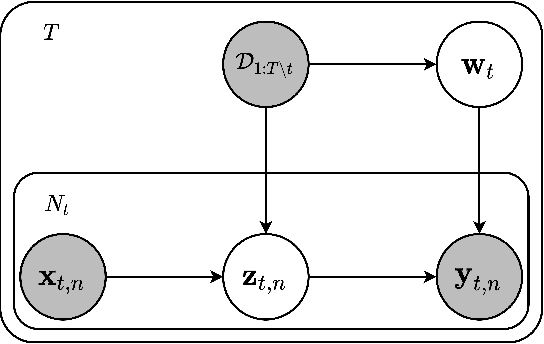

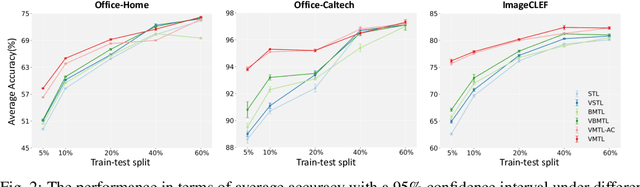

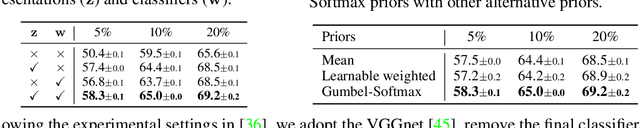

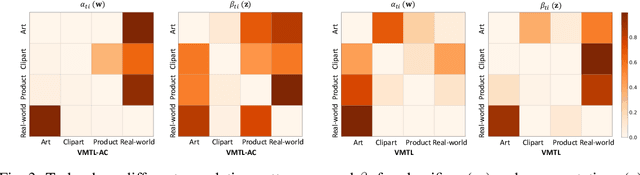

Variational Multi-Task Learning with Gumbel-Softmax Priors

Nov 09, 2021

Abstract:Multi-task learning aims to explore task relatedness to improve individual tasks, which is of particular significance in the challenging scenario that only limited data is available for each task. To tackle this challenge, we propose variational multi-task learning (VMTL), a general probabilistic inference framework for learning multiple related tasks. We cast multi-task learning as a variational Bayesian inference problem, in which task relatedness is explored in a unified manner by specifying priors. To incorporate shared knowledge into each task, we design the prior of a task to be a learnable mixture of the variational posteriors of other related tasks, which is learned by the Gumbel-Softmax technique. In contrast to previous methods, our VMTL can exploit task relatedness for both representations and classifiers in a principled way by jointly inferring their posteriors. This enables individual tasks to fully leverage inductive biases provided by related tasks, therefore improving the overall performance of all tasks. Experimental results demonstrate that the proposed VMTL is able to effectively tackle a variety of challenging multi-task learning settings with limited training data for both classification and regression. Our method consistently surpasses previous methods, including strong Bayesian approaches, and achieves state-of-the-art performance on five benchmark datasets.
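For readers unfamiliar with the Gumbel-Softmax technique mentioned above, the following is a minimal sketch of how it draws relaxed mixture weights from logits. It shows the sampling step only. How VMTL parameterizes the logits and uses the weights inside the prior is not covered here, and the function name, the temperature `tau`, and the example logits are assumptions for illustration.

```python
import math
import random
from typing import List


def gumbel_softmax(logits: List[float], tau: float = 1.0,
                   rng=random) -> List[float]:
    """Draw one relaxed one-hot sample: softmax((logits + Gumbel noise) / tau)."""
    noisy = []
    for logit in logits:
        # Clamp the uniform draw away from 0 and 1 so both logs are finite.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        gumbel = -math.log(-math.log(u))  # Gumbel(0, 1) noise
        noisy.append((logit + gumbel) / tau)
    # Numerically stable softmax.
    m = max(noisy)
    exps = [math.exp(v - m) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits over three related tasks; the sample gives mixing weights.
mixing_weights = gumbel_softmax([0.5, 1.0, -0.3], tau=0.5,
                                rng=random.Random(0))
```

A lower `tau` pushes the sample closer to a one-hot vector, while a higher `tau` spreads the weight more evenly across components.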

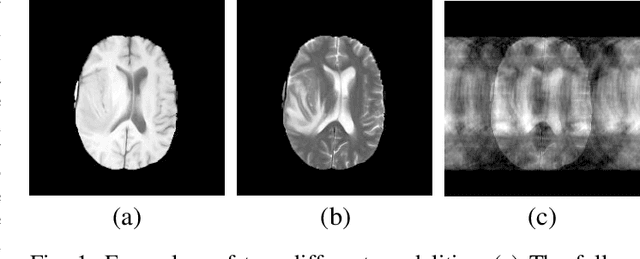

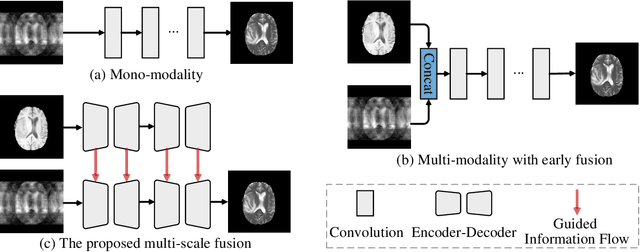

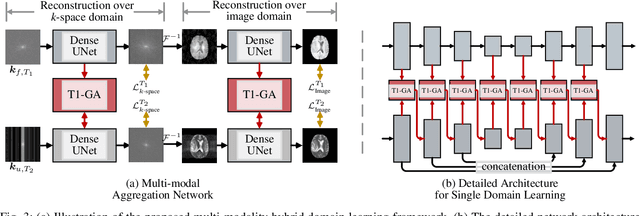

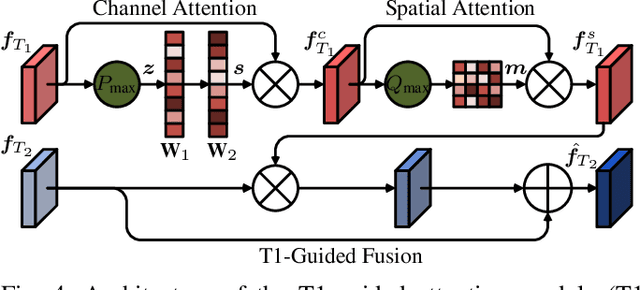

Multi-modal Aggregation Network for Fast MR Imaging

Oct 20, 2021

Abstract:Magnetic resonance (MR) imaging is a commonly used scanning technique for disease detection, diagnosis and treatment monitoring. Although it is able to produce detailed images of organs and tissues with better contrast, it suffers from a long acquisition time, which makes the image quality vulnerable to, for example, motion artifacts. Recently, many approaches have been developed to reconstruct full-sampled images from partially observed measurements in order to accelerate MR imaging. However, most of these efforts focus on reconstruction over a single modality or simple fusion of multiple modalities, neglecting the discovery of correlation knowledge at different feature levels. In this work, we propose a novel Multi-modal Aggregation Network, named MANet, which is capable of discovering complementary representations from a fully sampled auxiliary modality, with which to hierarchically guide the reconstruction of a given target modality. In our MANet, the representations from the fully sampled auxiliary and undersampled target modalities are learned independently through a specific network. Then, a guided attention module is introduced in each convolutional stage to selectively aggregate multi-modal features for better reconstruction, yielding comprehensive, multi-scale, multi-modal feature fusion. Moreover, our MANet follows a hybrid domain learning framework, which allows it to simultaneously recover the frequency signal in the $k$-space domain as well as restore the image details from the image domain. Extensive experiments demonstrate the superiority of the proposed approach over state-of-the-art MR image reconstruction methods.

HSVA: Hierarchical Semantic-Visual Adaptation for Zero-Shot Learning

Oct 08, 2021

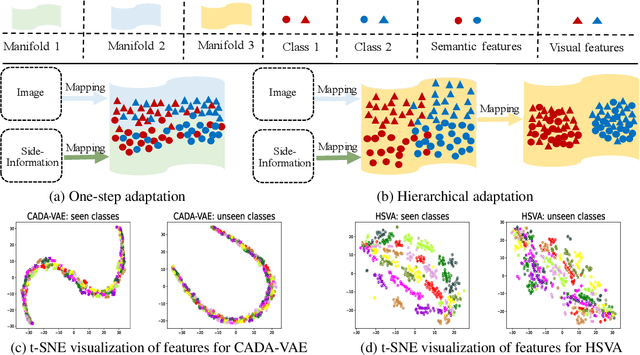

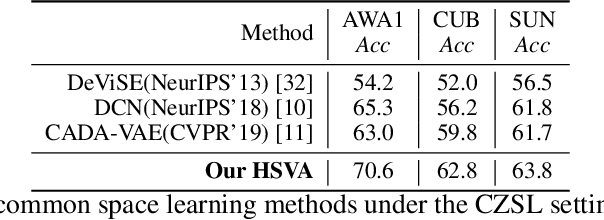

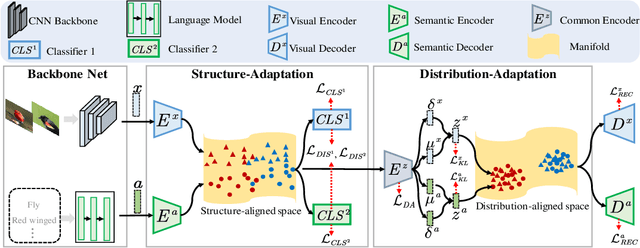

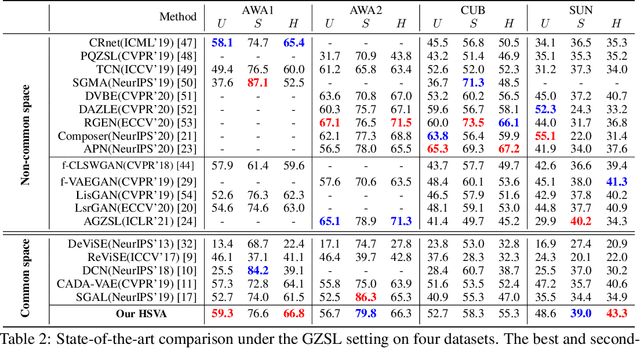

Abstract:Zero-shot learning (ZSL) tackles the unseen class recognition problem, transferring semantic knowledge from seen classes to unseen ones. Typically, to guarantee desirable knowledge transfer, a common (latent) space is adopted for associating the visual and semantic domains in ZSL. However, existing common space learning methods align the semantic and visual domains by merely mitigating distribution disagreement through one-step adaptation. This strategy is usually ineffective due to the heterogeneous nature of the feature representations in the two domains, which intrinsically contain both distribution and structure variations. To address this and advance ZSL, we propose a novel hierarchical semantic-visual adaptation (HSVA) framework. Specifically, HSVA aligns the semantic and visual domains by adopting a hierarchical two-step adaptation, i.e., structure adaptation and distribution adaptation. In the structure adaptation step, we take two task-specific encoders to encode the source data (visual domain) and the target data (semantic domain) into a structure-aligned common space. To this end, a supervised adversarial discrepancy (SAD) module is proposed to adversarially minimize the discrepancy between the predictions of two task-specific classifiers, thus making the visual and semantic feature manifolds more closely aligned. In the distribution adaptation step, we directly minimize the Wasserstein distance between the latent multivariate Gaussian distributions to align the visual and semantic distributions using a common encoder. Finally, the structure and distribution adaptation are derived in a unified framework under two partially-aligned variational autoencoders. Extensive experiments on four benchmark datasets demonstrate that HSVA achieves superior performance on both conventional and generalized ZSL. The code is available at https://github.com/shiming-chen/HSVA.
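The distribution adaptation step relies on a Wasserstein distance between Gaussians, which has a closed form. The sketch below computes the squared 2-Wasserstein distance under the assumption that both Gaussians have diagonal covariance, as is common for variational autoencoder latents. The abstract does not state this, so the diagonal assumption and the function name are illustrative, not taken from the HSVA code.

```python
from typing import List


def w2_squared_diag_gaussians(mu1: List[float], sigma1: List[float],
                              mu2: List[float], sigma2: List[float]) -> float:
    """Squared 2-Wasserstein distance between N(mu1, diag(sigma1^2)) and
    N(mu2, diag(sigma2^2)).

    For diagonal covariances the closed form reduces to
    ||mu1 - mu2||^2 + ||sigma1 - sigma2||^2, with sigma the standard deviations.
    """
    mean_term = sum((a - b) ** 2 for a, b in zip(mu1, mu2))
    std_term = sum((a - b) ** 2 for a, b in zip(sigma1, sigma2))
    return mean_term + std_term


# Hypothetical visual and semantic latent distributions in a 2-D common space.
visual = ([0.0, 0.0], [1.0, 1.0])
semantic = ([3.0, 4.0], [1.0, 1.0])
distance_sq = w2_squared_diag_gaussians(*visual, *semantic)
```

With equal standard deviations the distance reduces to the squared distance between the means, so the example evaluates to 25.0.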

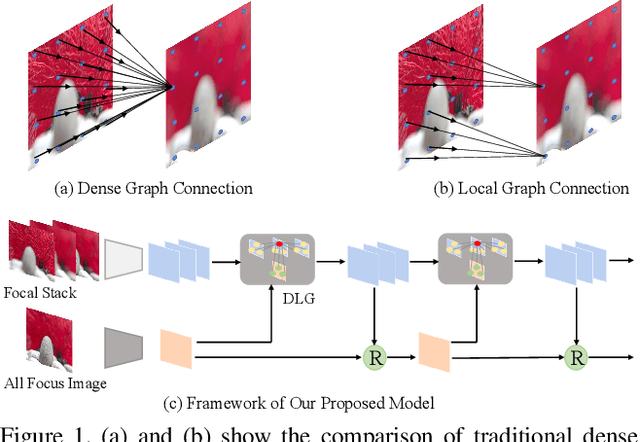

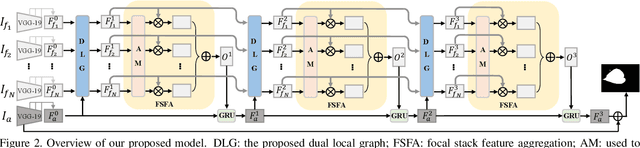

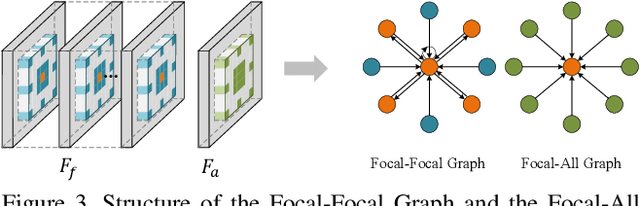

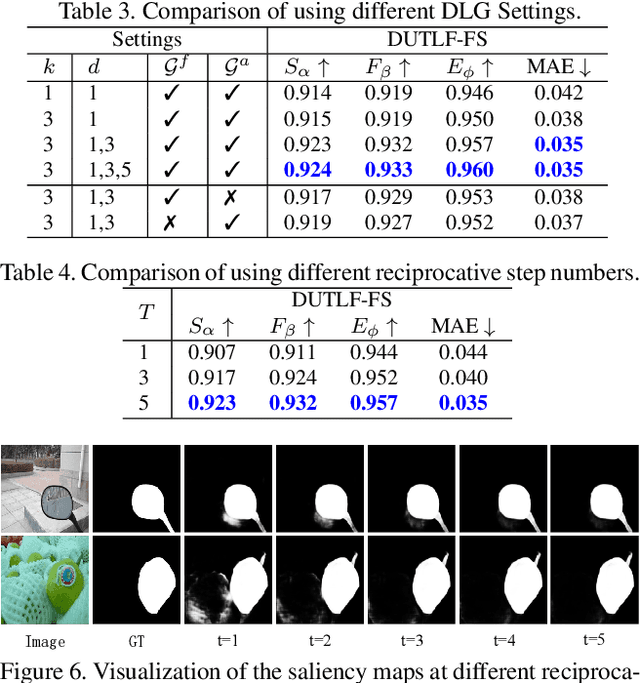

Light Field Saliency Detection with Dual Local Graph Learning and Reciprocative Guidance

Oct 02, 2021

Abstract:The application of light field data in salient object detection is becoming increasingly popular recently. The difficulty lies in how to effectively fuse the features within the focal stack and how to cooperate them with the feature of the all-focus image. Previous methods usually fuse focal stack features via convolution or ConvLSTM, which are both less effective and ill-posed. In this paper, we model the information fusion within focal stack via graph networks. They introduce powerful context propagation from neighbouring nodes and also avoid ill-posed implementations. On the one hand, we construct local graph connections thus avoiding prohibitive computational costs of traditional graph networks. On the other hand, instead of processing the two kinds of data separately, we build a novel dual graph model to guide the focal stack fusion process using all-focus patterns. To handle the second difficulty, previous methods usually implement one-shot fusion for focal stack and all-focus features, hence lacking a thorough exploration of their supplements. We introduce a reciprocative guidance scheme and enable mutual guidance between these two kinds of information at multiple steps. As such, both kinds of features can be enhanced iteratively, finally benefiting the saliency prediction. Extensive experimental results show that the proposed models are all beneficial and we achieve significantly better results than state-of-the-art methods.

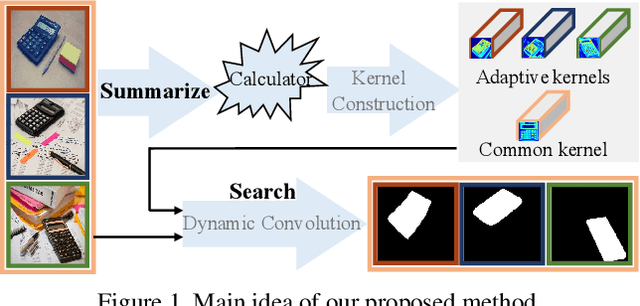

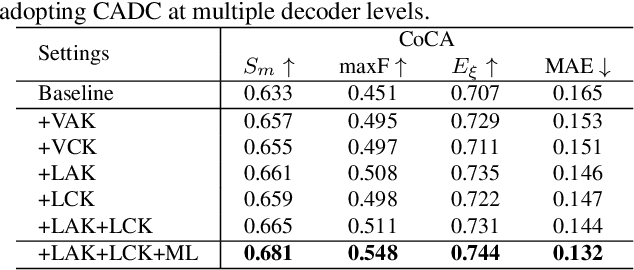

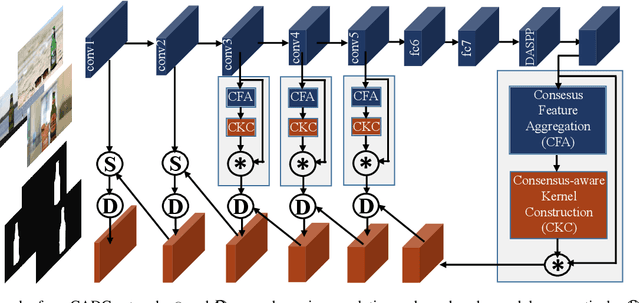

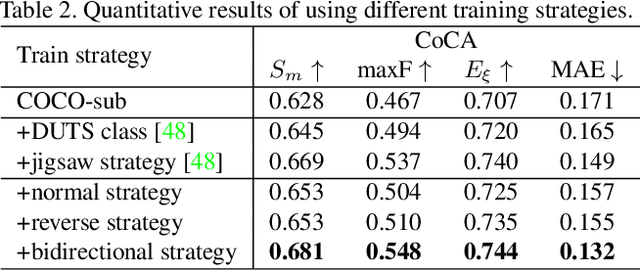

Summarize and Search: Learning Consensus-aware Dynamic Convolution for Co-Saliency Detection

Oct 01, 2021

Abstract:Humans perform co-saliency detection by first summarizing the consensus knowledge in the whole group and then searching corresponding objects in each image. Previous methods usually lack robustness, scalability, or stability for the first process and simply fuse consensus features with image features for the second process. In this paper, we propose a novel consensus-aware dynamic convolution model to explicitly and effectively perform the "summarize and search" process. To summarize consensus image features, we first summarize robust features for every single image using an effective pooling method and then aggregate cross-image consensus cues via the self-attention mechanism. By doing this, our model meets the scalability and stability requirements. Next, we generate dynamic kernels from consensus features to encode the summarized consensus knowledge. Two kinds of kernels are generated in a supplementary way to summarize fine-grained image-specific consensus object cues and the coarse group-wise common knowledge, respectively. Then, we can effectively perform object searching by employing dynamic convolution at multiple scales. Besides, a novel and effective data synthesis method is also proposed to train our network. Experimental results on four benchmark datasets verify the effectiveness of our proposed method. Our code and saliency maps are available at https://github.com/nnizhang/CADC.

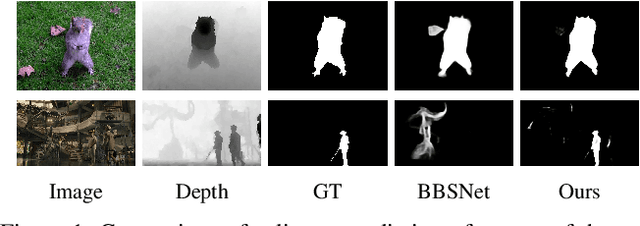

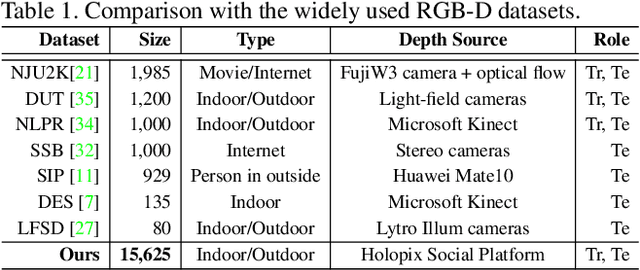

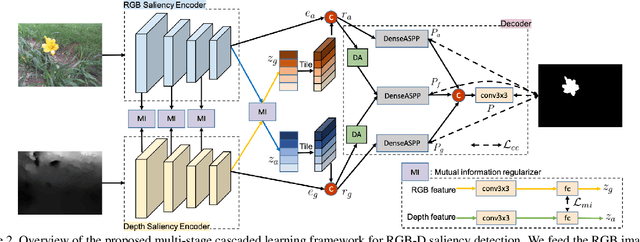

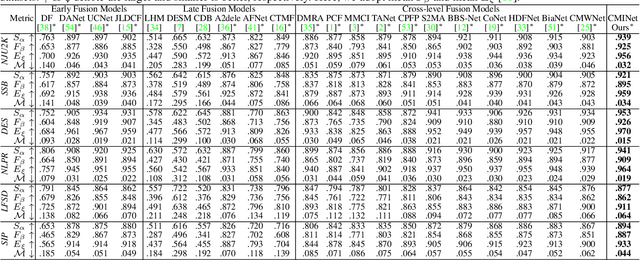

RGB-D Saliency Detection via Cascaded Mutual Information Minimization

Sep 15, 2021

Abstract:Existing RGB-D saliency detection models do not explicitly encourage RGB and depth to achieve effective multi-modal learning. In this paper, we introduce a novel multi-stage cascaded learning framework via mutual information minimization to "explicitly" model the multi-modal information between RGB image and depth data. Specifically, we first map the feature of each modality to a lower dimensional feature vector, and adopt mutual information minimization as a regularizer to reduce the redundancy between appearance features from RGB and geometric features from depth. We then perform multi-stage cascaded learning to impose the mutual information minimization constraint at every stage of the network. Extensive experiments on benchmark RGB-D saliency datasets illustrate the effectiveness of our framework. Further, to promote the development of this field, we contribute the largest (7x larger than NJU2K) dataset, which contains 15,625 image pairs with high quality polygon-/scribble-/object-/instance-/rank-level annotations. Based on these rich labels, we additionally construct four new benchmarks with strong baselines and observe some interesting phenomena, which can motivate future model design. Source code and dataset are available at https://github.com/JingZhang617/cascaded_rgbd_sod.
