Li Wang

Northeast Normal University

Domain Adaptation Using Class Similarity for Robust Speech Recognition

Nov 05, 2020

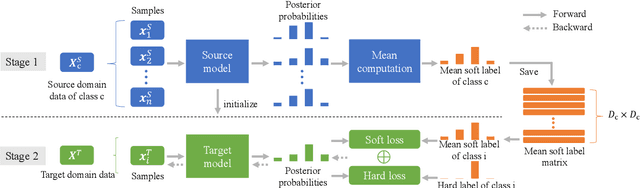

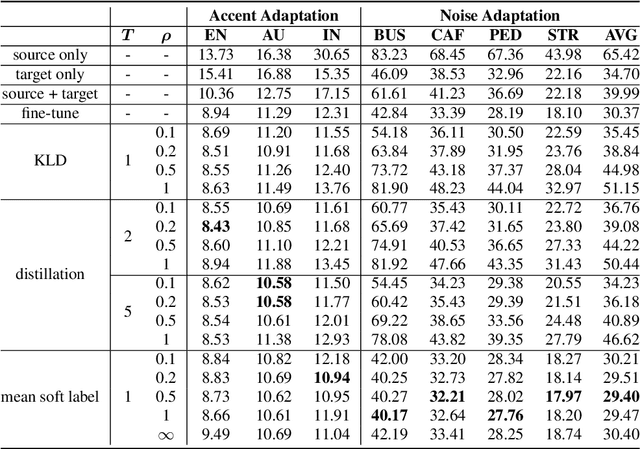

Abstract: When only limited target-domain data is available, domain adaptation can improve the performance of a deep neural network (DNN) acoustic model by leveraging a well-trained source model together with the target-domain data. However, domain mismatch and data sparsity make domain adaptation very challenging. This paper proposes a novel adaptation method for DNN acoustic models using class similarity. Because the output distribution of a DNN model encodes knowledge of the similarity among classes, and this knowledge applies to both the source and target domains, it can be transferred from the source model to the target model to improve performance. In our approach, we first compute the frame-level posterior probabilities of source samples using the source model. Then, for each class, the posterior probabilities of the samples of that class are used to compute a mean vector, which we refer to as the mean soft label. During adaptation, these mean soft labels are used in a regularization term to train the target model. Experiments show that our approach outperforms fine-tuning with one-hot labels on both accent and noise adaptation tasks, especially when the source and target domains are highly mismatched.
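The mean-soft-label step described in this abstract can be sketched roughly as follows. This is an illustrative sketch only, not the authors' implementation: the function names, the one-hot fallback for classes with no frames, the interpolation weight `lam`, and the choice of cross-entropy as the regularization term are all assumptions, since the abstract does not specify them.

```python
import math


def mean_soft_labels(posteriors, labels, num_classes):
    """Average the source-model posterior vectors of the frames in each class.

    posteriors: list of per-frame probability vectors from the source model.
    labels:     list of per-frame class indices (same length as posteriors).
    """
    sums = [[0.0] * num_classes for _ in range(num_classes)]
    counts = [0] * num_classes
    for probs, label in zip(posteriors, labels):
        counts[label] += 1
        for k, p in enumerate(probs):
            sums[label][k] += p
    soft = []
    for c in range(num_classes):
        if counts[c] == 0:
            # Assumption: fall back to a one-hot label when a class has no frames.
            soft.append([1.0 if k == c else 0.0 for k in range(num_classes)])
        else:
            soft.append([s / counts[c] for s in sums[c]])
    return soft


def adaptation_loss(target_probs, label, soft_labels, lam=0.5, eps=1e-12):
    """Hypothetical loss: one-hot cross-entropy plus a soft-label regularizer."""
    hard = -math.log(max(target_probs[label], eps))
    soft = -sum(
        s * math.log(max(q, eps))
        for s, q in zip(soft_labels[label], target_probs)
    )
    return (1.0 - lam) * hard + lam * soft


# Toy usage: three source frames, two classes.
source_posteriors = [[0.8, 0.2], [0.6, 0.4], [0.1, 0.9]]
source_labels = [0, 0, 1]
soft_labels = mean_soft_labels(source_posteriors, source_labels, num_classes=2)
loss = adaptation_loss([0.5, 0.5], label=0, soft_labels=soft_labels)
```

Setting `lam=0` in this sketch recovers plain fine-tuning with one-hot labels, which is the baseline the abstract compares against.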

Multi-Accent Adaptation based on Gate Mechanism

Nov 05, 2020

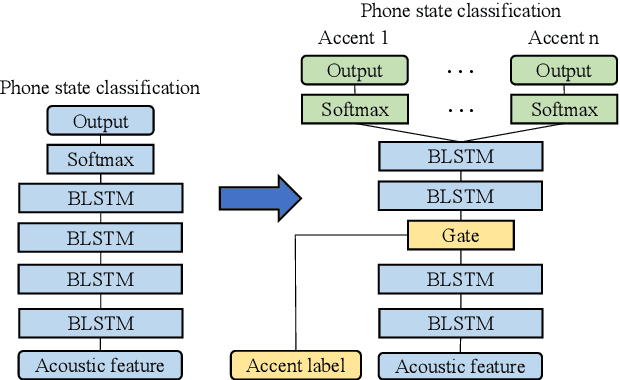

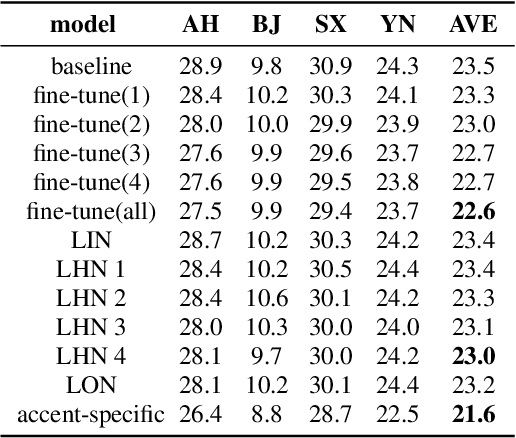

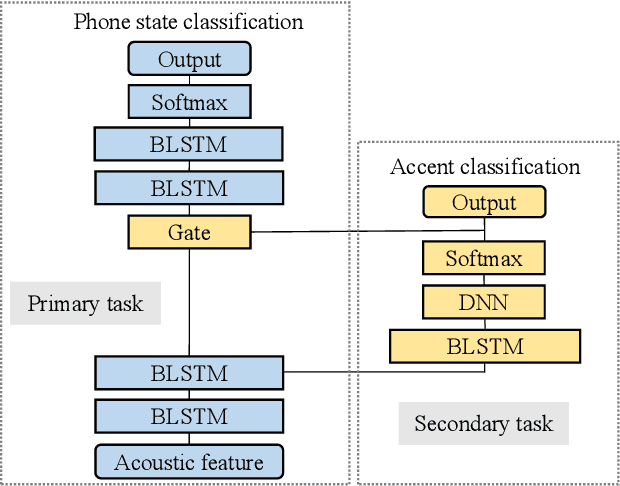

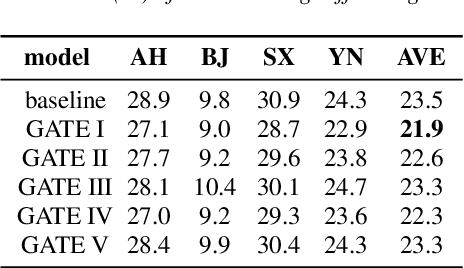

Abstract: When only a limited amount of accented speech data is available, the conventional approach to improving multi-accent speech recognition performance is accent-specific adaptation, which adapts the baseline model to multiple target accents independently. To simplify the adaptation procedure, we explore adapting the baseline model to multiple target accents simultaneously with multi-accent mixed data. To this end, we propose using an accent-specific top layer with a gate mechanism (AST-G) to realize multi-accent adaptation. Compared with the baseline model and accent-specific adaptation, AST-G achieves 9.8% and 1.9% average relative WER reduction, respectively. However, in real-world applications, the accent category label is not available in advance at inference time. Therefore, we use an accent classifier to predict the accent label. To jointly train the acoustic model and the accent classifier, we propose multi-task learning with a gate mechanism (MTL-G). Because the accent label prediction can be inaccurate, MTL-G performs worse than accent-specific adaptation. Yet, in comparison with the baseline model, MTL-G achieves a 5.1% average relative WER reduction.

Orthogonal Multi-view Analysis by Successive Approximations via Eigenvectors

Oct 04, 2020

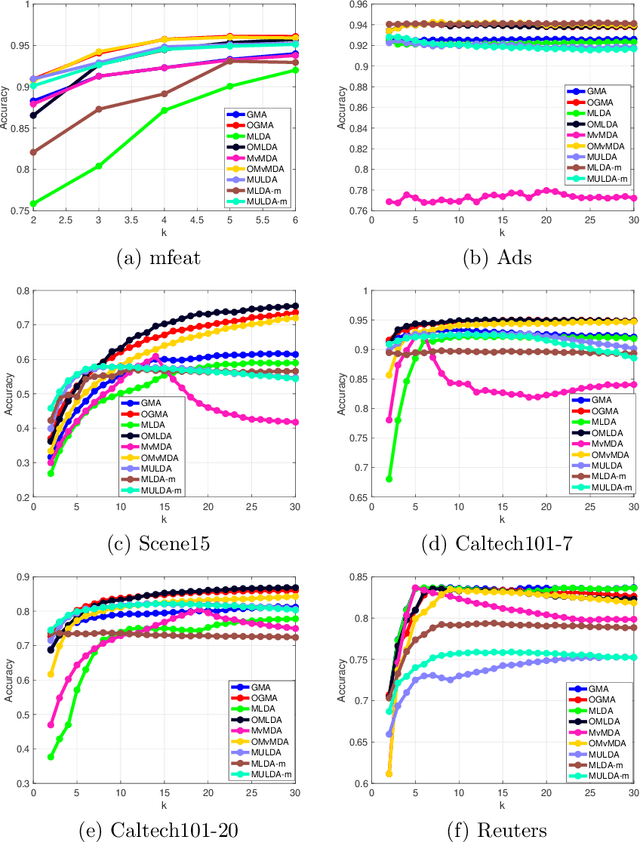

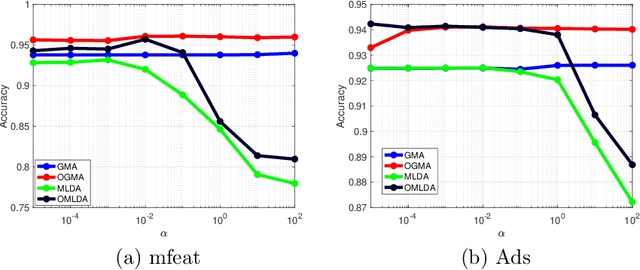

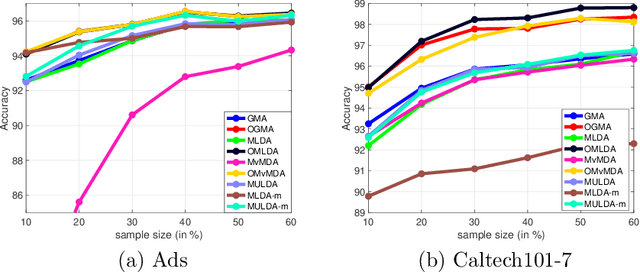

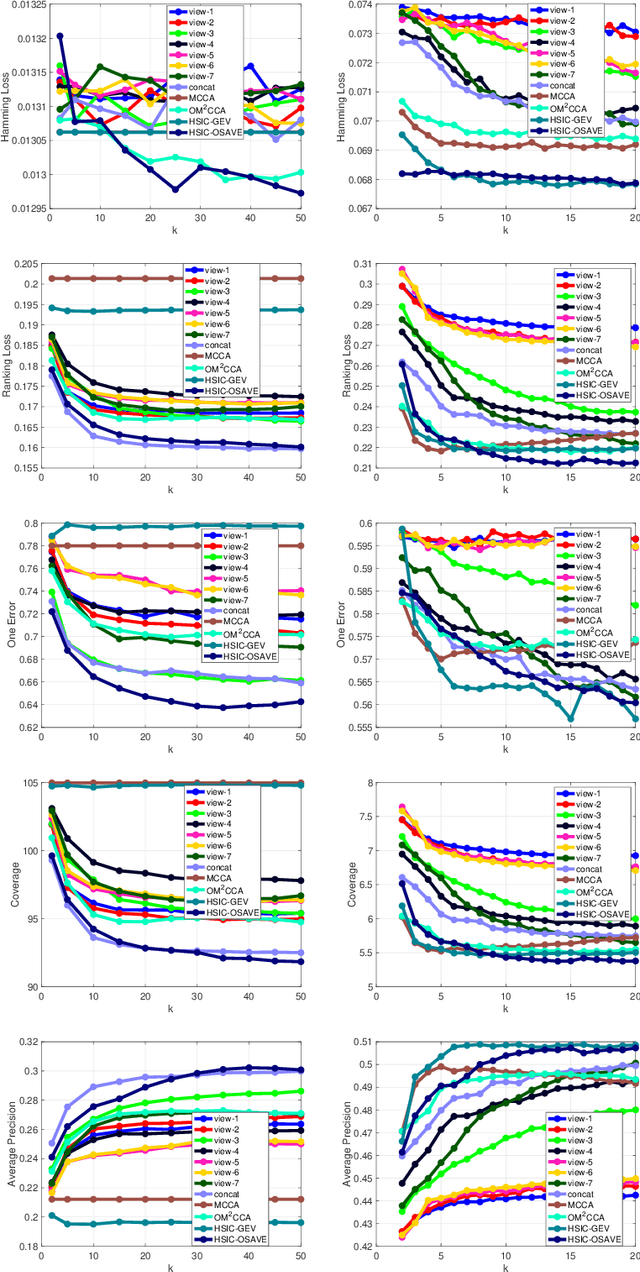

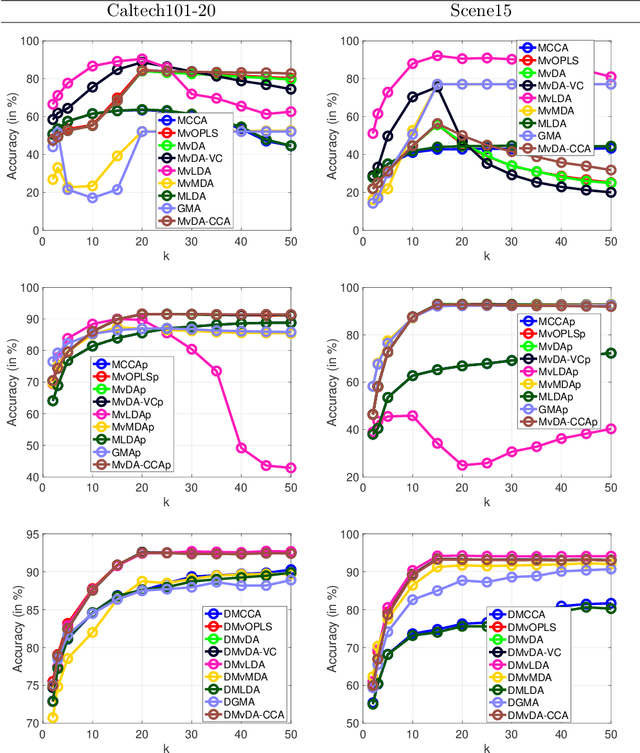

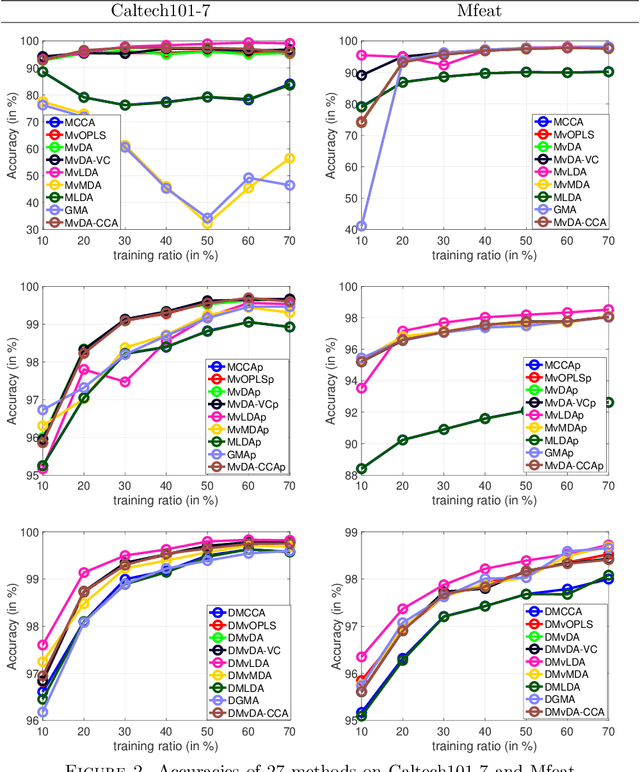

Abstract: We propose a unified framework for multi-view subspace learning to learn individual orthogonal projections for all views. The framework integrates the correlations within multiple views, supervised discriminant capacity, and distance preservation in a concise and compact way. It not only includes several existing models as special cases, but also inspires new models. To demonstrate its versatility in handling different learning scenarios, we showcase three new multi-view discriminant analysis models and two new multi-view multi-label classification models under this framework. An efficient numerical method based on successive approximations via eigenvectors is presented to solve the associated optimization problem. The method is built upon an iterative Krylov subspace method, which can easily scale up to high-dimensional datasets. Extensive experiments are conducted on various real-world datasets for multi-view discriminant analysis and multi-view multi-label classification. The experimental results demonstrate that the proposed models are consistently competitive with, and often better than, the compared methods that do not learn orthogonal projections.

Privacy-preserving Transfer Learning via Secure Maximum Mean Discrepancy

Oct 03, 2020

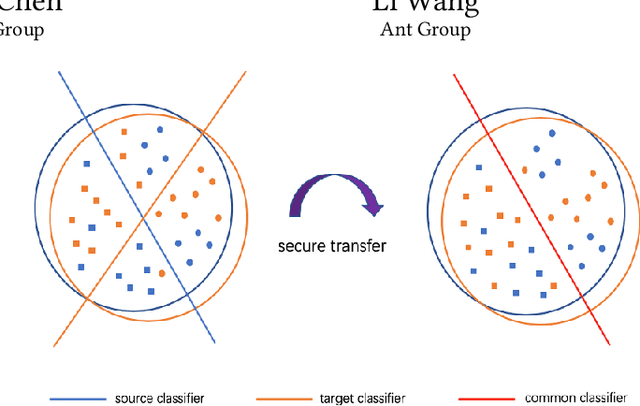

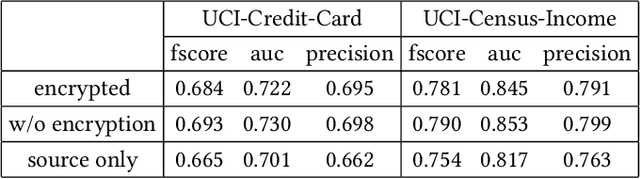

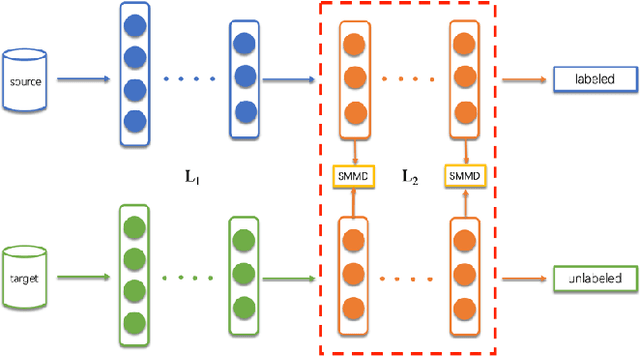

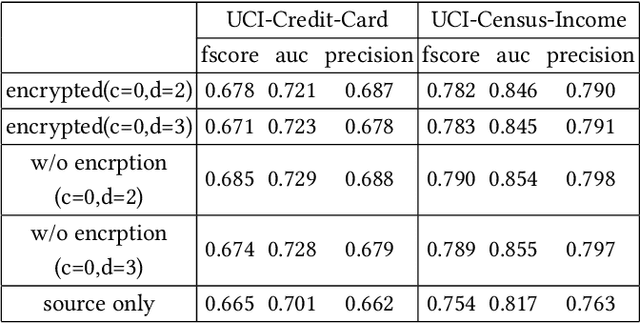

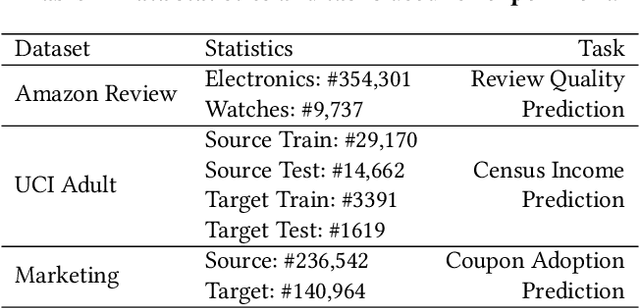

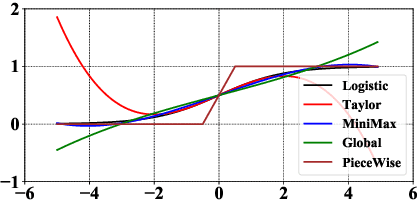

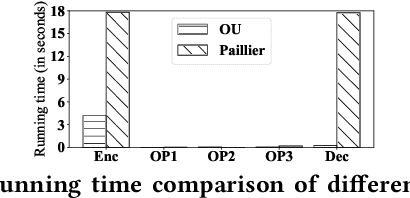

Abstract: The success of machine learning algorithms often relies on a large amount of high-quality data to train well-performing models. However, data is a valuable resource and, in reality, is held by different parties. An effective solution to such a data isolation problem is to employ federated learning, which allows multiple parties to collaboratively train a model. In this paper, we propose a secure version of the widely used Maximum Mean Discrepancy (SMMD), based on homomorphic encryption, to enable effective knowledge transfer under the data federation setting without compromising data privacy. The proposed SMMD avoids the potential information leakage in transfer learning when aligning the source and target data distributions. As a result, both the source domain and the target domain can fully utilize their data to build more scalable models. Experimental results demonstrate that our proposed SMMD is secure and effective.
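For orientation, the plaintext quantity that a secure MMD would protect can be sketched as below. This is a minimal sketch under stated assumptions: it uses a linear kernel, for which the squared MMD reduces to the squared distance between the two feature means, and it involves no encryption at all. The kernel choice and the function names are assumptions; the abstract does not state which kernel SMMD uses or how the homomorphic encryption is applied.

```python
def mean_vector(rows):
    """Column-wise mean of a list of equal-length feature vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def linear_mmd2(source, target):
    """Squared MMD with a linear kernel: ||mean(source) - mean(target)||^2."""
    mu_s = mean_vector(source)
    mu_t = mean_vector(target)
    return sum((a - b) ** 2 for a, b in zip(mu_s, mu_t))


# Toy usage: two small "domains" of 2-dimensional features.
source_features = [[0.0, 0.0], [2.0, 2.0]]
target_features = [[1.0, 1.0], [3.0, 3.0]]
gap = linear_mmd2(source_features, target_features)
```

In a federated setting, each party would hold only one of the two feature sets, which is why computing this alignment term without revealing the raw features requires a secure protocol.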

A Comprehensive Analysis of Information Leakage in Deep Transfer Learning

Sep 04, 2020

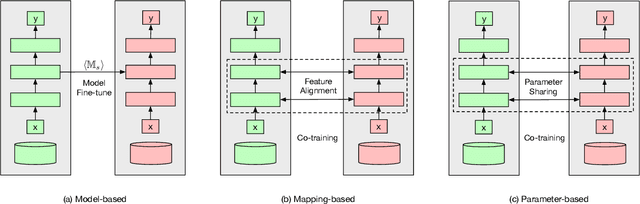

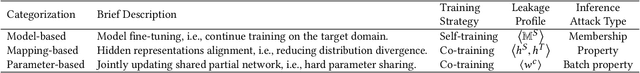

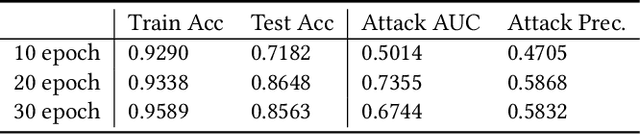

Abstract: Transfer learning is widely used for transferring knowledge from a source domain to a target domain where labeled data is scarce. Recently, deep transfer learning has achieved remarkable progress in various applications. However, in many real-world scenarios the source and target datasets belong to two different organizations, which raises potential privacy issues in deep transfer learning. In this study, to thoroughly analyze the potential privacy leakage in deep transfer learning, we first divide previous methods into three categories. Based on that, we demonstrate specific threats that lead to unintentional privacy leakage in each category. We also provide some solutions to prevent these threats. To the best of our knowledge, our study is the first to provide a thorough analysis of the information leakage issues in deep transfer learning methods and to provide potential solutions. Extensive experiments on two public datasets and an industry dataset are conducted to show the privacy leakage under different deep transfer learning settings and the effectiveness of the defense solutions.

When Homomorphic Encryption Marries Secret Sharing: Secure Large-Scale Sparse Logistic Regression and Applications in Risk Control

Aug 20, 2020

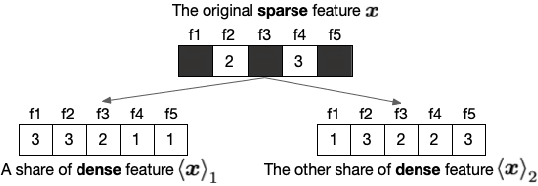

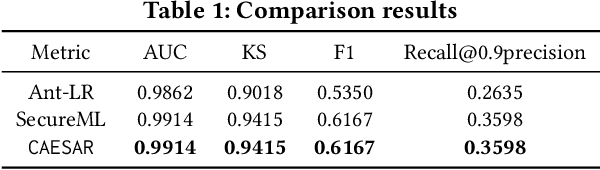

Abstract: Logistic Regression (LR) is the most widely used machine learning model in industry due to its efficiency, robustness, and interpretability. Meanwhile, given the problem of data isolation and the requirement for high model performance, building secure and efficient LR models for multiple parties has become a hot topic in both academia and industry. Existing works mainly employ either Homomorphic Encryption (HE) or Secret Sharing (SS) to build secure LR. HE-based methods can deal with high-dimensional sparse features, but they may suffer from potential security risks. In contrast, SS-based methods have provable security, but they are inefficient under high-dimensional sparse features. In this paper, we first present CAESAR, which combines HE and SS to build a seCure lArge-scalE SpArse logistic Regression model and thus has the advantages of both efficiency and security. We then present a distributed implementation of CAESAR to meet the scalability requirement. Finally, we deploy CAESAR in a risk control task and conduct comprehensive experiments to study its efficiency.
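As background for the Secret Sharing (SS) building block mentioned in this abstract, a generic additive secret-sharing scheme can be sketched as follows. This is a textbook illustration, not the CAESAR protocol: the ring size `MODULUS`, the function names, and the two-party default are assumptions, and the sketch omits the homomorphic-encryption component and multiplication entirely.

```python
import secrets

# Assumption: shares live in the ring of integers modulo 2**64.
MODULUS = 2 ** 64


def share(value, parties=2):
    """Split an integer into additive shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def reconstruct(shares):
    """Recover the secret by summing all shares modulo MODULUS."""
    return sum(shares) % MODULUS


def add_shared(a_shares, b_shares):
    """Add two shared secrets; each party only adds its own two shares."""
    return [(a + b) % MODULUS for a, b in zip(a_shares, b_shares)]


# Toy usage: two parties jointly hold 10 and 32 and compute their sum.
x_shares = share(10)
y_shares = share(32)
total = reconstruct(add_shared(x_shares, y_shares))
```

Each individual share is uniformly random on its own, so a single party learns nothing about the secret; addition needs no communication, which is part of why SS is attractive for linear operations.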

Multi-Site Infant Brain Segmentation Algorithms: The iSeg-2019 Challenge

Jul 11, 2020

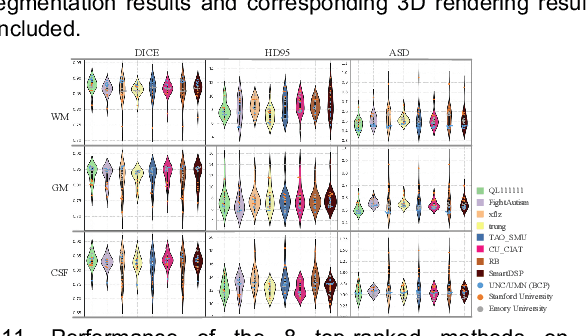

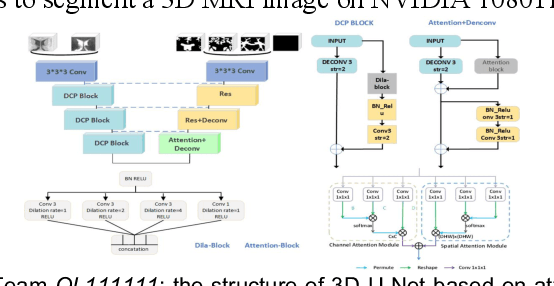

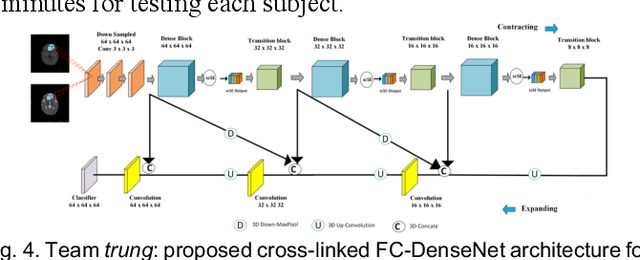

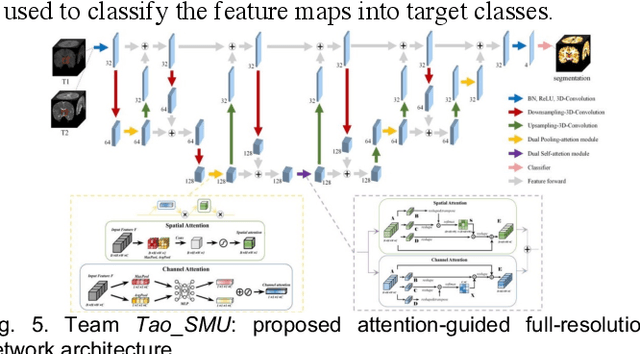

Abstract: To better understand early brain growth patterns in health and disorder, it is critical to accurately segment infant brain magnetic resonance (MR) images into white matter (WM), gray matter (GM), and cerebrospinal fluid (CSF). Deep learning-based methods have achieved state-of-the-art performance; however, one major limitation is that learning-based methods may suffer from the multi-site issue, that is, models trained on a dataset from one site may not be applicable to datasets acquired from other sites with different imaging protocols/scanners. To promote methodological development in the community, the iSeg-2019 challenge (http://iseg2019.web.unc.edu) provides a set of 6-month infant subjects from multiple sites with different protocols/scanners for the participating methods. Training/validation subjects are from UNC (MAP), and testing subjects are from UNC/UMN (BCP), Stanford University, and Emory University. At the time of writing, 30 automatic segmentation methods have participated in iSeg-2019. We review the 8 top-ranked teams by detailing their pipelines/implementations, presenting experimental results, and evaluating performance in terms of the whole brain, regions of interest, and gyral landmark curves. We also discuss their limitations and possible future directions for the multi-site issue. We hope that the multi-site dataset in iSeg-2019 and this review article will attract more researchers to the multi-site issue.

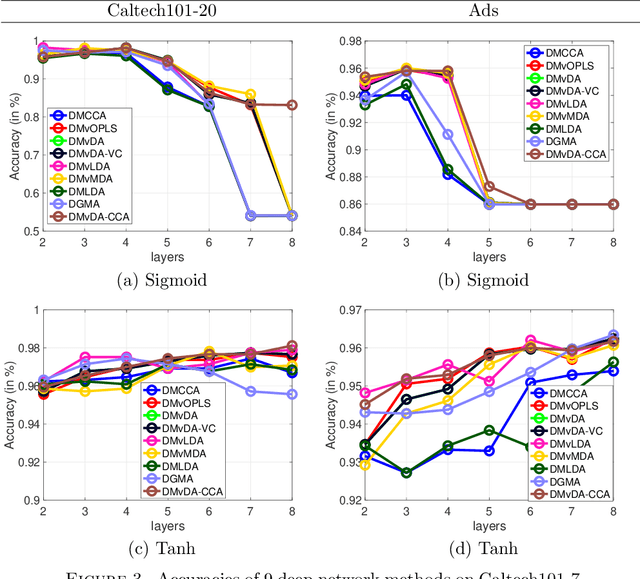

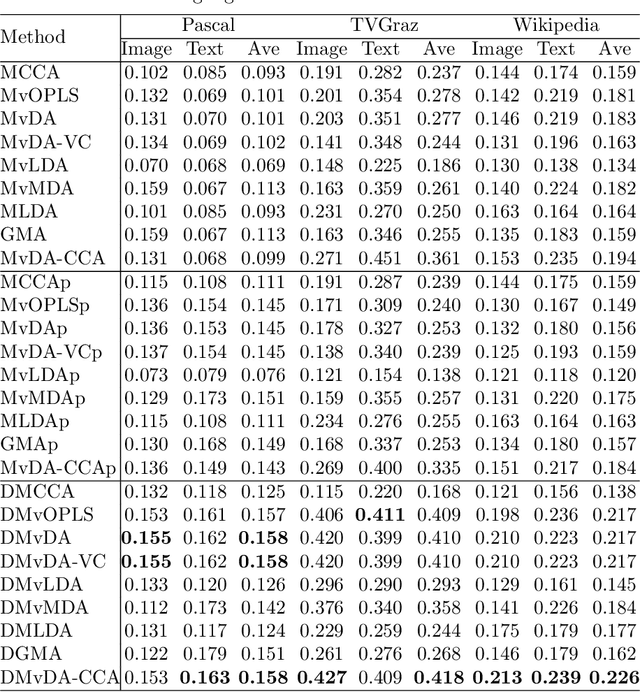

Multi-view Orthonormalized Partial Least Squares: Regularizations and Deep Extensions

Jul 09, 2020

Abstract: We establish a family of subspace-based learning methods for multi-view learning using least squares as the fundamental basis. Specifically, we investigate orthonormalized partial least squares (OPLS) and study its important properties for both multivariate regression and classification. Building on the least squares reformulation of OPLS, we propose a unified multi-view learning framework to learn a classifier over a common latent space shared by all views. The regularization technique is further leveraged to unleash the power of the proposed framework by providing three generic types of regularizers on its inherent ingredients, including model parameters, decision values, and latent projected points. We instantiate a set of regularizers in terms of various priors. With proper choices of regularizers, the proposed framework can not only recast existing methods but also inspire new models. To further improve the performance of the proposed framework on complex real problems, we propose to learn nonlinear transformations parameterized by deep networks. Extensive experiments are conducted to compare various methods on nine data sets with different numbers of views in terms of both feature extraction and cross-modal retrieval.

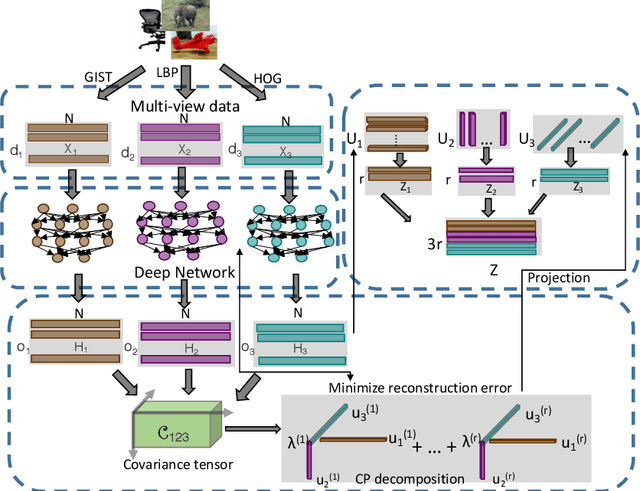

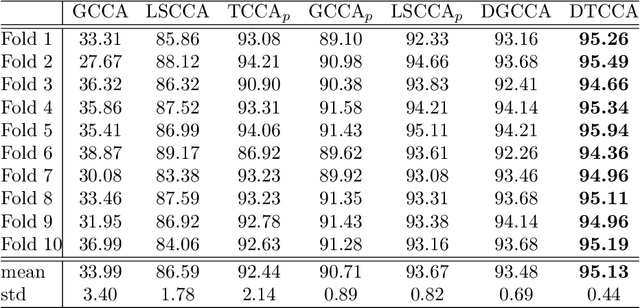

Deep Tensor CCA for Multi-view Learning

May 25, 2020

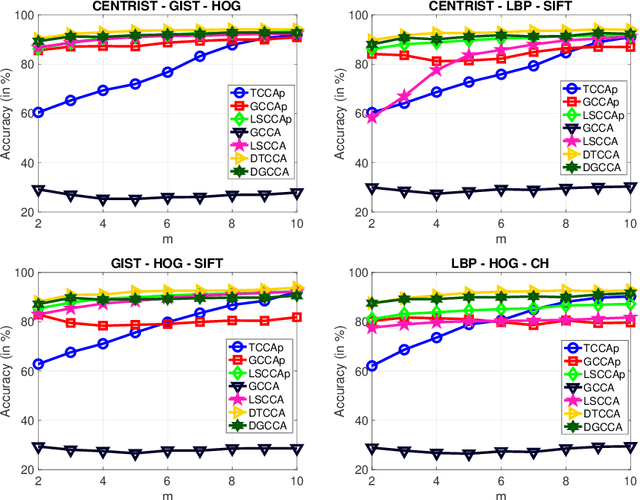

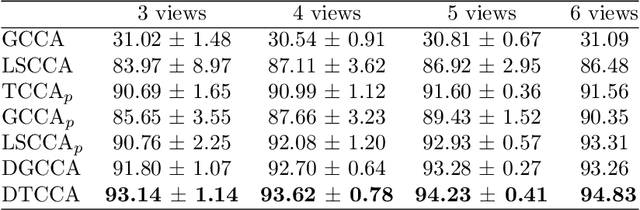

Abstract: We present Deep Tensor Canonical Correlation Analysis (DTCCA), a method to learn complex nonlinear transformations of multiple views (more than two) of data such that the resulting representations are linearly correlated in high order. The high-order correlation of the given views is modeled by a covariance tensor, which differs from most CCA formulations, which rely solely on pairwise correlations. The parameters of the transformations of each view are jointly learned by maximizing the high-order canonical correlation. To solve the resulting problem, we reformulate it as the best sum of rank-1 approximation, which can be efficiently solved by existing tensor decomposition methods. DTCCA is a nonlinear extension of tensor CCA (TCCA) via deep networks. The transformations of DTCCA are parametric functions, which are very different from the implicit mappings given by kernel functions. Compared with kernel TCCA, DTCCA not only can deal with arbitrary dimensions of the input data, but also does not need to retain the training data to compute representations of a given data point. Hence, DTCCA as a unified model can efficiently overcome the scalability issue of TCCA for either high-dimensional multi-view data or a large number of views, and it also naturally extends TCCA to learning nonlinear representations. Extensive experiments on three multi-view data sets demonstrate the effectiveness of the proposed method.
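To make the idea of a covariance tensor concrete, the sketch below computes a third-order covariance tensor for three views as the average outer product of the centered samples. It is an illustrative sketch only: the function names are hypothetical, the views here are raw features rather than outputs of deep networks, and the normalization by the number of samples is an assumption rather than a detail taken from the abstract.

```python
def center(view):
    """Subtract the column-wise mean from every sample of a view."""
    n = len(view)
    means = [sum(col) / n for col in zip(*view)]
    return [[v - m for v, m in zip(row, means)] for row in view]


def covariance_tensor(x1, x2, x3):
    """Third-order tensor C[i][j][k] = mean over samples of x1[i]*x2[j]*x3[k].

    Each argument is a list of samples (one list of floats per sample);
    all three views must contain the same number of samples.
    """
    x1, x2, x3 = center(x1), center(x2), center(x3)
    n = len(x1)
    d1, d2, d3 = len(x1[0]), len(x2[0]), len(x3[0])
    tensor = [[[0.0] * d3 for _ in range(d2)] for _ in range(d1)]
    for a, b, c in zip(x1, x2, x3):
        for i in range(d1):
            for j in range(d2):
                for k in range(d3):
                    tensor[i][j][k] += a[i] * b[j] * c[k] / n
    return tensor


# Toy usage: three views of two samples with dimensions 2, 3, and 1.
view_a = [[1.0, 2.0], [3.0, 4.0]]
view_b = [[1.0, 0.0, 2.0], [0.0, 1.0, 5.0]]
view_c = [[1.0], [2.0]]
cov = covariance_tensor(view_a, view_b, view_c)
```

With only two views this construction reduces to the ordinary cross-covariance matrix, which is the pairwise quantity that standard CCA formulations rely on.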

Privacy-Preserving Graph Neural Network for Node Classification

May 25, 2020

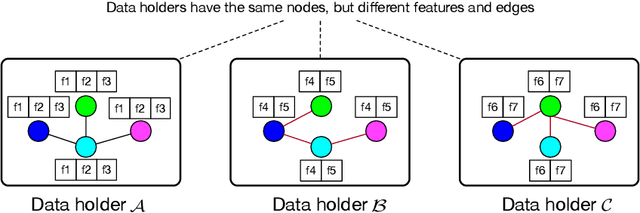

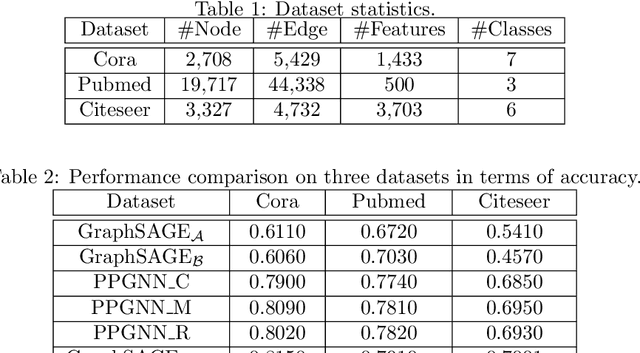

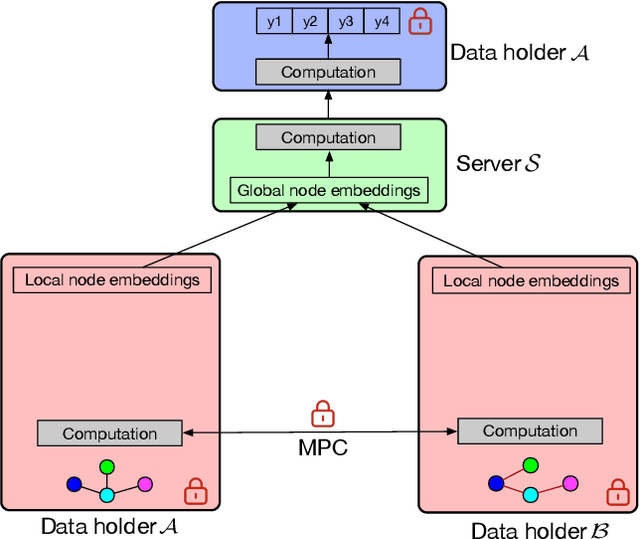

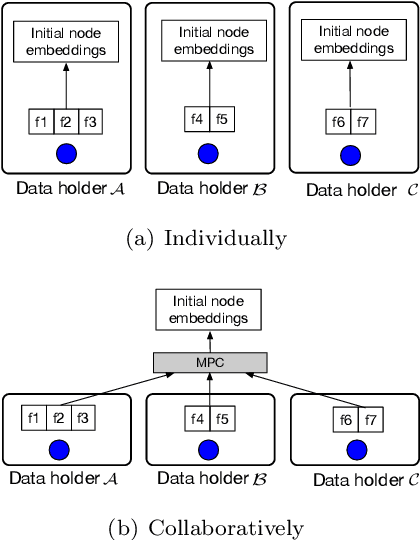

Abstract: Recently, Graph Neural Networks (GNNs) have achieved remarkable progress in various real-world tasks on graph data, which consists of node features and the adjacency information between different nodes. High-performance GNN models depend on both rich features and complete edge information in the graph. However, such information may be isolated by different data holders in practice, which is the so-called data isolation problem. To solve this problem, we propose a Privacy-Preserving GNN (PPGNN) learning paradigm for the node classification task, which can be generalized to existing GNN models. Specifically, we split the computation graph into two parts. We leave the computations related to private data (i.e., features, edges, and labels) on the data holders, and delegate the rest of the computations to a semi-honest server. We conduct experiments on three benchmarks, and the results demonstrate that PPGNN significantly outperforms GNN models trained on the isolated data and performs comparably to a traditional GNN trained on the mixed plaintext data.
