Kai Xu

National University of Defense Technology

Learning Accurate Template Matching with Differentiable Coarse-to-Fine Correspondence Refinement

Mar 15, 2023

Abstract: Template matching is a fundamental task in computer vision and has been studied for decades. It plays an essential role in the manufacturing industry for estimating the poses of different parts, facilitating downstream tasks such as robotic grasping. Existing methods fail when the template and source images have different modalities, cluttered backgrounds, or weak textures. They also rarely consider geometric transformations via homographies, which commonly exist even for planar industrial parts. To tackle these challenges, we propose an accurate template matching method based on differentiable coarse-to-fine correspondence refinement. We use an edge-aware module to overcome the domain gap between the mask template and the grayscale image, allowing robust matching. An initial warp is estimated using coarse correspondences based on novel structure-aware information provided by transformers. This initial alignment is passed to a refinement network using references and aligned images to obtain sub-pixel level correspondences, which are used to give the final geometric transformation. Extensive evaluation shows that our method is significantly better than state-of-the-art methods and baselines, providing good generalization ability and visually plausible results even on unseen real data.
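As a rough illustration of the homography warps the abstract refers to, the sketch below maps template points into an image through a 3x3 matrix with a projective division. It is not the paper's network; the function name `apply_homography` and the matrix `H_example` are hypothetical and chosen only for this example.

```python
def apply_homography(H, points):
    """Map 2D points through a 3x3 homography H given as nested lists."""
    warped = []
    for x, y in points:
        # Lift (x, y) to homogeneous coordinates (x, y, 1) and multiply by H.
        u = H[0][0] * x + H[0][1] * y + H[0][2]
        v = H[1][0] * x + H[1][1] * y + H[1][2]
        w = H[2][0] * x + H[2][1] * y + H[2][2]
        if abs(w) < 1e-12:
            raise ValueError("point maps to infinity under H")
        # Projective division brings the point back to the image plane.
        warped.append((u / w, v / w))
    return warped


# Hypothetical warp: a translation plus a mild perspective term in x.
H_example = [
    [1.0, 0.0, 10.0],
    [0.0, 1.0, 5.0],
    [0.001, 0.0, 1.0],
]
template_corners = [(0.0, 0.0), (100.0, 0.0), (100.0, 50.0), (0.0, 50.0)]
warped_corners = apply_homography(H_example, template_corners)
```

The nonzero entry in the last row is what distinguishes a homography from an affine warp: points with larger x are divided by a larger w, so parallel template edges need not stay parallel after warping.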

Multi-Symmetry Ensembles: Improving Diversity and Generalization via Opposing Symmetries

Mar 04, 2023

Abstract: Deep ensembles (DE) have been successful in improving model performance by learning diverse members via the stochasticity of random initialization. While recent works have attempted to promote further diversity in DE via hyperparameters or regularizing loss functions, these methods primarily still rely on a stochastic approach to explore the hypothesis space. In this work, we present Multi-Symmetry Ensembles (MSE), a framework for constructing diverse ensembles by capturing the multiplicity of hypotheses along symmetry axes, which explore the hypothesis space beyond stochastic perturbations of model weights and hyperparameters. We leverage recent advances in contrastive representation learning to create models that separately capture opposing hypotheses of invariant and equivariant symmetries and present a simple ensembling approach to efficiently combine appropriate hypotheses for a given task. We show that MSE effectively captures the multiplicity of conflicting hypotheses that is often required in large, diverse datasets like ImageNet. As a result of their inherent diversity, MSE improves classification performance, uncertainty quantification, and generalization across a series of transfer tasks.

S4R: Self-Supervised Semantic Scene Reconstruction from RGB-D Scans

Feb 21, 2023

Abstract: Most deep learning approaches to comprehensive semantic modeling of 3D indoor spaces require costly dense annotations in the 3D domain. In this work, we explore a central 3D scene modeling task, namely, semantic scene reconstruction, using a fully self-supervised approach. To this end, we design a trainable model that employs both incomplete 3D reconstructions and their corresponding source RGB-D images, fusing cross-domain features into volumetric embeddings to predict complete 3D geometry, color, and semantics. Our key technical innovation is to leverage differentiable rendering of color and semantics, using the observed RGB images and a generic semantic segmentation model as color and semantics supervision, respectively. We additionally develop a method to synthesize an augmented set of virtual training views complementing the original real captures, enabling more efficient self-supervision for semantics. In this work, we propose an end-to-end trainable solution jointly addressing geometry completion, colorization, and semantic mapping from a few RGB-D images, without 3D or 2D ground-truth. To our knowledge, our method is the first fully self-supervised method addressing completion and semantic segmentation of real-world 3D scans. It performs comparably well with the 3D supervised baselines, surpasses baselines with 2D supervision on real datasets, and generalizes well to unseen scenes.

Modelling the performance of delivery vehicles across urban micro-regions to accelerate the transition to cargo-bike logistics

Jan 30, 2023

Abstract: Light goods vehicles (LGVs), used extensively in the last mile of delivery, are one of the leading polluters in cities. Cargo-bike logistics has been put forward as a high-impact candidate for replacing LGVs, with experts estimating that over half of urban van deliveries could be replaced by cargo bikes, due to their faster speeds, shorter parking times and more efficient routes across cities. By modelling the relative delivery performance of different vehicle types across urban micro-regions, machine learning can help operators evaluate the business and environmental impact of adding cargo bikes to their fleets. In this paper, we introduce two datasets, and present initial progress in modelling urban delivery service time (e.g. cruising for parking, unloading, walking). Using Uber's H3 index to divide the cities into hexagonal cells, and aggregating OpenStreetMap tags for each cell, we show that urban context is a critical predictor of delivery performance.

Multimodal Video Adapter for Parameter Efficient Video Text Retrieval

Jan 19, 2023

Abstract: State-of-the-art video-text retrieval (VTR) methods usually fully fine-tune the pre-trained model (e.g. CLIP) on specific datasets, which may incur substantial storage costs in practical applications since a separate model per task needs to be stored. To overcome this issue, we present the premier work on performing parameter-efficient VTR from the pre-trained model, i.e., only a small number of parameters are tunable while the backbone is frozen. Towards this goal, we propose a new method dubbed Multimodal Video Adapter (MV-Adapter) for efficiently transferring the knowledge in the pre-trained CLIP from image-text to video-text. Specifically, MV-Adapter adopts bottleneck structures in both video and text branches and introduces two novel components. The first is a Temporal Adaptation Module employed in the video branch to inject global and local temporal contexts. We also learn weight calibrations to adapt to the dynamic variations across frames. The second is a Cross-Modal Interaction Module that generates weights for video/text branches through a shared parameter space, for better alignment between modalities. Thanks to the above innovations, MV-Adapter can achieve on-par or better performance than standard fine-tuning with negligible parameter overhead. Notably, on five widely used VTR benchmarks (MSR-VTT, MSVD, LSMDC, DiDemo, and ActivityNet), MV-Adapter consistently outperforms various competing methods in V2T/T2V tasks with large margins. Code will be released.
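The "bottleneck structures" mentioned above can be pictured with a generic residual adapter: project features down to a small width, apply a nonlinearity, project back up, and add the result to the input. The sketch below is a plain-Python illustration of that general pattern, not MV-Adapter itself. The names `make_adapter`, `adapter_forward`, and `count_params`, the sizes 512 and 16, the omission of biases, and the zero initialization of the up-projection are all assumptions made for this example.

```python
import random


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def make_adapter(dim, bottleneck, seed=0):
    """Create down/up projection weights for a bias-free bottleneck adapter."""
    rng = random.Random(seed)
    down = [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(bottleneck)]
    # Assumption: zero-initialized up-projection, so the adapter starts as identity.
    up = [[0.0] * bottleneck for _ in range(dim)]
    return {"down": down, "up": up}


def adapter_forward(params, x):
    """Down-project, apply ReLU, up-project, then add the residual input."""
    hidden = [max(0.0, h) for h in matvec(params["down"], x)]
    delta = matvec(params["up"], hidden)
    return [xi + di for xi, di in zip(x, delta)]


def count_params(params):
    """Count the scalar weights held by the adapter."""
    return sum(len(row) for W in params.values() for row in W)


adapter = make_adapter(dim=512, bottleneck=16)
features = [0.1] * 512
out = adapter_forward(adapter, features)
n_params = count_params(adapter)  # 2 * 512 * 16 weights
```

With these assumed sizes the adapter holds 16,384 weights, against 262,144 for a single dense 512x512 layer, which is the kind of saving that makes storing one small module per task cheaper than storing a fully fine-tuned model per task.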

Edge Preserving Implicit Surface Representation of Point Clouds

Jan 12, 2023

Abstract: Learning implicit surfaces directly from raw data has recently become a very attractive representation method for 3D reconstruction tasks due to its excellent performance. However, as the raw data quality deteriorates, the implicit functions often lead to unsatisfactory reconstruction results. To this end, we propose a novel edge-preserving implicit surface reconstruction method, which mainly consists of a differentiable Laplacian regularizer and a dynamic edge sampling strategy. The differentiable Laplacian regularizer can effectively alleviate the lack of smoothness in the implicit surface caused by deteriorating point cloud quality. Meanwhile, in order to reduce excessive smoothing at the edge regions of the implicit surface, we propose a dynamic edge extraction strategy for sampling near the sharp edges of the point cloud, which can effectively prevent the Laplacian regularizer from smoothing all regions. Finally, we combine them with a simple regularization term for robust implicit surface reconstruction. Compared with the state-of-the-art methods, experimental results show that our method significantly improves the quality of 3D reconstruction results. Moreover, we demonstrate through several experiments that our method can be conveniently and effectively applied to some point cloud analysis tasks, including point cloud edge feature extraction, normal estimation, etc.

Learning Physically Realizable Skills for Online Packing of General 3D Shapes

Dec 05, 2022

Abstract: We study the problem of learning online packing skills for irregular 3D shapes, which is arguably the most challenging setting of bin packing problems. The goal is to consecutively move a sequence of 3D objects with arbitrary shapes into a designated container with only partial observations of the object sequence. Meanwhile, we take physical realizability into account, involving physics dynamics and constraints of a placement. The packing policy should understand the 3D geometry of the object to be packed and make effective decisions to accommodate it in the container in a physically realizable way. We propose a Reinforcement Learning (RL) pipeline to learn the policy. The complex irregular geometry and imperfect object placement together lead to a huge solution space. Direct training in such a space is prohibitively data intensive. We instead propose a theoretically-provable method for candidate action generation to reduce the action space of RL and the learning burden. A parameterized policy is then learned to select the best placement from the candidates. Equipped with an efficient method of asynchronous RL acceleration and a data preparation process of simulation-ready training sequences, a mature packing policy can be trained in a physics-based environment within 48 hours. Through extensive evaluation on a variety of real-life shape datasets and comparisons with state-of-the-art baselines, we demonstrate that our method outperforms the best-performing baseline on all datasets by at least 12.8% in terms of packing utility.

Multi-resolution Monocular Depth Map Fusion by Self-supervised Gradient-based Composition

Dec 03, 2022

Abstract: Monocular depth estimation is a challenging problem on which deep neural networks have demonstrated great potential. However, depth maps predicted by existing deep models usually lack fine-grained details due to the convolution operations and the down-sampling in networks. We find that increasing input resolution helps preserve more local details, while the estimation at low resolution is more accurate globally. Therefore, we propose a novel depth map fusion module to combine the advantages of estimations with multi-resolution inputs. Instead of merging the low- and high-resolution estimations equally, we adopt the core idea of Poisson fusion, trying to implant the gradient domain of high-resolution depth into the low-resolution depth. While classic Poisson fusion requires a fusion mask as supervision, we propose a self-supervised framework based on guided image filtering. We demonstrate that this gradient-based composition performs much better in terms of noise immunity, compared with the state-of-the-art depth map fusion method. Our lightweight depth fusion is one-shot and runs in real-time, making our method 80X faster than a state-of-the-art depth fusion method. Quantitative evaluations demonstrate that the proposed method can be integrated into many fully convolutional monocular depth estimation backbones with a significant performance boost, leading to state-of-the-art results of detail enhancement on depth maps.
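The idea of implanting high-resolution gradients into a low-resolution estimate can be illustrated with a classic gradient-domain composition on a 1D signal. The sketch below is not the paper's learned fusion module. It keeps the endpoint values of a globally accurate `low` signal, takes the finite differences of a detailed `high` signal, and spreads any mismatch evenly, which is the least-squares solution when both endpoints are fixed. The function name `blend_gradients_1d` and the sample signals are hypothetical.

```python
def blend_gradients_1d(low, high):
    """Return a signal with low's endpoints and high's (corrected) gradients."""
    if len(low) != len(high) or len(low) < 2:
        raise ValueError("signals must have equal length of at least 2")
    n = len(low)
    # Finite-difference gradients of the detailed signal.
    grads = [high[i + 1] - high[i] for i in range(n - 1)]
    # With both endpoints fixed, the least-squares fit adds the same constant
    # to every gradient so that they sum to low[-1] - low[0].
    correction = ((low[-1] - low[0]) - sum(grads)) / (n - 1)
    fused = [low[0]]
    for g in grads:
        # Integrate the corrected gradients from the left boundary value.
        fused.append(fused[-1] + g + correction)
    return fused


low_res = [1.0, 1.5, 2.0, 2.5, 3.0]   # correct overall range, blurred edge
high_res = [0.0, 0.0, 2.0, 2.0, 2.0]  # sharp edge, wrong absolute values
fused = blend_gradients_1d(low_res, high_res)
```

In this toy case the fused signal keeps the range of `low_res` (from 1.0 to 3.0) while reproducing the sharp step of `high_res`, so the absolute offset of the detailed signal does not matter, only its gradients.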

3D-Aware Object Goal Navigation via Simultaneous Exploration and Identification

Dec 01, 2022

Abstract: Object goal navigation (ObjectNav) in unseen environments is a fundamental task for Embodied AI. Agents in existing works learn ObjectNav policies based on 2D maps, scene graphs, or image sequences. Considering that this task happens in 3D space, a 3D-aware agent can advance its ObjectNav capability by learning from fine-grained spatial information. However, leveraging 3D scene representation can be prohibitively impractical for policy learning in this floor-level task, due to low sample efficiency and expensive computational cost. In this work, we propose a framework for the challenging 3D-aware ObjectNav based on two straightforward sub-policies. The two sub-policies, namely a corner-guided exploration policy and a category-aware identification policy, operate simultaneously, using online fused 3D points as observations. Through extensive experiments, we show that this framework can dramatically improve the performance in ObjectNav through learning from 3D scene representation. Our framework achieves the best performance among all modular-based methods on the Matterport3D and Gibson datasets, while requiring up to 30x less computational cost for training.

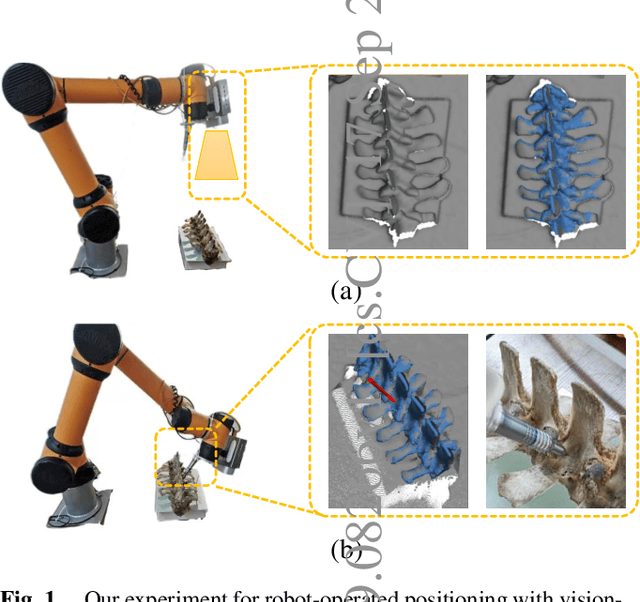

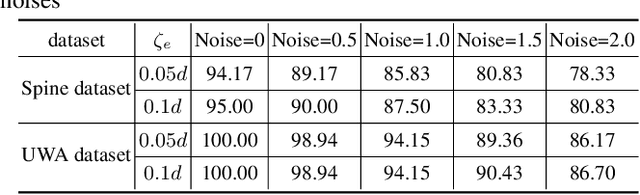

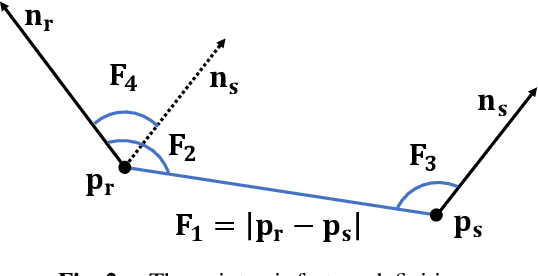

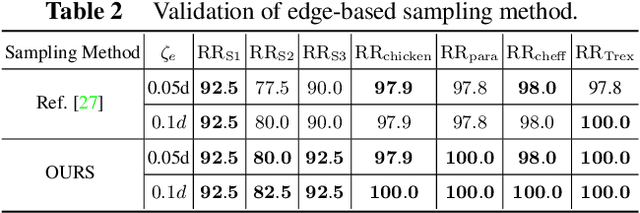

6DOF Pose Estimation of a 3D Rigid Object based on Edge-enhanced Point Pair Features

Sep 17, 2022

Abstract: The point pair feature (PPF) is widely used for 6D pose estimation. In this paper, we propose an efficient 6D pose estimation method based on the PPF framework. We introduce a well-targeted down-sampling strategy that focuses more on edge areas for efficient feature extraction of complex geometry. A pose hypothesis validation approach is proposed to resolve the symmetric ambiguity by calculating the edge matching degree. We perform evaluations on two challenging datasets and one real-world collected dataset, demonstrating the superiority of our method on pose estimation of geometrically complex, occluded, symmetrical objects. We further validate our method by applying it to simulated punctures.
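For readers unfamiliar with point pair features, the sketch below computes the commonly used four-dimensional PPF for two oriented points: the distance between them and three angles involving their normals. It shows only this standard descriptor and does not cover the edge-focused down-sampling or hypothesis validation described in the abstract. The function names are hypothetical.

```python
import math


def _sub(a, b):
    """Component-wise difference of two 3D vectors."""
    return tuple(ai - bi for ai, bi in zip(a, b))


def _norm(v):
    """Euclidean length of a 3D vector."""
    return math.sqrt(sum(vi * vi for vi in v))


def _angle(u, v):
    """Angle in radians between two non-zero vectors, in [0, pi]."""
    cos = sum(ui * vi for ui, vi in zip(u, v)) / (_norm(u) * _norm(v))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos)))


def point_pair_feature(p1, n1, p2, n2):
    """Return (|d|, angle(n1, d), angle(n2, d), angle(n1, n2)) with d = p2 - p1."""
    d = _sub(p2, p1)
    if _norm(d) == 0.0:
        raise ValueError("the two points must be distinct")
    return (_norm(d), _angle(n1, d), _angle(n2, d), _angle(n1, n2))


# Two points on a flat patch, 1 unit apart, both normals pointing along +z.
feature = point_pair_feature(
    (0.0, 0.0, 0.0), (0.0, 0.0, 1.0),
    (1.0, 0.0, 0.0), (0.0, 0.0, 1.0),
)
```

Because the feature depends only on a distance and relative angles, it is unchanged by rigid motions of the pair, which is what lets matching features between model and scene vote for pose hypotheses.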
