Jing Gao

Zhejiang University

SeATrans: Learning Segmentation-Assisted diagnosis model via Transformer

Jun 22, 2022

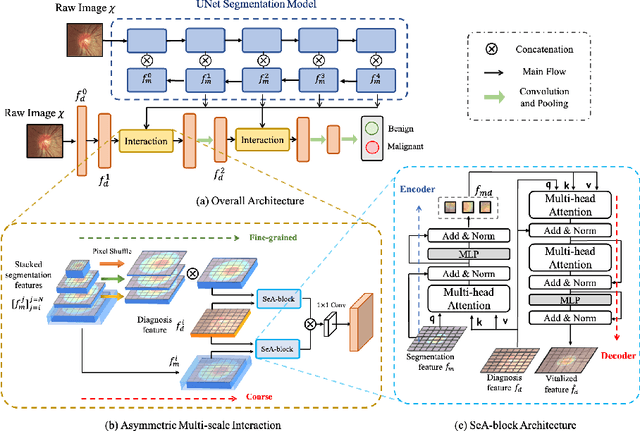

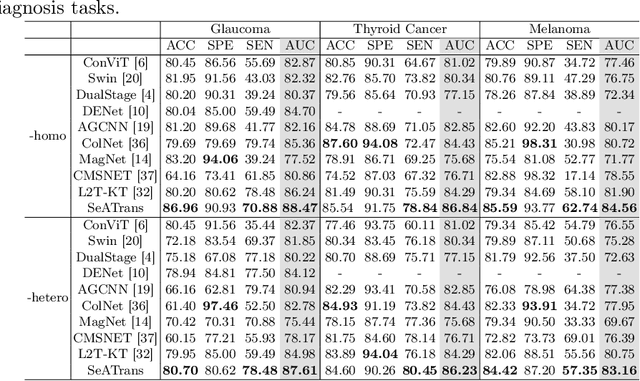

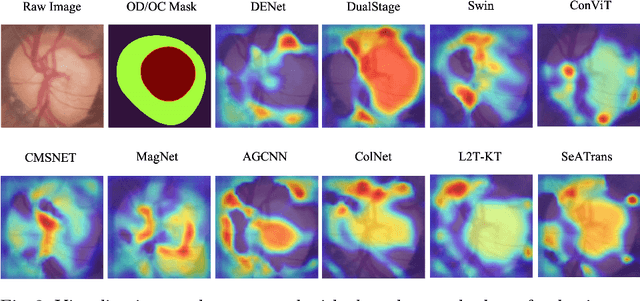

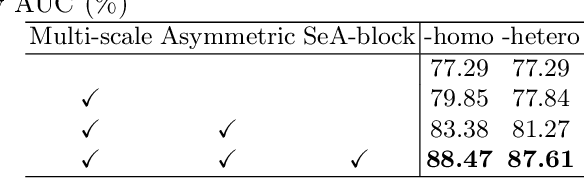

Abstract:Clinically, the accurate annotation of lesions/tissues can significantly facilitate disease diagnosis. For example, the segmentation of optic disc/cup (OD/OC) on fundus images facilitates glaucoma diagnosis, the segmentation of skin lesions on dermoscopic images helps melanoma diagnosis, etc. With the advancement of deep learning techniques, a wide range of methods have shown that lesion/tissue segmentation can also benefit automated disease diagnosis models. However, existing methods are limited in the sense that they can only capture static regional correlations in the images. Inspired by the global and dynamic nature of Vision Transformer, in this paper, we propose Segmentation-Assisted diagnosis Transformer (SeATrans) to transfer the segmentation knowledge to the disease diagnosis network. Specifically, we first propose an asymmetric multi-scale interaction strategy to correlate each single low-level diagnosis feature with multi-scale segmentation features. Then, an effective strategy called SeA-block is adopted to vitalize the diagnosis feature via correlated segmentation features. To model the segmentation-diagnosis interaction, SeA-block first embeds the diagnosis feature based on the segmentation information via the encoder, and then transfers the embedding back to the diagnosis feature space by a decoder. Experimental results demonstrate that SeATrans surpasses a wide range of state-of-the-art (SOTA) segmentation-assisted diagnosis methods on several disease diagnosis tasks.

AdaMix: Mixture-of-Adapter for Parameter-efficient Tuning of Large Language Models

May 24, 2022

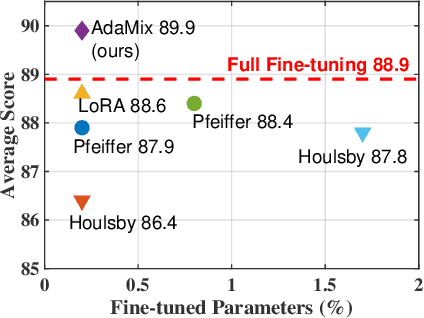

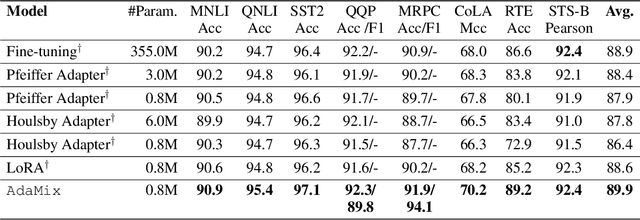

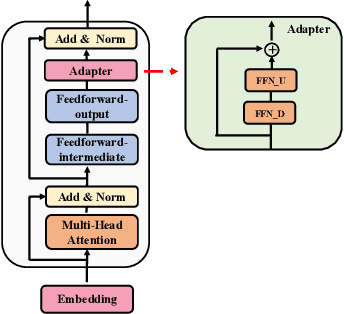

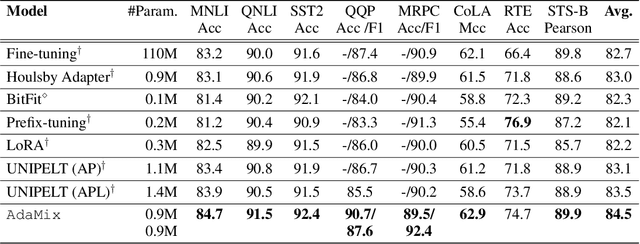

Abstract:Fine-tuning large-scale pre-trained language models to downstream tasks requires updating hundreds of millions of parameters. This not only increases the serving cost to store a large copy of the model weights for every task, but also exhibits instability during few-shot task adaptation. Parameter-efficient techniques have been developed that tune small trainable components (e.g., adapters) injected in the large model while keeping most of the model weights frozen. The prevalent mechanism to increase adapter capacity is to increase the bottleneck dimension, which increases the adapter parameters. In this work, we introduce a new mechanism to improve adapter capacity without increasing parameters or computational cost by two key techniques. (i) We introduce multiple shared adapter components in each layer of the Transformer architecture. We leverage sparse learning via random routing to update the adapter parameters (encoder is kept frozen), resulting in the same amount of computational cost (FLOPs) as that of training a single adapter. (ii) We propose a simple merging mechanism to average the weights of multiple adapter components to collapse to a single adapter in each Transformer layer, thereby keeping the overall parameters also the same but with significant performance improvement. We demonstrate these techniques to work well across multiple task settings including fully supervised and few-shot Natural Language Understanding tasks. By only tuning 0.23% of a pre-trained language model's parameters, our model outperforms the full model fine-tuning performance and several competing methods.
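The two techniques in the AdaMix abstract, random routing during training and weight averaging afterwards, can be illustrated with a minimal sketch. This is not the authors' implementation: each adapter is reduced to a single small weight matrix stored as nested lists, and the names `route_randomly` and `merge_adapters` are hypothetical.

```python
import random


def route_randomly(num_adapters, rng):
    """Pick one adapter index uniformly at random for a training step.

    Only the chosen adapter would be updated in that step, so the cost
    stays that of training a single adapter (assumption: uniform routing).
    """
    return rng.randrange(num_adapters)


def merge_adapters(adapters):
    """Collapse several equally shaped weight matrices into one by
    averaging them element-wise."""
    n = len(adapters)
    rows, cols = len(adapters[0]), len(adapters[0][0])
    return [
        [sum(a[r][c] for a in adapters) / n for c in range(cols)]
        for r in range(rows)
    ]


# Two toy 2x2 "adapters" (illustrative values only).
adapters = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[3.0, 2.0], [1.0, 0.0]],
]

rng = random.Random(0)
chosen = route_randomly(len(adapters), rng)  # index used for one step
merged = merge_adapters(adapters)            # single adapter for inference
```

After merging, only one matrix per layer has to be stored and served, which is why the parameter count matches that of a single adapter.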

Label a Herd in Minutes: Individual Holstein-Friesian Cattle Identification

Apr 22, 2022

Abstract:We describe a practically evaluated approach for training visual cattle ID systems for a whole farm requiring only ten minutes of labelling effort. In particular, for the task of automatic identification of individual Holstein-Friesians in real-world farm CCTV, we show that self-supervision, metric learning, cluster analysis, and active learning can complement each other to significantly reduce the annotation requirements usually needed to train cattle identification frameworks. Evaluating the approach on the test portion of the publicly available Cows2021 dataset, for training we use 23,350 frames across 435 single individual tracklets generated by automated oriented cattle detection and tracking in operational farm footage. Self-supervised metric learning is first employed to initialise a candidate identity space where each tracklet is considered a distinct entity. Grouping entities into equivalence classes representing cattle identities is then performed by automated merging via cluster analysis and active learning. Critically, we identify the inflection point at which automated choices cannot replicate improvements based on human intervention to reduce annotation to a minimum. Experimental results show that cluster analysis and a few minutes of labelling after automated self-supervision can improve the test identification accuracy of 153 identities to 92.44% (ARI=0.93) from the 74.9% (ARI=0.754) obtained by self-supervision only. These promising results indicate that a tailored combination of human and machine reasoning in visual cattle ID pipelines can be highly effective whilst requiring only minimal labelling effort. We provide all key source code and network weights with this paper for easy result reproduction.

CNN LEGO: Disassembling and Assembling Convolutional Neural Network

Mar 25, 2022

Abstract:Convolutional Neural Network (CNN), which mimics the human visual perception mechanism, has been successfully used in many computer vision areas. Some psychophysical studies show that the visual perception mechanism synchronously processes the form, color, movement, depth, etc., in the initial stage [7,20] and then integrates all information for final recognition [38]. What's more, the human visual system [20] contains different subdivisions or different tasks. Inspired by the above visual perception mechanism, we investigate a new task, termed Model Disassembling and Assembling (MDA-Task), which can disassemble the deep models into independent parts and assemble those parts into a new deep model without performance cost, like playing with LEGO toys. To this end, we propose a feature route attribution technique (FRAT) for disassembling CNN classifiers in this paper. In FRAT, the positive derivatives of predicted class probability w.r.t. the feature maps are adopted to locate the critical features in each layer. Then, relevance analysis between the critical features and preceding/subsequent parameter layers is adopted to bridge the route between two adjacent parameter layers. In the assembling phase, class-wise components of each layer are assembled into a new deep model for a specific task. Extensive experiments demonstrate that the assembled CNN classifier can achieve accuracy close to that of the original classifier without any fine-tuning, and exceed the original performance with one epoch of fine-tuning. What's more, we also conduct extensive experiments to verify the broad application of MDA-Task on model decision route visualization, model compression, knowledge distillation, transfer learning, incremental learning, and so on.

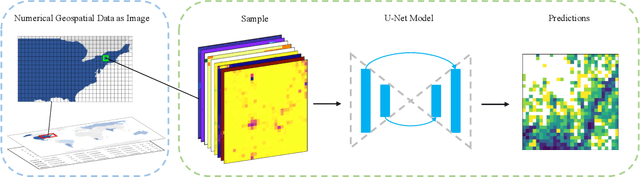

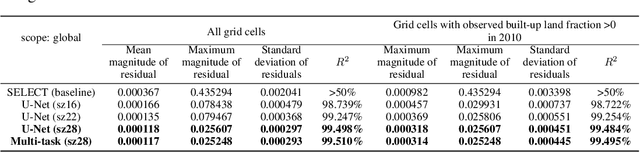

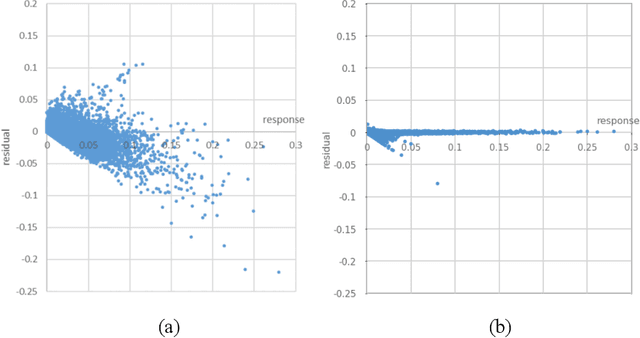

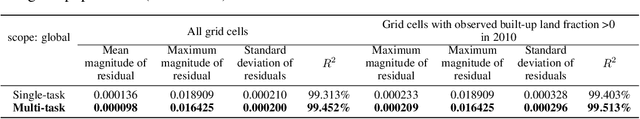

Deep Learning for Spatiotemporal Modeling of Urbanization

Dec 17, 2021

Abstract:Urbanization has a strong impact on the health and wellbeing of populations across the world. Predictive spatial modeling of urbanization therefore can be a useful tool for effective public health planning. Many spatial urbanization models have been developed using classic machine learning and numerical modeling techniques. However, deep learning with its proven capacity to capture complex spatiotemporal phenomena has not been applied to urbanization modeling. Here we explore the capacity of deep spatial learning for the predictive modeling of urbanization. We treat numerical geospatial data as images with pixels and channels, and enrich the dataset by augmentation, in order to leverage the high capacity of deep learning. Our resulting model can generate end-to-end multi-variable urbanization predictions, and outperforms a state-of-the-art classic machine learning urbanization model in preliminary comparisons.

LiST: Lite Self-training Makes Efficient Few-shot Learners

Oct 12, 2021

Abstract:We present a new method LiST for efficient fine-tuning of large pre-trained language models (PLMs) in few-shot learning settings. LiST significantly improves over recent methods that adopt prompt fine-tuning using two key techniques. The first one is the use of self-training to leverage large amounts of unlabeled data for prompt-tuning to significantly boost the model performance in few-shot settings. We use self-training in conjunction with meta-learning for re-weighting noisy pseudo-prompt labels. However, traditional self-training is expensive as it requires updating all the model parameters repetitively. Therefore, we use a second technique for light-weight fine-tuning where we introduce a small number of task-specific adapter parameters that are fine-tuned during self-training while keeping the PLM encoder frozen. This also significantly reduces the overall model footprint across several tasks that can now share a common PLM encoder as backbone for inference. Combining the above techniques, LiST not only improves the model performance for few-shot learning on target domains but also reduces the model memory footprint. We present a comprehensive study on six NLU tasks to validate the effectiveness of LiST. The results show that LiST improves by 35% over classic fine-tuning methods and 6% over prompt-tuning with 96% reduction in number of trainable parameters when fine-tuned with no more than 30 labeled examples from each target domain.

Learning from Language Description: Low-shot Named Entity Recognition via Decomposed Framework

Sep 11, 2021

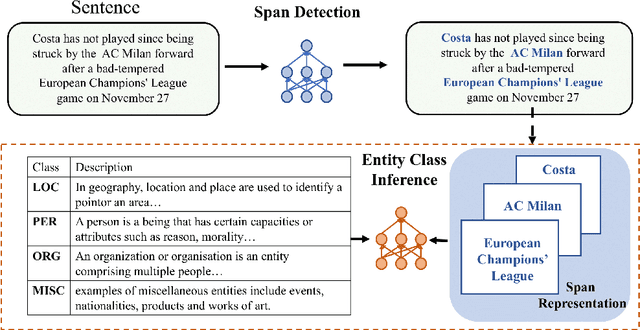

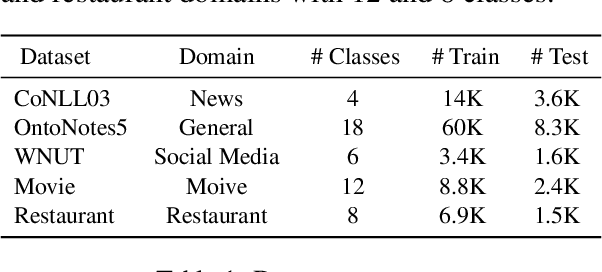

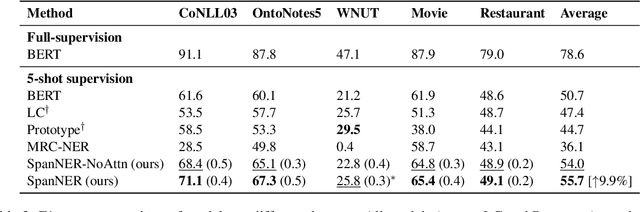

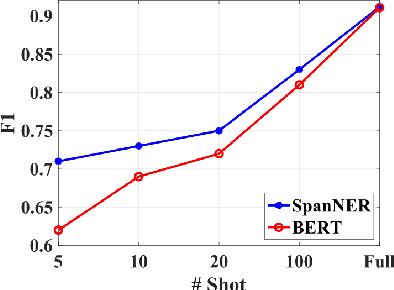

Abstract:In this work, we study the problem of named entity recognition (NER) in a low resource scenario, focusing on few-shot and zero-shot settings. Built upon large-scale pre-trained language models, we propose a novel NER framework, namely SpanNER, which learns from natural language supervision and enables the identification of never-seen entity classes without using in-domain labeled data. We perform extensive experiments on 5 benchmark datasets and evaluate the proposed method in the few-shot learning, domain transfer and zero-shot learning settings. The experimental results show that the proposed method can bring 10%, 23% and 26% improvements on average over the best baselines in few-shot learning, domain transfer and zero-shot learning settings respectively.

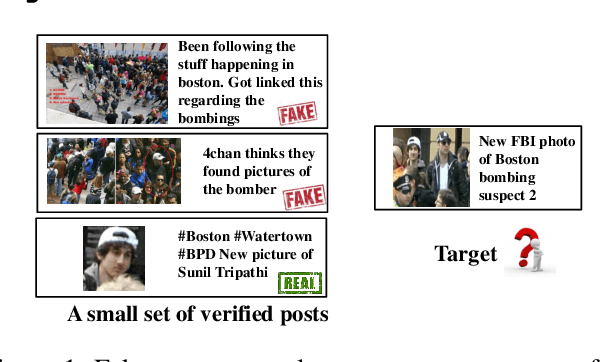

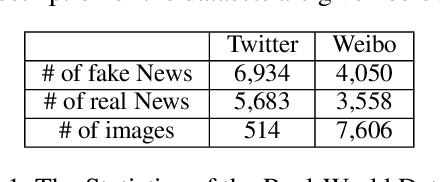

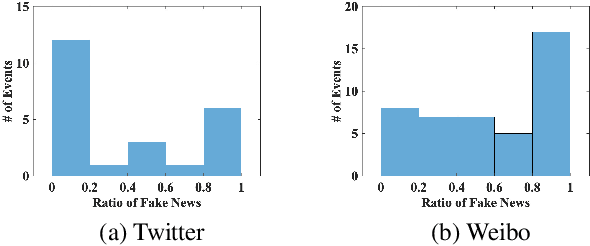

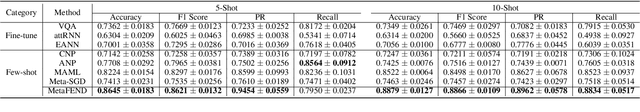

Multimodal Emergent Fake News Detection via Meta Neural Process Networks

Jun 22, 2021

Abstract:Fake news travels at unprecedented speeds, reaches global audiences and puts users and communities at great risk via social media platforms. Deep learning based models show good performance when trained on large amounts of labeled data on events of interest, whereas the performance of models tends to degrade on other events due to domain shift. Therefore, significant challenges are posed for existing detection approaches to detect fake news on emergent events, where large-scale labeled datasets are difficult to obtain. Moreover, adding the knowledge from newly emergent events requires building a new model from scratch or continuing to fine-tune the model, which can be challenging, expensive, and unrealistic for real-world settings. In order to address those challenges, we propose an end-to-end fake news detection framework named MetaFEND, which is able to learn quickly to detect fake news on emergent events with a few verified posts. Specifically, the proposed model integrates meta-learning and neural process methods together to enjoy the benefits of these approaches. In particular, a label embedding module and a hard attention mechanism are proposed to enhance the effectiveness by handling categorical information and trimming irrelevant posts. Extensive experiments are conducted on multimedia datasets collected from Twitter and Weibo. The experimental results show our proposed MetaFEND model can detect fake news on never-seen events effectively and outperform the state-of-the-art methods.

On Sampling Top-K Recommendation Evaluation

Jun 20, 2021

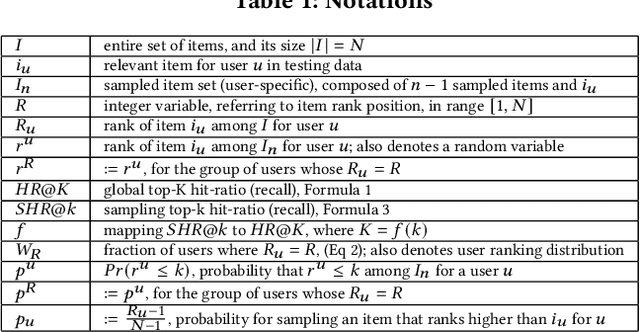

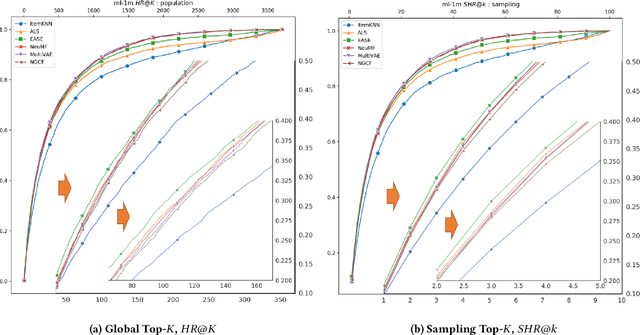

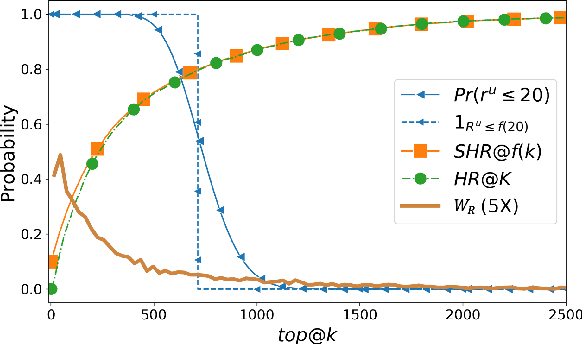

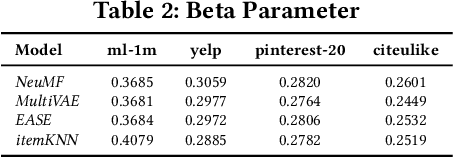

Abstract:Recently, Rendle has warned that the use of sampling-based top-$k$ metrics might not suffice. This throws a number of recent studies on deep learning-based recommendation algorithms, and classic non-deep-learning algorithms using such a metric, into jeopardy. In this work, we thoroughly investigate the relationship between the sampling and global top-$K$ Hit-Ratio (HR, or Recall), originally proposed by Koren[2] and extensively used by others. By formulating the problem of aligning sampling top-$k$ ($SHR@k$) and global top-$K$ ($HR@K$) Hit-Ratios through a mapping function $f$, so that $SHR@k\approx HR@f(k)$, we demonstrate both theoretically and experimentally that the sampling top-$k$ Hit-Ratio provides an accurate approximation of its global (exact) counterpart, and can consistently predict the correct winners (the same as indicated by their corresponding global Hit-Ratios).
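The difference between the global Hit-Ratio $HR@K$ and its sampled counterpart $SHR@k$ can be made concrete with a small sketch. It only illustrates the two quantities being compared, not the paper's mapping function $f$; the toy scores, the number of sampled negatives, and all function names are assumptions chosen for illustration.

```python
import random


def global_rank(scores, target):
    """1-based rank of the target among all items (higher score is better)."""
    return 1 + sum(
        1 for item, s in scores.items() if item != target and s > scores[target]
    )


def sampled_rank(scores, target, num_negatives, rng):
    """1-based rank of the target among a random sample of other items."""
    candidates = [item for item in scores if item != target]
    negatives = rng.sample(candidates, num_negatives)
    return 1 + sum(1 for item in negatives if scores[item] > scores[target])


def hit_ratio(ranks, k):
    """Fraction of test cases whose target is ranked within the top k."""
    return sum(1 for r in ranks if r <= k) / len(ranks)


# Toy setup: 10 items, item 0 scored highest; three held-out targets.
scores = {item: 10 - item for item in range(10)}
targets = [0, 2, 5]
rng = random.Random(0)

global_ranks = [global_rank(scores, t) for t in targets]
sampled_ranks = [sampled_rank(scores, t, 4, rng) for t in targets]

hr_at_3 = hit_ratio(global_ranks, 3)     # global HR@3
shr_at_1 = hit_ratio(sampled_ranks, 1)   # sampled SHR@1 with 4 negatives
```

Because a sample contains only some of the items that outrank the target, a sampled rank never exceeds the global rank, so a small $k$ on the sampled side corresponds to a larger $K$ on the global side.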

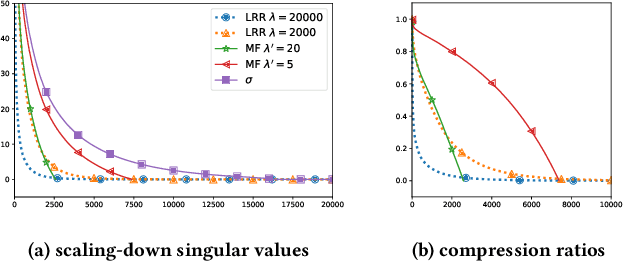

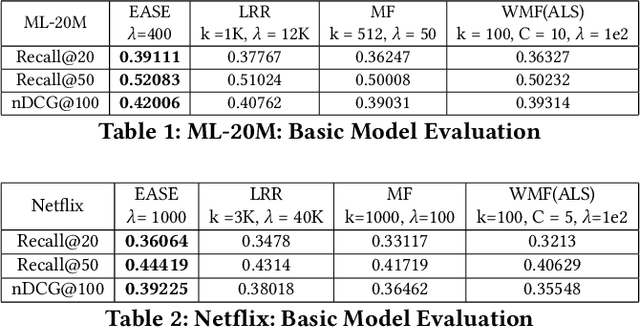

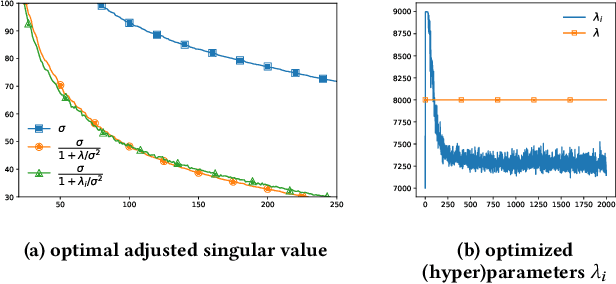

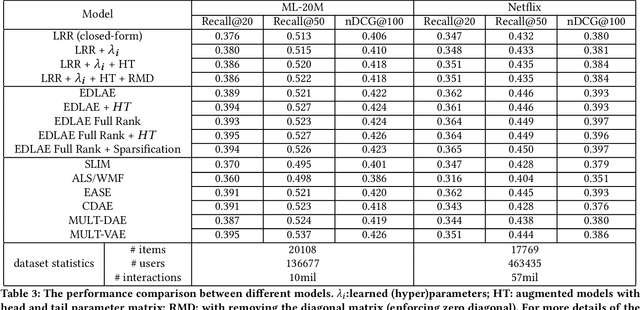

Towards a Better Understanding of Linear Models for Recommendation

Jun 16, 2021

Abstract:Recently, linear regression models, such as EASE and SLIM, have been shown to often produce rather competitive results against more sophisticated deep learning models. On the other hand, the (weighted) matrix factorization approaches have been popular choices for recommendation in the past and widely adopted in the industry. In this work, we aim to theoretically understand the relationship between these two approaches, which are the cornerstones of model-based recommendations. Through the derivation and analysis of the closed-form solutions for two basic regression and matrix factorization approaches, we find that these two approaches are indeed inherently related but also diverge in how they "scale-down" the singular values of the original user-item interaction matrix. This analysis also helps resolve the questions related to the regularization parameter range and model complexities. We further introduce a new learning algorithm in searching (hyper)parameters for the closed-form solution and utilize it to discover the nearby models of the existing solutions. The experimental results demonstrate that the basic models and their closed-form solutions are indeed quite competitive against the state-of-the-art models, thus confirming the validity of studying the basic models. The effectiveness of exploring the nearby models is also experimentally validated.
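To give a sense of what "scaling down" singular values can look like, the sketch below contrasts two textbook shrinkage patterns. It is not the paper's derivation. The first function uses the standard result for unconstrained ridge regression of the interaction matrix onto itself, where each singular value $\sigma$ is damped by $\sigma^2/(\sigma^2+\lambda)$; the zero-diagonal constraint used by EASE is not modeled. The second uses a hard rank cutoff as a simplified stand-in for low-rank factorization. Both function names are hypothetical.

```python
def ridge_shrinkage(singular_values, lam):
    """Smooth damping: each direction is kept in proportion
    sigma^2 / (sigma^2 + lam), so weak directions shrink the most."""
    return [s * s / (s * s + lam) for s in singular_values]


def truncation_shrinkage(singular_values, rank):
    """Hard cutoff: keep the leading `rank` directions fully and drop
    the rest (assumes singular values are sorted in descending order)."""
    return [1.0 if i < rank else 0.0 for i in range(len(singular_values))]


# Illustrative singular values of a user-item matrix, sorted descending.
singular_values = [4.0, 2.0, 1.0]

ridge_factors = ridge_shrinkage(singular_values, lam=4.0)
truncation_factors = truncation_shrinkage(singular_values, rank=2)
```

With these toy values the regression-style factors decay gradually across all directions, while the truncation keeps the top two directions untouched and removes the third entirely.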
