Hongjian Fang

IntraSlice: Towards High-Performance Structural Pruning with Block-Intra PCA for LLMs

Feb 02, 2026

Abstract: Large Language Models (LLMs) achieve strong performance across diverse tasks but face deployment challenges due to their massive size. Structured pruning offers acceleration benefits but leads to significant performance degradation. Recent PCA-based pruning methods have alleviated this issue by retaining key activation components, but are only applied between modules in order to fuse the transformation matrix, which introduces extra parameters and severely disrupts activation distributions due to residual connections. To address these issues, we propose IntraSlice, a framework that applies block-wise module-intra PCA compression pruning. By leveraging the structural characteristics of Transformer modules, we design an approximate PCA method whose transformation matrices can be fully fused into the model without additional parameters. We also introduce a PCA-based global pruning ratio estimator that further considers the distribution of compressed activations, building on conventional module importance. We validate our method on Llama2, Llama3, and Phi series across various language benchmarks. Experimental results demonstrate that our approach achieves superior compression performance compared to recent baselines at the same compression ratio or inference speed.
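
As a rough illustration of what "retaining key activation components" means, the sketch below runs plain textbook PCA on a toy activation matrix and keeps only the top component. It is not IntraSlice's approximate PCA and does not fuse any matrix into model weights. The function names (`top_direction`, `compress`, `reconstruct`) and the use of power iteration are assumptions made for this example.

```python
import math

def top_direction(rows, iters=100):
    """Return (mean, v): the column means and the top principal direction.

    Hypothetical helper: uses power iteration on the covariance matrix and
    assumes the data is not constant (otherwise the norm below is zero).
    """
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - mean[j] for j in range(d)] for r in rows]
    cov = [[sum(c[i] * c[j] for c in centered) / n for j in range(d)]
           for i in range(d)]
    v = [1.0] * d  # starting vector; must not be orthogonal to the top direction
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return mean, v

def compress(rows, mean, v):
    """Project each centered row onto v, keeping one coefficient per row."""
    return [sum((r[j] - mean[j]) * v[j] for j in range(len(v))) for r in rows]

def reconstruct(coeffs, mean, v):
    """Map the retained coefficients back to the original feature space."""
    return [[mean[j] + c * v[j] for j in range(len(v))] for c in coeffs]
```

When the activations lie close to a low-dimensional subspace, the reconstruction from the retained coefficients stays close to the original rows, which is the general reason PCA-style compression can discard dimensions with limited loss.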

ELDeR: Getting Efficient LLMs through Data-Driven Regularized Layer-wise Pruning

May 23, 2025

Abstract: The deployment of large language models (LLMs) in many fields is largely hindered by their high computational and memory costs. Recent studies suggest that LLMs exhibit sparsity, which can be used for pruning. Previous pruning methods typically follow a prune-then-finetune paradigm. Since the pruned parts still contain valuable information, statically removing them without updating the remaining parameters often results in irreversible performance degradation, requiring costly recovery fine-tuning (RFT) to maintain performance. To address this, we propose a novel paradigm: first apply regularization, then prune. Based on this paradigm, we propose ELDeR: Getting Efficient LLMs through Data-Driven Regularized Layer-wise Pruning. We multiply the output of each transformer layer by an initial weight, then we iteratively learn the weights of each transformer layer by using a small amount of data in a simple way. After that, we apply regularization to the difference between the output and input of the layers with smaller weights, forcing the information to be transferred to the remaining layers. Compared with direct pruning, ELDeR reduces the information loss caused by direct parameter removal, thus better preserving the model's language modeling ability. Experimental results show that ELDeR achieves superior performance compared with powerful layer-wise structured pruning methods, while greatly reducing RFT computational costs. Since ELDeR is a layer-wise pruning method, its end-to-end acceleration effect is obvious, making it a promising technique for efficient LLMs.
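
The regularize-then-prune idea can be sketched in a few lines, under stated assumptions. The abstract only says that regularization is applied to the difference between the output and input of the layers with smaller weights. The squared L2 penalty, the `strength` parameter, and the function names below are illustrative choices, not the authors' formulation.

```python
def lowest_weight_layers(weights, num_to_prune):
    """Indices of the layers with the smallest learned weights (hypothetical helper)."""
    order = sorted(range(len(weights)), key=lambda i: weights[i])
    return sorted(order[:num_to_prune])

def residual_penalty(layer_inputs, layer_outputs, selected, strength=1.0):
    """Penalty on how much each selected layer changes its input.

    Assumption: a squared L2 norm of (output - input), summed over the
    selected layers and scaled by `strength`.
    """
    total = 0.0
    for i in selected:
        total += sum((o - x) ** 2
                     for x, o in zip(layer_inputs[i], layer_outputs[i]))
    return strength * total
```

A layer whose output equals its input acts as an identity map, so driving this penalty toward zero for the selected layers is one plausible way to make their later removal less harmful.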

XeMap: Contextual Referring in Large-Scale Remote Sensing Environments

Apr 30, 2025

Abstract: Advancements in remote sensing (RS) imagery have provided high-resolution detail and vast coverage, yet existing methods, such as image-level captioning/retrieval and object-level detection/segmentation, often fail to capture mid-scale semantic entities essential for interpreting large-scale scenes. To address this, we propose the conteXtual referring Map (XeMap) task, which focuses on contextual, fine-grained localization of text-referred regions in large-scale RS scenes. Unlike traditional approaches, XeMap enables precise mapping of mid-scale semantic entities that are often overlooked in image-level or object-level methods. To achieve this, we introduce XeMap-Network, a novel architecture designed to handle the complexities of pixel-level cross-modal contextual referring mapping in RS. The network includes a fusion layer that applies self- and cross-attention mechanisms to enhance the interaction between text and image embeddings. Furthermore, we propose a Hierarchical Multi-Scale Semantic Alignment (HMSA) module that aligns multiscale visual features with the text semantic vector, enabling precise multimodal matching across large-scale RS imagery. To support the XeMap task, we provide a novel annotated dataset, XeMap-set, specifically tailored for this task, overcoming the lack of XeMap datasets in RS imagery. XeMap-Network is evaluated in a zero-shot setting against state-of-the-art methods, demonstrating superior performance. This highlights its effectiveness in accurately mapping referring regions and providing valuable insights for interpreting large-scale RS environments.

A Brain-inspired Memory Transformation based Differentiable Neural Computer for Reasoning-based Question Answering

Jan 07, 2023

Abstract: Reasoning and question answering, as basic cognitive functions for humans, nevertheless remain a great challenge for current artificial intelligence. Although the Differentiable Neural Computer (DNC) model can solve such problems to a certain extent, its development is still limited by high algorithmic complexity, slow convergence, and poor test robustness. Inspired by the learning and memory mechanisms of the brain, this paper proposes a Memory Transformation based Differentiable Neural Computer (MT-DNC) model. MT-DNC incorporates working memory and long-term memory into DNC, and realizes the autonomous transformation of acquired experience between working memory and long-term memory, thereby helping to effectively extract acquired knowledge to improve reasoning ability. Experimental results on the bAbI question answering task demonstrate that our proposed method achieves superior performance and faster convergence compared to other existing DNN and DNC models. Ablation studies also indicate that the memory transformation from working memory to long-term memory plays an essential role in improving the robustness and stability of reasoning. This work explores how brain-inspired memory transformation can be integrated and applied to complex intelligent dialogue and reasoning systems.

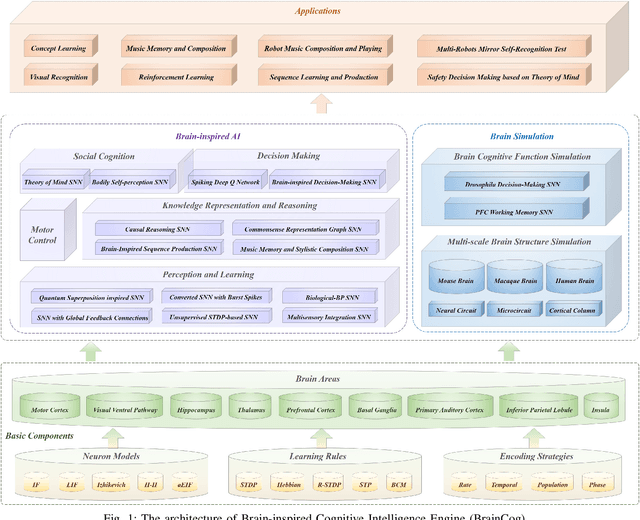

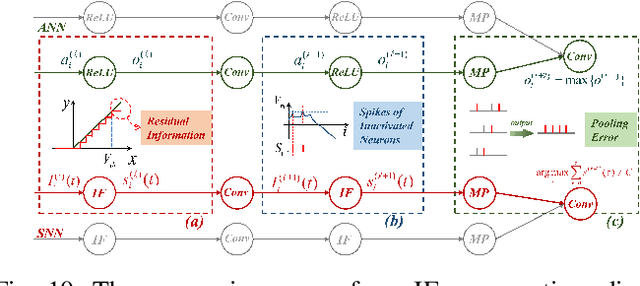

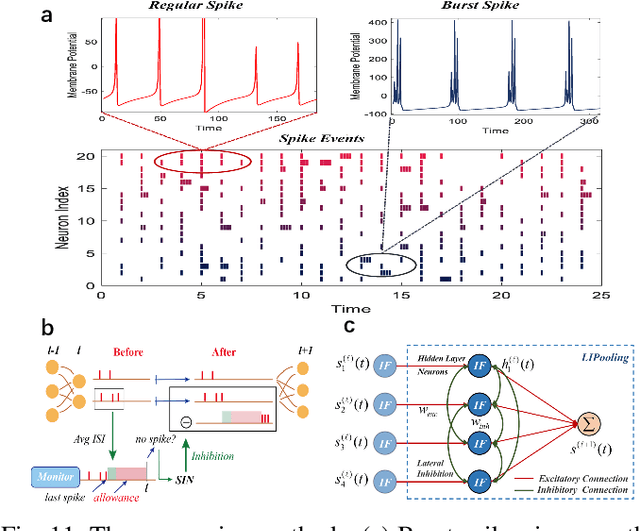

BrainCog: A Spiking Neural Network based Brain-inspired Cognitive Intelligence Engine for Brain-inspired AI and Brain Simulation

Jul 18, 2022

Abstract: Spiking neural networks (SNNs) have attracted extensive attention in Brain-inspired Artificial Intelligence and computational neuroscience. They can be used to simulate biological information processing in the brain at multiple scales. More importantly, SNNs serve as an appropriate level of abstraction to bring inspiration from brain and cognition to Artificial Intelligence. In this paper, we present the Brain-inspired Cognitive Intelligence Engine (BrainCog) for creating brain-inspired AI and brain simulation models. BrainCog incorporates different types of spiking neuron models, learning rules, brain areas, etc., as essential modules provided by the platform. Based on these easy-to-use modules, BrainCog supports various brain-inspired cognitive functions, including Perception and Learning, Decision Making, Knowledge Representation and Reasoning, Motor Control, and Social Cognition. These brain-inspired AI models have been effectively validated on various supervised, unsupervised, and reinforcement learning tasks, and they can be used to equip AI models with multiple brain-inspired cognitive functions. For brain simulation, BrainCog realizes the function simulation of decision-making and working memory, the structure simulation of the Neural Circuit, and whole-brain structure simulation of the Mouse brain, Macaque brain, and Human brain. An AI engine named BORN is developed based on BrainCog, and it demonstrates how the components of BrainCog can be integrated and used to build AI models and applications. To enable the scientific quest to decode the nature of biological intelligence and create AI, BrainCog aims to provide essential and easy-to-use building blocks and infrastructural support to develop brain-inspired spiking neural network based AI, and to simulate the cognitive brains at multiple scales. The online repository of BrainCog can be found at https://github.com/braincog-x.

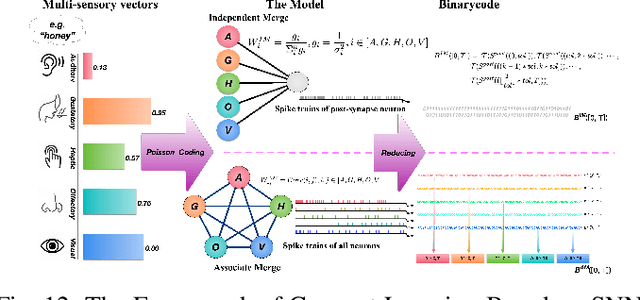

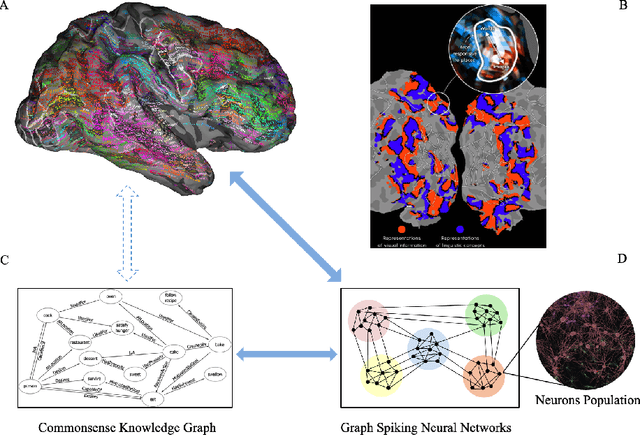

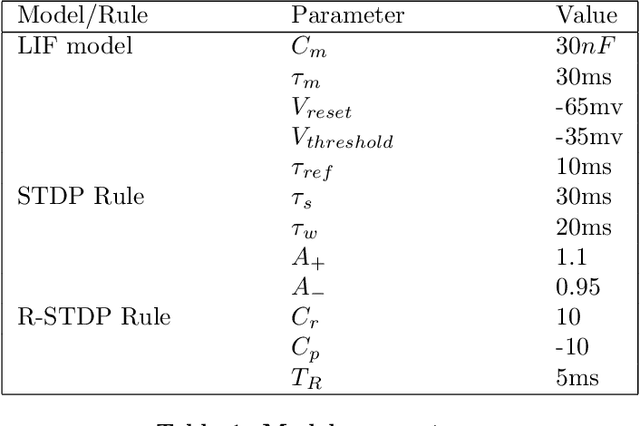

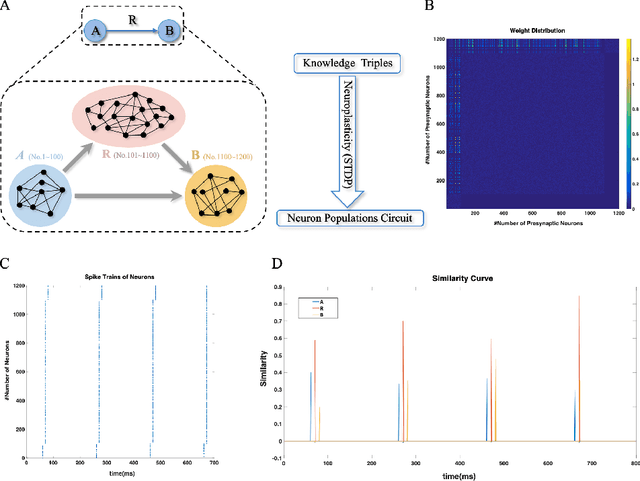

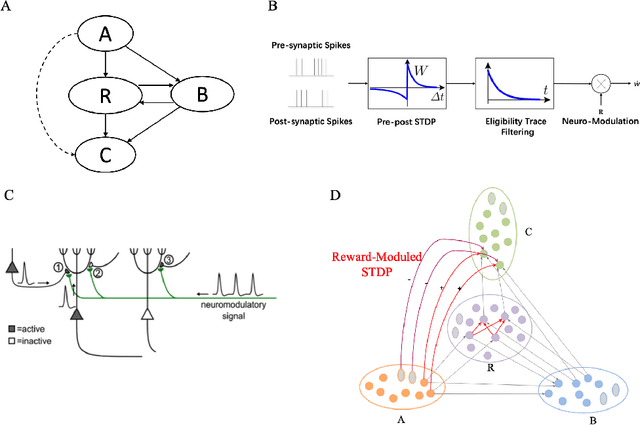

Brain-inspired Graph Spiking Neural Networks for Commonsense Knowledge Representation and Reasoning

Jul 11, 2022

Abstract: How neural networks in the human brain represent commonsense knowledge and complete related reasoning tasks is an important research topic in neuroscience, cognitive science, psychology, and artificial intelligence. Although the traditional artificial neural network using fixed-length vectors to represent symbols has achieved good performance in some specific tasks, it is still a black box that lacks interpretability, far from how humans perceive the world. Inspired by the grandmother-cell hypothesis in neuroscience, this work investigates how population encoding and spiking timing-dependent plasticity (STDP) mechanisms can be integrated into the learning of spiking neural networks, and how a population of neurons can represent a symbol via guiding the completion of sequential firing between different neuron populations. The neuron populations of different communities together constitute the entire commonsense knowledge graph, forming a giant graph spiking neural network. Moreover, we introduce the Reward-modulated spiking timing-dependent plasticity (R-STDP) mechanism to simulate the biological reinforcement learning process and complete the related reasoning tasks accordingly, achieving accuracy comparable to, and convergence faster than, graph convolutional artificial neural networks. For the fields of neuroscience and cognitive science, this work provides a foundation of computational modeling for further exploration of the way the human brain represents commonsense knowledge. For the field of artificial intelligence, this paper indicates a direction for realizing more robust and interpretable neural networks by constructing commonsense knowledge representation and reasoning spiking neural networks with solid biological plausibility.
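
For readers unfamiliar with STDP, the sketch below shows the common textbook pair-based form of the rule, plus a simple reward-modulated variant. It is only meant to make the mechanism concrete. The amplitudes, time constants, learning rate, and function names are illustrative assumptions and are not taken from the paper.

```python
import math

def stdp_delta(dt, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for dt = t_post - t_pre (in ms).

    Pre-before-post (dt > 0) potentiates, post-before-pre (dt < 0) depresses,
    and the effect decays exponentially with the spike-time gap.
    """
    if dt > 0:
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0

def r_stdp_update(weight, dt, reward, lr=1.0):
    """Reward-modulated update: the STDP term is scaled by a scalar reward.

    Assumption: the reward multiplies the STDP change directly, with no
    eligibility trace.
    """
    return weight + lr * reward * stdp_delta(dt)
```

With a zero reward the weight stays unchanged, and a negative reward reverses the sign of the plain STDP change, which is how a reward signal can steer otherwise unsupervised plasticity.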
