Helin Wang

DPM-TSE: A Diffusion Probabilistic Model for Target Sound Extraction

Oct 10, 2023

Abstract: Common target sound extraction (TSE) approaches have primarily relied on discriminative methods to separate the target sound while minimizing interference from unwanted sources, with varying success in separating the target from the background. This study introduces DPM-TSE, a first generative method based on diffusion probabilistic modeling (DPM) for target sound extraction, to achieve both cleaner target renderings and improved separability from unwanted sounds. The technique also tackles common background noise issues with DPM by introducing a correction method for noise schedules and sample steps. The approach is evaluated using both objective and subjective quality metrics on the FSD Kaggle 2018 dataset. The results show that DPM-TSE significantly improves perceived quality in terms of target extraction and purity.

DuTa-VC: A Duration-aware Typical-to-atypical Voice Conversion Approach with Diffusion Probabilistic Model

Jun 18, 2023

Abstract: We present a novel typical-to-atypical voice conversion approach (DuTa-VC), which (i) can be trained with nonparallel data, (ii) is the first to introduce a diffusion probabilistic model, (iii) preserves the target speaker identity, and (iv) is aware of the phoneme duration of the target speaker. DuTa-VC consists of three parts: an encoder transforms the source mel-spectrogram into a duration-modified speaker-independent mel-spectrogram, a decoder performs the reverse diffusion to generate the target mel-spectrogram, and a vocoder reconstructs the waveform. Objective evaluations conducted on the UASpeech dataset show that DuTa-VC is able to capture severity characteristics of dysarthric speech, preserves speaker identity, and significantly improves dysarthric speech recognition when used for data augmentation. Subjective evaluations by two expert speech pathologists validate that DuTa-VC can preserve the severity and type of dysarthria of the target speakers in the synthesized speech.

Benchmarking Large Language Models on CMExam -- A Comprehensive Chinese Medical Exam Dataset

Jun 08, 2023

Abstract: Recent advancements in large language models (LLMs) have transformed the field of question answering (QA). However, evaluating LLMs in the medical field is challenging due to the lack of standardized and comprehensive datasets. To address this gap, we introduce CMExam, sourced from the Chinese National Medical Licensing Examination. CMExam consists of 60K+ multiple-choice questions for standardized and objective evaluations, as well as solution explanations for model reasoning evaluation in an open-ended manner. For in-depth analyses of LLMs, we invited medical professionals to label five additional question-wise annotations, including disease groups, clinical departments, medical disciplines, areas of competency, and question difficulty levels. Alongside the dataset, we further conducted thorough experiments with representative LLMs and QA algorithms on CMExam. The results show that GPT-4 had the best accuracy of 61.6% and a weighted F1 score of 0.617. These results highlight a great disparity when compared to human accuracy, which stood at 71.6%. For explanation tasks, while LLMs could generate relevant reasoning and demonstrate improved performance after finetuning, they fall short of a desired standard, indicating ample room for improvement. To the best of our knowledge, CMExam is the first Chinese medical exam dataset to provide comprehensive medical annotations. The experiments and findings of LLM evaluation also provide valuable insights into the challenges and potential solutions in developing Chinese medical QA systems and LLM evaluation pipelines. The dataset and relevant code are available at https://github.com/williamliujl/CMExam.

NoreSpeech: Knowledge Distillation based Conditional Diffusion Model for Noise-robust Expressive TTS

Nov 04, 2022

Abstract: Expressive text-to-speech (TTS) can synthesize a new speaking style by imitating prosody and timbre from a reference audio, which faces the following challenges: (1) the highly dynamic prosody information in the reference audio is difficult to extract, especially when the reference audio contains background noise; (2) TTS systems should generalize well to unseen speaking styles. In this paper, we present a noise-robust expressive TTS model (NoreSpeech), which can robustly transfer speaking style in a noisy reference utterance to synthesized speech. Specifically, NoreSpeech includes several components: (1) a novel DiffStyle module, which leverages powerful probabilistic denoising diffusion models to learn noise-agnostic speaking style features from a teacher model by knowledge distillation; (2) a VQ-VAE block, which maps the style features into a controllable quantized latent space to improve the generalization of style transfer; and (3) a straightforward but effective parameter-free text-style alignment module, which enables NoreSpeech to transfer style to a textual input from a length-mismatched reference utterance. Experiments demonstrate that NoreSpeech is more effective than previous expressive TTS models in noisy environments. Audio samples and code are available at: http://dongchaoyang.top/NoreSpeech_demo/

Diffsound: Discrete Diffusion Model for Text-to-sound Generation

Jul 20, 2022

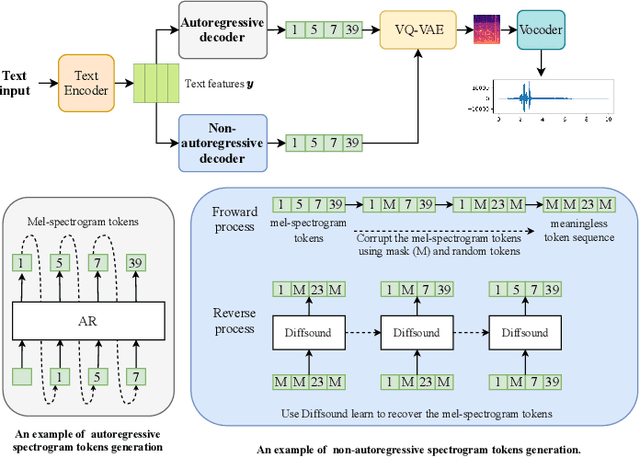

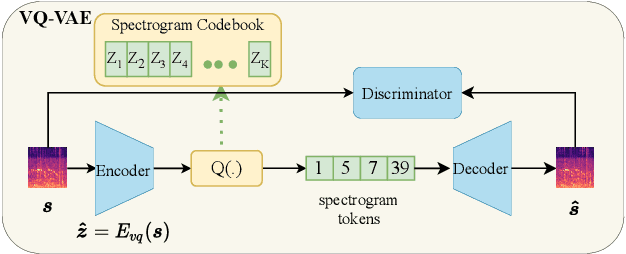

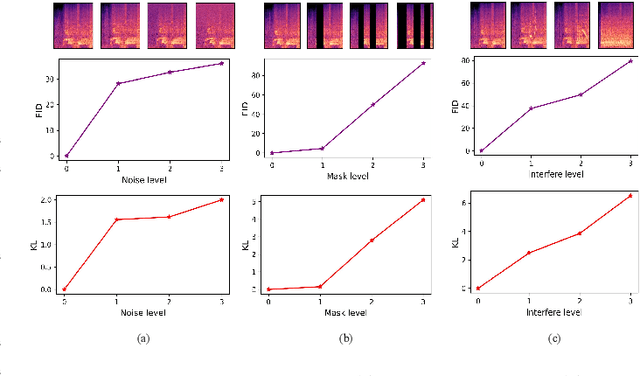

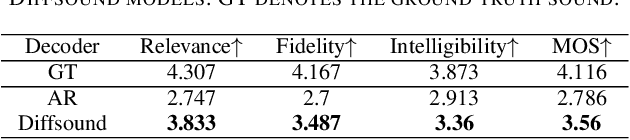

Abstract: Generating sound effects that humans want is an important topic. However, there are few studies in this area for sound generation. In this study, we investigate generating sound conditioned on a text prompt and propose a novel text-to-sound generation framework that consists of a text encoder, a Vector Quantized Variational Autoencoder (VQ-VAE), a decoder, and a vocoder. The framework first uses the decoder to transfer the text features extracted from the text encoder to a mel-spectrogram with the help of VQ-VAE, and then the vocoder is used to transform the generated mel-spectrogram into a waveform. We found that the decoder significantly influences the generation performance. Thus, we focus on designing a good decoder in this study. We begin with the traditional autoregressive (AR) decoder, which has proven to be a state-of-the-art method in previous sound generation works. However, the AR decoder always predicts the mel-spectrogram tokens one by one in order, which introduces unidirectional bias and error accumulation. Moreover, with the AR decoder, the sound generation time increases linearly with the sound duration. To overcome the shortcomings introduced by AR decoders, we propose a non-autoregressive decoder based on the discrete diffusion model, named Diffsound. Specifically, Diffsound predicts all of the mel-spectrogram tokens in one step and then refines the predicted tokens in the next step, so the best-predicted results can be obtained after several steps. Our experiments show that our proposed Diffsound not only produces better text-to-sound generation results than the AR decoder (e.g., MOS: 3.56 vs. 2.786) but also generates five times faster than the AR decoder.

Calibrate and Refine! A Novel and Agile Framework for ASR-error Robust Intent Detection

May 23, 2022

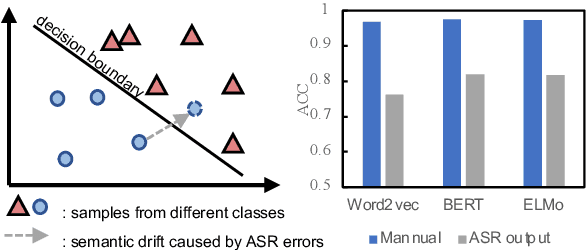

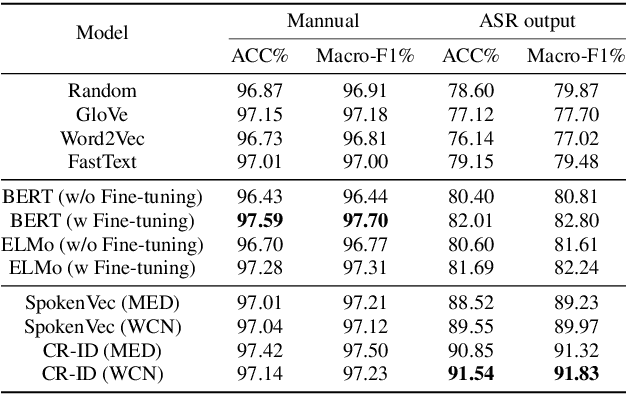

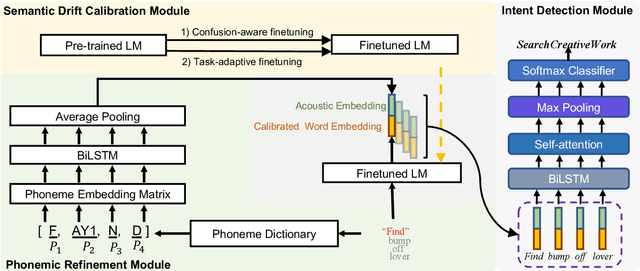

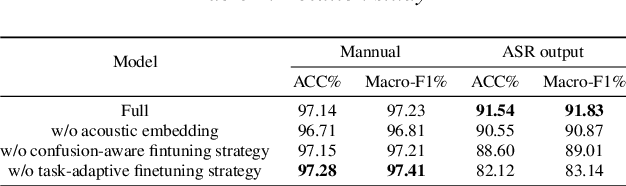

Abstract: The past ten years have witnessed the rapid development of text-based intent detection, whose benchmark performance has already been taken to a remarkable level by deep learning techniques. However, automatic speech recognition (ASR) errors are inevitable in real-world applications due to environmental noise, unique speech patterns, etc., leading to a sharp performance drop in state-of-the-art text-based intent detection models. Essentially, this phenomenon is caused by the semantic drift brought by ASR errors, and most existing works tend to focus on designing new model structures to reduce its impact, which comes at the expense of versatility and flexibility. Unlike previous one-piece models, in this paper we propose a novel and agile framework called CR-ID for ASR-error robust intent detection with two plug-and-play modules, namely a semantic drift calibration module (SDCM) and a phonemic refinement module (PRM), which are both model-agnostic and thus can be easily integrated into any existing intent detection model without modifying its structure. Experimental results on the SNIPS dataset show that our proposed CR-ID framework achieves competitive performance and outperforms all the baseline methods on ASR outputs, which verifies that CR-ID can effectively alleviate the semantic drift caused by ASR errors.

Masked Spectrogram Prediction For Self-Supervised Audio Pre-Training

Apr 27, 2022

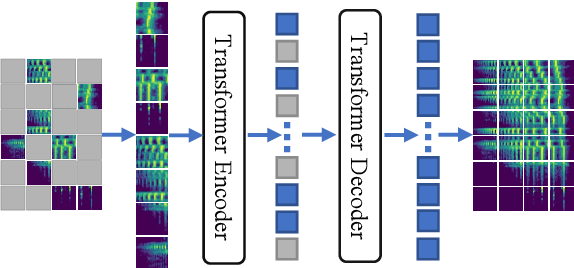

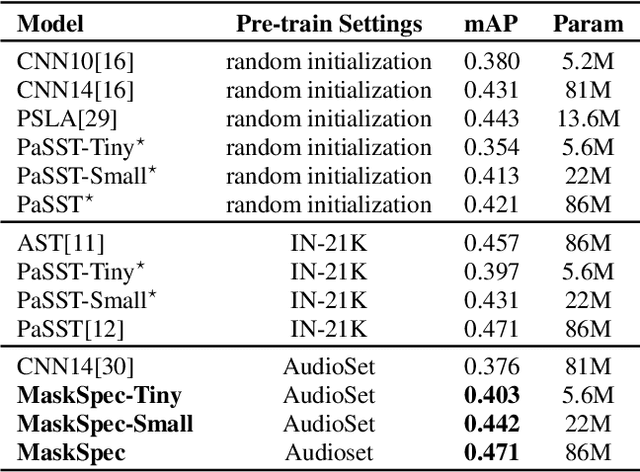

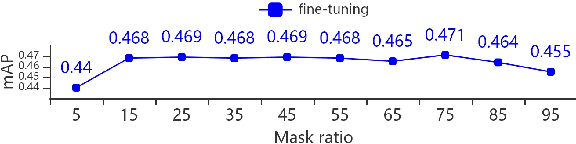

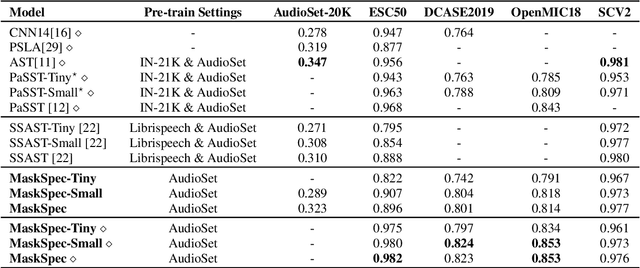

Abstract: Transformer-based models attain excellent results and generalize well when trained on sufficient amounts of data. However, constrained by the limited data available in the audio domain, most transformer-based models for audio tasks are finetuned from pre-trained models in other domains (e.g., image), which has a notable gap with the audio domain. Other methods explore the self-supervised learning approaches directly in the audio domain but currently do not perform well in the downstream tasks. In this paper, we present a novel self-supervised learning method for transformer-based audio models, called masked spectrogram prediction (MaskSpec), to learn powerful audio representations from unlabeled audio data (AudioSet used in this paper). Our method masks random patches of the input spectrogram and reconstructs the masked regions with an encoder-decoder architecture. Without using extra model weights or supervision, experimental results on multiple downstream datasets demonstrate MaskSpec achieves a significant performance gain against the supervised methods and outperforms the previous pre-trained models. In particular, our best model reaches the performance of 0.471 (mAP) on AudioSet, 0.854 (mAP) on OpenMIC2018, 0.982 (accuracy) on ESC-50, 0.976 (accuracy) on SCV2, and 0.823 (accuracy) on DCASE2019 Task1A respectively.
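The random patch masking described in this abstract can be illustrated with a minimal sketch. The function names, the patch-grid size, and the 0.75 mask ratio are assumptions chosen for illustration; the abstract does not state them, and this is not the authors' implementation.

```python
import random

def random_patch_mask(n_time_patches, n_freq_patches, mask_ratio, seed=None):
    """Return a 2D grid of booleans; True marks a spectrogram patch to mask.

    Hypothetical helper: the grid shape and mask_ratio are illustrative
    assumptions, not values taken from the abstract.
    """
    rng = random.Random(seed)
    total = n_time_patches * n_freq_patches
    n_masked = int(round(total * mask_ratio))
    # Sample patch indices without replacement so exactly n_masked are hidden.
    masked_ids = set(rng.sample(range(total), n_masked))
    return [
        [(t * n_freq_patches + f) in masked_ids for f in range(n_freq_patches)]
        for t in range(n_time_patches)
    ]

def split_patches(mask):
    """Split patch coordinates into visible (encoder input) and masked (targets)."""
    visible, masked = [], []
    for t, row in enumerate(mask):
        for f, is_masked in enumerate(row):
            (masked if is_masked else visible).append((t, f))
    return visible, masked

mask = random_patch_mask(8, 4, mask_ratio=0.75, seed=0)
visible, masked = split_patches(mask)
```

In an encoder-decoder setup of this kind, the encoder would see only the `visible` patches and the reconstruction loss would be computed on the `masked` ones.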

RaDur: A Reference-aware and Duration-robust Network for Target Sound Detection

Apr 05, 2022

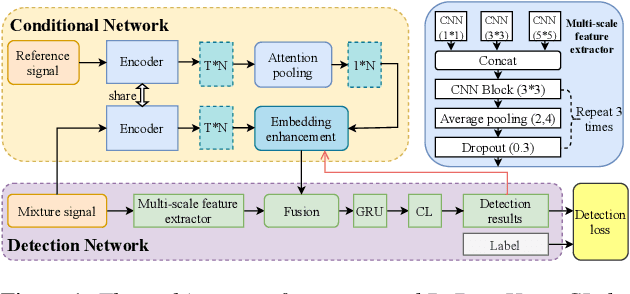

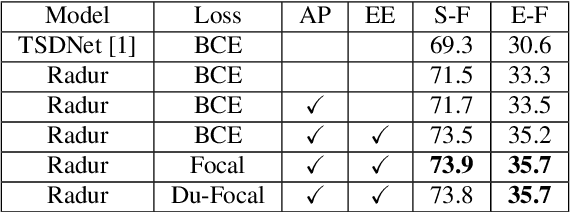

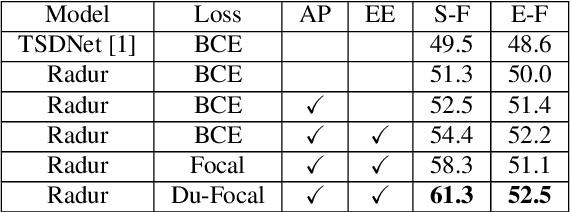

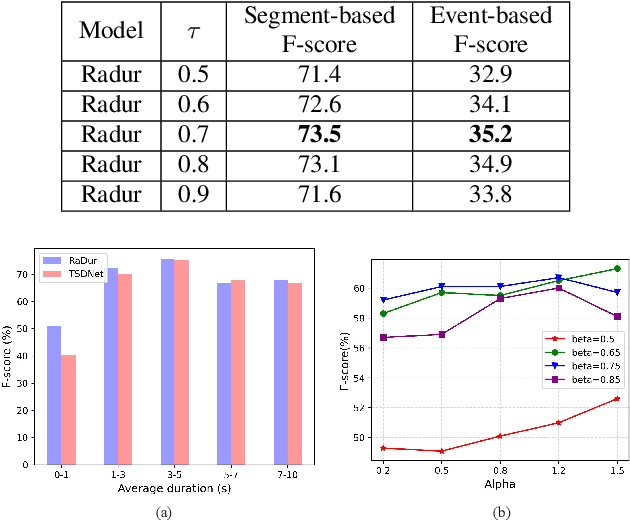

Abstract: Target sound detection (TSD) aims to detect the target sound from a mixture audio given the reference information. Previous methods use a conditional network to extract a sound-discriminative embedding from the reference audio, and then use it to detect the target sound from the mixture audio. However, the network performs very differently when using different reference audios (e.g., it performs poorly for noisy and short-duration reference audios), and tends to make wrong decisions for transient events (i.e., shorter than 1 second). To overcome these problems, in this paper, we present a reference-aware and duration-robust network (RaDur) for TSD. More specifically, in order to make the network more aware of the reference information, we propose an embedding enhancement module that takes the mixture audio into account while generating the embedding, and apply attention pooling to enhance the features of target sound-related frames and weaken the features of noisy frames. In addition, a duration-robust focal loss is proposed to help model events of different durations. To evaluate our method, we build two TSD datasets based on UrbanSound and AudioSet. Extensive experiments show the effectiveness of our methods.
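The abstract does not give the form of the duration-robust focal loss. As background only, the sketch below shows the plain binary focal loss applied to frame-level predictions, not the paper's duration-robust variant; the function name and the default `gamma` are illustrative assumptions.

```python
import math

def binary_focal_loss(probs, labels, gamma=2.0, eps=1e-7):
    """Mean binary focal loss over frames.

    probs:  predicted probability that the target sound is active per frame.
    labels: 1 if the target sound is active in the frame, else 0.
    This is the standard focal loss, shown as background; it is NOT the
    duration-robust variant proposed in the paper.
    """
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)        # clamp for numerical safety
        p_t = p if y == 1 else 1.0 - p          # probability of the true class
        # (1 - p_t)^gamma down-weights frames that are already well classified.
        total += -((1.0 - p_t) ** gamma) * math.log(p_t)
    return total / len(probs)
```

With `gamma=0` the expression reduces to ordinary binary cross-entropy; larger `gamma` shifts the emphasis toward hard frames.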

A Two-student Learning Framework for Mixed Supervised Target Sound Detection

Apr 05, 2022

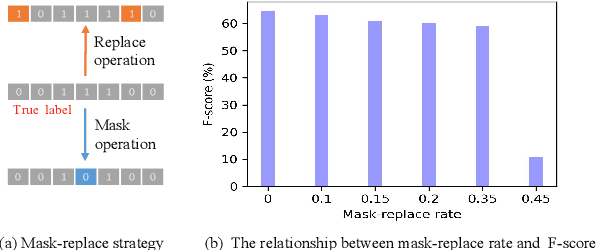

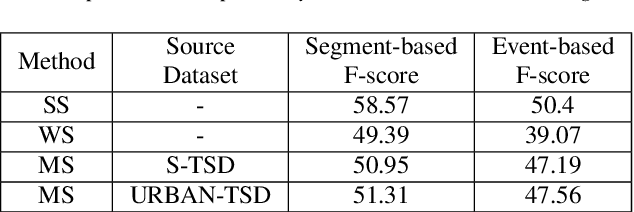

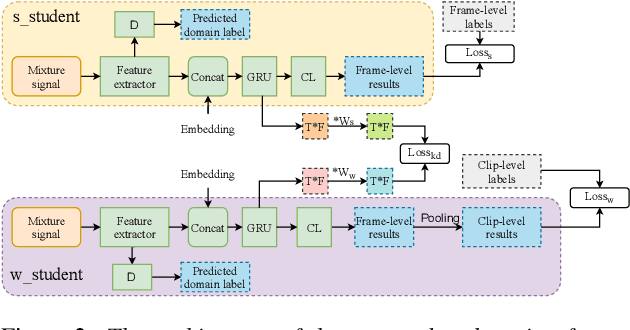

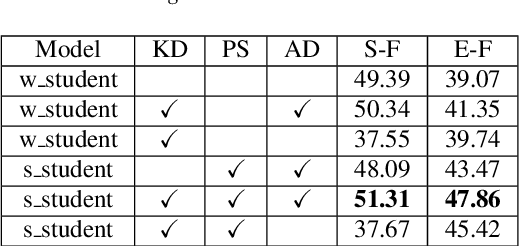

Abstract: Target sound detection (TSD) aims to detect the target sound from mixture audio given the reference information. Previous work shows that good detection performance relies on fully-annotated data. However, collecting fully-annotated data is labor-intensive. Therefore, we consider TSD with mixed supervision, which learns novel categories (target domain) using weak annotations with the help of full annotations of existing base categories (source domain). We propose a novel two-student learning framework, which contains two mutually helping student models ($\mathit{s\_student}$ and $\mathit{w\_student}$) that learn from fully- and weakly-annotated datasets, respectively. Specifically, we first propose a frame-level knowledge distillation strategy to transfer the class-agnostic knowledge from $\mathit{s\_student}$ to $\mathit{w\_student}$. After that, a pseudo supervised (PS) training is designed to transfer the knowledge from $\mathit{w\_student}$ to $\mathit{s\_student}$. Lastly, an adversarial training strategy is proposed, which aims to align the data distribution between source and target domains. To evaluate our method, we build three TSD datasets based on UrbanSound and AudioSet. Experimental results show that our methods offer about 8% improvement in event-based F score.

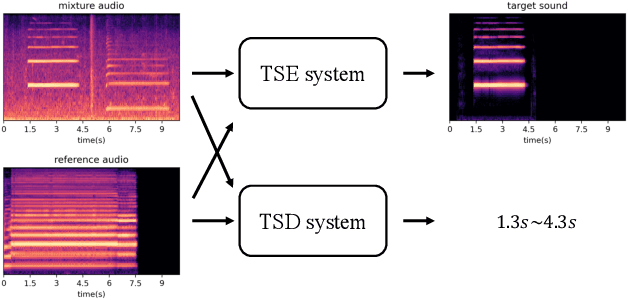

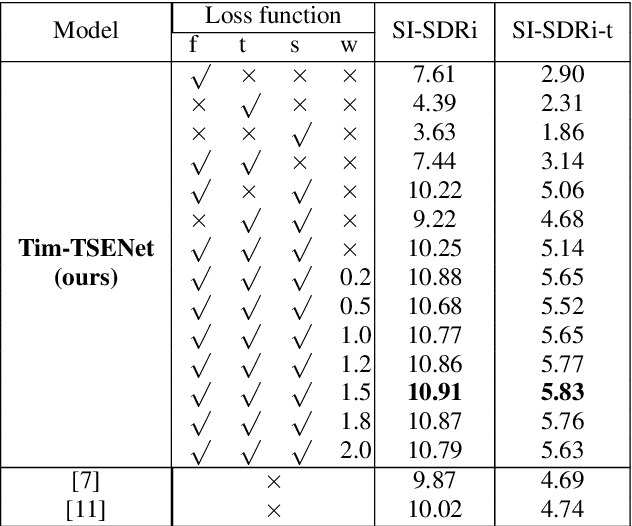

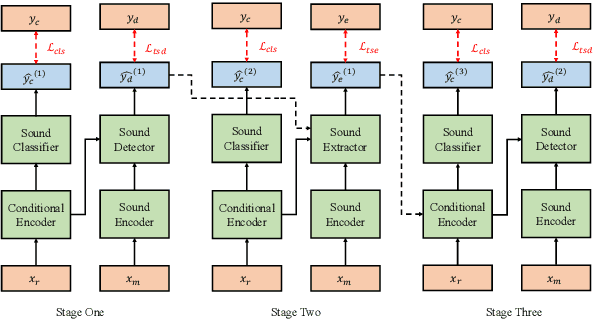

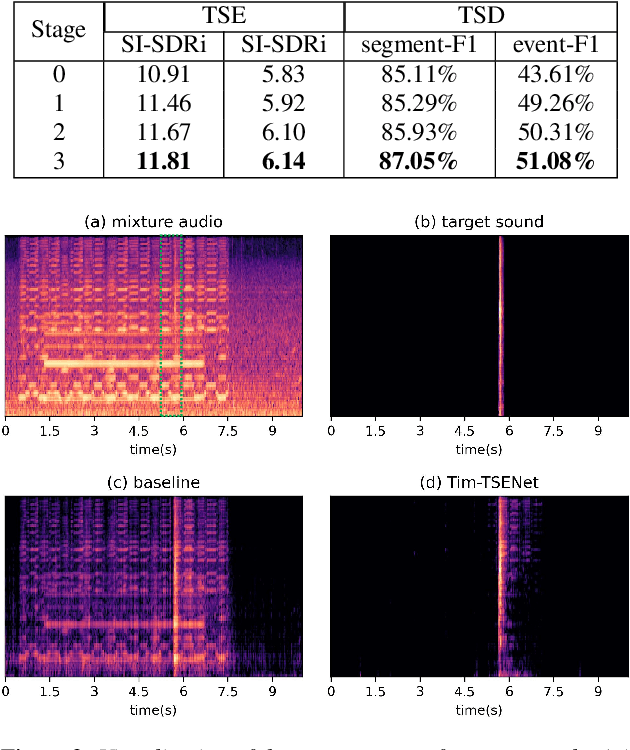

Improving Target Sound Extraction with Timestamp Information

Apr 02, 2022

Abstract: Target sound extraction (TSE) aims to extract the sound part of a target sound event class from a mixture audio with multiple sound events. Previous works mainly focus on the problems of weakly-labelled data, joint learning, and new classes; however, none has considered the onset and offset times of the target sound event, which have been emphasized in auditory scene analysis. In this paper, we study how to utilize such timestamp information to help extract the target sound via a target sound detection network and a target-weighted time-frequency loss function. More specifically, we use the detection result of a target sound detection (TSD) network as additional information to guide the learning of the target sound extraction network. We also find that the result of TSE can further improve the performance of the TSD network, so a mutual learning framework for target sound detection and extraction is proposed. In addition, a target-weighted time-frequency loss function is designed to pay more attention to the temporal regions of the target sound during training. Experimental results on synthesized data generated from the Freesound Datasets show that our proposed method can significantly improve the performance of TSE.
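One way to read the idea of a target-weighted time-frequency loss is a reconstruction error in which frames between the target onset and offset count more than the rest. The sketch below illustrates that reading only; the function name, the use of absolute error, the weight normalization, and the weight value 2.0 are assumptions not specified in the abstract.

```python
def target_weighted_tf_loss(pred, target, onset, offset, target_weight=2.0):
    """Weighted mean absolute error over a T x F spectrogram.

    pred, target: lists of T frames, each a list of F frequency bins.
    Frames with index in [onset, offset) are treated as the target region
    and weighted by target_weight; all other frames get weight 1.0.
    Hypothetical formulation for illustration, not the paper's exact loss.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for t, (p_row, y_row) in enumerate(zip(pred, target)):
        w = target_weight if onset <= t < offset else 1.0
        for p, y in zip(p_row, y_row):
            weighted_sum += w * abs(p - y)
            weight_total += w
    return weighted_sum / weight_total

# Toy example: 3 frames x 2 bins, with an error only inside the target frame.
pred = [[0.0, 0.0], [1.0, 1.0], [0.0, 0.0]]
clean = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
weighted = target_weighted_tf_loss(pred, clean, onset=1, offset=2)
uniform = target_weighted_tf_loss(pred, clean, onset=1, offset=2, target_weight=1.0)
```

In the toy example the same error yields a larger loss when it falls inside the target region (`weighted`) than under uniform weighting (`uniform`), which is the intended emphasis.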
