Predict-and-Update Network: Audio-Visual Speech Recognition Inspired by Human Speech Perception

Sep 05, 2022 Jiadong Wang, Xinyuan Qian, Haizhou Li

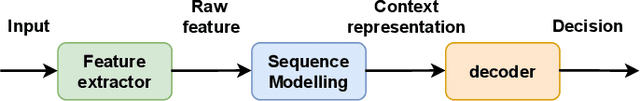

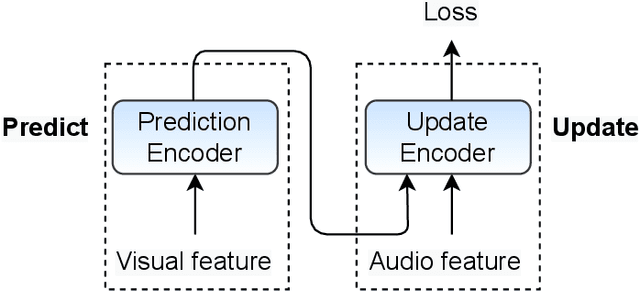

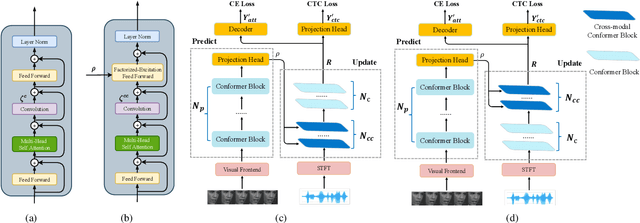

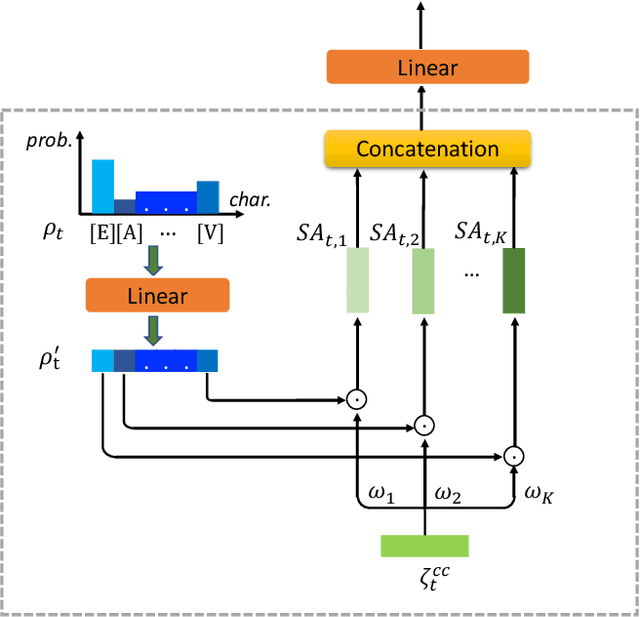

Audio and visual signals complement each other in human speech perception, and they do so in speech recognition as well. As far as speech perception is concerned, the visual hint is less evident than the acoustic hint but more robust in a complex acoustic environment. How to effectively exploit the interaction between audio and visual signals for automatic speech recognition remains a challenge. Previous studies have exploited visual signals as redundant or complementary information to the audio input in a synchronous manner. Human studies suggest that the visual signal primes the listener in advance as to when and on which frequency to attend. We propose a Predict-and-Update Network (P&U net) to simulate such a visual cueing mechanism for Audio-Visual Speech Recognition (AVSR). In particular, we first predict the character posteriors of the spoken words, i.e. the visual embedding, based on the visual signals. The audio signal is then conditioned on the visual embedding via a novel cross-modal Conformer that updates the character posteriors. We validate the effectiveness of the visual cueing mechanism through extensive experiments. The proposed P&U net outperforms the state-of-the-art AVSR methods on both LRS2-BBC and LRS3-BBC datasets, with relative Word Error Rate (WER) reductions exceeding 10% and 40% under clean and noisy conditions, respectively.
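For readers unfamiliar with the metric, the following is a minimal sketch of how WER and a relative WER reduction are commonly computed. The function names and the example sentences are illustrative assumptions and do not come from the paper; the numbers are not the paper's results.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the processed reference
    # prefix and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


def relative_wer_reduction(baseline_wer: float, new_wer: float) -> float:
    """Fraction of the baseline WER removed by the new system."""
    return (baseline_wer - new_wer) / baseline_wer


# Hypothetical transcripts, chosen only to exercise the functions.
wer = word_error_rate("the cat sat on the mat", "the cat sat on mat")
reduction = relative_wer_reduction(baseline_wer=5.0, new_wer=3.0)
print(f"WER = {wer:.3f}, relative reduction = {reduction:.0%}")
```

Under this definition, a relative reduction above 40% means the new system removes more than two fifths of the baseline's word errors, regardless of the absolute WER level.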

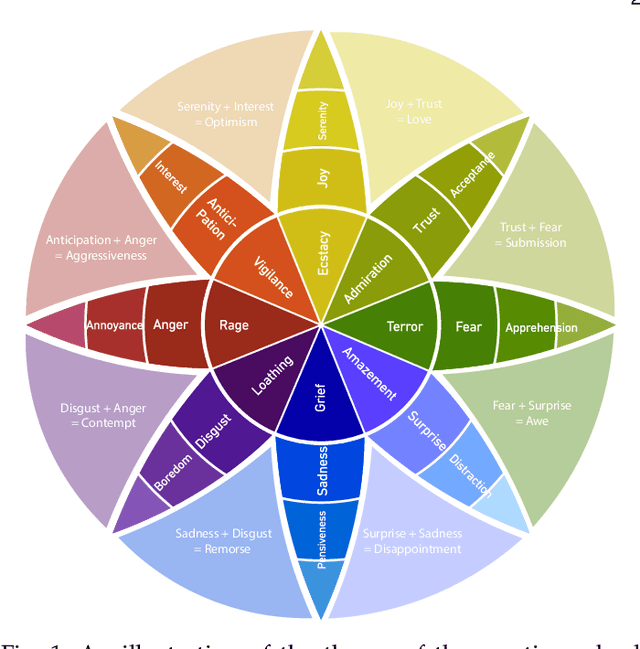

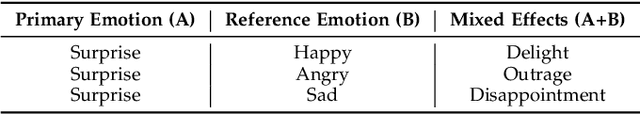

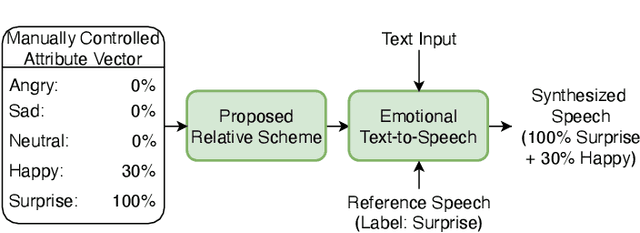

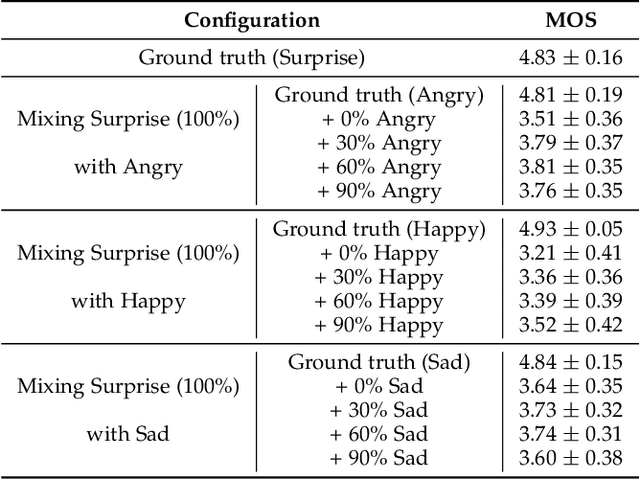

Speech Synthesis with Mixed Emotions

Aug 11, 2022 Kun Zhou, Berrak Sisman, Rajib Rana, B. W. Schuller, Haizhou Li

Emotional speech synthesis aims to synthesize human voices with various emotional effects. Current studies mostly focus on imitating an averaged style belonging to a specific emotion type. In this paper, we seek to generate speech with a mixture of emotions at run-time. We propose a novel formulation that measures the relative difference between the speech samples of different emotions. We then incorporate our formulation into a sequence-to-sequence emotional text-to-speech framework. During training, the framework not only explicitly characterizes emotion styles, but also explores the ordinal nature of emotions by quantifying the differences with other emotions. At run-time, we control the model to produce the desired emotion mixture by manually defining an emotion attribute vector. The objective and subjective evaluations have validated the effectiveness of the proposed framework. To the best of our knowledge, this research is the first study on modelling, synthesizing and evaluating mixed emotions in speech.

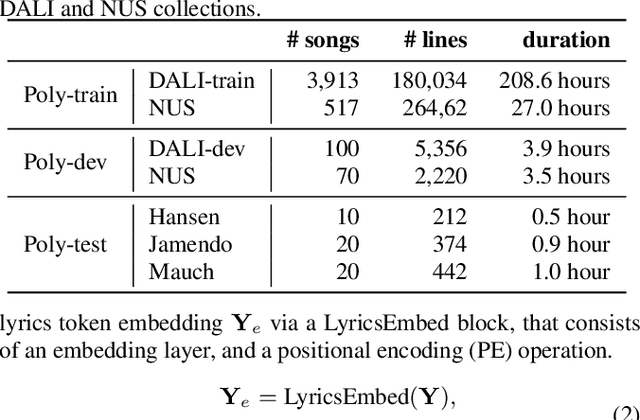

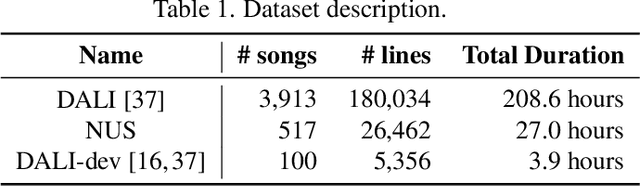

PoLyScribers: Joint Training of Vocal Extractor and Lyrics Transcriber for Polyphonic Music

Jul 15, 2022 Xiaoxue Gao, Chitralekha Gupta, Haizhou Li

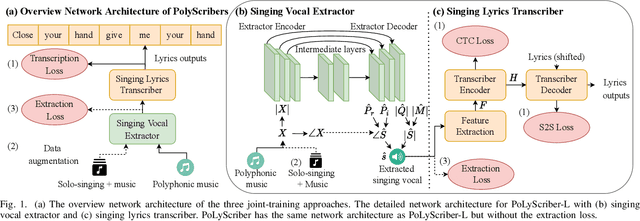

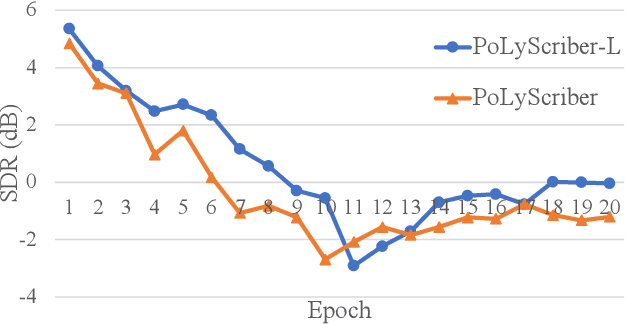

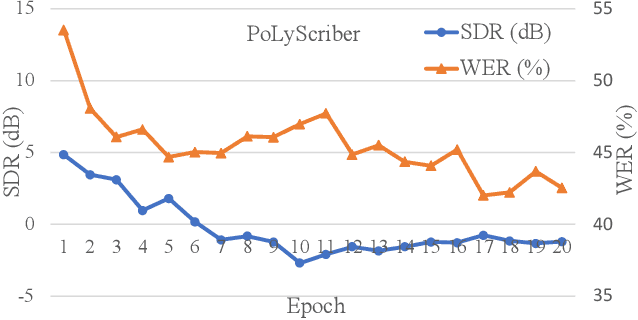

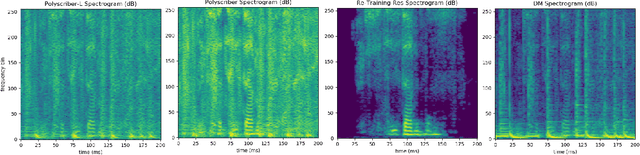

Lyrics transcription of polyphonic music is challenging as the background music affects lyrics intelligibility. Typically, lyrics transcription is performed by a two-step pipeline, i.e. a singing vocal extraction frontend followed by a lyrics transcriber backend, where the frontend and backend are trained separately. Such a two-step pipeline suffers from both imperfect vocal extraction and mismatch between frontend and backend. In this work, we propose a novel end-to-end joint-training framework, which we call PoLyScribers, to jointly optimize the vocal extractor frontend and lyrics transcriber backend for lyrics transcription in polyphonic music. The experimental results show that our proposed joint-training model achieves substantial improvements over the existing approaches on publicly available test datasets.

Accurate Emotion Strength Assessment for Seen and Unseen Speech Based on Data-Driven Deep Learning

Jun 15, 2022 Rui Liu, Berrak Sisman, Björn Schuller, Guanglai Gao, Haizhou Li

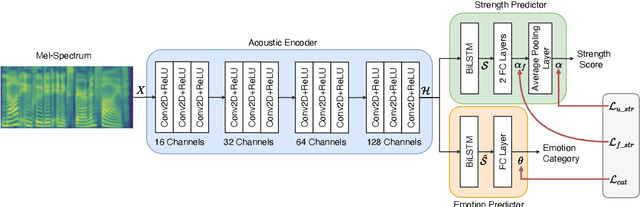

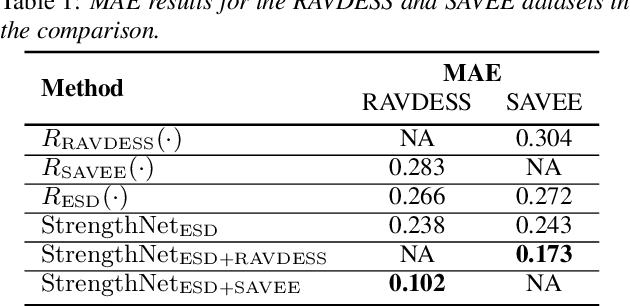

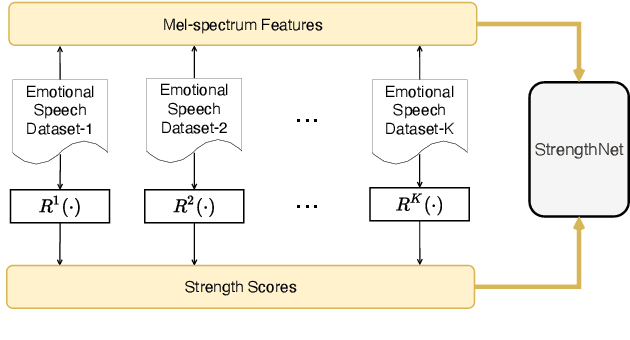

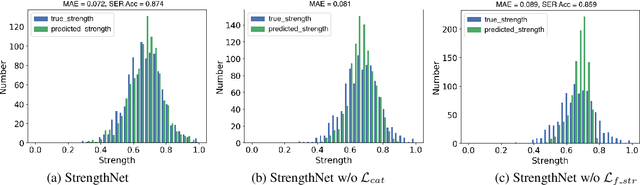

Emotion classification of speech and assessment of the emotion strength are required in applications such as emotional text-to-speech and voice conversion. An emotion attribute ranking function based on Support Vector Machine (SVM) was previously proposed to predict emotion strength for an emotional speech corpus. However, the trained ranking function does not generalize to new domains, which limits the scope of applications, especially for out-of-domain or unseen speech. In this paper, we propose a data-driven deep learning model, i.e. StrengthNet, to improve the generalization of emotion strength assessment for seen and unseen speech. This is achieved by the fusion of emotional data from various domains. We follow a multi-task learning network architecture that includes an acoustic encoder, a strength predictor, and an auxiliary emotion predictor. Experiments show that the predicted emotion strength of the proposed StrengthNet is highly correlated with ground truth scores for both seen and unseen speech. We release the source code at: https://github.com/ttslr/StrengthNet.

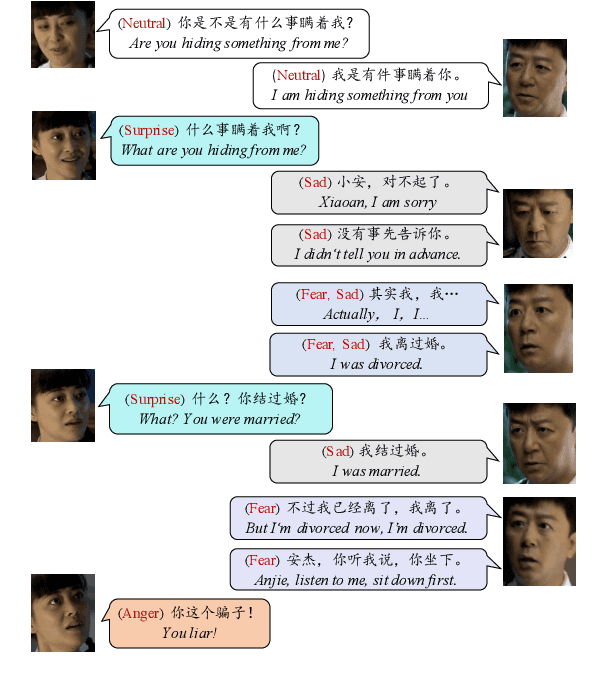

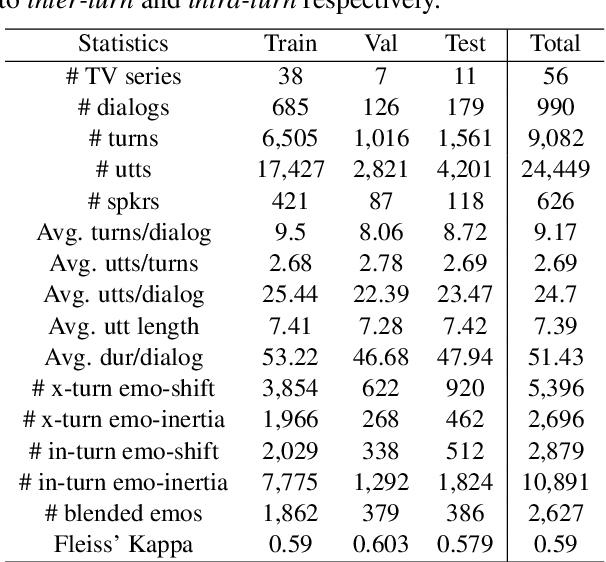

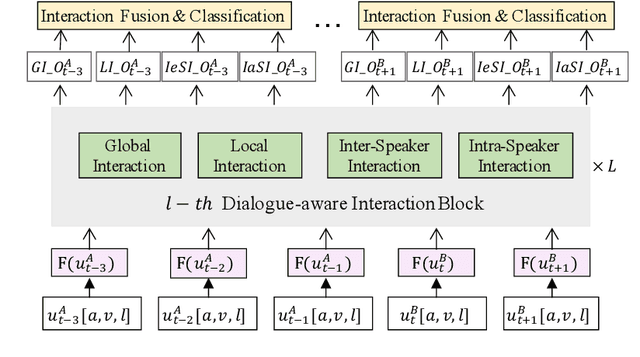

M3ED: Multi-modal Multi-scene Multi-label Emotional Dialogue Database

May 09, 2022 Jinming Zhao, Tenggan Zhang, Jingwen Hu, Yuchen Liu, Qin Jin, Xinchao Wang, Haizhou Li

The emotional state of a speaker can be influenced by many different factors in dialogues, such as dialogue scene, dialogue topic, and interlocutor stimulus. The currently available data resources to support such multimodal affective analysis in dialogues are, however, limited in scale and diversity. In this work, we propose a Multi-modal Multi-scene Multi-label Emotional Dialogue dataset, M3ED, which contains 990 dyadic emotional dialogues from 56 different TV series, a total of 9,082 turns and 24,449 utterances. M3ED is annotated with 7 emotion categories (happy, surprise, sad, disgust, anger, fear, and neutral) at utterance level, and encompasses acoustic, visual, and textual modalities. To the best of our knowledge, M3ED is the first multimodal emotional dialogue dataset in Chinese. It is valuable for cross-culture emotion analysis and recognition. We apply several state-of-the-art methods on the M3ED dataset to verify the validity and quality of the dataset. We also propose a general Multimodal Dialogue-aware Interaction framework, MDI, to model the dialogue context for emotion recognition, which achieves performance comparable to the state-of-the-art methods on M3ED. The full dataset and code are available.

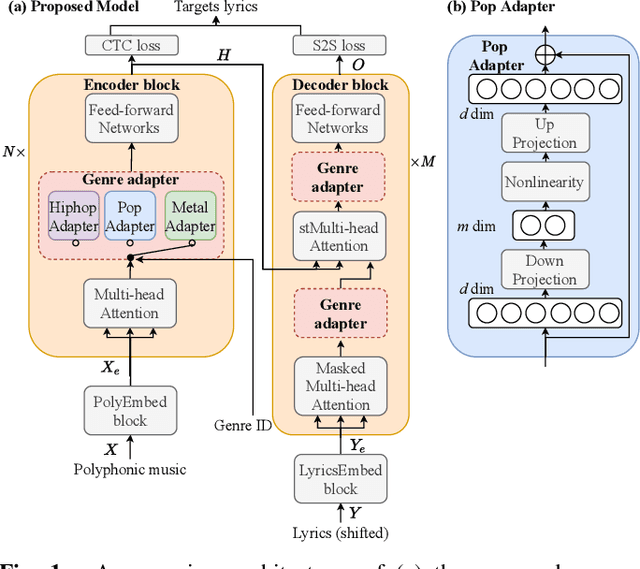

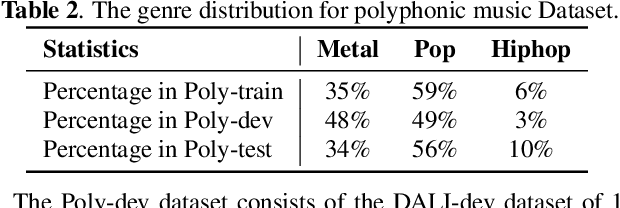

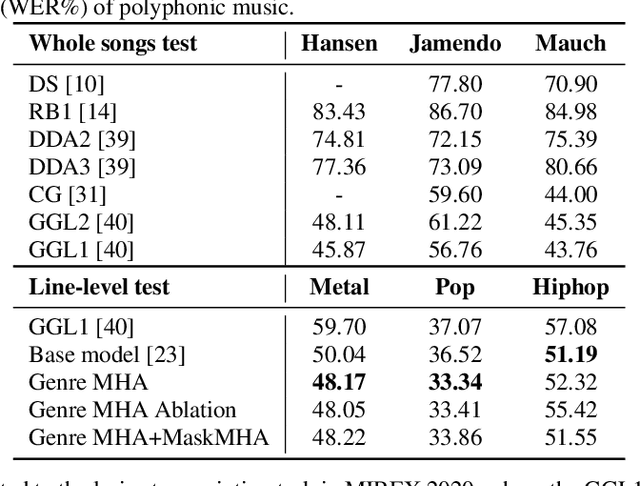

Genre-conditioned Acoustic Models for Automatic Lyrics Transcription of Polyphonic Music

Apr 07, 2022 Xiaoxue Gao, Chitralekha Gupta, Haizhou Li

Lyrics transcription of polyphonic music is challenging not only because the singing vocals are corrupted by the background music, but also because the background music and the singing style vary across music genres, such as pop, metal, and hip hop, which affects the lyrics intelligibility of a song in different ways. In this work, we propose to transcribe the lyrics of polyphonic music using a novel genre-conditioned network. The proposed network adopts pre-trained model parameters and incorporates genre adapters between layers to capture different genre peculiarities for lyrics-genre pairs, thereby requiring only lightweight genre-specific parameters for training. Our experiments show that the proposed genre-conditioned network outperforms the existing lyrics transcription systems.
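To make the idea of lightweight genre adapters concrete, here is a minimal NumPy sketch of one common adapter design: a residual bottleneck placed after a frozen layer, with one adapter per genre. The abstract does not specify the adapter architecture, so the class `GenreAdapter`, the function `adapted_layer`, the dimensions, and the zero initialization are all illustrative assumptions rather than the authors' implementation.

```python
import numpy as np


class GenreAdapter:
    """Residual bottleneck adapter: x + up(relu(down(x))). Assumed design."""

    def __init__(self, dim: int, bottleneck: int, rng: np.random.Generator):
        self.w_down = rng.normal(0.0, 0.02, size=(dim, bottleneck))
        # Zero-initialised up-projection: the adapter starts as an identity map,
        # so the pre-trained behaviour is preserved before any training.
        self.w_up = np.zeros((bottleneck, dim))

    def __call__(self, x: np.ndarray) -> np.ndarray:
        hidden = np.maximum(x @ self.w_down, 0.0)  # ReLU in the bottleneck
        return x + hidden @ self.w_up              # residual connection

    def num_parameters(self) -> int:
        return self.w_down.size + self.w_up.size


def adapted_layer(x, frozen_weight, adapters, genre):
    """Apply a frozen (pre-trained) layer, then the adapter of the given genre."""
    hidden = np.tanh(x @ frozen_weight)
    return adapters[genre](hidden)


rng = np.random.default_rng(0)
dim, bottleneck = 8, 2  # toy sizes, chosen only for illustration
frozen_weight = rng.normal(0.0, 0.1, size=(dim, dim))
adapters = {g: GenreAdapter(dim, bottleneck, rng) for g in ("pop", "metal", "hiphop")}

frames = rng.normal(size=(5, dim))  # 5 feature frames
output = adapted_layer(frames, frozen_weight, adapters, "metal")
print(output.shape, adapters["metal"].num_parameters(), frozen_weight.size)
```

In a setup like this, only the adapter matrices would be trained per genre while `frozen_weight` stays fixed, which is why the genre-specific parameter count stays small relative to the shared layer.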

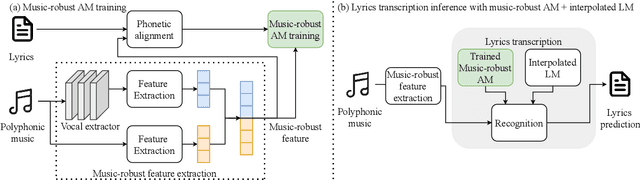

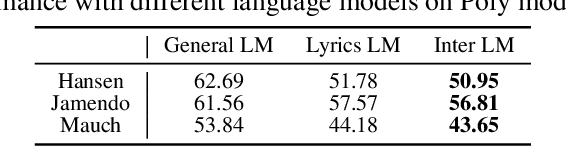

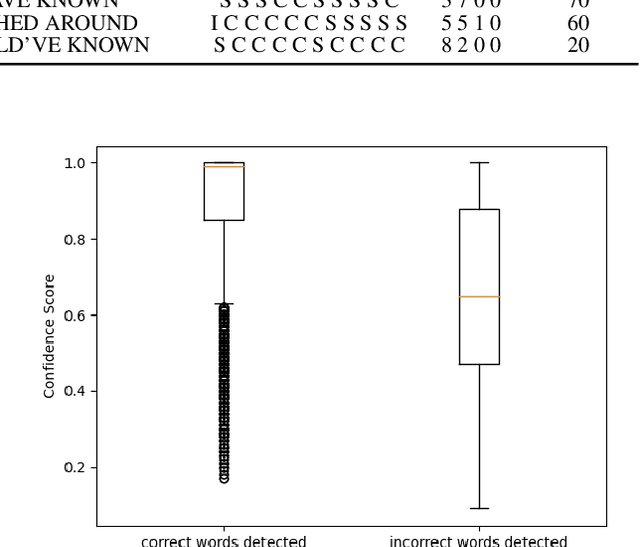

Music-robust Automatic Lyrics Transcription of Polyphonic Music

Apr 07, 2022 Xiaoxue Gao, Chitralekha Gupta, Haizhou Li

Lyrics transcription of polyphonic music is challenging because singing vocals are corrupted by the background music. To improve the robustness of lyrics transcription to the background music, we propose a strategy of combining two sets of features: music-removed features, which are derived from the extracted singing vocals and therefore emphasize them, and music-present features, which capture the singing vocals as well as the background music. We show that these two sets of features complement each other, and that their combination performs better than either set used alone, thus improving the robustness of the acoustic model to the background music. Furthermore, language model interpolation between a general-purpose language model and an in-domain lyrics-specific language model provides further improvement in transcription results. Our experiments show that our proposed strategy outperforms the existing lyrics transcription systems for polyphonic music. Moreover, we find that our proposed music-robust features especially improve the lyrics transcription performance for songs in the metal genre, where the background music is loud and dominant.
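Language model interpolation is often implemented as a linear mixture of the two models' probabilities. The sketch below assumes that form and uses toy unigram distributions; the abstract does not state which interpolation scheme or weight was used, so the function names, the vocabulary, and the weight `lam` are hypothetical.

```python
import math


def interpolate(p_general: dict, p_lyrics: dict, lam: float) -> dict:
    """Linear interpolation: lam * P_general(w) + (1 - lam) * P_lyrics(w)."""
    vocab = set(p_general) | set(p_lyrics)
    return {
        word: lam * p_general.get(word, 0.0) + (1.0 - lam) * p_lyrics.get(word, 0.0)
        for word in vocab
    }


def perplexity(model: dict, words: list) -> float:
    """Unigram perplexity; every word must have non-zero probability."""
    log_sum = sum(math.log(model[word]) for word in words)
    return math.exp(-log_sum / len(words))


# Toy unigram models (hypothetical probabilities, each summing to 1).
p_general = {"the": 0.5, "market": 0.3, "love": 0.2}
p_lyrics = {"the": 0.4, "love": 0.5, "baby": 0.1}

mixed = interpolate(p_general, p_lyrics, lam=0.5)
print(sorted(mixed.items()))
print(perplexity(mixed, ["love", "baby", "the"]))
```

A useful side effect of the mixture is that words missing from one model (here "baby" in the general model) still receive non-zero probability, so in-domain lyrics vocabulary is not ruled out. In practice the weight would typically be tuned on held-out lyrics.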

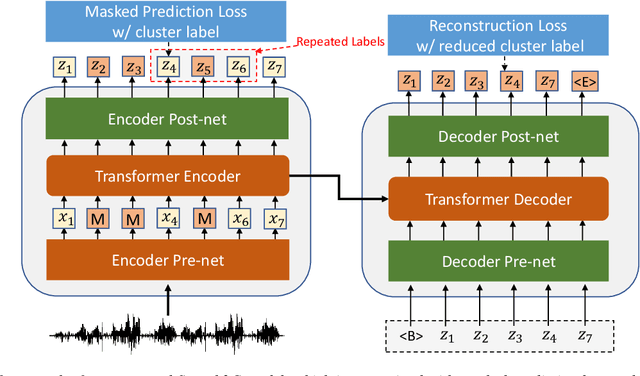

Pre-Training Transformer Decoder for End-to-End ASR Model with Unpaired Speech Data

Mar 31, 2022 Junyi Ao, Ziqiang Zhang, Long Zhou, Shujie Liu, Haizhou Li, Tom Ko, Lirong Dai, Jinyu Li, Yao Qian, Furu Wei

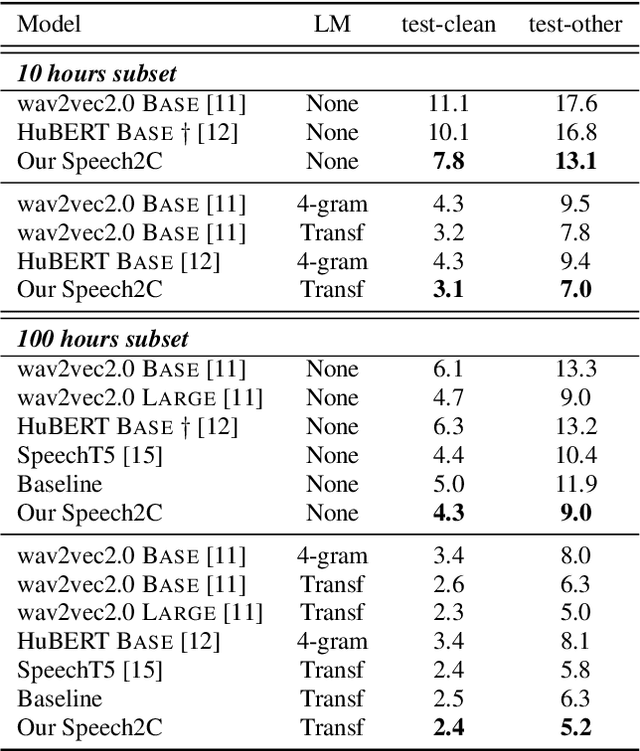

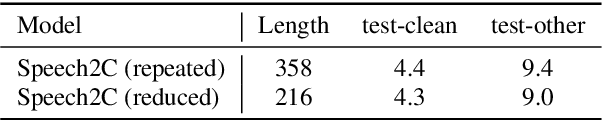

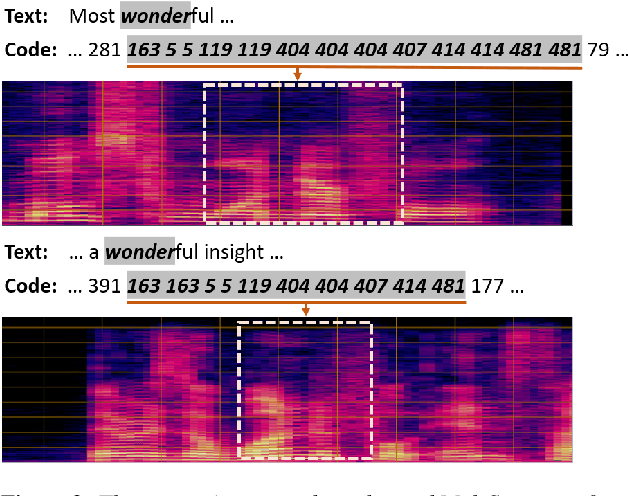

This paper studies a novel pre-training technique with unpaired speech data, Speech2C, for encoder-decoder based automatic speech recognition (ASR). Within a multi-task learning framework, we introduce two pre-training tasks for the encoder-decoder network using acoustic units, i.e., pseudo codes, derived from an offline clustering model. One is to predict the pseudo codes via masked language modeling on the encoder output, as in the HuBERT model, while the other lets the decoder learn to reconstruct pseudo codes autoregressively instead of generating textual scripts. In this way, the decoder learns to reconstruct original speech information with codes before learning to generate correct text. Comprehensive experiments on the LibriSpeech corpus show that the proposed Speech2C achieves a relative word error rate (WER) reduction of 19.2% over the method without decoder pre-training, and also significantly outperforms the state-of-the-art wav2vec 2.0 and HuBERT on fine-tuning subsets of 10h and 100h.

A Hybrid Continuity Loss to Reduce Over-Suppression for Time-domain Target Speaker Extraction

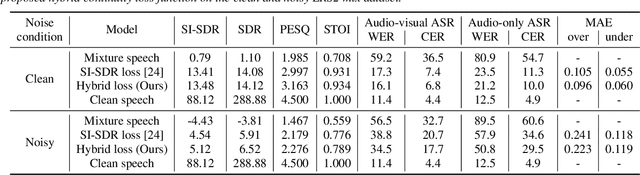

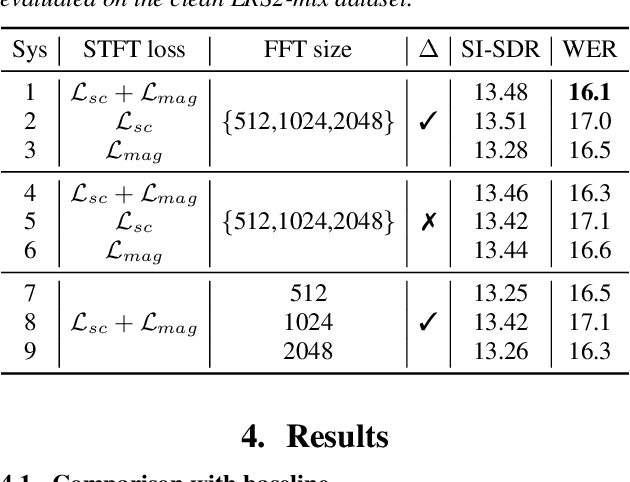

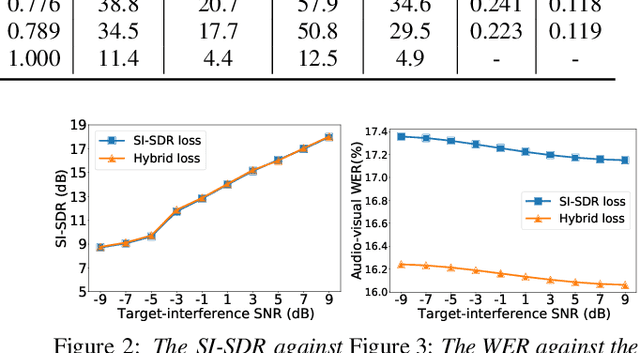

Mar 31, 2022 Zexu Pan, Meng Ge, Haizhou Li

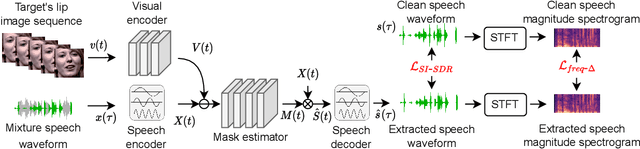

A speaker extraction algorithm extracts the target speech from a mixture speech containing interference speech and background noise. The extraction process sometimes over-suppresses the extracted target speech, which not only creates artifacts during listening but also harms the performance of downstream automatic speech recognition algorithms. We propose a hybrid continuity loss function for time-domain speaker extraction algorithms to address the over-suppression problem. On top of the waveform-level loss used for superior signal quality, i.e., SI-SDR, we introduce a multi-resolution delta spectrum loss in the frequency domain to ensure the continuity of the extracted speech signal, thus alleviating the over-suppression. We examine the hybrid continuity loss function using a time-domain audio-visual speaker extraction algorithm on the YouTube LRS2-BBC dataset. Experimental results show that the proposed loss function reduces the over-suppression and improves the word error rate of speech recognition on both clean and noisy two-speaker mixtures, without harming the reconstructed speech quality.
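The following NumPy sketch shows how a hybrid loss of this kind could be assembled. The SI-SDR term follows its standard definition. The delta spectrum term is one plausible reading of the abstract (an L1 distance between frame-to-frame differences of magnitude spectrograms, averaged over several STFT resolutions); the exact formulation, the resolutions, and the weight are assumptions, not the paper's specification.

```python
import numpy as np


def si_sdr(estimate: np.ndarray, target: np.ndarray, eps: float = 1e-8) -> float:
    """Scale-invariant signal-to-distortion ratio in dB (standard definition)."""
    estimate = estimate - estimate.mean()
    target = target - target.mean()
    scale = np.dot(estimate, target) / (np.dot(target, target) + eps)
    projection = scale * target          # part of the estimate aligned with target
    noise = estimate - projection        # everything else counts as distortion
    return 10.0 * np.log10(
        (np.dot(projection, projection) + eps) / (np.dot(noise, noise) + eps)
    )


def magnitude_spectrogram(x: np.ndarray, n_fft: int, hop: int) -> np.ndarray:
    """Magnitude STFT with a Hann window; returns shape (frames, n_fft // 2 + 1)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))


def delta_spectrum_loss(estimate, target, resolutions=((128, 32), (256, 64), (512, 128))):
    """Assumed form: mean L1 distance between time-deltas of magnitude spectra."""
    losses = []
    for n_fft, hop in resolutions:
        delta_est = np.diff(magnitude_spectrogram(estimate, n_fft, hop), axis=0)
        delta_tgt = np.diff(magnitude_spectrogram(target, n_fft, hop), axis=0)
        losses.append(np.mean(np.abs(delta_est - delta_tgt)))
    return float(np.mean(losses))


def hybrid_continuity_loss(estimate, target, weight: float = 1.0) -> float:
    """Negative SI-SDR plus a weighted delta spectrum term (weight is hypothetical)."""
    return -si_sdr(estimate, target) + weight * delta_spectrum_loss(estimate, target)


# Synthetic example: a sine "target" and two estimates with different noise levels.
rng = np.random.default_rng(0)
t = np.arange(1600) / 16000.0
target = np.sin(2 * np.pi * 440.0 * t)
noise = rng.normal(size=t.shape)
cleaner = target + 0.05 * noise
noisier = target + 0.5 * noise
print(hybrid_continuity_loss(cleaner, target), hybrid_continuity_loss(noisier, target))
```

The waveform term rewards overall signal fidelity, while the delta term penalizes estimates whose spectral evolution over time departs from the target's, which is where abrupt over-suppressed gaps would show up.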

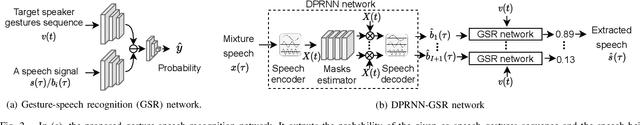

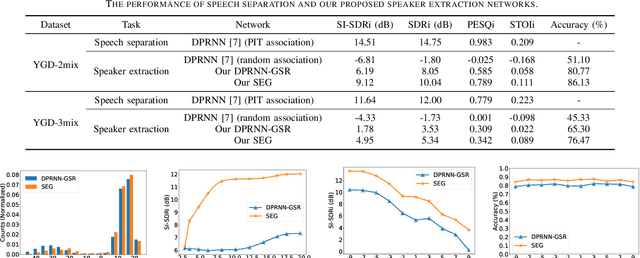

Speaker Extraction with Co-Speech Gestures Cue

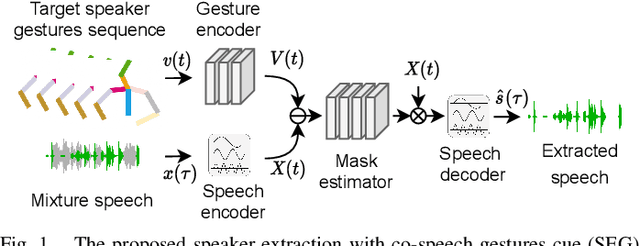

Mar 31, 2022 Zexu Pan, Xinyuan Qian, Haizhou Li

Speaker extraction seeks to extract the clean speech of a target speaker from a multi-talker mixture speech. Previous studies have used a pre-recorded speech sample or face image of the target speaker as the speaker cue. In human communication, co-speech gestures that are naturally timed with speech also contribute to speech perception. In this work, we explore the use of a co-speech gesture sequence, e.g. hand and body movements, as the speaker cue for speaker extraction. Such a sequence can be easily obtained from low-resolution video recordings and is thus more readily available than face recordings. We propose two networks that use the co-speech gestures cue to perform attentive listening on the target speaker: one implicitly fuses the co-speech gestures cue in the speaker extraction process, while the other performs speech separation first and then explicitly uses the co-speech gestures cue to associate a separated speech with the target speaker. The experimental results show that the co-speech gestures cue is informative in associating the target speaker, and the quality of the extracted speech shows significant improvements over the unprocessed mixture speech.
