Björn Schuller

Technische Universität München, Germany

SmoothCLAP: Soft-Target Enhanced Contrastive Language-Audio Pretraining for Affective Computing

Jan 18, 2026

Abstract:The ambiguity of human emotions poses several challenges for machine learning models, as emotions often overlap and lack clear delineating boundaries. Contrastive language-audio pretraining (CLAP) has emerged as a key technique for generalisable emotion recognition. However, as conventional CLAP enforces a strict one-to-one alignment between paired audio-text samples, it overlooks intra-modal similarity and treats all non-matching pairs as equally negative. This conflicts with the fuzzy boundaries between different emotions. To address this limitation, we propose SmoothCLAP, which introduces softened targets derived from intra-modal similarity and paralinguistic features. By combining these softened targets with conventional contrastive supervision, SmoothCLAP learns embeddings that respect graded emotional relationships, while retaining the same inference pipeline as CLAP. Experiments on eight affective computing tasks across English and German demonstrate that SmoothCLAP consistently achieves superior performance. Our results highlight that leveraging soft supervision is a promising strategy for building emotion-aware audio-text models.
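The soft-target idea described in the SmoothCLAP abstract can be illustrated with a small sketch. This is not the authors' implementation: the mixing weight `alpha`, the temperatures `tau` and `tau_soft`, and the choice to average audio-audio and text-text cosine similarity are all assumptions made for illustration, and the paralinguistic features mentioned in the abstract are omitted.

```python
import math

def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def normalise(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def similarity(xs, ys):
    # Pairwise dot products; cosine similarity when inputs are unit vectors.
    return [[sum(a * b for a, b in zip(x, y)) for y in ys] for x in xs]

def mixed_targets(audio, text, alpha=0.3, tau_soft=0.1):
    """Blend one-hot targets with soft targets from intra-modal similarity.

    ASSUMPTION: soft targets are a row-wise softmax over the average of
    audio-audio and text-text similarity; the abstract does not give the formula.
    """
    audio = [normalise(a) for a in audio]
    text = [normalise(t) for t in text]
    n = len(audio)
    aa, tt = similarity(audio, audio), similarity(text, text)
    soft = [softmax([(aa[i][j] + tt[i][j]) / (2 * tau_soft) for j in range(n)])
            for i in range(n)]
    return [[(1 - alpha) * (1.0 if i == j else 0.0) + alpha * soft[i][j]
             for j in range(n)] for i in range(n)]

def cross_entropy(targets, logits):
    # Mean over rows of -sum_j t_ij * log softmax(logits_i)_j.
    total = 0.0
    for t_row, l_row in zip(targets, logits):
        probs = softmax(l_row)
        total -= sum(t * math.log(p) for t, p in zip(t_row, probs))
    return total / len(logits)

def soft_target_contrastive_loss(audio, text, alpha=0.3, tau=0.07, tau_soft=0.1):
    """Symmetric contrastive loss against the blended targets (hypothetical form)."""
    targets = mixed_targets(audio, text, alpha, tau_soft)
    a = [normalise(x) for x in audio]
    t = [normalise(x) for x in text]
    logits = [[s / tau for s in row] for row in similarity(a, t)]
    logits_t = [list(col) for col in zip(*logits)]
    # The averaged intra-modal similarity is symmetric, so the same row-wise
    # targets serve both the audio-to-text and text-to-audio directions.
    return 0.5 * (cross_entropy(targets, logits) + cross_entropy(targets, logits_t))

# Toy embeddings, purely illustrative.
audio = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
text = [[1.0, 0.1], [0.1, 1.0], [1.0, 0.9]]
loss = soft_target_contrastive_loss(audio, text)
```

With `alpha=0.0` the targets collapse to the identity matrix, which corresponds to the strict one-to-one alignment of conventional CLAP. Because only the training targets change, inference is unaffected.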

Affect and Effect: Limitations of regularisation-based continual learning in EEG-based emotion classification

Jan 09, 2026

Abstract:Generalisation to unseen subjects in EEG-based emotion classification remains a challenge due to high inter- and intra-subject variability. Continual learning (CL) poses a promising solution by learning from a sequence of tasks while mitigating catastrophic forgetting. Regularisation-based CL approaches, such as Elastic Weight Consolidation (EWC), Synaptic Intelligence (SI), and Memory Aware Synapses (MAS), are commonly used as baselines in EEG-based CL studies, yet their suitability for this problem remains underexplored. This study theoretically and empirically finds that regularisation-based CL methods show limited performance for EEG-based emotion classification on the DREAMER and SEED datasets. We identify a fundamental misalignment in the stability-plasticity trade-off, where regularisation-based methods prioritise mitigating catastrophic forgetting (backward transfer) over adapting to new subjects (forward transfer). We investigate this limitation under subject-incremental sequences and observe that: (1) the heuristics for estimating parameter importance become less reliable under noisy data and covariate shift, (2) gradients on parameters deemed important by these heuristics often interfere with gradient updates required for new subjects, moving optimisation away from the minimum, (3) importance values accumulated across tasks over-constrain the model, and (4) performance is sensitive to subject order. Forward transfer showed no statistically significant improvement over sequential fine-tuning (p > 0.05 across approaches and datasets). The high variability of EEG signals means past subjects provide limited value to future subjects. Regularisation-based continual learning approaches are therefore limited for robust generalisation to unseen subjects in EEG-based emotion classification.
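For readers unfamiliar with the regularisation-based methods named in the abstract above, the following is a minimal sketch of the standard EWC quadratic penalty, which anchors each parameter to its previous value in proportion to an importance estimate. The toy numbers and the summation rule in `accumulate_importance` are assumptions for illustration and are not taken from the study.

```python
def ewc_penalty(theta, theta_star, importance, lam=1.0):
    """EWC-style penalty: (lam / 2) * sum_i F_i * (theta_i - theta_star_i)^2."""
    return 0.5 * lam * sum(
        f * (t - s) ** 2 for t, s, f in zip(theta, theta_star, importance)
    )

def ewc_penalty_grad(theta, theta_star, importance, lam=1.0):
    """Gradient of the penalty: it pulls each parameter back towards theta_star."""
    return [lam * f * (t - s) for t, s, f in zip(theta, theta_star, importance)]

def accumulate_importance(per_subject_importance):
    """ASSUMPTION: importance vectors are summed over the subjects seen so far."""
    return [sum(values) for values in zip(*per_subject_importance)]

# Hypothetical two-parameter model after training on two subjects.
theta_star = [1.0, 1.0]            # parameters after the previous subject
theta = [1.0, 2.0]                 # candidate parameters for the new subject
importance_s1 = [0.5, 2.0]         # made-up importance estimates
importance_s2 = [1.0, 1.0]

single = ewc_penalty(theta, theta_star, importance_s1)
total_importance = accumulate_importance([importance_s1, importance_s2])
accumulated = ewc_penalty(theta, theta_star, total_importance)
```

In this toy case the penalty for the same parameter change grows as importance accumulates, which is one way to picture observation (3) in the abstract: the more subjects are seen, the harder it becomes to move parameters towards a new subject's optimum.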

Discovering and Causally Validating Emotion-Sensitive Neurons in Large Audio-Language Models

Jan 06, 2026

Abstract:Emotion is a central dimension of spoken communication, yet we still lack a mechanistic account of how modern large audio-language models (LALMs) encode it internally. We present the first neuron-level interpretability study of emotion-sensitive neurons (ESNs) in LALMs and provide causal evidence that such units exist in Qwen2.5-Omni, Kimi-Audio, and Audio Flamingo 3. Across these three widely used open-source models, we compare frequency-, entropy-, magnitude-, and contrast-based neuron selectors on multiple emotion recognition benchmarks. Using inference-time interventions, we reveal a consistent emotion-specific signature: ablating neurons selected for a given emotion disproportionately degrades recognition of that emotion while largely preserving other classes, whereas gain-based amplification steers predictions toward the target emotion. These effects arise with modest identification data and scale systematically with intervention strength. We further observe that ESNs exhibit non-uniform layer-wise clustering with partial cross-dataset transfer. Taken together, our results offer a causal, neuron-level account of emotion decisions in LALMs and highlight targeted neuron interventions as an actionable handle for controllable affective behaviors.

AI-enhanced conversational agents for personalized asthma support: Factors for engagement, value and efficacy

Jul 22, 2025

Abstract:Asthma-related deaths in the UK are the highest in Europe, and only 30% of patients access basic care. There is a need for alternative approaches to reaching people with asthma in order to provide health education, self-management support and bridges to care. Automated conversational agents (specifically, mobile chatbots) present opportunities for providing alternative and individually tailored access to health education, self-management support and risk self-assessment. But would patients engage with a chatbot, and what factors influence engagement? We present results from a patient survey (N=1257) devised by a team of asthma clinicians, patients, and technology developers, conducted to identify optimal factors for efficacy, value and engagement for a chatbot. Results indicate that most adults with asthma (53%) are interested in using a chatbot and the patients most likely to do so are those who believe their asthma is more serious and who are less confident about self-management. Results also indicate enthusiasm for 24/7 access, personalisation, and for WhatsApp as the preferred access method (compared to app, voice assistant, SMS or website). Obstacles to uptake include security/privacy concerns and skepticism of technological capabilities. We present detailed findings and consolidate these into 7 recommendations for developers for optimising efficacy of chatbot-based health support.

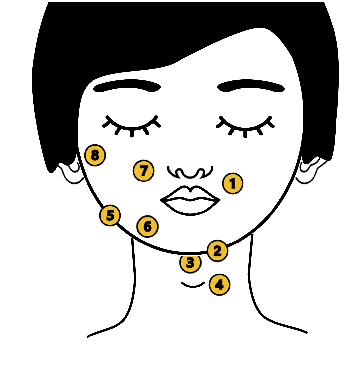

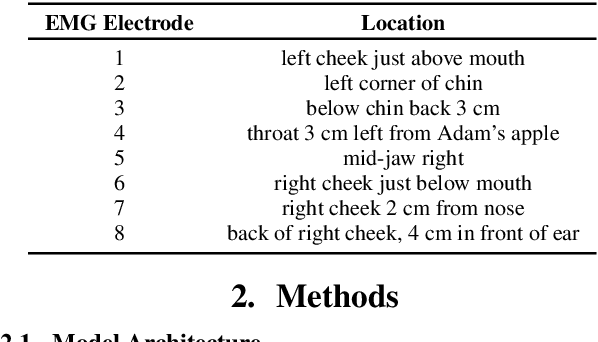

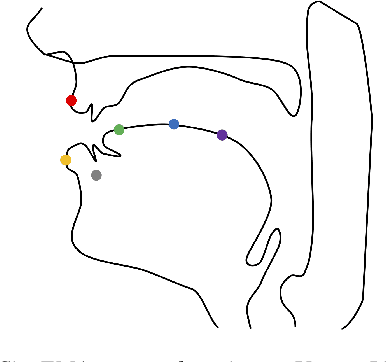

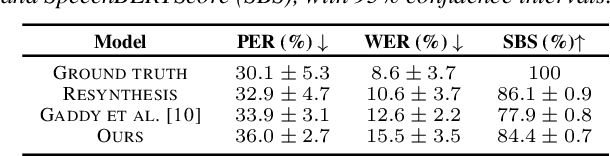

Articulatory Feature Prediction from Surface EMG during Speech Production

May 20, 2025

Abstract:We present a model for predicting articulatory features from surface electromyography (EMG) signals during speech production. The proposed model integrates convolutional layers and a Transformer block, followed by separate predictors for articulatory features. Our approach achieves a high prediction correlation of approximately 0.9 for most articulatory features. Furthermore, we demonstrate that these predicted articulatory features can be decoded into intelligible speech waveforms. To our knowledge, this is the first method to decode speech waveforms from surface EMG via articulatory features, offering a novel approach to EMG-based speech synthesis. Additionally, we analyze the relationship between EMG electrode placement and articulatory feature predictability, providing knowledge-driven insights for optimizing EMG electrode configurations. The source code and decoded speech samples are publicly available.

The First MPDD Challenge: Multimodal Personality-aware Depression Detection

May 15, 2025

Abstract:Depression is a widespread mental health issue affecting diverse age groups, with notable prevalence among college students and the elderly. However, existing datasets and detection methods primarily focus on young adults, neglecting the broader age spectrum and individual differences that influence depression manifestation. Current approaches often establish a direct mapping between multimodal data and depression indicators, failing to capture the complexity and diversity of depression across individuals. This challenge includes two tracks based on age-specific subsets: Track 1 uses the MPDD-Elderly dataset for detecting depression in older adults, and Track 2 uses the MPDD-Young dataset for detecting depression in younger participants. The Multimodal Personality-aware Depression Detection (MPDD) Challenge aims to address this gap by incorporating multimodal data alongside individual difference factors. We provide a baseline model that fuses audio and video modalities with individual difference information to detect depression manifestations in diverse populations. This challenge aims to promote the development of more personalized and accurate depression detection methods, advancing mental health research and fostering inclusive detection systems. More details are available on the official challenge website: https://hacilab.github.io/MPDDChallenge.github.io.

ECOSoundSet: a finely annotated dataset for the automated acoustic identification of Orthoptera and Cicadidae in North, Central and temperate Western Europe

Apr 29, 2025

Abstract:Currently available tools for the automated acoustic recognition of European insects in natural soundscapes are limited in scope. Large and ecologically heterogeneous acoustic datasets are currently needed for these algorithms to cross-contextually recognize the subtle and complex acoustic signatures produced by each species, thus making the availability of such datasets a key requisite for their development. Here we present ECOSoundSet (European Cicadidae and Orthoptera Sound dataSet), a dataset containing 10,653 recordings of 200 orthopteran and 24 cicada species (217 and 26 respective taxa when including subspecies) present in North, Central, and temperate Western Europe (Andorra, Belgium, Denmark, mainland France and Corsica, Germany, Ireland, Luxembourg, Monaco, Netherlands, United Kingdom, Switzerland), collected partly through targeted fieldwork in South France and Catalonia and partly through contributions from various European entomologists. The dataset is composed of a combination of coarsely labeled recordings, for which we can only infer the presence, at some point, of their target species (weak labeling), and finely annotated recordings, for which we know the specific time and frequency range of each insect sound present in the recording (strong labeling). We also provide a train/validation/test split of the strongly labeled recordings, with respective approximate proportions of 0.8, 0.1 and 0.1, in order to facilitate their incorporation in the training and evaluation of deep learning algorithms. This dataset could serve as a meaningful complement to recordings already available online for the training of deep learning algorithms for the acoustic classification of orthopterans and cicadas in North, Central, and temperate Western Europe.

PerCoV2: Improved Ultra-Low Bit-Rate Perceptual Image Compression with Implicit Hierarchical Masked Image Modeling

Mar 12, 2025

Abstract:We introduce PerCoV2, a novel and open ultra-low bit-rate perceptual image compression system designed for bandwidth- and storage-constrained applications. Building upon prior work by Careil et al., PerCoV2 extends the original formulation to the Stable Diffusion 3 ecosystem and enhances entropy coding efficiency by explicitly modeling the discrete hyper-latent image distribution. To this end, we conduct a comprehensive comparison of recent autoregressive methods (VAR and MaskGIT) for entropy modeling and evaluate our approach on the large-scale MSCOCO-30k benchmark. Compared to previous work, PerCoV2 (i) achieves higher image fidelity at even lower bit-rates while maintaining competitive perceptual quality, (ii) features a hybrid generation mode for further bit-rate savings, and (iii) is built solely on public components. Code and trained models will be released at https://github.com/Nikolai10/PerCoV2.

PerCo (SD): Open Perceptual Compression

Sep 30, 2024

Abstract:We introduce PerCo (SD), a perceptual image compression method based on Stable Diffusion v2.1, targeting the ultra-low bit range. PerCo (SD) serves as an open and competitive alternative to the state-of-the-art method PerCo, which relies on a proprietary variant of GLIDE and remains closed to the public. In this work, we review the theoretical foundations, discuss key engineering decisions in adapting PerCo to the Stable Diffusion ecosystem, and provide a comprehensive comparison, both quantitatively and qualitatively. On the MSCOCO-30k dataset, PerCo (SD) demonstrates improved perceptual characteristics at the cost of higher distortion. We partly attribute this gap to the different model capacities being used (866M vs. 1.4B). We hope our work contributes to a deeper understanding of the underlying mechanisms and paves the way for future advancements in the field. Code and trained models will be released at https://github.com/Nikolai10/PerCo.

Affective Computing Has Changed: The Foundation Model Disruption

Sep 13, 2024

Abstract:The dawn of Foundation Models has on the one hand revolutionised a wide range of research problems, and, on the other hand, democratised the access and use of AI-based tools by the general public. We even observe an incursion of these models into disciplines related to human psychology, such as the Affective Computing domain, suggesting their affective, emerging capabilities. In this work, we aim to raise awareness of the power of Foundation Models in the field of Affective Computing by synthetically generating and analysing multimodal affective data, focusing on vision, linguistics, and speech (acoustics). We also discuss some fundamental problems, such as ethical issues and regulatory aspects, related to the use of Foundation Models in this research area.
