Haichao Zhu

MiniMax-M1: Scaling Test-Time Compute Efficiently with Lightning Attention

Jun 16, 2025

Abstract: We introduce MiniMax-M1, the world's first open-weight, large-scale hybrid-attention reasoning model. MiniMax-M1 is powered by a hybrid Mixture-of-Experts (MoE) architecture combined with a lightning attention mechanism. The model is developed based on our previous MiniMax-Text-01 model, which contains a total of 456 billion parameters with 45.9 billion parameters activated per token. The M1 model natively supports a context length of 1 million tokens, 8x the context size of DeepSeek R1. Furthermore, the lightning attention mechanism in MiniMax-M1 enables efficient scaling of test-time compute. These properties make M1 particularly suitable for complex tasks that require processing long inputs and thinking extensively. MiniMax-M1 is trained using large-scale reinforcement learning (RL) on diverse problems including sandbox-based, real-world software engineering environments. In addition to M1's inherent efficiency advantage for RL training, we propose CISPO, a novel RL algorithm to further enhance RL efficiency. CISPO clips importance sampling weights rather than token updates, outperforming other competitive RL variants. Combining hybrid-attention and CISPO enables MiniMax-M1's full RL training on 512 H800 GPUs to complete in only three weeks, with a rental cost of just $534,700. We release two versions of MiniMax-M1 models with 40K and 80K thinking budgets respectively, where the 40K model represents an intermediate phase of the 80K training. Experiments on standard benchmarks show that our models are comparable or superior to strong open-weight models such as the original DeepSeek-R1 and Qwen3-235B, with particular strengths in complex software engineering, tool utilization, and long-context tasks. We publicly release MiniMax-M1 at https://github.com/MiniMax-AI/MiniMax-M1.
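The contrast the abstract draws between clipping importance sampling weights and clipping token updates can be illustrated with a small sketch. It is not the authors' implementation: the function names, the symmetric bound `eps=0.2`, and the per-token framing are assumptions made for illustration. Each function returns the scalar that would multiply the gradient of a token's log-probability.

```python
import math

def ppo_token_coeff(logp_new, logp_old, advantage, eps=0.2):
    """Coefficient on grad(log pi) for one token under PPO-style clipping.

    Hypothetical helper: eps is an assumed clipping range.
    """
    ratio = math.exp(logp_new - logp_old)
    # When the clipped branch of min(r*A, clip(r)*A) is active, the objective
    # is constant in the policy, so the token's update is dropped entirely.
    clipped = (advantage > 0 and ratio > 1 + eps) or (advantage < 0 and ratio < 1 - eps)
    return 0.0 if clipped else ratio * advantage

def cispo_token_coeff(logp_new, logp_old, advantage, eps_low=0.2, eps_high=0.2):
    """Coefficient on grad(log pi) when only the importance weight is clipped.

    Hypothetical helper: the bounds are assumptions, not values from the paper.
    """
    ratio = math.exp(logp_new - logp_old)
    # The weight is bounded, but the token still contributes to the update.
    weight = min(max(ratio, 1 - eps_low), 1 + eps_high)
    return weight * advantage
```

Under these assumptions, a token with ratio 2.0 and positive advantage contributes nothing under the PPO-style rule, whereas clipping only the weight keeps it in the update with a bounded coefficient.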

Ultron: Enabling Temporal Geometry Compression of 3D Mesh Sequences using Temporal Correspondence and Mesh Deformation

Sep 08, 2024

Abstract: With the advancement of computer vision, dynamic 3D reconstruction techniques have seen significant progress and found applications in various fields. However, these techniques generate large amounts of 3D data sequences, necessitating efficient storage and transmission methods. Existing 3D model compression methods primarily focus on static models and do not consider inter-frame information, limiting their ability to reduce data size. Temporal mesh compression, which has received less attention, often requires all input meshes to have the same topology, a condition rarely met in real-world applications. This research proposes a method to compress mesh sequences with arbitrary topology using temporal correspondence and mesh deformation. The method establishes temporal correspondence between consecutive frames, applies a deformation model to transform the mesh from one frame to subsequent frames, and replaces the original meshes with deformed ones if the quality meets a tolerance threshold. Extensive experiments demonstrate that this method achieves state-of-the-art compression performance. The contributions of this paper include a geometry and motion-based model for establishing temporal correspondence between meshes, a mesh quality assessment for temporal mesh sequences, an entropy-based encoding and corner table-based method for compressing mesh sequences, and extensive experiments showing the effectiveness of the proposed method. All the code will be open-sourced at https://github.com/lszhuhaichao/ultron.

Learning to Tokenize for Generative Retrieval

Apr 09, 2023

Abstract: Conventional document retrieval techniques are mainly based on the index-retrieve paradigm. It is challenging to optimize pipelines based on this paradigm in an end-to-end manner. As an alternative, generative retrieval represents documents as identifiers (docids) and retrieves documents by generating docids, enabling end-to-end modeling of document retrieval tasks. However, it is an open question how one should define the document identifiers. Current approaches to the task of defining document identifiers rely on fixed rule-based docids, such as the title of a document or the result of clustering BERT embeddings, which often fail to capture the complete semantic information of a document. We propose GenRet, a document tokenization learning method to address the challenge of defining document identifiers for generative retrieval. GenRet learns to tokenize documents into short discrete representations (i.e., docids) via a discrete auto-encoding approach. Three components are included in GenRet: (i) a tokenization model that produces docids for documents; (ii) a reconstruction model that learns to reconstruct a document based on a docid; and (iii) a sequence-to-sequence retrieval model that generates relevant document identifiers directly for a designated query. By using an auto-encoding framework, GenRet learns semantic docids in a fully end-to-end manner. We also develop a progressive training scheme to capture the autoregressive nature of docids and to stabilize training. We conduct experiments on the NQ320K, MS MARCO, and BEIR datasets to assess the effectiveness of GenRet. GenRet establishes a new state of the art on the NQ320K dataset. In particular, compared to generative retrieval baselines, GenRet achieves significant improvements on unseen documents. GenRet also outperforms comparable baselines on MS MARCO and BEIR, demonstrating the method's generalizability.

VEM$^2$L: A Plug-and-play Framework for Fusing Text and Structure Knowledge on Sparse Knowledge Graph Completion

Jul 12, 2022

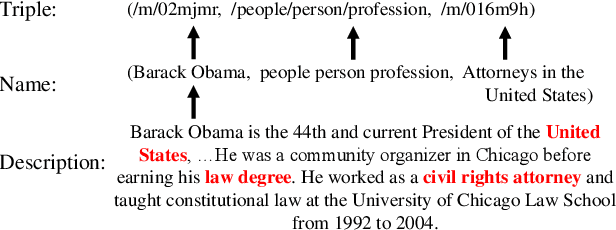

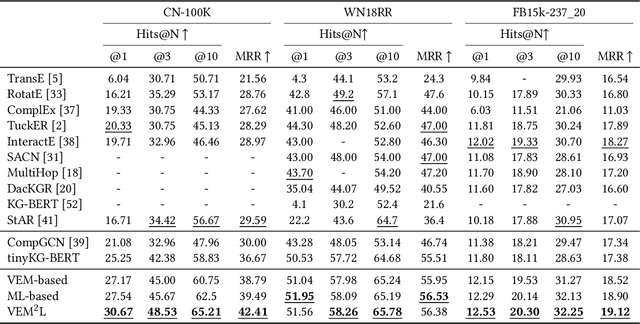

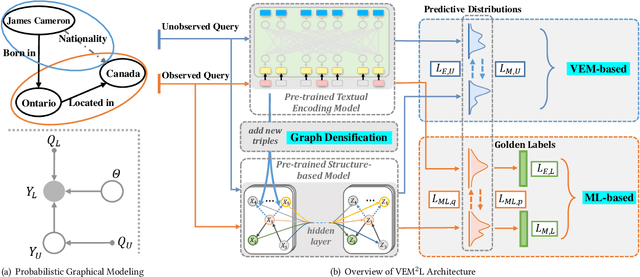

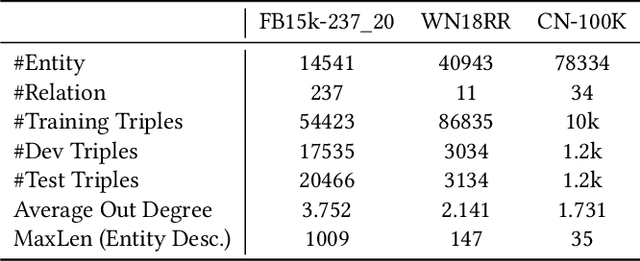

Abstract: Knowledge Graph Completion has been widely studied as a way to fill in missing elements of triples, mainly by modeling graph structural features, but it is sensitive to the sparsity of the graph structure. Relevant texts such as entity names and descriptions, which act as another form of expression for Knowledge Graphs (KGs), are expected to address this challenge. Several methods have been proposed to use both structure and text messages with two encoders, but they achieve only limited improvements because they fail to balance the weights between the two. Keeping both structural and textual encoders during inference also incurs a heavy parameter overhead. Motivated by Knowledge Distillation, we view knowledge as mappings from inputs to output probabilities and propose VEM2L, a plug-and-play framework over sparse KGs that fuses knowledge extracted from text and structure messages into a unified whole. Specifically, we partition the knowledge acquired by models into two non-overlapping parts: one part relates to the fitting capacity on training triples, which can be fused by encouraging the two encoders to learn from each other on the training set; the other reflects the generalization ability on unobserved queries. Correspondingly, we propose a new fusion strategy, justified by the Variational EM algorithm, to fuse the generalization ability of the models, during which we also apply graph densification operations to further alleviate the sparse graph problem. Combining these two fusion methods yields the VEM2L framework. Detailed theoretical evidence, together with quantitative and qualitative experiments, demonstrates the effectiveness and efficiency of the proposed framework.

Distilled Dual-Encoder Model for Vision-Language Understanding

Dec 16, 2021

Abstract: We propose a cross-modal attention distillation framework to train a dual-encoder model for vision-language understanding tasks, such as visual reasoning and visual question answering. Dual-encoder models have a faster inference speed than fusion-encoder models and enable the pre-computation of images and text during inference. However, the shallow interaction module used in dual-encoder models is insufficient to handle complex vision-language understanding tasks. In order to learn deep interactions of images and text, we introduce cross-modal attention distillation, which uses the image-to-text and text-to-image attention distributions of a fusion-encoder model to guide the training of our dual-encoder model. In addition, we show that applying the cross-modal attention distillation for both pre-training and fine-tuning stages achieves further improvements. Experimental results demonstrate that the distilled dual-encoder model achieves competitive performance for visual reasoning, visual entailment and visual question answering tasks while enjoying a much faster inference speed than fusion-encoder models. Our code and models will be publicly available at https://github.com/kugwzk/Distilled-DualEncoder.
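One way to picture a teacher's attention distributions guiding a student is a divergence between the two, computed row by row. The sketch below assumes a KL-divergence loss over softmax-normalized attention scores. The abstract does not state the loss used, so the choice of KL and the function names are illustrative assumptions only.

```python
import math

def softmax(scores):
    """Numerically stable softmax over one row of attention scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_distill_loss(teacher_scores, student_scores):
    """Mean KL(teacher || student) over query rows (assumed loss form).

    Each argument is a list of rows; a row holds one query's raw attention
    scores over the tokens of the other modality.
    """
    loss = 0.0
    for t_row, s_row in zip(teacher_scores, student_scores):
        p = softmax(t_row)  # teacher (fusion-encoder) distribution
        q = softmax(s_row)  # student (dual-encoder) distribution
        loss += sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return loss / len(teacher_scores)
```

The loss is zero when the student reproduces the teacher's attention exactly and grows as the two distributions diverge. In this framing the same function would be applied once to image-to-text scores and once to text-to-image scores.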

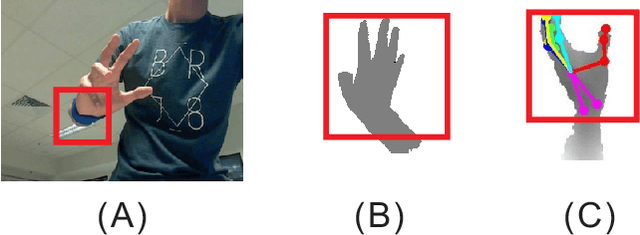

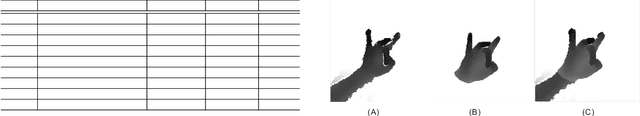

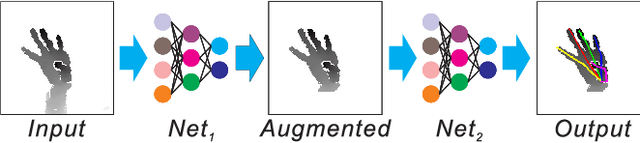

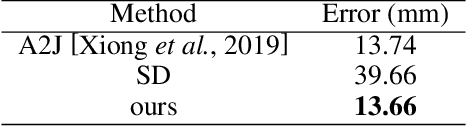

HandAugment: A Simple Data Augmentation Method for Depth-Based 3D Hand Pose Estimation

Jan 23, 2020

Abstract: Hand pose estimation from 3D depth images has been explored widely using various techniques in the field of computer vision. Although deep learning based methods have recently improved performance greatly, the problem remains unsolved due to the lack of large datasets like ImageNet or of effective data synthesis methods. In this paper, we propose HandAugment, a method to synthesize image data to augment the training process of neural networks. Our method has two main parts. First, we propose a scheme of two-stage neural networks, which makes the networks focus on the hand regions and thus improves performance. Second, we introduce a simple and effective method to synthesize data by combining real and synthetic images in the image space. Finally, we show that our method achieved first place in the task of depth-based 3D hand pose estimation in the HANDS 2019 challenge.

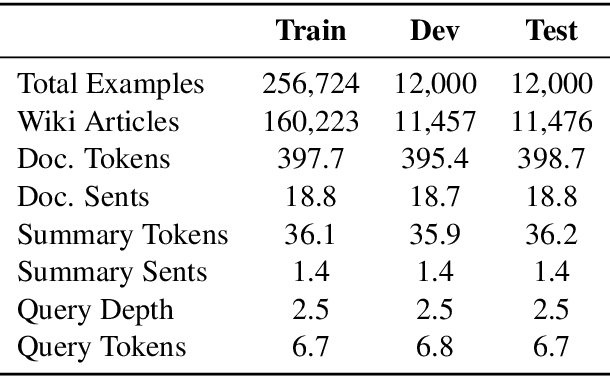

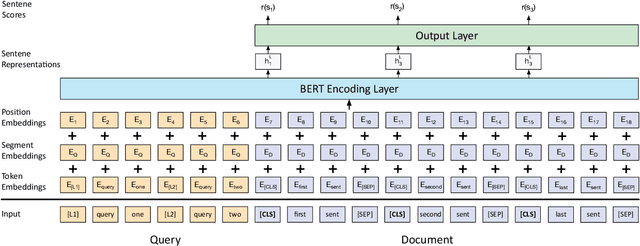

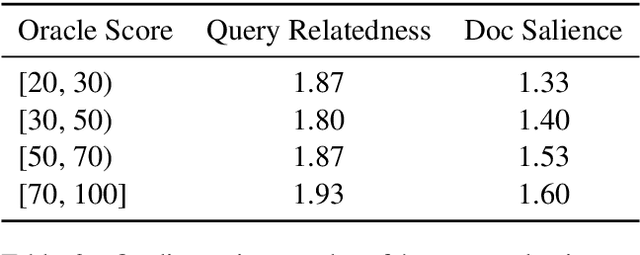

Transforming Wikipedia into Augmented Data for Query-Focused Summarization

Nov 08, 2019

Abstract: The manual construction of a query-focused summarization corpus is costly and time-consuming. The limited size of existing datasets renders training data-driven summarization models challenging. In this paper, we use Wikipedia to automatically collect a large query-focused summarization dataset (named WIKIREF) of more than 280,000 examples, which can serve as a means of data augmentation. Moreover, we develop a query-focused summarization model based on BERT to extract summaries from the documents. Experimental results on three DUC benchmarks show that the model pre-trained on WIKIREF has already achieved reasonable performance. After fine-tuning on the specific datasets, the model with data augmentation outperforms the state of the art on the benchmarks.

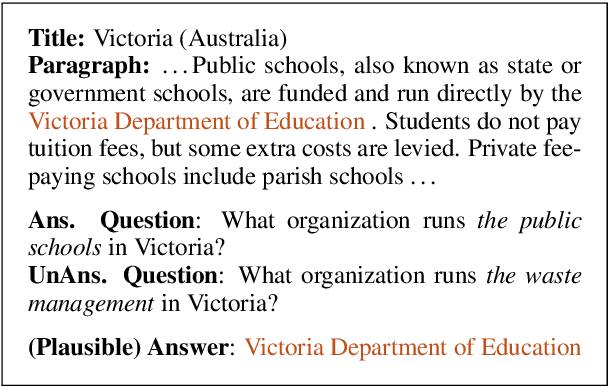

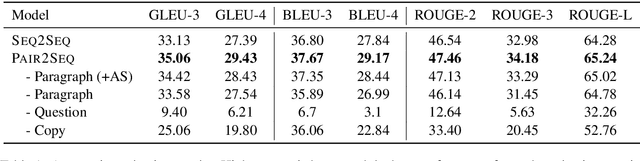

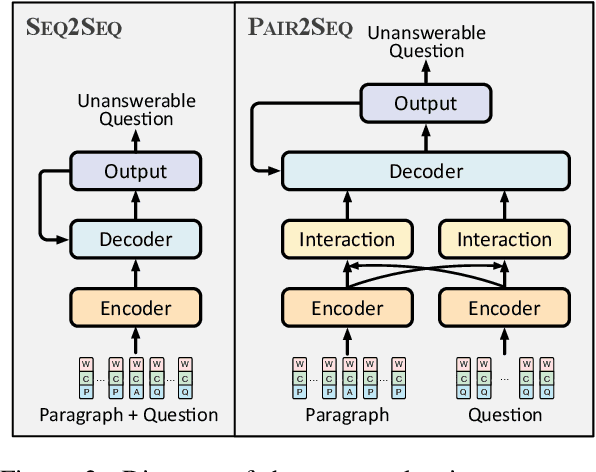

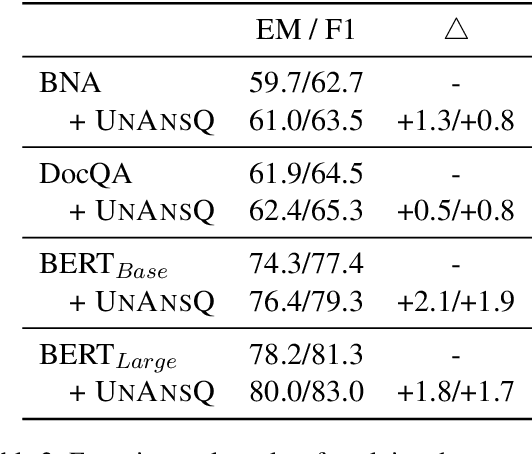

Learning to Ask Unanswerable Questions for Machine Reading Comprehension

Jun 14, 2019

Abstract: Machine reading comprehension with unanswerable questions is a challenging task. In this work, we propose a data augmentation technique by automatically generating relevant unanswerable questions according to an answerable question paired with its corresponding paragraph that contains the answer. We introduce a pair-to-sequence model for unanswerable question generation, which effectively captures the interactions between the question and the paragraph. We also present a way to construct training data for our question generation models by leveraging the existing reading comprehension dataset. Experimental results show that the pair-to-sequence model performs consistently better than the sequence-to-sequence baseline. We further use the automatically generated unanswerable questions as a means of data augmentation on the SQuAD 2.0 dataset, yielding a 1.9 absolute F1 improvement with the BERT-base model and a 1.7 absolute F1 improvement with the BERT-large model.

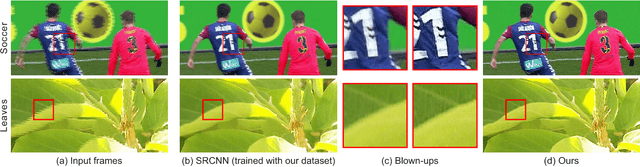

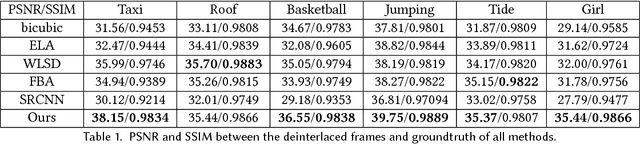

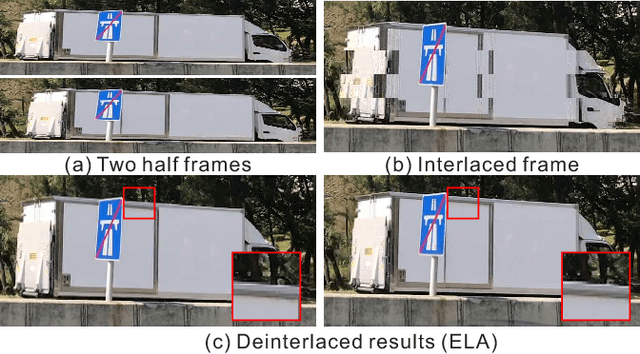

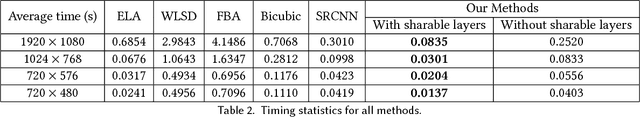

Real-time Deep Video Deinterlacing

Aug 01, 2017

Abstract: Interlacing is a widely used technique in television broadcast and video recording that doubles the perceived frame rate without increasing the bandwidth. However, it introduces annoying visual artifacts, such as flickering and silhouette "serration," during playback. Existing state-of-the-art deinterlacing methods either ignore the temporal information to provide real-time performance at lower visual quality, or estimate the motion for better deinterlacing at a higher computational cost. In this paper, we present the first deep convolutional neural network (DCNN) based method to deinterlace with high visual quality and real-time performance. Unlike existing models for super-resolution problems, which rely on the translation-invariant assumption, our proposed DCNN model utilizes the temporal information from both the odd and even half frames to reconstruct only the missing scanlines, and retains the given odd and even scanlines for producing the full deinterlaced frames. By further introducing a layer-sharable architecture, our system can achieve real-time performance on a single GPU. Experiments show that our method outperforms all existing methods in terms of reconstruction accuracy and computational performance.
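The idea of reconstructing only the missing scanlines while retaining the given ones can be sketched as a simple interleaving step. This is an illustrative sketch, not the paper's code: `weave` and its parameters are hypothetical names, and `predicted_rows` stands in for whatever a model would output for the missing lines.

```python
def weave(field_rows, predicted_rows, field_is_even=True):
    """Build a full frame by interleaving given and predicted scanlines.

    field_rows: scanlines actually present in the half frame (kept unchanged).
    predicted_rows: reconstructed scanlines for the missing positions.
    field_is_even: True if the given lines occupy rows 0, 2, 4, ...
    """
    if len(field_rows) != len(predicted_rows):
        raise ValueError("both inputs must contain the same number of scanlines")
    frame = []
    for given, predicted in zip(field_rows, predicted_rows):
        if field_is_even:
            frame.extend([given, predicted])
        else:
            frame.extend([predicted, given])
    return frame
```

Because the given scanlines are copied through untouched, any reconstruction error in this sketch is confined to the predicted rows.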
