Haibin Ling

FAMNet: Joint Learning of Feature, Affinity and Multi-dimensional Assignment for Online Multiple Object Tracking

Apr 10, 2019

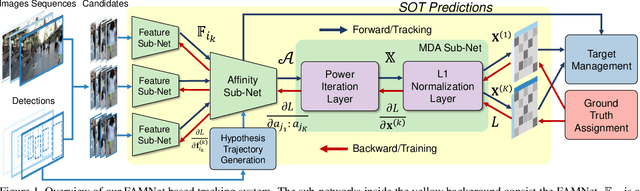

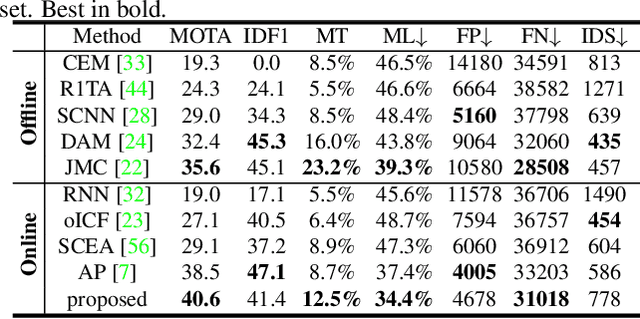

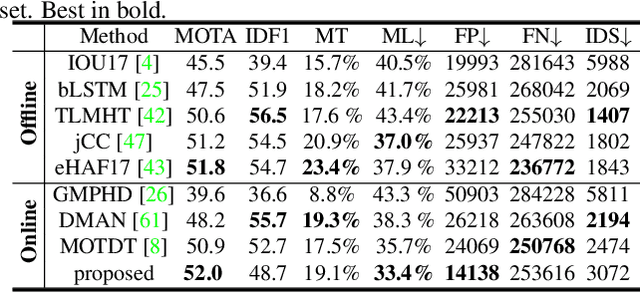

Abstract: Data association-based multiple object tracking (MOT) involves multiple separated modules that are processed or optimized differently, which results in complex method design and requires non-trivial parameter tuning. In this paper, we present an end-to-end model, named FAMNet, where Feature extraction, Affinity estimation and Multi-dimensional assignment are refined in a single network. All layers in FAMNet are designed to be differentiable and thus can be optimized jointly to learn discriminative features and a higher-order affinity model for robust MOT, supervised by a loss computed directly from the assignment ground truth. We also integrate a single object tracking technique and a dedicated target management scheme into the FAMNet-based tracking system to further recover false negatives and inhibit noisy target candidates generated by the external detector. The proposed method is evaluated on a diverse set of benchmarks including MOT2015, MOT2017, KITTI-Car and UA-DETRAC, and achieves promising performance on all of them in comparison with state-of-the-art methods.
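To make the term "multi-dimensional assignment" concrete, here is a minimal sketch that solves a tiny three-frame assignment exhaustively. It is not FAMNet's method: the abstract describes a differentiable network, whereas this brute-force search only illustrates the objective being optimized. The function name `best_assignment` and the affinity values are hypothetical.

```python
from itertools import permutations

def best_assignment(affinity):
    """Exhaustively solve a tiny 3-frame assignment problem.

    affinity[i][j][k] scores the hypothesis that detection i in frame 1,
    detection j in frame 2 and detection k in frame 3 are the same target.
    Each detection is used in exactly one trajectory.
    """
    n = len(affinity)
    best_score, best_tracks = float("-inf"), None
    for p in permutations(range(n)):        # frame-2 index chosen for each i
        for q in permutations(range(n)):    # frame-3 index chosen for each i
            score = sum(affinity[i][p[i]][q[i]] for i in range(n))
            if score > best_score:
                best_score = score
                best_tracks = [(i, p[i], q[i]) for i in range(n)]
    return best_score, best_tracks

# Two targets over three frames (illustrative values only).
affinity = [
    [[0.9, 0.1], [0.2, 0.1]],
    [[0.1, 0.2], [0.1, 0.8]],
]
score, tracks = best_assignment(affinity)
```

The search cost grows factorially with the number of detections and frames, which is one reason practical trackers avoid enumerating assignments this way.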

End-to-end Projector Photometric Compensation

Apr 08, 2019

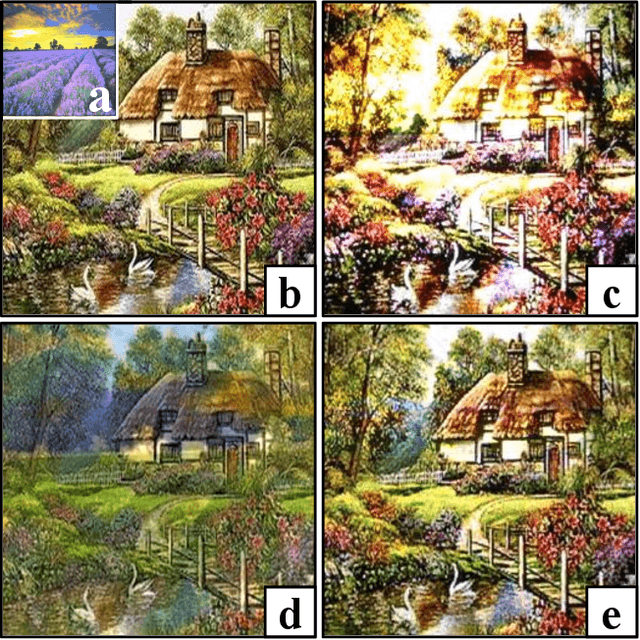

Abstract: Projector photometric compensation aims to modify a projector input image such that it can compensate for disturbance from the appearance of the projection surface. In this paper, for the first time, we formulate the compensation problem as an end-to-end learning problem and propose a convolutional neural network, named CompenNet, to implicitly learn the complex compensation function. CompenNet consists of a UNet-like backbone network and an autoencoder subnet. Such an architecture encourages rich multi-level interactions between the camera-captured projection surface image and the input image, and thus captures both photometric and environment information of the projection surface. In addition, the visual details and interaction information are carried to deeper layers along the multi-level skip convolution layers. The architecture is of particular importance for the projector compensation task, for which only a small training dataset is allowed in practice. Another contribution we make is a novel evaluation benchmark, which is independent of system setup and thus quantitatively verifiable. To the best of our knowledge, such a benchmark was not previously available, because conventional evaluation requires the hardware system to actually project the final results. Our key idea, motivated by our end-to-end problem formulation, is to use a reasonable surrogate to avoid such a projection process so as to be setup-independent. Our method is evaluated carefully on the benchmark, and the results show that our end-to-end learning solution outperforms state-of-the-art methods both qualitatively and quantitatively by a significant margin.

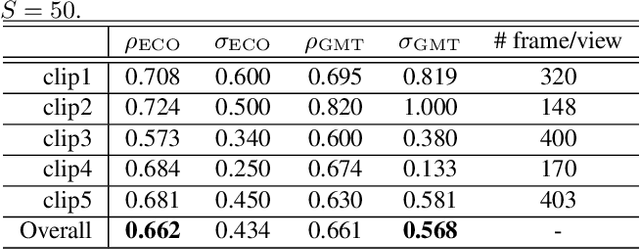

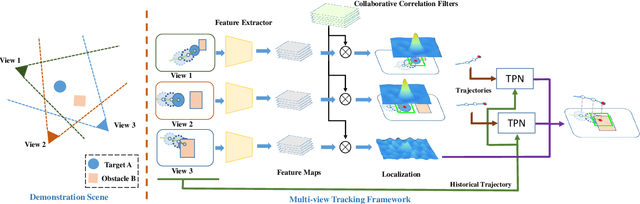

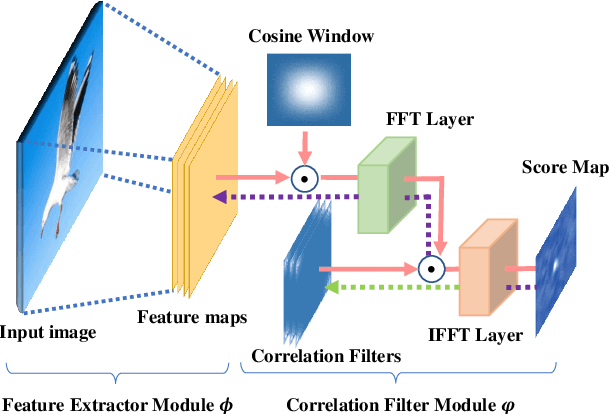

Generic Multiview Visual Tracking

Apr 04, 2019

Abstract: Recent progress in visual tracking has greatly improved tracking performance. However, challenges such as occlusion and view change remain obstacles in real-world deployment. A natural solution to these challenges is to use multiple cameras with multiview inputs, though existing systems are mostly limited to specific targets (e.g., humans), static cameras, and/or camera calibration. To break through these limitations, we propose a generic multiview tracking (GMT) framework that allows camera movement, while requiring neither a specific object model nor camera calibration. A key innovation in our framework is a cross-camera trajectory prediction network (TPN), which implicitly and dynamically encodes camera geometric relations, and hence addresses missing target issues such as occlusion. Moreover, during tracking, we assemble information across different cameras to dynamically update a novel collaborative correlation filter (CCF), which is shared among cameras to achieve robustness against view change. The two components are integrated into a correlation filter tracking framework, where the features are trained offline using existing single view tracking datasets. For evaluation, we first contribute a new generic multiview tracking dataset (GMTD) with careful annotations, and then run experiments on the GMTD and PETS2009 datasets. On both datasets, the proposed GMT algorithm shows clear advantages over state-of-the-art ones.

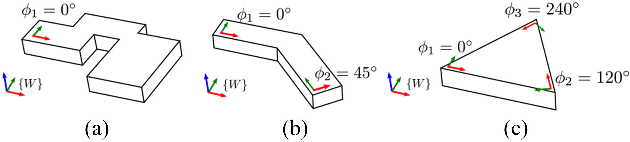

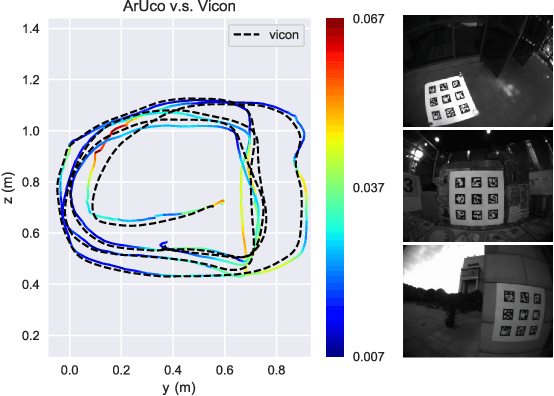

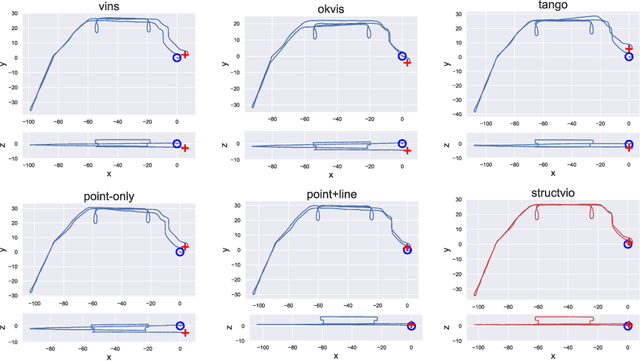

StructVIO: Visual-inertial Odometry with Structural Regularity of Man-made Environments

Mar 05, 2019

Abstract: We propose a novel visual-inertial odometry approach that adopts structural regularity in man-made environments. Instead of using the Manhattan world assumption, we use the Atlanta world model to describe such regularity. An Atlanta world is a world that contains multiple local Manhattan worlds with different heading directions. Each local Manhattan world is detected on-the-fly, and its heading is gradually refined by the state estimator as new observations arrive. By fully exploring the structural lines aligned with each local Manhattan world, our visual-inertial odometry method becomes more accurate and robust, as well as much more flexible to different kinds of complex man-made environments. Extensive benchmark tests and real-world tests show that the proposed approach outperforms existing visual-inertial systems in large-scale man-made environments.
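The Atlanta world idea can be illustrated with a small sketch that assigns a horizontal line to one of several local Manhattan worlds by its heading. This is only an illustration under stated assumptions: the function name, the 5-degree tolerance and the policy of starting a new world when nothing matches are hypothetical, and the heading refinement by the state estimator mentioned in the abstract is not modeled.

```python
def assign_to_local_world(line_heading_deg, world_headings_deg, tol_deg=5.0):
    """Return the index of the local Manhattan world a horizontal line belongs to.

    The horizontal axes of a Manhattan world repeat every 90 degrees, so
    headings are compared modulo 90. If no existing world is within tol_deg,
    a new world is started with the line's heading (hypothetical policy).
    """
    for idx, heading in enumerate(world_headings_deg):
        diff = (line_heading_deg - heading) % 90.0
        diff = min(diff, 90.0 - diff)  # smallest angular gap modulo 90
        if diff <= tol_deg:
            return idx
    world_headings_deg.append(line_heading_deg % 90.0)
    return len(world_headings_deg) - 1

worlds = [0.0]                             # first local world, heading 0 degrees
a = assign_to_local_world(91.0, worlds)    # 1 degree off world 0 (mod 90)
b = assign_to_local_world(30.0, worlds)    # no match, starts a second world
c = assign_to_local_world(122.0, worlds)   # 122 mod 90 = 32, close to world 1
```

A single Manhattan world corresponds to the special case where `worlds` never grows beyond one entry.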

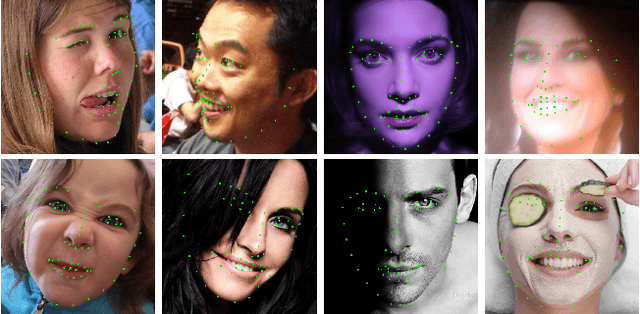

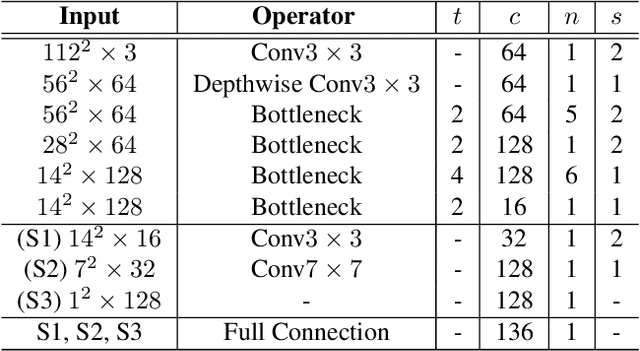

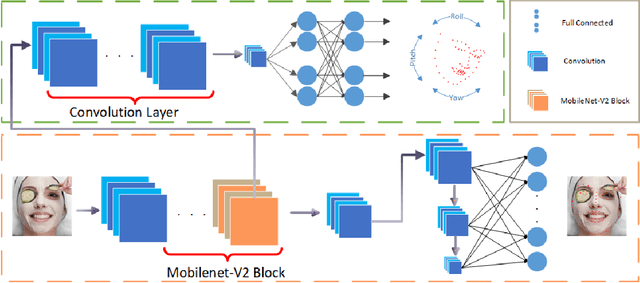

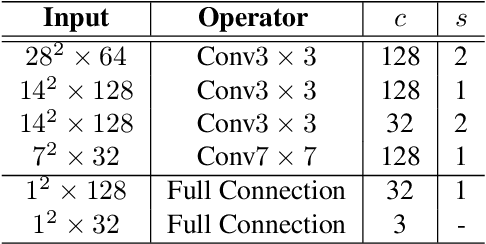

PFLD: A Practical Facial Landmark Detector

Mar 03, 2019

Abstract: Being accurate, efficient, and compact is essential to a facial landmark detector for practical use. To simultaneously consider the three concerns, this paper investigates a neat model with promising detection accuracy under wild environments (e.g., unconstrained pose, expression, lighting, and occlusion conditions) and super real-time speed on a mobile device. More concretely, we customize an end-to-end single stage network associated with acceleration techniques. During the training phase, for each sample, rotation information is estimated for geometrically regularizing landmark localization, which is then NOT involved in the testing phase. A novel loss is designed to, besides considering the geometrical regularization, mitigate the issue of data imbalance by adjusting weights of samples to different states, such as large pose, extreme lighting, and occlusion, in the training set. Extensive experiments are conducted to demonstrate the efficacy of our design and reveal its superior performance over state-of-the-art alternatives on widely-adopted challenging benchmarks, i.e., 300W (including iBUG, LFPW, AFW, HELEN, and XM2VTS) and AFLW. Our model can be merely 2.1Mb of size and reach over 140 fps per face on a mobile phone (Qualcomm ARM 845 processor) with high precision, making it attractive for large-scale or real-time applications. We have made our practical system based on PFLD 0.25X model publicly available at http://sites.google.com/view/xjguo/fld for encouraging comparisons and improvements from the community.
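As a rough illustration of a loss that combines geometric regularization with sample reweighting, the sketch below scales a squared landmark error by a pose-deviation term and a per-sample weight. The exact form is an assumption made for illustration and is not taken from the abstract; `weighted_landmark_loss`, `angle_errors_rad` and `sample_weight` are hypothetical names.

```python
import math

def weighted_landmark_loss(pred, target, angle_errors_rad, sample_weight=1.0):
    """Squared landmark error scaled by a pose-deviation term and a sample weight.

    pred and target are lists of (x, y) landmark coordinates.
    angle_errors_rad holds rotation-angle deviations (e.g. yaw, pitch, roll).
    The (1 - cos) weighting is an assumed form, chosen so that larger pose
    deviations increase the penalty on the same landmark error.
    """
    geometric = sum(1.0 - math.cos(a) for a in angle_errors_rad)
    sq_error = sum((px - tx) ** 2 + (py - ty) ** 2
                   for (px, py), (tx, ty) in zip(pred, target))
    return sample_weight * geometric * sq_error

pred = [(1.0, 0.0), (0.0, 2.0)]
target = [(0.0, 0.0), (0.0, 0.0)]
# Same landmark error, different pose deviation and sample weight.
loss_no_deviation = weighted_landmark_loss(pred, target, [0.0, 0.0, 0.0])
loss_large_pose = weighted_landmark_loss(pred, target, [math.pi / 2, 0.0, 0.0],
                                         sample_weight=2.0)
```

With this assumed form, a sample with no pose deviation contributes nothing, which a practical loss would likely avoid; the sketch only shows how the two weighting factors interact.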

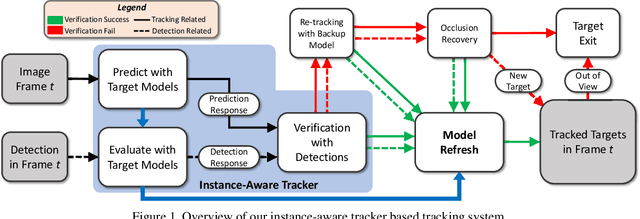

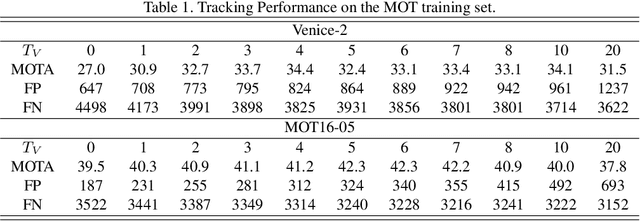

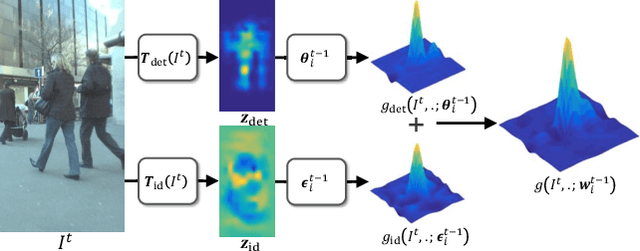

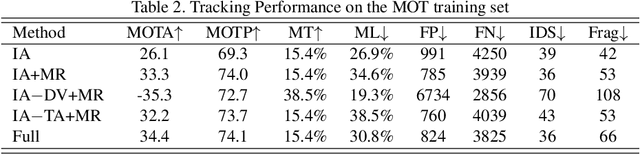

Online Multi-Object Tracking with Instance-Aware Tracker and Dynamic Model Refreshment

Feb 21, 2019

Abstract: Recent progress in model-free single object tracking (SOT) algorithms has largely inspired applying SOT to multi-object tracking (MOT) to improve robustness and to relieve dependency on the external detector. However, SOT algorithms are generally designed for distinguishing a target from its environment, and hence meet problems when a target is spatially mixed with similar objects, as observed frequently in MOT. To address this issue, in this paper we propose an instance-aware tracker to integrate SOT techniques for MOT by encoding awareness both within and between target models. In particular, we construct each target model by fusing information for distinguishing the target both from the background and from other instances (tracking targets). To conserve the uniqueness of all target models, our instance-aware tracker considers response maps from all target models and assigns spatial locations exclusively to optimize the overall accuracy. Another contribution we make is a dynamic model refreshing strategy learned by a convolutional neural network. This strategy helps to eliminate initialization noise as well as to adapt to the variation of target size and appearance. To show the effectiveness of the proposed approach, it is evaluated on the popular MOT15 and MOT16 challenge benchmarks. On both benchmarks, our approach achieves the best overall performance in comparison with published results.

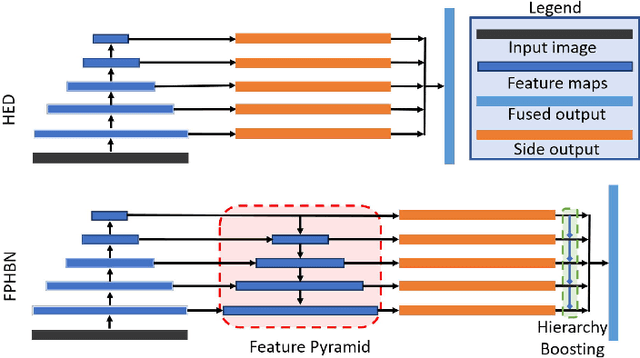

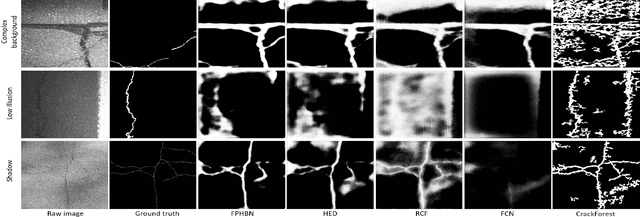

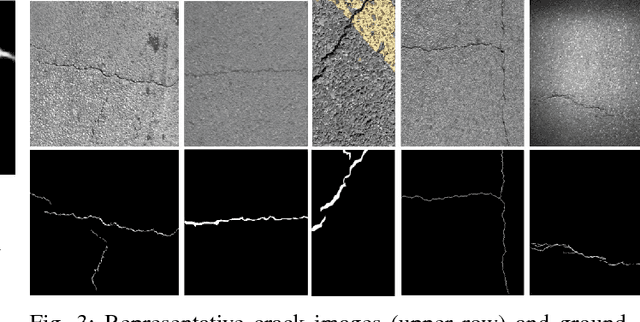

Feature Pyramid and Hierarchical Boosting Network for Pavement Crack Detection

Jan 25, 2019

Abstract: Pavement crack detection is a critical task for ensuring road safety. Manual crack detection is extremely time-consuming. Therefore, an automatic road crack detection method is required to speed up this process. However, it remains a challenging task due to the intensity inhomogeneity of cracks and the complexity of the background, e.g., the low contrast with surrounding pavements and possible shadows with similar intensity. Inspired by recent advances of deep learning in computer vision, we propose a novel network architecture, named Feature Pyramid and Hierarchical Boosting Network (FPHBN), for pavement crack detection. The proposed network integrates semantic information into low-level features for crack detection in a feature pyramid way. It also balances the contribution of both easy and hard samples to the loss by nested sample reweighting in a hierarchical way. To demonstrate the superiority and generality of the proposed method, we evaluate it on five crack datasets and compare it with state-of-the-art crack detection, edge detection, and semantic segmentation methods. Extensive experiments show that the proposed method outperforms these state-of-the-art methods in terms of accuracy and generality.

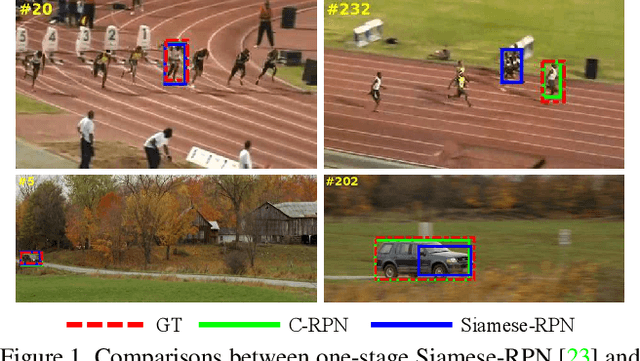

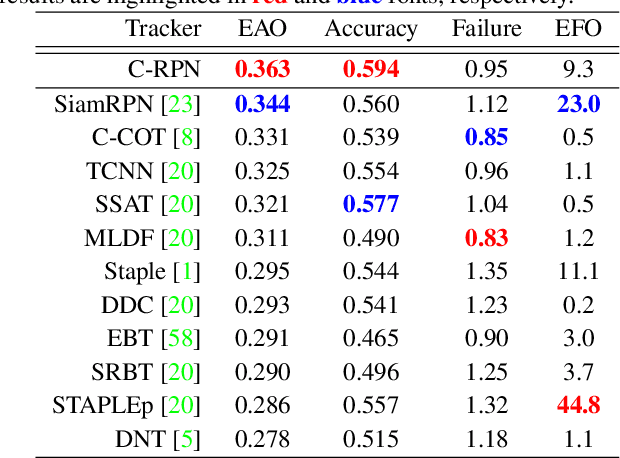

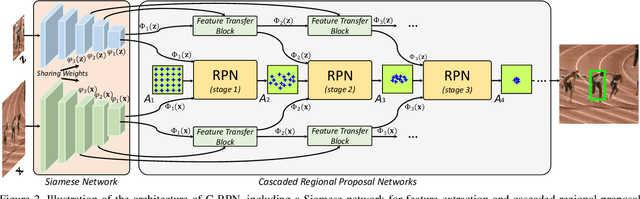

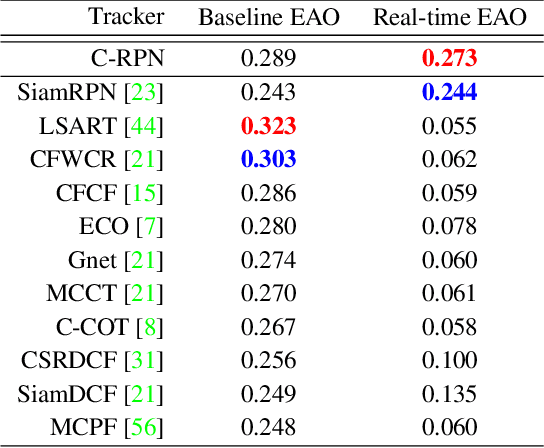

Siamese Cascaded Region Proposal Networks for Real-Time Visual Tracking

Dec 14, 2018

Abstract: Region proposal networks (RPN) have been recently combined with the Siamese network for tracking, and shown excellent accuracy with high efficiency. Nevertheless, previously proposed one-stage Siamese-RPN trackers degenerate in the presence of similar distractors and large scale variation. Addressing these issues, we propose a multi-stage tracking framework, Siamese Cascaded RPN (C-RPN), which consists of a sequence of RPNs cascaded from deep high-level to shallow low-level layers in a Siamese network. Compared to previous solutions, C-RPN has several advantages: (1) Each RPN is trained using the outputs of the RPN in the previous stage. Such a process stimulates hard negative sampling, resulting in more balanced training samples. Consequently, the RPNs are sequentially more discriminative in distinguishing difficult background (i.e., similar distractors). (2) Multi-level features are fully leveraged through a novel feature transfer block (FTB) for each RPN, further improving the discriminability of C-RPN using both high-level semantic and low-level spatial information. (3) With multiple steps of regression, C-RPN progressively refines the location and shape of the target in each RPN with adjusted anchor boxes from the previous stage, which makes localization more accurate. C-RPN is trained end-to-end with the multi-task loss function. In inference, C-RPN is deployed as it is, without any temporal adaptation, for real-time tracking. In extensive experiments on OTB-2013, OTB-2015, VOT-2016, VOT-2017, LaSOT and TrackingNet, C-RPN consistently achieves state-of-the-art results and runs in real-time.
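The cascade idea in advantage (1) can be sketched as successive filtering, where each stage only passes on anchors it is not already confident are background. This is a simplified illustration, not C-RPN itself: the function name, the 0.95 threshold and the scores are hypothetical, and box refinement and feature transfer are omitted.

```python
def cascade_filter(anchors, stage_scores, easy_neg_threshold=0.95):
    """Pass anchors through successive stages, dropping easy negatives.

    stage_scores[s][a] is the background confidence that stage s assigns to
    anchor a. An anchor survives a stage only if that stage is not already
    confident it is background, so later stages see harder samples.
    Returns the list of surviving anchors after each stage.
    """
    surviving = list(anchors)
    history = []
    for scores in stage_scores:
        surviving = [a for a in surviving if scores[a] < easy_neg_threshold]
        history.append(list(surviving))
    return history

anchors = ["a0", "a1", "a2", "a3"]
stage_scores = [
    {"a0": 0.99, "a1": 0.40, "a2": 0.60, "a3": 0.97},  # stage 1
    {"a0": 0.99, "a1": 0.10, "a2": 0.96, "a3": 0.50},  # stage 2
]
history = cascade_filter(anchors, stage_scores)
```

Because anchors removed at an early stage never reach later ones, each later stage sees a sample set with fewer trivially negative anchors, which is the balancing effect the abstract describes.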

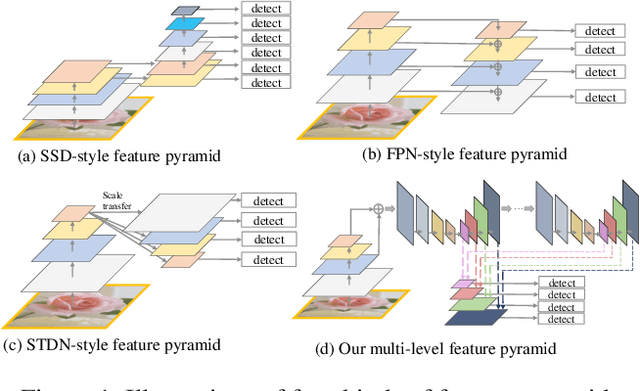

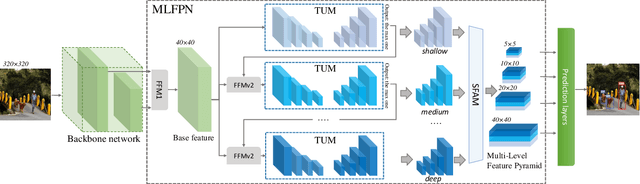

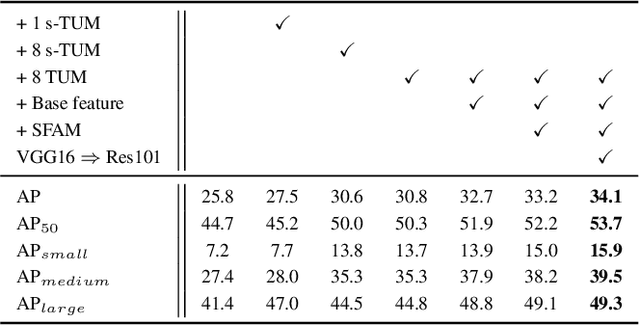

M2Det: A Single-Shot Object Detector based on Multi-Level Feature Pyramid Network

Nov 13, 2018

Abstract: Feature pyramids are widely exploited by both the state-of-the-art one-stage object detectors (e.g., DSSD, RetinaNet, RefineDet) and the two-stage object detectors (e.g., Mask R-CNN, DetNet) to alleviate the problem arising from scale variation across object instances. Although these object detectors with feature pyramids achieve encouraging results, they have some limitations because they simply construct the feature pyramid according to the inherent multi-scale, pyramidal architecture of the backbones, which are actually designed for the object classification task. In this work, we present a method called Multi-Level Feature Pyramid Network (MLFPN) to construct more effective feature pyramids for detecting objects of different scales. First, we fuse multi-level features (i.e., multiple layers) extracted by the backbone as the base feature. Second, we feed the base feature into a block of alternating joint Thinned U-shape Modules and Feature Fusion Modules and exploit the decoder layers of each U-shape module as the features for detecting objects. Finally, we gather up the decoder layers with equivalent scales (sizes) to develop a feature pyramid for object detection, in which every feature map consists of the layers (features) from multiple levels. To evaluate the effectiveness of the proposed MLFPN, we design and train a powerful end-to-end one-stage object detector we call M2Det by integrating it into the architecture of SSD, which gets better detection performance than state-of-the-art one-stage detectors. Specifically, on the MS-COCO benchmark, M2Det achieves an AP of 41.0 at a speed of 11.8 FPS with a single-scale inference strategy and an AP of 44.2 with a multi-scale inference strategy, which are new state-of-the-art results among one-stage detectors. The code will be made available at https://github.com/qijiezhao/M2Det.
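The final step, gathering decoder layers of equivalent scale across modules, can be sketched as a grouping operation. The sketch uses string placeholders instead of tensors; in a real network the grouped maps would presumably be concatenated along the channel axis, which is an assumption here. `build_pyramid` and the sizes are hypothetical.

```python
from collections import defaultdict

def build_pyramid(decoder_outputs):
    """Group decoder feature maps by spatial size across all U-shape modules.

    decoder_outputs is a list (one entry per module, shallow to deep) of
    dicts mapping a spatial size to a placeholder feature name.
    Returns {size: [features from every level at that size]}, largest first.
    """
    by_scale = defaultdict(list)
    for level_maps in decoder_outputs:
        for size, feature in level_maps.items():
            by_scale[size].append(feature)
    return dict(sorted(by_scale.items(), reverse=True))

decoder_outputs = [
    {40: "m1_s40", 20: "m1_s20", 10: "m1_s10"},  # module 1 decoder layers
    {40: "m2_s40", 20: "m2_s20", 10: "m2_s10"},  # module 2 decoder layers
]
pyramid = build_pyramid(decoder_outputs)
```

Each pyramid entry then holds features from every level at one scale, matching the abstract's statement that every feature map consists of layers from multiple levels.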

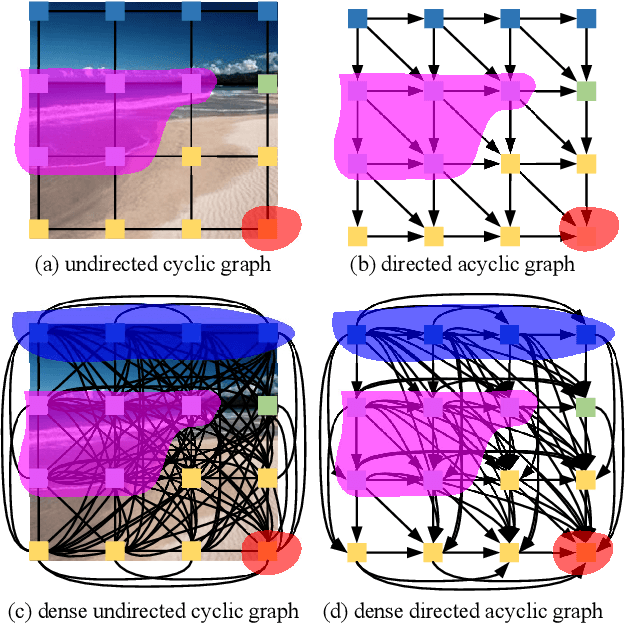

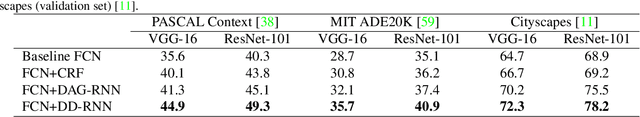

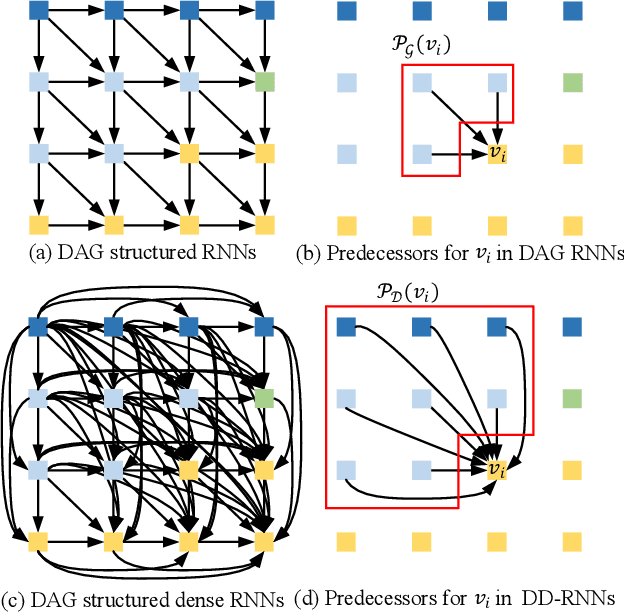

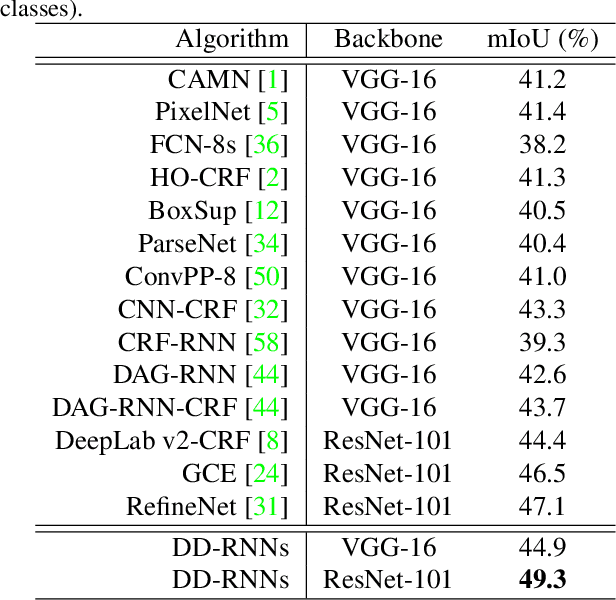

Scene Parsing via Dense Recurrent Neural Networks with Attentional Selection

Nov 09, 2018

Abstract: Recurrent neural networks (RNNs) have shown the ability to improve scene parsing through capturing long-range dependencies among image units. In this paper, we propose dense RNNs for scene labeling by exploring various long-range semantic dependencies among image units. Different from existing RNN-based approaches, our dense RNNs are able to capture richer contextual dependencies for each image unit by enabling immediate connections between each pair of image units, which significantly enhances their discriminative power. Besides, to select relevant dependencies and restrain irrelevant ones for each unit from the dense connections, we introduce an attention model into dense RNNs. The attention model automatically assigns more importance to helpful dependencies and less weight to irrelevant ones. By integrating with convolutional neural networks (CNNs), we develop an end-to-end scene labeling system. Extensive experiments on three large-scale benchmarks demonstrate that the proposed approach can improve the baselines by large margins and outperform other state-of-the-art algorithms.
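The role of the attention model, giving helpful dependencies more weight than irrelevant ones, can be illustrated with a generic softmax attention over context vectors. The abstract does not specify the scoring function, so the dot-product scoring and the name `attend` are assumptions for illustration only.

```python
import math

def attend(query, contexts):
    """Softmax-weighted sum of context vectors, scored by dot product with query.

    Returns (weights, summary): one weight per context vector, summing to 1,
    and the weighted combination of the context vectors.
    """
    scores = [sum(q * c for q, c in zip(query, ctx)) for ctx in contexts]
    peak = max(scores)                        # subtract max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(query)
    summary = [sum(w * ctx[d] for w, ctx in zip(weights, contexts))
               for d in range(dim)]
    return weights, summary

# One image unit (query) attending to two other units (contexts).
query = [1.0, 0.0]
contexts = [[2.0, 0.0], [0.0, 2.0]]
weights, summary = attend(query, contexts)
```

Here the first context aligns with the query and receives the larger weight, so it dominates the summary vector.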
