Haibin Ling

Vision Meets Drones: Past, Present and Future

Jan 16, 2020

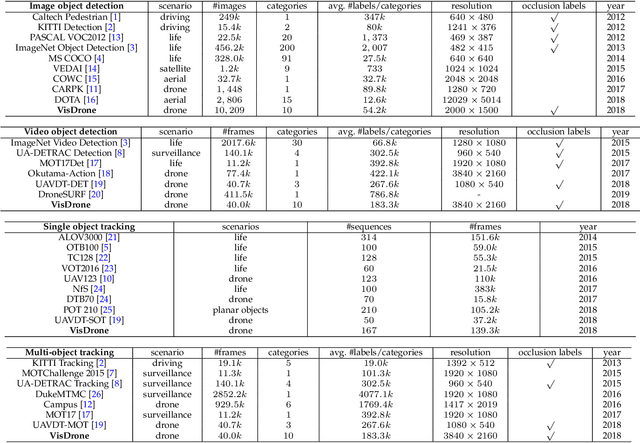

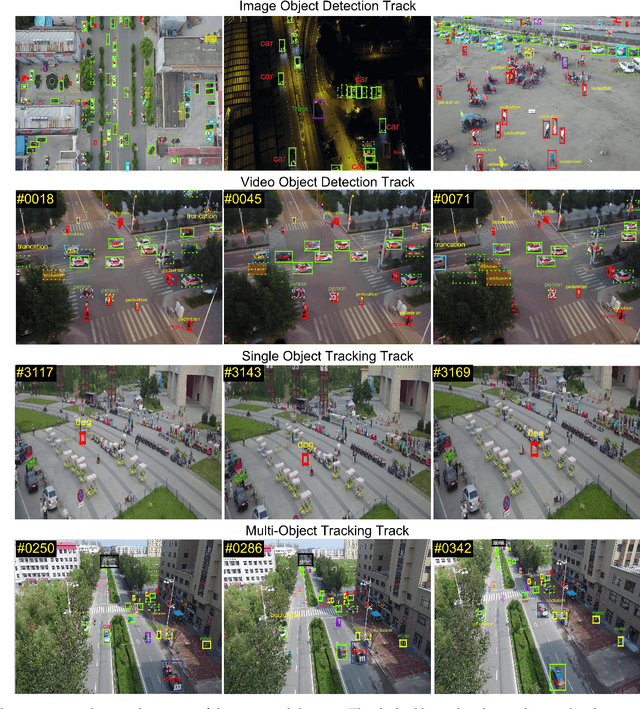

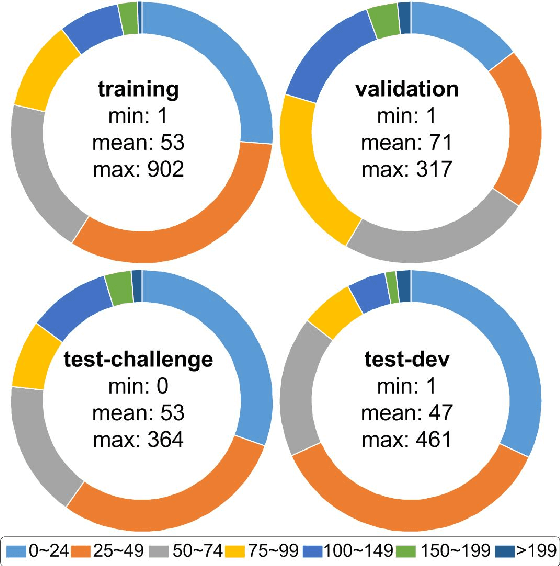

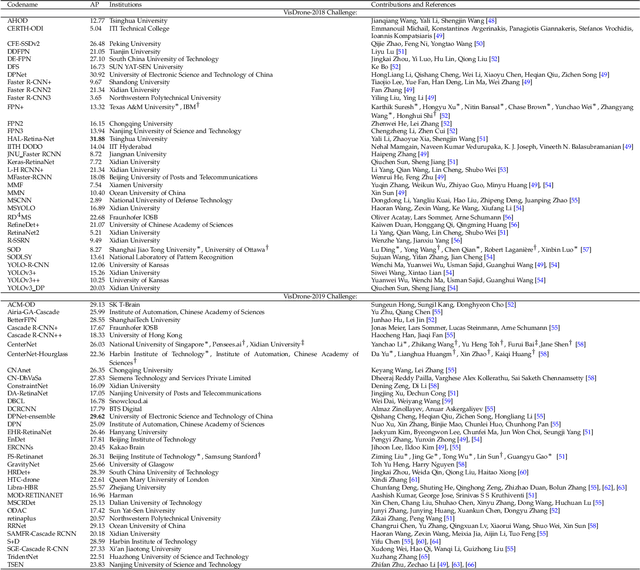

Abstract: Drones, or general UAVs, equipped with cameras have been rapidly deployed in a wide range of applications, including agriculture, aerial photography, fast delivery, and surveillance. Consequently, automatic understanding of visual data collected from drones is in high demand, bringing computer vision and drones ever closer together. To promote and track the development of object detection and tracking algorithms, we have organized two challenge workshops in conjunction with the European Conference on Computer Vision (ECCV) 2018 and the IEEE International Conference on Computer Vision (ICCV) 2019, attracting more than 100 teams from around the world. We provide a large-scale drone-captured dataset, VisDrone, which includes four tracks: (1) image object detection, (2) video object detection, (3) single object tracking, and (4) multi-object tracking. This paper first presents a thorough review of object detection and tracking datasets and benchmarks, and discusses the challenges of collecting large-scale drone-based object detection and tracking datasets with fully manual annotations. After that, we describe our VisDrone dataset, which was captured over various urban/suburban areas of 14 different cities across China from north to south. Being the largest such dataset ever published, VisDrone enables extensive evaluation and investigation of visual analysis algorithms on the drone platform. We provide a detailed analysis of the current state of the field of large-scale object detection and tracking on drones, summarize the challenge, and propose future directions and improvements. We expect the benchmark to greatly boost research and development in video analysis on drone platforms. All the datasets and experimental results can be downloaded from the website: https://github.com/VisDrone/VisDrone-Dataset.

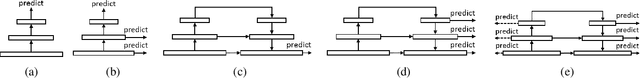

MFPN: A Novel Mixture Feature Pyramid Network of Multiple Architectures for Object Detection

Dec 20, 2019

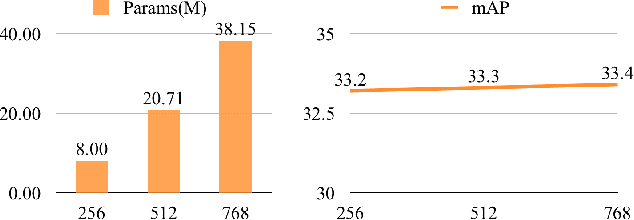

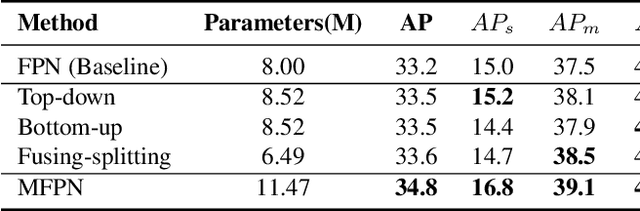

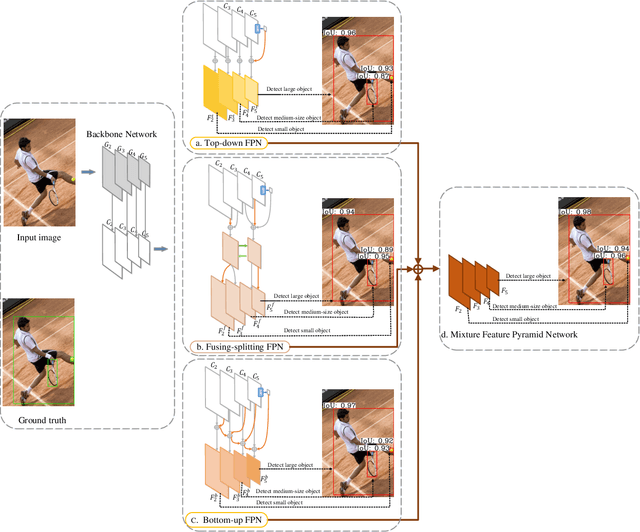

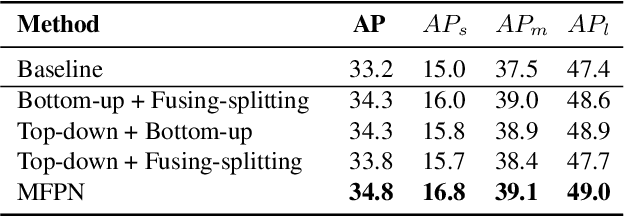

Abstract: Feature pyramids are widely exploited in many detectors to solve the scale variation problem in object detection. In this paper, we first investigate Feature Pyramid Network (FPN) architectures and briefly categorize them into three typical fashions: top-down, bottom-up, and fusing-splitting, which have their own merits for detecting small objects, large objects, and medium-sized objects, respectively. Further, we design three FPNs of different architectures and propose a novel Mixture Feature Pyramid Network (MFPN), which inherits the merits of all three kinds of FPNs by assembling them in a parallel multi-branch architecture and mixing the features. MFPN can significantly enhance both one-stage and two-stage FPN-based detectors, with about a 2 percent Average Precision (AP) increment on the MS-COCO benchmark, at little cost in running time. By simply assembling MFPN with the one-stage and two-stage baseline detectors, we achieve competitive single-model detection results on the COCO detection benchmark without bells and whistles.
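As a rough illustration of the top-down fashion mentioned in the abstract, the sketch below merges backbone features from the coarsest level to the finest by upsampling and adding. It is a simplified assumption, not the MFPN implementation: the function names `upsample2x` and `top_down_pyramid` are hypothetical, the learned lateral and smoothing convolutions of a real FPN are omitted, and the bottom-up and fusing-splitting branches are not shown.

```python
import numpy as np

def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def top_down_pyramid(features):
    """Merge features ordered fine -> coarse (each level half the size of the previous).

    Assumption: all levels already share the same channel count, so the
    1x1 lateral convolutions of a real FPN are left out.
    """
    merged = [features[-1]]                      # start from the coarsest level
    for lateral in reversed(features[:-1]):      # walk towards finer levels
        merged.append(lateral + upsample2x(merged[-1]))
    return merged[::-1]                          # return fine -> coarse again

# Toy input: three levels of constant features at 16x16, 8x8 and 4x4.
levels = [np.ones((4, 16 // 2**i, 16 // 2**i)) for i in range(3)]
pyramid = top_down_pyramid(levels)
print([p.shape for p in pyramid])
```

In this toy setting the finest output accumulates contributions from all coarser levels, which is the intuition behind top-down pathways helping small-object detection.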

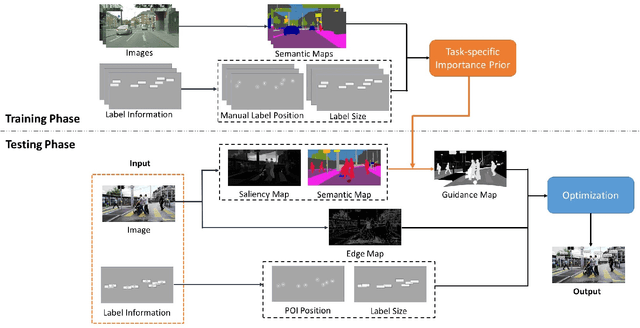

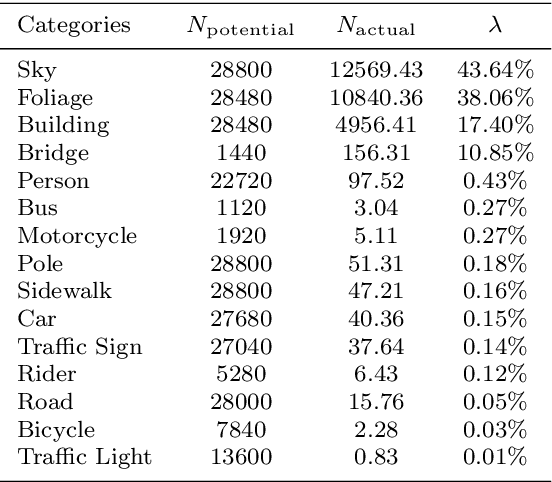

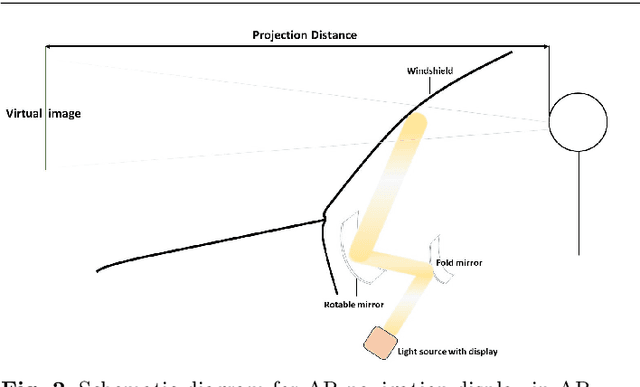

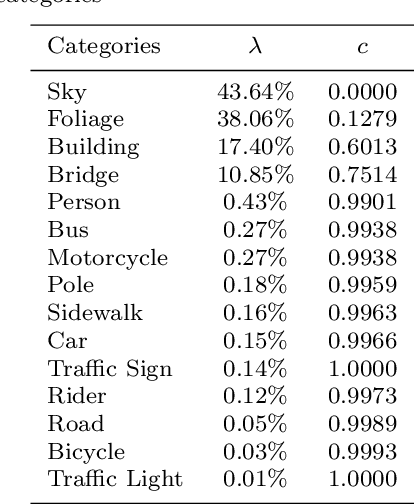

Semantic-Aware Label Placement for Augmented Reality in Street View

Dec 15, 2019

Abstract: In an augmented reality (AR) application, placing labels in a manner that is clear and readable without occluding critical information from the real world can be a challenging problem. This paper introduces a label placement technique for AR used in street view scenarios. We propose a semantic-aware, task-specific label placement method that identifies potentially important image regions through a novel feature map, which we refer to as a guidance map. Given an input image, its saliency information, semantic information, and the task-specific importance prior are integrated into the guidance map for our labeling task. To learn the task prior, we created a label placement dataset with the users' labeling preferences, which we also use for evaluation. Our solution encodes the constraints for placing labels in an optimization problem to obtain the final label layout, and the labels are placed in appropriate positions to reduce the chances of overlaying important real-world objects in street view AR scenarios. The experimental validation clearly shows the benefits of our method over previous solutions in AR street view navigation and similar applications.
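The idea of a guidance map can be sketched as follows, under loudly stated assumptions: the three cues are combined with a plain weighted sum (the abstract does not say how they are integrated), and a single label is placed by exhaustive search for the window covering the least importance, which stands in for the paper's optimization problem. The names `guidance_map` and `best_label_position` are hypothetical.

```python
import numpy as np

def guidance_map(saliency, semantic, prior, weights=(1.0, 1.0, 1.0)):
    """Combine three importance cues into one map (weighted sum is an assumption)."""
    ws, wm, wp = weights
    g = ws * saliency + wm * semantic + wp * prior
    peak = g.max()
    return g / peak if peak > 0 else g

def best_label_position(guidance, label_h, label_w):
    """Return the top-left (row, col) of the label window covering the least importance."""
    H, W = guidance.shape
    integral = np.zeros((H + 1, W + 1))
    integral[1:, 1:] = guidance.cumsum(axis=0).cumsum(axis=1)
    # Sum of guidance inside every label_h x label_w window, via the integral image.
    costs = (integral[label_h:, label_w:] - integral[:-label_h, label_w:]
             - integral[label_h:, :-label_w] + integral[:-label_h, :-label_w])
    r, c = np.unravel_index(np.argmin(costs), costs.shape)
    return int(r), int(c)

# Toy scene: the left half of a 4x6 image is salient, the right half is free.
saliency = np.zeros((4, 6))
saliency[:, :3] = 1.0
empty = np.zeros((4, 6))
g = guidance_map(saliency, empty, empty)
position = best_label_position(g, label_h=2, label_w=2)
print(position)
```

A real layout with several labels would also need non-overlap and anchor-distance constraints, which this single-label search ignores.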

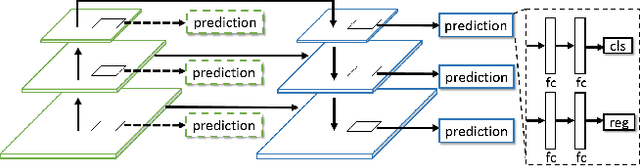

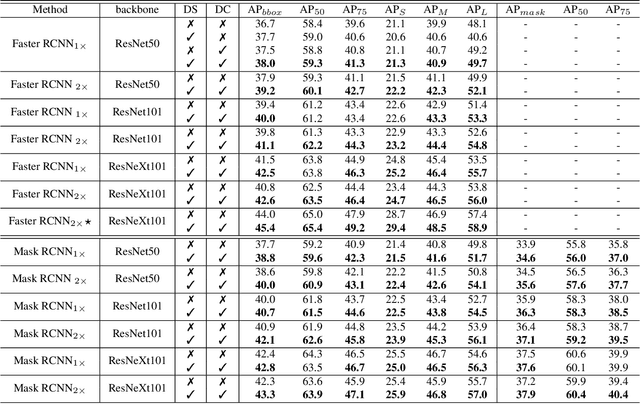

Dually Supervised Feature Pyramid for Object Detection and Segmentation

Dec 13, 2019

Abstract: The feature pyramid architecture has been broadly adopted in object detection and segmentation to deal with the multi-scale problem. However, in this paper we show that the capacity of the architecture has not been fully explored due to inadequate utilization of the supervision information. This insufficient utilization is caused by degradation of the supervision signal in back propagation. Motivated by this, we propose a dually supervised method, named dually supervised FPN (DSFPN), to enhance the supervision signal when training the feature pyramid network (FPN). In particular, DSFPN is constructed by attaching extra prediction (i.e., detection or segmentation) heads to the bottom-up subnet of FPN. Hence, the features can be optimized by the additional heads before being forwarded to subsequent networks. Further, the auxiliary heads can serve as a regularization term to facilitate model training. In addition, to strengthen the capability of the detection heads in DSFPN for handling two inhomogeneous tasks, i.e., classification and regression, the originally shared hidden feature space is separated by decoupling the classification and regression subnets. To demonstrate the generalizability, effectiveness, and efficiency of the proposed method, DSFPN is integrated into four representative detectors (Faster RCNN, Mask RCNN, Cascade RCNN, and Cascade Mask RCNN) and assessed on the MS COCO dataset. Promising precision improvement, state-of-the-art performance, and negligible additional computational cost are demonstrated through extensive experiments. Code will be provided.

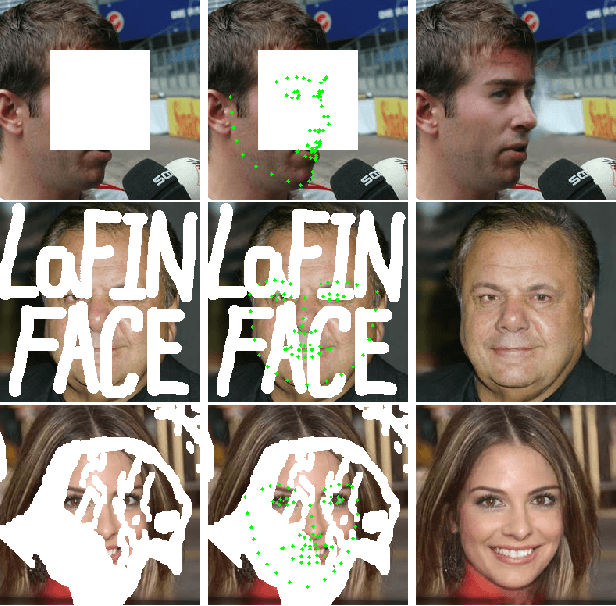

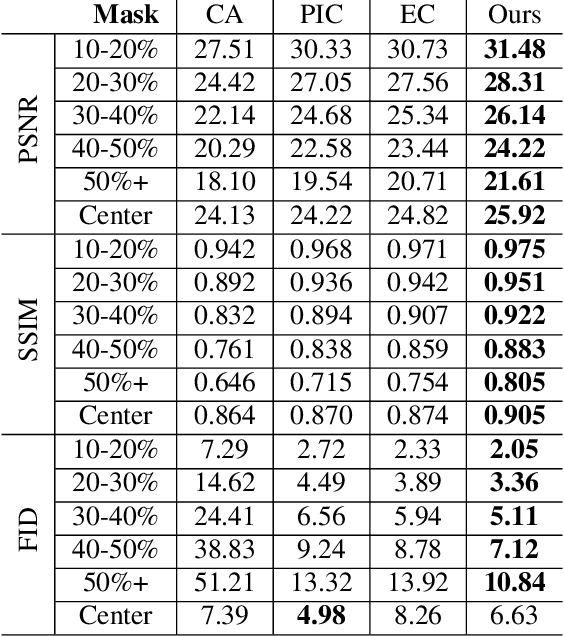

LaFIn: Generative Landmark Guided Face Inpainting

Nov 26, 2019

Abstract: It is challenging to inpaint face images in the wild due to the large variation in appearance, such as different poses, expressions, and occlusions. A good inpainting algorithm should guarantee the realism of the output, including the topological structure among eyes, nose, and mouth, as well as attribute consistency in pose, gender, ethnicity, expression, etc. This paper studies an effective deep learning based strategy to deal with these issues, which comprises a facial landmark predicting subnet and an image inpainting subnet. Concretely, given a partial observation, the landmark predictor aims to provide the structural information (e.g., topological relationship and expression) of incomplete faces, while the inpaintor generates plausible appearance (e.g., gender and ethnicity) conditioned on the predicted landmarks. Experiments on the CelebA-HQ and CelebA datasets are conducted to reveal the efficacy of our design and to demonstrate its superiority over state-of-the-art alternatives both qualitatively and quantitatively. In addition, we assume that high-quality completed faces together with their landmarks can be utilized as augmented data to further improve the performance of (any) landmark predictor, which is corroborated by experimental results on the 300W and WFLW datasets.

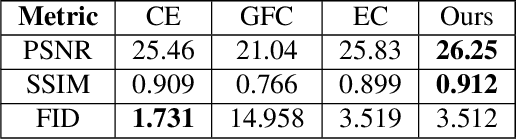

TracKlinic: Diagnosis of Challenge Factors in Visual Tracking

Nov 25, 2019

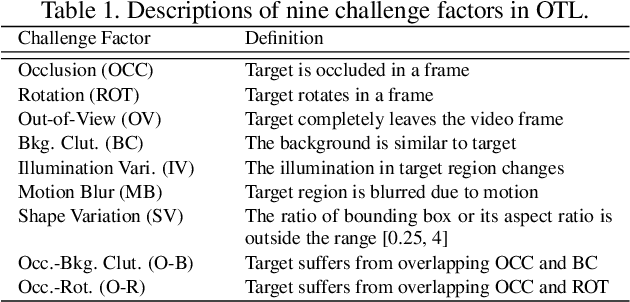

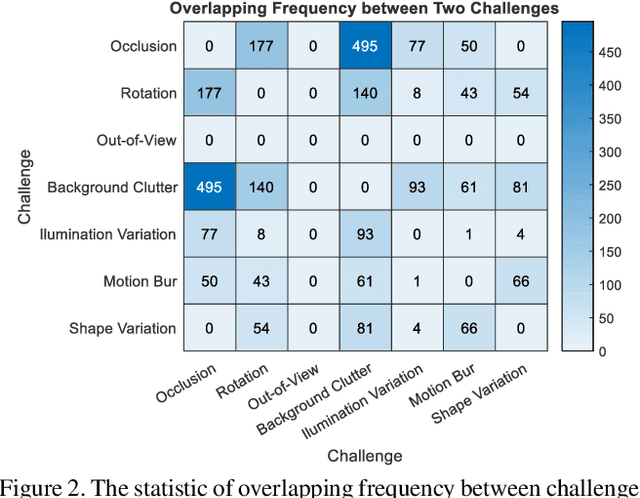

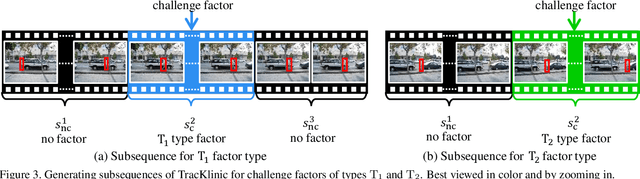

Abstract: Generic visual tracking is difficult due to many challenge factors (e.g., occlusion, blur, etc.). Each of these factors may cause serious problems for a tracking algorithm, and when they occur together they can make things even more complicated. Despite a great deal of effort devoted to understanding the behavior of tracking algorithms, reliable and quantifiable ways of studying per-factor tracking behavior remain barely available. Addressing this issue, in this paper we contribute to the community a tracking diagnosis toolkit, TracKlinic, for diagnosing challenge factors of tracking algorithms. TracKlinic consists of two novel components focusing on the data and analysis aspects, respectively. For the data component, we carefully prepare a set of 2,390 annotated videos, each involving one and only one major challenge factor. When analyzing an algorithm for a specific challenge factor, this one-factor-per-sequence rule greatly limits the disturbance from other factors and consequently leads to more faithful analysis. For the analysis component, given the tracking results on all sequences, it investigates the behavior of the tracker under each individual factor and generates the report automatically. With TracKlinic, a thorough study is conducted on ten state-of-the-art trackers on nine challenge factors (including two compound ones). The results suggest that heavy shape variation and occlusion are the two most challenging factors faced by most trackers. In addition, out-of-view, though it does not happen frequently, is often fatal. By sharing TracKlinic, we expect to make it much easier to diagnose tracking algorithms, and thus to facilitate developing better ones.
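The one-factor-per-sequence rule makes per-factor analysis a simple grouping problem, which the following sketch illustrates. It is not the TracKlinic toolkit: the function `per_factor_report` is hypothetical, the per-sequence score is assumed to be a single number such as a success rate, and the values in the example are made-up toy numbers rather than results from the paper.

```python
from collections import defaultdict

def per_factor_report(results):
    """Average a tracker's per-sequence scores by challenge factor.

    results: iterable of (factor, score) pairs, one per sequence. Because
    each sequence carries exactly one factor, no score is counted twice.
    Returns a dict ordered from the hardest factor (lowest mean) to the easiest.
    """
    by_factor = defaultdict(list)
    for factor, score in results:
        by_factor[factor].append(score)
    means = {factor: sum(s) / len(s) for factor, s in by_factor.items()}
    return dict(sorted(means.items(), key=lambda item: item[1]))

# Toy, made-up scores for illustration only.
toy_results = [
    ("occlusion", 0.25), ("occlusion", 0.5),
    ("blur", 0.75), ("blur", 0.5),
    ("out-of-view", 0.25),
]
report = per_factor_report(toy_results)
for factor, mean_score in report.items():
    print(f"{factor}: {mean_score:.3f}")
```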

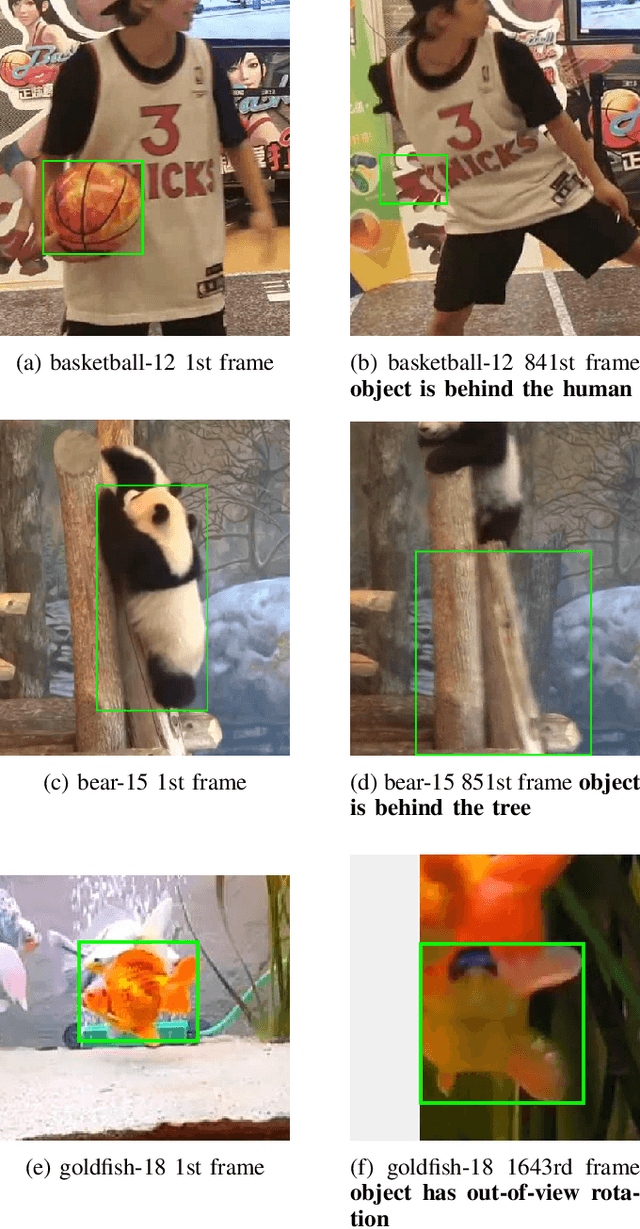

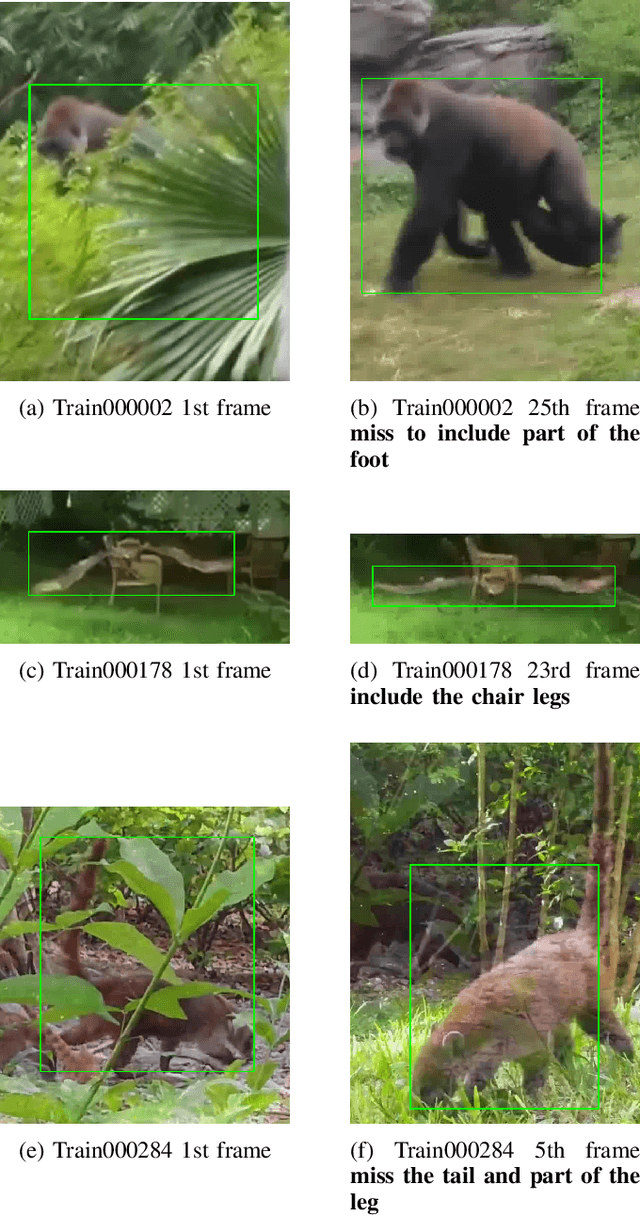

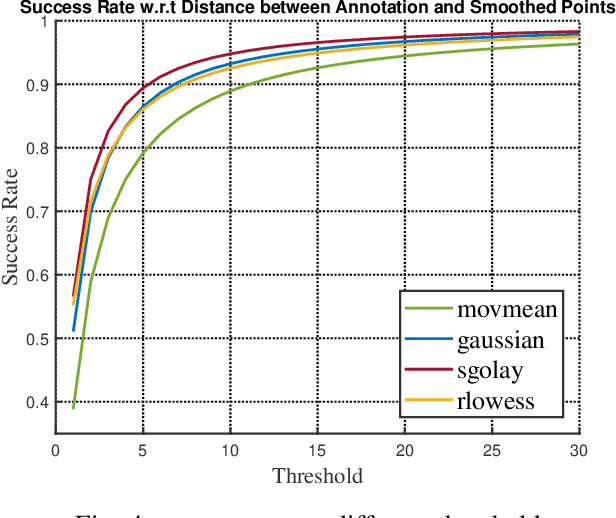

Improving Human Annotation in Single Object Tracking

Nov 07, 2019

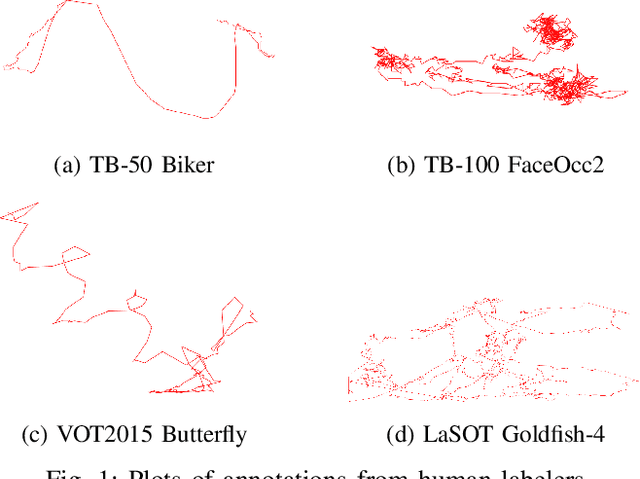

Abstract: Human annotation is always considered the ground truth in video object tracking tasks. It is used for both training and evaluation. Thus, ensuring its high quality is important for the success of trackers and for comparisons between them. In this paper, we give a qualitative and quantitative analysis of existing human annotations. We show that human annotation tends to be non-smooth and is prone to errors under partial visibility and deformation. We propose a trajectory smoothing strategy with the ability to handle moving scenes. We use a two-step adaptive image alignment algorithm to find the canonical view of the video sequence. We then use different techniques to smooth the trajectories to a certain degree. Once we convert back to the original image coordinates, we can compare with the human annotation. The experimental results show that we can obtain more consistent trajectories. To a certain degree, smoothing can also slightly improve the trained model. Beyond a certain threshold, however, the smoothing error starts to outweigh the benefit. Overall, our method could help extrapolate missing annotation frames, identify and correct human annotation outliers, and improve the quality of training data.
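A minimal sketch of trajectory smoothing and outlier flagging is given below. The abstract does not name the smoothing techniques, so a plain moving average is swapped in here as an assumption, and the image alignment step to a canonical view is skipped entirely. The names `smooth_trajectory` and `flag_outliers`, the window size, and the threshold are all hypothetical.

```python
import numpy as np

def smooth_trajectory(centers, window=3):
    """Moving-average smoothing of an (N, 2) array of box centers (window must be odd)."""
    centers = np.asarray(centers, dtype=float)
    pad = window // 2
    # Repeat the end points so the output has the same length as the input.
    padded = np.pad(centers, ((pad, pad), (0, 0)), mode="edge")
    kernel = np.ones(window) / window
    columns = [np.convolve(padded[:, d], kernel, mode="valid")
               for d in range(centers.shape[1])]
    return np.stack(columns, axis=1)

def flag_outliers(centers, smoothed, threshold):
    """Indices of frames whose annotation deviates from the smoothed path by more than threshold."""
    deviation = np.linalg.norm(np.asarray(centers, dtype=float) - smoothed, axis=1)
    return np.flatnonzero(deviation > threshold)

# Toy trajectory: steady motion along x with one annotation glitch at frame 3.
raw = [(float(t), 0.0) for t in range(7)]
raw[3] = (3.0, 10.0)
smoothed = smooth_trajectory(raw, window=3)
outliers = flag_outliers(raw, smoothed, threshold=5.0)
print(outliers)
```

A larger window smooths more aggressively, which mirrors the trade-off noted in the abstract: past some point the smoothing error outweighs the gain in consistency.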

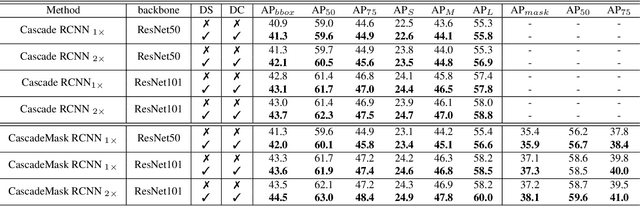

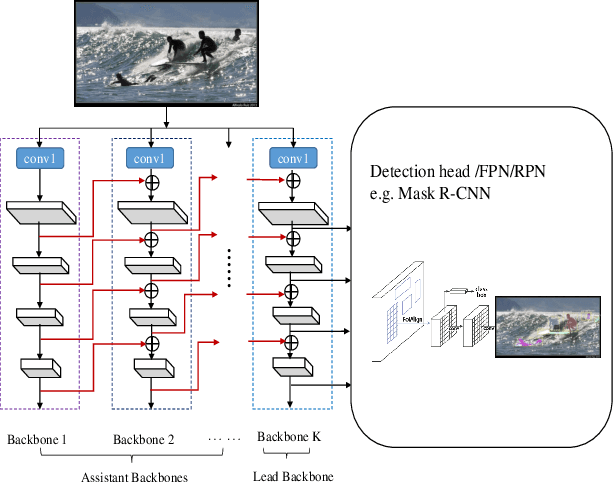

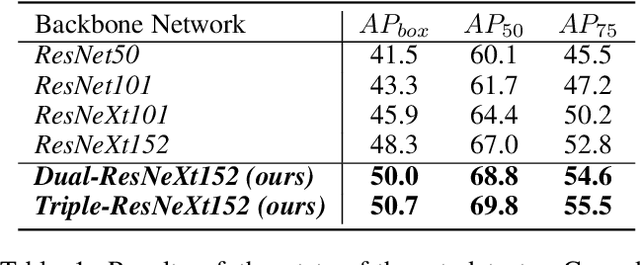

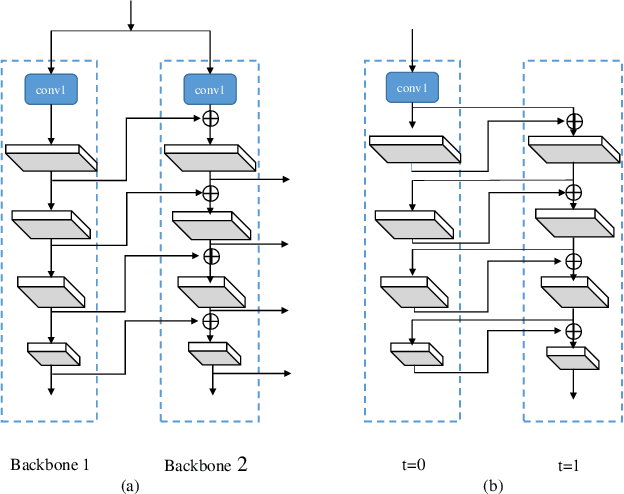

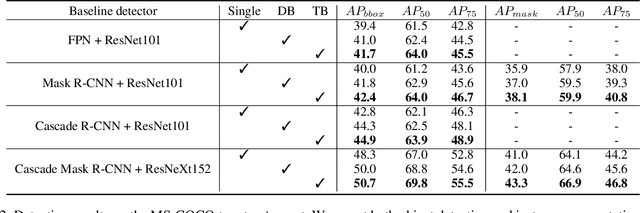

CBNet: A Novel Composite Backbone Network Architecture for Object Detection

Sep 09, 2019

Abstract: In existing CNN based detectors, the backbone network is a very important component for basic feature extraction, and the performance of the detectors depends heavily on it. In this paper, we aim to achieve better detection performance by building a more powerful backbone from existing backbones like ResNet and ResNeXt. Specifically, we propose a novel strategy for assembling multiple identical backbones by composite connections between adjacent backbones, to form a more powerful backbone named Composite Backbone Network (CBNet). In this way, CBNet iteratively feeds the output features of the previous backbone, namely high-level features, as part of the input features to the succeeding backbone, in a stage-by-stage fashion, and finally the feature maps of the last backbone (named the Lead Backbone) are used for object detection. We show that CBNet can be very easily integrated into most state-of-the-art detectors and significantly improves their performance. For example, it boosts the mAP of FPN, Mask R-CNN, and Cascade R-CNN on the COCO dataset by about 1.5 to 3.0 percent. Meanwhile, experimental results show that the instance segmentation results can also be improved. In particular, by simply integrating the proposed CBNet into the baseline detector Cascade Mask R-CNN, we achieve a new state-of-the-art result on the COCO dataset (mAP of 53.3) with a single model, which demonstrates the effectiveness of the proposed CBNet architecture. Code will be made available at https://github.com/PKUbahuangliuhe/CBNet.
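The stage-by-stage feeding described in the abstract can be pictured with the toy sketch below. It is an interpretation of the abstract, not the released CBNet code: the exact wiring (adding the upsampled output of stage l of the previous backbone to the input of stage l of the next one) is an assumption, each "stage" is a parameter-free pooling stand-in rather than a ResNet stage, and the names `stage`, `upsample2x`, and `composite_backbone` are hypothetical.

```python
import numpy as np

def stage(x):
    """Toy stand-in for a backbone stage: 2x2 average pooling followed by ReLU."""
    c, h, w = x.shape
    pooled = x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))
    return np.maximum(pooled, 0.0)

def upsample2x(x):
    """Nearest-neighbour 2x upsampling, standing in for the composite connection."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def composite_backbone(image, num_backbones=2, num_stages=3):
    """Run identical backbones in sequence; each stage also receives the
    same-stage output of the previous backbone (assumed wiring)."""
    prev_outputs = None
    for _ in range(num_backbones):
        x = image
        outputs = []
        for level in range(num_stages):
            if prev_outputs is not None:
                x = x + upsample2x(prev_outputs[level])   # composite connection
            x = stage(x)
            outputs.append(x)
        prev_outputs = outputs
    return prev_outputs    # feature maps of the last (lead) backbone

image = np.ones((3, 16, 16))
lead_features = composite_backbone(image)
print([f.shape for f in lead_features])
```

Only the lead backbone's feature maps are returned, matching the statement that the last backbone's outputs are the ones used for detection.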

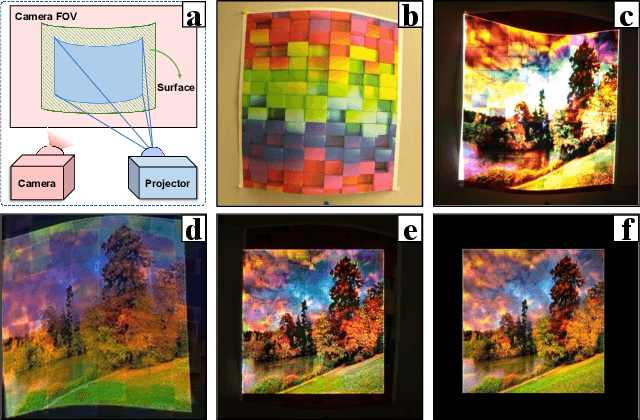

CompenNet++: End-to-end Full Projector Compensation

Aug 17, 2019

Abstract: Full projector compensation aims to modify a projector input image such that it can compensate for both geometric and photometric disturbances of the projection surface. Traditional methods usually solve the two parts separately, although they are known to correlate with each other. In this paper, we propose the first end-to-end solution, named CompenNet++, to solve the two problems jointly. Our work non-trivially extends CompenNet, which was recently proposed for photometric compensation with promising performance. First, we propose a novel geometric correction subnet, which is designed with a cascaded coarse-to-fine structure to learn the sampling grid directly from photometric sampling images. Second, by concatenating the geometric correction subnet with CompenNet, CompenNet++ accomplishes full projector compensation and is end-to-end trainable. Third, after training, we significantly simplify both the geometric and photometric compensation parts, and hence greatly improve the running time efficiency. Moreover, we construct the first setup-independent full compensation benchmark to facilitate the study of this topic. In our thorough experiments, our method shows clear advantages over prior art, with promising compensation quality while being practically convenient.

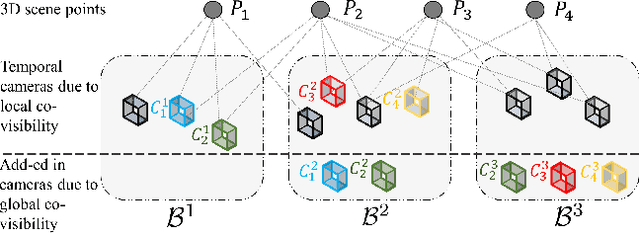

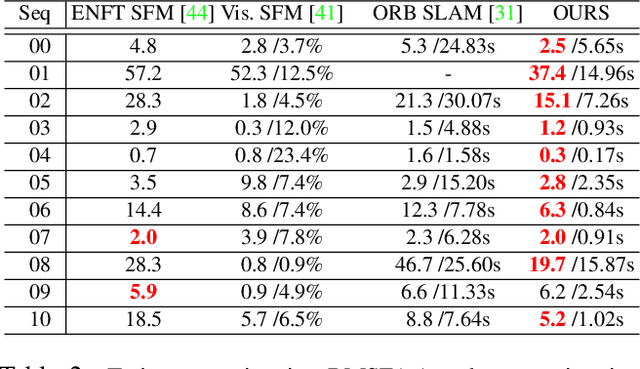

Hybrid Camera Pose Estimation with Online Partitioning

Aug 05, 2019

Abstract: This paper presents a hybrid real-time camera pose estimation framework with a novel partitioning scheme and introduces motion averaging to on-line monocular systems. Breaking through the limitations of fixed-size temporal partitioning in most conventional pose estimation mechanisms, the proposed approach significantly improves the accuracy of local bundle adjustment by gathering spatially-strongly-connected cameras into each block. With dynamic initialization using intermediate computation values, our proposed self-adaptive Levenberg-Marquardt solver achieves a quadratic convergence rate, further enhancing the efficiency of the local optimization. Moreover, the dense data association between blocks, by virtue of our co-visibility-based partitioning, enables us to explore and implement motion averaging to efficiently align the blocks globally, updating camera motion estimates on-the-fly. Experimental results on benchmarks convincingly demonstrate the practicality and robustness of our proposed approach, which outperforms conventional bundle adjustment by orders of magnitude.
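For readers unfamiliar with Levenberg-Marquardt, the sketch below shows the textbook form of the algorithm with a simple damping update, applied to a toy curve-fitting problem instead of bundle adjustment. It is not the paper's self-adaptive solver: the halving and doubling rule for the damping factor, the function name `levenberg_marquardt`, and the exponential model are all assumptions chosen for illustration.

```python
import numpy as np

def levenberg_marquardt(residual, jacobian, x0, damping=1e-3, iterations=50):
    """Textbook LM: damped Gauss-Newton steps with a simple damping schedule."""
    x = np.asarray(x0, dtype=float)
    cost = 0.5 * np.sum(residual(x) ** 2)
    for _ in range(iterations):
        r, J = residual(x), jacobian(x)
        # Solve the damped normal equations (J^T J + damping * I) step = -J^T r.
        step = np.linalg.solve(J.T @ J + damping * np.eye(x.size), -J.T @ r)
        new_cost = 0.5 * np.sum(residual(x + step) ** 2)
        if new_cost < cost:        # accept: behave more like Gauss-Newton
            x, cost, damping = x + step, new_cost, damping * 0.5
        else:                      # reject: behave more like gradient descent
            damping *= 2.0
    return x

# Toy problem: recover (a, b) in y = a * exp(b * t) from noise-free samples.
t = np.linspace(0.0, 1.0, 20)
y = 2.0 * np.exp(-1.0 * t)

def residual(p):
    return p[0] * np.exp(p[1] * t) - y

def jacobian(p):
    e = np.exp(p[1] * t)
    return np.stack([e, p[0] * t * e], axis=1)

estimate = levenberg_marquardt(residual, jacobian, x0=[1.0, 0.0])
print(estimate)
```

In bundle adjustment the same loop runs over camera poses and 3D points with a sparse Jacobian, so the quality of the initial damping and starting point matters far more than in this two-parameter example.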
