Gaojie Chen

Large Artificial Intelligence Models for Future Wireless Communications

Jan 11, 2026

Abstract:The anticipated integration of large artificial intelligence (AI) models with wireless communications is expected to usher in a transformative wave in the forthcoming information age. As wireless networks grow in complexity, the traditional methodologies employed for optimization and management face increasing challenges. Large AI models have extensive parameter spaces and enhanced learning capabilities and can offer innovative solutions to these challenges. They are also capable of learning, adapting and optimizing in real time. We introduce the potential and challenges of integrating large AI models into wireless communications, highlighting existing AI-driven applications and inherent challenges for future large AI models. In this paper, we propose the architecture of large AI models for future wireless communications, introduce their advantages in data analysis, resource allocation and real-time adaptation, and discuss the potential challenges and corresponding solutions regarding energy, architecture design, privacy, security, ethics and regulation. In addition, we explore the potential future directions of large AI models in wireless communications, laying the groundwork for forthcoming research in this area.

Semantic Transmission Framework in Direct Satellite Communications

Jan 01, 2026

Abstract:Insufficient link budget has become a bottleneck problem for direct access in current satellite communications. In this paper, we develop a semantic transmission framework for direct satellite communications as an effective and viable solution to tackle this problem. To measure the tradeoffs between communication, computation, and generation quality, we introduce a semantic efficiency metric with optimized weights. The optimization aims to maximize the average semantic efficiency metric by jointly optimizing transmission mode selection, satellite-user association, ISL task migration, denoising steps, and adaptive weights, which is a complex nonlinear integer programming problem. To solve this problem, we propose a decision-assisted REINFORCE++ algorithm that utilizes a feasibility-aware action space and a critic-free stabilized policy update. Numerical results show that the proposed algorithm achieves higher semantic efficiency than baselines.

Flexible Reconfigurable Intelligent Surface-Aided Covert Communications in UAV Networks

Dec 10, 2025

Abstract:In recent years, unmanned aerial vehicles (UAVs) have come to play a key role in wireless communication networks due to their flexibility and dynamic adaptability. However, the openness of UAV-based communications leads to security and privacy concerns in wireless transmissions. This paper investigates a framework of UAV covert communications which introduces flexible reconfigurable intelligent surfaces (F-RIS) in UAV networks. Unlike traditional RIS, F-RIS provides advanced deployment flexibility by conforming to curved surfaces and dynamically reconfiguring its electromagnetic properties to enhance the covert communication performance. We establish an electromagnetic model for F-RIS and further develop a fitted model that describes the relationship between F-RIS reflection amplitude, reflection phase, and incident angle. To maximize the covert transmission rate among UAVs while meeting the covert constraint and public transmission constraint, we introduce a strategy of jointly optimizing UAV trajectories, F-RIS reflection vectors, F-RIS incident angles, and non-orthogonal multiple access (NOMA) power allocation. Since this is a complicated non-convex optimization problem, we propose a deep reinforcement learning (DRL)-based optimization solution. Simulation results demonstrate that our proposed framework and optimization method significantly outperform traditional benchmarks, and highlight the advantages of F-RIS in enhancing covert communication performance within UAV networks.

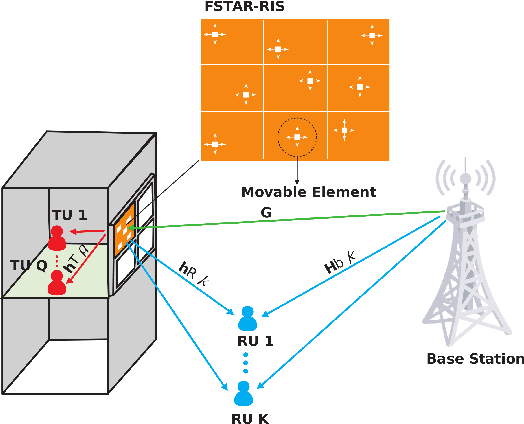

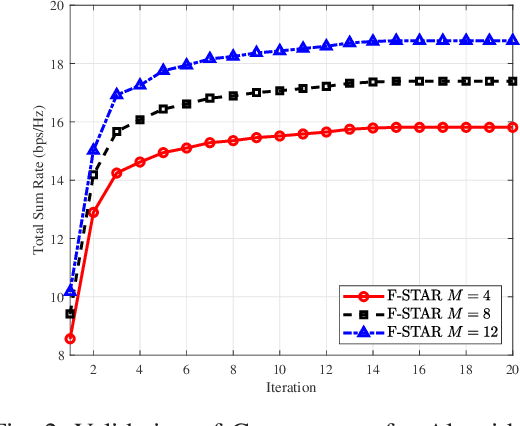

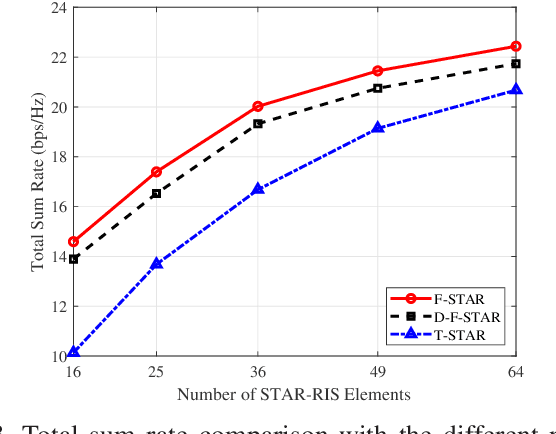

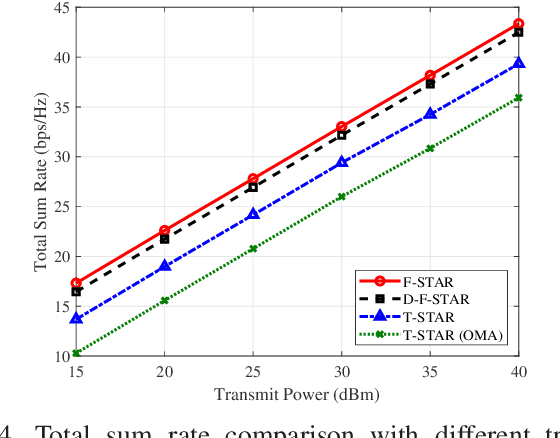

Joint Beamforming and Position Optimization for Fluid STAR-RIS-NOMA Assisted Wireless Communication Systems

Jul 09, 2025

Abstract:To address the limitations of traditional reconfigurable intelligent surfaces (RIS) in spatial control capability, this paper introduces the concept of the fluid antenna system (FAS) and proposes a fluid simultaneously transmitting and reflecting RIS (FSTAR-RIS) assisted non-orthogonal multiple access (NOMA) multi-user communication system. In this system, each FSTAR-RIS element is capable of flexible mobility and can dynamically adjust its position in response to environmental variations, thereby enabling simultaneous service to users in both the transmission and reflection zones. This significantly enhances the system's spatial degrees of freedom (DoF) and service adaptability. To maximize the system's weighted sum-rate, we formulate a non-convex optimization problem that jointly optimizes the base station beamforming, the transmission/reflection coefficients of the FSTAR-RIS, and the element positions. An alternating optimization (AO) algorithm is developed, incorporating successive convex approximation (SCA), semi-definite relaxation (SDR), and majorization-minimization (MM) techniques. In particular, to address the complex channel coupling introduced by the coexistence of direct and FSTAR-RIS paths, the MM framework is employed in the element position optimization subproblem, enabling an efficient iterative solution strategy. Simulation results validate that the proposed system achieves up to a 27% increase in total sum rate compared to traditional STAR-RIS systems and requires approximately 50% fewer RIS elements to attain the same performance, highlighting its effectiveness for cost-efficient large-scale deployment.

Widely Linear Augmented Extreme Learning Machine Based Impairments Compensation for Satellite Communications

Jun 17, 2025

Abstract:Satellite communications are crucial for the evolution beyond fifth-generation networks. However, the dynamic nature of satellite channels and their inherent impairments present significant challenges. In this paper, a novel post-compensation scheme that combines the complex-valued extreme learning machine with augmented hidden layer (CELMAH) architecture and widely linear processing (WLP) is developed to address these issues by exploiting signal impropriety in satellite communications. Although CELMAH shares structural similarities with WLP, it employs a different core algorithm and does not fully exploit the signal impropriety. By incorporating WLP principles, we derive a tailored formulation suited to the network structure and propose the CELM augmented by widely linear least squares (CELM-WLLS) for post-distortion. The proposed approach offers enhanced communication robustness and is highly effective for satellite communication scenarios characterized by dynamic channel conditions and non-linear impairments. CELM-WLLS is designed to improve signal recovery performance and outperform traditional methods such as least squares (LS) and minimum mean square error (MMSE). Compared to CELMAH, CELM-WLLS demonstrates approximately 0.8 dB gain in BER performance, and also achieves a two-thirds reduction in computational complexity, making it a more efficient solution.

Model Splitting Enhanced Communication-Efficient Federated Learning for CSI Feedback

Jun 04, 2025

Abstract:Recent advancements have introduced federated machine learning-based channel state information (CSI) compression before the user equipments (UEs) upload the downlink CSI to the base transceiver station (BTS). However, most existing algorithms impose a high communication overhead due to frequent parameter exchanges between UEs and BTS. In this work, we propose a model splitting approach with a shared model at the BTS and multiple local models at the UEs to reduce communication overhead. Moreover, we implant a pipeline module at the BTS to reduce training time. By limiting exchanges to boundary parameters during forward and backward passes, our algorithm can significantly reduce the number of exchanged parameters relative to the benchmarks during federated CSI feedback training.

MmWave-LoRadar Empowered Vehicular Integrated Sensing and Communication Systems: LoRa Meets FMCW

May 16, 2025

Abstract:The integrated sensing and communication (ISAC) technique is regarded as a key component in future vehicular applications. In this paper, we propose an ISAC solution that integrates Long Range (LoRa) modulation with frequency-modulated continuous wave (FMCW) radar in the millimeter-wave (mmWave) band, called mmWave-LoRadar. This design adds sensing capabilities to LoRa communication with a simplified hardware architecture. In particular, we uncover the dual discontinuity issues in time and phase of the mmWave-LoRadar received signals, which render conventional signal processing techniques ineffective. As a remedy, we propose a corresponding hardware design and signal processing schemes under the compressed sampling framework. These techniques effectively cope with the dual discontinuity issues and mitigate the demands for high-sampling-rate analog-to-digital converters while achieving good performance. Simulation results demonstrate the superiority of the mmWave-LoRadar ISAC system in vehicular communication and sensing networks.

The Communication and Computation Trade-off in Wireless Semantic Communications

Apr 14, 2025

Abstract:Semantic communications have emerged as a crucial research direction for future wireless communication networks. However, as wireless systems become increasingly complex, the demands for computation and communication resources in semantic communications continue to grow rapidly. This paper investigates the trade-off between computation and communication in wireless semantic communications, taking into consideration transmission task delay and performance constraints within the semantic communication framework. We propose a novel trade-off metric to analyze the balance between computation and communication in semantic transmissions and employ a deep reinforcement learning (DRL) algorithm to minimize this metric, thereby reducing the cost associated with balancing computation and communication. Through simulations, we analyze the trade-off between computation and communication and demonstrate the effectiveness of optimizing this trade-off metric.

Amplitude-Domain Reflection Modulation for Active RIS-Assisted Wireless Communications

Mar 27, 2025

Abstract:In this paper, we propose a novel active reconfigurable intelligent surface (RIS)-assisted amplitude-domain reflection modulation (ADRM) transmission scheme, termed ARIS-ADRM. This innovative approach leverages the additional degree of freedom (DoF) provided by the amplitude domain of the active RIS to perform index modulation (IM), thereby enhancing spectral efficiency (SE) without increasing the costs associated with additional radio frequency (RF) chains. Specifically, the ARIS-ADRM scheme transmits information bits through both the modulation symbol and the index of active RIS amplitude allocation patterns (AAPs). To evaluate the performance of the proposed ARIS-ADRM scheme, we provide an achievable rate analysis and derive a closed-form expression for the upper bound on the average bit error probability (ABEP). Furthermore, we formulate an optimization problem to construct the AAP codebook, aiming to minimize the ABEP. Simulation results demonstrate that the proposed scheme significantly improves error performance under the same SE conditions compared to its benchmarks. This improvement is due to its ability to flexibly adapt the transmission rate by fully exploiting the amplitude domain DoF provided by the active RIS.

Joint Sparse Graph for Enhanced MIMO-AFDM Receiver Design

Mar 24, 2025

Abstract:Affine frequency division multiplexing (AFDM) is a promising chirp-assisted multicarrier waveform for future high-mobility communications. This paper is devoted to enhanced receiver design for multiple-input multiple-output AFDM (MIMO-AFDM) systems. Firstly, we introduce a unified variational inference (VI) approach to approximate the target posterior distribution, under which the belief propagation (BP) and expectation propagation (EP)-based algorithms are derived. As both VI-based detection and low-density parity-check (LDPC) decoding can be expressed by bipartite graphs in MIMO-AFDM systems, we construct a joint sparse graph (JSG) by merging the graphs of these two for low-complexity receiver design. Then, based on this graph model, we present the detailed message propagation of the proposed JSG. Additionally, we propose an enhanced JSG (E-JSG) receiver based on the linear constellation encoding model. The proposed E-JSG eliminates the need for interleavers, de-interleavers, and log-likelihood ratio transformations, thus leading to concurrent detection and decoding over the integrated sparse graph. To further reduce detection complexity, we introduce a sparse channel method by approximating multiple graph edges with insignificant channel coefficients as a single edge on the VI graph. Simulation results show the superiority of the proposed receivers in terms of computational complexity, detection and decoding latency, and error rate performance compared to the conventional ones.
