Devesh K. Jha

A Virtual Reality Teleoperation Interface for Industrial Robot Manipulators

May 18, 2023

Abstract: We address the problem of teleoperating an industrial robot manipulator via a commercially available Virtual Reality (VR) interface. Previous works on VR teleoperation for robot manipulators focus primarily on collaborative or research robot platforms (whose dynamics and constraints differ from industrial robot arms), or only address tasks where the robot's dynamics are not as important (e.g., pick-and-place tasks). We investigate the usage of commercially available VR interfaces for effectively teleoperating industrial robot manipulators in a variety of contact-rich manipulation tasks. We find that applying standard practices for VR control of robot arms is challenging for industrial platforms because torque and velocity control are not exposed, and position control is mediated through a black-box controller. To mitigate these problems, we propose a simplified filtering approach to process command signals to enable operators to effectively teleoperate industrial robot arms with VR interfaces in dexterous manipulation tasks. We hope our findings will help robot practitioners implement and set up effective VR teleoperation interfaces for robot manipulators. The proposed method is demonstrated on a variety of contact-rich manipulation tasks which can also involve very precise movement of the robot during execution (videos can be found at https://www.youtube.com/watch?v=OhkCB9mOaBc).

Covariance Steering for Uncertain Contact-rich Systems

Mar 23, 2023

Abstract: Planning and control for uncertain contact systems is challenging as it is not clear how to propagate uncertainty for planning. Contact-rich tasks can be modeled efficiently using complementarity constraints among other techniques. In this paper, we present a stochastic optimization technique with chance constraints for systems with stochastic complementarity constraints. We use a particle filter-based approach to propagate moments for stochastic complementarity systems. To circumvent the issues of open-loop chance-constrained planning, we propose a contact-aware controller for covariance steering of the complementarity system. Our optimization problem is formulated as a Non-Linear Programming (NLP) problem using bilevel optimization. We present an important-particle algorithm for numerical efficiency for the underlying control problem. We verify that our contact-aware closed-loop controller is able to steer the covariance of the states under stochastic contact-rich tasks.

Robust Pivoting Manipulation using Contact Implicit Bilevel Optimization

Mar 15, 2023

Abstract: Generalizable manipulation requires that robots be able to interact with novel objects and environments. This requirement makes manipulation extremely challenging as a robot has to reason about complex frictional interactions with uncertainty in physical properties of the object and the environment. In this paper, we study robust optimization for planning of pivoting manipulation in the presence of uncertainties. We present insights about how friction can be exploited to compensate for inaccuracies in the estimates of the physical properties during manipulation. Under certain assumptions, we derive analytical expressions for the stability margin provided by friction during pivoting manipulation. This margin is then used in a Contact Implicit Bilevel Optimization (CIBO) framework to optimize a trajectory that maximizes this stability margin to provide robustness against uncertainty in several physical parameters of the object. We present an analysis of the stability margin with respect to several parameters involved in the underlying bilevel optimization problem. We demonstrate our proposed method using a 6 DoF manipulator for manipulating several different objects.

Tactile-Filter: Interactive Tactile Perception for Part Mating

Mar 10, 2023

Abstract: Humans rely on touch and tactile sensing for a lot of dexterous manipulation tasks. Our tactile sensing provides us with a lot of information regarding contact formations as well as geometric information about objects during any interaction. With this motivation, vision-based tactile sensors are being widely used for various robotic perception and control tasks. In this paper, we present a method for interactive perception using vision-based tactile sensors for multi-object assembly. In particular, we are interested in tactile perception during part mating, where a robot can use tactile sensors and a feedback mechanism using a particle filter to incrementally improve its estimate of objects that fit together for assembly. To do this, we first train a deep neural network that makes use of tactile images to predict the probabilistic correspondence between arbitrarily shaped objects that fit together. The trained model is used to design a particle filter which serves two purposes. First, given one partial (or non-unique) observation of the hole, it incrementally improves the estimate of the correct peg by sampling more tactile observations. Second, it selects the next action for the robot to sample the next touch (and thus image) which results in maximum uncertainty reduction to minimize the number of interactions during the perception task. We evaluate our method on several part-mating tasks for assembly using a robot equipped with a vision-based tactile sensor. We also show the efficiency of the proposed action selection method against a naive method. See supplementary video at https://www.youtube.com/watch?v=jMVBg_e3gLw.

Simultaneous Tactile Estimation and Control of Extrinsic Contact

Mar 06, 2023

Abstract: We propose a method that simultaneously estimates and controls extrinsic contact with tactile feedback. The method enables challenging manipulation tasks that require controlling light forces and accurate motions in contact, such as balancing an unknown object on a thin rod standing upright. A factor graph-based framework fuses a sequence of tactile and kinematic measurements to estimate and control the interaction between gripper-object-environment, including the location and wrench at the extrinsic contact between the grasped object and the environment and the grasp wrench transferred from the gripper to the object. The same framework simultaneously plans the gripper motions that make it possible to estimate the state while satisfying regularizing control objectives to prevent slip, such as minimizing the grasp wrench and minimizing frictional force at the extrinsic contact. We show results with sub-millimeter contact localization error and good slip prevention even in slippery environments, for multiple contact formations (point, line, patch contact) and transitions between them. See supplementary video and results at https://sites.google.com/view/sim-tact.

Tactile Tool Manipulation

Jan 17, 2023

Abstract: Humans can effortlessly perform very complex, dexterous manipulation tasks by reacting to sensor observations. In contrast, robots cannot perform reactive manipulation and they mostly operate in open loop while interacting with their environment. Consequently, the current manipulation algorithms either are inefficient in performance or can only work in highly structured environments. In this paper, we present closed-loop control of a complex manipulation task where a robot uses a tool to interact with objects. Manipulation using a tool leads to complex kinematics and contact constraints that need to be satisfied for generating feasible manipulation trajectories. We first present an open-loop controller design using Non-Linear Programming (NLP) that satisfies these constraints. In order to design a closed-loop controller, we present a pose estimator of objects and tools using tactile sensors. Using our tactile estimator, we design a closed-loop controller based on Model Predictive Control (MPC). The proposed algorithm is verified using a 6 DoF manipulator on tasks using a variety of objects and tools. We verify that our closed-loop controller can successfully perform tool manipulation under several unexpected contacts. A video summarizing this work and the hardware experiments can be found at https://youtu.be/VsClK04qDhk.

Generalizable Human-Robot Collaborative Assembly Using Imitation Learning and Force Control

Dec 02, 2022

Abstract: Robots have been steadily increasing their presence in our daily lives, where they can work along with humans to provide assistance in various tasks on industry floors, in offices, and in homes. Automated assembly is one of the key applications of robots, and the next generation assembly systems could become much more efficient by creating collaborative human-robot systems. However, although collaborative robots have been around for decades, their application in truly collaborative systems has been limited. This is because a truly collaborative human-robot system needs to adjust its operation with respect to the uncertainty and imprecision in human actions, ensure safety during interaction, etc. In this paper, we present a system for human-robot collaborative assembly using learning from demonstration and pose estimation, so that the robot can adapt to the uncertainty caused by the operation of humans. Learning from demonstration is used to generate motion trajectories for the robot based on the pose estimate of different goal locations from a deep learning-based vision system. The proposed system is demonstrated using a physical 6 DoF manipulator in a collaborative human-robot assembly scenario. We show successful generalization of the system's operation to changes in the initial and final goal locations through various experiments.

Active Exploration for Robotic Manipulation

Oct 23, 2022

Abstract: Robotic manipulation stands as a largely unsolved problem despite significant advances in robotics and machine learning in recent years. One of the key challenges in manipulation is the exploration of the dynamics of the environment when there is continuous contact between the objects being manipulated. This paper proposes a model-based active exploration approach that enables efficient learning in sparse-reward robotic manipulation tasks. The proposed method estimates an information gain objective using an ensemble of probabilistic models and deploys model predictive control (MPC) to plan actions online that maximize the expected reward while also performing directed exploration. We evaluate our proposed algorithm in simulation and on a real robot, trained from scratch with our method, on a challenging ball-pushing task on tilted tables, where the target ball position is not known to the agent a priori. Our real-world robot experiment serves as a fundamental application of active exploration in model-based reinforcement learning of complex robotic manipulation tasks.

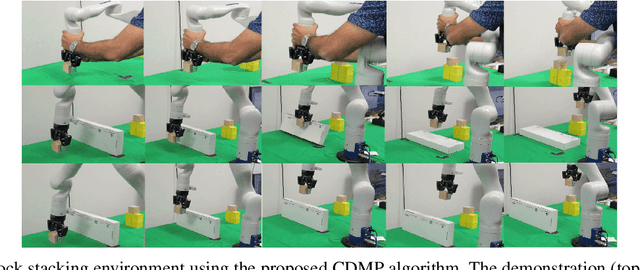

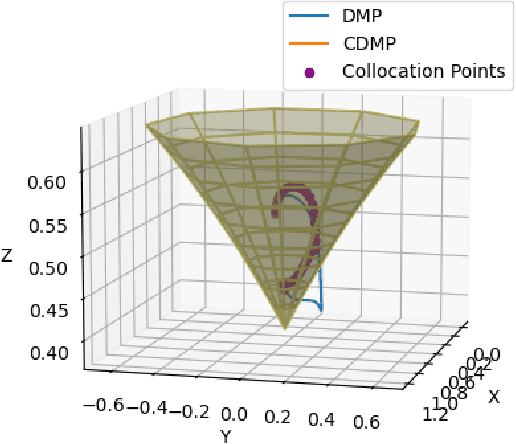

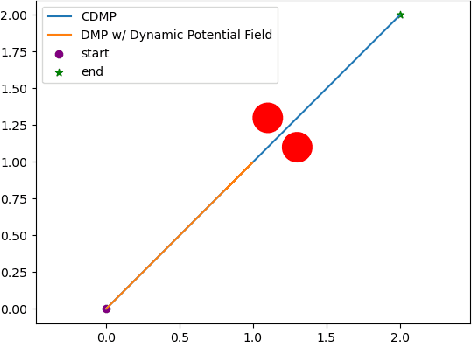

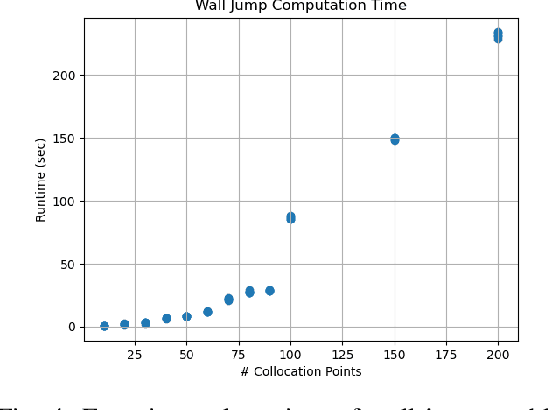

Constrained Dynamic Movement Primitives for Safe Learning of Motor Skills

Sep 28, 2022

Abstract: Dynamic movement primitives are widely used for learning skills which can be demonstrated to a robot by a skilled human or controller. While their generalization capabilities and simple formulation make them very appealing to use, they possess no strong guarantees to satisfy operational safety constraints for a task. In this paper, we present constrained dynamic movement primitives (CDMP) which can allow for constraint satisfaction in the robot workspace. We present a formulation of a non-linear optimization to perturb the DMP forcing weights regressed by locally-weighted regression to admit a Zeroing Barrier Function (ZBF), which certifies workspace constraint satisfaction. We demonstrate the proposed CDMP under different constraints on the end-effector movement such as obstacle avoidance and workspace constraints on a physical robot. A video showing the implementation of the proposed algorithm using different manipulators in different environments can be found at https://youtu.be/hJegJJkJfys.

Design of Adaptive Compliance Controllers for Safe Robotic Assembly

Apr 22, 2022

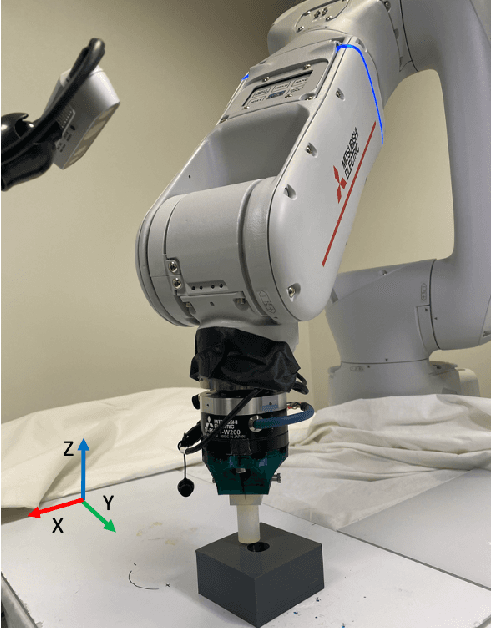

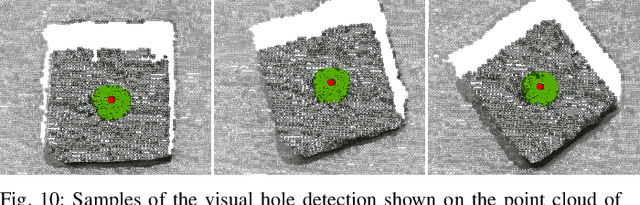

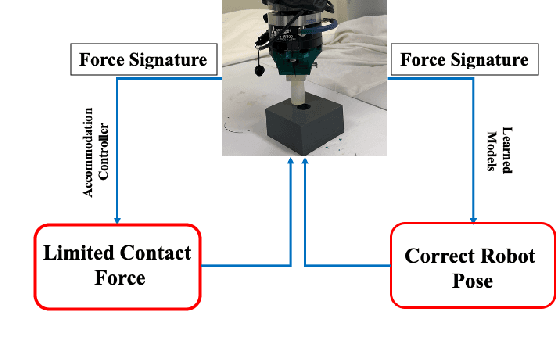

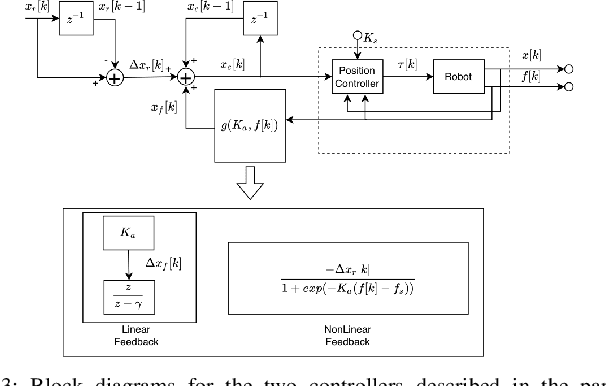

Abstract: Insertion operations are a critical element of most robotic assembly operations, and peg-in-hole (PiH) insertion is one of the most widely studied tasks in the industrial and academic manipulation communities. PiH insertion is in fact an entire class of problems, where the complexity of the problem can depend on the type of misalignment and contact formation during an insertion attempt. In this paper, we present the design and analysis of adaptive compliance controllers which can be used in insertion-type assembly tasks, including learning-based compliance controllers which can be used for insertion problems in the presence of uncertainty in the goal location during robotic assembly. We first present the design of compliance controllers which can ensure safe operation of the robot by limiting experienced contact forces during contact formation. Subsequently, we present an analysis of the force signature obtained during the contact formation to learn the corrective action needed to perform insertion. Finally, we use the proposed compliance controllers and learned models to design a policy that can successfully perform insertion in novel test conditions with an almost perfect success rate. We validate the proposed approach on a physical robotic test-bed using a 6-DoF manipulator arm.
