Daniela Rus

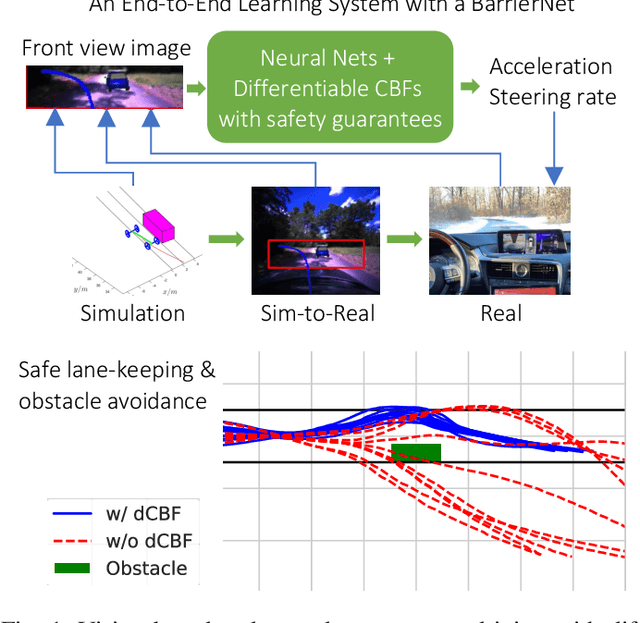

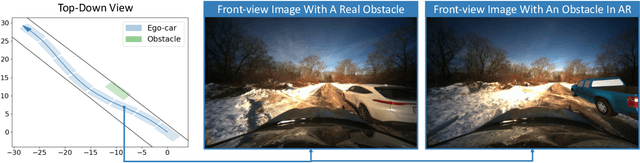

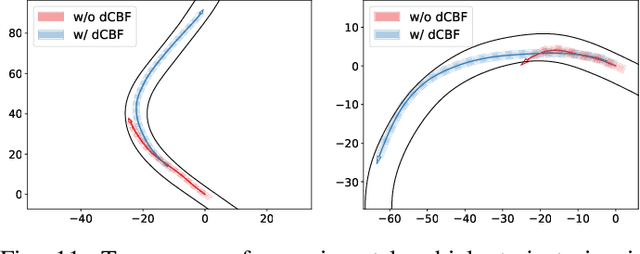

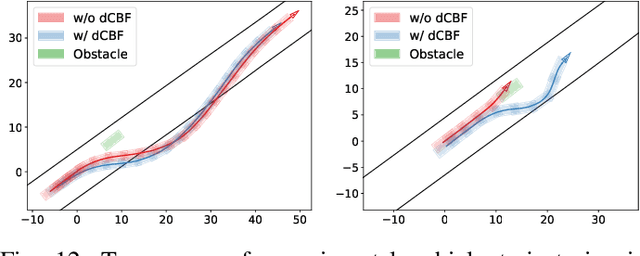

Differentiable Control Barrier Functions for Vision-based End-to-End Autonomous Driving

Mar 04, 2022

Abstract: Guaranteeing the safety of perception-based learning systems is challenging because, unlike in state-aware control scenarios, ground-truth state information is unavailable. In this paper, we introduce a safety-guaranteed learning framework for vision-based end-to-end autonomous driving. To this end, we design a learning system equipped with differentiable control barrier functions (dCBFs) that is trained end-to-end by gradient descent. Our models are composed of conventional neural network architectures and dCBFs. They are interpretable at scale, achieve great test performance under limited training data, and are safety guaranteed in a series of autonomous driving scenarios such as lane keeping and obstacle avoidance. We evaluated our framework in a sim-to-real environment, and tested on a real autonomous car, achieving safe lane following and obstacle avoidance via Augmented Reality (AR) and real parked vehicles.

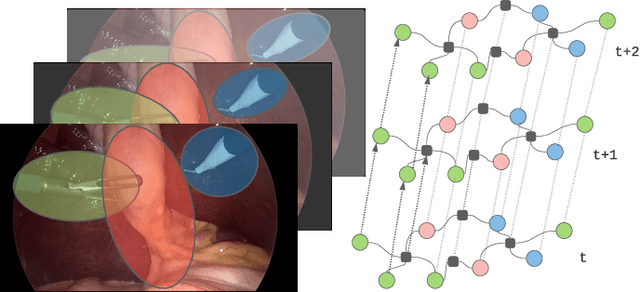

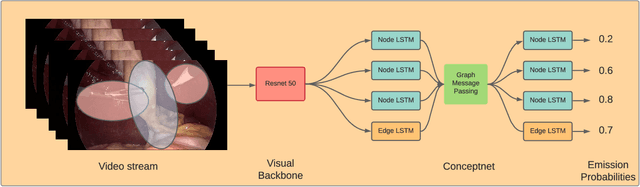

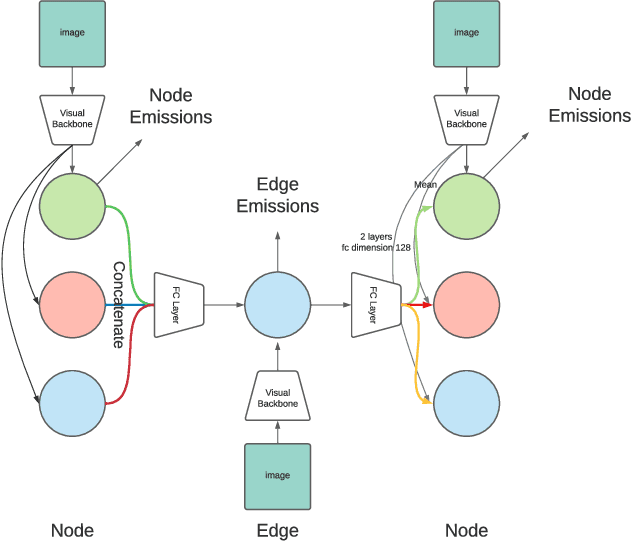

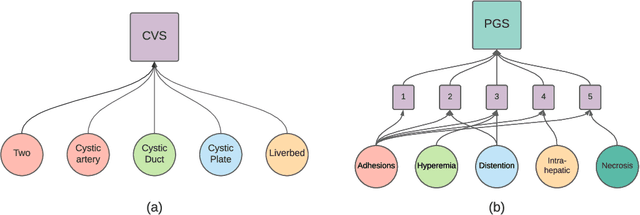

Concept Graph Neural Networks for Surgical Video Understanding

Feb 27, 2022

Abstract: We constantly integrate our knowledge and understanding of the world to enhance our interpretation of what we see. This ability is crucial in application domains which entail reasoning about multiple entities and concepts, such as AI-augmented surgery. In this paper, we propose a novel way of integrating conceptual knowledge into temporal analysis tasks via temporal concept graph networks. In the proposed networks, a global knowledge graph is incorporated into the temporal analysis of surgical instances, learning the meaning of concepts and relations as they apply to the data. We demonstrate our results in surgical video data for tasks such as verification of critical view of safety, as well as estimation of Parkland grading scale. The results show that our method improves the recognition and detection of complex benchmarks as well as enables other analytic applications of interest.

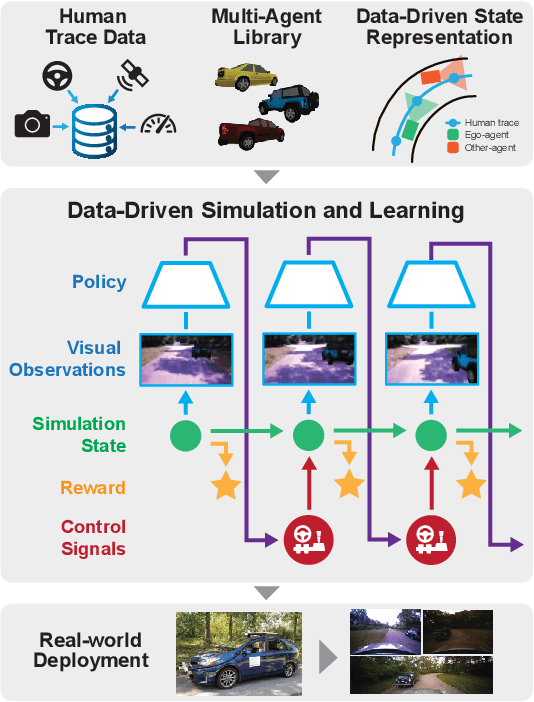

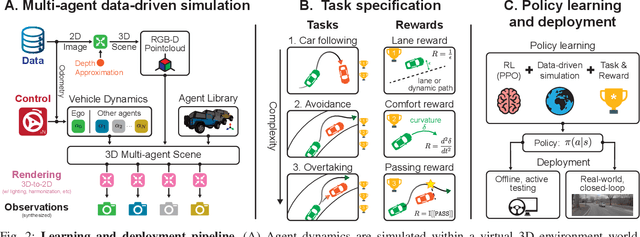

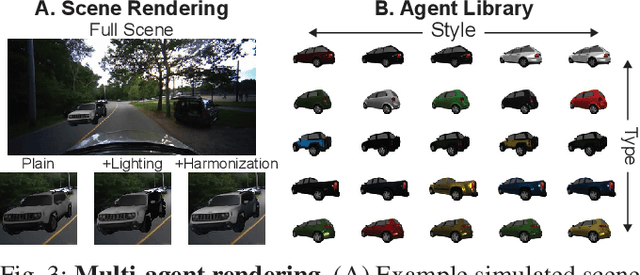

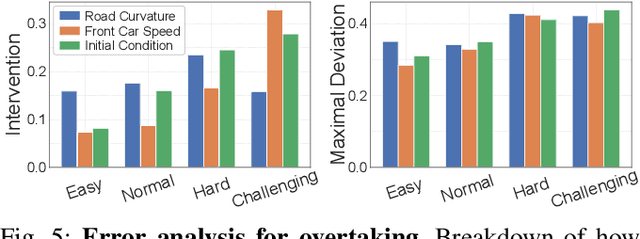

Learning Interactive Driving Policies via Data-driven Simulation

Nov 23, 2021

Abstract: Data-driven simulators promise high data-efficiency for driving policy learning. When used for modelling interactions, this data-efficiency becomes a bottleneck: Small underlying datasets often lack interesting and challenging edge cases for learning interactive driving. We address this challenge by proposing a simulation method that uses in-painted ado vehicles for learning robust driving policies. Thus, our approach can be used to learn policies that involve multi-agent interactions and allows for training via state-of-the-art policy learning methods. We evaluate the approach for learning standard interaction scenarios in driving. In extensive experiments, our work demonstrates that the resulting policies can be directly transferred to a full-scale autonomous vehicle without making use of any traditional sim-to-real transfer techniques such as domain randomization.

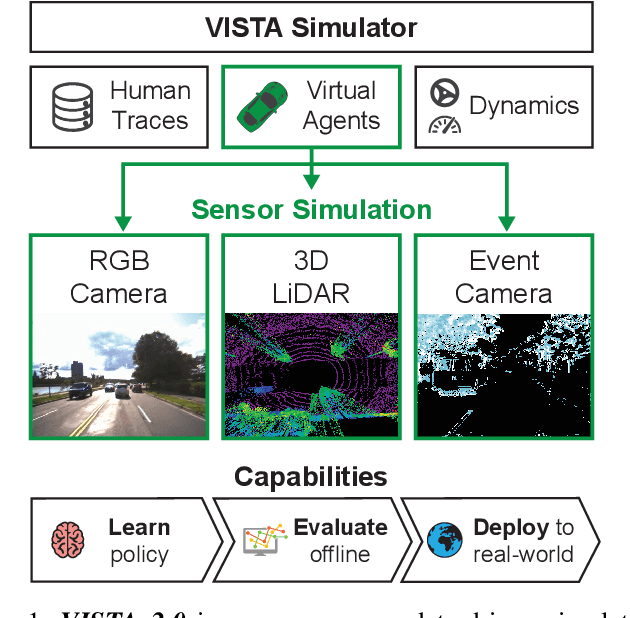

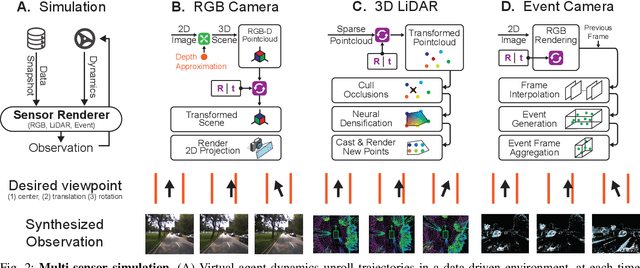

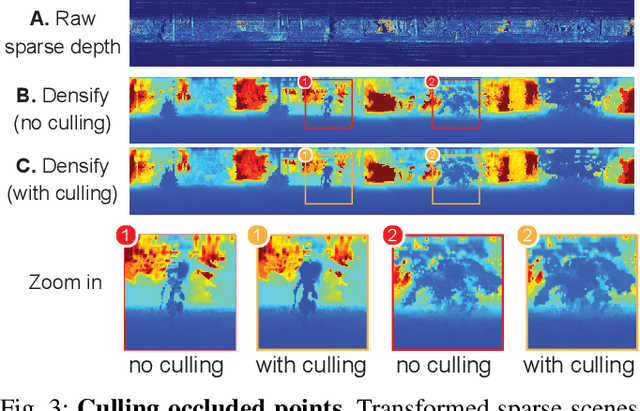

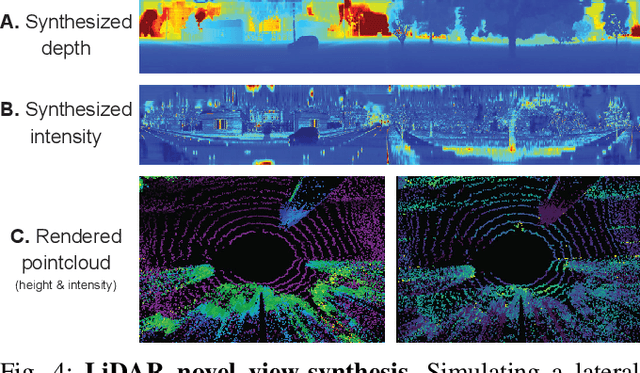

VISTA 2.0: An Open, Data-driven Simulator for Multimodal Sensing and Policy Learning for Autonomous Vehicles

Nov 23, 2021

Abstract: Simulation has the potential to transform the development of robust algorithms for mobile agents deployed in safety-critical scenarios. However, the poor photorealism and lack of diverse sensor modalities of existing simulation engines remain key hurdles towards realizing this potential. Here, we present VISTA, an open-source, data-driven simulator that integrates multiple types of sensors for autonomous vehicles. Using high-fidelity, real-world datasets, VISTA represents and simulates RGB cameras, 3D LiDAR, and event-based cameras, enabling the rapid generation of novel viewpoints in simulation and thereby enriching the data available for policy learning with corner cases that are difficult to capture in the physical world. Using VISTA, we demonstrate the ability to train and test perception-to-control policies across each of the sensor types and showcase the power of this approach via deployment on a full-scale autonomous vehicle. The policies learned in VISTA exhibit sim-to-real transfer without modification and greater robustness than those trained exclusively on real-world data.

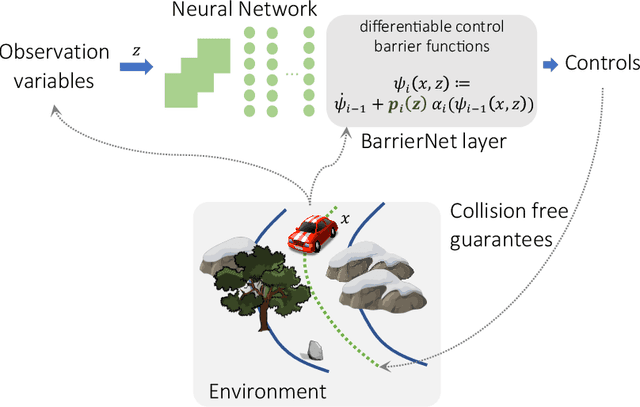

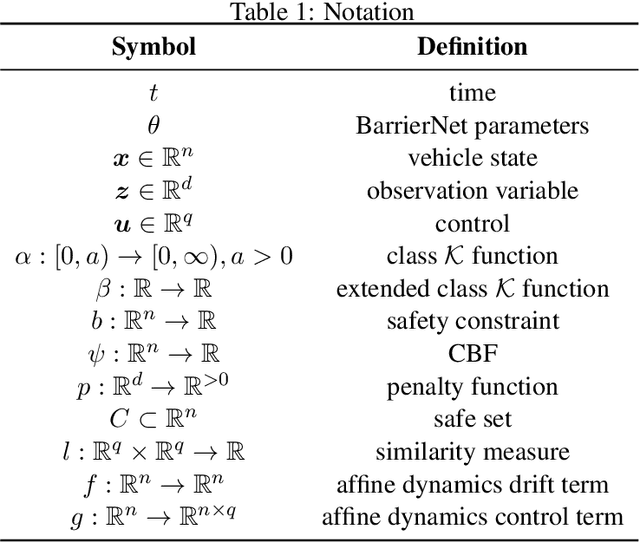

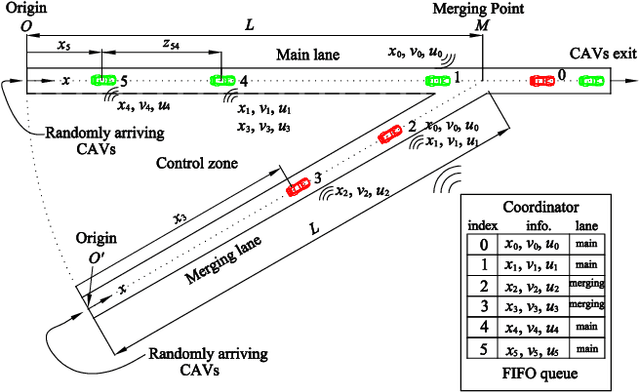

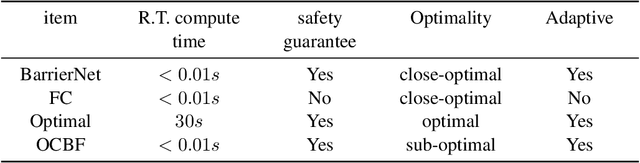

BarrierNet: A Safety-Guaranteed Layer for Neural Networks

Nov 22, 2021

Abstract: This paper introduces differentiable higher-order control barrier functions (CBFs) that are end-to-end trainable together with learning systems. CBFs guarantee safety but are usually overly conservative. Here, we address their conservativeness by softening their definitions using environmental dependencies without losing safety guarantees, and embed them into differentiable quadratic programs. These novel safety layers, termed BarrierNet, can be used in conjunction with any neural network-based controller, and can be trained by gradient descent. BarrierNet allows the safety constraints of a neural controller to be adaptable to changing environments. We evaluate them on a series of control problems such as traffic merging and robot navigation in 2D and 3D space, and demonstrate their effectiveness compared to state-of-the-art approaches.
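
As a rough illustration of the kind of constraint a CBF imposes, the sketch below filters a reference control for a one-dimensional system. It is not the BarrierNet layer, which embeds higher-order CBFs in a differentiable quadratic program. Everything here is an assumption made for the example: the dynamics `dx/dt = u`, the barrier `h(x) = x_max - x`, the gain `alpha`, and the function names. With a single linear constraint, the quadratic program "stay as close as possible to `u_ref` subject to `dh/dt + alpha * h >= 0`" has the closed-form solution `min(u_ref, alpha * h)`.

```python
def safe_control(x, u_ref, x_max=1.0, alpha=2.0):
    """Closest control to u_ref satisfying the CBF condition (hypothetical 1D example).

    Dynamics: dx/dt = u. Barrier: h(x) = x_max - x, so dh/dt = -u.
    CBF condition: -u + alpha * h >= 0, i.e. u <= alpha * h.
    """
    h = x_max - x
    return min(u_ref, alpha * h)


def simulate(x0=0.0, u_ref=1.0, dt=0.01, steps=1000, x_max=1.0, alpha=2.0):
    """Forward-Euler rollout of the filtered controller; returns the state trajectory."""
    xs = [x0]
    for _ in range(steps):
        u = safe_control(xs[-1], u_ref, x_max=x_max, alpha=alpha)
        xs.append(xs[-1] + dt * u)
    return xs


if __name__ == "__main__":
    trajectory = simulate()
    print("largest state reached:", max(trajectory))
```

The reference control pushes the state toward the boundary at constant speed. The filter leaves it untouched far from the boundary and scales it down near `x_max`, so the state approaches the limit without crossing it. A larger `alpha` makes the filter less conservative. Making such parameters depend on the environment is, loosely, the sort of softening the abstract describes, although the paper's exact formulation is not reproduced here.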

Is Bang-Bang Control All You Need? Solving Continuous Control with Bernoulli Policies

Nov 03, 2021

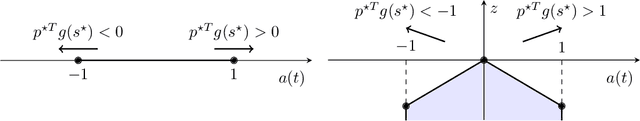

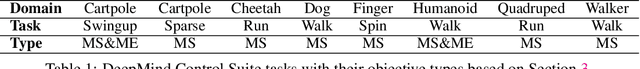

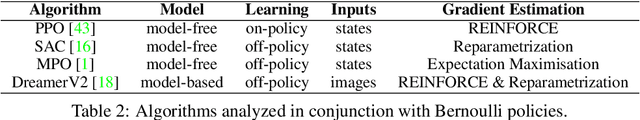

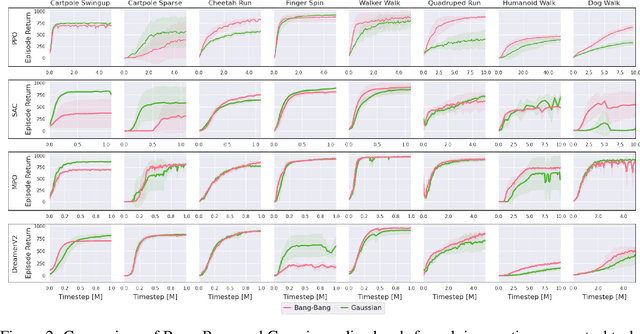

Abstract: Reinforcement learning (RL) for continuous control typically employs distributions whose support covers the entire action space. In this work, we investigate the colloquially known phenomenon that trained agents often prefer actions at the boundaries of that space. We draw theoretical connections to the emergence of bang-bang behavior in optimal control, and provide extensive empirical evaluation across a variety of recent RL algorithms. We replace the normal Gaussian by a Bernoulli distribution that solely considers the extremes along each action dimension - a bang-bang controller. Surprisingly, this achieves state-of-the-art performance on several continuous control benchmarks - in contrast to robotic hardware, where energy and maintenance cost affect controller choices. Since exploration, learning, and the final solution are entangled in RL, we provide additional imitation learning experiments to reduce the impact of exploration on our analysis. Finally, we show that our observations generalize to environments that aim to model real-world challenges and evaluate factors to mitigate the emergence of bang-bang solutions. Our findings emphasize challenges for benchmarking continuous control algorithms, particularly in light of potential real-world applications.
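
A minimal sketch of what a Bernoulli (bang-bang) policy head might look like is shown below. It assumes the policy outputs one logit per action dimension, and the function names and bounds are hypothetical. The paper's training setup is not reproduced. Each dimension takes either its lower or its upper bound, never a value in between.

```python
import math
import random


def bang_bang_action(logits, low, high, rng):
    """Sample one action: each dimension is set to its upper bound with
    probability sigmoid(logit), otherwise to its lower bound."""
    action = []
    for logit, lo, hi in zip(logits, low, high):
        p_high = 1.0 / (1.0 + math.exp(-logit))
        action.append(hi if rng.random() < p_high else lo)
    return action


def expected_action(logits, low, high):
    """Mean of the Bernoulli policy per dimension: p * high + (1 - p) * low."""
    means = []
    for logit, lo, hi in zip(logits, low, high):
        p_high = 1.0 / (1.0 + math.exp(-logit))
        means.append(p_high * hi + (1.0 - p_high) * lo)
    return means


if __name__ == "__main__":
    rng = random.Random(0)
    print(bang_bang_action([0.0, 3.0, -3.0], [-1.0, -1.0, -2.0], [1.0, 1.0, 2.0], rng))
```

A Gaussian policy with the same mean could place most of its probability mass strictly inside the bounds. The Bernoulli head can only emit the extremes, which is the restriction the abstract evaluates.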

Model Based Control of Soft Robots: A Survey of the State of the Art and Open Challenges

Oct 04, 2021

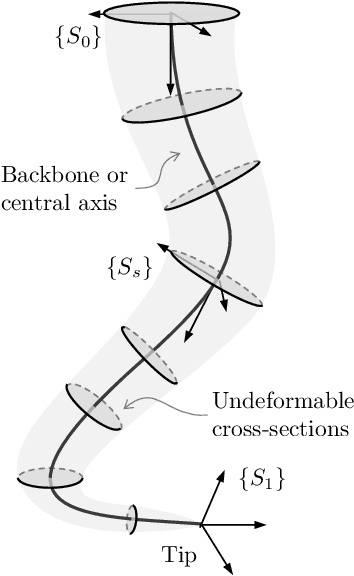

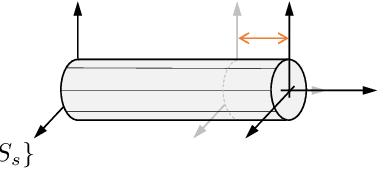

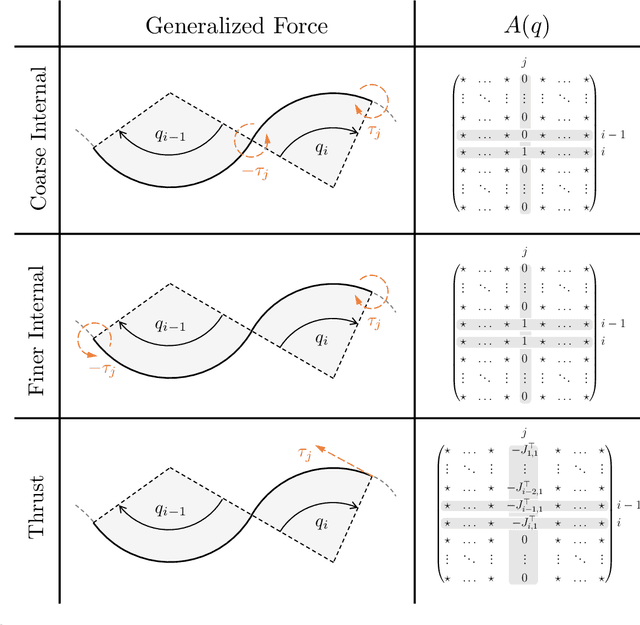

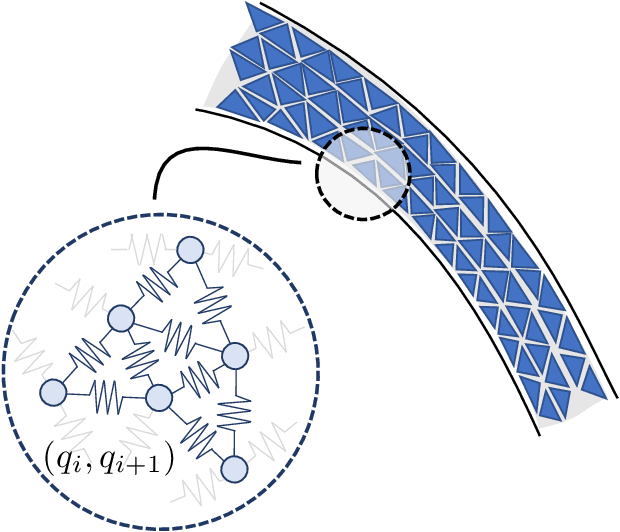

Abstract: Continuum soft robots are mechanical systems entirely made of continuously deformable elements. This design solution aims to bring robots closer to invertebrate animals and soft appendages of vertebrate animals (e.g., an elephant's trunk, a monkey's tail). This work aims to introduce the control theorist perspective to this novel development in robotics. We aim to remove the barriers to entry into this field by presenting existing results and future challenges using a unified language and within a coherent framework. Indeed, the main difficulty in entering this field is the wide variability of terminology and scientific backgrounds, making it quite hard to acquire a comprehensive view on the topic. Another limiting factor is that it is not obvious where to draw a clear line between the limitations imposed by the technology not being mature yet and the challenges intrinsic to this class of robots. In this work, we argue that the intrinsic effects are the continuum or multi-body dynamics, the presence of a non-negligible elastic potential field, and the variability in sensing and actuation strategies.

Compressing Neural Networks: Towards Determining the Optimal Layer-wise Decomposition

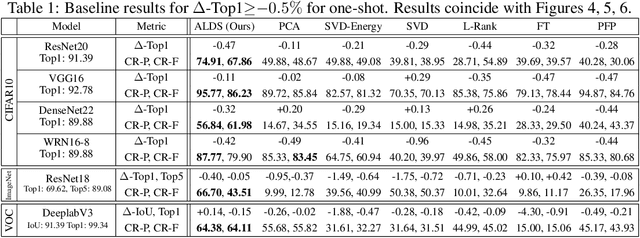

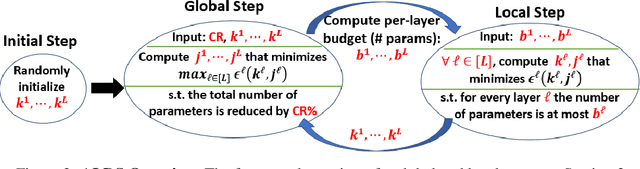

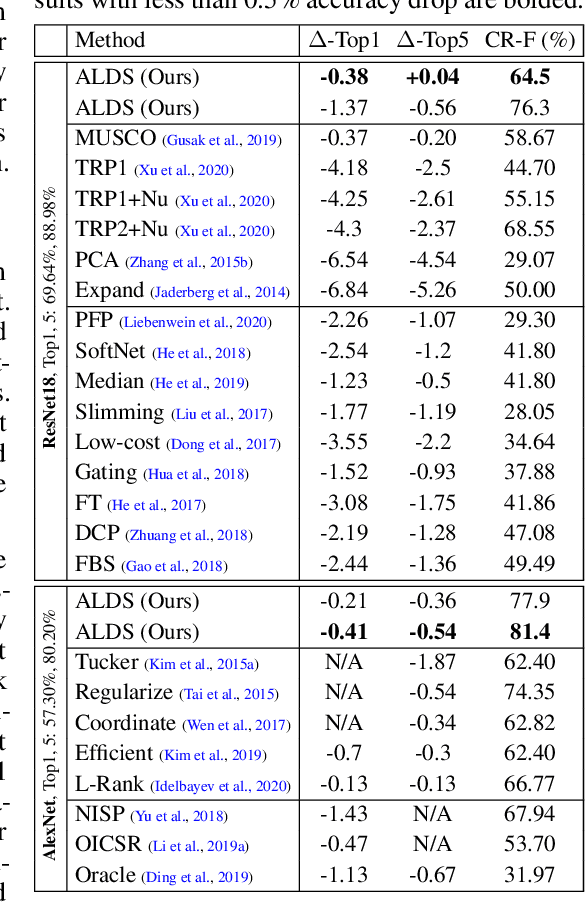

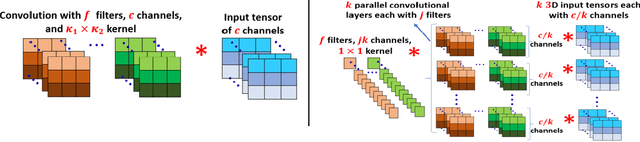

Jul 23, 2021

Abstract: We present a novel global compression framework for deep neural networks that automatically analyzes each layer to identify the optimal per-layer compression ratio, while simultaneously achieving the desired overall compression. Our algorithm hinges on the idea of compressing each convolutional (or fully-connected) layer by slicing its channels into multiple groups and decomposing each group via low-rank decomposition. At the core of our algorithm is the derivation of layer-wise error bounds from the Eckart-Young-Mirsky theorem. We then leverage these bounds to frame the compression problem as an optimization problem where we wish to minimize the maximum compression error across layers and propose an efficient algorithm towards a solution. Our experiments indicate that our method outperforms existing low-rank compression approaches across a wide range of networks and data sets. We believe that our results open up new avenues for future research into the global performance-size trade-offs of modern neural networks. Our code is available at https://github.com/lucaslie/torchprune.
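
For orientation, the Eckart-Young-Mirsky theorem can be stated as follows. The notation is chosen here for illustration and is not taken from the paper, whose layer-wise bounds are not reproduced. Let a weight matrix have the singular value decomposition $W = \sum_i \sigma_i u_i v_i^\top$ with $\sigma_1 \ge \sigma_2 \ge \dots \ge 0$, and let $W_k = \sum_{i \le k} \sigma_i u_i v_i^\top$ be its rank-$k$ truncation. Then $W_k$ is a best rank-$k$ approximation of $W$ in both the spectral and the Frobenius norm:

$$
\min_{\operatorname{rank}(B) \le k} \lVert W - B \rVert_2 = \lVert W - W_k \rVert_2 = \sigma_{k+1},
\qquad
\min_{\operatorname{rank}(B) \le k} \lVert W - B \rVert_F = \lVert W - W_k \rVert_F = \Big( \sum_{i > k} \sigma_i^2 \Big)^{1/2}.
$$

The error of keeping $k$ components is therefore determined entirely by the discarded singular values, which is what makes it possible to compare candidate ranks across layers before committing to a compression ratio.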

GoTube: Scalable Stochastic Verification of Continuous-Depth Models

Jul 18, 2021

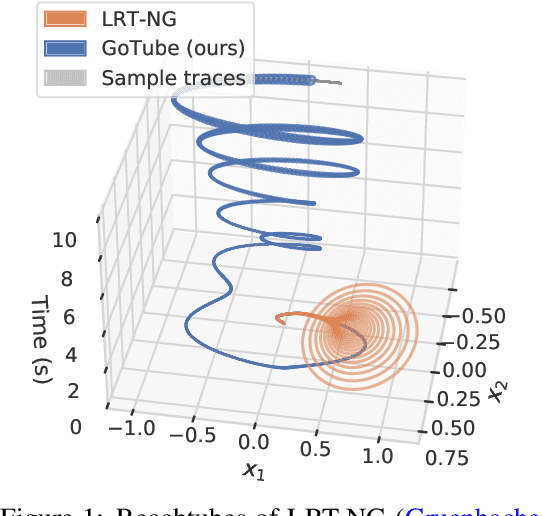

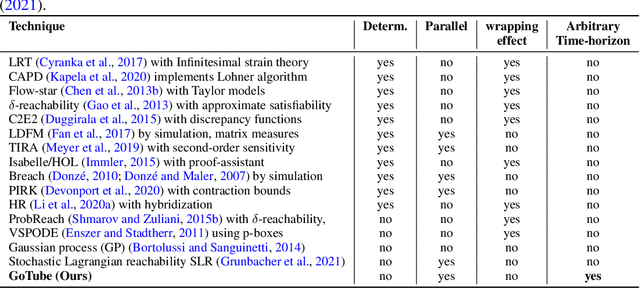

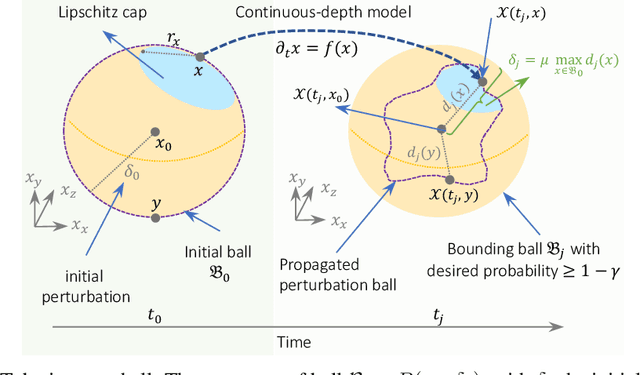

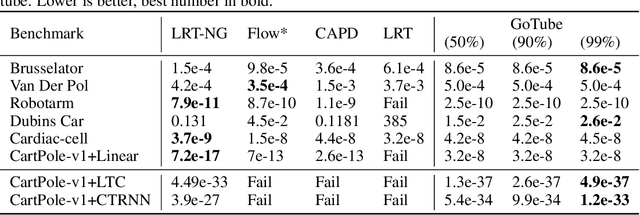

Abstract: We introduce a new stochastic verification algorithm that formally quantifies the behavioral robustness of any time-continuous process formulated as a continuous-depth model. The algorithm solves a set of global optimization (Go) problems over a given time horizon to construct a tight enclosure (Tube) of the set of all process executions starting from a ball of initial states. We call our algorithm GoTube. Through its construction, GoTube ensures that the bounding tube is conservative up to a desired probability. GoTube is implemented in JAX and optimized to scale to complex continuous-depth models. Compared to advanced reachability analysis tools for time-continuous neural networks, GoTube provably does not accumulate over-approximation errors between time steps and avoids the infamous wrapping effect inherent in symbolic techniques. We show that GoTube substantially outperforms state-of-the-art verification tools in terms of the size of the initial ball, speed, time-horizon, task completion, and scalability, on a large set of experiments. GoTube is stable and sets the state of the art for its ability to scale up to time horizons well beyond what has been possible before.

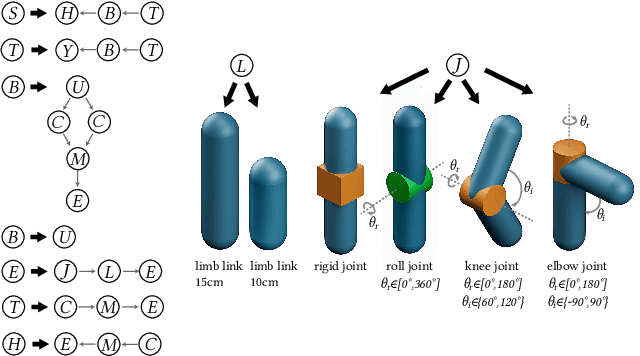

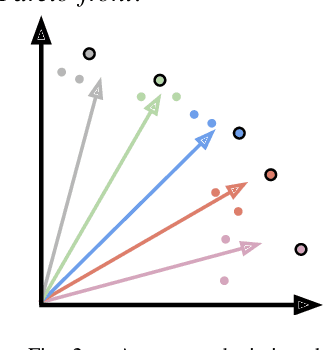

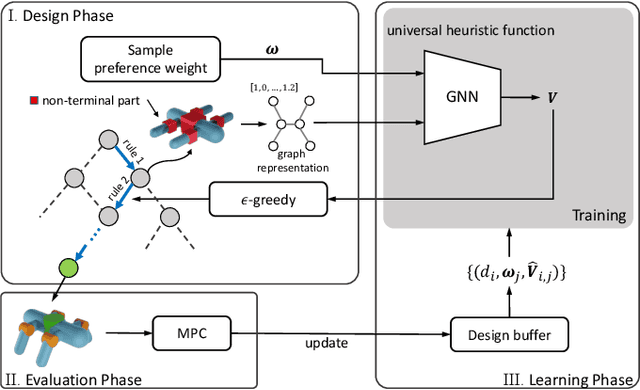

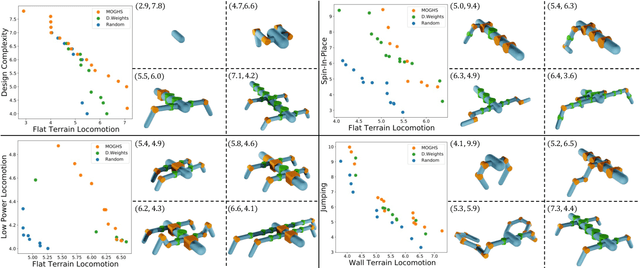

Multi-Objective Graph Heuristic Search for Terrestrial Robot Design

Jul 13, 2021

Abstract: We present methods for co-designing rigid robots over control and morphology (including discrete topology) for multiple objectives. Previous work has addressed problems in single-objective robot co-design or multi-objective control. However, the joint multi-objective co-design problem is extremely important for generating capable, versatile, algorithmically designed robots. In this work, we present Multi-Objective Graph Heuristic Search, which extends a single-objective graph heuristic search from previous work to enable a highly efficient multi-objective search in a combinatorial design topology space. Core to this approach, we introduce a new universal, multi-objective heuristic function based on graph neural networks that is able to effectively leverage learned information between different task trade-offs. We demonstrate our approach on six combinations of seven terrestrial locomotion and design tasks, including one three-objective example. We compare the captured Pareto fronts across different methods and demonstrate that our multi-objective graph heuristic search quantitatively and qualitatively outperforms other techniques.
