Time Series Denoising

Time series denoising is the process of removing noise from time series data so that the underlying signal can be analyzed more reliably.

Papers and Code

TransConv-DDPM: Enhanced Diffusion Model for Generating Time-Series Data in Healthcare

Feb 03, 2026

The lack of real-world data in clinical fields poses a major obstacle in training effective AI models for diagnostic and preventive tools in medicine. Generative AI has shown promise in increasing data volume and enhancing model training, particularly in computer vision and natural language processing (NLP) domains. However, generating physiological time-series data, a common type in medical AI applications, presents unique challenges due to its inherent complexity and variability. This paper introduces TransConv-DDPM, an enhanced generative AI method for biomechanical and physiological time-series data generation. The model employs a denoising diffusion probabilistic model (DDPM) with U-Net, multi-scale convolution modules, and a transformer layer to capture both global and local temporal dependencies. We evaluated TransConv-DDPM on three diverse datasets, generating both long and short-sequence time-series data. Quantitative comparisons against state-of-the-art methods, TimeGAN and Diffusion-TS, using four performance metrics, demonstrated promising results, particularly on the SmartFallMM and EEG datasets, where it effectively captured the more gradual temporal change patterns between data points. Additionally, a utility test on the SmartFallMM dataset revealed that adding synthetic fall data generated by TransConv-DDPM improved predictive model performance, showing a 13.64% improvement in F1-score and a 14.93% increase in overall accuracy compared to the baseline model trained solely on fall data from the SmartFallMM dataset. These findings highlight the potential of TransConv-DDPM to generate high-quality synthetic data for real-world applications.

* Previously published at IEEE COMPSAC 2025
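As background for the DDPM mentioned in the abstract above, the following is a minimal sketch of the standard forward (noising) step, which samples x_t from x_0 in closed form. It is not the TransConv-DDPM implementation: the linear beta schedule, the function names, and the toy sine signal are all assumptions chosen for illustration, and the learned denoising network is omitted entirely.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1..beta_T (an assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def forward_noise(x0, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps

# Toy usage: noise a sine wave halfway through a 1000-step schedule.
rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
signal = [math.sin(2 * math.pi * i / 50) for i in range(200)]
noisy, eps = forward_noise(signal, 500, alpha_bars, rng)
```

A denoising network in a DDPM is typically trained to predict `eps` from `noisy` and the step index; generation then runs the process in reverse, starting from pure noise.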

Changepoint Detection As Model Selection: A General Framework

Jan 30, 2026

This dissertation presents a general framework for changepoint detection based on L0 model selection. The core method, Iteratively Reweighted Fused Lasso (IRFL), improves upon the generalized lasso by adaptively reweighting penalties to enhance support recovery and minimize criteria such as the Bayesian Information Criterion (BIC). The approach allows for flexible modeling of seasonal patterns, linear and quadratic trends, and autoregressive dependence in the presence of changepoints. Simulation studies demonstrate that IRFL achieves accurate changepoint detection across a wide range of challenging scenarios, including those involving nuisance factors such as trends, seasonal patterns, and serially correlated errors. The framework is further extended to image data, where it enables edge-preserving denoising and segmentation, with applications spanning medical imaging and high-throughput plant phenotyping. Applications to real-world data demonstrate IRFL's utility. In particular, analysis of the Mauna Loa CO2 time series reveals changepoints that align with volcanic eruptions and ENSO events, yielding a more accurate trend decomposition than ordinary least squares. Overall, IRFL provides a robust, extensible tool for detecting structural change in complex data.
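To make the idea of treating changepoint detection as model selection concrete, here is a small sketch that compares a no-change model with a single mean-shift model using BIC. It is not IRFL: it uses exhaustive search over one changepoint rather than a reweighted fused lasso, and the parameter counts (one for a constant mean, three for two means plus a location) are an assumed convention. The function names and toy data are hypothetical.

```python
import math

def rss(segment):
    """Residual sum of squares around the segment mean."""
    mean = sum(segment) / len(segment)
    return sum((v - mean) ** 2 for v in segment)

def bic(rss_value, n, num_params):
    """Gaussian BIC up to an additive constant: n*log(RSS/n) + k*log(n)."""
    return n * math.log(rss_value / n) + num_params * math.log(n)

def best_single_changepoint(x):
    """Return (tau or None, BIC without change, BIC with one change).

    tau splits the series into x[:tau] and x[tau:].
    """
    n = len(x)
    bic_none = bic(rss(x), n, 1)
    best_tau, best_rss = None, float("inf")
    for tau in range(1, n):
        total = rss(x[:tau]) + rss(x[tau:])
        if total < best_rss:
            best_tau, best_rss = tau, total
    bic_one = bic(best_rss, n, 3)
    return (best_tau if bic_one < bic_none else None), bic_none, bic_one

# Toy series: mean shifts from 0 to 5 at index 20, with small bounded wiggle.
series = [(0.0 if i < 20 else 5.0) + 0.3 * math.sin(1.7 * i) for i in range(40)]
tau, bic_none, bic_one = best_single_changepoint(series)
```

The changepoint is accepted only when the extra parameters pay for themselves in reduced residual error, which is the trade-off that BIC-style criteria encode.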

FusAD: Time-Frequency Fusion with Adaptive Denoising for General Time Series Analysis

Dec 16, 2025

Time series analysis plays a vital role in fields such as finance, healthcare, industry, and meteorology, underpinning key tasks including classification, forecasting, and anomaly detection. Although deep learning models have achieved remarkable progress in these areas in recent years, constructing an efficient, multi-task compatible, and generalizable unified framework for time series analysis remains a significant challenge. Existing approaches are often tailored to single tasks or specific data types, making it difficult to simultaneously handle multi-task modeling and effectively integrate information across diverse time series types. Moreover, real-world data are often affected by noise, complex frequency components, and multi-scale dynamic patterns, which further complicate robust feature extraction and analysis. To ameliorate these challenges, we propose FusAD, a unified analysis framework designed for diverse time series tasks. FusAD features an adaptive time-frequency fusion mechanism, integrating both Fourier and Wavelet transforms to efficiently capture global-local and multi-scale dynamic features. With an adaptive denoising mechanism, FusAD automatically senses and filters various types of noise, highlighting crucial sequence variations and enabling robust feature extraction in complex environments. In addition, the framework integrates a general information fusion and decoding structure, combined with masked pre-training, to promote efficient learning and transfer of multi-granularity representations. Extensive experiments demonstrate that FusAD consistently outperforms state-of-the-art models on mainstream time series benchmarks for classification, forecasting, and anomaly detection tasks, while maintaining high efficiency and scalability. Code is available at https://github.com/zhangda1018/FusAD.
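As a rough illustration of frequency-domain denoising, the sketch below zeroes weak Fourier coefficients and transforms back. This is a fixed hard-threshold filter, not FusAD's learned adaptive mechanism, and it omits the wavelet branch; the `keep_ratio` parameter, the function names, and the toy signal are assumptions. A naive O(n²) DFT is used only to keep the example dependency-free.

```python
import cmath
import math
import random

def dft(x):
    """Naive discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(X):
    """Naive inverse DFT, returning the real part of each sample."""
    n = len(X)
    return [(sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]

def spectral_denoise(x, keep_ratio=0.3):
    """Zero every bin whose magnitude is below keep_ratio * (largest magnitude)."""
    X = dft(x)
    cutoff = keep_ratio * max(abs(c) for c in X)
    return idft([c if abs(c) >= cutoff else 0 for c in X])

def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)

# Toy usage: a 4-cycle sine over 128 samples plus Gaussian noise.
rng = random.Random(1)
clean = [math.sin(2 * math.pi * 4 * t / 128) for t in range(128)]
noisy = [c + rng.gauss(0.0, 0.3) for c in clean]
denoised = spectral_denoise(noisy)
```

A fixed threshold works here because the signal energy sits in two bins; an adaptive scheme would instead learn which components to keep from the data.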

FORMSpoT: A Decade of Tree-Level, Country-Scale Forest Monitoring

Dec 18, 2025

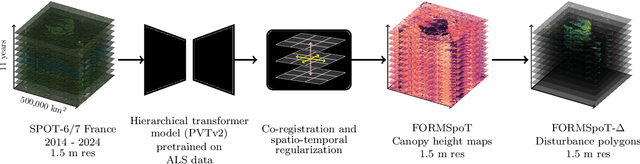

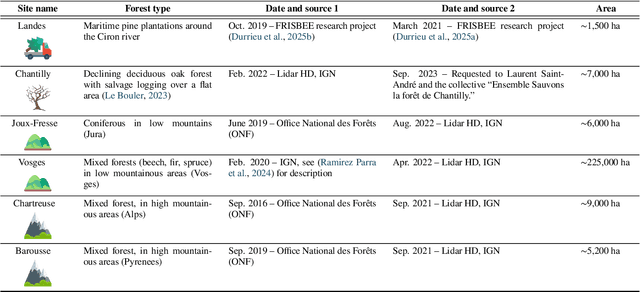

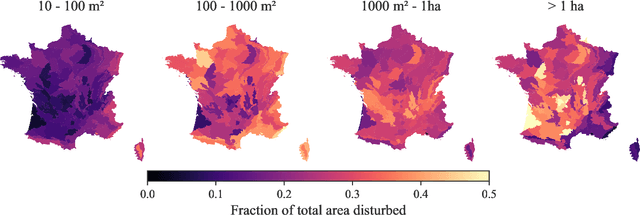

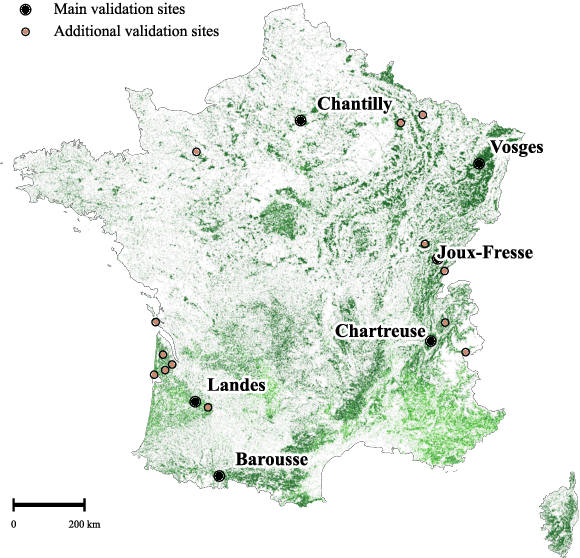

The recent decline of the European forest carbon sink highlights the need for spatially explicit and frequently updated forest monitoring tools. Yet, existing satellite-based disturbance products remain too coarse to detect changes at the scale of individual trees, typically below 100 m$^{2}$. Here, we introduce FORMSpoT (Forest Mapping with SPOT Time series), a decade-long (2014-2024) nationwide mapping of forest canopy height at 1.5 m resolution, together with annual disturbance polygons (FORMSpoT-$Δ$) covering mainland France. Canopy heights were derived from annual SPOT-6/7 composites using a hierarchical transformer model (PVTv2) trained on high-resolution airborne laser scanning (ALS) data. To enable robust change detection across heterogeneous acquisitions, we developed a dedicated post-processing pipeline combining co-registration and spatio-temporal total variation denoising. Validation against ALS revisits across 19 sites and 5,087 National Forest Inventory plots shows that FORMSpoT-$Δ$ substantially outperforms existing disturbance products. In mountainous forests, where disturbances are small and spatially fragmented, FORMSpoT-$Δ$ achieves an F1-score of 0.44, an order of magnitude higher than existing benchmarks. By enabling tree-level monitoring of forest dynamics at national scale, FORMSpoT-$Δ$ provides a unique tool to analyze management practices, detect early signals of forest decline, and better quantify carbon losses from subtle disturbances such as thinning or selective logging. These results underscore the critical importance of sustaining very high-resolution satellite missions like SPOT and open-data initiatives such as DINAMIS for monitoring forests under climate change.
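For readers unfamiliar with total variation (TV) denoising, the following sketch applies it to a single 1D time series. It is a simplification of what the abstract describes: it covers only the temporal dimension, uses a smoothed TV penalty (sqrt(d² + eps)) so that plain gradient descent applies, and all names, parameter values, and the toy data are assumptions rather than details of the FORMSpoT pipeline.

```python
import math

def smoothed_tv_objective(u, y, lam, eps):
    """0.5 * ||u - y||^2 + lam * sum_i sqrt((u[i+1] - u[i])^2 + eps)."""
    fidelity = 0.5 * sum((ui - yi) ** 2 for ui, yi in zip(u, y))
    tv = sum(math.sqrt((u[i + 1] - u[i]) ** 2 + eps) for i in range(len(u) - 1))
    return fidelity + lam * tv

def tv_denoise_1d(y, lam=0.5, eps=1e-2, iters=500):
    """Minimize the smoothed TV objective by fixed-step gradient descent."""
    n = len(y)
    u = list(y)
    # Step size 1/L, where L bounds the gradient's Lipschitz constant.
    step = 1.0 / (1.0 + 4.0 * lam / math.sqrt(eps))
    for _ in range(iters):
        grad = [ui - yi for ui, yi in zip(u, y)]
        for i in range(n - 1):
            d = u[i + 1] - u[i]
            g = lam * d / math.sqrt(d * d + eps)
            grad[i + 1] += g
            grad[i] -= g
        u = [ui - step * gi for ui, gi in zip(u, grad)]
    return u

# Toy usage: a step from 0 to 2 at index 30 with a small bounded wiggle.
observed = [(0.0 if i < 30 else 2.0) + 0.3 * math.sin(1.7 * i) for i in range(60)]
smoothed = tv_denoise_1d(observed)
```

TV penalties favor piecewise-constant solutions, so abrupt changes (such as a disturbance) tend to survive while small fluctuations are flattened; `lam` controls that trade-off.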

q3-MuPa: Quick, Quiet, Quantitative Multi-Parametric MRI using Physics-Informed Diffusion Models

Dec 19, 2025

The 3D fast silent multi-parametric mapping sequence with zero echo time (MuPa-ZTE) is a novel quantitative MRI (qMRI) acquisition that enables nearly silent scanning by using a 3D phyllotaxis sampling scheme. MuPa-ZTE improves patient comfort and motion robustness, and generates quantitative maps of T1, T2, and proton density using the acquired weighted image series. In this work, we propose a diffusion model-based qMRI mapping method that leverages both a deep generative model and physics-based data consistency to further improve the mapping performance. Furthermore, our method enables additional acquisition acceleration, allowing high-quality qMRI mapping from a fourfold-accelerated MuPa-ZTE scan (approximately 1 minute). Specifically, we trained a denoising diffusion probabilistic model (DDPM) to map MuPa-ZTE image series to qMRI maps, and we incorporated the MuPa-ZTE forward signal model as an explicit data consistency (DC) constraint during inference. We compared our mapping method against a baseline dictionary matching approach and a purely data-driven diffusion model. The diffusion models were trained entirely on synthetic data generated from digital brain phantoms, eliminating the need for large real-scan datasets. We evaluated on synthetic data, a NIST/ISMRM phantom, healthy volunteers, and a patient with brain metastases. The results demonstrated that our method produces 3D qMRI maps with high accuracy, reduced noise and better preservation of structural details. Notably, it generalised well to real scans despite training on synthetic data alone. The combination of the MuPa-ZTE acquisition and our physics-informed diffusion model is termed q3-MuPa, a quick, quiet, and quantitative multi-parametric mapping framework, and our findings highlight its strong clinical potential.

Combining digital data streams and epidemic networks for real time outbreak detection

Nov 10, 2025

Responding to disease outbreaks requires close surveillance of their trajectories, but outbreak detection is hindered by the high noise in epidemic time series. Aggregating information across data sources has shown great denoising ability in other fields, but remains underexplored in epidemiology. Here, we present LRTrend, an interpretable machine learning framework to identify outbreaks in real time. LRTrend effectively aggregates diverse health and behavioral data streams within one region and learns disease-specific epidemic networks to aggregate information across regions. We reveal diverse epidemic clusters and connections across the United States that are not well explained by commonly used human mobility networks and may be informative for future public health coordination. We apply LRTrend to 2 years of COVID-19 data in 305 hospital referral regions and frequently detect regional Delta and Omicron waves within 2 weeks of the outbreak's start, when case counts are a small fraction of the wave's resulting peak.

Diffolio: A Diffusion Model for Multivariate Probabilistic Financial Time-Series Forecasting and Portfolio Construction

Nov 10, 2025

Probabilistic forecasting is crucial in multivariate financial time-series for constructing efficient portfolios that account for complex cross-sectional dependencies. In this paper, we propose Diffolio, a diffusion model designed for multivariate financial time-series forecasting and portfolio construction. Diffolio employs a denoising network with a hierarchical attention architecture, comprising both asset-level and market-level layers. Furthermore, to better reflect cross-sectional correlations, we introduce a correlation-guided regularizer informed by a stable estimate of the target correlation matrix. This structure effectively extracts salient features not only from historical returns but also from asset-specific and systematic covariates, significantly enhancing the performance of forecasts and portfolios. Experimental results on the daily excess returns of 12 industry portfolios show that Diffolio outperforms various probabilistic forecasting baselines in multivariate forecasting accuracy and portfolio performance. Moreover, in portfolio experiments, portfolios constructed from Diffolio's forecasts show consistently robust performance, thereby outperforming those from benchmarks by achieving higher Sharpe ratios for the mean-variance tangency portfolio and higher certainty equivalents for the growth-optimal portfolio. These results demonstrate the superiority of our proposed Diffolio in terms of not only statistical accuracy but also economic significance.
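The mean-variance tangency portfolio and Sharpe ratio mentioned in the abstract can be sketched as follows. This shows only the textbook formula (weights proportional to the inverse covariance matrix times the expected excess returns), not Diffolio's forecasting model; the three-asset inputs are made-up numbers, and the sketch assumes the unnormalized weights sum to a positive value.

```python
import math

def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

def tangency_weights(mu, cov):
    """Weights proportional to inv(cov) @ mu, normalized to sum to 1."""
    raw = solve_linear(cov, mu)
    total = sum(raw)  # assumed positive in this sketch
    return [r / total for r in raw]

def sharpe_ratio(w, mu, cov):
    """Expected excess return divided by portfolio standard deviation."""
    n = len(w)
    mean = sum(wi * mi for wi, mi in zip(w, mu))
    var = sum(w[i] * cov[i][j] * w[j] for i in range(n) for j in range(n))
    return mean / math.sqrt(var)

# Hypothetical forecast of mean excess returns and their covariance.
mu = [0.02, 0.03, 0.04]
cov = [[0.04, 0.01, 0.00],
       [0.01, 0.09, 0.02],
       [0.00, 0.02, 0.16]]
w_tan = tangency_weights(mu, cov)
w_equal = [1.0 / 3.0] * 3
```

In a forecasting setting, `mu` and `cov` would be estimated from the model's predictive distribution, which is why forecast quality feeds directly into portfolio performance.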

DenoGrad: Deep Gradient Denoising Framework for Enhancing the Performance of Interpretable AI Models

Nov 13, 2025

The performance of Machine Learning (ML) models, particularly those operating within the Interpretable Artificial Intelligence (Interpretable AI) framework, is significantly affected by the presence of noise in both training and production data. Denoising has therefore become a critical preprocessing step, typically categorized into instance removal and instance correction techniques. However, existing correction approaches often degrade performance or oversimplify the problem by altering the original data distribution. This leads to unrealistic scenarios and biased models, which is particularly problematic in contexts where interpretable AI models are employed, as their interpretability depends on the fidelity of the underlying data patterns. In this paper, we argue that defining noise independently of the solution may be ineffective, as its nature can vary significantly across tasks and datasets. Using a task-specific high-quality solution as a reference can provide a more precise and adaptable noise definition. To this end, we propose DenoGrad, a novel Gradient-based instance Denoiser framework that leverages gradients from an accurate Deep Learning (DL) model trained on the target data -- regardless of the specific task -- to detect and adjust noisy samples. Unlike conventional approaches, DenoGrad dynamically corrects noisy instances, preserving the problem's data distribution and improving the robustness of AI models. DenoGrad is validated on both tabular and time series datasets under various noise settings against the state-of-the-art. DenoGrad outperforms existing denoising strategies, enhancing the performance of interpretable AI models while standing out as the only high-quality approach that preserves the original data distribution.

Conditional Diffusion as Latent Constraints for Controllable Symbolic Music Generation

Nov 10, 2025

Recent advances in latent diffusion models have demonstrated state-of-the-art performance in high-dimensional time-series data synthesis while providing flexible control through conditioning and guidance. However, existing methodologies primarily rely on musical context or natural language as the main modality of interacting with the generative process, which may not be ideal for expert users who seek precise fader-like control over specific musical attributes. In this work, we explore the application of denoising diffusion processes as plug-and-play latent constraints for unconditional symbolic music generation models. We focus on a framework that leverages a library of small conditional diffusion models operating as implicit probabilistic priors on the latents of a frozen unconditional backbone. While previous studies have explored domain-specific use cases, this work, to the best of our knowledge, is the first to demonstrate the versatility of such an approach across a diverse array of musical attributes, such as note density, pitch range, contour, and rhythm complexity. Our experiments show that diffusion-driven constraints outperform traditional attribute regularization and other latent constraints architectures, achieving significantly stronger correlations between target and generated attributes while maintaining high perceptual quality and diversity.

DK-Root: A Joint Data-and-Knowledge-Driven Framework for Root Cause Analysis of QoE Degradations in Mobile Networks

Nov 13, 2025

Diagnosing the root causes of Quality of Experience (QoE) degradations in operational mobile networks is challenging due to complex cross-layer interactions among key performance indicators (KPIs) and the scarcity of reliable expert annotations. Although rule-based heuristics can generate labels at scale, they are noisy and coarse-grained, limiting the accuracy of purely data-driven approaches. To address this, we propose DK-Root, a joint data-and-knowledge-driven framework that unifies scalable weak supervision with precise expert guidance for robust root-cause analysis. DK-Root first pretrains an encoder via contrastive representation learning using abundant rule-based labels while explicitly suppressing their noise through a supervised contrastive objective. To supply task-faithful data augmentation, we introduce a class-conditional diffusion model that generates KPI sequences preserving root-cause semantics, and by controlling reverse diffusion steps, it produces weak and strong augmentations that improve intra-class compactness and inter-class separability. Finally, the encoder and the lightweight classifier are jointly fine-tuned with scarce expert-verified labels to sharpen decision boundaries. Extensive experiments on a real-world, operator-grade dataset demonstrate state-of-the-art accuracy, with DK-Root surpassing traditional ML and recent semi-supervised time-series methods. Ablations confirm the necessity of the conditional diffusion augmentation and the pretrain-finetune design, validating both representation quality and classification gains.
